Chapter 1. About Red Hat Update Infrastructure 4

Red Hat Update Infrastructure 4 (RHUI 4) is a highly scalable, highly redundant framework that enables you to manage repositories and content. It also enables cloud providers to deliver content and updates to Red Hat Enterprise Linux (RHEL) instances. Based on the upstream Pulp project, RHUI allows cloud providers to locally mirror Red Hat-hosted repository content, create custom repositories with their own content, and make those repositories available to a large group of end users through a load-balanced content delivery system.

As a system administrator, you can prepare your infrastructure for participation in the Red Hat Certified Cloud and Service Provider program by installing and configuring the Red Hat Update Appliance (RHUA), content delivery servers (CDS), repositories, shared storage, and load balancing.

Configuring RHUI comprises the following tasks:

- Creating and synchronizing a Red Hat repository

- Creating client entitlement certificates and client configuration RPMs

- Creating client profiles for the RHUI servers

Experienced RHEL system administrators are the target audience. System administrators with limited RHEL skills should consider engaging Red Hat Consulting to provide a Red Hat Certified Cloud Provider Architecture Service.

Learn about configuring, managing, and updating RHUI with the following topics:

- the RHUI components

- content provider types

- the command line interface (CLI) used to manage the components

- utility commands

- certificate management

- content management

1.1. Installation options

The following table presents the various Red Hat Update Infrastructure 4 components.

| Component | Acronym | Function | Alternative |
|---|---|---|---|
| Red Hat Update Appliance | RHUA | Downloads content from the Red Hat content delivery network and stores it on the shared storage | None |
| Content Delivery Server | CDS | Provides the repositories that clients connect to for updated content | None |
| HAProxy | None | Provides load balancing across CDS nodes | Existing load balancing solution |
| Shared storage | None | Provides shared storage | Existing storage solution |

The following table lists the installation tasks and the nodes on which you perform them.

| Installation Task | Performed on |
|---|---|
| Install RHEL 8 | RHUA, CDS, and HAProxy |
| Register the system with the RHUI consumer type | RHUA |
| Register the system with the default consumer type | CDS and HAProxy |
| Apply updates | RHUA, CDS, and HAProxy |
| Install | RHUA |
| Run | RHUA |

1.1.1. Option 1: Full installation

- A RHUA with shared storage

- Two or more CDS nodes with this shared storage

- One or more HAProxy load-balancers

1.1.2. Option 2: Installation with an existing storage solution

- A RHUA with an existing storage solution

- Two or more CDS nodes with this existing storage solution

- One or more HAProxy load-balancers

1.1.3. Option 3: Installation with an existing load-balancer solution

- A RHUA with shared storage

- Two or more CDS nodes with this shared storage

- An existing load-balancer

1.1.4. Option 4: Installation with existing storage and load-balancer solutions

- A RHUA with an existing storage solution

- Two or more CDS nodes with this existing storage solution

- An existing load-balancer

Red Hat Update Infrastructure must be used with at least two CDS nodes and a load-balancer. Installations without a load-balancer or with only a single CDS node are unsupported.

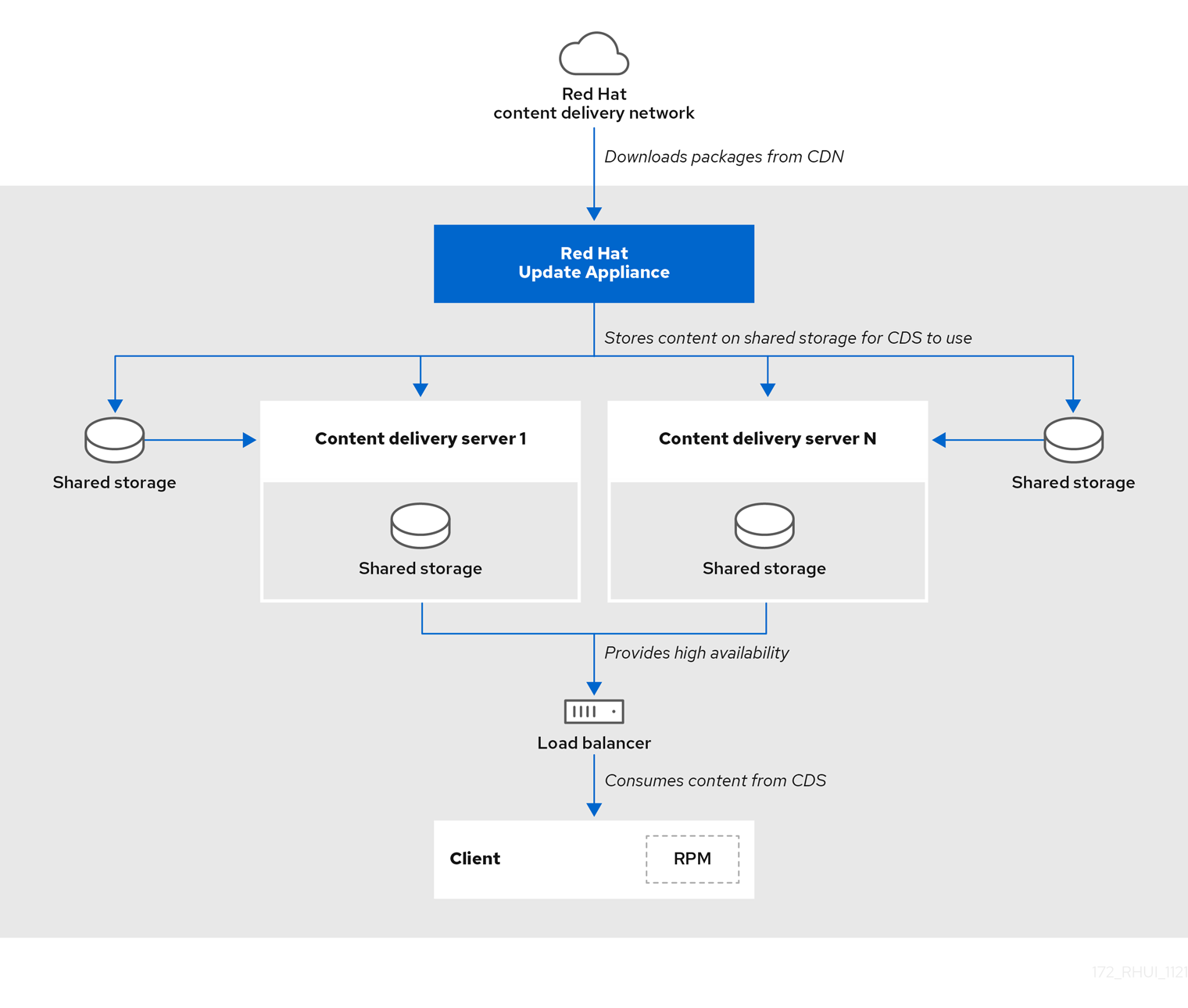

The following figure depicts a high-level view of how the various Red Hat Update Infrastructure 4 components interact.

Figure 1.1. Red Hat Update Infrastructure 4 overview

Install the RHUA and CDS nodes on separate x86_64 servers (bare metal or virtual machines). Ensure all the servers and networks that connect to RHUI can access the Red Hat Subscription Management service.

1.2. RHUI 4 components

Understanding how each RHUI component interacts with other components will make your job as a system administrator a little easier.

1.2.1. Red Hat Update Appliance

There is one RHUA per RHUI installation. In many cloud environments, there is one RHUI installation per region or data center. For example, Amazon's EC2 cloud comprises several regions, and each region has a separate RHUI setup with its own RHUA node.

The RHUA allows you to perform the following tasks:

- Download new packages from the Red Hat content delivery network (CDN).

- Copy new packages to the shared network storage.

- Verify the RHUI installation’s health and write the results to a file located on the RHUA. Monitoring solutions use this file to determine the RHUI installation’s health.

- Provide a human-readable view of the RHUI installation’s health through a CLI tool.

RHUI uses two main configuration files: /etc/rhui/rhui-tools.conf and /etc/rhui/rhui-subscription-sync.conf.

The /etc/rhui/rhui-tools.conf configuration file contains general options used by the RHUA, such as the default file locations for certificates, and default configuration parameters for the Red Hat CDN synchronization. This file normally does not require editing.

The /etc/rhui/rhui-subscription-sync.conf configuration file contains the credentials for the Pulp database. These credentials must be used when logging in to the rhui-manager interface.

The RHUA employs several services to synchronize, organize, and distribute content for easy delivery.

RHUA services

- Pulp: the service that manages the repositories.

- PostgreSQL: the database that Pulp uses to keep track of currently synchronized repositories, packages, and other crucial metadata.

1.2.2. Content delivery server

The CDS nodes provide the repositories that clients connect to for the updated content. There can be as few as one CDS. However, because RHUI provides a load-balancer with failover capabilities, we recommend that you use multiple CDS nodes.

The CDS nodes serve content to end-user RHEL systems. While there is no required number of CDS nodes, client requests are spread across them in a round-robin, load-balanced fashion (A, B, C, A, B, C). The CDS nodes serve the content to end-user systems as yum repositories over HTTPS.
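The A, B, C, A, B, C pattern is plain round-robin scheduling. The following Python sketch models it with hypothetical CDS host names. It only illustrates the ordering: in RHUI, the load-balancer, not the client or the CDS, chooses the node for each request.

```python
from itertools import cycle, islice

# Hypothetical CDS host names, used only for illustration.
CDS_NODES = ["cds-a.example.com", "cds-b.example.com", "cds-c.example.com"]


def round_robin(nodes, n_requests):
    """Return the node that receives each of n_requests, in order."""
    # cycle() repeats the node list forever; islice() takes the first n items.
    return list(islice(cycle(nodes), n_requests))


if __name__ == "__main__":
    for i, node in enumerate(round_robin(CDS_NODES, 6), start=1):
        print(f"request {i} -> {node}")
```

With three nodes, each node receives every third request, so adding nodes to the pool lowers the share of traffic each one handles.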

During configuration, you specify the CDS directory where packages are synchronized. As with the RHUA, the only requirement is that you mount the directory on the CDS. It is up to the cloud provider to determine the best course of action when allocating the necessary devices. The Red Hat Update Infrastructure Management Tool configuration RPM links the package directory with the NGINX configuration to serve it.

Currently, RHUI supports the following shared storage solutions:

- NFS

  If NFS is used, rhui-installer can configure an NFS share on the RHUA to store the content as well as a directory on the CDS nodes to mount the NFS share. The following rhui-installer options control these settings:

  - --remote-fs-mountpoint is the file system location where the remote file system share should be mounted (default: /var/lib/rhui/remote_share)

  - --remote-fs-server is the remote mount point for a shared file system to use, for example, nfs.example.com:/path/to/share (no default value)

- CephFS

  If you use CephFS, you must configure CephFS separately and then use it with RHUI as a mount point. The following rhui-installer option controls this setting:

  - --remote-fs-server is the remote mount point for a shared file system to use, for example, ceph.example.com:/path/to/share (no default value)

This document does not provide instructions to set up or configure Ceph shared file storage. For any Ceph-related tasks, consult your system administrator, or see the Ceph documentation.
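As a sketch of how the storage options fit together, the following Python builds an rhui-installer argument list for a shared file system. The helper name build_storage_args is hypothetical, and the sketch uses only the --remote-fs-server and --remote-fs-mountpoint options described above. Any other options your installation requires are not shown, so check rhui-installer --help before running the printed command.

```python
import shlex

DEFAULT_MOUNTPOINT = "/var/lib/rhui/remote_share"  # documented default


def build_storage_args(remote_fs_server, mountpoint=None):
    """Return rhui-installer arguments for the shared storage settings.

    remote_fs_server is required because it has no default value,
    for example "nfs.example.com:/path/to/share".
    """
    # Simple sanity check for the HOST:/path form shown in the examples.
    if not remote_fs_server or ":" not in remote_fs_server:
        raise ValueError("expected HOST:/path, e.g. nfs.example.com:/path/to/share")
    args = ["rhui-installer", "--remote-fs-server", remote_fs_server]
    # Pass the mount point only when it overrides the default.
    if mountpoint and mountpoint != DEFAULT_MOUNTPOINT:
        args += ["--remote-fs-mountpoint", mountpoint]
    return args


if __name__ == "__main__":
    # Print the command for review instead of running it.
    print(shlex.join(build_storage_args("nfs.example.com:/path/to/share")))
```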

The expected usage is that you use one shared network file system on the RHUA and all CDS nodes, for example, NFS. It is possible the cloud provider will use some form of shared storage that the RHUA writes packages to and each CDS reads from.

The storage solution must provide an NFS or CephFS endpoint for mounting on the RHUA and CDS nodes. Do not set up the shared file storage on any of the RHUI nodes. You must use an independent storage server.

The only nonstandard logic that takes place on each CDS is the entitlement certificate checking. This checking ensures that the client making requests on the yum repositories is authorized by the cloud provider to access those repositories. The check ensures the following conditions:

- The entitlement certificate was signed by the cloud provider’s Certificate Authority (CA) Certificate. The CA Certificate is installed on the CDS as part of its configuration to facilitate this verification.

- The requested URI matches an entitlement found in the client’s entitlement certificate.
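The URI condition can be pictured with a short Python sketch. It assumes, for illustration only, that each entitlement in the client certificate is a content path in which yum variables such as $releasever and $basearch each match one path segment. The real certificate format and the CDS implementation may differ, and the CA signature check is not modeled.

```python
import re

# Illustrative model only: an entitled path is a directory prefix in which
# yum variables ($releasever, $basearch, ...) stand for exactly one segment.


def entitlement_pattern(entitled_path):
    """Compile an entitled content path into a regular expression."""
    parts = re.split(r"(\$[A-Za-z_]+)", entitled_path.rstrip("/"))
    body = "".join("[^/]+" if p.startswith("$") else re.escape(p) for p in parts)
    # Match the entitled directory itself or anything beneath it.
    return re.compile("^" + body + "(/|$)")


def is_entitled(requested_uri, entitled_paths):
    """Return True if the request path falls under any entitled path."""
    path = requested_uri.split("?", 1)[0]  # ignore any query string
    return any(entitlement_pattern(e).match(path) for e in entitled_paths)
```

For example, with the hypothetical entitlement /content/dist/rhel8/$releasever/$basearch/baseos/os, a request for /content/dist/rhel8/8/x86_64/baseos/os/repodata/repomd.xml matches, while the same request under appstream does not.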

If the CA verification fails, the client sees an SSL error. See the CDS node’s NGINX logs under /var/log/nginx/ for more information.

The NGINX configuration is handled through the /etc/nginx/conf.d/ssl.conf file, which is created during the CDS installation.

1.2.3. HAProxy load-balancer

A load-balancing solution must be in place to spread client HTTPS requests across all CDS servers. RHUI uses HAProxy by default, but it is up to you to choose what load-balancing solution (for example, the one from the cloud provider) to use during the installation. If HAProxy is used, you must also decide how many nodes to bring in.

Clients are not configured to go directly to a CDS; their repository files are configured to point to HAProxy, the RHUI load-balancer. HAProxy is a TCP/HTTP reverse proxy particularly suited for high-availability environments.

If you use an existing load-balancer, ensure port 443 is configured in the load-balancer and that all CDSs in the cluster are in the load-balancer’s pool.

The exact configuration depends on the particular load-balancer software you use. See the following configuration, taken from a typical HAProxy setup, to understand how you should configure your load-balancer:

When clients fail to connect, review the NGINX logs on the CDS under /var/log/nginx/ to determine whether the requests reached the CDS. If requests do not reach the CDS, DNS or general network connectivity issues might be at fault.
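Because each CDS checks the client's entitlement certificate itself, a load-balancer in front of the CDS nodes is expected to pass the TLS connection on port 443 through rather than terminate it. The following Python sketch renders a minimal HAProxy frontend and backend in TCP mode that round-robins across the CDS pool. The function name, section names, listen address, and host names are hypothetical, and the global and defaults sections that a complete haproxy.cfg needs are omitted.

```python
def render_haproxy_fragment(listen_addr, cds_hosts, port=443):
    """Render a minimal HAProxy frontend/backend for a pool of CDS nodes.

    Hypothetical helper: section names, listen address, and host names are
    examples. Global and defaults sections are intentionally omitted.
    """
    # Supported RHUI installations use at least two CDS nodes.
    if len(cds_hosts) < 2:
        raise ValueError("supported installations use at least two CDS nodes")
    lines = [
        "frontend rhui_https",
        f"    bind {listen_addr}:{port}",
        "    mode tcp",  # pass TLS through so each CDS can check client certificates
        "    option tcplog",
        "    default_backend rhui_cds",
        "",
        "backend rhui_cds",
        "    mode tcp",
        "    balance roundrobin",  # A, B, C, A, B, C across the pool
    ]
    # Every CDS in the cluster goes into the pool; "check" enables health checks.
    lines += [f"    server {host} {host}:{port} check" for host in cds_hosts]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    hosts = ["cds01.example.com", "cds02.example.com"]
    print(render_haproxy_fragment("10.0.0.5", hosts), end="")
```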

1.2.4. Repositories and content

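A repository is a storage location for software packages (RPMs). On RHEL, you use yum commands to search repositories and to download, install, and update RPMs. A repository typically contains both the RPMs for an application and the RPMs it depends on, so yum can resolve and install all the dependencies needed to run the application.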

Content, as it relates to RHUI, is the software (such as RPMs) that you download from the Red Hat CDN for use on the RHUA and the CDS nodes. The RPMs provide the files necessary to run specific applications and tools. Clients are granted access by a set of SSL content certificates and keys provided by an RPM package, which also provides a set of generated yum repository files.

1.3. Content provider types

There are three types of cloud computing environments:

- public cloud

- private cloud

- hybrid cloud

This guide focuses on public and private clouds. We assume the audience understands the implications of using public, private, and hybrid clouds.

1.4. Component communications

All RHUI components use the HTTPS communication protocol over port 443.

| Source | Destination | Protocol | Purpose |
|---|---|---|---|
| Red Hat Update Appliance | Red Hat Content Delivery Network | HTTPS | Downloads packages from Red Hat |
| Load-Balancer | Content Delivery Server | HTTPS | Forwards the clients' requests for repository metadata and packages |
| Client | Load-Balancer | HTTPS | Used by yum on the clients to download content |
| Content Delivery Server | Red Hat Update Appliance | HTTPS | Might request information about content from the Pulp API |

RHUI nodes require the following network access to communicate with each other.

Make sure that the network port is open and that network access is restricted to only those nodes that you plan to use.

| Node | Port | Access |
|---|---|---|
| RHUA | 443 | RHUA, CDS01, CDS02, … CDSn |
| HAProxy | 443 | Clients |
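Before installation, you can confirm that port 443 is reachable along each path listed in the tables above. The following Python sketch uses only the standard library socket module to attempt a TCP connection. The function name and any host names you pass to it are assumptions for illustration, and a successful result shows only that the port accepts connections, not that TLS or HTTPS works.

```python
import socket


def port_open(host, port=443, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout.

    This confirms only that the port accepts connections; it does not
    validate TLS certificates or HTTPS responses.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or name lookup failure
        return False
```

For example, you might call port_open("rhua.example.com") on each CDS node and port_open("haproxy.example.com") from a client, where both host names are hypothetical placeholders for your own nodes.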

1.5. Changing the admin password

The rhui-installer command sets the initial RHUI login password and writes it to the /etc/rhui/rhui-subscription-sync.conf file. You can override the initial password with the --rhui-manager-password option.

If you want to change the initial password later, you can change it through the rhui-manager tool or through rhui-installer. Run the rhui-installer --help command to see the full list of rhui-installer options.

Procedure

- Navigate to the Red Hat Update Infrastructure Management Tool home screen:

  [root@rhua ~]# rhui-manager

- Press u to select manage RHUI users. From the User Manager screen, press p to select change admin's password (followed by logout).

- Enter your new password; reenter it to confirm the change.

  New Password:
  Re-enter Password:
  [localhost] env PULP_SETTINGS=/etc/pulp/settings.py /usr/bin/pulpcore-manager reset-admin-password -p ********

Verification

The following message displays after you change the admin password:

Password successfully updated. For security reasons you have been logged out.
