Chapter 3. Deploying Red Hat Ceph Storage

This chapter describes how to use the Ansible application to deploy a Red Hat Ceph Storage cluster and other components, such as Metadata Servers or the Ceph Object Gateway.

- To install a Red Hat Ceph Storage cluster, see Section 3.2, “Installing a Red Hat Ceph Storage Cluster”.

- To install Metadata Servers, see Section 3.4, “Installing Metadata Servers”.

- To install the `ceph-client` role, see Section 3.5, “Installing the Ceph Client Role”.

- To install the Ceph Object Gateway, see Section 3.6, “Installing the Ceph Object Gateway”.

- To configure a multisite Ceph Object Gateway, see Section 3.6.1, “Configuring a multisite Ceph Object Gateway”.

- To learn about the Ansible `--limit` option, see Section 3.8, “Understanding the `limit` option”.

3.1. Prerequisites

- Obtain a valid customer subscription.

Prepare the cluster nodes. On each node:

3.2. Installing a Red Hat Ceph Storage Cluster

Use the Ansible application with the ceph-ansible playbook to install Red Hat Ceph Storage 3.

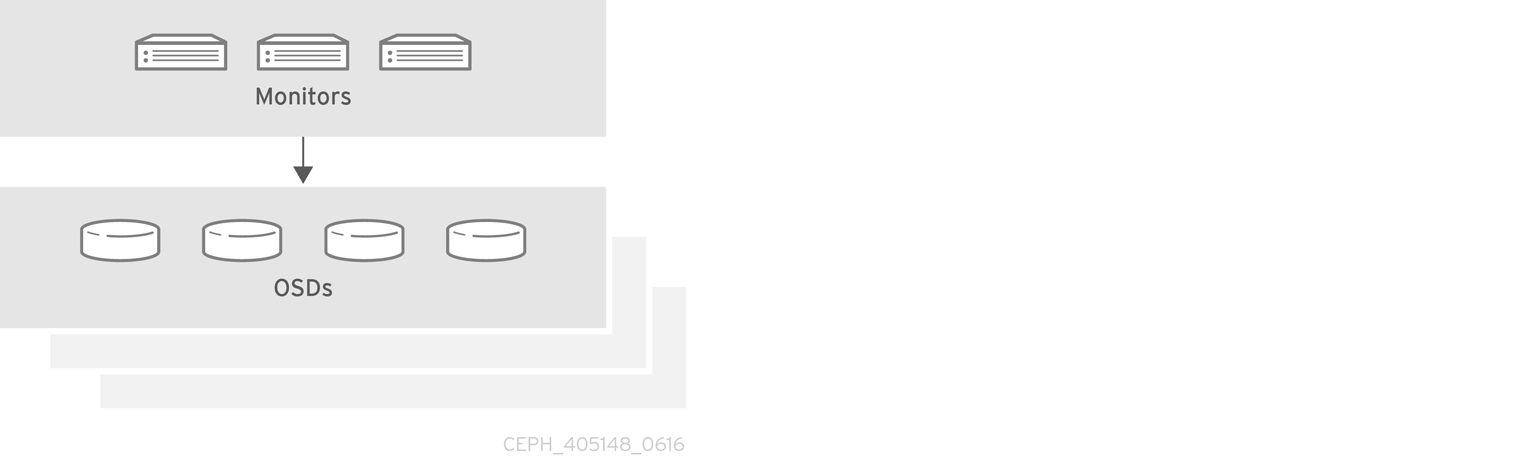

Production Ceph storage clusters start with a minimum of three monitor hosts and three OSD nodes containing multiple OSD daemons.

Prerequisites

Using the root account on the Ansible administration node, install the `ceph-ansible` package:

    [root@admin ~]# yum install ceph-ansible

Procedure

Run the following commands from the Ansible administration node unless instructed otherwise.

As the Ansible user, create the `ceph-ansible-keys` directory where Ansible stores temporary values generated by the `ceph-ansible` playbook.

    [user@admin ~]$ mkdir ~/ceph-ansible-keys

As root, create a symbolic link to the `/usr/share/ceph-ansible/group_vars` directory in the `/etc/ansible/` directory:

    [root@admin ~]# ln -s /usr/share/ceph-ansible/group_vars /etc/ansible/group_vars

Navigate to the `/usr/share/ceph-ansible/` directory:

    [root@admin ~]# cd /usr/share/ceph-ansible

Create new copies of the `yml.sample` files:

    [root@admin ceph-ansible]# cp group_vars/all.yml.sample group_vars/all.yml
    [root@admin ceph-ansible]# cp group_vars/osds.yml.sample group_vars/osds.yml
    [root@admin ceph-ansible]# cp site.yml.sample site.yml

Edit the copied files.
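As orientation for the `all.yml` parameters covered in the next step, a minimal sketch of the file for a CDN-based installation might look like the following. The `ceph_repository: rhcs` line, the interface name `eth0`, and the `192.168.0.0/24` subnet are illustrative assumptions that do not come from this section; check them against the comments in `all.yml.sample`.

```yaml
# group_vars/all.yml -- illustrative sketch, not a complete configuration
ceph_origin: distro              # see the note on ceph_origin in the all.yml step
ceph_repository: rhcs            # assumption: not listed in Table 3.1
ceph_repository_type: cdn        # or iso, together with ceph_rhcs_iso_path
ceph_rhcs_version: 3
monitor_interface: eth0          # assumed interface name
public_network: 192.168.0.0/24   # assumed subnet
```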

Edit the `group_vars/all.yml` file. See the table below for the most common required and optional parameters to uncomment. Note that the table does not include all parameters.

Important: Do not set the `cluster: ceph` parameter to any value other than `ceph` because using custom cluster names is not supported.

Table 3.1. General Ansible Settings

| Option | Value | Required | Notes |
|---|---|---|---|
| `ceph_origin` | `repository` or `distro` or `local` | Yes | The `repository` value means Ceph will be installed through a new repository. The `distro` value means that no separate repository file will be added, and you will get whatever version of Ceph is included with the Linux distribution. The `local` value means the Ceph binaries will be copied from the local machine. |
| `ceph_repository_type` | `cdn` or `iso` | Yes | |
| `ceph_rhcs_version` | `3` | Yes | |
| `ceph_rhcs_iso_path` | The path to the ISO image | Yes if using an ISO image | |
| `monitor_interface` | The interface that the Monitor nodes listen to | `monitor_interface`, `monitor_address`, or `monitor_address_block` is required | |
| `monitor_address` | The address that the Monitor nodes listen to | `monitor_interface`, `monitor_address`, or `monitor_address_block` is required | |
| `monitor_address_block` | The subnet of the Ceph public network | `monitor_interface`, `monitor_address`, or `monitor_address_block` is required | Use when the IP addresses of the nodes are unknown, but the subnet is known |
| `ip_version` | `ipv6` | Yes if using IPv6 addressing | |
| `public_network` | The IP address and netmask of the Ceph public network, or the corresponding IPv6 address if using IPv6 | Yes | Section 2.8, “Verifying the Network Configuration for Red Hat Ceph Storage” |
| `cluster_network` | The IP address and netmask of the Ceph cluster network | No, defaults to `public_network` | |
| `configure_firewall` | Ansible will try to configure the appropriate firewall rules | No. Either set the value to `true` or `false`. | |

Note: Be sure to set `ceph_origin` to `distro` in the `all.yml` file. This ensures that the installation process uses the correct download repository.

Note: Having the `ceph_rhcs_version` option set to `3` will pull in the latest version of Red Hat Ceph Storage 3.

Warning: By default, Ansible attempts to restart an installed, but masked `firewalld` service, which can cause the Red Hat Ceph Storage deployment to fail. To work around this issue, set the `configure_firewall` option to `false` in the `all.yml` file. If you are running the `firewalld` service, then there is no requirement to use the `configure_firewall` option in the `all.yml` file.

For additional details, see the `all.yml` file.

Edit the `group_vars/osds.yml` file. See the table below for the most common required and optional parameters to uncomment. Note that the table does not include all parameters.

Important: Use a different physical device to install an OSD than the device where the operating system is installed. Sharing the same device between the operating system and OSDs causes performance issues.

Table 3.2. OSD Ansible Settings

| Option | Value | Required | Notes |
|---|---|---|---|
| `osd_scenario` | `collocated` to use the same device for write-ahead logging and key/value data (BlueStore) or journal (FileStore) and OSD data; `non-collocated` to use a dedicated device, such as SSD or NVMe media, to store write-ahead log and key/value data (BlueStore) or journal data (FileStore); `lvm` to use the Logical Volume Manager to store OSD data | Yes | When using `osd_scenario: non-collocated`, `ceph-ansible` expects the numbers of variables in `devices` and `dedicated_devices` to match. For example, if you specify 10 disks in `devices`, you must specify 10 entries in `dedicated_devices`. |
| `osd_auto_discovery` | `true` to automatically discover OSDs | Yes if using `osd_scenario: collocated` | Cannot be used when the `devices` setting is used |
| `devices` | List of devices where `ceph data` is stored | Yes to specify the list of devices | Cannot be used when the `osd_auto_discovery` setting is used. When using `lvm` as the `osd_scenario` and setting the `devices` option, `ceph-volume lvm batch` mode creates the optimized OSD configuration. |
| `dedicated_devices` | List of dedicated devices for non-collocated OSDs where `ceph journal` is stored | Yes if `osd_scenario: non-collocated` | Should be nonpartitioned devices |
| `dmcrypt` | `true` to encrypt OSDs | No | Defaults to `false` |
| `lvm_volumes` | A list of FileStore or BlueStore dictionaries | Yes if using `osd_scenario: lvm` and storage devices are not defined using `devices` | Each dictionary must contain the `data`, `journal` and `data_vg` keys. Any logical volume or volume group must be the name and not the full path. The `data` and `journal` keys can be a logical volume (LV) or partition, but do not use one journal for multiple `data` LVs. The `data_vg` key must be the volume group containing the `data` LV. Optionally, the `journal_vg` key can be used to specify the volume group containing the journal LV, if applicable. See the examples below for various supported configurations. |
| `osds_per_device` | The number of OSDs to create per device. | No | Defaults to `1` |
| `osd_objectstore` | The Ceph object store type for the OSDs. | No | Defaults to `bluestore`. The other option is `filestore`. Required for upgrades. |

The following are examples of the `osds.yml` file when using the three OSD scenarios: `collocated`, `non-collocated`, and `lvm`. The default OSD object store format is BlueStore, if not specified.

Collocated

    osd_objectstore: filestore
    osd_scenario: collocated
    devices:
      - /dev/sda
      - /dev/sdb

Non-collocated - BlueStore
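A sketch of a non-collocated BlueStore `osds.yml`, reconstructed from the device pairing this section describes: four data devices and two NVMe devices, with each NVMe device listed once per data device it serves. Treat it as an illustration under that assumption rather than an authoritative listing.

```yaml
osd_objectstore: bluestore
osd_scenario: non-collocated
devices:
  - /dev/sda
  - /dev/sdb
  - /dev/sdc
  - /dev/sdd
dedicated_devices:   # one entry per entry in devices, in the same order
  - /dev/nvme0n1     # serves /dev/sda
  - /dev/nvme0n1     # serves /dev/sdb
  - /dev/nvme1n1     # serves /dev/sdc
  - /dev/nvme1n1     # serves /dev/sdd
```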

The non-collocated BlueStore example creates four BlueStore OSDs, one per device. In this example, the traditional hard drives (`sda`, `sdb`, `sdc`, `sdd`) are used for object data, and the solid state drives (SSDs) (`/dev/nvme0n1`, `/dev/nvme1n1`) are used for the BlueStore databases and write-ahead logs. This configuration pairs the `/dev/sda` and `/dev/sdb` devices with the `/dev/nvme0n1` device, and pairs the `/dev/sdc` and `/dev/sdd` devices with the `/dev/nvme1n1` device.

Non-collocated - FileStore
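For FileStore, a non-collocated sketch would differ mainly in the object store type: the dedicated devices then hold journals rather than BlueStore databases and write-ahead logs. The device names are assumptions carried over from the BlueStore description.

```yaml
osd_objectstore: filestore
osd_scenario: non-collocated
devices:
  - /dev/sda
  - /dev/sdb
  - /dev/sdc
  - /dev/sdd
dedicated_devices:   # journal devices, one entry per data device
  - /dev/nvme0n1
  - /dev/nvme0n1
  - /dev/nvme1n1
  - /dev/nvme1n1
```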

LVM simple

    osd_objectstore: bluestore
    osd_scenario: lvm
    devices:
      - /dev/sda
      - /dev/sdb

or
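A sketch of the second simple form, mixing traditional hard drives and one SSD in `devices`. The device names follow the ones this section uses when describing the mixed case (`sda`, `sdb`, `nvme0n1`); the exact listing is an assumption.

```yaml
osd_objectstore: bluestore
osd_scenario: lvm
devices:
  - /dev/sda       # traditional hard drive, holds data
  - /dev/sdb       # traditional hard drive, holds data
  - /dev/nvme0n1   # SSD, used for the BlueStore database (block.db)
```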

With these simple configurations `ceph-ansible` uses batch mode (`ceph-volume lvm batch`) to create the OSDs.

In the first scenario, if the `devices` are traditional hard drives or SSDs, then one OSD per device is created.

In the second scenario, when there is a mix of traditional hard drives and SSDs, the data is placed on the traditional hard drives (`sda`, `sdb`) and the BlueStore database (`block.db`) is created as large as possible on the SSD (`nvme0n1`).

LVM advanced
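A minimal sketch of an advanced FileStore layout using `lvm_volumes`, with the keys that Table 3.2 lists (`data`, `data_vg`, `journal`, `journal_vg`). All volume group, logical volume, and partition names are hypothetical.

```yaml
osd_objectstore: filestore
osd_scenario: lvm
lvm_volumes:
  - data: data-lv1          # hypothetical LV name, not a full path
    data_vg: vg1            # volume group containing data-lv1
    journal: journal-lv1    # journal on a logical volume
    journal_vg: vg2         # volume group containing journal-lv1
  - data: data-lv2
    data_vg: vg1
    journal: /dev/sdc1      # journal on a partition, so no journal_vg
```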

or
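For BlueStore, a comparable sketch might place the database and write-ahead log on separate logical volumes. The `db`, `db_vg`, `wal`, and `wal_vg` keys are not described in Table 3.2; they are assumed here from the ceph-ansible sample files, and all names are hypothetical.

```yaml
osd_objectstore: bluestore
osd_scenario: lvm
lvm_volumes:
  - data: data-lv1
    data_vg: data-vg1
    db: db-lv1        # assumed key: logical volume for the BlueStore database
    db_vg: db-vg1
    wal: wal-lv1      # assumed key: logical volume for the write-ahead log
    wal_vg: wal-vg1
```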

With these advanced scenario examples, the volume groups and logical volumes must be created beforehand. They will not be created by `ceph-ansible`.

Note: If using all NVMe SSDs, set the `osd_scenario: lvm` and `osds_per_device: 4` options. For more information, see Configuring OSD Ansible settings for all NVMe Storage for Red Hat Enterprise Linux or Configuring OSD Ansible settings for all NVMe Storage for Ubuntu in the Red Hat Ceph Storage Installation Guides.

For additional details, see the comments in the `osds.yml` file.

Edit the Ansible inventory file located by default at `/etc/ansible/hosts`. Remember to comment out example hosts.

Add the Monitor nodes under the `[mons]` section:

    [mons]
    MONITOR_NODE_NAME1
    MONITOR_NODE_NAME2
    MONITOR_NODE_NAME3

Add OSD nodes under the `[osds]` section. If the nodes have sequential naming, consider using a range:

    [osds]
    OSD_NODE_NAME1[1:10]

Note: For OSDs in a new installation, the default object store format is BlueStore.

Optionally, use the `devices` and `dedicated_devices` options to specify devices that the OSD nodes will use. Use a comma-separated list to list multiple devices.

Syntax

    [osds]
    CEPH_NODE_NAME devices="['DEVICE_1', 'DEVICE_2']" dedicated_devices="['DEVICE_3', 'DEVICE_4']"

Example

    [osds]
    ceph-osd-01 devices="['/dev/sdc', '/dev/sdd']" dedicated_devices="['/dev/sda', '/dev/sdb']"
    ceph-osd-02 devices="['/dev/sdc', '/dev/sdd', '/dev/sde']" dedicated_devices="['/dev/sdf', '/dev/sdg']"

When specifying no devices, set the `osd_auto_discovery` option to `true` in the `osds.yml` file.

Note: Using the `devices` and `dedicated_devices` parameters is useful when OSDs use devices with different names or when one of the devices failed on one of the OSDs.

Optionally, if you want to use host-specific parameters, for all deployments, bare-metal or in containers, create host files in the `host_vars` directory to include any parameters specific to hosts.

Create a new file for each new Ceph OSD node added to the storage cluster, under the `/etc/ansible/host_vars/` directory:

Syntax

    touch /etc/ansible/host_vars/OSD_NODE_NAME

Example

    [root@admin ~]# touch /etc/ansible/host_vars/osd07

Update the file with any host-specific parameters. In bare-metal deployments, you can add the `devices:` and `dedicated_devices:` sections to the file.

Example
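A sketch of what a host file such as `/etc/ansible/host_vars/osd07` might contain for a bare-metal node. The device names are assumptions chosen for illustration.

```yaml
# /etc/ansible/host_vars/osd07 -- applies to this host only
devices:
  - /dev/sdc
  - /dev/sdd
dedicated_devices:   # one entry per entry in devices
  - /dev/sda
  - /dev/sdb
```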


Optionally, for all deployments, bare-metal or in containers, you can create a custom CRUSH hierarchy using `ansible-playbook`:

Set up your Ansible inventory file. Specify where you want the OSD hosts to be in the CRUSH map’s hierarchy by using the `osd_crush_location` parameter. You must specify at least two CRUSH bucket types to specify the location of the OSD, and one bucket `type` must be host. By default, these include `root`, `datacenter`, `room`, `row`, `pod`, `pdu`, `rack`, `chassis` and `host`.

Syntax

    [osds]
    CEPH_OSD_NAME osd_crush_location="{ 'root': 'ROOT_BUCKET', 'rack': 'RACK_BUCKET', 'pod': 'POD_BUCKET', 'host': 'CEPH_HOST_NAME' }"

Example

    [osds]
    ceph-osd-01 osd_crush_location="{ 'root': 'default', 'rack': 'rack1', 'pod': 'monpod', 'host': 'ceph-osd-01' }"

Set the `crush_rule_config` and `create_crush_tree` parameters to `True`, and create at least one CRUSH rule if you do not want to use the default CRUSH rules. The rule parameters differ depending on whether you are using HDD devices or SSD devices.
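A sketch of the rule settings, shown here for HDD devices. The `crush_rule_hdd` variable name and the `crush_rules` list are assumptions based on the ceph-ansible sample files; for SSD devices the same shape would apply with the rule name and `class` changed accordingly.

```yaml
crush_rule_config: True
crush_rule_hdd:            # assumed variable name
  name: HDD
  root: default
  type: host
  class: hdd               # use ssd for SSD-backed OSDs
  default: True
crush_rules:
  - "{{ crush_rule_hdd }}"
create_crush_tree: True
```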

Note: The default CRUSH rules fail if both `ssd` and `hdd` OSDs are not deployed because the default rules now include the `class` parameter, which must be defined.

Note: Additionally, add the custom CRUSH hierarchy to the OSD files in the `host_vars` directory as described in a step above to make this configuration work.

Create `pools`, with the created `crush_rules`, in the `group_vars/clients.yml` file.

Example
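A sketch of a pool definition in `group_vars/clients.yml` that uses a rule named `HDD`. The variable name `pool1`, the placement group counts, and the key names `rule_name` and `type` are assumptions based on the ceph-ansible sample file; verify them against `clients.yml.sample` for your version.

```yaml
copy_admin_key: True
user_config: True
pool1:
  name: "pool1"
  pg_num: 128          # assumed placement group count
  pgp_num: 128
  rule_name: "HDD"     # must match the name of a created CRUSH rule
  type: "replicated"
pools:
  - "{{ pool1 }}"
```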

View the tree.

    [root@mon ~]# ceph osd tree

Validate the pools.

    # for i in $(rados lspools);do echo "pool: $i"; ceph osd pool get $i crush_rule;done
    pool: pool1
    crush_rule: HDD

For all deployments, bare-metal or in containers, open the Ansible inventory file for editing, by default the `/etc/ansible/hosts` file. Comment out the example hosts.

Add the Ceph Manager (`ceph-mgr`) nodes under the `[mgrs]` section. Colocate the Ceph Manager daemon with Monitor nodes.

    [mgrs]
    <monitor-host-name>
    <monitor-host-name>
    <monitor-host-name>

As the Ansible user, ensure that Ansible can reach the Ceph hosts:

    [user@admin ~]$ ansible all -m ping

Add the following line to the `/etc/ansible/ansible.cfg` file:

    retry_files_save_path = ~/

As `root`, create the `/var/log/ansible/` directory and assign the appropriate permissions for the `ansible` user:

    [root@admin ~]# mkdir /var/log/ansible
    [root@admin ~]# chown ansible:ansible /var/log/ansible
    [root@admin ~]# chmod 755 /var/log/ansible

Edit the `/usr/share/ceph-ansible/ansible.cfg` file, updating the `log_path` value as follows:

    log_path = /var/log/ansible/ansible.log

As the Ansible user, change to the `/usr/share/ceph-ansible/` directory:

    [user@admin ~]$ cd /usr/share/ceph-ansible/

Run the `ceph-ansible` playbook:

    [user@admin ceph-ansible]$ ansible-playbook site.yml

Note: To increase the deployment speed, use the `--forks` option to `ansible-playbook`. By default, `ceph-ansible` sets forks to `20`. With this setting, up to twenty nodes will be installed at the same time. To install up to thirty nodes at a time, run `ansible-playbook --forks 30 PLAYBOOK FILE`. The resources on the admin node must be monitored to ensure they are not overused. If they are, lower the number passed to `--forks`.

Using the root account on a Monitor node, verify the status of the Ceph cluster:

    [root@monitor ~]# ceph health
    HEALTH_OK

Verify the cluster is functioning using `rados`.

From a monitor node, create a test pool with eight placement groups:

Syntax

    [root@monitor ~]# ceph osd pool create <pool-name> <pg-number>

Example

    [root@monitor ~]# ceph osd pool create test 8

Create a file called `hello-world.txt`:

Syntax

    [root@monitor ~]# vim <file-name>

Example

    [root@monitor ~]# vim hello-world.txt

Upload `hello-world.txt` to the test pool using the object name `hello-world`:

Syntax

    [root@monitor ~]# rados --pool <pool-name> put <object-name> <object-file>

Example

    [root@monitor ~]# rados --pool test put hello-world hello-world.txt

Download `hello-world` from the test pool as file name `fetch.txt`:

Syntax

    [root@monitor ~]# rados --pool <pool-name> get <object-name> <object-file>

Example

    [root@monitor ~]# rados --pool test get hello-world fetch.txt

Check the contents of `fetch.txt`:

    [root@monitor ~]# cat fetch.txt

The output should be:

    "Hello World!"

Note: In addition to verifying the cluster status, you can use the `ceph-medic` utility to diagnose the Ceph Storage Cluster overall. See the Using `ceph-medic` to diagnose a Ceph Storage Cluster chapter in the Red Hat Ceph Storage 3 Administration Guide.

3.3. Configuring OSD Ansible settings for all NVMe storage

To optimize performance when using only non-volatile memory express (NVMe) devices for storage, configure four OSDs on each NVMe device. Normally only one OSD is configured per device, which will underutilize the throughput of an NVMe device.

If you mix SSDs and HDDs, then SSDs will be used for either journals or block.db, not OSDs.

In testing, configuring four OSDs on each NVMe device was found to provide optimal performance. It is recommended to set osds_per_device: 4, but it is not required. Other values may provide better performance in your environment.

Prerequisites

- Satisfying all software and hardware requirements for a Ceph cluster.

Procedure

Set `osd_scenario: lvm` and `osds_per_device: 4` in `group_vars/osds.yml`:

    osd_scenario: lvm
    osds_per_device: 4

List the NVMe devices under `devices`:

    devices:
      - /dev/nvme0n1
      - /dev/nvme1n1
      - /dev/nvme2n1
      - /dev/nvme3n1

The settings in `group_vars/osds.yml` will look similar to this example:

    osd_scenario: lvm
    osds_per_device: 4
    devices:
      - /dev/nvme0n1
      - /dev/nvme1n1
      - /dev/nvme2n1
      - /dev/nvme3n1

You must use `devices` with this configuration, not `lvm_volumes`. This is because `lvm_volumes` is generally used with pre-created logical volumes, whereas `osds_per_device` implies automatic logical volume creation by Ceph.

3.4. Installing Metadata Servers

Use the Ansible automation application to install a Ceph Metadata Server (MDS). Metadata Server daemons are necessary for deploying a Ceph File System.

Prerequisites

- A working Red Hat Ceph Storage cluster.

Procedure

Perform the following steps on the Ansible administration node.

Add a new section `[mdss]` to the `/etc/ansible/hosts` file:

    [mdss]
    hostname
    hostname
    hostname

Replace hostname with the host names of the nodes where you want to install the Ceph Metadata Servers.

Navigate to the `/usr/share/ceph-ansible` directory:

    [root@admin ~]# cd /usr/share/ceph-ansible

Optional. Change the default variables.

Create a copy of the `group_vars/mdss.yml.sample` file named `mdss.yml`:

    [root@admin ceph-ansible]# cp group_vars/mdss.yml.sample group_vars/mdss.yml

Optionally, edit parameters in `mdss.yml`. See `mdss.yml` for details.

As the Ansible user, run the Ansible playbook:

    [user@admin ceph-ansible]$ ansible-playbook site.yml --limit mdss

After installing Metadata Servers, configure them. For details, see the Configuring Metadata Server Daemons chapter in the Ceph File System Guide for Red Hat Ceph Storage 3.

Additional Resources

- The Ceph File System Guide for Red Hat Ceph Storage 3

- Understanding the `limit` option

3.5. Installing the Ceph Client Role

The ceph-ansible utility provides the ceph-client role that copies the Ceph configuration file and the administration keyring to nodes. In addition, you can use this role to create custom pools and clients.

Prerequisites

- A running Ceph storage cluster, preferably in the `active + clean` state.

- Perform the tasks listed in Chapter 2, Requirements for Installing Red Hat Ceph Storage.

Procedure

Perform the following tasks on the Ansible administration node.

Add a new section `[clients]` to the `/etc/ansible/hosts` file:

    [clients]
    <client-hostname>

Replace `<client-hostname>` with the host name of the node where you want to install the `ceph-client` role.

Navigate to the `/usr/share/ceph-ansible` directory:

    [root@admin ~]# cd /usr/share/ceph-ansible

Create a new copy of the `clients.yml.sample` file named `clients.yml`:

    [root@admin ceph-ansible]# cp group_vars/clients.yml.sample group_vars/clients.yml

Open the `group_vars/clients.yml` file, and uncomment the following lines:

    keys:
      - { name: client.test, caps: { mon: "allow r", osd: "allow class-read object_prefix rbd_children, allow rwx pool=test" }, mode: "{{ ceph_keyring_permissions }}" }

Replace `client.test` with the real client name, and add the client key to the client definition line, for example:

    key: "ADD-KEYRING-HERE=="

Now the whole line example would look similar to this:

    - { name: client.test, key: "AQAin8tUMICVFBAALRHNrV0Z4MXupRw4v9JQ6Q==", caps: { mon: "allow r", osd: "allow class-read object_prefix rbd_children, allow rwx pool=test" }, mode: "{{ ceph_keyring_permissions }}" }

Note: The `ceph-authtool --gen-print-key` command can generate a new client key.

Optionally, instruct `ceph-client` to create pools and clients.

Update `clients.yml`.

- Uncomment the `user_config` setting and set it to `true`.

- Uncomment the `pools` and `keys` sections and update them as required. You can define custom pools and client names together with the `cephx` capabilities.

Add the `osd_pool_default_pg_num` setting to the `ceph_conf_overrides` section in the `all.yml` file:

    ceph_conf_overrides:
      global:
        osd_pool_default_pg_num: <number>

Replace `<number>` with the default number of placement groups.

Run the Ansible playbook:

    [user@admin ceph-ansible]$ ansible-playbook site.yml --limit clients

Additional Resources

3.6. Installing the Ceph Object Gateway

The Ceph Object Gateway, also known as the RADOS gateway, is an object storage interface built on top of the librados API to provide applications with a RESTful gateway to Ceph storage clusters.

Prerequisites

- A running Red Hat Ceph Storage cluster, preferably in the `active + clean` state.

- On the Ceph Object Gateway node, perform the tasks listed in Chapter 2, Requirements for Installing Red Hat Ceph Storage.

Procedure

Perform the following tasks on the Ansible administration node.

Add gateway hosts to the `/etc/ansible/hosts` file under the `[rgws]` section to identify their roles to Ansible. If the hosts have sequential naming, use a range, for example:

    [rgws]
    <rgw_host_name_1>
    <rgw_host_name_2>
    <rgw_host_name[3:10]>

Navigate to the Ansible configuration directory:

    [root@ansible ~]# cd /usr/share/ceph-ansible

Create the `rgws.yml` file from the sample file:

    [root@ansible ~]# cp group_vars/rgws.yml.sample group_vars/rgws.yml

Open and edit the `group_vars/rgws.yml` file. To copy the administrator key to the Ceph Object Gateway node, uncomment the `copy_admin_key` option:

    copy_admin_key: true

The `rgws.yml` file may specify a port other than the default port `7480`. For example:

    ceph_rgw_civetweb_port: 80

The `all.yml` file MUST specify a `radosgw_interface`. For example:

    radosgw_interface: eth0

Specifying the interface prevents Civetweb from binding to the same IP address as another Civetweb instance when running multiple instances on the same host.

Generally, to change default settings, uncomment the settings in the `rgws.yml` file, and make changes accordingly. To make additional changes to settings that are not in the `rgws.yml` file, use `ceph_conf_overrides:` in the `all.yml` file. For example, set `rgw_dns_name:` to the host of the DNS server, and ensure that the cluster’s DNS server is configured for wildcards to enable S3 subdomains.

    ceph_conf_overrides:
      client.rgw.rgw1:
        rgw_dns_name: <host_name>
        rgw_override_bucket_index_max_shards: 16
        rgw_bucket_default_quota_max_objects: 1638400

For advanced configuration details, see the Red Hat Ceph Storage 3 Ceph Object Gateway for Production guide. Advanced topics include:

- Configuring Ansible Groups

- Developing Storage Strategies. See the Creating the Root Pool, Creating System Pools, and Creating Data Placement Strategies sections for additional details on how to create and configure the pools.

- See Bucket Sharding for configuration details on bucket sharding.

Uncomment the `radosgw_interface` parameter in the `group_vars/all.yml` file.

    radosgw_interface: <interface>

Replace:

- `<interface>` with the interface that the Ceph Object Gateway nodes listen to

For additional details, see the `all.yml` file.

Run the Ansible playbook:

    [user@admin ceph-ansible]$ ansible-playbook site.yml --limit rgws

Ansible ensures that each Ceph Object Gateway is running.

For a single site configuration, add Ceph Object Gateways to the Ansible configuration.

For multi-site deployments, you should have an Ansible configuration for each zone. That is, Ansible will create a Ceph storage cluster and gateway instances for that zone.

After installation for a multi-site cluster is complete, proceed to the Multi-site chapter in the Object Gateway Guide for Red Hat Enterprise Linux for details on configuring a cluster for multi-site.

Additional Resources

3.6.1. Configuring a multisite Ceph Object Gateway

Ansible will configure the realm, zonegroup, along with the master and secondary zones for a Ceph Object Gateway in a multisite environment.

Prerequisites

- Two running Red Hat Ceph Storage clusters.

- On the Ceph Object Gateway node, perform the tasks listed in the Requirements for Installing Red Hat Ceph Storage found in the Red Hat Ceph Storage Installation Guide.

- Install and configure one Ceph Object Gateway per storage cluster.

Procedure

Perform the following steps on the Ansible node for the primary storage cluster:

Generate the system keys and capture their output in the `multi-site-keys.txt` file:

    [root@ansible ~]# echo system_access_key: $(cat /dev/urandom | tr -dc 'a-zA-Z0-9' | fold -w 20 | head -n 1) > multi-site-keys.txt
    [root@ansible ~]# echo system_secret_key: $(cat /dev/urandom | tr -dc 'a-zA-Z0-9' | fold -w 40 | head -n 1) >> multi-site-keys.txt

Navigate to the Ansible configuration directory, `/usr/share/ceph-ansible`:

    [root@ansible ~]# cd /usr/share/ceph-ansible

Open and edit the `group_vars/all.yml` file. Enable multisite support by adding the following options, along with updating the `$ZONE_NAME`, `$ZONE_GROUP_NAME`, `$REALM_NAME`, `$ACCESS_KEY`, and `$SECRET_KEY` values accordingly.

When more than one Ceph Object Gateway is in the master zone, then the `rgw_multisite_endpoints` option needs to be set. The value for the `rgw_multisite_endpoints` option is a comma-separated list, with no spaces.

Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe

ansible_fqdndomain name must be resolvable from the secondary storage cluster.NoteWhen adding a new Object Gateway, append it to the end of the

rgw_multisite_endpointslist with the endpoint URL of the new Object Gateway before running the Ansible playbook.Run the Ansible playbook:

ansible-playbook site.yml --limit rgws

[user@ansible ceph-ansible]$ ansible-playbook site.yml --limit rgwsCopy to Clipboard Copied! Toggle word wrap Toggle overflow Restart the Ceph Object Gateway daemon:

systemctl restart ceph-radosgw@rgw.`hostname -s`

[root@rgw ~]# systemctl restart ceph-radosgw@rgw.`hostname -s`Copy to Clipboard Copied! Toggle word wrap Toggle overflow
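The rgw_multisite_endpoints value described above is a comma-separated list with no spaces. As a minimal sketch, such a value could be assembled in the shell as follows. The join_endpoints helper and the example.com URLs are hypothetical and are not part of ceph-ansible; substitute the real endpoint URLs of the gateways in the zone.

```shell
# Hypothetical helper (not part of ceph-ansible): join all arguments
# with commas and no spaces, the format rgw_multisite_endpoints expects.
join_endpoints() {
    # "$*" joins the arguments with the first character of IFS;
    # the subshell keeps the IFS change local to this call.
    (IFS=','; echo "$*")
}

# Hypothetical gateway URLs, for illustration only.
join_endpoints http://rgw1.example.com:8080 http://rgw2.example.com:8080
# prints http://rgw1.example.com:8080,http://rgw2.example.com:8080
```

The printed string can then be pasted as the value of the rgw_multisite_endpoints option in group_vars/all.yml.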

Perform the following steps on the Ansible node for the secondary storage cluster:

Navigate to the Ansible configuration directory, /usr/share/ceph-ansible:

[root@ansible ~]# cd /usr/share/ceph-ansible

Open and edit the group_vars/all.yml file. Enable multisite support by adding the following options, along with updating the $ZONE_NAME, $ZONE_GROUP_NAME, $REALM_NAME, $ACCESS_KEY, and $SECRET_KEY values accordingly. The rgw_zone_user, system_access_key, and system_secret_key must be the same value as used in the master zone configuration. The rgw_pullhost option must be the Ceph Object Gateway for the master zone.

When more than one Ceph Object Gateway is in the secondary zone, then the rgw_multisite_endpoints option needs to be set. The value for the rgw_multisite_endpoints option is a comma-separated list, with no spaces.

Example

Note: The ansible_fqdn domain name must be resolvable from the primary storage cluster.

Note: When adding a new Object Gateway, append it to the end of the rgw_multisite_endpoints list with the endpoint URL of the new Object Gateway before running the Ansible playbook.

Run the Ansible playbook:

[user@ansible ceph-ansible]$ ansible-playbook site.yml --limit rgws

Restart the Ceph Object Gateway daemon:

[root@rgw ~]# systemctl restart ceph-radosgw@rgw.`hostname -s`

- After running the Ansible playbook on the master and secondary storage clusters, you will have a running active-active Ceph Object Gateway configuration.

Verify the multisite Ceph Object Gateway configuration:

- The Ceph Monitor and Object Gateway nodes at each site, primary and secondary, must be able to curl the other site.

- Run the radosgw-admin sync status command on both sites.
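The connectivity check in the verification steps can be scripted. The following is a minimal sketch, not an official procedure: check_endpoint is a hypothetical helper, and the URL you pass to it is an assumption that must be replaced with an actual gateway endpoint at the other site. It relies only on standard curl options.

```shell
# Hypothetical helper: report whether a gateway endpoint at the other
# site answers over HTTP. Not part of Ceph or ceph-ansible.
check_endpoint() {
    url="$1"
    # -s: silent; -o /dev/null: discard the response body;
    # -w '%{http_code}': print only the HTTP status code;
    # --max-time 10: give up after ten seconds.
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$url")
    # curl reports 000 when no HTTP response was received.
    if [ -n "$code" ] && [ "$code" != "000" ]; then
        echo "reachable: $url (HTTP $code)"
    else
        echo "unreachable: $url"
        return 1
    fi
}
```

For example, running check_endpoint with the other site's endpoint URL from a Monitor or Object Gateway node, followed by radosgw-admin sync status, covers both verification steps on that node.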

3.7. Installing the NFS-Ganesha Gateway

The Ceph NFS Ganesha Gateway is an NFS interface built on top of the Ceph Object Gateway to provide applications with a POSIX filesystem interface to the Ceph Object Gateway for migrating files within filesystems to Ceph Object Storage.

Prerequisites

- A running Ceph storage cluster, preferably in the active + clean state.

- At least one node running a Ceph Object Gateway.

- Perform the Before You Start procedure.

Procedure

Perform the following tasks on the Ansible administration node.

Create the nfss.yml file from the sample file:

[root@ansible ~]# cd /usr/share/ceph-ansible/group_vars
[root@ansible ~]# cp nfss.yml.sample nfss.yml

Add gateway hosts to the /etc/ansible/hosts file under an [nfss] group to identify their group membership to Ansible. If the hosts have sequential naming, use a range. For example:

[nfss]
<nfs_host_name_1>
<nfs_host_name_2>
<nfs_host_name[3:10]>

Navigate to the Ansible configuration directory, /usr/share/ceph-ansible:

[root@ansible ~]# cd /usr/share/ceph-ansible

To copy the administrator key to the Ceph Object Gateway node, uncomment the copy_admin_key setting in the /usr/share/ceph-ansible/group_vars/nfss.yml file:

copy_admin_key: true

Configure the FSAL (File System Abstraction Layer) sections of the /usr/share/ceph-ansible/group_vars/nfss.yml file. Provide an ID, S3 user ID, S3 access key, and secret. For NFSv4, it should look something like this:

Warning: Access and secret keys are optional, and can be generated.

Run the Ansible playbook:

[user@admin ceph-ansible]$ ansible-playbook site-docker.yml --limit nfss
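The copy_admin_key step above can be done by hand in an editor. As a rough sketch, it could also be scripted with sed. The enable_copy_admin_key helper is hypothetical, and the sketch assumes the sample file carries the setting as a commented line of the form "#copy_admin_key: false"; check the file before relying on that.

```shell
# Hypothetical helper: activate copy_admin_key in a group_vars file.
# Assumes the setting exists as a commented line such as
# "#copy_admin_key: false"; a file without such a line is left unchanged.
enable_copy_admin_key() {
    file="$1"
    # -i.bak edits in place and keeps a backup copy with a .bak suffix.
    sed -E -i.bak 's/^#[[:space:]]*copy_admin_key:.*/copy_admin_key: true/' "$file"
}
```

Calling enable_copy_admin_key with the path to nfss.yml and then running grep copy_admin_key on the file shows whether the line was rewritten.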


3.8. Understanding the limit option

This section contains information about the Ansible --limit option.

Ansible supports the --limit option, which enables you to run the site, site-docker, and rolling_update Ansible playbooks against a particular section of the inventory file.

$ ansible-playbook site.yml|rolling_update.yml|site-docker.yml --limit osds|rgws|clients|mdss|nfss|iscsigws

For example, to redeploy only OSDs on bare metal, run the following command as the Ansible user:

$ ansible-playbook /usr/share/ceph-ansible/site.yml --limit osds

If you colocate Ceph components on one node, Ansible applies a playbook to all components on the node even though only one component type was specified with the --limit option. For example, if you run the rolling_update playbook with the --limit osds option on a node that contains OSDs and Metadata Servers (MDS), Ansible upgrades both components, OSDs and MDSs.
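Because a limited run still touches every component on the matched nodes, it can help to preview which hosts a given --limit value selects before running the playbook. The following is a minimal sketch using the standard ansible-playbook --list-hosts option, which lists matching hosts without executing tasks. The preview_limit helper and the ANSIBLE_PLAYBOOK variable are hypothetical conveniences, not part of ceph-ansible.

```shell
# Command to invoke; overridable so the sketch can be dry-run,
# for example with ANSIBLE_PLAYBOOK=echo.
ANSIBLE_PLAYBOOK="${ANSIBLE_PLAYBOOK:-ansible-playbook}"

# Hypothetical helper: list the hosts a limited run would match,
# without executing any tasks on them.
preview_limit() {
    playbook="$1"   # for example /usr/share/ceph-ansible/site.yml
    group="$2"      # for example osds
    "$ANSIBLE_PLAYBOOK" "$playbook" --limit "$group" --list-hosts
}
```

Running preview_limit /usr/share/ceph-ansible/site.yml osds as the Ansible user prints the matching hosts; any other Ceph components colocated on those hosts are also affected by the real run.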