
31.4. Performance Testing Procedures

31.4.1. Phase 1: Effects of I/O Depth, Fixed 4 KB Blocks

- Perform four-corner testing with a fixed 4 KB block size at I/O depths of 1, 8, 16, 32, 64, 128, 256, 512, and 1024:

- Sequential 100% reads, at fixed 4 KB *

- Sequential 100% writes, at fixed 4 KB

- Random 100% reads, at fixed 4 KB *

- Random 100% writes, at fixed 4 KB **

* Prefill any areas that may be read during the read test by performing a write fio job first.

** Re-create the VDO volume after 4 KB random write I/O runs.

Example shell test input stimulus (write):

- Record throughput and latency at each data point, and then graph.

- Repeat the test to complete four-corner testing:

--rw=randwrite, --rw=read, and --rw=randread.
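The following bash sketch shows one way to script the complete Phase 1 matrix with fio and the `vdo` manager. It covers four access patterns at every listed depth, prefills the volume before the read patterns, and re-creates the volume before each write data point. The volume name `vdo0`, the backing device `/dev/sdX`, and the fio options (`libaio`, a 100 GB test area, a 300-second runtime, JSON output files) are assumptions, not values from this guide. By default the script only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Phase 1 sketch: four-corner fio sweep over I/O depth at a fixed 4 KB block size.
# ASSUMPTIONS (not from the guide): volume name, backing device, fio engine,
# runtime, size, and output file names are placeholders to adapt.
# Dry run by default: commands are printed. Set RUN= (empty) to execute them,
# which DESTROYS all data on $BACKING_DEV.
set -euo pipefail

VDO_NAME=${VDO_NAME:-vdo0}             # hypothetical VDO volume name
BACKING_DEV=${BACKING_DEV:-/dev/sdX}   # hypothetical backing block device
VDO_DEV=/dev/mapper/$VDO_NAME
RUN=${RUN-echo}                        # "echo" prints commands instead of running them
DEPTHS=(1 8 16 32 64 128 256 512 1024)
PATTERNS=(write randwrite read randread)

# Start from an empty VDO volume (the first "remove" may fail if none exists yet).
recreate_vdo() {
  $RUN vdo remove --name="$VDO_NAME" || true
  $RUN vdo create --name="$VDO_NAME" --device="$BACKING_DEV"
}

# Write the whole test area once so that read jobs only read written blocks.
prefill() {
  $RUN fio --name=prefill --filename="$VDO_DEV" --rw=write --bs=1m \
    --iodepth=32 --ioengine=libaio --direct=1 --size=100g
}

# One data point: $1 = fio --rw pattern, $2 = I/O depth; results go to a JSON file.
run_point() {
  local rw=$1 depth=$2
  $RUN fio --name="vdo-$rw-qd$depth" --filename="$VDO_DEV" \
    --rw="$rw" --bs=4k --iodepth="$depth" \
    --ioengine=libaio --direct=1 --numjobs=1 --size=100g --runtime=300 \
    --output-format=json --output="phase1-$rw-qd$depth.json"
}

main() {
  local rw depth
  for rw in "${PATTERNS[@]}"; do
    if [[ $rw == read || $rw == randread ]]; then
      recreate_vdo    # fresh volume, then prefill once for all read depths
      prefill
    fi
    for depth in "${DEPTHS[@]}"; do
      if [[ $rw == write || $rw == randwrite ]]; then
        recreate_vdo  # each write data point starts on an empty volume
      fi
      run_point "$rw" "$depth"
    done
  done
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  main
fi
```

Review the printed commands first. Then run with `RUN=` and a real `BACKING_DEV` only on a scratch device. Writing one JSON file per data point keeps throughput and latency for each depth separate, which makes them easy to graph.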

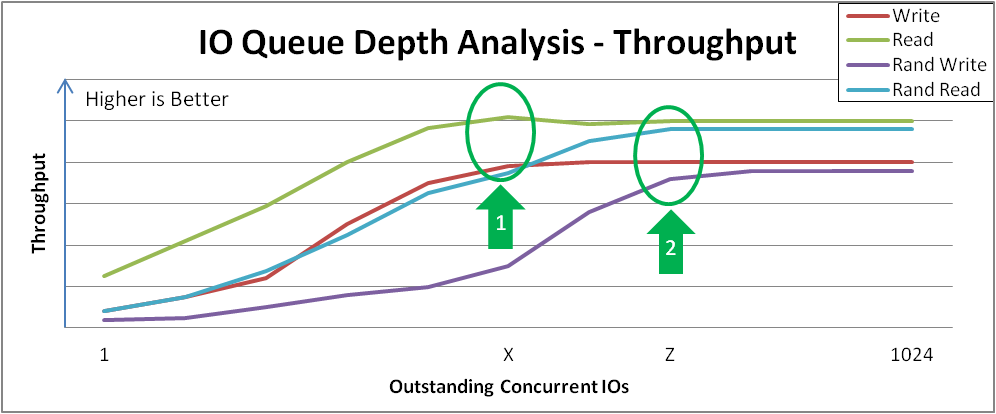

- This particular appliance does not benefit from sequential 4 KB I/O depths greater than X. Beyond that depth, bandwidth gains diminish, and average request latency increases linearly (1:1) with each additional I/O request.

- This particular appliance does not benefit from random 4 KB I/O depths greater than Z. Beyond that depth, bandwidth gains diminish, and average request latency increases linearly (1:1) with each additional I/O request.

Figure 31.1. I/O Depth Analysis
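You can also locate a knee such as X or Z directly from the measurements instead of reading it off the graph. A small helper can scan the bandwidth measured at each depth and stop at the first step whose relative gain falls below a cutoff. The sketch below assumes you have already extracted "depth,bandwidth" rows from the fio results. The function name `knee_depth` and the 5% default cutoff are hypothetical choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the I/O depth after which the next tested depth adds
# less than a relative bandwidth gain of $1 (default 0.05, i.e. 5%, an assumed cutoff).
# Reads "depth,bandwidth" CSV rows on stdin, sorted by increasing depth;
# any bandwidth unit works as long as it is the same on every row.
knee_depth() {
  local threshold=${1:-0.05}
  awk -F, -v t="$threshold" '
    # Stop at the first step whose relative bandwidth gain falls below the cutoff.
    NR > 1 && prev_bw > 0 && ($2 - prev_bw) / prev_bw < t { print prev_depth; found = 1; exit }
    { prev_depth = $1; prev_bw = $2 }
    # No plateau found: report the largest depth tested.
    END { if (!found) print prev_depth }
  '
}
```

For example, `knee_depth 0.05 < randwrite-depths.csv` (a hypothetical file) prints the last depth that still paid off. A relative cutoff makes the same helper usable for both the sequential and random curves, even though their absolute bandwidths differ.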

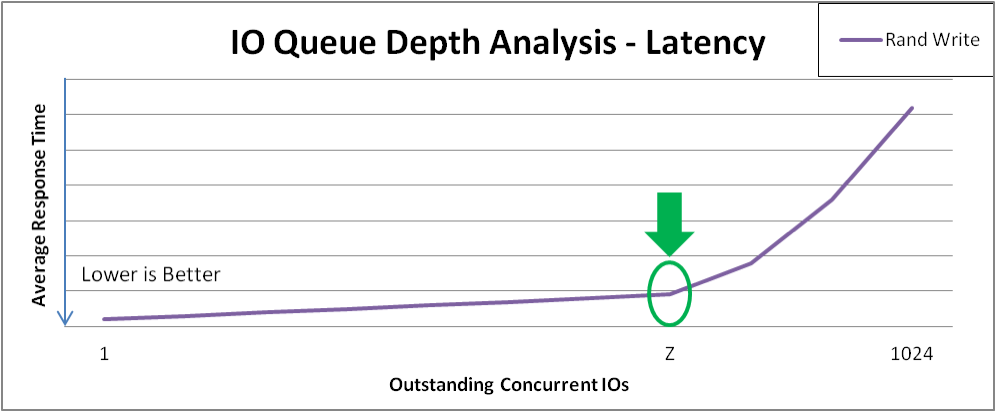

Figure 31.2. Latency Response of Increasing I/O for Random Writes
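The linear growth of latency past the saturation point matches Little's law from queueing theory. This is a general result, not a measurement from this appliance. Assume fio keeps all $N$ requests of the configured I/O depth outstanding, with device throughput $X$ in IOPS. The mean request latency $W$ is then:

$$
W = \frac{N}{X}
$$

Once throughput has saturated at $X = X_{\max}$, every additional outstanding request adds a constant amount of latency:

$$
W(N+1) - W(N) = \frac{N+1}{X_{\max}} - \frac{N}{X_{\max}} = \frac{1}{X_{\max}}
$$

Consider a hypothetical saturation point of $X_{\max} = 100\,000$ IOPS. Doubling the depth then doubles the latency:

$$
W(256) = \frac{256}{100\,000\ \text{IOPS}} = 2.56\ \text{ms}, \qquad W(512) = \frac{512}{100\,000\ \text{IOPS}} = 5.12\ \text{ms}
$$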

31.4.2. Phase 2: Effects of I/O Request Size

- Perform four-corner testing at a fixed I/O depth, varying the block size in powers of 2 from 8 KB to 1 MB. Remember to prefill any areas to be read and to re-create volumes between tests.

- Set the I/O depth to the value determined in Section 31.4.1, “Phase 1: Effects of I/O Depth, Fixed 4 KB Blocks”.

Example test input stimulus (write):

- Record throughput and latency at each data point, and then graph.

- Repeat the test to complete four-corner testing:

--rw=randwrite, --rw=read, and --rw=randread.
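A request-size sweep can be scripted the same way. The bash sketch below takes the I/O depth chosen in Phase 1 as its only argument. For every access pattern and block size from 8 KB to 1 MB, it re-creates the volume, prefills it before read patterns, and runs fio. The volume name, backing device, and fio options are assumptions, not values from this guide. Commands are only printed unless `RUN` is set to empty.

```shell
#!/usr/bin/env bash
# Phase 2 sketch: four-corner fio sweep over request size at a fixed I/O depth.
# ASSUMPTIONS: volume name, backing device, and fio options are placeholders;
# pass the I/O depth chosen in Phase 1 as the only argument.
# Dry run by default; RUN= (empty) executes and DESTROYS data on $BACKING_DEV.
set -euo pipefail

VDO_NAME=${VDO_NAME:-vdo0}             # hypothetical VDO volume name
BACKING_DEV=${BACKING_DEV:-/dev/sdX}   # hypothetical backing block device
VDO_DEV=/dev/mapper/$VDO_NAME
RUN=${RUN-echo}
SIZES=(8k 16k 32k 64k 128k 256k 512k 1m)   # powers of 2 from 8 KB to 1 MB

phase2_sweep() {
  local depth=$1 rw bs
  for rw in write randwrite read randread; do
    for bs in "${SIZES[@]}"; do
      # Re-create the volume between tests.
      $RUN vdo remove --name="$VDO_NAME" || true
      $RUN vdo create --name="$VDO_NAME" --device="$BACKING_DEV"
      if [[ $rw == read || $rw == randread ]]; then
        # Prefill the area the read job will touch.
        $RUN fio --name=prefill --filename="$VDO_DEV" --rw=write --bs=1m \
          --iodepth=32 --ioengine=libaio --direct=1 --size=100g
      fi
      $RUN fio --name="vdo-$rw-$bs" --filename="$VDO_DEV" \
        --rw="$rw" --bs="$bs" --iodepth="$depth" \
        --ioengine=libaio --direct=1 --numjobs=1 --size=100g --runtime=300 \
        --output-format=json --output="phase2-$rw-$bs.json"
    done
  done
}

# Only run when executed directly with exactly one argument (the I/O depth).
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -eq 1 ]]; then
  phase2_sweep "$1"
fi
```

Invoke it as `./phase2.sh <depth from Phase 1>` (hypothetical file name). Re-creating and prefilling before every read data point is slow. However, it keeps each measurement independent of the previous one, as the procedure requires.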

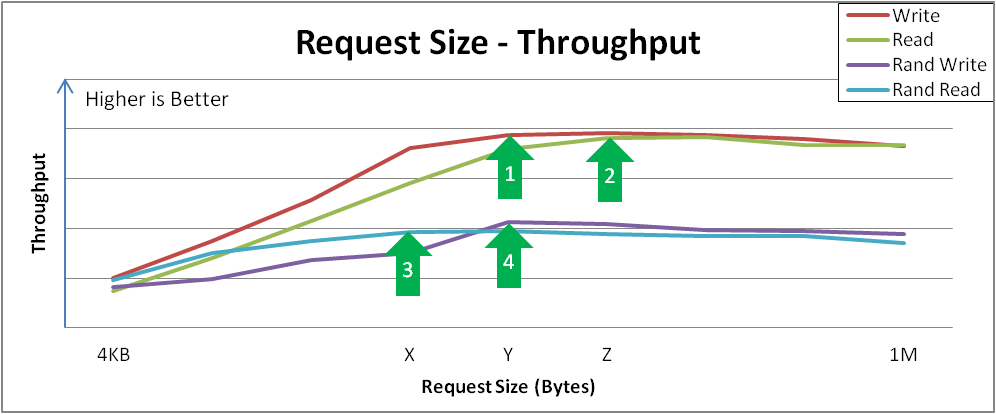

- Sequential writes reach peak throughput at request size Y. This curve shows how applications that are configurable for, or naturally dominated by, particular request sizes may perceive performance. Larger request sizes often provide more throughput; 4 KB I/Os may still benefit from being merged into larger requests.

- Sequential reads reach a similar peak throughput at point Z. Beyond these peaks, the overall latency before each I/O completes keeps increasing with no additional throughput, so it would be wise to tune the device not to accept I/Os larger than this size.

- Random reads achieve peak throughput at point X. Some devices achieve near-sequential throughput for random accesses with large request sizes, while others suffer a larger penalty as access departs from purely sequential.

- Random writes achieve peak throughput at point Y. Random writes involve the most interaction with a deduplication device, and VDO achieves high performance especially when request sizes, I/O depths, or both are large.

Figure 31.3. Request Size vs. Throughput Analysis and Key Inflection Points
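The helpers below are one way to act on these inflection points. The first picks the request size with the highest measured throughput. The second approximates "not accepting larger I/Os" by lowering the block layer's `max_sectors_kb` limit, which splits larger requests rather than rejecting them. That substitution is an assumption, not this guide's stated method. The function names are hypothetical, and the input is assumed to be "size_kb,throughput" rows extracted from the Phase 2 results. The sysfs write only happens when `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the Phase 2 results.

# Print the request size (first CSV column, in KB) with the highest throughput
# (second column). Ties keep the earlier row. Input: "size_kb,throughput" rows.
peak_size_kb() {
  awk -F, 'NR == 1 || $2 > best { best = $2; size = $1 } END { print size }'
}

# Cap the largest request the block layer sends to device $1 (its name under
# /sys/block, e.g. the dm-N node behind /dev/mapper/<volume>) at $2 KB.
# Larger I/Os are split rather than rejected. The kernel refuses values above
# max_hw_sectors_kb. Dry run unless DRY_RUN=0; writing requires root.
cap_request_size() {
  local attr=/sys/block/$1/queue/max_sectors_kb kb=$2
  if [[ ${DRY_RUN:-1} == 1 ]]; then
    echo "would write $kb to $attr"
  else
    echo "$kb" > "$attr"
  fi
}
```

For example, `cap_request_size dm-0 "$(peak_size_kb < seqread-sizes.csv)"` uses a hypothetical device name and file. The setting is not persistent across reboots, so re-measure after applying it to confirm that latency beyond the peak actually improves.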

31.4.3. Phase 3: Effects of Mixing Read & Write I/Os

- Perform four-corner testing at a fixed I/O depth, varying the block size in powers of 2 from 8 KB to 256 KB and the read percentage in 10% increments, starting at 0%. Remember to prefill any areas to be read and to re-create volumes between tests.

- Set the I/O depth to the value determined in Section 31.4.1, “Phase 1: Effects of I/O Depth, Fixed 4 KB Blocks”.

Example test input stimulus (read/write mix):

- Record throughput and latency at each data point, and then graph.
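For the mixed workload, fio's `--rw=rw` (sequential mix) and `--rw=randrw` (random mix) modes combine with `--rwmixread` to set the read percentage. The bash sketch below uses these two modes as the sequential and random corners, which is an interpretation rather than the guide's stated setup. It sweeps block sizes from 8 KB to 256 KB and read percentages from 0% to 100% in steps of 10. The volume name, backing device, and remaining fio options are assumptions. Commands are only printed unless `RUN` is set to empty.

```shell
#!/usr/bin/env bash
# Phase 3 sketch: mixed read/write fio sweep at a fixed I/O depth.
# ASSUMPTIONS: "rw" (sequential mix) and "randrw" (random mix) stand in for the
# sequential and random corners; names and fio options are placeholders.
# Dry run by default; RUN= (empty) executes and DESTROYS data on $BACKING_DEV.
set -euo pipefail

VDO_NAME=${VDO_NAME:-vdo0}             # hypothetical VDO volume name
BACKING_DEV=${BACKING_DEV:-/dev/sdX}   # hypothetical backing block device
VDO_DEV=/dev/mapper/$VDO_NAME
RUN=${RUN-echo}
SIZES=(8k 16k 32k 64k 128k 256k)       # powers of 2 from 8 KB to 256 KB

phase3_sweep() {
  local depth=$1 rw bs pct
  for rw in rw randrw; do
    for bs in "${SIZES[@]}"; do
      for ((pct = 0; pct <= 100; pct += 10)); do   # read percentage
        # Re-create the volume between tests.
        $RUN vdo remove --name="$VDO_NAME" || true
        $RUN vdo create --name="$VDO_NAME" --device="$BACKING_DEV"
        if ((pct > 0)); then
          # Any job that reads needs its area prefilled first.
          $RUN fio --name=prefill --filename="$VDO_DEV" --rw=write --bs=1m \
            --iodepth=32 --ioengine=libaio --direct=1 --size=100g
        fi
        $RUN fio --name="vdo-$rw-$bs-r$pct" --filename="$VDO_DEV" \
          --rw="$rw" --rwmixread="$pct" --bs="$bs" --iodepth="$depth" \
          --ioengine=libaio --direct=1 --numjobs=1 --size=100g --runtime=300 \
          --output-format=json --output="phase3-$rw-$bs-r$pct.json"
      done
    done
  done
}

# Only run when executed directly with exactly one argument (the I/O depth).
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -eq 1 ]]; then
  phase3_sweep "$1"
fi
```

The 0% point is a pure write run, so it skips the prefill. Every other point reads and therefore starts from a prefilled, freshly created volume.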

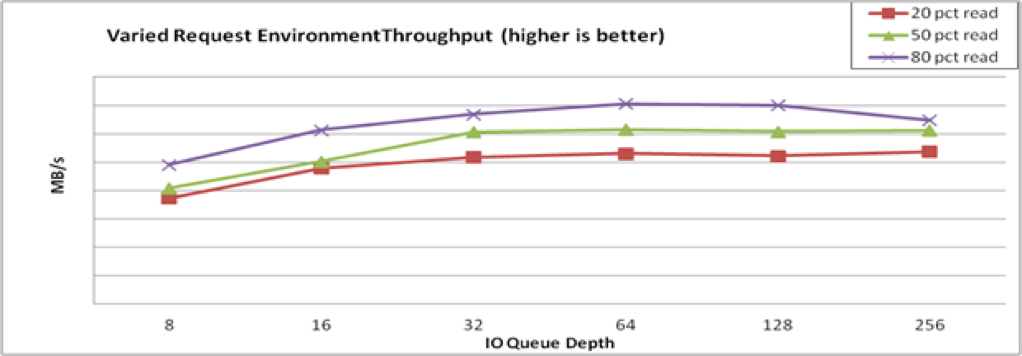

Figure 31.4. Performance Is Consistent across Varying Read/Write Mixes

31.4.4. Phase 4: Application Environments

Figure 31.5. Mixed Environment Performance