Chapter 18. Configuring network settings by using RHEL system roles

By using the network RHEL system role, you can automate network-related configuration and management tasks.

18.1. Configuring an Ethernet connection with a static IP address by using the network RHEL system role with an interface name

You can use the network RHEL system role to configure an Ethernet connection with static IP addresses, gateways, and DNS settings, and assign them to a specified interface name.

To connect a Red Hat Enterprise Linux host to an Ethernet network, create a NetworkManager connection profile for the network device. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

Typically, administrators want to reuse a playbook and not maintain individual playbooks for each host to which Ansible should assign static IP addresses. In this case, you can use variables in the playbook and maintain the settings in the inventory. As a result, you need only one playbook to dynamically assign individual settings to multiple hosts.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- A physical or virtual Ethernet device exists in the server configuration.

- The managed nodes use NetworkManager to configure the network.

Procedure

Edit the ~/inventory file, and append the host-specific settings to the host entries:

managed-node-01.example.com interface=enp1s0 ip_v4=192.0.2.1/24 ip_v6=2001:db8:1::1/64 gateway_v4=192.0.2.254 gateway_v6=2001:db8:1::fffe
managed-node-02.example.com interface=enp1s0 ip_v4=192.0.2.2/24 ip_v6=2001:db8:1::2/64 gateway_v4=192.0.2.254 gateway_v6=2001:db8:1::fffe

Create a playbook file, for example, ~/playbook.yml. The playbook reads certain values dynamically for each host from the inventory file and uses static values for settings that are the same for all hosts.
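The playbook listing itself is not reproduced in this copy. The following is a minimal sketch of what such a playbook could look like, not the original listing. It assumes the role's network_connections variable as described in the README, and it reads the interface, ip_v4, ip_v6, gateway_v4, and gateway_v6 variables from the inventory entries above. The play and task names, the DNS server addresses, and the search domain are illustrative assumptions.

```yaml
---
# Sketch only: DNS servers and the search domain are assumed example values.
- name: Configure the network
  hosts: managed-node-01.example.com,managed-node-02.example.com
  tasks:
    - name: Ethernet connection profile with static IP address settings
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          # Per-host values come from the inventory variables.
          - name: "{{ interface }}"
            interface_name: "{{ interface }}"
            type: ethernet
            autoconnect: yes
            ip:
              address:
                - "{{ ip_v4 }}"
                - "{{ ip_v6 }}"
              gateway4: "{{ gateway_v4 }}"
              gateway6: "{{ gateway_v6 }}"
              # Static values shared by all hosts (assumed for illustration).
              dns:
                - 192.0.2.200
                - "2001:db8:1::ffbb"
              dns_search:
                - example.com
            state: up
```

Keeping the host-specific values in the inventory means the same playbook can be reused when you add further hosts.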

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Query the Ansible facts of the managed node, for example by using the ansible.builtin.setup module, and verify the active network settings.

18.2. Configuring an Ethernet connection with a static IP address by using the network RHEL system role with a device path

You can use the network RHEL system role to configure an Ethernet connection with static IP addresses, gateways, and DNS settings, and assign them to a device based on its path instead of its name.

To connect a Red Hat Enterprise Linux host to an Ethernet network, create a NetworkManager connection profile for the network device. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- A physical or virtual Ethernet device exists in the server’s configuration.

- The managed nodes use NetworkManager to configure the network.

- You know the path of the device. You can display the device path by using the udevadm info /sys/class/net/<device_name> | grep ID_PATH= command.

Procedure

Create a playbook file, for example, ~/playbook.yml.

The settings specified in the example playbook include the following:

match

Defines that a condition must be met in order to apply the settings. You can only use this variable with the path option.

path

Defines the persistent path of a device. You can set it as a fixed path or an expression. Its value can contain modifiers and wildcards. The example applies the settings to devices that match PCI ID 0000:00:0[1-3].0, but not 0000:00:02.0.
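The playbook listing is not reproduced in this copy. As a minimal sketch under stated assumptions, a playbook that uses the match and path settings described above could look as follows. The profile name, the IP addresses, the gateways, and the DNS settings are illustrative assumptions. The path expressions assume the ID_PATH format reported by udevadm (pci-<PCI_ID>) and the NetworkManager modifiers & (mandatory match) and ! (negation).

```yaml
---
# Sketch only: profile name and all address values are assumed examples.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Ethernet connection profile with static IP address settings
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: example
            match:
              path:
                # Match PCI IDs 0000:00:01.0 to 0000:00:03.0 ...
                - "pci-0000:00:0[1-3].0"
                # ... but exclude 0000:00:02.0.
                - "&!pci-0000:00:02.0"
            type: ethernet
            autoconnect: yes
            ip:
              address:
                - 192.0.2.1/24
                - "2001:db8:1::1/64"
              gateway4: 192.0.2.254
              gateway6: "2001:db8:1::fffe"
              dns:
                - 192.0.2.200
                - "2001:db8:1::ffbb"
              dns_search:
                - example.com
            state: up
```

The path expressions are quoted because characters such as & and [ have a special meaning in YAML.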

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Query the Ansible facts of the managed node, for example by using the ansible.builtin.setup module, and verify the active network settings.

18.3. Configuring an Ethernet connection with a dynamic IP address by using the network RHEL system role with an interface name

You can use the network RHEL system role to configure an Ethernet connection that retrieves its IP addresses, gateways, and DNS settings from a DHCP server and IPv6 stateless address autoconfiguration (SLAAC). With this role you can assign the connection profile to the specified interface name.

To connect a Red Hat Enterprise Linux host to an Ethernet network, create a NetworkManager connection profile for the network device. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- A physical or virtual Ethernet device exists in the server’s configuration.

- A DHCP server and SLAAC are available in the network.

- The managed nodes use the NetworkManager service to configure the network.

Procedure

Create a playbook file, for example, ~/playbook.yml.

The settings specified in the example playbook include the following:

dhcp4: yes

Enables automatic IPv4 address assignment from DHCP, PPP, or similar services.

auto6: yes

Enables IPv6 auto-configuration. By default, NetworkManager uses Router Advertisements. If the router announces the managed flag, NetworkManager requests an IPv6 address and prefix from a DHCPv6 server.
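The playbook listing is not reproduced in this copy. A minimal sketch of a playbook that uses the dhcp4 and auto6 settings described above could look as follows. The interface name enp1s0 and the play and task names are assumptions chosen for illustration.

```yaml
---
# Sketch only: the interface name enp1s0 is an assumed example.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Ethernet connection profile with dynamic IP address settings
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: enp1s0
            interface_name: enp1s0
            type: ethernet
            autoconnect: yes
            ip:
              # Request IPv4 settings from DHCP.
              dhcp4: yes
              # Use IPv6 auto-configuration (SLAAC and, if announced, DHCPv6).
              auto6: yes
            state: up
```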

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Query the Ansible facts of the managed node, for example by using the ansible.builtin.setup module, and verify that the interface received IP addresses and DNS settings.

18.4. Configuring an Ethernet connection with a dynamic IP address by using the network RHEL system role with a device path

By using the network RHEL system role, you can configure an Ethernet connection to retrieve its IP addresses, gateways, and DNS settings from a DHCP server and IPv6 stateless address autoconfiguration (SLAAC). The role can assign the profile by the device’s path.

To connect a Red Hat Enterprise Linux host to an Ethernet network, create a NetworkManager connection profile for the network device. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- A physical or virtual Ethernet device exists in the server’s configuration.

- A DHCP server and SLAAC are available in the network.

- The managed hosts use NetworkManager to configure the network.

- You know the path of the device. You can display the device path by using the udevadm info /sys/class/net/<device_name> | grep ID_PATH= command.

Procedure

Create a playbook file, for example, ~/playbook.yml.

The settings specified in the example playbook include the following:

match: path

Defines that a condition must be met in order to apply the settings. You can only use this variable with the path option.

path: <path_and_expressions>

Defines the persistent path of a device. You can set it as a fixed path or an expression. Its value can contain modifiers and wildcards. The example applies the settings to devices that match PCI ID 0000:00:0[1-3].0, but not 0000:00:02.0.

dhcp4: yes

Enables automatic IPv4 address assignment from DHCP, PPP, or similar services.

auto6: yes

Enables IPv6 auto-configuration. By default, NetworkManager uses Router Advertisements. If the router announces the managed flag, NetworkManager requests an IPv6 address and prefix from a DHCPv6 server.
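The playbook listing is not reproduced in this copy. As a minimal sketch, a playbook that combines the path match with dynamic addressing as described above could look as follows. The profile name and the play and task names are illustrative assumptions. The path expressions assume the ID_PATH format reported by udevadm and the NetworkManager modifiers & (mandatory match) and ! (negation).

```yaml
---
# Sketch only: the profile name "example" is an assumed value.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Ethernet connection profile with dynamic IP address settings
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: example
            match:
              path:
                # Match PCI IDs 0000:00:01.0 to 0000:00:03.0, except 0000:00:02.0.
                - "pci-0000:00:0[1-3].0"
                - "&!pci-0000:00:02.0"
            type: ethernet
            autoconnect: yes
            ip:
              dhcp4: yes
              auto6: yes
            state: up
```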

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Query the Ansible facts of the managed node, for example by using the ansible.builtin.setup module, and verify that the interface received IP addresses and DNS settings.

18.5. Configuring a static Ethernet connection with 802.1X network authentication by using the network RHEL system role

By using the network RHEL system role, you can automate setting up Network Access Control (NAC) on remote hosts. You can define authentication details for clients in a playbook to ensure only authorized clients can access the network.

You can use an Ansible playbook to copy a private key, a certificate, and the CA certificate to the client, and then use the network RHEL system role to configure a connection profile with 802.1X network authentication.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- The network supports 802.1X network authentication.

- The managed nodes use NetworkManager.

- The following files required for the TLS authentication exist on the control node:

  - The client key is stored in the /srv/data/client.key file.
  - The client certificate is stored in the /srv/data/client.crt file.
  - The Certificate Authority (CA) certificate is stored in the /srv/data/ca.crt file.

Procedure

Store your sensitive variables in an encrypted file:

Create the vault:

$ ansible-vault create ~/vault.yml
New Vault password: <vault_password>
Confirm New Vault password: <vault_password>

After the ansible-vault create command opens an editor, enter the sensitive data in the <key>: <value> format:

pwd: <password>

Save the changes, and close the editor. Ansible encrypts the data in the vault.

Create a playbook file, for example, ~/playbook.yml.

The settings specified in the example playbook include the following:

ieee802_1x

This variable contains the 802.1X-related settings.

eap: tls

Configures the profile to use the certificate-based TLS authentication method for the Extensible Authentication Protocol (EAP).
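The playbook listing is not reproduced in this copy. The following minimal sketch shows one way to copy the three files named in the prerequisites and then configure the ieee802_1x settings described above. It reads the pwd variable from the vault created earlier. The destination paths on the managed node, the interface name, the IP settings, and the <user_name> identity placeholder are assumptions chosen for illustration.

```yaml
---
# Sketch only: destination paths, interface name, and addresses are assumed.
- name: Configure an Ethernet connection with 802.1X authentication
  hosts: managed-node-01.example.com
  vars_files:
    - ~/vault.yml
  tasks:
    - name: Copy client key for 802.1X authentication
      ansible.builtin.copy:
        src: /srv/data/client.key
        dest: /etc/pki/tls/private/client.key
        mode: "0600"

    - name: Copy client certificate for 802.1X authentication
      ansible.builtin.copy:
        src: /srv/data/client.crt
        dest: /etc/pki/tls/certs/client.crt

    - name: Copy CA certificate for 802.1X authentication
      ansible.builtin.copy:
        src: /srv/data/ca.crt
        dest: /etc/pki/ca-trust/source/anchors/ca.crt

    - name: Ethernet connection profile with static IP settings and 802.1X
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: enp1s0
            type: ethernet
            autoconnect: yes
            ip:
              address:
                - 192.0.2.1/24
              gateway4: 192.0.2.254
            ieee802_1x:
              identity: <user_name>
              eap: tls
              private_key: /etc/pki/tls/private/client.key
              # Password of the private key, read from the vault.
              private_key_password: "{{ pwd }}"
              client_cert: /etc/pki/tls/certs/client.crt
              ca_cert: /etc/pki/ca-trust/source/anchors/ca.crt
            state: up
```

Restricting the key file to mode 0600 keeps the private key readable only by its owner on the managed node.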

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --ask-vault-pass --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook --ask-vault-pass ~/playbook.yml

Verification

- Access resources on the network that require network authentication.

18.6. Configuring a network bond by using the network RHEL system role

You can use the network RHEL system role to configure a network bond and, if a connection profile for the bond’s parent device does not exist, the role can create it as well.

You can combine network interfaces in a bond to provide a logical interface with higher throughput or redundancy. To configure a bond, create a NetworkManager connection profile. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- Two or more physical or virtual network devices are installed on the server.

Procedure

Create a playbook file, for example, ~/playbook.yml.

The settings specified in the example playbook include the following:

type: <profile_type>

Sets the type of the profile to create. The example playbook creates three connection profiles: one for the bond and two for the Ethernet devices.

dhcp4: yes

Enables automatic IPv4 address assignment from DHCP, PPP, or similar services.

auto6: yes

Enables IPv6 auto-configuration. By default, NetworkManager uses Router Advertisements. If the router announces the managed flag, NetworkManager requests an IPv6 address and prefix from a DHCPv6 server.

mode: <bond_mode>

Sets the bonding mode. Possible values are:

- balance-rr (default)
- active-backup
- balance-xor
- broadcast
- 802.3ad
- balance-tlb
- balance-alb

Depending on the mode you set, you need to set additional variables in the playbook.
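The playbook listing is not reproduced in this copy. As a minimal sketch of the three profiles described above, a bond playbook could look as follows. The profile names, the interface names enp7s0 and enp8s0, and the choice of the active-backup mode are assumptions for illustration. The controller variable assumes a version of the role that supports it; check the README on your control node.

```yaml
---
# Sketch only: profile names, interface names, and bond mode are assumed.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Bond connection profile with two Ethernet ports
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          # Bond profile; the IP configuration belongs to the bond.
          - name: bond0
            type: bond
            interface_name: bond0
            ip:
              dhcp4: yes
              auto6: yes
            bond:
              mode: active-backup
            state: up

          # Port profile for the first Ethernet device.
          - name: bond0-port1
            interface_name: enp7s0
            type: ethernet
            controller: bond0
            state: up

          # Port profile for the second Ethernet device.
          - name: bond0-port2
            interface_name: enp8s0
            type: ethernet
            controller: bond0
            state: up
```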

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Temporarily remove the network cable from one of the network devices and check if the other device in the bond is handling the traffic.

Note that there is no method to properly test link failure events using software utilities. Tools that deactivate connections, such as nmcli, show only the bonding driver’s ability to handle port configuration changes and not actual link failure events.

18.7. Configuring VLAN tagging by using the network RHEL system role

You can use the network RHEL system role to configure VLAN tagging and, if a connection profile for the VLAN’s parent device does not exist, the role can create it as well.

If your network uses Virtual Local Area Networks (VLANs) to separate network traffic into logical networks, create a NetworkManager connection profile to configure VLAN tagging. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

If the VLAN device requires an IP address, default gateway, and DNS settings, configure them on the VLAN device and not on the parent device.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

Procedure

Create a playbook file, for example, ~/playbook.yml.

The settings specified in the example playbook include the following:

type: <profile_type>

Sets the type of the profile to create. The example playbook creates two connection profiles: one for the parent Ethernet device and one for the VLAN device.

dhcp4: <value>

If set to yes, automatic IPv4 address assignment from DHCP, PPP, or similar services is enabled. Disable the IP address configuration on the parent device.

auto6: <value>

If set to yes, IPv6 auto-configuration is enabled. In this case, by default, NetworkManager uses Router Advertisements and, if the router announces the managed flag, NetworkManager requests an IPv6 address and prefix from a DHCPv6 server. Disable the IP address configuration on the parent device.

parent: <parent_device>

Sets the parent device of the VLAN connection profile. In the example, the parent is the Ethernet interface.
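The playbook listing is not reproduced in this copy. A minimal sketch of the two profiles described above could look as follows. The interface name enp1s0, the VLAN ID 10, and the profile names are assumptions for illustration. The vlan: id form assumes a version of the role that supports it; check the README on your control node.

```yaml
---
# Sketch only: interface name, VLAN ID, and profile names are assumed.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: VLAN connection profile with Ethernet parent
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          # Parent Ethernet profile with the IP configuration disabled.
          - name: enp1s0
            type: ethernet
            interface_name: enp1s0
            autoconnect: yes
            ip:
              dhcp4: no
              auto6: no
            state: up

          # VLAN profile; the IP configuration belongs to the VLAN device.
          - name: enp1s0.10
            type: vlan
            vlan:
              id: 10
            ip:
              dhcp4: yes
              auto6: yes
            parent: enp1s0
            state: up
```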

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Verify the VLAN settings on the managed node, for example by displaying the details of the VLAN device with the ip -d addr show command.

18.8. Configuring a network bridge by using the network RHEL system role

You can use the network RHEL system role to configure a bridge and, if a connection profile for the bridge’s parent device does not exist, the role can create it as well.

You can connect multiple networks on layer 2 of the Open Systems Interconnection (OSI) model by creating a network bridge. To configure a bridge, create a connection profile in NetworkManager. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

If you want to assign IP addresses, gateways, and DNS settings to a bridge, configure them on the bridge and not on its ports.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- Two or more physical or virtual network devices are installed on the server.

Procedure

Create a playbook file, for example, ~/playbook.yml.

The settings specified in the example playbook include the following:

type: <profile_type>

Sets the type of the profile to create. The example playbook creates three connection profiles: one for the bridge and two for the Ethernet devices.

dhcp4: yes

Enables automatic IPv4 address assignment from DHCP, PPP, or similar services.

auto6: yes

Enables IPv6 auto-configuration. By default, NetworkManager uses Router Advertisements. If the router announces the managed flag, NetworkManager requests an IPv6 address and prefix from a DHCPv6 server.
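The playbook listing is not reproduced in this copy. As a minimal sketch of the three profiles described above, a bridge playbook could look as follows. The bridge name bridge0 and the port interfaces enp7s0 and enp8s0 follow the verification output later in this section; the profile names are assumptions. The controller and port_type variables assume a version of the role that supports them; check the README on your control node.

```yaml
---
# Sketch only: profile names are assumed; device names follow the
# verification output in this section.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Bridge connection profile with two Ethernet ports
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          # Bridge profile; the IP configuration belongs to the bridge.
          - name: bridge0
            type: bridge
            interface_name: bridge0
            ip:
              dhcp4: yes
              auto6: yes
            state: up

          # Port profile for the first Ethernet device.
          - name: bridge0-port1
            interface_name: enp7s0
            type: ethernet
            controller: bridge0
            port_type: bridge
            state: up

          # Port profile for the second Ethernet device.
          - name: bridge0-port2
            interface_name: enp8s0
            type: ethernet
            controller: bridge0
            port_type: bridge
            state: up
```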

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Display the link status of Ethernet devices that are ports of a specific bridge, for example by running the ip link show master bridge0 command on the managed node.

Display the status of Ethernet devices that are ports of any bridge device:

# ansible managed-node-01.example.com -m command -a 'bridge link show'
managed-node-01.example.com | CHANGED | rc=0 >>
3: enp7s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master bridge0 state forwarding priority 32 cost 100
4: enp8s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master bridge0 state listening priority 32 cost 100

18.9. Setting the default gateway on an existing connection by using the network RHEL system role

By using the network RHEL system role, you can automate setting the default gateway in a NetworkManager connection profile. With this method, you can remotely configure the default gateway on hosts defined in a playbook.

In most situations, administrators set the default gateway when they create a connection. However, you can also set or update the default gateway setting on a previously-created connection.

You cannot use the network RHEL system role to update only specific values in an existing connection profile. The role ensures that a connection profile exactly matches the settings in a playbook. If a connection profile with the same name already exists, the role applies the settings from the playbook and resets all other settings in the profile to their defaults. To prevent resetting values, always specify the whole configuration of the network connection profile in the playbook, including the settings that you do not want to change.
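To illustrate why the whole profile must be restated, the following minimal sketch sets the default gateways on an existing profile while repeating its other settings. It is not the original listing. The profile name enp1s0, all addresses, and the DNS settings are assumptions for illustration; in practice, repeat the values your existing profile already uses.

```yaml
---
# Sketch only: profile name and all address values are assumed examples.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Ethernet connection profile with static IP settings and gateways
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: enp1s0
            type: ethernet
            autoconnect: yes
            ip:
              # Existing settings, restated so that the role does not
              # reset them to their defaults.
              address:
                - 198.51.100.20/24
                - "2001:db8:1::1/64"
              dns:
                - 198.51.100.200
              dns_search:
                - example.com
              # The default gateways to set or update.
              gateway4: 198.51.100.254
              gateway6: "2001:db8:1::fffe"
            state: up
```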

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

Procedure

Create a playbook file, for example, ~/playbook.yml.

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Query the Ansible facts of the managed node, for example by using the ansible.builtin.setup module, and verify the active network settings.

18.10. Configuring a static route by using the network RHEL system role

You can use the network RHEL system role to configure static routes.

When you run a play that uses the network RHEL system role, the role overrides an existing connection profile with the same name if its settings do not match the values specified in the play. To prevent the role from resetting these values to their defaults, always specify the whole configuration of the network connection profile in the play, even if the configuration, for example the IP configuration, already exists.
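As a minimal sketch, not the original listing, a playbook that adds static routes while restating the rest of the profile could look as follows. The routes and the enp7s0 device match the verification output later in this section. The interface addresses, gateways, and DNS settings are assumptions for illustration.

```yaml
---
# Sketch only: addresses, gateways, and DNS settings are assumed examples;
# the two routes follow the verification output in this section.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Ethernet connection profile with static IP settings and routes
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: enp7s0
            type: ethernet
            autoconnect: yes
            ip:
              # Existing IP configuration, restated in full.
              address:
                - 192.0.2.1/24
                - "2001:db8:1::1/64"
              gateway4: 192.0.2.254
              gateway6: "2001:db8:1::fffe"
              dns:
                - 192.0.2.200
              dns_search:
                - example.com
              # Static routes to add.
              route:
                - network: 198.51.100.0
                  prefix: 24
                  gateway: 192.0.2.10
                - network: "2001:db8:2::"
                  prefix: 64
                  gateway: "2001:db8:1::10"
            state: up
```

The IPv6 values are quoted because a plain YAML value that ends in a colon is not parsed as a string.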

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

Procedure

Create a playbook file, for example, ~/playbook.yml.

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Display the IPv4 routes:

# ansible managed-node-01.example.com -m command -a 'ip -4 route'
managed-node-01.example.com | CHANGED | rc=0 >>
...
198.51.100.0/24 via 192.0.2.10 dev enp7s0

Display the IPv6 routes:

# ansible managed-node-01.example.com -m command -a 'ip -6 route'
managed-node-01.example.com | CHANGED | rc=0 >>
...
2001:db8:2::/64 via 2001:db8:1::10 dev enp7s0 metric 1024 pref medium

18.11. Routing traffic from a specific subnet to a different default gateway by using the network RHEL system role

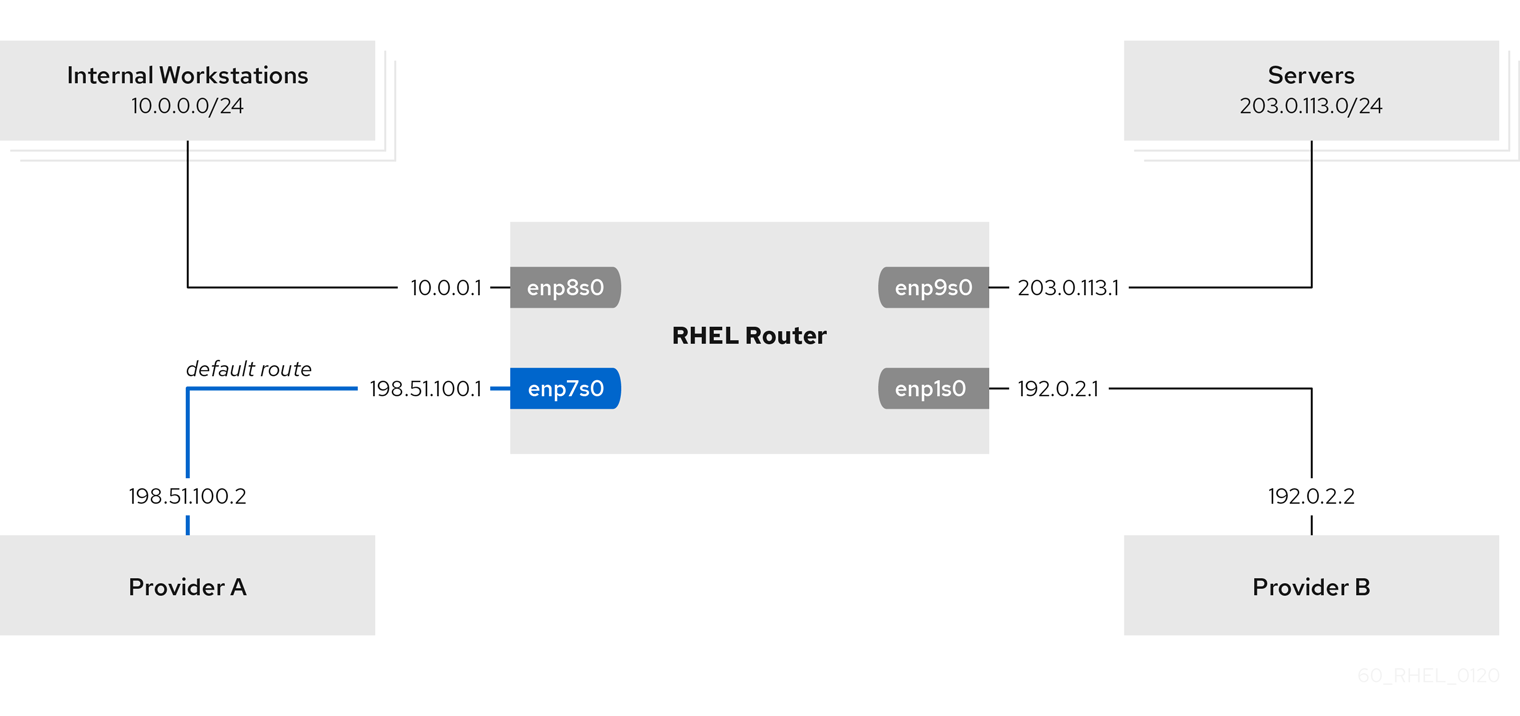

You can use policy-based routing to configure a different default gateway for traffic from certain subnets. By using the network RHEL system role, you can automate the creation of the connection profiles, including routing tables and rules.

For example, you can configure RHEL as a router that, by default, routes all traffic to internet provider A using the default route. However, traffic received from the internal workstations subnet is routed to provider B. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

This procedure assumes the following network topology:

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- The managed nodes use NetworkManager and the firewalld service.

- The managed node you want to configure has four network interfaces:

  - The enp7s0 interface is connected to the network of provider A. The gateway IP in the provider’s network is 198.51.100.2, and the network uses a /30 network mask.
  - The enp1s0 interface is connected to the network of provider B. The gateway IP in the provider’s network is 192.0.2.2, and the network uses a /30 network mask.
  - The enp8s0 interface is connected to the 10.0.0.0/24 subnet with internal workstations.
  - The enp9s0 interface is connected to the 203.0.113.0/24 subnet with the company’s servers.

- Hosts in the internal workstations subnet use 10.0.0.1 as the default gateway. In the procedure, you assign this IP address to the enp8s0 network interface of the router.

- Hosts in the server subnet use 203.0.113.1 as the default gateway. In the procedure, you assign this IP address to the enp9s0 network interface of the router.

Procedure

Create a playbook file, for example, ~/playbook.yml.

The settings specified in the example playbook include the following:

table: <value>

Assigns the route from the same list entry as the table variable to the specified routing table.

routing_rule: <list>

Defines the priority of the specified routing rule and the routing table to which the rule from a connection profile is assigned.

zone: <zone_name>

Assigns the network interface from a connection profile to the specified firewalld zone.
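The playbook listing is not reproduced in this copy. The following minimal sketch shows how the table, routing_rule, and zone settings described above could fit together for the topology in the prerequisites. The interface names, gateways, subnets, routing table 5000, rule priority 5, and zone assignments follow the prerequisites and the verification output in this section. The profile names and the router's own addresses on the provider networks (198.51.100.1 and 192.0.2.1) are assumptions.

```yaml
---
# Sketch only: profile names and the router's provider-side addresses
# are assumed; other values follow this section's text and output.
- name: Configuring policy-based routing
  hosts: managed-node-01.example.com
  tasks:
    - name: Routing traffic from a specific subnet to a different gateway
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          # Provider A carries the default route of the main table.
          - name: Provider-A
            interface_name: enp7s0
            type: ethernet
            autoconnect: yes
            ip:
              address:
                - 198.51.100.1/30
              gateway4: 198.51.100.2
            zone: external
            state: up

          # Provider B carries the default route of table 5000.
          - name: Provider-B
            interface_name: enp1s0
            type: ethernet
            autoconnect: yes
            ip:
              address:
                - 192.0.2.1/30
              route:
                - network: 0.0.0.0
                  prefix: 0
                  gateway: 192.0.2.2
                  table: 5000
            zone: external
            state: up

          # Traffic from the workstations subnet is looked up in table 5000.
          - name: Internal-Workstations
            interface_name: enp8s0
            type: ethernet
            autoconnect: yes
            ip:
              address:
                - 10.0.0.1/24
              route:
                - network: 10.0.0.0
                  prefix: 24
                  table: 5000
              routing_rule:
                - priority: 5
                  from: 10.0.0.0/24
                  table: 5000
            zone: trusted
            state: up

          # The server subnet uses the main table and therefore provider A.
          - name: Servers
            interface_name: enp9s0
            type: ethernet
            autoconnect: yes
            ip:
              address:
                - 203.0.113.1/24
            zone: trusted
            state: up
```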

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

On a RHEL host in the internal workstation subnet:

Install the traceroute package:

# yum install traceroute

Use the traceroute utility to display the route to a host on the internet:

# traceroute redhat.com
traceroute to redhat.com (209.132.183.105), 30 hops max, 60 byte packets
 1 10.0.0.1 (10.0.0.1) 0.337 ms 0.260 ms 0.223 ms
 2 192.0.2.2 (192.0.2.2) 0.884 ms 1.066 ms 1.248 ms
 ...

The output of the command displays that the router sends packets over 192.0.2.2, which is the network of provider B.

On a RHEL host in the server subnet:

Install the traceroute package:

# yum install traceroute

Use the traceroute utility to display the route to a host on the internet:

# traceroute redhat.com
traceroute to redhat.com (209.132.183.105), 30 hops max, 60 byte packets
 1 203.0.113.1 (203.0.113.1) 2.179 ms 2.073 ms 1.944 ms
 2 198.51.100.2 (198.51.100.2) 1.868 ms 1.798 ms 1.549 ms
 ...

The output of the command displays that the router sends packets over 198.51.100.2, which is the network of provider A.

On the RHEL router that you configured using the RHEL system role:

Display the rule list:

# ip rule list
0:      from all lookup local
5:      from 10.0.0.0/24 lookup 5000
32766:  from all lookup main
32767:  from all lookup default

By default, RHEL contains rules for the tables local, main, and default.

Display the routes in table 5000:

# ip route list table 5000
0.0.0.0/0 via 192.0.2.2 dev enp1s0 proto static metric 100
10.0.0.0/24 dev enp8s0 proto static scope link src 192.0.2.1 metric 102

Display the interfaces and firewall zones:

# firewall-cmd --get-active-zones
external
  interfaces: enp1s0 enp7s0
trusted
  interfaces: enp8s0 enp9s0

Verify that the external zone has masquerading enabled:

18.12. Configuring an ethtool offload feature by using the network RHEL system role

You can use the network RHEL system role to automate the configuration of the TCP offload engine (TOE), which offloads the processing of certain operations to the network controller. TOE improves network throughput.

You cannot use the network RHEL system role to update only specific values in an existing connection profile. The role ensures that a connection profile exactly matches the settings in a playbook. If a connection profile with the same name already exists, the role applies the settings from the playbook and resets all other settings in the profile to their defaults. To prevent resetting values, always specify the whole configuration of the network connection profile in the playbook, including the settings that you do not want to change.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

Procedure

Create a playbook file, for example, ~/playbook.yml, with the following content:

The settings specified in the example playbook include the following:

gro: no - Disables Generic receive offload (GRO).

gso: yes - Enables Generic segmentation offload (GSO).

tx_sctp_segmentation: no - Disables TX stream control transmission protocol (SCTP) segmentation.
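
A playbook that applies the three offload settings described above might look like the following minimal sketch. The host name, the profile name enp1s0, and the DHCP-based IP settings are assumptions chosen for illustration; only the gro, gso, and tx_sctp_segmentation values come from the description above. Because the role resets unspecified settings to their defaults, the sketch lists the full profile rather than only the ethtool section.

```yaml
---
# Sketch only: host, profile name, and IP settings are illustrative assumptions.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Ethernet connection profile with dynamic IP address settings and offload features
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: enp1s0            # assumed profile name
            type: ethernet
            autoconnect: yes
            ip:
              dhcp4: yes            # assumed: dynamic IPv4 addressing
              auto6: yes            # assumed: IPv6 autoconfiguration
            ethtool:
              features:
                gro: no                    # disable Generic receive offload
                gso: yes                   # enable Generic segmentation offload
                tx_sctp_segmentation: no   # disable TX SCTP segmentation
            state: up
```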

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Query the Ansible facts of the managed node and verify the offload settings:


18.13. Configuring ethtool coalesce settings by using the network RHEL system role

Interrupt coalescing collects network packets and generates a single interrupt for multiple packets. This reduces interrupt load and maximizes throughput. You can automate the configuration of these settings in the NetworkManager connection profile by using the network RHEL system role.

You cannot use the network RHEL system role to update only specific values in an existing connection profile. The role ensures that a connection profile exactly matches the settings in a playbook. If a connection profile with the same name already exists, the role applies the settings from the playbook and resets all other settings in the profile to their defaults. To prevent resetting values, always specify the whole configuration of the network connection profile in the playbook, including the settings that you do not want to change.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

Procedure

Create a playbook file, for example, ~/playbook.yml, with the following content:

The settings specified in the example playbook include the following:

rx_frames: <value> - Sets the number of RX frames.

tx_frames: <value> - Sets the number of TX frames.
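
A playbook that sets the RX and TX frame counts for interrupt coalescing might look like the following minimal sketch. The host name, the profile name enp1s0, the DHCP-based IP settings, and the value 128 are assumptions for illustration; suitable values depend on the device and the workload. The sketch uses the role's coalesce variables rx_frames and tx_frames.

```yaml
---
# Sketch only: host, profile name, IP settings, and frame counts are assumptions.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Ethernet connection profile with dynamic IP address settings and coalesce settings
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: enp1s0            # assumed profile name
            type: ethernet
            autoconnect: yes
            ip:
              dhcp4: yes
              auto6: yes
            ethtool:
              coalesce:
                rx_frames: 128      # assumed value: RX frames per interrupt
                tx_frames: 128      # assumed value: TX frames per interrupt
            state: up
```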

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Display the current coalesce settings of the network device:

18.14. Increasing the ring buffer size to reduce a high packet drop rate by using the network RHEL system role

Increase the size of an Ethernet device’s ring buffers if the packet drop rate causes applications to report a loss of data, timeouts, or other issues.

Ring buffers are circular buffers where an overflow overwrites existing data. The network card assigns a transmit (TX) and receive (RX) ring buffer. Receive ring buffers are shared between the device driver and the network interface controller (NIC). Data can move from the NIC to the kernel through either hardware interrupts or software interrupts, also called SoftIRQs.

The kernel uses the RX ring buffer to store incoming packets until the device driver can process them. The device driver drains the RX ring, typically by using SoftIRQs, which puts the incoming packets into a kernel data structure called an sk_buff or skb. From there, the packets begin their journey through the kernel and up to the application that owns the relevant socket.

The kernel uses the TX ring buffer to hold outgoing packets which should be sent to the network. These ring buffers reside at the bottom of the stack and are a crucial point at which packet drop can occur, which in turn will adversely affect network performance.

You configure ring buffer settings in the NetworkManager connection profiles. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

You cannot use the network RHEL system role to update only specific values in an existing connection profile. The role ensures that a connection profile exactly matches the settings in a playbook. If a connection profile with the same name already exists, the role applies the settings from the playbook and resets all other settings in the profile to their defaults. To prevent resetting values, always specify the whole configuration of the network connection profile in the playbook, including the settings that you do not want to change.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- You know the maximum ring buffer sizes that the device supports.

Procedure

Create a playbook file, for example, ~/playbook.yml, with the following content:

The settings specified in the example playbook include the following:

rx: <value> - Sets the maximum number of received ring buffer entries.

tx: <value> - Sets the maximum number of transmitted ring buffer entries.
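
A playbook that raises the RX and TX ring buffer sizes might look like the following minimal sketch. The host name, the profile name enp1s0, the DHCP-based IP settings, and the value 4096 are assumptions for illustration; the values you set must not exceed the maximum sizes that the device supports.

```yaml
---
# Sketch only: host, profile name, IP settings, and buffer sizes are assumptions.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Ethernet connection profile with dynamic IP address settings and increased ring buffer sizes
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: enp1s0            # assumed profile name
            type: ethernet
            autoconnect: yes
            ip:
              dhcp4: yes
              auto6: yes
            ethtool:
              ring:
                rx: 4096            # assumed value: RX ring buffer entries
                tx: 4096            # assumed value: TX ring buffer entries
            state: up
```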

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Display the maximum ring buffer sizes:


18.15. Configuring an IPoIB connection by using the network RHEL system role

To configure IP over InfiniBand (IPoIB), create a NetworkManager connection profile. You can automate this process by using the network RHEL system role and remotely configure connection profiles on hosts defined in a playbook.

You can use the network RHEL system role to configure IPoIB and, if a connection profile for the InfiniBand’s parent device does not exist, the role can create it as well.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- An InfiniBand device named mlx4_ib0 is installed in the managed nodes.

- The managed nodes use NetworkManager to configure the network.

Procedure

Create a playbook file, for example, ~/playbook.yml, with the following content:

The settings specified in the example playbook include the following:

type: <profile_type> - Sets the type of the profile to create. The example playbook creates two connection profiles: one for the InfiniBand connection and one for the IPoIB device.

parent: <parent_device> - Sets the parent device of the IPoIB connection profile.

p_key: <value> - Sets the InfiniBand partition key. If you set this variable, do not set interface_name on the IPoIB device.

transport_mode: <mode> - Sets the IPoIB connection operation mode. You can set this variable to datagram (default) or connected.
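
A playbook that creates the two profiles described above might look like the following minimal sketch. The device name mlx4_ib0, the partition key 0x8002, and the datagram mode match the prerequisites and the verification steps in this section; the host name and the static IP addresses are assumptions for illustration. Note that the IPoIB profile sets p_key and therefore omits interface_name.

```yaml
---
# Sketch only: host name and IP addresses are illustrative assumptions.
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: IPoIB connection profile with static IP address settings
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          # Profile for the InfiniBand parent device
          - name: mlx4_ib0
            interface_name: mlx4_ib0
            type: infiniband

          # Profile for the IPoIB device on top of mlx4_ib0
          - name: mlx4_ib0.8002
            type: infiniband
            autoconnect: yes
            infiniband:
              p_key: 0x8002             # partition key
              transport_mode: datagram  # or: connected
            parent: mlx4_ib0
            ip:
              address:
                - 192.0.2.1/24          # assumed IPv4 address
                - 2001:db8:1::1/64      # assumed IPv6 address
            state: up
```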

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Display the IP settings of the mlx4_ib0.8002 device:

Display the partition key (P_Key) of the mlx4_ib0.8002 device:

# ansible managed-node-01.example.com -m command -a 'cat /sys/class/net/mlx4_ib0.8002/pkey'
managed-node-01.example.com | CHANGED | rc=0 >>
0x8002

Display the mode of the mlx4_ib0.8002 device:

# ansible managed-node-01.example.com -m command -a 'cat /sys/class/net/mlx4_ib0.8002/mode'
managed-node-01.example.com | CHANGED | rc=0 >>
datagram

18.16. Network states for the network RHEL system role

The network RHEL system role supports state configurations in playbooks to configure the devices. For this, use the network_state variable followed by the state configurations.

Benefits of using the network_state variable in a playbook:

- Using the declarative method with the state configurations, you can configure interfaces, and NetworkManager creates a profile for these interfaces in the background.

- With the network_state variable, you specify only the options that you need to change, and all other options remain as they are. However, with the network_connections variable, you must specify all settings to change the network connection profile.

You can set only Nmstate YAML instructions in network_state. These instructions differ from the variables you can set in network_connections.

For example, to create an Ethernet connection with dynamic IP address settings, use the following vars block in your playbook:

| Playbook with state configurations | Regular playbook |
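
The two variants for an Ethernet connection with dynamic IP address settings could be sketched as follows. The interface name enp7s0 is an assumption for illustration. The first YAML document uses Nmstate instructions under network_state; the second expresses a comparable profile with the role's network_connections variables.

```yaml
# Playbook with state configurations (Nmstate YAML; enp7s0 is an assumed name)
vars:
  network_state:
    interfaces:
      - name: enp7s0
        type: ethernet
        state: up
        ipv4:
          enabled: true
          auto-dns: true
          auto-gateway: true
          auto-routes: true
          dhcp: true
        ipv6:
          enabled: true
          auto-dns: true
          auto-gateway: true
          auto-routes: true
          autoconf: true
          dhcp: true
---
# Regular playbook (network_connections variables; enp7s0 is an assumed name)
vars:
  network_connections:
    - name: enp7s0
      interface_name: enp7s0
      type: ethernet
      autoconnect: yes
      ip:
        dhcp4: yes
        auto6: yes
      state: up
```

In both cases, the vars block belongs under the task that includes the rhel-system-roles.network role.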

For example, to change only the connection status of the profile with dynamic IP address settings that you created above, use the following vars block in your playbook:

| Playbook with state configurations | Regular playbook |
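
Changing only the connection status could be sketched as follows, again assuming a hypothetical interface named enp7s0 with dynamic IP address settings. With network_state, only the changed option is listed; with network_connections, the complete profile must be repeated, because the role resets unspecified settings to their defaults.

```yaml
# Playbook with state configurations: only the changed option is listed
vars:
  network_state:
    interfaces:
      - name: enp7s0        # assumed interface name
        type: ethernet
        state: down
---
# Regular playbook: the complete profile is repeated with the new state
vars:
  network_connections:
    - name: enp7s0          # assumed profile and interface name
      interface_name: enp7s0
      type: ethernet
      autoconnect: yes
      ip:
        dhcp4: yes
        auto6: yes
      state: down
```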