OpenShift Container Storage is now OpenShift Data Foundation starting with version 4.9.

Chapter 5. Installing zone aware sample application

Use this section to deploy a zone aware sample application to validate that an OpenShift Container Storage Metro Disaster Recovery setup is configured correctly.

With latency between the data zones, expect some performance degradation compared to an OpenShift cluster with low latency between nodes and zones (for example, all nodes in the same location). How much performance degrades depends on the latency between the zones and on how the application uses the storage (for example, heavy write traffic). Test critical applications with the Metro DR cluster configuration to ensure sufficient application performance for the required service levels.

5.1. Install Zone Aware Sample Application

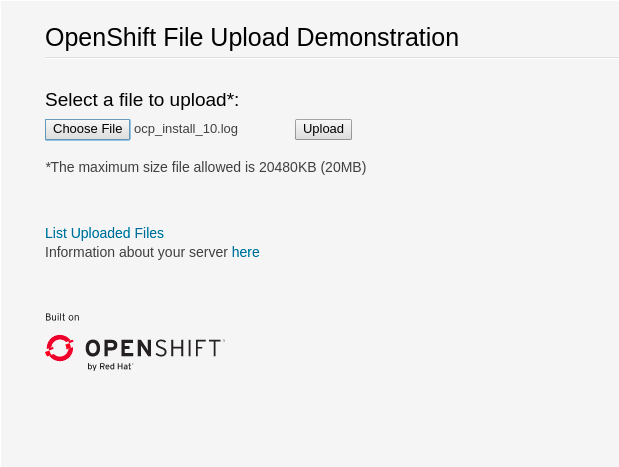

In this section, the ocs-storagecluster-cephfs storage class is used to create a ReadWriteMany (RWX) PVC that can be used by multiple pods at the same time. The application we will use is called File Uploader.

Since this application shares the same RWX volume for storing files, we can demonstrate how an application can be spread across topology zones so that in the event of a site outage it is still available. This works for persistent data access as well because OpenShift Container Storage is configured as a Metro DR stretched cluster with zone awareness and high availability.

Create a new project:

oc new-project my-shared-storage

Deploy the example PHP application called file-uploader:

oc new-app openshift/php:7.2-ubi8~https://github.com/christianh814/openshift-php-upload-demo --name=file-uploader

View the build log and wait for the application to be deployed:

oc logs -f bc/file-uploader -n my-shared-storage

The command prompt returns from tail mode once you see Push successful.

The new-app command deploys the application directly from the git repository and does not use an OpenShift template, so an OpenShift route resource is not created by default. You need to create the route manually.

Let’s make our application zone aware and available by scaling it to 4 replicas and exposing its service:

oc expose svc/file-uploader -n my-shared-storage

oc scale --replicas=4 deploy/file-uploader -n my-shared-storage

oc get pods -o wide -n my-shared-storage

You should have 4 file-uploader Pods in a few minutes. Repeat the command above until there are 4 file-uploader Pods in Running STATUS.

You can create a PersistentVolumeClaim and attach it to an application with the oc set volume command. Run the following command:

oc set volume deploy/file-uploader --add --name=my-shared-storage \
  -t pvc --claim-mode=ReadWriteMany --claim-size=10Gi \
  --claim-name=my-shared-storage --claim-class=ocs-storagecluster-cephfs \
  --mount-path=/opt/app-root/src/uploaded \
  -n my-shared-storage

This command will:

- create a PersistentVolumeClaim

- update the application deployment to include a volume definition

- update the application deployment to attach a volume mount at the specified mount-path

- cause a new deployment of the 4 application Pods
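For reference, the PersistentVolumeClaim that this command creates is roughly equivalent to the manifest below. This is a sketch reconstructed from the command-line flags above, not output captured from a cluster, so fields that OpenShift adds on its own (annotations, volumeName, status) are left out.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-shared-storage                        # from --claim-name
  namespace: my-shared-storage                   # from -n
spec:
  accessModes:
    - ReadWriteMany                              # from --claim-mode
  resources:
    requests:
      storage: 10Gi                              # from --claim-size
  storageClassName: ocs-storagecluster-cephfs    # from --claim-class
```

The oc set volume command additionally wires the claim into the Deployment as a volume named my-shared-storage mounted at /opt/app-root/src/uploaded, which a standalone PVC manifest does not do.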

Now, let’s look at the result of adding the volume:

oc get pvc -n my-shared-storage

Example Output:

NAME                STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS                AGE
my-shared-storage   Bound    pvc-5402cc8a-e874-4d7e-af76-1eb05bd2e7c7   10Gi       RWX            ocs-storagecluster-cephfs   52s

Notice that ACCESS MODES is set to RWX (ReadWriteMany).

All 4 file-uploader Pods are using the same RWX volume. Without this access mode, OpenShift will not attempt to attach multiple Pods to the same Persistent Volume reliably. If you attempt to scale up deployments that use an RWO (ReadWriteOnce) persistent volume, the Pods will get co-located on the same node.

5.2. Modify Deployment to be Zone Aware

Currently, the file-uploader Deployment is not zone aware and can schedule all the Pods in the same zone. In this case, if there is a site outage, the application would be unavailable. For more information, see using pod topology spread constraints.

To make our app zone aware, we need to add a pod placement rule to the application deployment configuration. Run the following command and review the current Deployment definition. In the next step we will modify the Deployment to use the topology zone labels.

oc get deployment file-uploader -o yaml -n my-shared-storage | less

Edit the deployment and add the pod placement rules (topology spread constraints) to the pod template spec:

oc edit deployment file-uploader -n my-shared-storage
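As a minimal sketch, a zone-aware placement rule can be expressed with the standard Kubernetes topologySpreadConstraints field under spec.template.spec of the Deployment. The fragment below is an illustration under assumptions, not the exact lines from the product documentation: it assumes the pods carry the label deployment: file-uploader (check with oc get pods --show-labels -n my-shared-storage) and that nodes are labeled with topology.kubernetes.io/zone.

```yaml
# Fragment to place under spec.template.spec of the file-uploader Deployment.
# The label selector is an assumption; adjust it to the labels your pods have.
topologySpreadConstraints:
  - labelSelector:
      matchLabels:
        deployment: file-uploader
    maxSkew: 1                                   # zones may differ by at most one pod
    topologyKey: topology.kubernetes.io/zone
    whenUnsatisfiable: DoNotSchedule             # hard rule: keep zones balanced
  - labelSelector:
      matchLabels:
        deployment: file-uploader
    maxSkew: 1
    topologyKey: kubernetes.io/hostname
    whenUnsatisfiable: ScheduleAnyway            # soft rule: prefer one pod per node
```

The first constraint is what provides zone awareness. The second is optional and only nudges the scheduler toward using different nodes within each zone.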

Example output:

deployment.apps/file-uploader edited

Scale the deployment to zero Pods and then back to four Pods. This is needed because the deployment changed in terms of Pod placement.

oc scale deployment file-uploader --replicas=0 -n my-shared-storage

Example output:

deployment.apps/file-uploader scaled

Then scale back to 4 Pods:

oc scale deployment file-uploader --replicas=4 -n my-shared-storage

Example output:

deployment.apps/file-uploader scaled

Verify that the four Pods are spread across the four nodes in datacenter1 and datacenter2 zones.

oc get pods -o wide -n my-shared-storage | egrep '^file-uploader'| grep -v build | awk '{print $7}' | sort | uniq -c

Example output:

1 perf1-mz8bt-worker-d2hdm
1 perf1-mz8bt-worker-k68rv
1 perf1-mz8bt-worker-ntkp8
1 perf1-mz8bt-worker-qpwsr

oc get nodes -L topology.kubernetes.io/zone | grep datacenter | grep -v master

Example output:

perf1-mz8bt-worker-d2hdm   Ready   worker   35d   v1.20.0+5fbfd19   datacenter1
perf1-mz8bt-worker-k68rv   Ready   worker   35d   v1.20.0+5fbfd19   datacenter1
perf1-mz8bt-worker-ntkp8   Ready   worker   35d   v1.20.0+5fbfd19   datacenter2
perf1-mz8bt-worker-qpwsr   Ready   worker   35d   v1.20.0+5fbfd19   datacenter2
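The two listings above can be combined to check the spread per zone rather than per node. The following is a minimal sketch: the helper name pods_per_zone and its two-file input format are illustrative assumptions, and the demo data is copied from the example output above rather than read from a live cluster.

```shell
#!/usr/bin/env bash
# pods_per_zone NODES_FILE PODS_FILE  (hypothetical helper, for illustration)
#   NODES_FILE: one "<node-name> <zone>" pair per line
#   PODS_FILE:  one node name per line, one line per running pod
# Prints "<pod-count> <zone>" for every zone that hosts at least one pod.
pods_per_zone() {
  awk 'NR == FNR { zone[$1] = $2; next }   # first file: remember node to zone mapping
       { count[zone[$1]]++ }               # second file: tally pods by zone
       END { for (z in count) print count[z], z }' "$1" "$2" | sort -k2
}

# Demo using the node names and zones from the example output above.
nodes_file="$(mktemp)"
pods_file="$(mktemp)"
cat > "$nodes_file" <<'EOF'
perf1-mz8bt-worker-d2hdm datacenter1
perf1-mz8bt-worker-k68rv datacenter1
perf1-mz8bt-worker-ntkp8 datacenter2
perf1-mz8bt-worker-qpwsr datacenter2
EOF
cat > "$pods_file" <<'EOF'
perf1-mz8bt-worker-d2hdm
perf1-mz8bt-worker-k68rv
perf1-mz8bt-worker-ntkp8
perf1-mz8bt-worker-qpwsr
EOF
pods_per_zone "$nodes_file" "$pods_file"
rm -f "$nodes_file" "$pods_file"
```

With the sample data, the helper reports two Pods in datacenter1 and two in datacenter2. On a live cluster, the node file could be produced from oc get nodes -L topology.kubernetes.io/zone --no-headers by keeping the first and last columns (assuming every listed node carries the zone label), and the pod file from the pod pipeline shown above without the final sort and uniq steps.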

Use the file-uploader web application using your browser to upload new files.

Find the Route that has been created:

oc get route file-uploader -n my-shared-storage -o jsonpath --template="http://{.spec.host}{'\n'}"

This will return a route similar to this one.

Sample Output:

http://file-uploader-my-shared-storage.apps.cluster-ocs4-abdf.ocs4-abdf.sandbox744.opentlc.com

Point your browser to the web application using the route above. Your route will be different.

The web app simply lists all uploaded files and offers the ability to upload new ones as well as download the existing data. Right now the list is empty.

Select an arbitrary file from your local machine and upload it to the app.

Figure 5.1. A simple PHP-based file upload tool

- Click List uploaded files to see the list of all currently uploaded files.

The OpenShift Container Platform image registry, ingress routing, and monitoring services are not zone-aware