Chapter 6. Channels

6.1. Channels and subscriptions

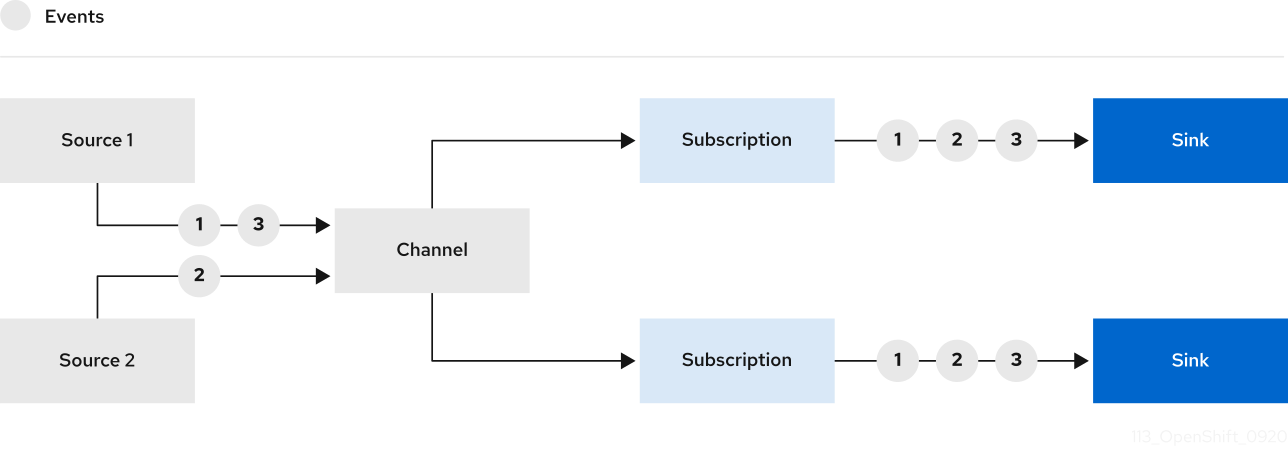

Channels are custom resources that define a single event-forwarding and persistence layer. After events have been sent to a channel from an event source or producer, these events can be sent to multiple Knative services or other sinks by using a subscription.

You can create channels by instantiating a supported Channel object, and configure re-delivery attempts by modifying the delivery spec in a Subscription object.

After you create a Channel object, a mutating admission webhook adds a set of spec.channelTemplate properties for the Channel object based on the default channel implementation. For example, for an InMemoryChannel default implementation, the Channel object looks as follows:
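As a minimal sketch, assuming a channel named example-channel in the default namespace (both names are illustrative), the object might look like the following after the webhook has run:

```yaml
# Sketch only: the name and namespace are illustrative assumptions.
apiVersion: messaging.knative.dev/v1
kind: Channel
metadata:
  name: example-channel
  namespace: default
spec:
  # Added by the mutating admission webhook, based on the
  # default channel implementation (here: InMemoryChannel).
  channelTemplate:
    apiVersion: messaging.knative.dev/v1
    kind: InMemoryChannel
```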

The channel controller then creates the backing channel instance based on the spec.channelTemplate configuration.

The spec.channelTemplate properties cannot be changed after creation, because they are set by the default channel mechanism rather than by the user.

When this mechanism is used with the preceding example, two objects are created: a generic backing channel and an InMemoryChannel channel. If you are using a different default channel implementation, the InMemoryChannel is replaced with one that is specific to your implementation. For example, with the Knative broker for Apache Kafka, the KafkaChannel channel is created.

The backing channel acts as a proxy that copies its subscriptions to the user-created channel object, and sets the user-created channel object status to reflect the status of the backing channel.

6.1.1. Channel implementation types

OpenShift Serverless supports the InMemoryChannel and KafkaChannel channel implementations. The InMemoryChannel channel is recommended for development use only due to its limitations. You can use the KafkaChannel channel for a production environment.

The following are limitations of InMemoryChannel type channels:

- No event persistence is available. If a pod goes down, events on that pod are lost.

- InMemoryChannel channels do not implement event ordering, so two events that are received in the channel at the same time can be delivered to a subscriber in any order.

- If a subscriber rejects an event, there are no re-delivery attempts by default. You can configure re-delivery attempts by modifying the delivery spec in the Subscription object.
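Re-delivery for a subscriber can be sketched as follows. The retry, backoffPolicy, and backoffDelay fields belong to the Knative delivery spec; the resource names and the specific values shown are illustrative assumptions, not recommendations.

```yaml
# Sketch only: resource names and retry values are illustrative assumptions.
apiVersion: messaging.knative.dev/v1
kind: Subscription
metadata:
  name: my-subscription
  namespace: default
spec:
  channel:
    apiVersion: messaging.knative.dev/v1
    kind: Channel
    name: example-channel
  delivery:
    retry: 3                    # number of re-delivery attempts after the first failure
    backoffPolicy: exponential  # linear or exponential
    backoffDelay: PT0.5S        # ISO 8601 duration used as the base delay
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display       # hypothetical Knative service acting as the sink
```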

6.2. Creating channels

Channels are custom resources that define a single event-forwarding and persistence layer. After events have been sent to a channel from an event source or producer, these events can be sent to multiple Knative services or other sinks by using a subscription.

You can create channels by instantiating a supported Channel object, and configure re-delivery attempts by modifying the delivery spec in a Subscription object.

6.2.1. Creating a channel by using the Administrator perspective

After Knative Eventing is installed on your cluster, you can create a channel by using the Administrator perspective.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on your OpenShift Container Platform cluster.

- You have logged in to the web console and are in the Administrator perspective.

- You have cluster administrator permissions on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

Procedure

- In the Administrator perspective of the OpenShift Container Platform web console, navigate to Serverless → Eventing.

- In the Create list, select Channel. You will be directed to the Channel page.

- Select the type of Channel object that you want to create in the Type list.

  Note: Currently only InMemoryChannel channel objects are supported by default. Knative channels for Apache Kafka are available if you have installed the Knative broker implementation for Apache Kafka on OpenShift Serverless.

- Click Create.

6.2.2. Creating a channel by using the Developer perspective

Using the OpenShift Container Platform web console provides a streamlined and intuitive user interface to create a channel. After Knative Eventing is installed on your cluster, you can create a channel by using the web console.

Prerequisites

- You have logged in to the OpenShift Container Platform web console.

- The OpenShift Serverless Operator and Knative Eventing are installed on your OpenShift Container Platform cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

- In the Developer perspective, navigate to +Add → Channel.

- Select the type of Channel object that you want to create in the Type list.

- Click Create.

Verification

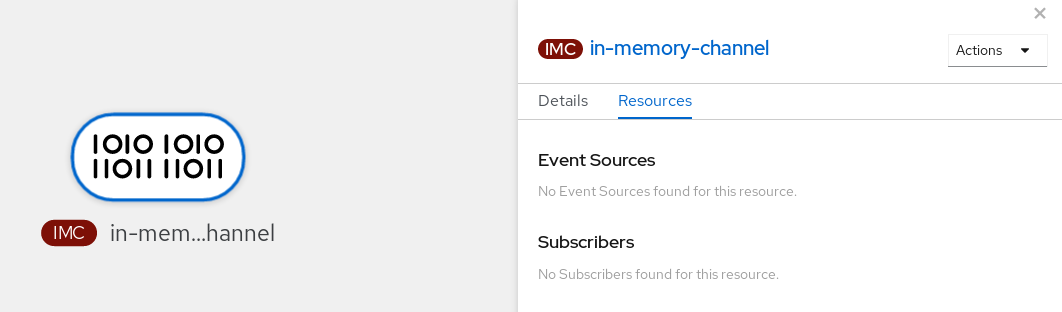

Confirm that the channel now exists by navigating to the Topology page.

6.2.3. Creating a channel by using the Knative CLI

Using the Knative (kn) CLI to create channels provides a more streamlined and intuitive user interface than modifying YAML files directly. You can use the kn channel create command to create a channel.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on the cluster.

- You have installed the Knative (kn) CLI.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

Create a channel:

    $ kn channel create <channel_name> --type <channel_type>

The channel type is optional, but where specified, must be given in the format Group:Version:Kind. For example, you can create an InMemoryChannel object:

    $ kn channel create mychannel --type messaging.knative.dev:v1:InMemoryChannel

Example output

    Channel 'mychannel' created in namespace 'default'.

Verification

To confirm that the channel now exists, list the existing channels and inspect the output:

    $ kn channel list

Example output

    NAME        TYPE              URL                                                     AGE   READY   REASON
    mychannel   InMemoryChannel   http://mychannel-kn-channel.default.svc.cluster.local   93s   True

Deleting a channel

Delete a channel:

    $ kn channel delete <channel_name>

6.2.4. Creating a default implementation channel by using YAML

Creating Knative resources by using YAML files uses a declarative API, which enables you to describe channels declaratively and in a reproducible manner. To create a serverless channel by using YAML, you must create a YAML file that defines a Channel object, then apply it by using the oc apply command.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on the cluster.

- Install the OpenShift CLI (oc).

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

Create a Channel object as a YAML file:

    apiVersion: messaging.knative.dev/v1
    kind: Channel
    metadata:
      name: example-channel
      namespace: default

Apply the YAML file:

    $ oc apply -f <filename>

6.2.5. Creating a channel for Apache Kafka by using YAML

Creating Knative resources by using YAML files uses a declarative API, which enables you to describe channels declaratively and in a reproducible manner. You can create a Knative Eventing channel that is backed by Kafka topics by creating a Kafka channel. To create a Kafka channel by using YAML, you must create a YAML file that defines a KafkaChannel object, then apply it by using the oc apply command.

Prerequisites

- The OpenShift Serverless Operator, Knative Eventing, and the KnativeKafka custom resource are installed on your OpenShift Container Platform cluster.

- Install the OpenShift CLI (oc).

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

Create a KafkaChannel object as a YAML file.

Important: Only the v1beta1 version of the API for KafkaChannel objects on OpenShift Serverless is supported. Do not use the v1alpha1 version of this API, as this version is now deprecated.

Apply the KafkaChannel YAML file:

    $ oc apply -f <filename>
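A KafkaChannel definition for the first step of this procedure might look like the following minimal sketch. It uses the supported v1beta1 API version; the channel name, namespace, and the numPartitions and replicationFactor values are illustrative assumptions that you would adapt to your Kafka cluster.

```yaml
# Sketch only: name, namespace, and sizing values are illustrative assumptions.
apiVersion: messaging.knative.dev/v1beta1
kind: KafkaChannel
metadata:
  name: example-channel
  namespace: default
spec:
  numPartitions: 3       # partitions of the backing Kafka topic
  replicationFactor: 1   # replicas of each partition
```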

6.2.6. Next steps

- After you have created a channel, you can connect the channel to a sink so that the sink can receive events.

- Configure event delivery parameters that are applied in cases where an event fails to be delivered to an event sink.

6.3. Connecting channels to sinks

Events that have been sent to a channel from an event source or producer can be forwarded to one or more sinks by using subscriptions. You can create subscriptions by configuring a Subscription object, which specifies the channel and the sink (also known as a subscriber) that consumes the events sent to that channel.

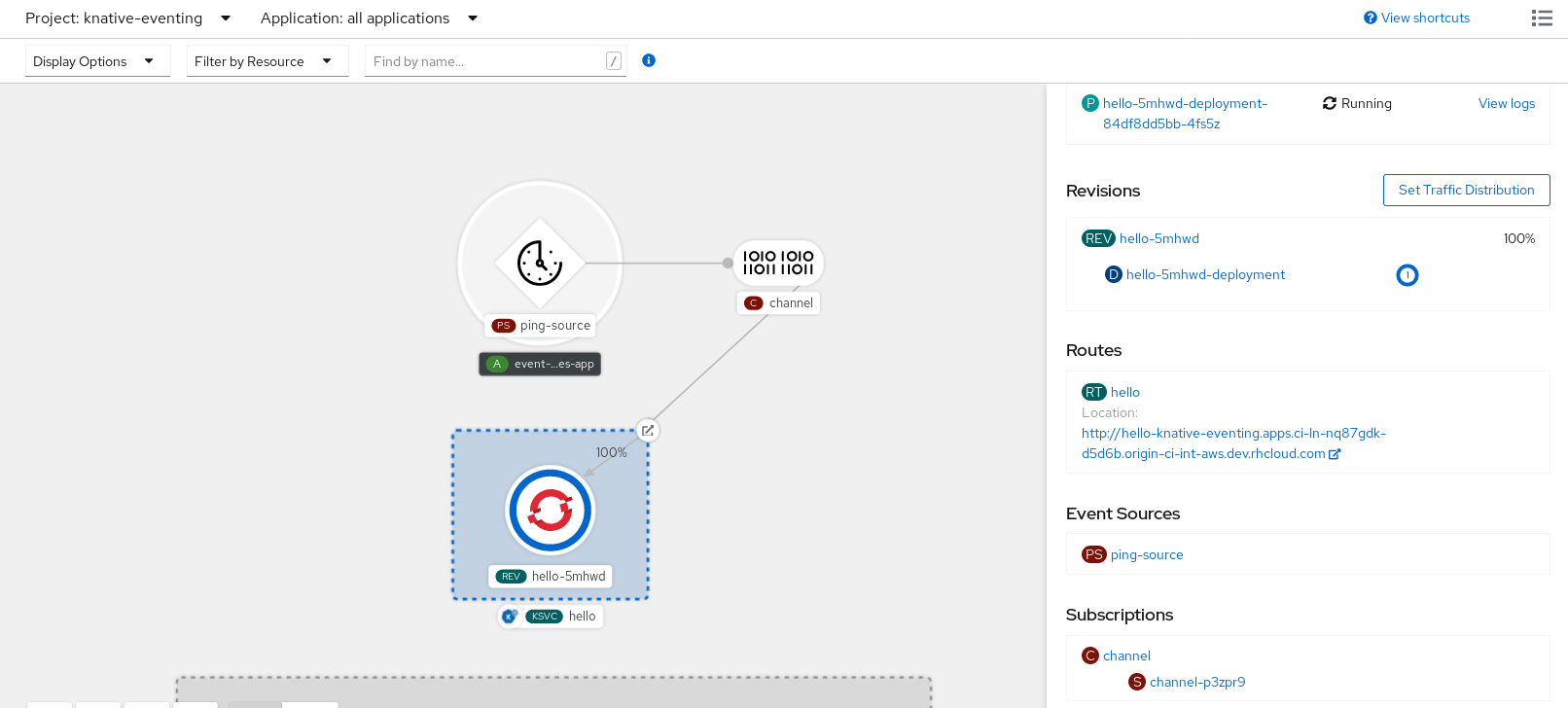

6.3.1. Creating a subscription by using the Developer perspective

After you have created a channel and an event sink, you can create a subscription to enable event delivery. Using the OpenShift Container Platform web console provides a streamlined and intuitive user interface to create a subscription.

Prerequisites

- The OpenShift Serverless Operator, Knative Serving, and Knative Eventing are installed on your OpenShift Container Platform cluster.

- You have logged in to the web console.

- You have created an event sink, such as a Knative service, and a channel.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

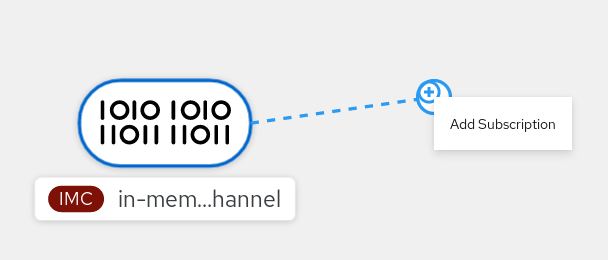

- In the Developer perspective, navigate to the Topology page.

- Create a subscription using one of the following methods:

  - Hover over the channel that you want to create a subscription for, and drag the arrow. The Add Subscription option is displayed.

    - Select your sink in the Subscriber list.

    - Click Add.

  - If the service is available in the Topology view under the same namespace or project as the channel, click the channel that you want to create a subscription for, and drag the arrow directly to a service to immediately create a subscription from the channel to that service.

Verification

After the subscription has been created, you can see it represented as a line that connects the channel to the service in the Topology view.

6.3.2. Creating a subscription by using YAML

After you have created a channel and an event sink, you can create a subscription to enable event delivery. Creating Knative resources by using YAML files uses a declarative API, which enables you to describe subscriptions declaratively and in a reproducible manner. To create a subscription by using YAML, you must create a YAML file that defines a Subscription object, then apply it by using the oc apply command.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on the cluster.

- Install the OpenShift CLI (oc).

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

Create a Subscription object by creating a YAML file that defines it. The definition uses the following fields:

- metadata.name: Name of the subscription.

- spec.channel: Configuration settings for the channel that the subscription connects to.

- spec.delivery: Configuration settings for event delivery. This tells the subscription what happens to events that cannot be delivered to the subscriber. When this is configured, events that failed to be consumed are sent to the deadLetterSink. The event is dropped, no re-delivery of the event is attempted, and an error is logged in the system. The deadLetterSink value must be a Destination.

- spec.subscriber: Configuration settings for the subscriber. This is the event sink that events are delivered to from the channel.
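A Subscription definition covering these four settings might look like the following minimal sketch. The subscription, channel, and service names (my-subscription, example-channel, error-handler, event-display) are hypothetical and only illustrate where each setting goes.

```yaml
# Sketch only: all resource names are illustrative assumptions.
apiVersion: messaging.knative.dev/v1
kind: Subscription
metadata:
  name: my-subscription        # name of the subscription
  namespace: default
spec:
  channel:                     # channel that the subscription connects to
    apiVersion: messaging.knative.dev/v1
    kind: Channel
    name: example-channel
  delivery:                    # what happens to events that cannot be delivered
    deadLetterSink:
      ref:
        apiVersion: serving.knative.dev/v1
        kind: Service
        name: error-handler
  subscriber:                  # event sink that receives events from the channel
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
```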

Apply the YAML file:

    $ oc apply -f <filename>

6.3.3. Creating a subscription by using the Knative CLI

After you have created a channel and an event sink, you can create a subscription to enable event delivery. Using the Knative (kn) CLI to create subscriptions provides a more streamlined and intuitive user interface than modifying YAML files directly. You can use the kn subscription create command with the appropriate flags to create a subscription.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on your OpenShift Container Platform cluster.

- You have installed the Knative (kn) CLI.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

Create a subscription to connect a sink to a channel:

    $ kn subscription create <subscription_name> \
      --channel <group:version:kind>:<channel_name> \
      --sink <sink_prefix>:<sink_name> \
      --sink-dead-letter <sink_prefix>:<sink_name>

- --channel specifies the source for cloud events that should be processed. You must provide the channel name. If you are not using the default InMemoryChannel channel that is backed by the Channel custom resource, you must prefix the channel name with the <group:version:kind> for the specified channel type. For example, this will be messaging.knative.dev:v1beta1:KafkaChannel for an Apache Kafka backed channel.

- --sink specifies the target destination to which the event should be delivered. By default, the <sink_name> is interpreted as a Knative service of this name, in the same namespace as the subscription. You can specify the type of the sink by using one of the following prefixes:

  - ksvc: A Knative service.

  - channel: A channel that should be used as destination. Only default channel types can be referenced here.

  - broker: An Eventing broker.

- Optional: --sink-dead-letter specifies a sink which events should be sent to in cases where events fail to be delivered. For more information, see the OpenShift Serverless Event delivery documentation.

Example command

    $ kn subscription create mysubscription --channel mychannel --sink ksvc:event-display

Example output

    Subscription 'mysubscription' created in namespace 'default'.

Verification

To confirm that the channel is connected to the event sink, or subscriber, by a subscription, list the existing subscriptions and inspect the output:

    $ kn subscription list

Example output

    NAME             CHANNEL             SUBSCRIBER           REPLY   DEAD LETTER SINK   READY   REASON
    mysubscription   Channel:mychannel   ksvc:event-display                              True

Deleting a subscription

Delete a subscription:

    $ kn subscription delete <subscription_name>

6.3.4. Creating a subscription by using the Administrator perspective

After you have created a channel and an event sink, also known as a subscriber, you can create a subscription to enable event delivery. Subscriptions are created by configuring a Subscription object, which specifies the channel and the subscriber to deliver events to. You can also specify some subscriber-specific options, such as how to handle failures.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on your OpenShift Container Platform cluster.

- You have logged in to the web console and are in the Administrator perspective.

- You have cluster administrator permissions on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

- You have created a Knative channel.

- You have created a Knative service to use as a subscriber.

Procedure

- In the Administrator perspective of the OpenShift Container Platform web console, navigate to Serverless → Eventing.

- In the Channel tab, select the Options menu for the channel that you want to add a subscription to.

- Click Add Subscription in the list.

- In the Add Subscription dialogue box, select a Subscriber for the subscription. The subscriber is the Knative service that receives events from the channel.

- Click Add.

6.3.5. Next steps

- Configure event delivery parameters that are applied in cases where an event fails to be delivered to an event sink.

6.4. Default channel implementation

You can use the default-ch-webhook config map to specify the default channel implementation of Knative Eventing. You can specify the default channel implementation for the entire cluster or for one or more namespaces. Currently the InMemoryChannel and KafkaChannel channel types are supported.

6.4.1. Configuring the default channel implementation

Prerequisites

- You have administrator permissions on OpenShift Container Platform.

- You have installed the OpenShift Serverless Operator and Knative Eventing on your cluster.

- If you want to use Knative channels for Apache Kafka as the default channel implementation, you must also install the KnativeKafka CR on your cluster.

Procedure

Modify the KnativeEventing custom resource to add configuration details for the default-ch-webhook config map. The configuration uses the following settings:

- spec.config: Specifies the config maps that you want to add modified configurations for.

- default-ch-webhook: This config map can be used to specify the default channel implementation for the cluster or for one or more namespaces.

- clusterDefault: The cluster-wide default channel type configuration, for example InMemoryChannel.

- namespaceDefaults: The namespace-scoped default channel type configuration, for example KafkaChannel for the my-namespace namespace.
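The following is a minimal sketch of such a KnativeEventing resource, in which the cluster default is InMemoryChannel and the my-namespace namespace uses KafkaChannel. The operator API version, the resource name and namespace, and the delivery and partition values are assumptions for illustration; check them against your installed Operator version.

```yaml
# Sketch only: API version, names, and tuning values are assumptions.
apiVersion: operator.knative.dev/v1beta1
kind: KnativeEventing
metadata:
  name: knative-eventing
  namespace: knative-eventing
spec:
  config:                        # config maps to add modified configurations for
    default-ch-webhook:          # config map that holds the channel defaults
      default-ch-config: |
        clusterDefault:          # cluster-wide default channel type
          apiVersion: messaging.knative.dev/v1
          kind: InMemoryChannel
          spec:
            delivery:
              backoffDelay: PT0.5S
              backoffPolicy: exponential
              retry: 5
        namespaceDefaults:       # per-namespace default channel types
          my-namespace:
            apiVersion: messaging.knative.dev/v1beta1
            kind: KafkaChannel
            spec:
              numPartitions: 1
              replicationFactor: 1
```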

Important: Configuring a namespace-specific default overrides any cluster-wide settings.

6.5. Security configuration for channels

6.5.1. Configuring TLS authentication for Knative channels for Apache Kafka

Transport Layer Security (TLS) is used by Apache Kafka clients and servers to encrypt traffic between Knative and Kafka, as well as for authentication. TLS is the only supported method of traffic encryption for the Knative broker implementation for Apache Kafka.

Prerequisites

- You have cluster or dedicated administrator permissions on OpenShift Container Platform.

- The OpenShift Serverless Operator, Knative Eventing, and the KnativeKafka CR are installed on your OpenShift Container Platform cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have a Kafka cluster CA certificate stored as a .pem file.

- You have a Kafka cluster client certificate and a key stored as .pem files.

- Install the OpenShift CLI (oc).

Procedure

Create the certificate files as secrets in your chosen namespace:

    $ oc create secret -n <namespace> generic <kafka_auth_secret> \
      --from-file=ca.crt=caroot.pem \
      --from-file=user.crt=certificate.pem \
      --from-file=user.key=key.pem

Important: Use the key names ca.crt, user.crt, and user.key. Do not change them.

Start editing the KnativeKafka custom resource:

    $ oc edit knativekafka

Reference your secret and the namespace of the secret.

Note: Make sure to specify the matching port in the bootstrap server.

For example:
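A minimal sketch of the channel settings in the KnativeKafka resource, assuming a secret named tls-user in the kafka namespace and a TLS listener on port 9093 (the secret name, namespace, bootstrap host, and port are all illustrative assumptions), might look like this:

```yaml
# Sketch only: secret name, namespace, host, and port are illustrative assumptions.
apiVersion: operator.serverless.openshift.io/v1alpha1
kind: KnativeKafka
metadata:
  name: knative-kafka
  namespace: knative-eventing
spec:
  channel:
    enabled: true
    authSecretName: tls-user          # secret created in the first step
    authSecretNamespace: kafka        # namespace that holds the secret
    # The port must match the TLS listener of your Kafka cluster.
    bootstrapServers: my-cluster-kafka-bootstrap.kafka.svc:9093
```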


6.5.2. Configuring SASL authentication for Knative channels for Apache Kafka

Simple Authentication and Security Layer (SASL) is used by Apache Kafka for authentication. If you use SASL authentication on your cluster, users must provide credentials to Knative for communicating with the Kafka cluster; otherwise events cannot be produced or consumed.

Prerequisites

- You have cluster or dedicated administrator permissions on OpenShift Container Platform.

- The OpenShift Serverless Operator, Knative Eventing, and the KnativeKafka CR are installed on your OpenShift Container Platform cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have a username and password for a Kafka cluster.

- You have chosen the SASL mechanism to use, for example, PLAIN, SCRAM-SHA-256, or SCRAM-SHA-512.

- If TLS is enabled, you also need the ca.crt certificate file for the Kafka cluster.

- Install the OpenShift CLI (oc).

Procedure

Create the certificate files as secrets in your chosen namespace:

    $ oc create secret -n <namespace> generic <kafka_auth_secret> \
      --from-file=ca.crt=caroot.pem \
      --from-literal=password="SecretPassword" \
      --from-literal=saslType="SCRAM-SHA-512" \
      --from-literal=user="my-sasl-user"

- Use the key names ca.crt, password, and sasl.mechanism. Do not change them.

- If you want to use SASL with public CA certificates, you must use the tls.enabled=true flag, rather than the ca.crt argument, when creating the secret. For example:

      $ oc create secret -n <namespace> generic <kafka_auth_secret> \
        --from-literal=tls.enabled=true \
        --from-literal=password="SecretPassword" \
        --from-literal=saslType="SCRAM-SHA-512" \
        --from-literal=user="my-sasl-user"

Start editing the KnativeKafka custom resource:

    $ oc edit knativekafka

Reference your secret and the namespace of the secret.

Note: Make sure to specify the matching port in the bootstrap server.

For example:
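A minimal sketch of the channel settings in the KnativeKafka resource, assuming a secret named scram-user in the kafka namespace and a SASL listener on port 9093 (the secret name, namespace, bootstrap host, and port are all illustrative assumptions), might look like this:

```yaml
# Sketch only: secret name, namespace, host, and port are illustrative assumptions.
apiVersion: operator.serverless.openshift.io/v1alpha1
kind: KnativeKafka
metadata:
  name: knative-kafka
  namespace: knative-eventing
spec:
  channel:
    enabled: true
    authSecretName: scram-user        # secret created in the first step
    authSecretNamespace: kafka        # namespace that holds the secret
    # The port must match the SASL listener of your Kafka cluster.
    bootstrapServers: my-cluster-kafka-bootstrap.kafka.svc:9093
```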
