Este contenido no está disponible en el idioma seleccionado.

Cluster administration

Configuring Red Hat OpenShift Service on AWS clusters

Abstract

Chapter 1. Cluster notifications

Cluster notifications (sometimes referred to as service logs) are messages about the status, health, or performance of your cluster.

Cluster notifications are the primary way that Red Hat Site Reliability Engineering (SRE) communicates with you about the health of your managed cluster. Red Hat SRE may also use cluster notifications to prompt you to perform an action in order to resolve or prevent an issue with your cluster.

Cluster owners and administrators must regularly review and action cluster notifications to ensure clusters remain healthy and supported.

You can view cluster notifications in the Red Hat Hybrid Cloud Console, in the Cluster history tab for your cluster. By default, only the cluster owner receives cluster notifications as emails. If other users need to receive cluster notification emails, add each user as a notification contact for your cluster.

1.2. What to expect from cluster notifications

As a cluster administrator, you need to be aware of when and why cluster notifications are sent, as well as their types and severity levels, in order to effectively understand the health and administration needs of your cluster.

1.2.1. Cluster notification policy

Cluster notifications are designed to keep you informed about the health of your cluster and high impact events that affect it.

Most cluster notifications are generated and sent automatically to ensure that you are immediately informed of problems or important changes to the state of your cluster.

In certain situations, Red Hat Site Reliability Engineering (SRE) creates and sends cluster notifications to provide additional context and guidance for a complex issue.

Cluster notifications are not sent for low-impact events, low-risk security updates, routine operations and maintenance, or minor, transient issues that are quickly resolved by Red Hat SRE.

Red Hat services automatically send notifications when:

- Remote health monitoring or environment verification checks detect an issue in your cluster, for example, when a worker node has low disk space.

- Significant cluster life cycle events occur, for example, when scheduled maintenance or upgrades begin, or cluster operations are impacted by an event, but do not require customer intervention.

- Significant cluster management changes occur, for example, when cluster ownership or administrative control is transferred from one user to another.

- Your cluster subscription is changed or updated, for example, when Red Hat makes updates to subscription terms or features available to your cluster.

SRE creates and sends notifications when:

- An incident results in a degradation or outage that impacts your cluster’s availability or performance, for example, your cloud provider has a regional outage. SRE sends subsequent notifications to inform you of incident resolution progress, and when the incident is resolved.

- A security vulnerability, security breach, or unusual activity is detected on your cluster.

- Red Hat detects that changes you have made are creating or may result in cluster instability.

- Red Hat detects that your workloads are causing performance degradation or instability in your cluster.

1.2.2. Cluster notification severity levels

Each cluster notification has an associated severity level to help you identify notifications with the greatest impact to your business. You can filter cluster notifications according to these severity levels in the Red Hat Hybrid Cloud Console, in the Cluster history tab for your cluster.

Red Hat uses the following severity levels for cluster notifications, from most to least severe:

- Critical

- Immediate action is required. One or more key functions of a service or cluster is not working, or will stop working soon. A critical alert is important enough to page on-call staff and interrupt regular workflows.

- Major

- Immediate action is strongly recommended. One or more key functions of the cluster will soon stop working. A major issue may lead to a critical issue if it is not addressed in a timely manner.

- Warning

- Action is required as soon as possible. One or more key functions of the cluster are not working optimally and may degrade further, but do not pose an immediate danger to the functioning of the cluster.

- Info

- No action necessary. This severity does not describe problems that need to be addressed, only important information about meaningful or important life cycle, service, or cluster events.

- Debug

- No action necessary. Debug notifications provide low-level information about less important lifecycle, service, or cluster events to aid in debugging unexpected behavior.

1.2.3. Cluster notification types

Each cluster notification has an associated notification type to help you identify notifications that are relevant to your role and responsibilities. You can filter cluster notifications according to these types in the Red Hat Hybrid Cloud Console, in the Cluster history tab for your cluster.

Red Hat uses the following notification types to indicate notification relevance.

- Capacity management

- Notifications for events related to updating, creating, or deleting node pools, machine pools, compute replicas or quotas (load balancer, storage, etc.).

- Cluster access

- Notifications for events related to adding or deleting groups, roles or identity providers, for example, when SRE cannot access your cluster because STS credentials have expired, when there is a configuration problem with your AWS roles, or when you add or remove identity providers.

- Cluster add-ons

- Notifications for events related to add-on management or upgrade maintenance for add-ons, for example, when an add-on is installed, upgraded, or removed, or cannot be installed due to unmet requirements.

- Cluster configuration

- Notifications for cluster tuning events, workload monitoring, and inflight checks.

- Cluster lifecycle

- Notifications for cluster or cluster resource creation, deletion, and registration, or change in cluster or resource status (for example, ready or hibernating).

- Cluster networking

- Notifications related to cluster networking, including HTTP/S proxy, router, and ingress state.

- Cluster ownership

- Notifications related to cluster ownership transfer from one user to another.

- Cluster scaling

- Notifications related to updating, creating, or deleting node pools, machine pools, compute replicas or quota.

- Cluster security

- Events related to cluster security, for example, an increased number of failed access attempts, updates to trust bundles, or software updates with security impact.

- Cluster subscription

- Cluster expiration, trial cluster notifications, or switching from free to paid.

- Cluster updates

- Anything relating to upgrades, such as upgrade maintenance or enablement.

- Customer support

- Updates on support case status.

- General notification

- The default notification type. This is only used for notifications that do not have a more specific category.

1.3. Viewing cluster notifications using the Red Hat Hybrid Cloud Console

Cluster notifications provide important information about the health of your cluster. You can view notifications that have been sent to your cluster in the Cluster history tab on the Red Hat Hybrid Cloud Console.

Prerequisites

- You are logged in to the Hybrid Cloud Console.

Procedure

- Navigate to the Clusters page of the Hybrid Cloud Console.

- Click the name of your cluster to go to the cluster details page.

Click the Cluster history tab.

Cluster notifications appear under the Cluster history heading.

Optional: Filter for relevant cluster notifications

Use the filter controls to hide cluster notifications that are not relevant to you, so that you can focus on your area of expertise or on resolving a critical issue. You can filter notifications based on text in the notification description, severity level, notification type, when the notification was received, and which system or person triggered the notification.

1.4. Cluster notification emails

By default, when a cluster notification is sent to the cluster, it is also sent as an email to the cluster owner. You can configure additional recipients for notification emails to ensure that all appropriate users remain informed about the state of the cluster.

1.4.1. Adding notification contacts to your cluster

Notification contacts receive emails when cluster notifications are sent to the cluster. By default, only the cluster owner receives cluster notification emails. You can configure other cluster users as additional notification contacts in your cluster support settings.

Prerequisites

- Your cluster is deployed and registered to the Red Hat Hybrid Cloud Console.

- You are logged in to the Hybrid Cloud Console as the cluster owner or as a user with the cluster editor role.

- The intended notification recipient has a Red Hat Customer Portal account associated with the same organization as the cluster owner.

Procedure

- Navigate to the Clusters page of the Hybrid Cloud Console.

- Click the name of your cluster to go to the cluster details page.

- Click the Support tab.

- On the Support tab, find the Notification contacts section.

- Click Add notification contact.

- In the Red Hat username or email field, enter the email address or the user name of the new recipient.

- Click Add contact.

Verification steps

- The "Notification contact added successfully" message displays.

Troubleshooting

- The Add notification contact button is disabled

- This button is disabled for users who do not have permission to add a notification contact. Log in to an account with the cluster owner, cluster editor, or cluster administrator role and try again.

- Error:

Could not find any account identified by <username>or<email-address> - This error occurs when the intended notification recipient is not part of the same Red Hat account organization as the cluster owner. Contact your organization administrator to add the intended recipient to the relevant organization and try again.

1.4.2. Removing notification contacts from your cluster

Notification contacts receive emails when cluster notifications are sent to the cluster.

You can remove notification contacts in your cluster support settings to prevent them from receiving notification emails.

Prerequisites

- Your cluster is deployed and registered to the Red Hat Hybrid Cloud Console.

- You are logged in to the Hybrid Cloud Console as the cluster owner or as a user with the cluster editor role.

Procedure

- Navigate to the Clusters page of the Hybrid Cloud Console.

- Click the name of your cluster to go to the cluster details page.

- Click the Support tab.

- On the Support tab, find the Notification contacts section.

- Click the options menu (⚙) beside the recipient you want to remove.

- Click Delete.

Verification steps

- The "Notification contact deleted successfully" message displays.

1.5. Troubleshooting

If you are not receiving cluster notification emails

-

Ensure that emails sent from

@redhat.comaddresses are not filtered out of your email inbox. - Ensure that your correct email address is listed as a notification contact for the cluster.

- Ask the cluster owner or administrator to add you as a notification contact: Cluster notification emails.

If your cluster does not receive notifications

-

Ensure that your cluster can access resources at

api.openshift.com.

Chapter 2. Configuring private connections

2.1. Configuring private connections

Private cluster access can be implemented to suit the needs of your Red Hat OpenShift Service on AWS environment.

Procedure

Access your Red Hat OpenShift Service on AWS AWS account and use one or more of the following methods to establish a private connection to your cluster:

- Configuring AWS VPC peering: Enable VPC peering to route network traffic between two private IP addresses.

- Configuring AWS VPN: Establish a Virtual Private Network to securely connect your private network to your Amazon Virtual Private Cloud.

- Configuring AWS Direct Connect: Configure AWS Direct Connect to establish a dedicated network connection between your private network and an AWS Direct Connect location.

2.2. Configuring AWS VPC peering

This sample process configures an Amazon Web Services (AWS) VPC containing an Red Hat OpenShift Service on AWS cluster to peer with another AWS VPC network. For more information about creating an AWS VPC Peering connection or for other possible configurations, see the AWS VPC Peering guide.

2.2.1. VPC peering terms

When setting up a VPC peering connection between two VPCs on two separate AWS accounts, the following terms are used:

| Red Hat OpenShift Service on AWS AWS Account | The AWS account that contains the Red Hat OpenShift Service on AWS cluster. |

| Red Hat OpenShift Service on AWS Cluster VPC | The VPC that contains the Red Hat OpenShift Service on AWS cluster. |

| Customer AWS Account | Your non-Red Hat OpenShift Service on AWS AWS Account that you would like to peer with. |

| Customer VPC | The VPC in your AWS Account that you would like to peer with. |

| Customer VPC Region | The region where the customer’s VPC resides. |

As of July 2018, AWS supports inter-region VPC peering between all commercial regions excluding China.

2.2.2. Initiating the VPC peer request

You can send a VPC peering connection request from the Red Hat OpenShift Service on AWS AWS Account to the Customer AWS Account.

Prerequisites

Gather the following information about the Customer VPC required to initiate the peering request:

- Customer AWS account number

- Customer VPC ID

- Customer VPC Region

- Customer VPC CIDR

- Check the CIDR block used by the Red Hat OpenShift Service on AWS Cluster VPC. If it overlaps or matches the CIDR block for the Customer VPC, then peering between these two VPCs is not possible; see the Amazon VPC Unsupported VPC Peering Configurations documentation for details. If the CIDR blocks do not overlap, you can continue with the procedure.

Procedure

- Log in to the Web Console for the Red Hat OpenShift Service on AWS AWS Account and navigate to the VPC Dashboard in the region where the cluster is being hosted.

- Go to the Peering Connections page and click the Create Peering Connection button.

Verify the details of the account you are logged in to and the details of the account and VPC you are connecting to:

- Peering connection name tag: Set a descriptive name for the VPC Peering Connection.

- VPC (Requester): Select the Red Hat OpenShift Service on AWS Cluster VPC ID from the list.

- Account: Select Another account and provide the Customer AWS Account number *(without dashes).

- Region: If the Customer VPC Region differs from the current region, select Another Region and select the customer VPC Region from the list.

- VPC (Accepter): Set the Customer VPC ID.

- Click Create Peering Connection.

- Confirm that the request enters a Pending state. If it enters a Failed state, confirm the details and repeat the process.

2.2.3. Accepting the VPC peer request

After you create the VPC peering connection, you must accept the request in the Customer AWS Account.

Prerequisites

- Initiate the VPC peer request.

Procedure

- Log in to the AWS Web Console.

- Navigate to VPC Service.

- Go to Peering Connections.

- Click on Pending peering connection.

- Confirm the AWS Account and VPC ID that the request originated from. This should be from the Red Hat OpenShift Service on AWS AWS Account and Red Hat OpenShift Service on AWS Cluster VPC.

- Click Accept Request.

2.2.4. Configuring the routing tables

After you accept the VPC peering request, both VPCs must configure their routes to communicate across the peering connection.

Prerequisites

- Initiate and accept the VPC peer request.

Procedure

- Log in to the AWS Web Console for the Red Hat OpenShift Service on AWS AWS Account.

- Navigate to the VPC Service, then Route tables.

Select the Route Table for the Red Hat OpenShift Service on AWS Cluster VPC.

NoteOn some clusters, there may be more than one route table for a particular VPC. Select the private one that has a number of explicitly associated subnets.

- Select the Routes tab, then Edit.

- Enter the Customer VPC CIDR block in the Destination text box.

- Enter the Peering Connection ID in the Target text box.

- Click Save.

You must complete the same process with the other VPC’s CIDR block:

- Log into the Customer AWS Web Console → VPC Service → Route Tables.

- Select the Route Table for your VPC.

- Select the Routes tab, then Edit.

- Enter the Red Hat OpenShift Service on AWS Cluster VPC CIDR block in the Destination text box.

- Enter the Peering Connection ID in the Target text box.

- Click Save changes.

The VPC peering connection is now complete. Follow the verification procedure to ensure connectivity across the peering connection is working.

2.2.5. Verifying and troubleshooting VPC peering

After you set up a VPC peering connection, it is best to confirm it has been configured and is working correctly.

Prerequisites

- Initiate and accept the VPC peer request.

- Configure the routing tables.

Procedure

In the AWS console, look at the route table for the cluster VPC that is peered. Ensure that the steps for configuring the routing tables were followed and that there is a route table entry pointing the VPC CIDR range destination to the peering connection target.

If the routes look correct on both the Red Hat OpenShift Service on AWS Cluster VPC route table and Customer VPC route table, then the connection should be tested using the

netcatmethod below. If the test calls are successful, then VPC peering is working correctly.To test network connectivity to an endpoint device,

nc(ornetcat) is a helpful troubleshooting tool. It is included in the default image and provides quick and clear output if a connection can be established:Create a temporary pod using the

busyboximage, which cleans up after itself:oc run netcat-test \ --image=busybox -i -t \ --restart=Never --rm \ -- /bin/sh$ oc run netcat-test \ --image=busybox -i -t \ --restart=Never --rm \ -- /bin/shCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check the connection using

nc.Example successful connection results:

/ nc -zvv 192.168.1.1 8080 10.181.3.180 (10.181.3.180:8080) open sent 0, rcvd 0

/ nc -zvv 192.168.1.1 8080 10.181.3.180 (10.181.3.180:8080) open sent 0, rcvd 0Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example failed connection results:

/ nc -zvv 192.168.1.2 8080 nc: 10.181.3.180 (10.181.3.180:8081): Connection refused sent 0, rcvd 0

/ nc -zvv 192.168.1.2 8080 nc: 10.181.3.180 (10.181.3.180:8081): Connection refused sent 0, rcvd 0Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Exit the container, which automatically deletes the Pod:

/ exit

/ exitCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.3. Configuring AWS VPN

This sample process configures an Amazon Web Services (AWS) Red Hat OpenShift Service on AWS cluster to use a customer’s on-site hardware VPN device.

AWS VPN does not currently provide a managed option to apply NAT to VPN traffic. See the AWS Knowledge Center for more details.

Routing all traffic, for example 0.0.0.0/0, through a private connection is not supported. This requires deleting the internet gateway, which disables SRE management traffic.

For more information about connecting an AWS VPC to remote networks using a hardware VPN device, see the Amazon VPC VPN Connections documentation.

2.3.1. Creating a VPN connection

You can configure an Amazon Web Services (AWS) Red Hat OpenShift Service on AWS cluster to use a customer’s on-site hardware VPN device using the following procedures.

Prerequisites

- Hardware VPN gateway device model and software version, for example Cisco ASA running version 8.3. See the Amazon VPC Network Administrator Guide to confirm whether your gateway device is supported by AWS.

- Public, static IP address for the VPN gateway device.

- BGP or static routing: if BGP, the ASN is required. If static routing, you must configure at least one static route.

- Optional: IP and Port/Protocol of a reachable service to test the VPN connection.

2.3.1.1. Configuring the VPN connection

Procedure

- Log in to the Red Hat OpenShift Service on AWS AWS Account Dashboard, and navigate to the VPC Dashboard.

- Under Virtual private cloud click on Your VPCs and identify the name and VPC ID for the VPC containing the Red Hat OpenShift Service on AWS cluster.

- Under Virtual private network (VPN) click Customer gateways.

- Click Create customer gateway and give it a meaningful name.

- Enter the ASN of your customer gateway device in the BGP ASN field.

- Enter the IP address for your customer gateway devices’s external interface in the IP address field.

- Click Create customer gateway.

If you do not already have a Virtual Private Gateway attached to the intended VPC:

- From the VPC Dashboard, click on Virtual Private Gateways.

- Click Create virtual private gateway, give it a meaningful name.

- Click Create virtual private gateway, leaving the Amazon default ASN.

- Select the newly created gateway.

- Select Actions from the list and click Attach to VPC.

- Select the newly created gateway under Available VPC’s and click Attach to VPC to attach it to the cluster VPC you identified earlier.

2.3.1.2. Establishing the VPN Connection

Procedure

- From the VPC dashboard, under Virtual private network (VPN) click on Site-to-Site VPN connections.

Click Create VPN connection.

- Give it a meaningful name tag.

- Select the Virtual private gateway created previously.

- For Customer gateway, select Existing.

- Select the Customer gateway id by name.

- If the VPN will use BGP, select Dynamic, otherwise select Static and enter the Static IP CIDRs. If there are multiple CIDRs, add each CIDR as Another Rule.

- Click Create VPN connection.

- Under State wait for the VPN status to change from Pending to Available, approximately 5 to 10 minutes.

Select the VPN you just created and click Download configuration.

- From the list, select the vendor, platform, and version of the customer gateway device, then click Download.

- The Generic vendor configuration is also available for retrieving information in a plain text format.

After the VPN connection has been established, be sure to set up Route Propagation or the VPN may not function as expected.

Note the VPC subnet information, which you must add to your configuration as the remote network.

2.3.1.3. Enabling VPN route propagation

After you have set up the VPN connection, you must ensure that route propagation is enabled so that the necessary routes are added to the VPC’s route table.

Procedure

- From the VPC Dashboard, under Virtual private cloud, click on Route tables.

Select the private Route table associated with the VPC that contains your Red Hat OpenShift Service on AWS cluster.

NoteOn some clusters, there may be more than one route table for a particular VPC. Select the private one that has a number of explicitly associated subnets.

- Click on the Route Propagation tab.

In the table that appears, you should see the Virtual Private Gateway you created previously. Check the value in the Propagate column.

- If Propagation is set to No, click Edit route propagation, check the Enable checkbox in Propagation and click Save.

After you configure your VPN tunnel and AWS detects it as Up, your static or BGP routes are automatically added to the route table.

2.3.2. Verifying the VPN connection

After you have set up your side of the VPN tunnel, you can verify that the tunnel is up in the AWS console and that connectivity across the tunnel is working.

Prerequisites

- Created a VPN connection.

Procedure

Verify the tunnel is up in AWS.

- From the VPC Dashboard, under Virtual private network (VPN), click on Site-to-Site VPN connections.

- Select the VPN connection you created previously and click the Tunnel details tab.

- You should see that at least one of the VPN tunnels is in an Up status.

Verify the connection.

To test network connectivity to an endpoint device,

nc(ornetcat) is a helpful troubleshooting tool. It is included in the default image and provides quick and clear output if a connection can be established:Create a temporary pod using the

busyboximage, which cleans up after itself:oc run netcat-test \ --image=busybox -i -t \ --restart=Never --rm \ -- /bin/sh$ oc run netcat-test \ --image=busybox -i -t \ --restart=Never --rm \ -- /bin/shCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check the connection using

nc.Example successful connection results:

/ nc -zvv 192.168.1.1 8080 10.181.3.180 (10.181.3.180:8080) open sent 0, rcvd 0

/ nc -zvv 192.168.1.1 8080 10.181.3.180 (10.181.3.180:8080) open sent 0, rcvd 0Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example failed connection results:

/ nc -zvv 192.168.1.2 8080 nc: 10.181.3.180 (10.181.3.180:8081): Connection refused sent 0, rcvd 0

/ nc -zvv 192.168.1.2 8080 nc: 10.181.3.180 (10.181.3.180:8081): Connection refused sent 0, rcvd 0Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Exit the container, which automatically deletes the Pod:

/ exit

/ exitCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.3.3. Troubleshooting the VPN connection

Tunnel does not connect

If the tunnel connection is still Down, there are several things you can verify:

- The AWS tunnel will not initiate a VPN connection. The connection attempt must be initiated from the Customer Gateway.

- Ensure that your source traffic is coming from the same IP as the configured customer gateway. AWS will silently drop all traffic to the gateway whose source IP address does not match.

- Ensure that your configuration matches values supported by AWS. This includes IKE versions, DH groups, IKE lifetime, and more.

- Recheck the route table for the VPC. Ensure that propagation is enabled and that there are entries in the route table that have the virtual private gateway you created earlier as a target.

- Confirm that you do not have any firewall rules that could be causing an interruption.

- Check if you are using a policy-based VPN as this can cause complications depending on how it is configured.

- Further troubleshooting steps can be found at the AWS Knowledge Center.

Tunnel does not stay connected

If the tunnel connection has trouble staying Up consistently, know that all AWS tunnel connections must be initiated from your gateway. AWS tunnels do not initiate tunneling.

Red Hat recommends setting up an SLA Monitor (Cisco ASA) or some device on your side of the tunnel that constantly sends "interesting" traffic, for example ping, nc, or telnet, at any IP address configured within the VPC CIDR range. It does not matter whether the connection is successful, just that the traffic is being directed at the tunnel.

Secondary tunnel in Down state

When a VPN tunnel is created, AWS creates an additional failover tunnel. Depending upon the gateway device, sometimes the secondary tunnel will be seen as in the Down state.

The AWS Notification is as follows:

You have new non-redundant VPN connections One or more of your vpn connections are not using both tunnels. This mode of operation is not highly available and we strongly recommend you configure your second tunnel. View your non-redundant VPN connections.

You have new non-redundant VPN connections

One or more of your vpn connections are not using both tunnels. This mode of

operation is not highly available and we strongly recommend you configure your

second tunnel. View your non-redundant VPN connections.2.4. Configuring AWS Direct Connect

This process describes accepting an AWS Direct Connect virtual interface with Red Hat OpenShift Service on AWS. For more information about AWS Direct Connect types and configuration, see the AWS Direct Connect components documentation.

2.4.1. AWS Direct Connect methods

A Direct Connect connection requires a hosted Virtual Interface (VIF) connected to a Direct Connect Gateway (DXGateway), which is in turn associated to a Virtual Gateway (VGW) or a Transit Gateway in order to access a remote VPC in the same or another account.

If you do not have an existing DXGateway, the typical process involves creating the hosted VIF, with the DXGateway and VGW being created in the Red Hat OpenShift Service on AWS AWS Account.

If you have an existing DXGateway connected to one or more existing VGWs, the process involves the Red Hat OpenShift Service on AWS AWS Account sending an Association Proposal to the DXGateway owner. The DXGateway owner must ensure that the proposed CIDR will not conflict with any other VGWs they have associated.

See the following AWS documentation for more details:

When connecting to an existing DXGateway, you are responsible for the costs.

There are two configuration options available:

| Method 1 | Create the hosted VIF and then the DXGateway and VGW. |

| Method 2 | Request a connection via an existing Direct Connect Gateway that you own. |

2.4.2. Creating the hosted Virtual Interface

Prerequisites

- Gather Red Hat OpenShift Service on AWS AWS Account ID.

2.4.2.1. Determining the type of Direct Connect connection

View the Direct Connect Virtual Interface details to determine the type of connection.

Procedure

- Log in to the Red Hat OpenShift Service on AWS AWS Account Dashboard and select the correct region.

- Select Direct Connect from the Services menu.

- There will be one or more Virtual Interfaces waiting to be accepted, select one of them to view the Summary.

- View the Virtual Interface type: private or public.

- Record the Amazon side ASN value.

If the Direct Connect Virtual Interface type is Private, a Virtual Private Gateway is created. If the Direct Connect Virtual Interface is Public, a Direct Connect Gateway is created.

2.4.2.2. Creating a Private Direct Connect

A Private Direct Connect is created if the Direct Connect Virtual Interface type is Private.

Procedure

- Log in to the Red Hat OpenShift Service on AWS AWS Account Dashboard and select the correct region.

- From the AWS region, select VPC from the Services menu.

- From Virtual private network (VPN), select Virtual private gateways.

- Click Create virtual private gateway.

- Give the Virtual Private Gateway a suitable name.

- Select Custom ASN in the Enter custom ASN field enter the Amazon side ASN value gathered previously.

- Click Create virtual private gateway.

- Click the newly created Virtual Private Gateway and choose Attach to VPC from the Actions tab.

- Select the Red Hat OpenShift Service on AWS Cluster VPC from the list, and click Attach VPC.

Note: Editing the kubelet config will cause the nodes for your machine pool to be recreated. This ma???

2.4.2.3. Creating a Public Direct Connect

A Public Direct Connect is created if the Direct Connect Virtual Interface type is Public.

Procedure

- Log in to the Red Hat OpenShift Service on AWS AWS Account Dashboard and select the correct region.

- From the Red Hat OpenShift Service on AWS AWS Account region, select Direct Connect from the Services menu.

- Select Direct Connect gateways and Create Direct Connect gateway.

- Give the Direct Connect gateway a suitable name.

- In the Amazon side ASN, enter the Amazon side ASN value gathered previously.

- Click Create the Direct Connect gateway.

2.4.2.4. Verifying the Virtual Interfaces

After the Direct Connect Virtual Interfaces have been accepted, wait a short period and view the status of the Interfaces.

Procedure

- Log in to the Red Hat OpenShift Service on AWS AWS Account Dashboard and select the correct region.

- From the Red Hat OpenShift Service on AWS AWS Account region, select Direct Connect from the Services menu.

- Select one of the Direct Connect Virtual Interfaces from the list.

- Check the Interface State has become Available

- Check the Interface BGP Status has become Up.

- Repeat this verification for any remaining Direct Connect Interfaces.

After the Direct Connect Virtual Interfaces are available, you can log in to the Red Hat OpenShift Service on AWS AWS Account Dashboard and download the Direct Connect configuration file for configuration on your side.

2.4.3. Connecting to an existing Direct Connect Gateway

Prerequisites

- Confirm the CIDR range of the Red Hat OpenShift Service on AWS VPC will not conflict with any other VGWs you have associated.

Gather the following information:

- The Direct Connect Gateway ID.

- The AWS Account ID associated with the virtual interface.

- The BGP ASN assigned for the DXGateway. Optional: the Amazon default ASN may also be used.

Procedure

- Log in to the Red Hat OpenShift Service on AWS AWS Account Dashboard and select the correct region.

- From the Red Hat OpenShift Service on AWS AWS Account region, select VPC from the Services menu.

- From Virtual private network (VPN), select Virtual private gateways.

- Select Create virtual private gateway.

- Give the virtual private gateway a suitable name in the Details field.

- Click Custom ASN and enter the Amazon side ASN value gathered previously or use the Amazon Provided ASN.

- Click Create virtual private gateway.

- From the Red Hat OpenShift Service on AWS AWS Account region, select Direct Connect from the Services menu.

- Click virtual private gateways and select the virtual private gateway.

- Click View details.

- Click the Direct Connect gateway associations tab.

- Click Associate Direct Connect gateway

- Under Association account type, for Account owner, click Another account.

- Under Association settings, for Direct Connect gateway ID, enter the ID of the Direct Connect gateway.

- For Direct Connect gateway owner, enter the ID of the AWS account that owns the Direct Connect gateway.

- Optional: Add prefixes to Allowed prefixes, separating them using commas or put them on separate lines.

- Click Associate Direct Connect gateway.

- After the Association Proposal has been sent, it will be waiting for your acceptance. The final steps you must perform are available in the AWS Documentation.

2.4.4. Troubleshooting Direct Connect

Further troubleshooting can be found in the Troubleshooting AWS Direct Connect documentation.

Chapter 3. Cluster autoscaling for Red Hat OpenShift Service on AWS

Applying autoscaling to Red Hat OpenShift Service on AWS clusters involves configuring one or more machine pools with autoscaling. You can use the Cluster Autoscaler to further configure cluster-wide autoscaling that is applicable to all of the machine pools that are autoscaling.

3.1. About the cluster autoscaler

The cluster autoscaler adjusts the size of a Red Hat OpenShift Service on AWS cluster to meet its current deployment needs. It uses declarative, Kubernetes-style arguments to provide infrastructure management that does not rely on objects of a specific cloud provider. The cluster autoscaler has a cluster scope, and is not associated with a particular namespace. In Red Hat OpenShift Service on AWS, the Cluster Autoscaler is fully managed, which means it is hosted along with the control plane.

The cluster autoscaler increases the size of the cluster when there are pods that fail to schedule on any of the current worker nodes due to insufficient resources or when another node is necessary to meet deployment needs. The cluster autoscaler does not increase the cluster resources beyond the limits that you specify.

The cluster autoscaler computes the total memory, CPU, and GPU only on the nodes that belong to autoscaling machine pools. All of the machine pool nodes that are not autoscaling are excluded from this aggregation. For example, if you set the maxNodesTotal to 50 on a Red Hat OpenShift Service on AWS cluster with three machine pools in which a single machine pool is not autoscaling, the cluster autoscaler restricts the total nodes to 50 in only those two machine pools that are autoscaling. The single manually scaling machine pool can have additional nodes, making the overall cluster nodes total more than 50.

3.1.1. Automatic node removal

Every 10 seconds, the cluster autoscaler checks which nodes are unnecessary in the cluster and removes them. The cluster autoscaler considers a node for removal if the following conditions apply:

-

The node utilization is less than the node utilization level threshold for the cluster. The node utilization level is the sum of the requested resources divided by the allocated resources for the node. If you do not specify a value in the

ClusterAutoscalercustom resource, the cluster autoscaler uses a default value of0.5, which corresponds to 50% utilization. - The cluster autoscaler can move all pods running on the node to the other nodes. The Kubernetes scheduler is responsible for scheduling pods on the nodes.

- The cluster autoscaler does not have scale down disabled annotation.

If the following types of pods are present on a node, the cluster autoscaler will not remove the node:

- Pods with restrictive pod disruption budgets (PDBs).

- Kube-system pods that do not run on the node by default.

- Kube-system pods that do not have a PDB or have a PDB that is too restrictive.

- Pods that are not backed by a controller object such as a deployment, replica set, or stateful set.

- Pods with local storage.

- Pods that cannot be moved elsewhere because of a lack of resources, incompatible node selectors or affinity, matching anti-affinity, and so on.

-

Unless they also have a

"cluster-autoscaler.kubernetes.io/safe-to-evict": "true"annotation, pods that have a"cluster-autoscaler.kubernetes.io/safe-to-evict": "false"annotation.

For example, you set the maximum CPU limit to 64 cores and configure the cluster autoscaler to only create machines that have 8 cores each. If your cluster starts with 30 cores, the cluster autoscaler can add up to 4 more nodes with 32 cores, for a total of 62.

3.1.2. Limitations

If you configure the cluster autoscaler, additional usage restrictions apply:

- Do not modify the nodes that are in autoscaled node groups directly. All nodes within the same node group have the same capacity and labels and run the same system pods.

- Specify requests for your pods.

- If you have to prevent pods from being deleted too quickly, configure appropriate PDBs.

- Confirm that your cloud provider quota is large enough to support the maximum node pools that you configure.

- Do not run additional node group autoscalers, especially the ones offered by your cloud provider.

The cluster autoscaler only adds nodes in autoscaled node groups if doing so would result in a schedulable pod. If the available node types cannot meet the requirements for a pod request, or if the node groups that could meet these requirements are at their maximum size, the cluster autoscaler cannot scale up.

3.1.3. Interaction with other scheduling features

The horizontal pod autoscaler (HPA) and the cluster autoscaler modify cluster resources in different ways. The HPA changes the deployment’s or replica set’s number of replicas based on the current CPU load. If the load increases, the HPA creates new replicas, regardless of the amount of resources available to the cluster. If there are not enough resources, the cluster autoscaler adds resources so that the HPA-created pods can run. If the load decreases, the HPA stops some replicas. If this action causes some nodes to be underutilized or completely empty, the cluster autoscaler deletes the unnecessary nodes.

The cluster autoscaler takes pod priorities into account. The Pod Priority and Preemption feature enables scheduling pods based on priorities if the cluster does not have enough resources, but the cluster autoscaler ensures that the cluster has resources to run all pods. To honor the intention of both features, the cluster autoscaler includes a priority cutoff function. You can use this cutoff to schedule "best-effort" pods, which do not cause the cluster autoscaler to increase resources but instead run only when spare resources are available.

Pods with priority lower than the cutoff value do not cause the cluster to scale up or prevent the cluster from scaling down. No new nodes are added to run the pods, and nodes running these pods might be deleted to free resources.

3.2. Enable autoscaling during cluster creation by using the interactive mode with the ROSA CLI

You can use the ROSA command-line interface (CLI) (rosa) in interactive mode of your terminal, if available, to set cluster-wide autoscaling behavior during cluster creation.

Interactive mode provides more information about available configurable parameters. Interactive mode also does basic checks and preflight validations, meaning that if a provided value is invalid, the terminal outputs a prompt for a valid input.

Procedure

During cluster creation, use the

--enable-autoscalingand--interactiveparameters to enable cluster autoscaling:Example

rosa create cluster --cluster-name <cluster_name> --enable-autoscaling --interactive

$ rosa create cluster --cluster-name <cluster_name> --enable-autoscaling --interactiveCopy to Clipboard Copied! Toggle word wrap Toggle overflow

If your cluster name is longer than 15 characters, it will contain an autogenerated domain prefix as a sub-domain for your provisioned cluster on *.openshiftapps.com.

To customize the subdomain, use the --domain-prefix flag. The domain prefix cannot be longer than 15 characters, must be unique, and cannot be changed after cluster creation.

When the following prompt appears, enter y to go through all available autoscaling options.

Example interactive prompt

? Configure cluster-autoscaler (optional): [? for help] (y/N) y <enter>

? Configure cluster-autoscaler (optional): [? for help] (y/N) y <enter>3.3. Enable autoscaling during cluster creation with the ROSA CLI

You can use the ROSA command-line interface (CLI) (rosa) to set cluster-wide autoscaling behavior during cluster creation. You can enable the autoscaler on the entire machine or just a cluster.

Procedure

-

During cluster creation, type

--enable autoscalingafter the cluster name to enable machine autoscaling:

If your cluster name is longer than 15 characters, it will contain an autogenerated domain prefix as a sub-domain for your provisioned cluster on *.openshiftapps.com.

To customize the subdomain, use the --domain-prefix flag. The domain prefix cannot be longer than 15 characters, must be unique, and cannot be changed after cluster creation.

Example

rosa create cluster --cluster-name <cluster_name> --enable-autoscaling

$ rosa create cluster --cluster-name <cluster_name> --enable-autoscalingSet at least one parameter to enable cluster autoscaling by running the following command:

Example

rosa create cluster --cluster-name <cluster_name> --enable-autoscaling <parameter>

$ rosa create cluster --cluster-name <cluster_name> --enable-autoscaling <parameter>3.4. Cluster autoscaling parameters using the ROSA CLI

You can add the following parameters to the cluster creation command to configure autoscaler parameters when using the ROSA command-line interface (CLI) (rosa).

| Setting | Description | Type or Range | Example/Instruction |

|---|---|---|---|

|

| Gives pods graceful termination time before scaling down, measured in seconds. Replace int in the command with the number of seconds you want to use. |

|

|

|

| The priority that a pod must exceed to cause the cluster autoscaler to deploy additional nodes. Replace int in the command with the number you want to use, can be negative. |

|

|

|

| Maximum time that the cluster autoscaler waits for a node to be provisioned. Replace string in the command with an integer and time unit (ns,us,µs,ms,s,m,h). |

|

|

|

| Maximum amount of nodes in the cluster, including the autoscaled nodes. Replace int in the command with the number you want to use. |

|

|

3.5. Edit autoscaling after cluster creation with the ROSA CLI

You can edit any specific parameters of the cluster autoscaler after creating the autoscaler when using the ROSA command-line interface (CLI) (rosa).

Procedure

To edit the cluster autoscaler, run the following command:

Example

rosa edit autoscaler --cluster=<mycluster>

$ rosa edit autoscaler --cluster=<mycluster>Copy to Clipboard Copied! Toggle word wrap Toggle overflow To edit a specific parameter, run the following command:

Example

rosa edit autoscaler --cluster=<mycluster> <parameter>

$ rosa edit autoscaler --cluster=<mycluster> <parameter>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

3.6. View autoscaler configurations with the ROSA CLI

You can view your cluster autoscaler configurations using the rosa describe autoscaler command when using the ROSA command-line interface (CLI) (rosa).

Procedure

To view cluster autoscaler configurations, run the following command:

Example

rosa describe autoscaler -h --cluster=<mycluster>

$ rosa describe autoscaler -h --cluster=<mycluster>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Chapter 4. Managing compute nodes using machine pools

4.1. About machine pools

Red Hat OpenShift Service on AWS uses machine pools as an elastic, dynamic provisioning method on top of your cloud infrastructure.

The primary resources are machines, compute machine sets, and machine pools.

4.1.1. Machines

A machine is a fundamental unit that describes the host for a worker node.

4.1.2. Machine sets

MachineSet resources are groups of compute machines. If you need more machines or must scale them down, change the number of replicas in the machine pool to which the compute machine sets belong.

Machine sets are not directly modifiable in Red Hat OpenShift Service on AWS.

4.1.3. Machine pools

Machine pools are a higher level construct to compute machine sets.

A machine pool creates compute machine sets that are all clones of the same configuration across availability zones. Machine pools perform all of the host node provisioning management actions on a worker node. If you need more machines or must scale them down, change the number of replicas in the machine pool to meet your compute needs. You can manually configure scaling or set autoscaling.

In Red Hat OpenShift Service on AWS clusters, the hosted control plane spans multiple availability zones (AZ) in the installed cloud region. Each machine pool in a Red Hat OpenShift Service on AWS cluster deploys in a single subnet within a single AZ.

Worker nodes are not guaranteed longevity, and may be replaced at any time as part of the normal operation and management of OpenShift. For more details about the node lifecycle, refer to additional resources.

Multiple machine pools can exist on a single cluster, and each machine pool can contain a unique node type and node size (AWS EC2 instance type and size) configuration.

4.1.3.1. Machine pools during cluster installation

By default, a cluster has one machine pool. During cluster installation, you can define instance type or size and add labels to this machine pool as well as define the size of the root disk.

4.1.3.2. Configuring machine pools after cluster installation

After a cluster’s installation:

- You can remove or add labels to any machine pool.

- You can add additional machine pools to an existing cluster.

- You can add taints to any machine pool if there is one machine pool without any taints.

You can create or delete a machine pool if there is one machine pool without any taints and at least two replicas.

NoteYou cannot change the machine pool node type or size. The machine pool node type or size is specified during their creation only. If you need a different node type or size, you must re-create a machine pool and specify the required node type or size values.

- You can add a label to each added machine pool.

Worker nodes are not guaranteed longevity, and may be replaced at any time as part of the normal operation and management of OpenShift. For more details about the node lifecycle, refer to additional resources.

Procedure

Optional: Add a label to the default machine pool after configuration by using the default machine pool labels and running the following command:

rosa edit machinepool -c <cluster_name> <machinepool_name> -i

$ rosa edit machinepool -c <cluster_name> <machinepool_name> -iCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example input

rosa edit machinepool -c mycluster worker -i ? Enable autoscaling: No ? Replicas: 3 ? Labels: mylabel=true I: Updated machine pool 'worker' on cluster 'mycluster'

$ rosa edit machinepool -c mycluster worker -i ? Enable autoscaling: No ? Replicas: 3 ? Labels: mylabel=true I: Updated machine pool 'worker' on cluster 'mycluster'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

4.1.3.3. Machine pool upgrade requirements

Each machine pool in a Red Hat OpenShift Service on AWS cluster upgrades independently. Because the machine pools upgrade independently, they must remain within 2 minor (Y-stream) versions of the hosted control plane. For example, if your hosted control plane is 4.16.z, your machine pools must be at least 4.14.z.

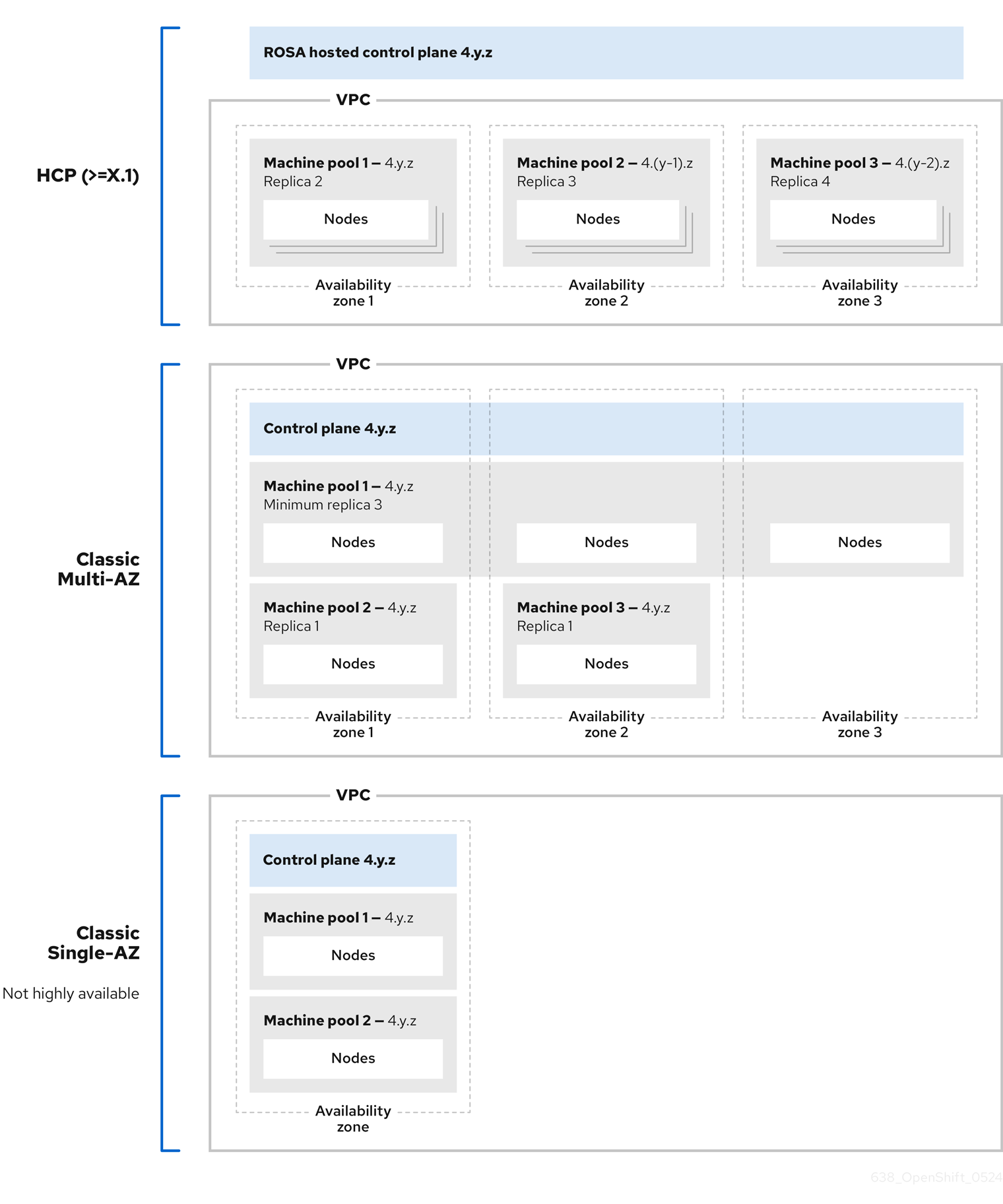

The following image depicts how machine pools work within Red Hat OpenShift Service on AWS clusters:

Machine pools in Red Hat OpenShift Service on AWS clusters each upgrade independently and the machine pool versions must remain within two minor (Y-stream) versions of the control plane.

4.1.4. Additional resources

4.2. Managing compute nodes

With Red Hat OpenShift Service on AWS, you can manage compute (also known as worker) nodes to create and configure optimal compute capacity for your workloads.

The majority of changes for compute nodes are configured on machine pools. A machine pool is a group of compute nodes in a cluster that have the same configuration, providing ease of management.

You can edit machine pool configuration options such as scaling, adding node labels, and adding taints.

You can also create new machine pools with Capacity Reservations.

Overview of AWS Capacity Reservations

If you have reserved compute capacity using AWS Capacity Reservations for a specific instance type and Availability Zone (AZ), you can use it for your Red Hat OpenShift Service on AWS worker nodes. Both On-Demand Capacity Reservations and Capacity Blocks for machine learning (ML) workloads are supported.

Purchase and manage a Capacity Reservation directly with AWS. After reserving the capacity, add a Capacity Reservation ID to a new machine pool when you create it in your Red Hat OpenShift Service on AWS cluster. You can also use a Capacity Reservation shared with you from another AWS account within your AWS Organization.

Once you configure Capacity Reservations in Red Hat OpenShift Service on AWS, you can use your AWS account to monitor reserved capacity usage across all workloads in the account.

Using Capacity Reservations on machine pools in Red Hat OpenShift Service on AWS clusters has the following prerequisites and limitations:

- You installed and configured the latest ROSA CLI.

- Your Red Hat OpenShift Service on AWS cluster is version 4.19 or later.

- The cluster already has a machine pool that is not using a Capacity Reservation or taints. The machine pool must have at least 2 worker nodes.

- You have purchased a Capacity Reservation for the instance type required in the AZ of the machine pool that you are creating.

- You can only add a Capacity Reservation ID to a new machine pool.

- You cannot use autoscaling with Capacity Reservations if you create a machine pool using the ROSA CLI. However, you can enable both autoscaling and Capacity Reservations on machine pools created using OpenShift Cluster Manager.

You can create a machine pool with a Capacity Reservation using either OpenShift Cluster Manager or the ROSA CLI.

4.2.1. Creating a machine pool

A machine pool is created when you install a Red Hat OpenShift Service on AWS cluster. After installation, you can create additional machine pools for your cluster by using OpenShift Cluster Manager or the ROSA command-line interface (CLI) (rosa).

For users of rosa version 1.2.25 and earlier versions, the machine pool created along with the cluster is identified as Default. For users of rosa version 1.2.26 and later, the machine pool created along with the cluster is identified as worker.

4.2.1.1. Creating a machine pool using OpenShift Cluster Manager

You can create additional machine pools for your Red Hat OpenShift Service on AWS cluster by using OpenShift Cluster Manager.

Prerequisites

- You created a Red Hat OpenShift Service on AWS cluster.

Procedure

- Navigate to OpenShift Cluster Manager and select your cluster.

- Under the Machine pools tab, click Add machine pool.

- Add a Machine pool name.

Select a Compute node instance type from the list. The instance type defines the vCPU and memory allocation for each compute node in the machine pool.

NoteYou cannot change the instance type for a machine pool after the pool is created.

Optional: If you are using OpenShift Virtualization on a Red Hat OpenShift Service on AWS cluster, you might want to run Windows VMs. In order to be license-compliant with Microsoft Windows in AWS, the hosts (x86-64 bare metal EC2 instances) running these VMs must be enabled with AWS EC2 Windows License Included. To enable the machine pool for AWS Windows License Included, select the Enable machine pool for AWS Windows License Included checkbox.

You can only select this option when the host cluster is a Red Hat OpenShift Service on AWS cluster version 4.19 and later and the instance type is x86-64 bare metal EC2.

ImportantEnabling AWS Windows LI on a machine pool applies the associated licensing fees on that specific machine pool. This includes billing for the full vCPU allocation of each AWS Windows LI enabled host in your Red Hat OpenShift Service on AWS cluster. Windows LI enabled machine pools will also deny vCPU over-allocation on OpenShift Virtualization VMs. For more information, see Microsoft Licensing on AWS and the Red Hat OpenShift Service on AWS instance types.

Optional: Configure autoscaling for the machine pool:

- Select Enable autoscaling to automatically scale the number of machines in your machine pool to meet the deployment needs.

Set the minimum and maximum node count limits for autoscaling. The cluster autoscaler does not reduce or increase the machine pool node count beyond the limits that you specify.

NoteAlternatively, you can set your autoscaling preferences for the machine pool after the machine pool is created.

- If you did not enable autoscaling, select a Compute node count from the drop-down menu. This defines the number of compute nodes to provision to the machine pool for the availability zone.

- Optional: Configure Root disk size.

Optional: Add reserved capacity to your machine pool.

Select a Reservation Preference from the list. Valid preferences include:

- None: The instance does not use a Capacity Reservation even if one is available. The instance runs as an EC2 On-Demand instance. Choose this option when you want to avoid consuming purchased reserved capacity and use it for other workloads.

-

Open: The instance can run in any

openCapacity Reservation that has matching attributes such as the instance type, platform, AZ, or tenancy. Choose this option for flexibility; if a reservation is not available, the instance can use regular unreserved EC2 capacity. - CR only (capacity reservation only): The instance can only run in a Capacity Reservation. If capacity is not available, the instance fails to launch.

-

Add a Reservation ID. You get an ID in the

cr-<capacity_reservation_id>format when you purchase a Capacity Reservation from AWS. The ID can be for both On-Demand Capacity Reservations or Capacity Blocks for ML.

Optional: Add node labels and taints for your machine pool:

- Expand the Edit node labels and taints menu.

- Under Node labels, add Key and Value entries for your node labels.

Under Taints, add Key and Value entries for your taints.

NoteCreating a machine pool with taints is only possible if the cluster already has at least one machine pool without a taint.

For each taint, select an Effect from the drop-down menu. Available options include

NoSchedule,PreferNoSchedule, andNoExecute.NoteAlternatively, you can add the node labels and taints after you create the machine pool.

Optional: Select additional custom security groups to use for nodes in this machine pool. You must have already created the security groups and associated them with the VPC that you selected for this cluster. You cannot add or edit security groups after you create the machine pool.

ImportantYou can use up to ten additional security groups for machine pools on Red Hat OpenShift Service on AWS clusters.

- Click Add machine pool to create the machine pool.

Verification

- Verify that the machine pool is visible on the Machine pools page and the configuration is as expected.

4.2.1.2. Creating a machine pool using the ROSA CLI

You can create additional machine pools for your Red Hat OpenShift Service on AWS cluster by using the ROSA command-line interface (CLI) (rosa).

To add a pre-purchased Capacity Reservation to a machine pool, see Creating a machine pool with Capacity Reservations.

Prerequisites

- You installed and configured the latest ROSA CLI on your workstation.

- You logged in to your Red Hat account using the ROSA CLI.

- You created a Red Hat OpenShift Service on AWS cluster.

Procedure

To add a machine pool that does not use autoscaling, create the machine pool and define the instance type, compute (also known as worker) node count, and node labels:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow where:

--name=<machine_pool_id>- Specifies the name of the machine pool.

--replicas=<replica_count>-

Specifies the number of compute nodes to provision. If you deployed Red Hat OpenShift Service on AWS using a single availability zone, this defines the number of compute nodes to provision to the machine pool for the zone. If you deployed your cluster using multiple availability zones, this defines the number of compute nodes to provision in total across all zones and the count must be a multiple of 3. The

--replicasargument is required when autoscaling is not configured. --instance-type=<instance_type>-

Optional: Sets the instance type for the compute nodes in your machine pool. The instance type defines the vCPU and memory allocation for each compute node in the pool. Replace

<instance_type>with an instance type. The default ism5.xlarge. You cannot change the instance type for a machine pool after the pool is created. --labels=<key>=<value>,<key>=<value>-

Optional: Defines the labels for the machine pool. Replace

<key>=<value>,<key>=<value>with a comma-delimited list of key-value pairs, for example--labels=key1=value1,key2=value2. --taints=<key>=<value>:<effect>,<key>=<value>:<effect>-

Optional: Defines the taints for the machine pool. Replace

<key>=<value>:<effect>,<key>=<value>:<effect>with a key, value, and effect for each taint, for example--taints=key1=value1:NoSchedule,key2=value2:NoExecute. Available effects includeNoSchedule,PreferNoSchedule, andNoExecute. --disk-size=<disk_size>-

Optional: Specifies the worker node disk size. The value can be in GB, GiB, TB, or TiB. Replace

<disk_size>with a numeric value and unit, for example--disk-size=200GiB. --availability-zone=<availability_zone_name>-

Optional: You can create a machine pool in an availability zone of your choice. Replace

<availability_zone_name>with an availability zone name. --additional-security-group-ids <sec_group_id>Optional: For machine pools in clusters that do not have Red Hat managed VPCs, you can select additional custom security groups to use in your machine pools. You must have already created the security groups and associated them with the VPC that you selected for this cluster. You cannot add or edit security groups after you create the machine pool.

ImportantYou can use up to ten additional security groups for machine pools on Red Hat OpenShift Service on AWS clusters.

--subnet <subnet_id>Optional: For BYO VPC clusters, you can select a subnet to create a Single-AZ machine pool. If the subnet is out of your cluster creation subnets, there must be a tag with a key

kubernetes.io/cluster/<infra-id>and valueshared. Customers can obtain the Infra ID by using the following command:rosa describe cluster -c <cluster name>|grep "Infra ID:"

$ rosa describe cluster -c <cluster name>|grep "Infra ID:"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Infra ID: mycluster-xqvj7

Infra ID: mycluster-xqvj7Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteYou cannot set both

--subnetand--availability-zoneat the same time, only 1 is allowed for a Single-AZ machine pool creation.

The following example creates a machine pool called

mymachinepoolthat uses them5.xlargeinstance type and has 2 compute node replicas. The example also adds 2 workload-specific labels:rosa create machinepool --cluster=mycluster --name=mymachinepool --replicas=2 --instance-type=m5.xlarge --labels=app=db,tier=backend

$ rosa create machinepool --cluster=mycluster --name=mymachinepool --replicas=2 --instance-type=m5.xlarge --labels=app=db,tier=backendCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

I: Machine pool 'mymachinepool' created successfully on cluster 'mycluster' I: To view all machine pools, run 'rosa list machinepools -c mycluster'

I: Machine pool 'mymachinepool' created successfully on cluster 'mycluster' I: To view all machine pools, run 'rosa list machinepools -c mycluster'Copy to Clipboard Copied! Toggle word wrap Toggle overflow To add a machine pool that uses autoscaling, create the machine pool and define the autoscaling configuration, instance type and node labels:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow where:

--name=<machine_pool_id>-

Specifies the name of the machine pool. Replace

<machine_pool_id>with the name of your machine pool. --enable-autoscaling- Enables autoscaling in the machine pool to meet the deployment needs.

--min-replicas=<minimum_replica_count>and--max-replicas=<maximum_replica_count>Defines the minimum and maximum compute node limits. The cluster autoscaler does not reduce or increase the machine pool node count beyond the limits that you specify.

The

--min-replicasand--max-replicasarguments define the autoscaling limits in the machine pool for the availability zone.--instance-type=<instance_type>-

Optional: Sets the instance type for the compute nodes in your machine pool. The instance type defines the vCPU and memory allocation for each compute node in the pool. Replace

<instance_type>with an instance type. The default ism5.xlarge. You cannot change the instance type for a machine pool after the pool is created. --labels=<key>=<value>,<key>=<value>-

Optional: Defines the labels for the machine pool. Replace

<key>=<value>,<key>=<value>with a comma-delimited list of key-value pairs, for example--labels=key1=value1,key2=value2. --taints=<key>=<value>:<effect>,<key>=<value>:<effect>-

Optional: Defines the taints for the machine pool. Replace

<key>=<value>:<effect>,<key>=<value>:<effect>with a key, value, and effect for each taint, for example--taints=key1=value1:NoSchedule,key2=value2:NoExecute. Available effects includeNoSchedule,PreferNoSchedule, andNoExecute. --availability-zone=<availability_zone_name>-

Optional: You can create a machine pool in an availability zone of your choice. Replace

<availability_zone_name>with an availability zone name.

The following example creates a machine pool called

mymachinepoolthat uses them5.xlargeinstance type and has autoscaling enabled. The minimum compute node limit is 3 and the maximum is 6 overall. The example also adds 2 workload-specific labels:rosa create machinepool --cluster=mycluster --name=mymachinepool --enable-autoscaling --min-replicas=3 --max-replicas=6 --instance-type=m5.xlarge --labels=app=db,tier=backend

$ rosa create machinepool --cluster=mycluster --name=mymachinepool --enable-autoscaling --min-replicas=3 --max-replicas=6 --instance-type=m5.xlarge --labels=app=db,tier=backendCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

I: Machine pool 'mymachinepool' created successfully on hosted cluster 'mycluster' I: To view all machine pools, run 'rosa list machinepools -c mycluster'

I: Machine pool 'mymachinepool' created successfully on hosted cluster 'mycluster' I: To view all machine pools, run 'rosa list machinepools -c mycluster'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

You can list all machine pools on your cluster or describe individual machine pools.

List the available machine pools on your cluster:

rosa list machinepools --cluster=<cluster_name>

$ rosa list machinepools --cluster=<cluster_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

ID AUTOSCALING REPLICAS INSTANCE TYPE LABELS TAINTS AVAILABILITY ZONE SUBNET VERSION AUTOREPAIR Default No 1/1 m5. xlarge us-east-2c subnet-00552ad67728a6ba3 4.14.34 Yes mymachinepool Yes 3/3-6 m5.xlarge app=db, tier=backend us-east-2a subnet-0cb56f5f41880c413 4.14.34 Yes

ID AUTOSCALING REPLICAS INSTANCE TYPE LABELS TAINTS AVAILABILITY ZONE SUBNET VERSION AUTOREPAIR Default No 1/1 m5. xlarge us-east-2c subnet-00552ad67728a6ba3 4.14.34 Yes mymachinepool Yes 3/3-6 m5.xlarge app=db, tier=backend us-east-2a subnet-0cb56f5f41880c413 4.14.34 YesCopy to Clipboard Copied! Toggle word wrap Toggle overflow Describe the information of a specific machine pool in your cluster:

rosa describe machinepool --cluster=<cluster_name> --machinepool=mymachinepool

$ rosa describe machinepool --cluster=<cluster_name> --machinepool=mymachinepoolCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Verify that the machine pool is included in the output and the configuration is as expected.

4.2.1.3. Creating a machine pool with AWS Windows License Included enabled using the ROSA CLI

If you are using OpenShift Virtualization on a Red Hat OpenShift Service on AWS cluster running Windows VMs you need to be license-compliant with Microsoft Windows in AWS. The hosts (AWS x86-64 bare metal EC2 instances) running these VMs must be enabled with AWS EC2 Windows License Included (LI).

Enabling AWS Windows LI on a machine pool applies the associated licensing fees on that specific machine pool. This includes billing for the full vCPU allocation of each AWS Windows LI enabled host in your Red Hat OpenShift Service on AWS cluster. Windows LI enabled machine pools will also deny vCPU over-allocation on OpenShift Virtualization VMs. For more information, see Microsoft Licensing on AWS and the Red Hat OpenShift Service on AWS instance types.

Prerequisites

- You installed and configured the ROSA CLI version 1.2.58 or above.

- You logged in to your Red Hat account using the ROSA CLI.

- You created a Red Hat OpenShift Service on AWS cluster version 4.19 or above.

- You identified an x86-64 bare metal EC2 instance type to use OpenShift Virtualization. For more, see Amazon EC2 Instance Types.

- You are in compliance with Microsoft and AWS requirements for the Microsoft licenses and associated costs.

Procedure

To add a Windows LI enabled machine pool to a Red Hat OpenShift Service on AWS cluster, create the machine pool with the following definitions:

rosa create machinepool --cluster=<cluster-name> \ --name=<machine_pool_id> \ --replicas=<replica_count> \ --instance-type=<instance_type> \ --type=<image_type>$ rosa create machinepool --cluster=<cluster-name> \ --name=<machine_pool_id> \ --replicas=<replica_count> \ --instance-type=<instance_type> \ --type=<image_type>Copy to Clipboard Copied! Toggle word wrap Toggle overflow where:

--name=<machine_pool_id>-

Specifies the name of the machine pool. Replace

<machine_pool_id>with the name of your machine pool. --replicas=<replica_count>-

Specifies the number of compute nodes to provision. If you deployed Red Hat OpenShift Service on AWS using a single availability zone, this defines the number of compute nodes to provision to the machine pool for the zone. If you deployed your cluster using multiple availability zones, this defines the number of compute nodes to provision in total across all zones and the count must be a multiple of 3. The

--replicasargument is required when autoscaling is not configured. --instance-type=<instance_type>-

Specifies the instance type. You can only select an x86-64 bare metal instance type to enable Windows LI. For example, you can use

m5zn.metalori3.metal. You cannot change the instance type for a machine pool after the pool is created. --type=<type>You must specify

Windowsto ensure the machine pool is created with Windows LI enabled.The following command creates a Windows LI enabled machine pool called

mymachinepoolusing them5zn.metalinstance type with 1 compute node replica:rosa create machinepool --cluster=mycluster --name=mymachinepool --type=Windows --instance-type=m5zn.metal --replicas=1

$ rosa create machinepool --cluster=mycluster --name=mymachinepool --type=Windows --instance-type=m5zn.metal --replicas=1Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

I: Machine pool 'mymachinepool' created successfully on cluster 'mycluster' I: To view all machine pools, run 'rosa list machinepools -c mycluster'

I: Machine pool 'mymachinepool' created successfully on cluster 'mycluster' I: To view all machine pools, run 'rosa list machinepools -c mycluster'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Verification

List the available machine pools on your cluster by running the following command:

rosa list machinepools --cluster=<cluster_name>

$ rosa list machinepools --cluster=<cluster_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Describe the information of a specific machine pool in your cluster:

rosa describe machinepool --cluster=<cluster_name> --machinepool=mymachinepool

$ rosa describe machinepool --cluster=<cluster_name> --machinepool=mymachinepoolCopy to Clipboard Copied! Toggle word wrap Toggle overflow The output has the image type set to

Windowsas shown in the following example:Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - For more information about running virtualized Windows workloads after you have set up a Windows LI enabled machine pool, see Creating a Windows VM compliant to AWS EC2 Windows License Included.

4.2.1.4. Creating a machine pool with Capacity Reservations using the ROSA CLI

You can create a new machine pool with Capacity Reservations by using the ROSA command-line interface (CLI) (rosa). Both On-Demand Capacity Reservations and Capacity Blocks for ML are supported.

Currently, autoscaling is not supported on machine pools with Capacity Reservations.

Prerequisites

- You installed and configured the latest ROSA CLI.

- You logged in to your Red Hat account using the ROSA CLI.

- You created a Red Hat OpenShift Service on AWS cluster version 4.19 or above.

- The cluster already has a machine pool that is not using a Capacity Reservation or taints. The machine pool must have at least 2 worker nodes.

- You have a Capacity Reservation ID and capacity is reserved for the instance type required in the Availability Zone (AZ) of the machine pool that you are creating.

Procedure

Create the machine pool and define the Capacity Reservation preference and ID by running the following command:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

where:

- <machine_pool_id>

- Specifies the name of the machine pool.

- <replica_count>

-

Specifies the number of provisioned compute nodes. If you deploy Red Hat OpenShift Service on AWS using a single AZ, this defines the number of compute nodes provisioned to the machine pool for the AZ. If you deploy your cluster using multiple AZs, this defines the total number of compute nodes provisioned across all AZs. For multi-zone clusters, the compute node count must be a multiple of 3. The

--replicasargument is required when autoscaling is not configured. - <capacity_reservation_preference>

Specifies the Capacity Reservation behaviour. Valid preferences include:

-

none: The instance does not use a Capacity Reservation even if one is available. The instance runs as an EC2 On-Demand instance. Choose this option when you want to avoid consuming purchased reserved capacity and use it for other workloads. -

open: The instance can run in anyopenCapacity Reservation that has matching attributes such as the instance type, platform, AZ, or tenancy. Choose this option for flexibility; if a reservation is not available, the instance can use regular unreserved EC2 capacity. -

capacity-reservations-only: The instance can only run in a Capacity Reservation. If capacity is not available, the instance fails to launch.

-

- cr-<capacity_reservation_id>

-

Specifies the reservation ID. You get an ID in the

cr-<capacity_reservation_id>format when you purchase a Capacity Reservation from AWS. The ID can be for both On-Demand Capacity Reservations or Capacity Blocks for ML, you do not need to specify the reservation type. - <instance_type>

-

Optional: Specifies the instance type for the compute nodes in your machine pool. The instance type defines the vCPU and memory allocation for each compute node in the pool. Replace

<instance_type>with an instance type. The default ism5.xlarge. You cannot change the instance type for a machine pool after the pool is created. - <subnet_id>

Optional: Specifies the subnet ID. For Bring Your Own Virtual Private Cloud (BYO VPC) clusters, you can select a subnet to create a single-AZ machine pool. If you select a subnet that was not specified during the initial cluster creation, you must tag the subnet with the

kubernetes.io/cluster/<infra_id>key andsharedvalue. Customers can obtain the Infra ID by running the following command:rosa describe cluster --cluster <cluster_name>|grep "Infra ID:"

$ rosa describe cluster --cluster <cluster_name>|grep "Infra ID:"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Infra ID: mycluster-xqvj7

Infra ID: mycluster-xqvj7Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Example

The following example creates a machine pool called mymachinepool that uses the c5.xlarge instance type and has 1 compute node replica. The example also adds a Capacity Reservation ID. Example input and output:

rosa create machinepool --cluster=mycluster --name=mymachinepool --replicas 1 --capacity-reservation-id <capacity_reservation_id> --subnet <subnet_id> --instance-type c5.xlarge

$ rosa create machinepool --cluster=mycluster --name=mymachinepool --replicas 1 --capacity-reservation-id <capacity_reservation_id> --subnet <subnet_id> --instance-type c5.xlargeI: Checking available instance types for machine pool 'mymachinepool' I: Machine pool 'mymachinepool' created successfully on hosted cluster 'mycluster'

I: Checking available instance types for machine pool 'mymachinepool'

I: Machine pool 'mymachinepool' created successfully on hosted cluster 'mycluster'Verification

You can list all machine pools on your cluster or describe individual machine pools.

List the available machine pools on your cluster by running the following command:

rosa list machinepools --cluster <cluster_name>

$ rosa list machinepools --cluster <cluster_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Describe the information of a specific machine pool in your cluster by running the following command.

rosa describe machinepool --cluster <cluster_name> --machinepool <machine_pool_name>