
Chapter 2. Example deployment: High availability cluster with Compute and Ceph

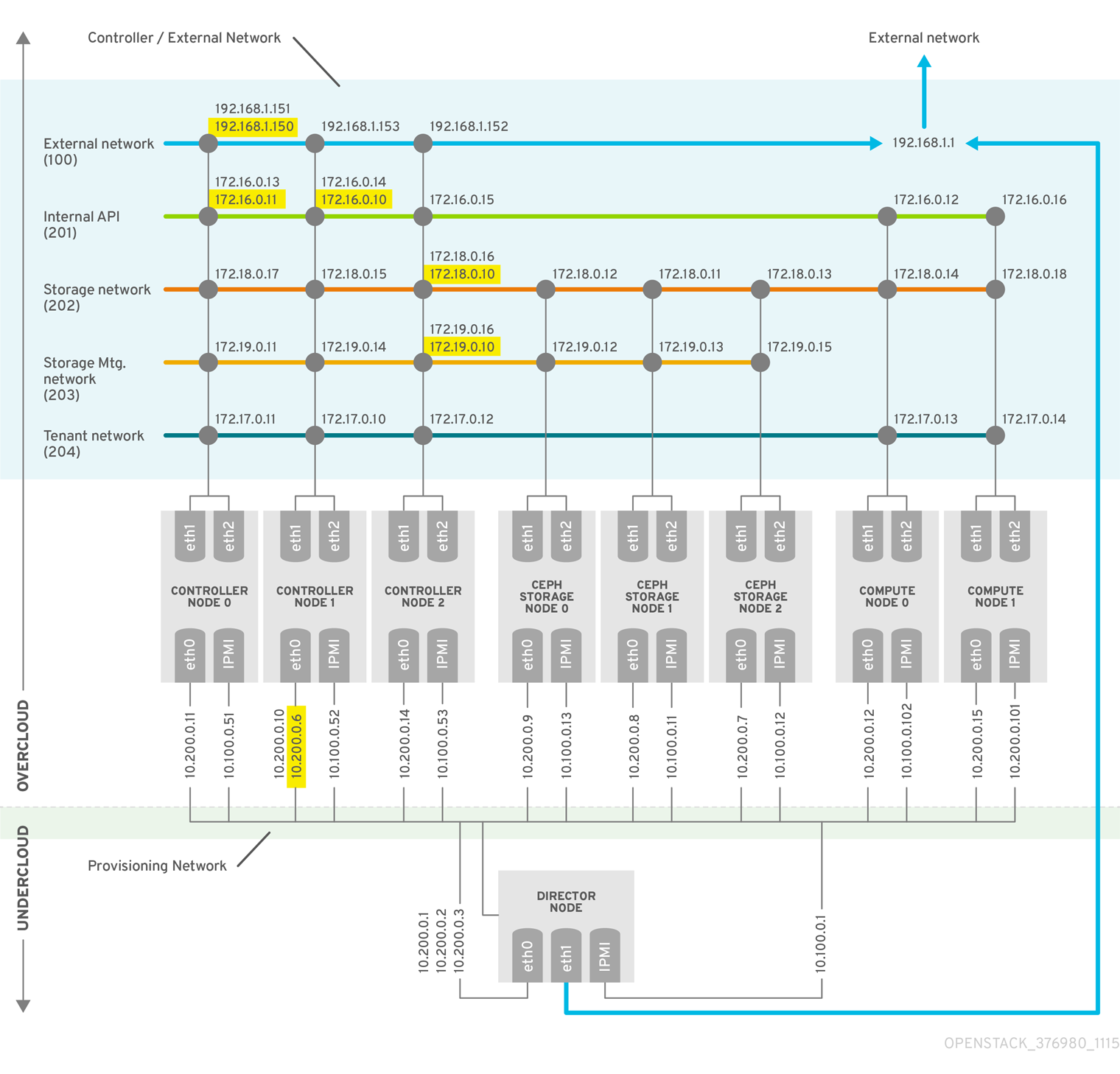

The following example scenario shows the architecture, hardware and network specifications, as well as the undercloud and overcloud configuration files for a high availability deployment with the OpenStack Compute service and Red Hat Ceph Storage.

This deployment is intended for use as a reference for test environments and is not supported for production environments.

Figure 2.1. Example high availability deployment architecture

For more information about deploying a Red Hat Ceph Storage cluster, see Deploying an Overcloud with Containerized Red Hat Ceph.

For more information about deploying Red Hat OpenStack Platform with director, see Director Installation and Usage.

2.1. Hardware specifications

The following table shows the hardware used in the example deployment. You can adjust the CPU, memory, storage, or NICs as needed in your own test deployment.

| Number of Computers | Purpose | CPUs | Memory | Disk Space | Power Management | NICs |
|---|---|---|---|---|---|---|
| 1 | Undercloud node | 4 | 6144 MB | 40 GB | IPMI | 2 (1 external; 1 on provisioning) + 1 IPMI |
| 3 | Controller nodes | 4 | 6144 MB | 40 GB | IPMI | 3 (2 bonded on overcloud; 1 on provisioning) + 1 IPMI |
| 3 | Ceph Storage nodes | 4 | 6144 MB | 40 GB | IPMI | 3 (2 bonded on overcloud; 1 on provisioning) + 1 IPMI |
| 2 | Compute nodes (add more as needed) | 4 | 6144 MB | 40 GB | IPMI | 3 (2 bonded on overcloud; 1 on provisioning) + 1 IPMI |

Review the following guidelines when you plan hardware assignments:

- Controller nodes

  - Most non-storage services run on Controller nodes. All services are replicated across the three nodes and are configured as active-active or active-passive services. An HA environment requires a minimum of three nodes.

- Red Hat Ceph Storage nodes

  - Storage services run on these nodes and provide pools of Red Hat Ceph Storage areas to the Compute nodes. A minimum of three nodes is required.

- Compute nodes

  - Virtual machine (VM) instances run on Compute nodes. You can deploy as many Compute nodes as you need to meet your capacity requirements and to accommodate migration and reboot operations. You must connect Compute nodes to the storage network and to the project network so that VMs can access the storage nodes, the VMs on other Compute nodes, and the public networks.

- STONITH

  - You must configure a STONITH device for each node that is part of the Pacemaker cluster in a highly available overcloud. Deploying a highly available overcloud without STONITH is not supported. For more information about STONITH and Pacemaker, see Fencing in a Red Hat High Availability Cluster and Support Policies for RHEL High Availability Clusters.
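The minimum node counts in these guidelines can be checked before you commit hardware to a test deployment. The following is a minimal sketch, not part of director: the role labels (`controller`, `ceph-storage`, `compute`) and the `check_node_counts` helper are hypothetical names chosen for illustration, and only the two minimums stated above are encoded.

```python
# Minimum node counts taken from the guidelines above.
# The role labels are illustrative, not director role names.
MIN_NODES = {"controller": 3, "ceph-storage": 3}


def check_node_counts(planned):
    """Return a list of problems; an empty list means the plan meets the minimums."""
    problems = []
    for role, minimum in MIN_NODES.items():
        count = planned.get(role, 0)
        if count < minimum:
            problems.append(f"{role}: {count} planned, at least {minimum} required")
    return problems


# Node counts from the example hardware table.
example_plan = {"controller": 3, "ceph-storage": 3, "compute": 2}
print(check_node_counts(example_plan))
```

A plan with only two Controller nodes would produce one entry in the returned list, which makes the check easy to use as a pre-deployment gate in a script.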

2.2. Network specifications

The following table shows the network configuration used in the example deployment.

| Physical NICs | Purpose | VLANs | Description |
|---|---|---|---|
| eth0 | Provisioning network (undercloud) | N/A | Manages all nodes from director (undercloud) |
| eth1 and eth2 | Controller/External (overcloud) | N/A | Bonded NICs with VLANs |
| eth1 and eth2 | External network | VLAN 100 | Allows access from outside the environment to the project networks, internal API, and OpenStack Horizon Dashboard |
| eth1 and eth2 | Internal API | VLAN 201 | Provides access to the internal API between Compute nodes and Controller nodes |
| eth1 and eth2 | Storage access | VLAN 202 | Connects Compute nodes to storage media |
| eth1 and eth2 | Storage management | VLAN 203 | Manages storage media |
| eth1 and eth2 | Project network | VLAN 204 | Provides project network services to RHOSP |
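When you adapt the VLAN plan to your own environment, it can help to check that each network has a unique VLAN ID within the valid 802.1Q range (1 to 4094). The following sketch encodes the VLAN IDs from the table; the helper names `duplicate_vlan_ids` and `invalid_vlan_ids` are hypothetical and exist only for this illustration.

```python
# VLAN assignments from the example network table.
VLANS = {
    "External network": 100,
    "Internal API": 201,
    "Storage access": 202,
    "Storage management": 203,
    "Project network": 204,
}


def duplicate_vlan_ids(vlans):
    """Return (vlan_id, first_network, second_network) for every reused VLAN ID."""
    seen = {}
    duplicates = []
    for name, vlan_id in vlans.items():
        if vlan_id in seen:
            duplicates.append((vlan_id, seen[vlan_id], name))
        else:
            seen[vlan_id] = name
    return duplicates


def invalid_vlan_ids(vlans):
    """Return the networks whose VLAN ID is outside the usable 802.1Q range."""
    return [name for name, vlan_id in vlans.items() if not 1 <= vlan_id <= 4094]


print(duplicate_vlan_ids(VLANS))
print(invalid_vlan_ids(VLANS))
```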

In addition to the network configuration, you must deploy the following components:

- Provisioning network switch

  - This switch must be able to connect the undercloud to all the physical computers in the overcloud.

  - The NIC on each overcloud node that is connected to this switch must be able to PXE boot from the undercloud.

  - The portfast parameter must be enabled.

- Controller/External network switch

  - This switch must be configured to perform VLAN tagging for the other VLANs in the deployment.

  - Allow only VLAN 100 traffic to external networks.

2.3. Undercloud configuration files

The example deployment uses the following undercloud configuration files.

instackenv.json

undercloud.conf

network-environment.yaml
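The contents of these files are not reproduced here. As a rough illustration of what a node inventory for this hardware might look like, the following sketch generates an instackenv.json-style document for the eight overcloud nodes in the hardware table. Everything except the CPU, memory, and disk values is an assumption: the node names, the IPMI addresses (taken from the 192.0.2.0/24 documentation range), the MAC addresses, the credentials, and the `x86_64` architecture are placeholders, and the field names follow the commonly documented inventory format, which can differ between releases. Check the director documentation for your release before you use any of it.

```python
import json

# Overcloud roles and node counts from the hardware table.
# The role prefixes used for node names are illustrative.
ROLES = [("controller", 3), ("ceph", 3), ("compute", 2)]


def make_node(name, ipmi_addr, mac):
    """Build one inventory entry; all identifiers and credentials are placeholders."""
    return {
        "name": name,
        "pm_type": "ipmi",            # assumed driver name; depends on the release
        "pm_user": "admin",           # placeholder credential
        "pm_password": "CHANGE_ME",   # placeholder credential
        "pm_addr": ipmi_addr,         # placeholder IPMI address
        "mac": [mac],                 # placeholder MAC of the provisioning NIC
        "cpu": "4",                   # from the hardware table
        "memory": "6144",             # MB, from the hardware table
        "disk": "40",                 # GB, from the hardware table
        "arch": "x86_64",             # assumption, not stated in the table
    }


def build_inventory():
    """Return an inventory dictionary with one entry per overcloud node."""
    nodes = []
    index = 0
    for role, count in ROLES:
        for n in range(count):
            index += 1
            nodes.append(
                make_node(
                    f"{role}-{n}",
                    f"192.0.2.{10 + index}",
                    f"52:54:00:00:00:{index:02x}",
                )
            )
    return {"nodes": nodes}


inventory = build_inventory()
print(json.dumps(inventory, indent=2))
```

The undercloud node is deliberately absent from the generated inventory, because the inventory describes the nodes that director provisions.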

2.4. Overcloud configuration files

The example deployment uses the following overcloud configuration files.

/var/lib/config-data/haproxy/etc/haproxy/haproxy.cfg (Controller nodes)

This file identifies the services that HAProxy manages and contains the settings for the services that HAProxy monitors. The file is identical on all Controller nodes.

/etc/corosync/corosync.conf (Controller nodes)

This file defines the cluster infrastructure, and is available on all Controller nodes.

/etc/ceph/ceph.conf (Ceph nodes)

This file contains Ceph high availability settings, including the hostnames and IP addresses of the monitoring hosts.
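To see which monitor hosts a ceph.conf file names, you can read the file as INI-style text. The following sketch parses a made-up sample, not a file from this deployment: the monitor names, addresses, and fsid are placeholders, and it assumes that the `[global]` section uses the underscore spellings `mon_initial_members` and `mon_host` with plain comma-separated values. Real files might spell these options with spaces or use a bracketed address syntax, which this sketch does not handle.

```python
import configparser

# Hypothetical sample; names, addresses, and fsid are placeholders.
SAMPLE_CEPH_CONF = """\
[global]
fsid = 00000000-0000-0000-0000-000000000000
mon_initial_members = mon-0,mon-1,mon-2
mon_host = 192.0.2.21,192.0.2.22,192.0.2.23
"""


def monitor_hosts(conf_text):
    """Return (hostname, address) pairs from the [global] section of ceph.conf text."""
    # Disable interpolation so that a literal '%' in a value is not treated specially.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    section = parser["global"]
    names = [item.strip() for item in section["mon_initial_members"].split(",")]
    addresses = [item.strip() for item in section["mon_host"].split(",")]
    return list(zip(names, addresses))


for name, address in monitor_hosts(SAMPLE_CEPH_CONF):
    print(name, address)
```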