Chapter 14. Setting up a broker cluster

A cluster consists of multiple broker instances that have been grouped together. Broker clusters enhance performance by distributing the message processing load across multiple brokers. In addition, broker clusters can minimize downtime through high availability.

You can connect brokers together in many different cluster topologies. Within the cluster, each active broker manages its own messages and handles its own connections.

You can also balance client connections across the cluster and redistribute messages to avoid broker starvation.

14.1. Understanding broker clusters

Before creating a broker cluster, you should understand some important clustering concepts.

14.1.1. How broker clusters balance message load

When brokers are connected to form a cluster, AMQ Broker automatically balances the message load between the brokers. This ensures that the cluster can maintain high message throughput.

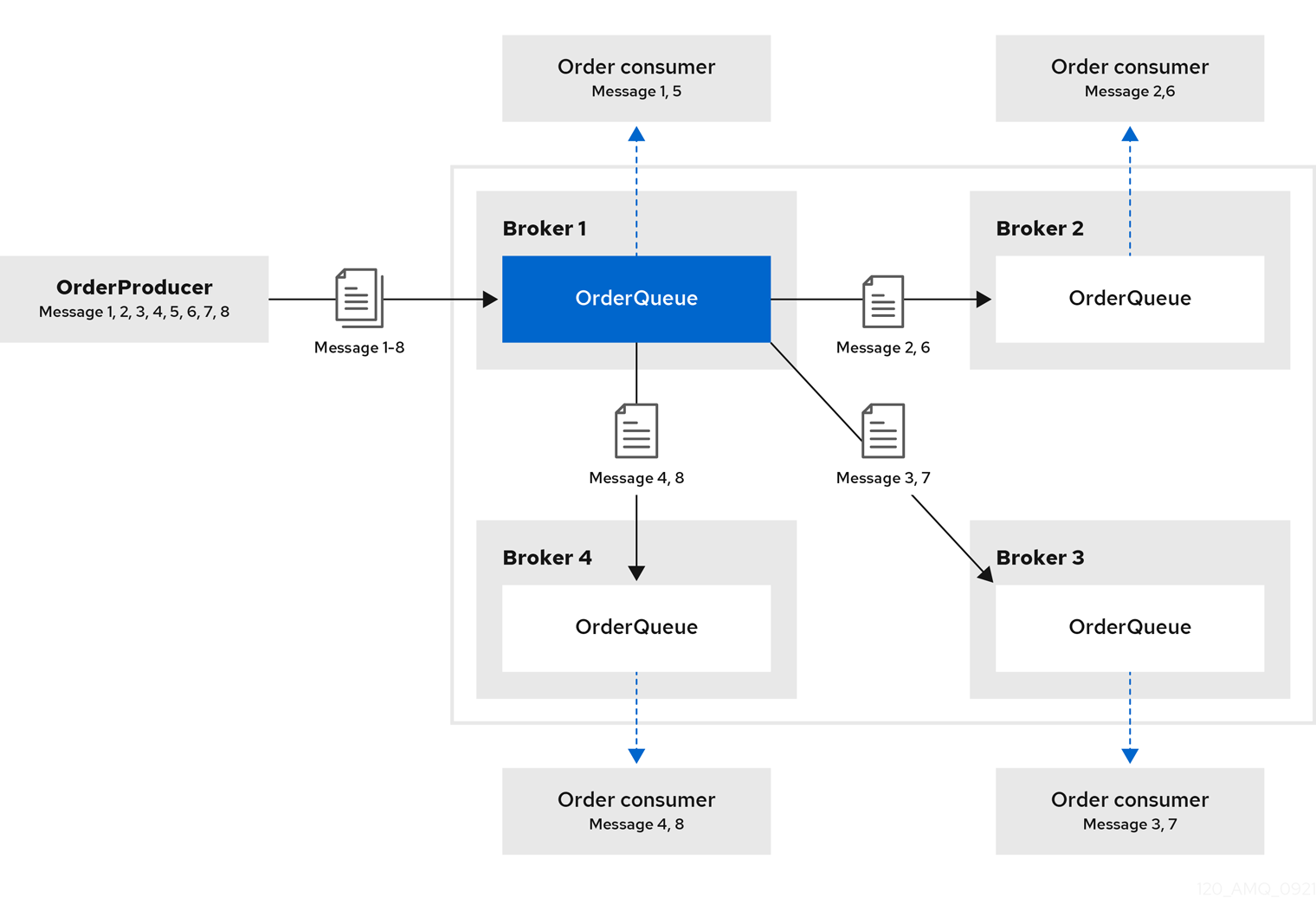

Consider a symmetric cluster of four brokers. Each broker is configured with a queue named OrderQueue. The OrderProducer client connects to Broker1 and sends messages to OrderQueue. Broker1 forwards the messages to the other brokers in round-robin fashion. The OrderConsumer clients connected to each broker consume the messages.

Figure 14.1. Message load balancing in a cluster

Without message load balancing, the messages sent to Broker1 would stay on Broker1 and only OrderConsumer1 would be able to consume them.

By default, AMQ Broker load balances messages automatically, distributing the first group of messages to the first broker and the second group of messages to the second broker. The order in which the brokers were started determines which broker is first, second, and so on.

You can configure:

- the cluster to load balance messages to brokers that have a matching queue.

- the cluster to load balance messages to brokers that have a matching queue with active consumers.

- the cluster to not load balance, but to perform redistribution of messages from queues that do not have any consumers to queues that do have consumers.

- an address to automatically redistribute messages from queues that do not have any consumers to queues that do have consumers.

Additional resources

- The message load balancing policy is configured with the message-load-balancing property in each broker’s cluster connection. For more information, see Appendix C, Cluster Connection Configuration Elements.

- For more information about message redistribution, see Section 14.4.2, “Configuring message redistribution”.
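
As a minimal sketch of where the message-load-balancing property sits, the following broker.xml fragment sets the policy on a cluster connection. The names my-cluster, netty-connector, and my-discovery-group are placeholders, and the value names in the comment come from the upstream ActiveMQ Artemis configuration; confirm them against Appendix C for your AMQ Broker version.

```xml
<cluster-connections>
    <cluster-connection name="my-cluster">
        <connector-ref>netty-connector</connector-ref>
        <!-- Assumed value names (upstream ActiveMQ Artemis):
             STRICT                  - forward to brokers that have a matching queue
             ON_DEMAND               - forward only to matching queues with active consumers
             OFF_WITH_REDISTRIBUTION - no load balancing, redistribution only
             OFF                     - no load balancing -->
        <message-load-balancing>ON_DEMAND</message-load-balancing>
        <discovery-group-ref discovery-group-name="my-discovery-group"/>
    </cluster-connection>
</cluster-connections>
```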

14.1.2. How broker clusters improve reliability

Broker clusters make high availability and failover possible, which makes them more reliable than standalone brokers. By configuring high availability, you can ensure that client applications can continue to send and receive messages even if a broker encounters a failure event.

With high availability, the brokers in the cluster are grouped into live-backup groups. A live-backup group consists of a live broker that serves client requests, and one or more backup brokers that wait passively to replace the live broker if it fails. If a failure occurs, a backup broker replaces the live broker in its live-backup group, and the clients reconnect and continue their work.

14.1.3. Cluster limitations

The following limitation applies when you use AMQ Broker in a clustered environment.

- Temporary queues: During a failover, if a client has consumers that use temporary queues, these queues are automatically recreated. The recreated queue name does not match the original queue name, which causes message redistribution to fail and can leave messages stranded in existing temporary queues. Red Hat recommends that you avoid using temporary queues in a cluster. For example, applications that use a request/reply pattern should use fixed queues for the JMSReplyTo address.

14.1.4. Understanding node IDs

The broker node ID is a Globally Unique Identifier (GUID) generated programmatically when the journal for a broker instance is first created and initialized. The node ID is stored in the server.lock file. The node ID is used to uniquely identify a broker instance, regardless of whether the broker is a standalone instance, or part of a cluster. Live-backup broker pairs share the same node ID, since they share the same journal.

In a broker cluster, broker instances (nodes) connect to each other and create bridges and internal "store-and-forward" queues. The names of these internal queues are based on the node IDs of the other broker instances. Broker instances also monitor cluster broadcasts for node IDs that match their own. A broker produces a warning message in the log if it identifies a duplicate ID.

When you are using the replication high availability (HA) policy, a master broker that starts and has check-for-live-server set to true searches for a broker that is using its node ID. If the master broker finds another broker using the same node ID, it either does not start, or initiates failback, based on the HA configuration.
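
For illustration, a replication HA policy with check-for-live-server enabled on a master broker might look like the following broker.xml fragment. This is a sketch based on the upstream ActiveMQ Artemis configuration schema and is not a complete HA configuration; the exact elements can differ between AMQ Broker versions and coordination methods.

```xml
<ha-policy>
    <replication>
        <master>
            <!-- On startup, search the cluster for another broker
                 that is already using this broker's node ID -->
            <check-for-live-server>true</check-for-live-server>
        </master>
    </replication>
</ha-policy>
```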

The node ID is durable, meaning that it survives restarts of the broker. However, if you delete a broker instance (including its journal), then the node ID is also permanently deleted.

Additional resources

- For more information about configuring the replication HA policy, see Configuring replication high availability.

14.1.5. Common broker cluster topologies

You can connect brokers to form either a symmetric or chain cluster topology. The topology you implement depends on your environment and messaging requirements.

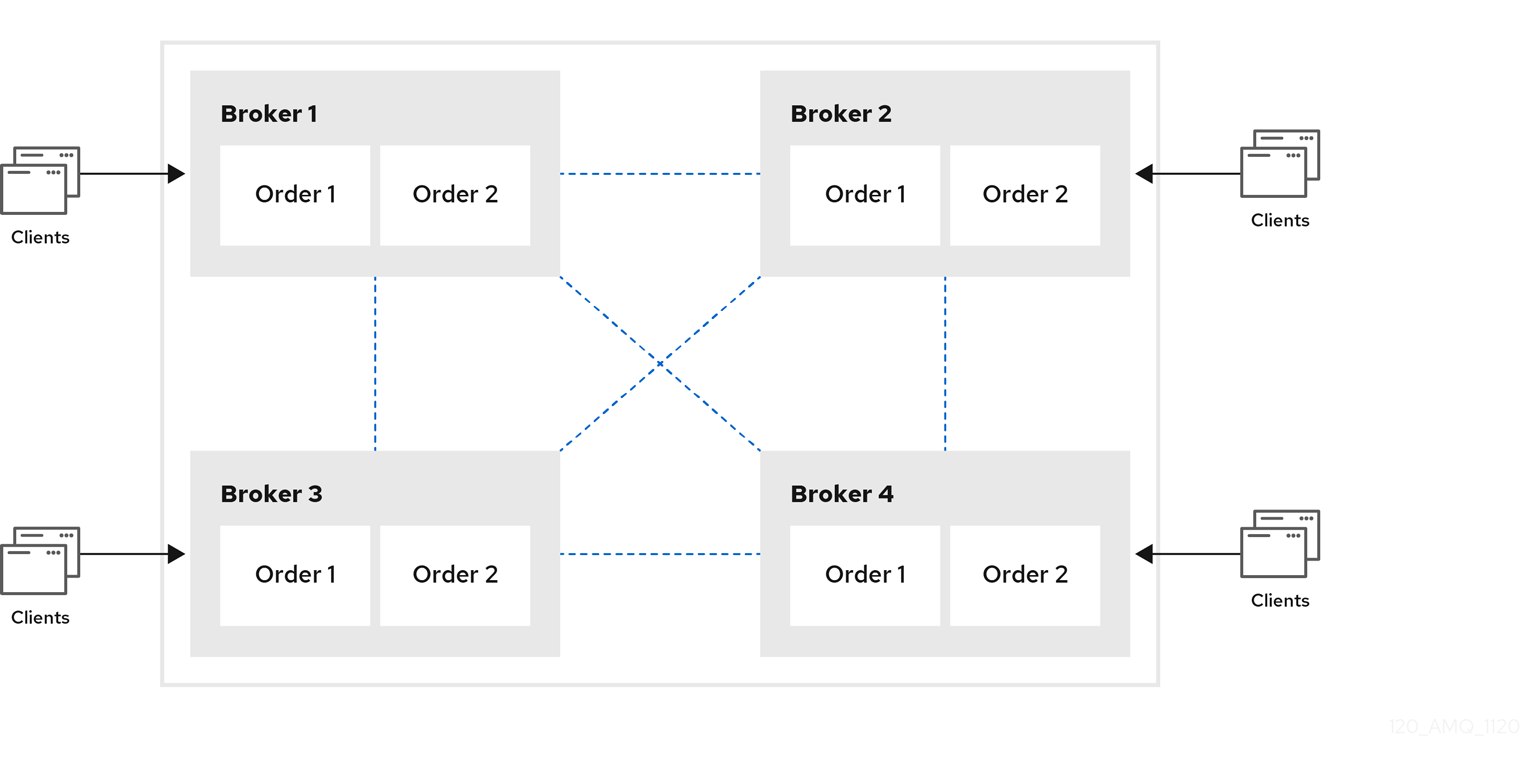

Symmetric clusters

In a symmetric cluster, every broker is connected to every other broker. This means that every broker is no more than one hop away from every other broker.

Figure 14.2. Symmetric cluster topology

Each broker in a symmetric cluster is aware of all of the queues that exist on every other broker in the cluster and the consumers that are listening on those queues. Therefore, symmetric clusters are able to load balance and redistribute messages more optimally than a chain cluster.

Symmetric clusters are easier to set up than chain clusters, but they can be difficult to use in environments in which network restrictions prevent brokers from being directly connected.

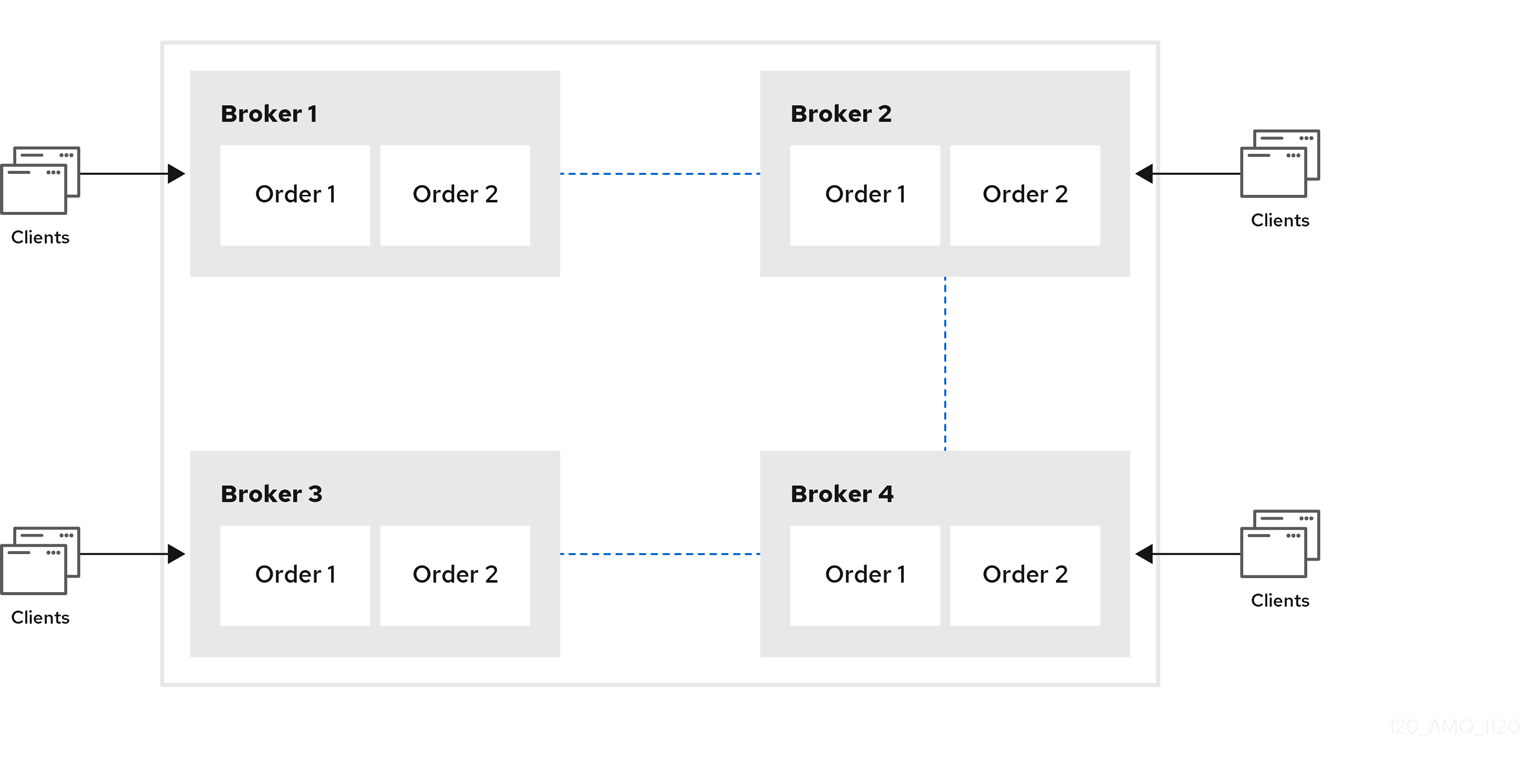

Chain clusters

In a chain cluster, the brokers are not all directly connected to each other. Instead, the brokers form a chain with a broker on each end, and every other broker connects only to the previous and next brokers in the chain.

Figure 14.3. Chain cluster topology

Chain clusters are more difficult to set up than symmetric clusters, but can be useful when brokers are on separate networks and cannot be directly connected. By using a chain cluster, an intermediary broker can indirectly connect two brokers to enable messages to flow between them even though the two brokers are not directly connected.

14.1.6. Broker discovery methods

Discovery is the mechanism by which brokers in a cluster propagate their connection details to each other. AMQ Broker supports both dynamic discovery and static discovery.

Dynamic discovery

Each broker in the cluster broadcasts its connection settings to the other members through either UDP multicast or JGroups. In this method, each broker uses:

- A broadcast group to push information about its cluster connection to other potential members of the cluster.

- A discovery group to receive and store cluster connection information about the other brokers in the cluster.

Static discovery

If you are not able to use UDP or JGroups in your network, or if you want to manually specify each member of the cluster, you can use static discovery. In this method, a broker "joins" the cluster by connecting to a second broker and sending its connection details. The second broker then propagates those details to the other brokers in the cluster.

14.1.7. Cluster sizing considerations

Before creating a broker cluster, consider your messaging throughput, topology, and high availability requirements. These factors affect the number of brokers to include in the cluster.

After creating the cluster, you can adjust the size by adding and removing brokers. You can add and remove brokers without losing any messages.

Messaging throughput

The cluster should contain enough brokers to provide the messaging throughput that you require. The more brokers in the cluster, the greater the throughput. However, large clusters can be complex to manage.

Topology

You can create either symmetric clusters or chain clusters. The type of topology you choose affects the number of brokers you may need.

For more information, see Section 14.1.5, “Common broker cluster topologies”.

High availability

If you require high availability (HA), consider choosing an HA policy before creating the cluster. The HA policy affects the size of the cluster, because each master broker should have at least one slave broker.

For more information, see Section 14.3, “Implementing high availability”.

14.2. Creating a broker cluster

You create a broker cluster by configuring a cluster connection on each broker that should participate in the cluster. The cluster connection defines how the broker should connect to the other brokers.

You can create a broker cluster that uses static discovery or dynamic discovery (either UDP multicast or JGroups).

Prerequisites

You should have determined the size of the broker cluster.

For more information, see Section 14.1.7, “Cluster sizing considerations”.

14.2.1. Creating a broker cluster with static discovery

You can create a broker cluster by specifying a static list of brokers. Use this static discovery method if you are unable to use UDP multicast or JGroups on your network.

Procedure

- Open the <broker_instance_dir>/etc/broker.xml configuration file.

- Within the <core> element, add the following connectors:

  - A connector that defines how other brokers can connect to this one. This connection information is sent to other brokers in the cluster during discovery.

  - One or more connectors that define how this broker can connect to other brokers in the cluster. For example, broker2 and broker3 connectors define how this broker can connect to two other brokers in the cluster, one of which will always be available. If there are other brokers in the cluster, they will be discovered by one of these connectors when the initial connection is made.

  For more information about connectors, see Section 2.3, “About connectors”.
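
A minimal sketch of such a set of connectors is shown below. The connector names follow the broker2 and broker3 naming used in this step; netty-connector, the host names, and the ports are placeholders that you would replace with values for your own environment.

```xml
<connectors>
    <!-- How other brokers can connect to this broker -->
    <connector name="netty-connector">tcp://broker1.example.com:61616</connector>
    <!-- How this broker can connect to two other brokers in the cluster -->
    <connector name="broker2">tcp://broker2.example.com:61616</connector>
    <connector name="broker3">tcp://broker3.example.com:61616</connector>
</connectors>
```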

- Add a cluster connection and configure it to use static discovery.

  By default, the cluster connection will load balance messages for all addresses in a symmetric topology.

  - cluster-connection: Use the name attribute to specify the name of the cluster connection.

  - connector-ref: The connector that defines how other brokers can connect to this one.

  - static-connectors: One or more connectors that this broker can use to make an initial connection to another broker in the cluster. After making this initial connection, the broker will discover the other brokers in the cluster. You only need to configure this property if the cluster uses static discovery.
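
A cluster connection that uses static discovery might be sketched as follows. The cluster connection name my-cluster and the connector names netty-connector, broker2, and broker3 are placeholders; each connector-ref must match the name of a connector defined on this broker.

```xml
<cluster-connections>
    <cluster-connection name="my-cluster">
        <!-- How other brokers can connect to this broker -->
        <connector-ref>netty-connector</connector-ref>
        <!-- Brokers to try for the initial connection -->
        <static-connectors>
            <connector-ref>broker2</connector-ref>
            <connector-ref>broker3</connector-ref>
        </static-connectors>
    </cluster-connection>
</cluster-connections>
```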

- Configure any additional properties for the cluster connection.

  These additional cluster connection properties have default values that are suitable for most common use cases. Therefore, you only need to configure these properties if you do not want the default behavior. For more information, see Appendix C, Cluster Connection Configuration Elements.

- Create the cluster user and password.

  AMQ Broker ships with default cluster credentials, but you should change them to prevent unauthorized remote clients from using these default credentials to connect to the broker.

  Important: The cluster password must be the same on every broker in the cluster.
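
As a sketch, the cluster credentials are set with two elements inside the <core> element. The user name and password values shown here are placeholders, not recommended values.

```xml
<!-- Use the same credentials on every broker in the cluster -->
<cluster-user>cluster_user</cluster-user>
<cluster-password>cluster_user_password</cluster-password>
```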

- Repeat this procedure on each additional broker.

  You can copy the cluster configuration to each additional broker. However, do not copy any of the other AMQ Broker data files (such as the bindings, journal, and large messages directories). These files must be unique among the nodes in the cluster or the cluster will not form properly.

Additional resources

- For an example of a broker cluster that uses static discovery, see the clustered-static-discovery example.

14.2.2. Creating a broker cluster with UDP-based dynamic discovery

You can create a broker cluster in which the brokers discover each other dynamically through UDP multicast.

Procedure

- Open the <broker_instance_dir>/etc/broker.xml configuration file.

- Within the <core> element, add a connector.

  This connector defines connection information that other brokers can use to connect to this one. This information will be sent to other brokers in the cluster during discovery.

- Add a UDP broadcast group.

  The broadcast group enables the broker to push information about its cluster connection to the other brokers in the cluster. This broadcast group uses UDP to broadcast the connection settings.

  The following parameters are required unless otherwise noted:

  - broadcast-group: Use the name attribute to specify a unique name for the broadcast group.

  - local-bind-address: The address to which the UDP socket is bound. If you have multiple network interfaces on your broker, you should specify which one you want to use for broadcasts. If this property is not specified, the socket will be bound to an IP address chosen by the operating system. This is a UDP-specific attribute.

  - local-bind-port: The port to which the datagram socket is bound. In most cases, use the default value of -1, which specifies an anonymous port. This parameter is used in connection with local-bind-address. This is a UDP-specific attribute.

  - group-address: The multicast address to which the data will be broadcast. It is a class D IP address in the range 224.0.0.0-239.255.255.255 inclusive. The address 224.0.0.0 is reserved and is not available for use. This is a UDP-specific attribute.

  - group-port: The UDP port number used for broadcasting. This is a UDP-specific attribute.

  - broadcast-period (optional): The interval in milliseconds between consecutive broadcasts. The default value is 2000 milliseconds.

  - connector-ref: The previously configured cluster connector that should be broadcast.
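
The broadcast group parameters above can be sketched as the following broker.xml fragment, shown together with the connector that it broadcasts. The names netty-connector and my-broadcast-group, the host name, the bind address, and the multicast address and port are all placeholders chosen for illustration.

```xml
<connectors>
    <!-- How other brokers can connect to this broker -->
    <connector name="netty-connector">tcp://broker1.example.com:61616</connector>
</connectors>

<broadcast-groups>
    <broadcast-group name="my-broadcast-group">
        <!-- Network interface used for broadcasts -->
        <local-bind-address>172.16.9.3</local-bind-address>
        <!-- -1 selects an anonymous port -->
        <local-bind-port>-1</local-bind-port>
        <!-- Multicast (class D) address and UDP port -->
        <group-address>231.7.7.7</group-address>
        <group-port>9876</group-port>
        <!-- Milliseconds between broadcasts -->
        <broadcast-period>2000</broadcast-period>
        <!-- Connector whose details are broadcast -->
        <connector-ref>netty-connector</connector-ref>
    </broadcast-group>
</broadcast-groups>
```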

- Add a UDP discovery group.

  The discovery group defines how this broker receives connector information from other brokers. The broker maintains a list of connectors (one entry for each broker). As it receives broadcasts from a broker, it updates its entry. If it does not receive a broadcast from a broker for a length of time, it removes the entry.

  This discovery group uses UDP to discover the brokers in the cluster. The following parameters are required unless otherwise noted:

  - discovery-group: Use the name attribute to specify a unique name for the discovery group.

  - local-bind-address (optional): If the machine on which the broker is running uses multiple network interfaces, you can specify the network interface to which the discovery group should listen. This is a UDP-specific attribute.

  - group-address: The multicast address of the group on which to listen. It should match the group-address in the broadcast group that you want to listen from. This is a UDP-specific attribute.

  - group-port: The UDP port number of the multicast group. It should match the group-port in the broadcast group that you want to listen from. This is a UDP-specific attribute.

  - refresh-timeout (optional): The amount of time in milliseconds that the discovery group waits after receiving the last broadcast from a particular broker before removing that broker’s connector pair entry from its list. The default is 10000 milliseconds (10 seconds).

    Set this to a much higher value than the broadcast-period on the broadcast group. Otherwise, brokers might periodically disappear from the list even though they are still broadcasting (due to slight differences in timing).
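
A UDP discovery group that listens on the same multicast address and port as a broadcast group might be sketched as follows. The name my-discovery-group, the bind address, and the multicast address and port are placeholders; the refresh timeout is shown at its default of 10000 milliseconds, five times the default broadcast period of 2000 milliseconds.

```xml
<discovery-groups>
    <discovery-group name="my-discovery-group">
        <!-- Optional: network interface on which to listen -->
        <local-bind-address>172.16.9.7</local-bind-address>
        <!-- Must match the group-address and group-port of the broadcast group -->
        <group-address>231.7.7.7</group-address>
        <group-port>9876</group-port>
        <!-- Remove a broker's entry after 10 seconds without a broadcast -->
        <refresh-timeout>10000</refresh-timeout>
    </discovery-group>
</discovery-groups>
```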

- Create a cluster connection and configure it to use dynamic discovery.

  By default, the cluster connection will load balance messages for all addresses in a symmetric topology.

  - cluster-connection: Use the name attribute to specify the name of the cluster connection.

  - connector-ref: The connector that defines how other brokers can connect to this one.

  - discovery-group-ref: The discovery group that this broker should use to locate other members of the cluster. You only need to configure this property if the cluster uses dynamic discovery.
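
A cluster connection that uses dynamic discovery might be sketched as follows. The names my-cluster, netty-connector, and my-discovery-group are placeholders and must match the connector and discovery group defined on this broker.

```xml
<cluster-connections>
    <cluster-connection name="my-cluster">
        <!-- How other brokers can connect to this broker -->
        <connector-ref>netty-connector</connector-ref>
        <!-- Discovery group used to locate other cluster members -->
        <discovery-group-ref discovery-group-name="my-discovery-group"/>
    </cluster-connection>
</cluster-connections>
```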

- Configure any additional properties for the cluster connection.

  These additional cluster connection properties have default values that are suitable for most common use cases. Therefore, you only need to configure these properties if you do not want the default behavior. For more information, see Appendix C, Cluster Connection Configuration Elements.

- Create the cluster user and password.

  AMQ Broker ships with default cluster credentials, but you should change them to prevent unauthorized remote clients from using these default credentials to connect to the broker.

  Important: The cluster password must be the same on every broker in the cluster.

- Repeat this procedure on each additional broker.

  You can copy the cluster configuration to each additional broker. However, do not copy any of the other AMQ Broker data files (such as the bindings, journal, and large messages directories). These files must be unique among the nodes in the cluster or the cluster will not form properly.

Additional resources

- For an example of a broker cluster configuration that uses dynamic discovery with UDP, see the clustered-queue example.

14.2.3. Creating a broker cluster with JGroups-based dynamic discovery

If you are already using JGroups in your environment, you can use it to create a broker cluster in which the brokers discover each other dynamically.

Prerequisites

JGroups must be installed and configured.

For an example of a JGroups configuration file, see the clustered-jgroups example.

Procedure

- Open the <broker_instance_dir>/etc/broker.xml configuration file.

- Within the <core> element, add a connector.

  This connector defines connection information that other brokers can use to connect to this one. This information will be sent to other brokers in the cluster during discovery.

- Within the <core> element, add a JGroups broadcast group.

  The broadcast group enables the broker to push information about its cluster connection to the other brokers in the cluster. This broadcast group uses JGroups to broadcast the connection settings.

  The following parameters are required unless otherwise noted:

  - broadcast-group: Use the name attribute to specify a unique name for the broadcast group.

  - jgroups-file: The name of the JGroups configuration file used to initialize JGroups channels. The file must be in the Java resource path so that the broker can load it.

  - jgroups-channel: The name of the JGroups channel to connect to for broadcasting.

  - broadcast-period (optional): The interval, in milliseconds, between consecutive broadcasts. The default value is 2000 milliseconds.

  - connector-ref: The previously configured cluster connector that should be broadcast.
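
A JGroups broadcast group using these parameters might be sketched as follows. The file name jgroups-config.xml, the channel name activemq_broadcast_channel, and the names my-broadcast-group and netty-connector are hypothetical placeholders; use the JGroups file and channel from your own environment.

```xml
<broadcast-groups>
    <broadcast-group name="my-broadcast-group">
        <!-- JGroups configuration file, loaded from the Java resource path -->
        <jgroups-file>jgroups-config.xml</jgroups-file>
        <!-- JGroups channel used for broadcasting -->
        <jgroups-channel>activemq_broadcast_channel</jgroups-channel>
        <!-- Milliseconds between broadcasts -->
        <broadcast-period>2000</broadcast-period>
        <!-- Connector whose details are broadcast -->
        <connector-ref>netty-connector</connector-ref>
    </broadcast-group>
</broadcast-groups>
```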

- Add a JGroups discovery group.

  The discovery group defines how connector information is received. The broker maintains a list of connectors (one entry for each broker). As it receives broadcasts from a broker, it updates its entry. If it does not receive a broadcast from a broker for a length of time, it removes the entry.

  This discovery group uses JGroups to discover the brokers in the cluster. The following parameters are required unless otherwise noted:

  - discovery-group: Use the name attribute to specify a unique name for the discovery group.

  - jgroups-file: The name of the JGroups configuration file used to initialize JGroups channels. The file must be in the Java resource path so that the broker can load it.

  - jgroups-channel: The name of the JGroups channel to connect to for receiving broadcasts.

  - refresh-timeout (optional): The amount of time in milliseconds that the discovery group waits after receiving the last broadcast from a particular broker before removing that broker’s connector pair entry from its list. The default is 10000 milliseconds (10 seconds).

    Set this to a much higher value than the broadcast-period on the broadcast group. Otherwise, brokers might periodically disappear from the list even though they are still broadcasting (due to slight differences in timing).
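
A JGroups discovery group might be sketched as follows. The file name jgroups-config.xml, the channel name activemq_broadcast_channel, and the group name my-discovery-group are hypothetical placeholders; the channel is assumed to be the same one that the brokers use for broadcasting.

```xml
<discovery-groups>
    <discovery-group name="my-discovery-group">
        <!-- JGroups configuration file, loaded from the Java resource path -->
        <jgroups-file>jgroups-config.xml</jgroups-file>
        <!-- JGroups channel on which broadcasts are received -->
        <jgroups-channel>activemq_broadcast_channel</jgroups-channel>
        <!-- Remove a broker's entry after 10 seconds without a broadcast -->
        <refresh-timeout>10000</refresh-timeout>
    </discovery-group>
</discovery-groups>
```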

- Create a cluster connection and configure it to use dynamic discovery.

  By default, the cluster connection will load balance messages for all addresses in a symmetric topology.

  - cluster-connection: Use the name attribute to specify the name of the cluster connection.

  - connector-ref: The connector that defines how other brokers can connect to this one.

  - discovery-group-ref: The discovery group that this broker should use to locate other members of the cluster. You only need to configure this property if the cluster uses dynamic discovery.

- Configure any additional properties for the cluster connection.

  These additional cluster connection properties have default values that are suitable for most common use cases. Therefore, you only need to configure these properties if you do not want the default behavior. For more information, see Appendix C, Cluster Connection Configuration Elements.

- Create the cluster user and password.

  AMQ Broker ships with default cluster credentials, but you should change them to prevent unauthorized remote clients from using these default credentials to connect to the broker.

  Important: The cluster password must be the same on every broker in the cluster.

- Repeat this procedure on each additional broker.

  You can copy the cluster configuration to each additional broker. However, do not copy any of the other AMQ Broker data files (such as the bindings, journal, and large messages directories). These files must be unique among the nodes in the cluster or the cluster will not form properly.

Additional resources

- For an example of a broker cluster that uses dynamic discovery with JGroups, see the clustered-jgroups example.

14.3. Implementing high availability

You can improve the reliability of a broker cluster by implementing high availability (HA), which enables the cluster to continue to function even if one or more brokers go offline.

Implementing HA involves several steps:

- Configure a broker cluster for your HA implementation as described in Section 14.2, “Creating a broker cluster”.

- Understand what live-backup groups are, and choose an HA policy that best meets your requirements. See Understanding how HA works in AMQ Broker.

- After you have chosen a suitable HA policy, configure the HA policy on each broker in the cluster.

- Configure your client applications to use failover.

If you later need to troubleshoot a broker cluster configured for high availability, Red Hat recommends that you enable Garbage Collection (GC) logging for each Java Virtual Machine (JVM) instance that runs a broker in the cluster. To learn how to enable GC logs on your JVM, consult the official documentation for the Java Development Kit (JDK) version used by your JVM. For more information on the JVM versions that AMQ Broker supports, see Red Hat AMQ 7 Supported Configurations.

14.3.1. Understanding high availability

In AMQ Broker, you implement high availability (HA) by grouping the brokers in the cluster into live-backup groups. In a live-backup group, a live broker is linked to a backup broker, which can take over for the live broker if it fails. AMQ Broker also provides several different strategies for failover (called HA policies) within a live-backup group.

14.3.1.1. How live-backup groups provide high availability

In AMQ Broker, you implement high availability (HA) by linking together the brokers in your cluster to form live-backup groups. Live-backup groups provide failover, which means that if one broker fails, another broker can take over its message processing.

A live-backup group consists of one live broker (sometimes called the master broker) linked to one or more backup brokers (sometimes called slave brokers). The live broker serves client requests, while the backup brokers wait in passive mode. If the live broker fails, a backup broker replaces the live broker, enabling the clients to reconnect and continue their work.

14.3.1.2. High availability policies

A high availability (HA) policy defines how failover happens in a live-backup group. AMQ Broker provides several different HA policies:

- Shared store (recommended)

The live and backup brokers store their messaging data in a common directory on a shared file system; typically a Storage Area Network (SAN) or Network File System (NFS) server. You can also store broker data in a specified database if you have configured JDBC-based persistence. With shared store, if a live broker fails, the backup broker loads the message data from the shared store and takes over for the failed live broker.

In most cases, you should use shared store instead of replication. Because shared store does not replicate data over the network, it typically provides better performance than replication. Shared store also avoids network isolation (also called "split brain") issues in which a live broker and its backup become live at the same time.

Figure 14.4. Shared store high availability

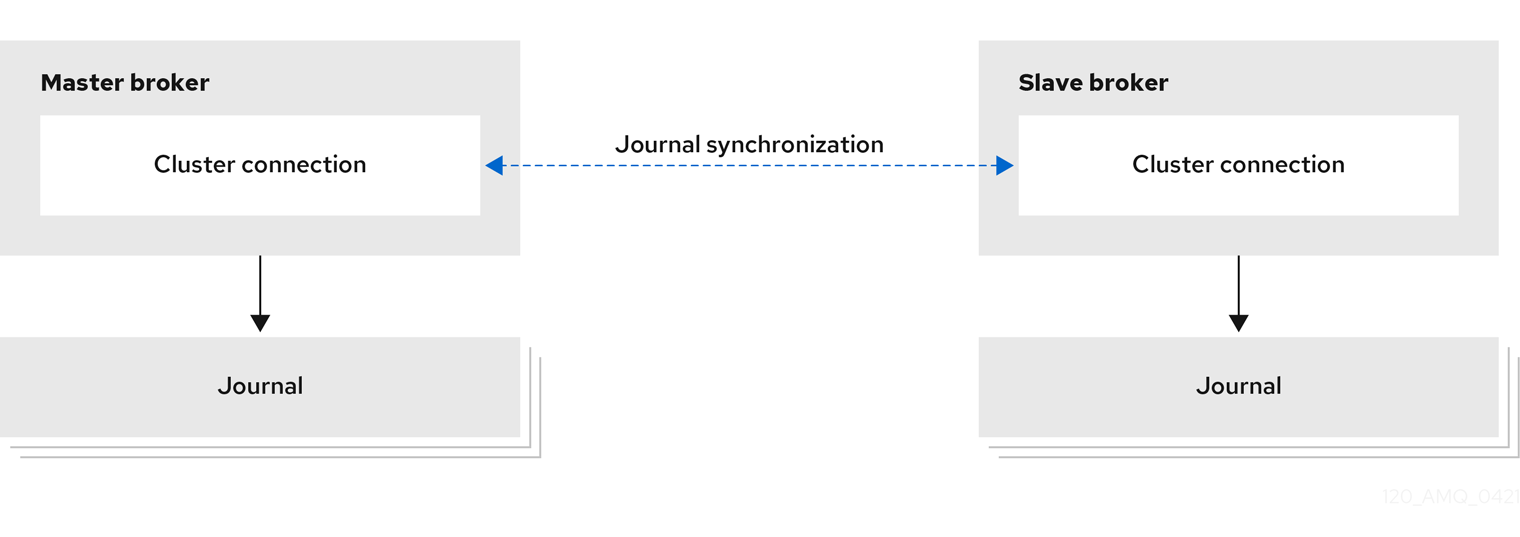

- Replication

The live and backup brokers continuously synchronize their messaging data over the network. If the live broker fails, the backup broker loads the synchronized data and takes over for the failed live broker.

Data synchronization between the live and backup brokers ensures that no messaging data is lost if the live broker fails. When the live and backup brokers initially join together, the live broker replicates all of its existing data to the backup broker over the network. Once this initial phase is complete, the live broker replicates persistent data to the backup broker as the live broker receives it. This means that if the live broker drops off the network, the backup broker has all of the persistent data that the live broker has received up to that point.

Because replication synchronizes data over the network, network failures can result in network isolation in which a live broker and its backup become live at the same time.

Figure 14.5. Replication high availability

- Live-only (limited HA)

When a live broker is stopped gracefully, it copies its messages and transaction state to another live broker and then shuts down. Clients can then reconnect to the other broker to continue sending and receiving messages.

Figure 14.6. Live-only high availability

Additional resources

- For more information about the persistent message data that is shared between brokers in a live-backup group, see Section 6.1, “Persisting message data in journals”.

14.3.1.3. Replication policy limitations

When you use replication to provide high availability, a risk exists that both live and backup brokers can become live at the same time, which is referred to as "split brain".

Split brain can happen if a live broker and its backup lose their connection. In this situation, both a live broker and its backup can become active at the same time. Because there is no message replication between the brokers in this situation, they each serve clients and process messages without the other knowing it. In this case, each broker has a completely different journal. Recovering from this situation can be very difficult and in some cases, not possible.

- To eliminate any possibility of split brain, use the shared store HA policy.

If you do use the replication HA policy, take the following steps to reduce the risk of split brain occurring.

- If you want the brokers to use the ZooKeeper Coordination Service to coordinate brokers, deploy ZooKeeper on at least three nodes. If the brokers lose connection to one ZooKeeper node, using at least three nodes ensures that a majority of nodes are available to coordinate the brokers when a live-backup broker pair experiences a replication interruption.

- If you want to use the embedded broker coordination, which uses the other available brokers in the cluster to provide a quorum vote, you can reduce (but not eliminate) the chance of encountering split brain by using at least three live-backup pairs. Using at least three live-backup pairs ensures that a majority result can be achieved in any quorum vote that takes place when a live-backup broker pair experiences a replication interruption.

Some additional considerations when you use the replication HA policy are described below:

- When a live broker fails and the backup transitions to live, no further replication takes place until a new backup broker is attached to the live, or failback to the original live broker occurs.

- If the backup broker in a live-backup group fails, the live broker continues to serve messages. However, messages are not replicated until another broker is added as a backup, or the original backup broker is restarted. During that time, messages are persisted only to the live broker.

- If the brokers use the embedded broker coordination and both brokers in a live-backup pair are shut down, to avoid message loss, you must restart the most recently active broker first. If the most recently active broker was the backup broker, you need to manually reconfigure this broker as a master broker to enable it to be restarted first.

14.3.3. Configuring replication high availability

You can use the replication high availability (HA) policy to implement HA in a broker cluster. With replication, persistent data is synchronized between the live and backup brokers. If a live broker encounters a failure, message data is synchronized to the backup broker and it takes over for the failed live broker.

You should use replication as an alternative to shared store, if you do not have a shared file system. However, replication can result in a scenario in which a live broker and its backup become live at the same time.

Because the live and backup brokers must synchronize their messaging data over the network, replication adds a performance overhead. This synchronization process blocks journal operations, but it does not block clients. You can configure the maximum amount of time that journal operations can be blocked for data synchronization.

If the replication connection between the live-backup broker pair is interrupted, the brokers require a way to coordinate to determine if the live broker is still active or if it is unavailable and a failover to the backup broker is required. To provide this coordination, you can configure the brokers to use either of the following coordination methods.

- The Apache ZooKeeper coordination service.

- The embedded broker coordination, which uses other brokers in the cluster to provide a quorum vote.

14.3.3.1. Choosing a coordination method

Red Hat recommends that you use the Apache ZooKeeper coordination service to coordinate broker activation. When choosing a coordination method, it is useful to understand the differences in infrastructure requirements and the management of data consistency between both coordination methods.

Infrastructure requirements

- If you use the ZooKeeper coordination service, you can operate with a single live-backup broker pair. However, you must connect the brokers to at least 3 Apache ZooKeeper nodes to ensure that brokers can continue to function if they lose connection to one node. To provide a coordination service to brokers, you can share existing ZooKeeper nodes that are used by other applications. For more information on setting up Apache ZooKeeper, see the Apache ZooKeeper documentation.

- If you want to use the embedded broker coordination, which uses the other available brokers in the cluster to provide a quorum vote, you must have at least three live-backup broker pairs. Using at least three live-backup pairs ensures that a majority result can be achieved in any quorum vote that occurs when a live-backup broker pair experiences a replication interruption.

Data consistency

- If you use the Apache ZooKeeper coordination service, ZooKeeper tracks the version of the data on each broker so only the broker that has the most up-to-date journal data can activate as the live broker, irrespective of whether the broker is configured as a primary or backup broker for replication purposes. Version tracking eliminates the possibility that a broker can activate with an out-of-date journal and start serving clients.

- If you use the embedded broker coordination, no mechanism exists to track the version of the data on each broker to ensure that only the broker that has the most up-to-date journal can become the live broker. Therefore, it is possible for a broker that has an out-of-date journal to become live and start serving clients, which causes a divergence in the journal.

14.3.3.2. How brokers coordinate after a replication interruption

This section explains how both coordination methods work after a replication connection is interrupted.

Using the ZooKeeper coordination service

If you use the ZooKeeper coordination service to manage replication interruptions, both brokers must be connected to multiple Apache ZooKeeper nodes.

- If, at any time, the live broker loses connection to a majority of the ZooKeeper nodes, it shuts down to avoid the risk of "split brain" occurring.

- If, at any time, the backup broker loses connection to a majority of the ZooKeeper nodes, it stops receiving replication data and waits until it can connect to a majority of the ZooKeeper nodes before it acts as a backup broker again. When the connection is restored to a majority of the ZooKeeper nodes, the backup broker uses ZooKeeper to determine if it needs to discard its data and search for a live broker from which to replicate, or if it can become the live broker with its current data.

ZooKeeper uses the following control mechanisms to manage the failover process:

- A shared lease lock that can be owned only by a single live broker at any time.

- An activation sequence counter that tracks the latest version of the broker data. Each broker tracks the version of its journal data in a local counter stored in its server lock file, along with its NodeID. The live broker also shares its version in a coordinated activation sequence counter on ZooKeeper.

If the replication connection between the live broker and the backup broker is lost, the live broker increases both its local activation sequence counter value and the coordinated activation sequence counter value on ZooKeeper by 1 to advertise that it has the most up-to-date data. The backup broker’s data is now considered stale and the broker cannot become the live broker until the replication connection is restored and the up-to-date data is synchronized.

After the replication connection is lost, the backup broker checks if the ZooKeeper lock is owned by the live broker and if the coordinated activation sequence counter on ZooKeeper matches its local counter value.

- If the lock is owned by the live broker, the backup broker detects that the activation sequence counter on ZooKeeper was updated by the live broker when the replication connection was lost. This indicates that the live broker is running, so the backup broker does not try to fail over.

- If the lock is not owned by the live broker, the live broker is not alive. If the value of the activation sequence counter on the backup broker is the same as the coordinated activation sequence counter value on ZooKeeper, which indicates that the backup broker has up-to-date data, the backup broker fails over.

- If the lock is not owned by the live broker but the value of the activation sequence counter on the backup broker is less than the counter value on ZooKeeper, the data on the backup broker is not up-to-date and the backup broker cannot fail over.

Using the embedded broker coordination

If a live-backup broker pair uses the embedded broker coordination to handle a replication interruption, the following two types of quorum votes can be initiated.

| Vote type | Description | Initiator | Required configuration | Participants | Action based on vote result |
|---|---|---|---|---|---|
| Backup vote | If a backup broker loses its replication connection to the live broker, the backup broker decides whether or not to start based on the result of this vote. | Backup broker | None. A backup vote happens automatically when a backup broker loses connection to its replication partner. However, you can control the properties of a backup vote by specifying custom values for parameters such as `vote-retries` and `vote-retry-wait`. | Other live brokers in the cluster | The backup broker starts if it receives a majority (that is, a quorum) vote from the other live brokers in the cluster, indicating that its replication partner is no longer available. |
| Live vote | If a live broker loses connection to its replication partner, the live broker decides whether to continue running based on this vote. | Live broker | A live vote happens when a live broker loses connection to its replication partner and `vote-on-replication-failure` is set to `true`. A backup broker that has become active is considered a live broker and can initiate a live vote. | Other live brokers in the cluster | The live broker shuts down if it doesn’t receive a majority vote from the other live brokers in the cluster, indicating that its cluster connection is still active. |

Listed below are some important things to note about how the configuration of your broker cluster affects the behavior of quorum voting.

- For a quorum vote to succeed, the size of your cluster must allow a majority result to be achieved. Therefore, your cluster should have at least three live-backup broker pairs.

- The more live-backup broker pairs that you add to your cluster, the more you increase the overall fault tolerance of the cluster. For example, suppose you have three live-backup pairs. If you lose a complete live-backup pair, the two remaining live-backup pairs cannot achieve a majority result in any subsequent quorum vote. This situation means that any further replication interruption in the cluster might cause a live broker to shut down, and prevent its backup broker from starting up. By configuring your cluster with, say, five broker pairs, the cluster can experience at least two failures, while still ensuring a majority result from any quorum vote.

- If you intentionally reduce the number of live-backup broker pairs in your cluster, the previously established threshold for a majority vote does not automatically decrease. During this time, any quorum vote triggered by a lost replication connection cannot succeed, making your cluster more vulnerable to split brain. To make your cluster recalculate the majority threshold for a quorum vote, first shut down the live-backup pairs that you are removing from your cluster. Then, restart the remaining live-backup pairs in the cluster. When all of the remaining brokers have been restarted, the cluster recalculates the quorum vote threshold.

14.3.3.3. Configuring replication for a broker cluster using the ZooKeeper coordination service

You must specify the same replication configuration for both brokers in a live-backup pair that uses the Apache ZooKeeper coordination service. The brokers then coordinate to determine which broker is the primary broker and which is the backup broker.

Prerequisites

- At least 3 Apache ZooKeeper nodes to ensure that brokers can continue to operate if they lose the connection to one node.

- The broker machines have a similar hardware specification, that is, you do not have a preference for which machine runs the live broker and which runs the backup broker at any point in time.

- ZooKeeper must have sufficient resources to ensure that pause times are significantly less than the ZooKeeper server tick time. Depending on the expected load of the broker, consider carefully if the broker and ZooKeeper node can share the same node. For more information, see https://zookeeper.apache.org/.

Procedure

- Open the `<broker_instance_dir>/etc/broker.xml` configuration file for both brokers in the live-backup pair.

- Specify the same replication configuration for both brokers in the pair, using the elements described in this step.
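The replication settings for a pair that uses ZooKeeper can be sketched as follows. This is a minimal illustration, not a complete configuration. The `coordination-id` value `production-001` is a made-up placeholder, the `connect-string` addresses are the sample values used in this section, and the `<manager>` wrapper element is not described in this section and is included as an assumption about the broker's configuration schema.

```xml
<configuration>
  <core>
    <!-- other core settings omitted -->
    <ha-policy>
      <replication>
        <!-- "primary": either broker in the pair can become the live broker -->
        <primary>
          <!-- placeholder value; must be identical on both brokers in the pair -->
          <coordination-id>production-001</coordination-id>
          <!-- assumed wrapper element for the coordination service settings -->
          <manager>
            <properties>
              <!-- sample ZooKeeper node addresses -->
              <property key="connect-string"
                        value="192.168.1.10:6666,192.168.2.10:6667,192.168.3.10:6668"/>
            </properties>
          </manager>
        </primary>
      </replication>
    </ha-policy>
  </core>
</configuration>
```

Because both brokers in the pair carry the same configuration, the same fragment can be copied to each broker unchanged.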

`primary`: Configure the replication type as `primary` to indicate that either broker can be the primary broker depending on the result of the broker coordination.

`coordination-id`: Specify a common string value for both brokers in the live-backup pair. Brokers with the same `coordination-id` string coordinate activation together. During the coordination process, both brokers use the `coordination-id` string as the node ID and attempt to obtain a lock in ZooKeeper. The first broker that obtains a lock and has up-to-date data starts as a live broker and the other broker becomes the backup.

`properties`: Specify a `property` element within which you can specify a set of key-value pairs to provide the connection details for the ZooKeeper nodes:

Table 14.2. ZooKeeper connection details

| Key | Value |
|---|---|
| connect-string | Specify a comma-separated list of the IP addresses and port numbers of the ZooKeeper nodes. For example, `value="192.168.1.10:6666,192.168.2.10:6667,192.168.3.10:6668"`. |
| session-ms | The duration that the broker waits before it shuts down after losing connection to a majority of the ZooKeeper nodes. The default value is `18000` ms. A valid value is between 2 times and 20 times the ZooKeeper server tick time. |
| namespace (optional) | If the brokers share the ZooKeeper nodes with other applications, you can create a ZooKeeper namespace to store the files that provide a coordination service to brokers. You must specify the same namespace for both brokers in a live-backup pair. |

Note: The ZooKeeper pause time for garbage collection must be less than 0.33 of the value of the `session-ms` property in order to allow the ZooKeeper heartbeat to function reliably. If it is not possible to ensure that pause times are less than this limit, increase the value of the `session-ms` property for each broker and accept a slower failover.

Important: Broker replication partners automatically exchange "ping" packets every 2 seconds to confirm that the partner broker is available. When a backup broker does not receive a response from the live broker, the backup waits for a response until the broker’s connection time-to-live (ttl) expires. The default connection-ttl is `60000` ms, which means that a backup broker attempts to fail over after 60 seconds. It is recommended that you set the connection-ttl value to a similar value to the `session-ms` property value to allow a faster failover. To set a new connection-ttl, configure the `connection-ttl-override` property.
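The optional connection properties and the connection TTL override might be combined as in the following sketch. The values are illustrative only: `18000` simply mirrors the stated `session-ms` default, `amq-broker-ha` is a hypothetical namespace name, and placing `connection-ttl-override` directly under `<core>` and the properties inside a `<manager>` element are assumptions about the configuration schema rather than details given in this section.

```xml
<core>
  <!-- assumed placement: align the connection TTL with session-ms for faster failover -->
  <connection-ttl-override>18000</connection-ttl-override>
  <ha-policy>
    <replication>
      <primary>
        <coordination-id>production-001</coordination-id>
        <manager>
          <properties>
            <property key="connect-string"
                      value="192.168.1.10:6666,192.168.2.10:6667,192.168.3.10:6668"/>
            <!-- time the broker waits before shutting down after losing the ZooKeeper majority -->
            <property key="session-ms" value="18000"/>
            <!-- hypothetical namespace; must match on both brokers in the pair -->
            <property key="namespace" value="amq-broker-ha"/>
          </properties>
        </manager>
      </primary>
    </replication>
  </ha-policy>
</core>
```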

Configure any additional HA properties for the brokers.

These additional HA properties have default values that are suitable for most common use cases. Therefore, you only need to configure these properties if you do not want the default behavior. For more information, see Appendix F, Additional Replication High Availability Configuration Elements.

- Repeat steps 1 to 3 to configure each additional live-backup broker pair in the cluster.

Additional resources

- For examples of broker clusters that use replication for HA, see the HA examples.

- For more information about node IDs, see Understanding node IDs.

14.3.3.4. Configuring a broker cluster for replication high availability using the embedded broker coordination

Replication using the embedded broker coordination requires at least three live-backup pairs to lessen (but not eliminate) the risk of "split brain".

The following procedure describes how to configure replication high-availability (HA) for a six-broker cluster. In this topology, the six brokers are grouped into three live-backup pairs: each of the three live brokers is paired with a dedicated backup broker.

Prerequisites

You must have a broker cluster with at least six brokers.

The six brokers are configured into three live-backup pairs. For more information about adding brokers to a cluster, see Chapter 14, Setting up a broker cluster.

Procedure

Group the brokers in your cluster into live-backup groups.

In most cases, a live-backup group should consist of two brokers: a live broker and a backup broker. If you have six brokers in your cluster, you need three live-backup groups.

Create the first live-backup group consisting of one live broker and one backup broker.

- Open the live broker’s `<broker_instance_dir>/etc/broker.xml` configuration file.

- Configure the live broker to use replication for its HA policy.
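A live broker configured for replication might look like the following sketch. It uses only the properties described in this step. The group name `my-group-1` is a placeholder, and the surrounding `<ha-policy>`, `<replication>`, and `<master>` nesting is an assumption based on the element names used elsewhere in this chapter.

```xml
<configuration>
  <core>
    <!-- other core settings omitted -->
    <ha-policy>
      <replication>
        <master>
          <!-- on restart, look for a backup that took over and synchronize with it -->
          <check-for-live-server>true</check-for-live-server>
          <!-- placeholder group name; must match the backup broker -->
          <group-name>my-group-1</group-name>
          <!-- initiate a live vote if the replication connection is interrupted -->
          <vote-on-replication-failure>true</vote-on-replication-failure>
        </master>
      </replication>
    </ha-policy>
  </core>
</configuration>
```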

`check-for-live-server`: If the live broker fails, this property controls whether clients should fail back to it when it restarts.

If you set this property to `true`, when the live broker restarts after a previous failover, it searches for another broker in the cluster with the same node ID. If the live broker finds another broker with the same node ID, this indicates that a backup broker successfully started upon failure of the live broker. In this case, the live broker synchronizes its data with the backup broker. The live broker then requests the backup broker to shut down. If the backup broker is configured for failback, as described later in this procedure, it shuts down. The live broker then resumes its active role, and clients reconnect to it.

Warning: If you do not set `check-for-live-server` to `true` on the live broker, you might experience duplicate message handling when you restart the live broker after a previous failover. Specifically, if you restart a live broker with this property set to `false`, the live broker does not synchronize data with its backup broker. In this case, the live broker might process the same messages that the backup broker has already handled, causing duplicates.

`group-name`: A name for this live-backup group (optional). To form a live-backup group, the live and backup brokers must be configured with the same group name. If you don’t specify a group-name, a backup broker can replicate with any live broker.

`vote-on-replication-failure`: This property controls whether a live broker initiates a quorum vote called a live vote in the event of an interrupted replication connection.

A live vote is a way for a live broker to determine whether it or its partner is the cause of the interrupted replication connection. Based on the result of the vote, the live broker either stays running or shuts down.

Important: For a quorum vote to succeed, the size of your cluster must allow a majority result to be achieved. Therefore, when you use the replication HA policy, your cluster should have at least three live-backup broker pairs.

The more broker pairs you configure in your cluster, the more you increase the overall fault tolerance of the cluster. For example, suppose you have three live-backup broker pairs. If you lose connection to a complete live-backup pair, the two remaining live-backup pairs can no longer achieve a majority result in a quorum vote. This situation means that any subsequent replication interruption might cause a live broker to shut down, and prevent its backup broker from starting up. By configuring your cluster with, say, five broker pairs, the cluster can experience at least two failures, while still ensuring a majority result from any quorum vote.

Configure any additional HA properties for the live broker.

These additional HA properties have default values that are suitable for most common use cases. Therefore, you only need to configure these properties if you do not want the default behavior. For more information, see Appendix F, Additional Replication High Availability Configuration Elements.

- Open the backup broker’s `<broker_instance_dir>/etc/broker.xml` configuration file.

- Configure the backup broker to use replication for its HA policy.
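The matching backup broker configuration could be sketched as follows. As with the live broker, `my-group-1` is a placeholder that must equal the live broker's group name, and the `<ha-policy>`, `<replication>`, and `<slave>` nesting is assumed from the element names used in this chapter.

```xml
<configuration>
  <core>
    <!-- other core settings omitted -->
    <ha-policy>
      <replication>
        <slave>
          <!-- hand control back to the original live broker when it returns -->
          <allow-failback>true</allow-failback>
          <!-- placeholder group name; must match the live broker -->
          <group-name>my-group-1</group-name>
          <!-- applies once this backup has become active and acts as a live broker -->
          <vote-on-replication-failure>true</vote-on-replication-failure>
        </slave>
      </replication>
    </ha-policy>
  </core>
</configuration>
```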

`allow-failback`: If failover has occurred and the backup broker has taken over for the live broker, this property controls whether the backup broker should fail back to the original live broker when it restarts and reconnects to the cluster.

Note: Failback is intended for a live-backup pair (one live broker paired with a single backup broker). If the live broker is configured with multiple backups, then failback will not occur. Instead, if a failover event occurs, the backup broker will become live, and the next backup will become its backup. When the original live broker comes back online, it will not be able to initiate failback, because the broker that is now live already has a backup.

`group-name`: A name for this live-backup group (optional). To form a live-backup group, the live and backup brokers must be configured with the same group name. If you don’t specify a group-name, a backup broker can replicate with any live broker.

`vote-on-replication-failure`: This property controls whether a live broker initiates a quorum vote called a live vote in the event of an interrupted replication connection. A backup broker that has become active is considered a live broker and can initiate a live vote.

A live vote is a way for a live broker to determine whether it or its partner is the cause of the interrupted replication connection. Based on the result of the vote, the live broker either stays running or shuts down.

(Optional) Configure properties of the quorum votes that the backup broker initiates.
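As a sketch, the quorum vote properties sit alongside the other backup settings. The numbers below are illustrative values chosen for this example, not recommendations, and the `<slave>` nesting is assumed from the element names used in this chapter.

```xml
<configuration>
  <core>
    <ha-policy>
      <replication>
        <slave>
          <!-- other backup settings omitted -->
          <!-- retry the backup vote up to 12 times (illustrative value) -->
          <vote-retries>12</vote-retries>
          <!-- wait 5000 milliseconds between retries (illustrative value) -->
          <vote-retry-wait>5000</vote-retry-wait>
        </slave>
      </replication>
    </ha-policy>
  </core>
</configuration>
```

With these values, the backup broker would keep retrying for roughly 12 × 5000 ms, or about one minute, before giving up on a majority result.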

`vote-retries`: This property controls how many times the backup broker retries the quorum vote in order to receive a majority result that allows the backup broker to start up.

`vote-retry-wait`: This property controls how long, in milliseconds, the backup broker waits between each retry of the quorum vote.

Configure any additional HA properties for the backup broker.

These additional HA properties have default values that are suitable for most common use cases. Therefore, you only need to configure these properties if you do not want the default behavior. For more information, see Appendix F, Additional Replication High Availability Configuration Elements.


Repeat step 2 for each additional live-backup group in the cluster.

If there are six brokers in the cluster, repeat this procedure two more times; once for each remaining live-backup group.

Additional resources

- For examples of broker clusters that use replication for HA, see the HA examples.

- For more information about node IDs, see Understanding node IDs.

14.3.4. Configuring limited high availability with live-only

The live-only HA policy enables you to shut down a broker in a cluster without losing any messages. With live-only, when a live broker is stopped gracefully, it copies its messages and transaction state to another live broker and then shuts down. Clients can then reconnect to the other broker to continue sending and receiving messages.

The live-only HA policy only handles cases when the broker is stopped gracefully. It does not handle unexpected broker failures.

While live-only HA prevents message loss, it may not preserve message order. If a broker configured with live-only HA is stopped, its messages will be appended to the ends of the queues of another broker.

When a broker is preparing to scale down, it sends a message to its clients before they are disconnected informing them which new broker is ready to process their messages. However, clients should reconnect to the new broker only after their initial broker has finished scaling down. This ensures that any state, such as queues or transactions, is available on the other broker when the client reconnects. The normal reconnect settings apply when the client is reconnecting, so you should set these high enough to deal with the time needed to scale down.

This procedure describes how to configure each broker in the cluster to scale down. After completing this procedure, whenever a broker is stopped gracefully, it will copy its messages and transaction state to another broker in the cluster.

Procedure

- Open the first broker’s `<broker_instance_dir>/etc/broker.xml` configuration file.

- Configure the broker to use the live-only HA policy.
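In its simplest form, the live-only policy is an empty element inside the HA policy, as in this sketch. The placement under `<core>` and `<ha-policy>` is an assumption consistent with the other HA policies in this chapter.

```xml
<configuration>
  <core>
    <!-- other core settings omitted -->
    <ha-policy>
      <live-only>
        <!-- scale-down settings are added here in the next step -->
      </live-only>
    </ha-policy>
  </core>
</configuration>
```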

- Configure a method for scaling down the broker cluster.

Specify the broker or group of brokers to which this broker should scale down.

Table 14.3. Methods for scaling down a broker cluster

To scale down to a specific broker in the cluster:

Specify the connector of the broker to which you want to scale down.
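A connector-based scale-down target might be written as in the following sketch. The connector name `broker1-connector` is a placeholder for a connector that you have already defined on this broker, and the `<connectors>` and `<connector-ref>` element names inside `<scale-down>` are an assumption about the schema, since this section does not show them.

```xml
<live-only>
  <scale-down>
    <connectors>
      <!-- placeholder name; must reference a connector defined on this broker -->
      <connector-ref>broker1-connector</connector-ref>
    </connectors>
  </scale-down>
</live-only>
```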

To scale down to any broker in the cluster:

Specify the broker cluster’s discovery group.

```xml
<live-only>
  <scale-down>
    <discovery-group-ref discovery-group-name="my-discovery-group"/>
  </scale-down>
</live-only>
```

To scale down to a broker in a particular broker group:

Specify a broker group.

```xml
<live-only>
  <scale-down>
    <group-name>my-group-name</group-name>
  </scale-down>
</live-only>
```

- Repeat this procedure for each remaining broker in the cluster.

Additional resources

- For an example of a broker cluster that uses live-only to scale down the cluster, see the `scale-down` example.

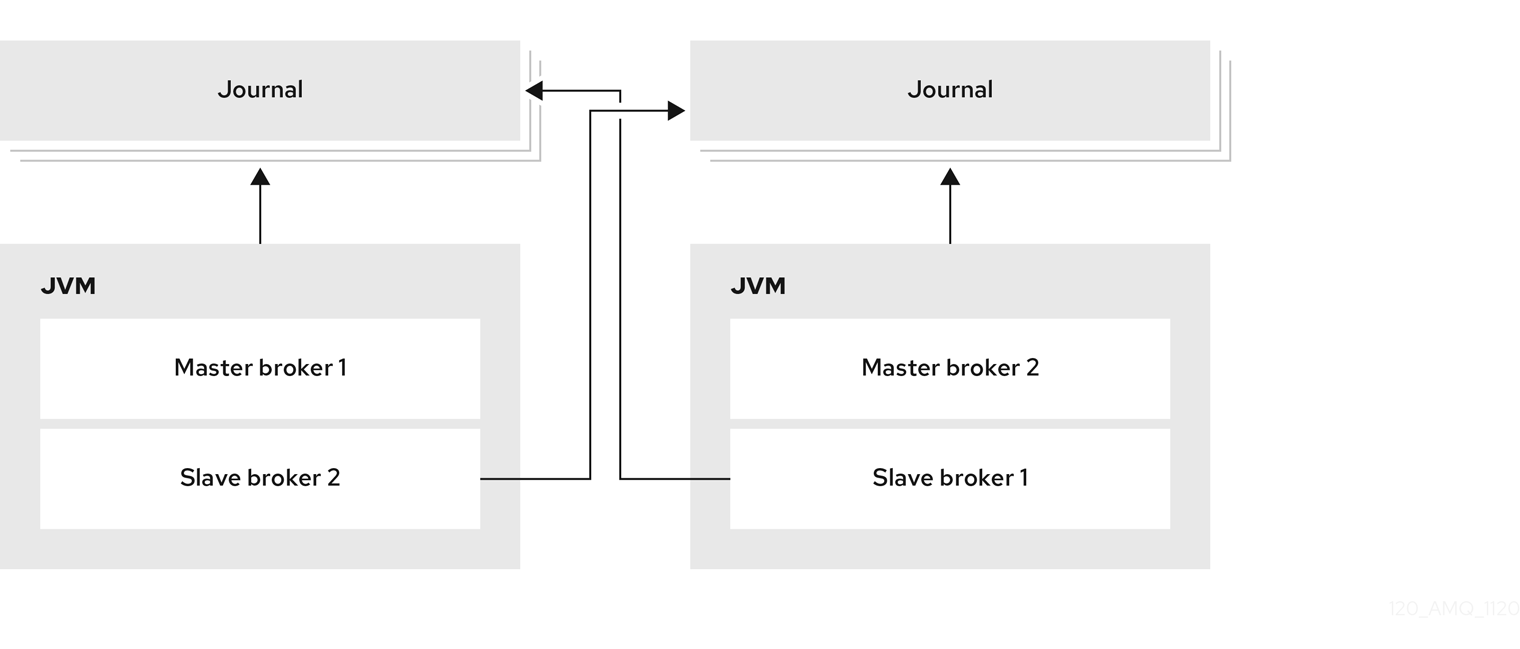

14.3.5. Configuring high availability with colocated backups

Rather than configure live-backup groups, you can colocate backup brokers in the same JVM as another live broker. In this configuration, each live broker is configured to request another live broker to create and start a backup broker in its JVM.

Figure 14.7. Colocated live and backup brokers

You can use colocation with either shared store or replication as the high availability (HA) policy. The new backup broker inherits its configuration from the live broker that creates it. The name of the backup is set to colocated_backup_n where n is the number of backups the live broker has created.

In addition, the backup broker inherits the configuration for its connectors and acceptors from the live broker that creates it. By default, a port offset of 100 is applied to each. For example, if the live broker has an acceptor for port 61616, the first backup broker created will use port 61716, the second backup will use 61816, and so on.

Directories for the journal, large messages, and paging are set according to the HA policy you choose. If you choose shared store, the requesting broker notifies the target broker which directories to use. If replication is chosen, directories are inherited from the creating broker and have the new backup’s name appended to them.

This procedure configures each broker in the cluster to use shared store HA, and to request a backup to be created and colocated with another broker in the cluster.

Procedure

- Open the first broker’s `<broker_instance_dir>/etc/broker.xml` configuration file.

- Configure the broker to use an HA policy and colocation.

In this example, the broker is configured with shared store HA and colocation.
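A shared store policy with colocation could be sketched as follows. The property values match the defaults stated in this section where one is given. The connector name `remote-connector` is a placeholder, and the `failover-on-shutdown` and `allow-failback` entries inside `<master>` and `<slave>` are assumptions added only to show where per-broker failover settings would go.

```xml
<configuration>
  <core>
    <!-- other core settings omitted -->
    <ha-policy>
      <shared-store>
        <colocated>
          <!-- ask another live broker to host this broker's backup -->
          <request-backup>true</request-backup>
          <!-- number of backups this broker is willing to create for others -->
          <max-backups>1</max-backups>
          <!-- -1 means retry the backup request without limit -->
          <backup-request-retries>-1</backup-request-retries>
          <backup-request-retry-interval>5000</backup-request-retry-interval>
          <backup-port-offset>100</backup-port-offset>
          <excludes>
            <!-- placeholder connector that should keep its original port -->
            <connector-ref>remote-connector</connector-ref>
          </excludes>
          <master>
            <!-- assumed example setting for this broker -->
            <failover-on-shutdown>true</failover-on-shutdown>
          </master>
          <slave>
            <!-- assumed example settings for this broker's backup -->
            <failover-on-shutdown>true</failover-on-shutdown>
            <allow-failback>true</allow-failback>
          </slave>
        </colocated>
      </shared-store>
    </ha-policy>
  </core>
</configuration>
```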

`request-backup`: By setting this property to `true`, this broker will request a backup broker to be created by another live broker in the cluster.

`max-backups`: The number of backup brokers that this broker can create. If you set this property to `0`, this broker will not accept backup requests from other brokers in the cluster.

`backup-request-retries`: The number of times this broker should try to request a backup broker to be created. The default is `-1`, which means unlimited tries.

`backup-request-retry-interval`: The amount of time in milliseconds that the broker should wait before retrying a request to create a backup broker. The default is `5000`, or 5 seconds.

`backup-port-offset`: The port offset to use for the acceptors and connectors for a new backup broker. If this broker receives a request to create a backup for another broker in the cluster, it will create the backup broker with the ports offset by this amount. The default is `100`.

`excludes` (optional): Excludes connectors from the backup port offset. If you have configured any connectors for external brokers that should be excluded from the backup port offset, add a `<connector-ref>` for each of the connectors.

`master`: The shared store or replication failover configuration for this broker.

`slave`: The shared store or replication failover configuration for this broker’s backup.

- Repeat this procedure for each remaining broker in the cluster.

Additional resources

- For examples of broker clusters that use colocated backups, see the HA examples.

14.3.6. Configuring clients to fail over

After configuring high availability in a broker cluster, you configure your clients to fail over. Client failover ensures that if a broker fails, the clients connected to it can reconnect to another broker in the cluster with minimal downtime.

In the event of transient network problems, AMQ Broker automatically reattaches connections to the same broker. This is similar to failover, except that the client reconnects to the same broker.

You can configure two different types of client failover:

- Automatic client failover: The client receives information about the broker cluster when it first connects. If the broker to which it is connected fails, the client automatically reconnects to the broker’s backup, and the backup broker re-creates any sessions and consumers that existed on each connection before failover.

- Application-level client failover: As an alternative to automatic client failover, you can instead code your client applications with your own custom reconnection logic in a failure handler.

Procedure

Use AMQ Core Protocol JMS to configure your client application with automatic or application-level failover.

For more information, see Using the AMQ Core Protocol JMS Client.

14.4. Enabling message redistribution

If your broker cluster is configured with message-load-balancing set to ON_DEMAND or OFF_WITH_REDISTRIBUTION, you can configure message redistribution to prevent messages from being "stuck" in a queue that does not have a consumer to consume the messages.

This section explains how message redistribution works and how to configure it.

14.4.1. Understanding message redistribution

Broker clusters use load balancing to distribute the message load across the cluster. When configuring load balancing in the cluster connection, you can enable redistribution using the following message-load-balancing settings:

- `ON_DEMAND`: enable load balancing and allow redistribution

- `OFF_WITH_REDISTRIBUTION`: disable load balancing but allow redistribution

In both cases, the broker forwards messages only to other brokers that have matching consumers. This behavior ensures that messages are not moved to queues that do not have any consumers to consume the messages. However, if the consumers attached to a queue close after the messages are forwarded to the broker, those messages become "stuck" in the queue and are not consumed. This issue is sometimes called starvation.

Message redistribution prevents starvation by automatically redistributing the messages from queues that have no consumers to brokers in the cluster that do have matching consumers.

With OFF_WITH_REDISTRIBUTION, the broker only forwards messages to other brokers that have matching consumers if there are no active local consumers, enabling you to prioritize a broker while providing an alternative when consumers are not available.
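For illustration, a cluster connection that prefers local consumers but still allows redistribution might be declared as in this sketch. The cluster connection name `my-cluster` is a placeholder, and the other settings that a cluster connection needs are omitted.

```xml
<cluster-connections>
  <cluster-connection name="my-cluster">
    <!-- connector, discovery, and other cluster settings omitted -->
    <!-- no load balancing; forward only when there are no active local consumers -->
    <message-load-balancing>OFF_WITH_REDISTRIBUTION</message-load-balancing>
  </cluster-connection>
</cluster-connections>
```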

Message redistribution supports the use of filters (also known as selectors), that is, messages are redistributed when they do not match the selectors of the available local consumers.

Additional resources

- For more information about cluster load balancing, see Section 14.1.1, “How broker clusters balance message load”.

14.4.2. Configuring message redistribution

This procedure shows how to configure message redistribution with load balancing. If you want message redistribution without load balancing, set <message-load-balancing> to OFF_WITH_REDISTRIBUTION.

Procedure

- Open the `<broker_instance_dir>/etc/broker.xml` configuration file.

- In the `<cluster-connection>` element, verify that `<message-load-balancing>` is set to `ON_DEMAND`.

- Within the `<address-settings>` element, set the redistribution delay for a queue or set of queues. For example, with a redistribution delay of `5000` for `my.queue`, messages load balanced to `my.queue` are redistributed 5000 milliseconds after the last consumer closes.

`address-setting`: Set the `match` attribute to be the name of the queue for which you want messages to be redistributed. You can use the broker wildcard syntax to specify a range of queues. For more information, see Section 4.2, “Applying address settings to sets of addresses”.

`redistribution-delay`: The amount of time (in milliseconds) that the broker should wait after this queue’s final consumer closes before redistributing messages to other brokers in the cluster. If you set this to `0`, messages will be redistributed immediately. However, you should typically set a delay before redistributing, because it is common for a consumer to close but another one to be quickly created on the same queue.
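The two settings in this procedure can be sketched together as follows. The cluster connection name `my-cluster` is a placeholder, and the remaining cluster connection settings are omitted. The queue name `my.queue` and the delay of `5000` are the sample values used in this procedure.

```xml
<configuration>
  <core>
    <!-- other core settings omitted -->
    <cluster-connections>
      <cluster-connection name="my-cluster">
        <!-- connector and discovery settings omitted -->
        <message-load-balancing>ON_DEMAND</message-load-balancing>
      </cluster-connection>
    </cluster-connections>
    <address-settings>
      <address-setting match="my.queue">
        <!-- wait 5000 milliseconds after the last consumer closes -->
        <redistribution-delay>5000</redistribution-delay>
      </address-setting>
    </address-settings>
  </core>
</configuration>
```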

- Repeat this procedure for each additional broker in the cluster.

Additional resources

- For an example of a broker cluster configuration that redistributes messages, see the `queue-message-redistribution` example.

14.5. Configuring clustered message grouping

Message grouping enables clients to send groups of messages of a particular type to be processed serially by the same consumer. By adding a grouping handler to each broker in the cluster, you ensure that clients can send grouped messages to any broker in the cluster and still have those messages consumed in the correct order by the same consumer.

Grouping and clustering techniques can be summarized as follows:

- Message grouping imposes an order on message consumption. In a group, each message must be fully consumed and acknowledged prior to proceeding with the next message. This methodology leads to serial message processing, where concurrency is not an option.

- Clustering aims to horizontally scale brokers to boost message throughput. Horizontal scaling is achieved by adding additional consumers that can process messages concurrently.

Because these techniques contradict each other, avoid using clustering and grouping together.

There are two types of grouping handlers: local handlers and remote handlers. They enable the broker cluster to route all of the messages in a particular group to the appropriate queue so that the intended consumer can consume them in the correct order.

Prerequisites

- There should be at least one consumer on each broker in the cluster.

When a message is pinned to a consumer on a queue, all messages with the same group ID will be routed to that queue. If the consumer is removed, the queue will continue to receive the messages even if there are no consumers.

Procedure

Configure a local handler on one broker in the cluster.

If you are using high availability, this should be a master broker.

- Open the broker’s `<broker_instance_dir>/etc/broker.xml` configuration file.

- Within the `<core>` element, add a `grouping-handler` element that defines a local handler. The local handler serves as an arbiter for the remote handlers. It stores route information and communicates it to the other brokers. Configure the handler as follows:

  - `grouping-handler`: Use the `name` attribute to specify a unique name for the grouping handler.

  - `type`: Set this to `LOCAL`.

  - `timeout`: The amount of time to wait (in milliseconds) for a decision to be made about where to route the message. The default is 5000 milliseconds. If the timeout is reached before a routing decision is made, an exception is thrown, which ensures strict message ordering.

  When the broker receives a message with a group ID, it proposes a route to a queue to which the consumer is attached. If the route is accepted by the grouping handlers on the other brokers in the cluster, then the route is established: all brokers in the cluster will forward messages with this group ID to that queue. If the broker’s route proposal is rejected, then it proposes an alternate route, repeating the process until a route is accepted.
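A local handler definition along these lines might look like the following fragment. It is a sketch under stated assumptions: the handler name `my-grouping-handler` and the 10000 millisecond timeout are illustrative values only, and the rest of the `<core>` configuration is omitted.

```xml
<configuration>
  <core>
    <!-- other broker settings omitted -->
    <!-- "my-grouping-handler" is a hypothetical name; choose your own -->
    <grouping-handler name="my-grouping-handler">
      <!-- this broker arbitrates routing decisions for the cluster -->
      <type>LOCAL</type>
      <!-- illustrative value in milliseconds; the default is 5000 -->
      <timeout>10000</timeout>
    </grouping-handler>
  </core>
</configuration>
```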

If you are using high availability, copy the local handler configuration to the master broker’s slave broker.

Copying the local handler configuration to the slave broker prevents a single point of failure for the local handler.

On each remaining broker in the cluster, configure a remote handler.

- Open the broker’s `<broker_instance_dir>/etc/broker.xml` configuration file.

- Within the `<core>` element, add a `grouping-handler` element that defines a remote handler. Configure the handler as follows:

  - `grouping-handler`: Use the `name` attribute to specify a unique name for the grouping handler.

  - `type`: Set this to `REMOTE`.

  - `timeout`: The amount of time to wait (in milliseconds) for a decision to be made about where to route the message. The default is 5000 milliseconds. Set this value to at least half of the `timeout` value of the local handler.
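A remote handler definition might look like the following fragment. As with any sketch, the values are assumptions: the name `my-grouping-handler` is hypothetical, and the 5000 millisecond timeout is chosen only because it is half of an assumed 10000 millisecond timeout on the local handler.

```xml
<configuration>
  <core>
    <!-- other broker settings omitted -->
    <!-- "my-grouping-handler" is a hypothetical name; choose your own -->
    <grouping-handler name="my-grouping-handler">
      <!-- this broker defers routing decisions to the local handler -->
      <type>REMOTE</type>
      <!-- at least half of the local handler's timeout (assumed 10000 ms) -->
      <timeout>5000</timeout>
    </grouping-handler>
  </core>
</configuration>
```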

Additional resources

- For an example of a broker cluster configured for message grouping, see the JMS clustered grouping example.

14.6. Connecting clients to a broker cluster

You can use the Red Hat build of Apache Qpid JMS clients to connect to the cluster. By using JMS, you can configure your messaging clients to discover the list of brokers dynamically or statically. You can also configure client-side load balancing to distribute the client sessions created from the connection across the cluster.

Procedure

Use AMQ Core Protocol JMS to configure your client application to connect to the broker cluster.

For more information, see Using the AMQ Core Protocol JMS Client.

14.7. Partitioning client connections

Partitioning client connections involves routing connections for individual clients to the same broker each time the client initiates a connection.

Two use cases for partitioning client connections are:

- Partitioning clients of durable subscriptions to ensure that a subscriber always connects to the broker where the durable subscriber queue is located.

- Minimizing the need to move data by attracting clients to data where it originates, also known as data gravity.

Durable subscriptions

A durable subscription is represented as a queue on a broker and is created when a durable subscriber first connects to the broker. This queue remains on the broker and receives messages until the client unsubscribes. Therefore, you want the client to connect to the same broker repeatedly to consume the messages that are in the subscriber queue.

To partition clients for durable subscription queues, you can filter the client ID in client connections.

Data gravity

If you scale up the number of brokers in your environment without considering data gravity, some of the performance benefits are lost because of the need to move messages between brokers. To support data gravity, you should partition your client connections so that client consumers connect to the broker on which the messages that they need to consume are produced.

To partition client connections to support data gravity, you can filter any of the following attributes of a client connection:

- a role assigned to the connecting user (ROLE_NAME)

- the username of the user (USER_NAME)

- the hostname of the client (SNI_HOST)

- the IP address of the client (SOURCE_IP)

14.7.1. Partitioning client connections to support durable subscriptions

To partition clients for durable subscriptions, you can filter client IDs in incoming connections by using a consistent hashing algorithm or a regular expression.

Prerequisites

- Clients are configured so they can connect to all of the brokers in the cluster, for example, by using a load balancer or by having all of the broker instances configured in the connection URL. If a broker rejects a connection because the client details do not match the partition configuration for that broker, the client must be able to connect to the other brokers in the cluster to find a broker that accepts connections from it.

14.7.1.1. Filtering client IDs using a consistent hashing algorithm

You can configure each broker in a cluster to use a consistent hashing algorithm to hash the client ID in each client connection. After the broker hashes the client ID, it performs a modulo operation on the hashed value to return an integer value, which identifies the target broker for the client connection. The broker compares the integer value returned to a unique value configured on the broker. If there is a match, the broker accepts the connection. If the values don’t match, the broker rejects the connection. This process is repeated on each broker in the cluster until a match is found and a broker accepts the connection.

Procedure

- Open the `<broker_instance_dir>/etc/broker.xml` configuration file for the first broker.

- Create a `connection-routers` element and create a `connection-route` to filter client IDs by using a consistent hashing algorithm. Configure the following settings:

  - `connection-route`: For the `connection-route name`, specify an identifying string for this connection routing configuration. You must add this name to each broker acceptor that you want to apply the consistent hashing filter to.

  - `key`: The key type to apply the filter to. To filter the client ID, specify `CLIENT_ID` in the `key` field.

  - `local-target-filter`: The value that the broker compares to the integer value returned by the modulo operation to determine if there is a match and the broker can accept the connection. For the first broker, a value of `NULL|0` provides a match for connections that have no client ID (`NULL`) and connections where the number returned by the modulo operation is `0`.

  - `policy`: Accepts a `modulo` property key, which performs a modulo operation on the hashed client ID to identify the target broker. The value of the `modulo` property key must equal the number of brokers in the cluster.

    Important: The `policy name` must be `CONSISTENT_HASH_MODULO`.
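For the first broker in a two-broker cluster, the routing configuration might look like the following fragment. Treat it as a sketch, not a verified configuration. The element names `connection-router` and `key-type` are an assumption based on the upstream Apache ActiveMQ Artemis schema and differ slightly from the `connection-route` and `key` names used in this section, so check them against the schema for your broker version. The name `consistent-hash-routing` and the cluster size of 2 are also illustrative.

```xml
<configuration>
  <core>
    <!-- other broker settings omitted -->
    <connection-routers>
      <!-- "consistent-hash-routing" is a hypothetical name -->
      <connection-router name="consistent-hash-routing">
        <!-- filter on the client ID of each incoming connection -->
        <key-type>CLIENT_ID</key-type>
        <!-- accept connections with no client ID, or with a modulo result of 0 -->
        <local-target-filter>NULL|0</local-target-filter>
        <policy name="CONSISTENT_HASH_MODULO">
          <!-- must equal the number of brokers in the cluster (assumed to be 2) -->
          <property key="modulo" value="2"/>
        </policy>
      </connection-router>
    </connection-routers>
  </core>
</configuration>
```

With a modulo of 2, every hashed client ID maps to either 0 or 1, so the second broker in this assumed cluster would use a `local-target-filter` of `NULL|1`.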

- Open the `<broker_instance_dir>/etc/broker.xml` configuration file for the second broker.

- Create a `connection-routers` element and create a `connection-route` to filter client IDs by using a consistent hashing algorithm. For the second broker, a `local-target-filter` value of `NULL|1` provides a match for connections that have no client ID (`NULL`) and connections where the value returned by the modulo operation is `1`.

- Repeat this procedure to create a consistent hash filter for each additional broker in the cluster.

14.7.1.2. Filtering client IDs using a regular expression

You can partition client connections by configuring brokers to apply a regular expression filter to a part of the client ID in client connections. A broker only accepts a connection if the result of the regular expression filter matches the local target filter configured for the broker. If a match is not found, the broker rejects the connection. This process is repeated on each broker in the cluster until a match is found and a broker accepts the connection.

Prerequisites

- A common string in each client ID that can be filtered by a regular expression.

Procedure

- Open the `<broker_instance_dir>/etc/broker.xml` configuration file for the first broker.

- Create a `connection-routers` element and create a `connection-route` to filter part of the client ID. Configure the following settings:

  - `connection-route`: For the `connection-route name`, specify an identifying string for this routing configuration. You must add this name to each broker acceptor that you want to apply the regular expression filter to.

  - `key`: The key to apply the filter to. To filter the client ID, specify `CLIENT_ID` in the `key` field.

  - `key-filter`: The part of the client ID string to which the regular expression is applied to extract a key value. For example, the first broker can use a regular expression that extracts the first 3 characters of the client ID as the key value. If the client ID string is `CL100.consumer`, the broker extracts a key value of `CL1`. After the broker extracts the key value, it compares it to the value of the `local-target-filter`.

    If an incoming connection does not have a client ID, or if the broker is unable to extract a key value by using the regular expression specified for the `key-filter`, the key value is set to `NULL`.

  - `local-target-filter`: The value that the broker compares to the key value to determine if there is a match and the broker can accept the connection. For the first broker, a value of `NULL|CL1` matches connections that have no client ID (`NULL`) or have a 3-character prefix of `CL1` in the client ID.
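A regular expression filter of this kind for the first broker might look like the following fragment. This is a sketch under assumptions. The element names `connection-router` and `key-type` follow the upstream Apache ActiveMQ Artemis schema as an assumption and differ slightly from the `connection-route` and `key` names used in this section, so verify them against your broker version. The name `regex-routing` is hypothetical, and the expression `^.{3}` is one possible way to extract the first 3 characters of the client ID.

```xml
<configuration>
  <core>
    <!-- other broker settings omitted -->
    <connection-routers>
      <!-- "regex-routing" is a hypothetical name -->
      <connection-router name="regex-routing">
        <!-- filter on the client ID of each incoming connection -->
        <key-type>CLIENT_ID</key-type>
        <!-- extract the first 3 characters, for example CL1 from CL100.consumer -->
        <key-filter>^.{3}</key-filter>
        <!-- accept connections with no client ID, or with a key value of CL1 -->
        <local-target-filter>NULL|CL1</local-target-filter>
      </connection-router>
    </connection-routers>
  </core>
</configuration>
```

Under the same assumptions, the second broker would keep the `key-filter` and change only the `local-target-filter`, for example to `NULL|CL2`.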

- Open the `<broker_instance_dir>/etc/broker.xml` configuration file for the second broker.

- Create a `connection-routers` element and create a `connection-route` to filter connections based on a part of the client ID. For the second broker, the broker uses a regular expression to extract a key value that is the first 3 characters of the client ID. The broker compares the values of `NULL` and `CL2` to the key value to determine if there is a match and the broker can accept the connection.

- Repeat this procedure and create the appropriate connection routing filter for each additional broker in the cluster.

14.7.2. Partitioning client connections to support data gravity

To support data gravity, you can partition client connections so that client consumers connect to the broker where the messages that they need to consume are produced. For example, if you have a set of addresses that are used by producer and consumer applications, you can configure the addresses on a specific broker. You can then partition client connections for both producers and consumers that use those addresses so they can only connect to that broker.

You can partition client connections based on attributes such as the role assigned to the connecting user, the username of the user, or the hostname or IP address of the client. This section shows an example of how to partition client connections by filtering user roles assigned to client users. If clients are required to authenticate to connect to brokers, you can assign roles to client users and filter connections so only users that match the role criteria can connect to a broker.

Prerequisites

- Clients are configured so they can connect to all of the brokers in the cluster, for example, by using a load balancer or by having all of the broker instances configured in the connection URL. If a broker rejects a connection because the client does not match the partitioning filter criteria configured for that broker, the client must be able to connect to the other brokers in the cluster to find a broker that accepts connections from it.

Procedure

- Open the `<broker_instance_dir>/etc/artemis-roles.properties` file for the first broker. Add a `broker1users` role and add users to the role.

- Open the `<broker_instance_dir>/etc/broker.xml` configuration file for the first broker.

- Create a `connection-routers` element and create a `connection-route` to filter connections based on the roles assigned to users. Configure the following settings:

  - `connection-route`: For the `connection-route name`, specify an identifying string for this routing configuration. You must add this name to each broker acceptor that you want to apply the role filter to.

  - `key`: The key to apply the filter to. To configure role-based filtering, specify `ROLE_NAME` in the `key` field.

  - `key-filter`: