Chapter 5. Advanced configuration

As a storage administrator, you can configure some of the more advanced features of the Ceph Object Gateway. You can configure a multisite Ceph Object Gateway and integrate it with directory services, such as Microsoft Active Directory and OpenStack Keystone service.

5.1. Prerequisites

- A healthy running Red Hat Ceph Storage cluster.

5.2. Multi-site configuration and administration

As a storage administrator, you can configure and administer multiple Ceph Object Gateways for a variety of use cases. You can learn what to do during disaster recovery and failover events. You can also learn more about realms, zones, and syncing policies in multi-site Ceph Object Gateway environments.

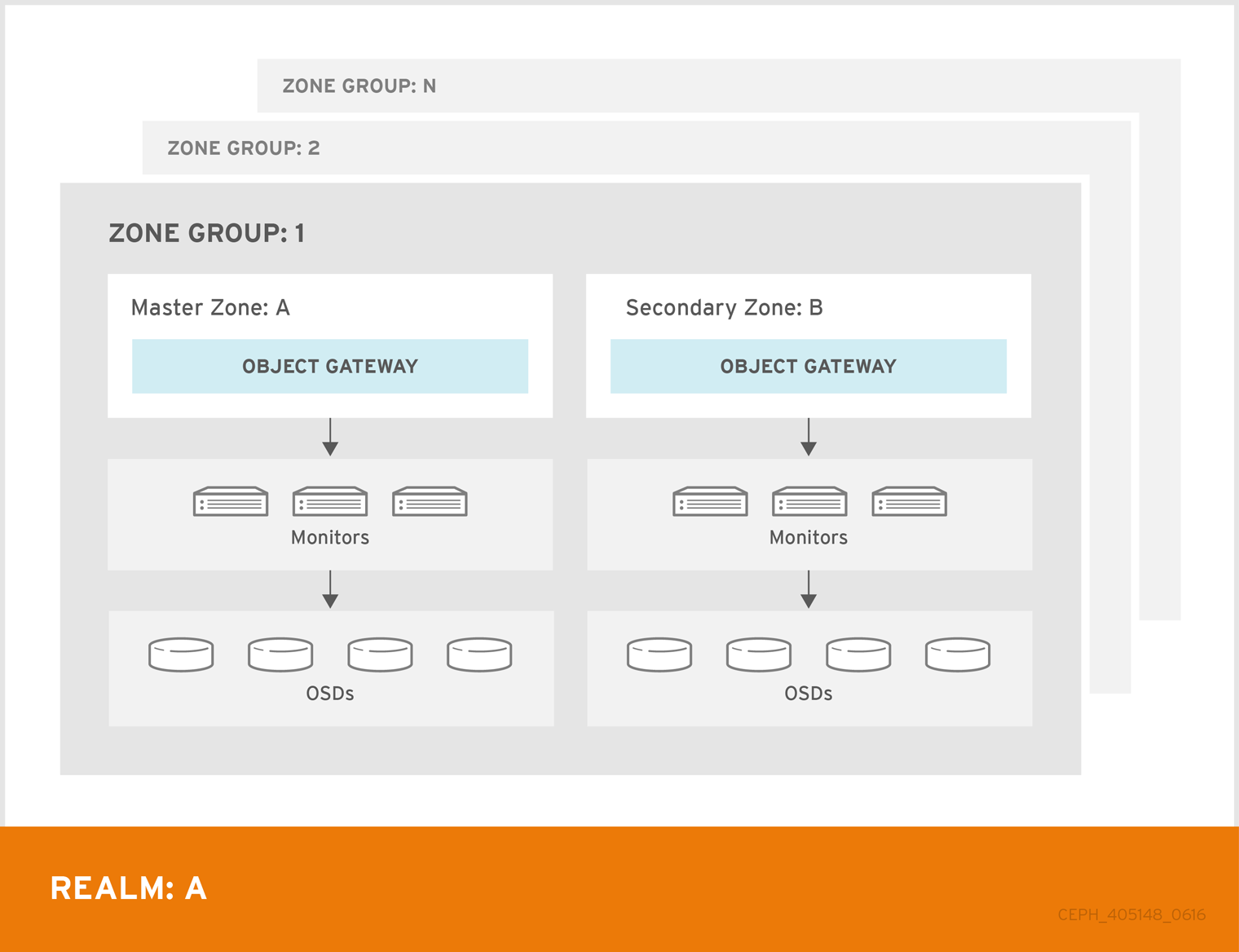

A single zone configuration typically consists of one zone group containing one zone and one or more ceph-radosgw instances where you may load-balance gateway client requests between the instances. In a single zone configuration, typically multiple gateway instances point to a single Ceph storage cluster. However, Red Hat supports several multi-site configuration options for the Ceph Object Gateway:

- Multi-zone: A more advanced configuration consists of one zone group and multiple zones, each zone with one or more ceph-radosgw instances. Each zone is backed by its own Ceph Storage Cluster. Multiple zones in a zone group provide disaster recovery for the zone group should one of the zones experience a significant failure. Each zone is active and may receive write operations. In addition to disaster recovery, multiple active zones may also serve as a foundation for content delivery networks.

- Multi-zone-group: Formerly called 'regions', the Ceph Object Gateway can also support multiple zone groups, each zone group with one or more zones. Objects stored to zone groups within the same realm share a global namespace, ensuring unique object IDs across zone groups and zones.

- Multiple Realms: The Ceph Object Gateway supports the notion of realms, which can be a single zone group or multiple zone groups and a globally unique namespace for the realm. Multiple realms provide the ability to support numerous configurations and namespaces.

Prerequisites

- A healthy running Red Hat Ceph Storage cluster.

- Deployment of the Ceph Object Gateway software.

5.2.1. Requirements and Assumptions

A multi-site configuration requires at least two Ceph storage clusters and at least two Ceph Object Gateway instances, one for each Ceph storage cluster.

This guide assumes at least two Ceph storage clusters in geographically separate locations; however, the configuration can work on the same physical site. This guide also assumes four Ceph object gateway servers named rgw1, rgw2, rgw3 and rgw4 respectively.

A multi-site configuration requires a master zone group and a master zone. Additionally, each zone group requires a master zone. Zone groups may have one or more secondary or non-master zones.

When planning the network for multi-site, it is important to understand the relationship between the bandwidth and latency observed on the multi-site synchronization network and the client ingest rate, because together they determine the current sync state of the objects owed to the secondary site. The network link between Red Hat Ceph Storage multi-site clusters must be able to handle the ingest into the primary cluster to maintain an effective recovery time on the secondary site. Multi-site synchronization is asynchronous, and one of its limitations is the rate at which the sync gateways can process data across the link. As an example of network interconnect speed, consider 1 GbE of inter-datacenter connectivity for every 8 TB of cumulative received data, per client gateway. Thus, if you replicate to two other sites, and ingest 16 TB a day, you need 6 GbE of dedicated bandwidth for multi-site replication.

Red Hat also recommends private Ethernet or Dense wavelength-division multiplexing (DWDM), because a VPN over the internet is not ideal due to the additional overhead incurred.
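The sizing rule above can be turned into a quick estimate. The following is a sketch of one possible reading, not an official formula: it assumes 1 GbE per 8 TB of daily ingest, rounded up, and it assumes the 6 GbE figure comes from counting three sites (the primary plus the two replication targets). The function name replication_gbe and its parameters are hypothetical.

```shell
#!/bin/sh
# Rough estimate of dedicated replication bandwidth, in GbE.
# Assumption: 1 GbE per 8 TB ingested per day, multiplied by the
# number of sites counted in the estimate (an interpretation of the text).
replication_gbe() {
    ingest_tb_per_day=$1   # daily ingest in TB
    sites=$2               # number of sites counted in the estimate
    tb_per_gbe=8           # rule of thumb taken from the text
    # Integer ceiling division, so a partially used link rounds up.
    per_site=$(( (ingest_tb_per_day + tb_per_gbe - 1) / tb_per_gbe ))
    echo $(( per_site * sites ))
}

# 16 TB a day, counting the primary and two replication targets.
replication_gbe 16 3
```

With these assumptions the call prints 6, which matches the figure in the text. Treat the result as a starting point for capacity planning rather than a guarantee.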

The master zone within the master zone group of a realm is responsible for storing the master copy of the realm’s metadata, including users, quotas and buckets (created by the radosgw-admin CLI). This metadata gets synchronized to secondary zones and secondary zone groups automatically. Metadata operations executed with the radosgw-admin CLI MUST be executed on a host within the master zone of the master zone group in order to ensure that they get synchronized to the secondary zone groups and zones. Currently, it is possible to execute metadata operations on secondary zones and zone groups, but it is NOT recommended because they WILL NOT be synchronized, leading to fragmented metadata.

In the following examples, the rgw1 host will serve as the master zone of the master zone group; the rgw2 host will serve as the secondary zone of the master zone group; the rgw3 host will serve as the master zone of the secondary zone group; and the rgw4 host will serve as the secondary zone of the secondary zone group.

When a large multi-site storage cluster has many Ceph Object Gateways configured, Red Hat recommends dedicating no more than three sync-enabled Ceph Object Gateways, behind an HAProxy load balancer, per site for multi-site synchronization. With more than three syncing Ceph Object Gateways, the sync rate shows diminishing returns, and the increased contention raises the risk of hitting timing-related error conditions. This is due to a known sync-fairness issue, BZ#1740782.

For the rest of the Ceph Object Gateways in such a configuration, which are dedicated for client I/O operations through load balancers, run the ceph config set client.rgw.CLIENT_NODE rgw_run_sync_thread false command to prevent them from performing sync operations, and then restart the Ceph Object Gateway.
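A minimal sketch of how the command above might be applied to several client-facing gateways at once. It only prints the commands so that they can be reviewed first. The gateway names client-gw1 and client-gw2 and the function name print_disable_sync are hypothetical stand-ins for CLIENT_NODE.

```shell
#!/bin/sh
# Print (do not run) the command that disables the sync thread
# for each client-facing gateway name given as an argument.
print_disable_sync() {
    for node in "$@"; do
        echo "ceph config set client.rgw.${node} rgw_run_sync_thread false"
    done
}

# Hypothetical gateway names; replace them with your own CLIENT_NODE values.
print_disable_sync client-gw1 client-gw2
```

After running the printed commands, restart each affected Ceph Object Gateway so that the setting takes effect, as described above.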

Following is a typical configuration file for HAProxy for syncing gateways:

Example

5.2.2. Pools

Red Hat recommends using the Ceph Placement Group’s per Pool Calculator to calculate a suitable number of placement groups for the pools the radosgw daemon will create. Set the calculated values as defaults in the Ceph configuration database.

Example

[ceph: root@host01 /]# ceph config set osd osd_pool_default_pg_num 50
[ceph: root@host01 /]# ceph config set osd osd_pool_default_pgp_num 50

Making this change to the Ceph configuration will use those defaults when the Ceph Object Gateway instance creates the pools. Alternatively, you can create the pools manually.

Pool names particular to a zone follow the naming convention ZONE_NAME.POOL_NAME. For example, a zone named us-east will have the following pools:

- .rgw.root
- us-east.rgw.control
- us-east.rgw.meta
- us-east.rgw.log
- us-east.rgw.buckets.index
- us-east.rgw.buckets.data
- us-east.rgw.buckets.non-ec
- us-east.rgw.meta:users.keys
- us-east.rgw.meta:users.email
- us-east.rgw.meta:users.swift
- us-east.rgw.meta:users.uid
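The naming convention can be sketched as a small helper that lists the pool names for a given zone. This is an illustration only: the function name zone_pools is hypothetical, and the list covers the pools named above without the namespaced users entries.

```shell
#!/bin/sh
# List the pool names that follow the ZONE_NAME.POOL_NAME convention.
zone_pools() {
    zone=$1
    # .rgw.root appears in the list above without a zone prefix.
    echo ".rgw.root"
    for suffix in rgw.control rgw.meta rgw.log \
                  rgw.buckets.index rgw.buckets.data rgw.buckets.non-ec; do
        echo "${zone}.${suffix}"
    done
}

zone_pools us-east
```

Running the helper with a different zone name, for example us-west, shows which pools to expect if you create them manually.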

5.2.3. Migrating a single site system to multi-site

To migrate from a single site system with a default zone group and zone to a multi-site system, use the following steps:

Create a realm. Replace NAME with the realm name.

Syntax

radosgw-admin realm create --rgw-realm=NAME --default

Rename the default zone and zone group. Replace NEW_ZONE_GROUP_NAME with the new zone group name. In the second command, ZONE_GROUP_NAME is the zone group that contains the zone, and us-east-1 is the new zone name.

Syntax

radosgw-admin zonegroup rename --rgw-zonegroup default --zonegroup-new-name=NEW_ZONE_GROUP_NAME
radosgw-admin zone rename --rgw-zone default --zone-new-name us-east-1 --rgw-zonegroup=ZONE_GROUP_NAME

Configure the primary zone group. Replace REALM_NAME and ZONE_GROUP_NAME with the realm and zone group names. Replace FQDN with the fully qualified domain name(s) in the zone group.

Syntax

radosgw-admin zonegroup modify --rgw-realm=REALM_NAME --rgw-zonegroup=ZONE_GROUP_NAME --endpoints http://FQDN:80 --master --default

Create a system user. Replace USER_ID with the username. Replace DISPLAY_NAME with a display name. It can contain spaces.

Syntax

radosgw-admin user create --uid=USER_ID \
    --display-name="DISPLAY_NAME" \
    --access-key=ACCESS_KEY --secret=SECRET_KEY \
    --system

Configure the primary zone. Replace REALM_NAME, ZONE_GROUP_NAME, and ZONE_NAME with the realm, zone group, and zone names. Replace FQDN with the fully qualified domain name(s) in the zone group.

Syntax

radosgw-admin zone modify --rgw-realm=REALM_NAME --rgw-zonegroup=ZONE_GROUP_NAME \
    --rgw-zone=ZONE_NAME --endpoints http://FQDN:80 \
    --access-key=ACCESS_KEY --secret=SECRET_KEY \
    --master --default

Optional: If you specified the realm and zone in the service specification during the deployment of the Ceph Object Gateway, update the spec section of the specification file:

Syntax

spec:
  rgw_realm: REALM_NAME
  rgw_zone: ZONE_NAME

Update the Ceph configuration database:

Syntax

ceph config set client.rgw.SERVICE_NAME rgw_realm REALM_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zonegroup ZONE_GROUP_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zone PRIMARY_ZONE_NAME

Example

[ceph: root@host01 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_realm test_realm
[ceph: root@host01 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zonegroup us
[ceph: root@host01 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zone us-east-1

Commit the updated configuration:

Example

[ceph: root@host01 /]# radosgw-admin period update --commit

Restart the Ceph Object Gateway:

Note: Use the output from the ceph orch ps command, under the NAME column, to get the SERVICE_TYPE.ID information.

To restart the Ceph Object Gateway on an individual node in the storage cluster:

Syntax

systemctl restart ceph-CLUSTER_ID@SERVICE_TYPE.ID.service

Example

[root@host01 ~]# systemctl restart ceph-c4b34c6f-8365-11ba-dc31-529020a7702d@rgw.realm.zone.host01.gwasto.service

To restart the Ceph Object Gateways on all nodes in the storage cluster:

Syntax

ceph orch restart SERVICE_TYPE

Example

[ceph: root@host01 /]# ceph orch restart rgw

- Establish the secondary zone. See the Establishing a secondary zone section.

5.2.4. Establishing a secondary zone

Zones within a zone group replicate all data to ensure that each zone has the same data. When creating the secondary zone, issue ALL of the radosgw-admin zone operations on a host identified to serve the secondary zone.

To add additional zones, follow the same procedures as for adding the secondary zone. Use a different zone name.

- You must run metadata operations, such as user creation and quotas, on a host within the master zone of the master zone group. The master zone and the secondary zone can receive bucket operations from the RESTful APIs, but the secondary zone redirects bucket operations to the master zone. If the master zone is down, bucket operations will fail. If you create a bucket using the radosgw-admin CLI, you must run it on a host within the master zone of the master zone group so that the buckets will synchronize with other zone groups and zones.

- Bucket creation for a particular user is not supported, even if you create a user in the secondary zone with --yes-i-really-mean-it.

Prerequisites

- At least two running Red Hat Ceph Storage clusters.

- At least two Ceph Object Gateway instances, one for each Red Hat Ceph Storage cluster.

- Root-level access to all the nodes.

- Nodes or containers are added to the storage cluster.

- All Ceph Manager, Monitor, and OSD daemons are deployed.

Procedure

Log into the cephadm shell:

Example

[root@host04 ~]# cephadm shell

Pull the primary realm configuration from the host:

Syntax

radosgw-admin realm pull --url=URL_TO_PRIMARY_ZONE_GATEWAY --access-key=ACCESS_KEY --secret-key=SECRET_KEY

Example

[ceph: root@host04 /]# radosgw-admin realm pull --url=http://10.74.249.26:80 --access-key=LIPEYZJLTWXRKXS9LPJC --secret-key=IsAje0AVDNXNw48LjMAimpCpI7VaxJYSnfD0FFKQ

Pull the primary period configuration from the host:

Syntax

radosgw-admin period pull --url=URL_TO_PRIMARY_ZONE_GATEWAY --access-key=ACCESS_KEY --secret-key=SECRET_KEY

Example

[ceph: root@host04 /]# radosgw-admin period pull --url=http://10.74.249.26:80 --access-key=LIPEYZJLTWXRKXS9LPJC --secret-key=IsAje0AVDNXNw48LjMAimpCpI7VaxJYSnfD0FFKQ

Configure a secondary zone:

Note: All zones run in an active-active configuration by default; that is, a gateway client might write data to any zone and the zone will replicate the data to all other zones within the zone group. If the secondary zone should not accept write operations, specify the --read-only flag to create an active-passive configuration between the master zone and the secondary zone. Additionally, provide the access_key and secret_key of the generated system user stored in the master zone of the master zone group.

Syntax

radosgw-admin zone create --rgw-zonegroup=ZONE_GROUP_NAME \
    --rgw-zone=SECONDARY_ZONE_NAME --endpoints=http://RGW_SECONDARY_HOSTNAME:RGW_PRIMARY_PORT_NUMBER_1 \
    --access-key=SYSTEM_ACCESS_KEY --secret=SYSTEM_SECRET_KEY \
    [--read-only]

Example

[ceph: root@host04 /]# radosgw-admin zone create --rgw-zonegroup=us --rgw-zone=us-east-2 --endpoints=http://rgw2:80 --access-key=LIPEYZJLTWXRKXS9LPJC --secret-key=IsAje0AVDNXNw48LjMAimpCpI7VaxJYSnfD0FFKQ

Optional: Delete the default zone:

Important: Do not delete the default zone and its pools if you are using the default zone and zone group to store data.

Example

Optional: If you specified the realm and zone in the service specification during the deployment of the Ceph Object Gateway, update the spec section of the specification file:

Syntax

spec:
  rgw_realm: REALM_NAME
  rgw_zone: ZONE_NAME

Update the Ceph configuration database:

Syntax

ceph config set client.rgw.SERVICE_NAME rgw_realm REALM_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zonegroup ZONE_GROUP_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zone SECONDARY_ZONE_NAME

Example

[ceph: root@host04 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_realm test_realm
[ceph: root@host04 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zonegroup us
[ceph: root@host04 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zone us-east-2

Commit the changes:

Syntax

radosgw-admin period update --commit

Example

[ceph: root@host04 /]# radosgw-admin period update --commit

Outside the cephadm shell, fetch the FSID of the storage cluster and the processes:

Example

[root@host04 ~]# systemctl list-units | grep ceph

Start the Ceph Object Gateway daemon:

Syntax

systemctl start ceph-FSID@DAEMON_NAME
systemctl enable ceph-FSID@DAEMON_NAME

Example

[root@host04 ~]# systemctl start ceph-62a081a6-88aa-11eb-a367-001a4a000672@rgw.test_realm.us-east-2.host04.ahdtsw.service
[root@host04 ~]# systemctl enable ceph-62a081a6-88aa-11eb-a367-001a4a000672@rgw.test_realm.us-east-2.host04.ahdtsw.service
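The systemd unit name used in the last step combines the cluster FSID and the daemon name. A small sketch of that composition follows, assuming the ceph-FSID@DAEMON_NAME.service pattern shown in the example; the function name rgw_unit_name is hypothetical.

```shell
#!/bin/sh
# Build the systemd unit name for a Ceph daemon from the cluster FSID
# and the daemon name, following the pattern in the example.
rgw_unit_name() {
    fsid=$1
    daemon=$2
    echo "ceph-${fsid}@${daemon}.service"
}

# Values taken from the example above.
rgw_unit_name 62a081a6-88aa-11eb-a367-001a4a000672 rgw.test_realm.us-east-2.host04.ahdtsw
```

The printed name can then be passed to systemctl start and systemctl enable on the host that runs the gateway.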

5.2.5. Configuring the archive zone (Technology Preview)

Ensure you have a realm before configuring a zone as an archive. Without a realm, you cannot archive data through an archive zone for default zone/zonegroups.

The archive sync module uses the versioning feature of S3 objects in Ceph Object Gateway to have an archive zone. The archive zone has a history of versions of S3 objects that can only be eliminated through the gateways that are associated with the archive zone. It captures all the data updates and metadata to consolidate them as versions of S3 objects.

The archive sync module is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs), might not be functionally complete, and Red Hat does not recommend using them for production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. See the support scope for Red Hat Technology Preview features for more details.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to a Ceph Monitor node.

- Installation of the Ceph Object Gateway software.

Procedure

Configure the archive zone when creating a new zone by using the archive tier:

Syntax

radosgw-admin zone create --rgw-zonegroup={ZONE_GROUP_NAME} --rgw-zone={ZONE_NAME} --endpoints={http://FQDN:PORT},{http://FQDN:PORT} --tier-type=archive

Example

[ceph: root@host01 /]# radosgw-admin zone create --rgw-zonegroup=us --rgw-zone=us-east --endpoints={http://example.com:8080} --tier-type=archive

5.2.5.1. Deleting objects in archive zone

You can use an S3 lifecycle policy extension to delete objects within an <ArchiveZone> element.

Archive zone objects can only be deleted using the expiration lifecycle policy rule.

- If any <Rule> section contains an <ArchiveZone> element, that rule executes in the archive zone, and such rules are the ONLY rules that run in an archive zone.

- Rules marked <ArchiveZone> do NOT execute in non-archive zones.

The rules within the lifecycle policy determine when and what objects to delete. For more information about lifecycle creation and management, see Bucket lifecycle.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to a Ceph Monitor node.

- Installation of the Ceph Object Gateway software.

Procedure

Set the <ArchiveZone> lifecycle policy rule. For more information about creating a lifecycle policy, see the Creating a lifecycle management policy section in the Red Hat Ceph Storage Object Gateway Guide.

Example

Optional: See if a specific lifecycle policy contains an archive zone rule.

Syntax

radosgw-admin lc get --bucket BUCKET_NAME

Example

If the Ceph Object Gateway user is deleted, the buckets at the archive site owned by that user are inaccessible. Link those buckets to another Ceph Object Gateway user to access the data.

Syntax

radosgw-admin bucket link --uid NEW_USER_ID --bucket BUCKET_NAME --yes-i-really-mean-it

Example

[ceph: root@host01 /]# radosgw-admin bucket link --uid arcuser1 --bucket arc1-deleted-da473fbbaded232dc5d1e434675c1068 --yes-i-really-mean-it

5.2.5.2. Deleting objects in archive module

Starting with Red Hat Ceph Storage 5.3, you can use an S3 lifecycle policy extension to delete objects within an <ArchiveZone> element.

- If any <Rule> section contains an <ArchiveZone> element, that rule executes in the archive zone, and such rules are the ONLY rules that run in an archive zone.

- Rules marked <ArchiveZone> do NOT execute in non-archive zones.

The rules within the lifecycle policy determine when and what objects to delete. For more information about lifecycle creation and management, see Bucket lifecycle.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to a Ceph Monitor node.

- Installation of the Ceph Object Gateway software.

Procedure

Set the <ArchiveZone> lifecycle policy rule. For more information about creating a lifecycle policy, see the Creating a lifecycle management policy section in the Red Hat Ceph Storage Object Gateway Guide.

Example

Optional: See if a specific lifecycle policy contains an archive zone rule.

Syntax

radosgw-admin lc get --bucket BUCKET_NAME

Example

5.2.6. Failover and disaster recovery

If the primary zone fails, fail over to the secondary zone for disaster recovery.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to a Ceph Monitor node.

- Installation of the Ceph Object Gateway software.

Procedure

Make the secondary zone the primary and default zone. For example:

Syntax

radosgw-admin zone modify --rgw-zone=ZONE_NAME --master --default

By default, Ceph Object Gateway runs in an active-active configuration. If the cluster was configured to run in an active-passive configuration, the secondary zone is a read-only zone. Remove the --read-only status to allow the zone to receive write operations. For example:

Syntax

radosgw-admin zone modify --rgw-zone=ZONE_NAME --master --default --read-only=false

Update the period to make the changes take effect:

Example

[ceph: root@host01 /]# radosgw-admin period update --commit

Restart the Ceph Object Gateway.

Note: Use the output from the ceph orch ps command, under the NAME column, to get the SERVICE_TYPE.ID information.

To restart the Ceph Object Gateway on an individual node in the storage cluster:

Syntax

systemctl restart ceph-CLUSTER_ID@SERVICE_TYPE.ID.service

Example

[root@host01 ~]# systemctl restart ceph-c4b34c6f-8365-11ba-dc31-529020a7702d@rgw.realm.zone.host01.gwasto.service

To restart the Ceph Object Gateways on all nodes in the storage cluster:

Syntax

ceph orch restart SERVICE_TYPE

Example

[ceph: root@host01 /]# ceph orch restart rgw

If the former primary zone recovers, revert the operation.

From the recovered zone, pull the realm from the current primary zone:

Syntax

radosgw-admin realm pull --url=URL_TO_PRIMARY_ZONE_GATEWAY \
    --access-key=ACCESS_KEY --secret=SECRET_KEY

Make the recovered zone the primary and default zone:

Syntax

radosgw-admin zone modify --rgw-zone=ZONE_NAME --master --default

Update the period to make the changes take effect:

Example

[ceph: root@host01 /]# radosgw-admin period update --commit

Restart the Ceph Object Gateway in the recovered zone:

Syntax

ceph orch restart SERVICE_TYPE

Example

[ceph: root@host01 /]# ceph orch restart rgw

If the secondary zone needs to be a read-only configuration, update the secondary zone:

Syntax

radosgw-admin zone modify --rgw-zone=ZONE_NAME --read-only

Update the period to make the changes take effect:

Example

[ceph: root@host01 /]# radosgw-admin period update --commit

Restart the Ceph Object Gateway in the secondary zone:

Syntax

ceph orch restart SERVICE_TYPE

Example

[ceph: root@host01 /]# ceph orch restart rgw
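The promotion steps at the start of this procedure can be collected into a short dry-run helper. This is a sketch only: it prints the commands instead of running them, the function name print_failover and its mode argument are hypothetical, and it assumes the gateways are restarted with ceph orch restart rgw as in the examples.

```shell
#!/bin/sh
# Print (do not run) the commands that promote a secondary zone to primary.
# Usage: print_failover ZONE_NAME [active-active|active-passive]
print_failover() {
    zone=$1
    mode=${2:-active-active}
    cmd="radosgw-admin zone modify --rgw-zone=${zone} --master --default"
    # In an active-passive setup the secondary zone is read-only,
    # so the read-only status is removed during promotion.
    if [ "$mode" = "active-passive" ]; then
        cmd="${cmd} --read-only=false"
    fi
    echo "$cmd"
    echo "radosgw-admin period update --commit"
    echo "ceph orch restart rgw"
}

print_failover us-east-2 active-passive
```

Reviewing the printed commands before running them inside the cephadm shell helps avoid promoting the wrong zone during an incident.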

5.2.7. Synchronizing multi-site data logs

By default, in Red Hat Ceph Storage 4 and earlier versions, multi-site data logging is set to object map (OMAP) data logs.

Red Hat recommends using the default data log type.

You do not have to synchronize and trim everything down when switching. The Red Hat Ceph Storage cluster starts a data log of the requested type when you run the radosgw-admin datalog type command, and continues synchronizing and trimming the old log, purging it when it is empty, before moving to the new log.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Ceph Object Gateway multi-site installed.

- Root-level access on all the nodes.

Procedure

View the type of data log:

Example

[root@host01 ~]# radosgw-admin datalog status
{
    "marker": "1_1657793517.559260_543389.1",
    "last_update": "2022-07-14 10:11:57.559260Z"
},

The 1_ prefix in the marker reflects the OMAP data log type.

Change the data log type to FIFO:

Note: Configuration values are case-sensitive. Use fifo in lowercase to set configuration options.

Note: After upgrading from Red Hat Ceph Storage 4 to Red Hat Ceph Storage 5, change the default data log type to fifo.

Example

[root@host01 ~]# radosgw-admin --log-type fifo datalog type

Verify the changes:

Example

[root@host01 ~]# radosgw-admin datalog status
{
    "marker": "G00000000000000000001@00000000000000000037:00000000000003563105",
    "last_update": "2022-07-14T10:14:07.516629Z"
},

The : in the marker reflects the FIFO data log type.
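The two marker cues described in this procedure can be checked with a small helper. This is a heuristic sketch based only on those two cues (a 1_ prefix for OMAP, a colon for FIFO); the function name datalog_type is hypothetical, and other marker formats are reported as unknown.

```shell
#!/bin/sh
# Guess the data log type from a marker string taken from
# the output of "radosgw-admin datalog status".
datalog_type() {
    marker=$1
    case "$marker" in
        1_*) echo "omap" ;;      # markers starting with 1_ indicate OMAP
        *:*) echo "fifo" ;;      # markers containing a colon indicate FIFO
        *)   echo "unknown" ;;   # anything else is not covered by the text
    esac
}

# Markers taken from the example output in this section.
datalog_type "1_1657793517.559260_543389.1"
datalog_type "G00000000000000000001@00000000000000000037:00000000000003563105"
```

For the two sample markers, the helper prints omap and then fifo.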

5.2.8. Configuring multiple realms in the same storage cluster

You can configure multiple realms in the same storage cluster. This is a more advanced use case for multi-site. Configuring multiple realms in the same storage cluster enables you to use a local realm to handle local Ceph Object Gateway client traffic, as well as a replicated realm for data that will be replicated to a secondary site.

Red Hat recommends that each realm has its own Ceph Object Gateway.

Prerequisites

- Two running Red Hat Ceph Storage data centers in a storage cluster.

- The access key and secret key for each data center in the storage cluster.

- Root-level access to all the Ceph Object Gateway nodes.

- Each data center has its own local realm. They share a realm that replicates on both sites.

Procedure

Create one local realm on the first data center in the storage cluster:

Syntax

radosgw-admin realm create --rgw-realm=REALM_NAME --default

Example

[ceph: root@host01 /]# radosgw-admin realm create --rgw-realm=ldc1 --default

Create one local master zonegroup on the first data center:

Syntax

radosgw-admin zonegroup create --rgw-zonegroup=ZONE_GROUP_NAME --endpoints=http://RGW_NODE_NAME:80 --rgw-realm=REALM_NAME --master --default

Example

[ceph: root@host01 /]# radosgw-admin zonegroup create --rgw-zonegroup=ldc1zg --endpoints=http://rgw1:80 --rgw-realm=ldc1 --master --default

Create one local zone on the first data center:

Syntax

radosgw-admin zone create --rgw-zonegroup=ZONE_GROUP_NAME --rgw-zone=ZONE_NAME --master --default --endpoints=HTTP_FQDN[,HTTP_FQDN]

Example

[ceph: root@host01 /]# radosgw-admin zone create --rgw-zonegroup=ldc1zg --rgw-zone=ldc1z --master --default --endpoints=http://rgw.example.com

Commit the period:

Example

[ceph: root@host01 /]# radosgw-admin period update --commit

Optional: If you specified the realm and zone in the service specification during the deployment of the Ceph Object Gateway, update the spec section of the specification file:

Syntax

spec:
  rgw_realm: REALM_NAME
  rgw_zone: ZONE_NAME

You can either deploy the Ceph Object Gateway daemons with the appropriate realm and zone or update the configuration database:

Deploy the Ceph Object Gateway using placement specification:

Syntax

ceph orch apply rgw SERVICE_NAME --realm=REALM_NAME --zone=ZONE_NAME --placement="NUMBER_OF_DAEMONS HOST_NAME_1 HOST_NAME_2"

Example

[ceph: root@host01 /]# ceph orch apply rgw rgw --realm=ldc1 --zone=ldc1z --placement="1 host01"

Update the Ceph configuration database:

Syntax

ceph config set client.rgw.SERVICE_NAME rgw_realm REALM_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zonegroup ZONE_GROUP_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zone ZONE_NAME

Example

[ceph: root@host01 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_realm ldc1
[ceph: root@host01 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zonegroup ldc1zg
[ceph: root@host01 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zone ldc1z

Restart the Ceph Object Gateway.

Note

Use the output from the ceph orch ps command, under the NAME column, to get the SERVICE_TYPE.ID information.

To restart the Ceph Object Gateway on an individual node in the storage cluster:

Syntax

systemctl restart ceph-CLUSTER_ID@SERVICE_TYPE.ID.service

Example

[root@host01 ~]# systemctl restart ceph-c4b34c6f-8365-11ba-dc31-529020a7702d@rgw.realm.zone.host01.gwasto.service

To restart the Ceph Object Gateways on all nodes in the storage cluster:

Syntax

ceph orch restart SERVICE_TYPE

Example

[ceph: root@host01 /]# ceph orch restart rgw


Create one local realm on the second data center in the storage cluster:

Syntax

radosgw-admin realm create --rgw-realm=REALM_NAME --default

Example

[ceph: root@host04 /]# radosgw-admin realm create --rgw-realm=ldc2 --default

Create one local master zonegroup on the second data center:

Syntax

radosgw-admin zonegroup create --rgw-zonegroup=ZONE_GROUP_NAME --endpoints=http://RGW_NODE_NAME:80 --rgw-realm=REALM_NAME --master --default

Example

[ceph: root@host04 /]# radosgw-admin zonegroup create --rgw-zonegroup=ldc2zg --endpoints=http://rgw2:80 --rgw-realm=ldc2 --master --default

Create one local zone on the second data center:

Syntax

radosgw-admin zone create --rgw-zonegroup=ZONE_GROUP_NAME --rgw-zone=ZONE_NAME --master --default --endpoints=HTTP_FQDN[,HTTP_FQDN]

Example

[ceph: root@host04 /]# radosgw-admin zone create --rgw-zonegroup=ldc2zg --rgw-zone=ldc2z --master --default --endpoints=http://rgw.example.com

Commit the period:

Example

[ceph: root@host04 /]# radosgw-admin period update --commit

Optional: If you specified the realm and zone in the service specification during the deployment of the Ceph Object Gateway, update the spec section of the specification file:

Syntax

spec:
  rgw_realm: REALM_NAME
  rgw_zone: ZONE_NAME

You can either deploy the Ceph Object Gateway daemons with the appropriate realm and zone or update the configuration database:

Deploy the Ceph Object Gateway using placement specification:

Syntax

ceph orch apply rgw SERVICE_NAME --realm=REALM_NAME --zone=ZONE_NAME --placement="NUMBER_OF_DAEMONS HOST_NAME_1 HOST_NAME_2"

Example

[ceph: root@host04 /]# ceph orch apply rgw rgw --realm=ldc2 --zone=ldc2z --placement="1 host04"

Update the Ceph configuration database:

Syntax

ceph config set client.rgw.SERVICE_NAME rgw_realm REALM_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zonegroup ZONE_GROUP_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zone ZONE_NAME

Example

[ceph: root@host04 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_realm ldc2
[ceph: root@host04 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zonegroup ldc2zg
[ceph: root@host04 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zone ldc2z

Restart the Ceph Object Gateway.

Note

Use the output from the ceph orch ps command, under the NAME column, to get the SERVICE_TYPE.ID information.

To restart the Ceph Object Gateway on an individual node in the storage cluster:

Syntax

systemctl restart ceph-CLUSTER_ID@SERVICE_TYPE.ID.service

Example

[root@host04 ~]# systemctl restart ceph-c4b34c6f-8365-11ba-dc31-529020a7702d@rgw.realm.zone.host01.gwasto.service

To restart the Ceph Object Gateways on all nodes in the storage cluster:

Syntax

ceph orch restart SERVICE_TYPE

Example

[ceph: root@host04 /]# ceph orch restart rgw


Create a replicated realm on the first data center in the storage cluster:

Syntax

radosgw-admin realm create --rgw-realm=REPLICATED_REALM_1 --default

Example

[ceph: root@host01 /]# radosgw-admin realm create --rgw-realm=rdc1 --default

Use the --default flag to make the replicated realm default on the primary site.

Create a master zonegroup for the first data center:

Syntax

radosgw-admin zonegroup create --rgw-zonegroup=RGW_ZONE_GROUP --endpoints=http://RGW_NODE_NAME:80 --rgw-realm=RGW_REALM_NAME --master --default

Example

[ceph: root@host01 /]# radosgw-admin zonegroup create --rgw-zonegroup=rdc1zg --endpoints=http://rgw1:80 --rgw-realm=rdc1 --master --default

Create a master zone on the first data center:

Syntax

radosgw-admin zone create --rgw-zonegroup=RGW_ZONE_GROUP --rgw-zone=MASTER_RGW_NODE_NAME --master --default --endpoints=HTTP_FQDN[,HTTP_FQDN]

Example

[ceph: root@host01 /]# radosgw-admin zone create --rgw-zonegroup=rdc1zg --rgw-zone=rdc1z --master --default --endpoints=http://rgw.example.com

Create a synchronization user and add the system user to the master zone for multi-site:

Syntax

radosgw-admin user create --uid="SYNCHRONIZATION_USER" --display-name="Synchronization User" --system
radosgw-admin zone modify --rgw-zone=RGW_ZONE --access-key=ACCESS_KEY --secret=SECRET_KEY

Example

[ceph: root@host01 /]# radosgw-admin user create --uid="synchronization-user" --display-name="Synchronization User" --system
[ceph: root@host01 /]# radosgw-admin zone modify --rgw-zone=rdc1z --access-key=3QV0D6ZMMCJZMSCXJ2QJ --secret=VpvQWcsfI9OPzUCpR4kynDLAbqa1OIKqRB6WEnH8

Commit the period:

Example

[ceph: root@host01 /]# radosgw-admin period update --commit

Optional: If you specified the realm and zone in the service specification during the deployment of the Ceph Object Gateway, update the spec section of the specification file:

Syntax

spec:
  rgw_realm: REALM_NAME
  rgw_zone: ZONE_NAME

You can either deploy the Ceph Object Gateway daemons with the appropriate realm and zone or update the configuration database:

Deploy the Ceph Object Gateway using placement specification:

Syntax

ceph orch apply rgw SERVICE_NAME --realm=REALM_NAME --zone=ZONE_NAME --placement="NUMBER_OF_DAEMONS HOST_NAME_1 HOST_NAME_2"

Example

[ceph: root@host01 /]# ceph orch apply rgw rgw --realm=rdc1 --zone=rdc1z --placement="1 host01"

Update the Ceph configuration database:

Syntax

ceph config set client.rgw.SERVICE_NAME rgw_realm REALM_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zonegroup ZONE_GROUP_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zone ZONE_NAME

Example

[ceph: root@host01 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_realm rdc1
[ceph: root@host01 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zonegroup rdc1zg
[ceph: root@host01 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zone rdc1z

Restart the Ceph Object Gateway.

Note

Use the output from the ceph orch ps command, under the NAME column, to get the SERVICE_TYPE.ID information.

To restart the Ceph Object Gateway on an individual node in the storage cluster:

Syntax

systemctl restart ceph-CLUSTER_ID@SERVICE_TYPE.ID.service

Example

[root@host01 ~]# systemctl restart ceph-c4b34c6f-8365-11ba-dc31-529020a7702d@rgw.realm.zone.host01.gwasto.service

To restart the Ceph Object Gateways on all nodes in the storage cluster:

Syntax

ceph orch restart SERVICE_TYPE

Example

[ceph: root@host01 /]# ceph orch restart rgw


Pull the replicated realm on the second data center:

Syntax

radosgw-admin realm pull --url=https://tower-osd1.cephtips.com --access-key=ACCESS_KEY --secret-key=SECRET_KEY

Example

[ceph: root@host04 /]# radosgw-admin realm pull --url=https://tower-osd1.cephtips.com --access-key=3QV0D6ZMMCJZMSCXJ2QJ --secret-key=VpvQWcsfI9OPzUCpR4kynDLAbqa1OIKqRB6WEnH8

Pull the period from the first data center:

Syntax

radosgw-admin period pull --url=https://tower-osd1.cephtips.com --access-key=ACCESS_KEY --secret-key=SECRET_KEY

Example

[ceph: root@host04 /]# radosgw-admin period pull --url=https://tower-osd1.cephtips.com --access-key=3QV0D6ZMMCJZMSCXJ2QJ --secret-key=VpvQWcsfI9OPzUCpR4kynDLAbqa1OIKqRB6WEnH8

Create the secondary zone on the second data center:

Syntax

radosgw-admin zone create --rgw-zone=RGW_ZONE --rgw-zonegroup=RGW_ZONE_GROUP --endpoints=https://tower-osd4.cephtips.com --access-key=ACCESS_KEY --secret-key=SECRET_KEY

Example

[ceph: root@host04 /]# radosgw-admin zone create --rgw-zone=rdc2z --rgw-zonegroup=rdc1zg --endpoints=https://tower-osd4.cephtips.com --access-key=3QV0D6ZMMCJZMSCXJ2QJ --secret-key=VpvQWcsfI9OPzUCpR4kynDLAbqa1OIKqRB6WEnH8

Commit the period:

Example

[ceph: root@host04 /]# radosgw-admin period update --commit

Optional: If you specified the realm and zone in the service specification during the deployment of the Ceph Object Gateway, update the spec section of the specification file:

Syntax

spec:
  rgw_realm: REALM_NAME
  rgw_zone: ZONE_NAME

You can either deploy the Ceph Object Gateway daemons with the appropriate realm and zone or update the configuration database:

Deploy the Ceph Object Gateway using placement specification:

Syntax

ceph orch apply rgw SERVICE_NAME --realm=REALM_NAME --zone=ZONE_NAME --placement="NUMBER_OF_DAEMONS HOST_NAME_1 HOST_NAME_2"

Example

[ceph: root@host04 /]# ceph orch apply rgw rgw --realm=rdc1 --zone=rdc2z --placement="1 host04"

Update the Ceph configuration database:

Syntax

ceph config set client.rgw.SERVICE_NAME rgw_realm REALM_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zonegroup ZONE_GROUP_NAME
ceph config set client.rgw.SERVICE_NAME rgw_zone ZONE_NAME

Example

[ceph: root@host04 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_realm rdc1
[ceph: root@host04 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zonegroup rdc1zg
[ceph: root@host04 /]# ceph config set client.rgw.rgwsvcid.mons-1.jwgwwp rgw_zone rdc2z

Restart the Ceph Object Gateway.

Note

Use the output from the ceph orch ps command, under the NAME column, to get the SERVICE_TYPE.ID information.

To restart the Ceph Object Gateway on an individual node in the storage cluster:

Syntax

systemctl restart ceph-CLUSTER_ID@SERVICE_TYPE.ID.service

Example

[root@host04 ~]# systemctl restart ceph-c4b34c6f-8365-11ba-dc31-529020a7702d@rgw.realm.zone.host01.gwasto.service

To restart the Ceph Object Gateways on all nodes in the storage cluster:

Syntax

ceph orch restart SERVICE_TYPE

Example

[ceph: root@host04 /]# ceph orch restart rgw

Log in as root on the endpoint for the second data center. Verify the synchronization status on the master realm:

Syntax

radosgw-admin sync status

Important

In the Red Hat Ceph Storage 5.3z5 release, the compress-encrypted feature is displayed with the radosgw-admin sync status command and is disabled by default. Do not enable this feature, as it is not supported until Red Hat Ceph Storage 6.1z2.

Log in as root on the endpoint for the first data center. Verify the synchronization status for the replication-synchronization realm:

Syntax

radosgw-admin sync status --rgw-realm RGW_REALM_NAME

To store and access data in the local site, create the user for the local realm:

Syntax

radosgw-admin user create --uid="LOCAL_USER" --display-name="Local user" --rgw-realm=REALM_NAME --rgw-zonegroup=ZONE_GROUP_NAME --rgw-zone=ZONE_NAME

Example

[ceph: root@host04 /]# radosgw-admin user create --uid="local-user" --display-name="Local user" --rgw-realm=ldc1 --rgw-zonegroup=ldc1zg --rgw-zone=ldc1z

Important

By default, users are created under the default realm. For the users to access data in the local realm, the radosgw-admin command requires the --rgw-realm argument.
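The local realm steps in this procedure follow the same four-command pattern on each data center. The following dry-run sketch prints that sequence for a given set of names so that it can be reviewed before anything is run. The function print_local_realm_cmds is a hypothetical helper, not part of Ceph, and it only echoes the commands used in this section.

```shell
# Dry-run sketch: prints the commands instead of running them.
# print_local_realm_cmds is a hypothetical helper, not part of Ceph.
# Usage: print_local_realm_cmds REALM ZONEGROUP ZONE ZONEGROUP_ENDPOINT ZONE_ENDPOINT
print_local_realm_cmds() {
    realm=$1; zonegroup=$2; zone=$3; zg_endpoint=$4; zone_endpoint=$5
    echo "radosgw-admin realm create --rgw-realm=${realm} --default"
    echo "radosgw-admin zonegroup create --rgw-zonegroup=${zonegroup} --endpoints=${zg_endpoint} --rgw-realm=${realm} --master --default"
    echo "radosgw-admin zone create --rgw-zonegroup=${zonegroup} --rgw-zone=${zone} --master --default --endpoints=${zone_endpoint}"
    echo "radosgw-admin period update --commit"
}

# Names taken from the first data center examples in this section.
print_local_realm_cmds ldc1 ldc1zg ldc1z http://rgw1:80 http://rgw.example.com
```

Printing rather than executing keeps the sketch safe to run anywhere. Deploying or reconfiguring the gateway daemons and restarting them still follow as separate steps.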

5.2.9. Using multi-site sync policies (Technology Preview)

The Ceph Object Gateway multi-site sync policies are a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs), might not be functionally complete, and Red Hat does not recommend using them for production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. See the support scope for Red Hat Technology Preview features for more details.

As a storage administrator, you can use multi-site sync policies at the bucket level to control data movement between buckets in different zones. These policies are called bucket-granularity sync policies. Previously, all buckets within zones were treated symmetrically. This means that each zone contained a mirror copy of a given bucket, and the copies of buckets were identical in all of the zones. The sync process assumed that the bucket sync source and the bucket sync destination referred to the same bucket.

Using bucket-granularity sync policies allows for the buckets in different zones to contain different data. This enables a bucket to pull data from other buckets in other zones, and those buckets do not have the same name or ID as the bucket pulling the data. In this case, the bucket sync source and the bucket sync destination refer to different buckets.

The sync policy supersedes the old zone group coarse configuration (sync_from*). The sync policy can be configured at the zone group level. If it is configured, it replaces the old-style configuration at the zone group level, but it can also be configured at the bucket level.

5.2.9.1. Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to a Ceph Monitor node.

- Installation of the Ceph Object Gateway software.

5.2.9.2. Multi-site sync policy group state

A sync policy can define multiple groups, each of which can contain a list of data-flow configurations and a list of pipe configurations. The data flow defines how data moves between the different zones. It can define a symmetrical data flow, in which multiple zones sync data from each other, and it can define a directional data flow, in which the data moves one way from one zone to another.

A pipe defines the actual buckets that can use these data flows, and the properties that are associated with it, such as the source object prefix.

A sync policy group can be in 3 states:

- Enabled — sync is allowed and enabled.

- Allowed — sync is allowed.

- Forbidden — sync, as defined by this group, is not allowed. Sync states in this group can override other groups.

A policy can be defined at the bucket level. A bucket level sync policy inherits the data flow of the zonegroup policy, and can only define a subset of what the zonegroup allows.

A wildcard zone or a wildcard bucket parameter in the policy matches all relevant zones or all relevant buckets. In the context of a bucket policy, the wildcard bucket means the current bucket instance. A disaster recovery configuration where entire zones are mirrored does not require configuring anything on the buckets. However, for fine-grained bucket sync, it is better to allow the pipes at the zonegroup level (status=allowed), for example by using wildcards, and to enable the specific sync only at the bucket level (status=enabled). If needed, the policy at the bucket level can limit the data movement to specific relevant zones.

| ZoneGroup | Bucket | Sync in the bucket |

|---|---|---|

| enabled | enabled | enabled |

| enabled | allowed | enabled |

| enabled | forbidden | disabled |

| allowed | enabled | enabled |

| allowed | allowed | disabled |

| allowed | forbidden | disabled |

| forbidden | enabled | disabled |

| forbidden | allowed | disabled |

| forbidden | forbidden | disabled |

When multiple group policies apply to a sync pair (SOURCE_ZONE, SOURCE_BUCKET), (DESTINATION_ZONE, DESTINATION_BUCKET), the following rules are applied in the following order:

- If even one sync policy is forbidden, the sync is disabled.

- At least one policy must be enabled for the sync to be allowed.
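The two rules, and the zonegroup and bucket table that precedes them, can be expressed as one small function. This is a minimal sketch of the rules as stated in this section, not the gateway's actual implementation. The name effective_sync is hypothetical.

```shell
# Hypothetical helper: combine any number of group states into the
# effective sync result, following the two rules in this section.
effective_sync() {
    result="disabled"
    for state in "$@"; do
        case "$state" in
            forbidden) echo "disabled"; return 0 ;;  # rule 1: forbidden always wins
            enabled)   result="enabled" ;;           # rule 2: needs at least one enabled
            allowed)   ;;                            # allowed alone does not start sync
            *)         echo "unknown state: $state" >&2; return 1 ;;
        esac
    done
    echo "$result"
}

effective_sync enabled allowed     # zonegroup enabled, bucket allowed
effective_sync allowed allowed     # nothing enabled
effective_sync enabled forbidden   # forbidden overrides enabled
```

Passing the zonegroup state followed by the bucket state gives the same results as the table in this section. For example, allowed with enabled yields enabled, and allowed with allowed yields disabled.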

Any changes to the zonegroup policy need to be applied on the zonegroup master zone, and require period update and commit. Changes to the bucket policy need to be applied on the zonegroup master zone. Ceph Object Gateway handles these changes dynamically.
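The difference between zonegroup-level and bucket-level changes can be sketched as a dry run. The helper below only prints commands. It assumes the sync group create syntax and the period update --commit command used elsewhere in this chapter, and the name sync_group_create_cmds is hypothetical.

```shell
# Dry-run sketch; sync_group_create_cmds is a hypothetical helper.
# Usage: sync_group_create_cmds GROUP_ID STATUS [BUCKET_NAME]
sync_group_create_cmds() {
    group_id=$1; status=$2; bucket=${3:-}
    if [ -n "$bucket" ]; then
        # Bucket-level change: handled dynamically, no period commit.
        echo "radosgw-admin sync group create --bucket=${bucket} --group-id=${group_id} --status=${status}"
    else
        # Zonegroup-level change: follow with a period update and commit.
        echo "radosgw-admin sync group create --group-id=${group_id} --status=${status}"
        echo "radosgw-admin period update --commit"
    fi
}

sync_group_create_cmds mygroup1 allowed
sync_group_create_cmds mygroup1 enabled mybucket
```

In both cases, run the printed commands on the zonegroup master zone.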

5.2.9.3. Retrieving the current policy

You can use the get command to retrieve the current zonegroup sync policy, or a specific bucket policy.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root or sudo access.

- The Ceph Object Gateway is installed.

Procedure

Retrieve the current zonegroup sync policy or bucket policy. To retrieve a specific bucket policy, use the --bucket option:

Syntax

radosgw-admin sync policy get --bucket=BUCKET_NAME

Example

[ceph: root@host01 /]# radosgw-admin sync policy get --bucket=mybucket

[ceph: root@host01 /]# radosgw-admin sync policy get --bucket=mybucketCopy to Clipboard Copied! Toggle word wrap Toggle overflow

5.2.9.4. Creating a sync policy group

You can create a sync policy group for the current zone group, or for a specific bucket.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root or sudo access.

- The Ceph Object Gateway is installed.

Procedure

Create a sync policy group or a bucket policy. To create a bucket policy, use the --bucket option:

Syntax

radosgw-admin sync group create --bucket=BUCKET_NAME --group-id=GROUP_ID --status=enabled | allowed | forbidden

Example

[ceph: root@host01 /]# radosgw-admin sync group create --group-id=mygroup1 --status=enabled

5.2.9.5. Modifying a sync policy group

You can modify an existing sync policy group for the current zone group, or for a specific bucket.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root or sudo access.

- The Ceph Object Gateway is installed.

Procedure

Modify the sync policy group or a bucket policy. To modify a bucket policy, use the --bucket option:

Syntax

radosgw-admin sync group modify --bucket=BUCKET_NAME --group-id=GROUP_ID --status=enabled | allowed | forbidden

Example

[ceph: root@host01 /]# radosgw-admin sync group modify --group-id=mygroup1 --status=forbidden

5.2.9.6. Get a sync policy group

You can use the group get command to show the current sync policy group by group ID, or to show a specific bucket policy.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root or sudo access.

- The Ceph Object Gateway is installed.

Procedure

Show the current sync policy group or bucket policy. To show a specific bucket policy, use the --bucket option:

Note

If the --bucket option is not provided, the group created at the zonegroup level is retrieved, not the bucket-level group.

Syntax

radosgw-admin sync group get --bucket=BUCKET_NAME --group-id=GROUP_ID

Example

[ceph: root@host01 /]# radosgw-admin sync group get --group-id=mygroup

5.2.9.7. Removing a sync policy group

You can use the group remove command to remove the current sync policy group by group ID, or to remove a specific bucket policy.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root or sudo access.

- The Ceph Object Gateway is installed.

Procedure

Remove the current sync policy group or bucket policy. To remove a specific bucket policy, use the --bucket option:

Syntax

radosgw-admin sync group remove --bucket=BUCKET_NAME --group-id=GROUP_ID

Example

[ceph: root@host01 /]# radosgw-admin sync group remove --group-id=mygroup

5.2.9.8. Creating a sync flow

You can create two different types of flows for a sync policy group or for a specific bucket:

- Directional sync flow

- Symmetrical sync flow

The group flow create command creates a sync flow. If you issue the group flow create command for a sync policy group or bucket that already has a sync flow, the command overwrites the existing settings for the sync flow and applies the settings you specify.

| Option | Description | Required/Optional |
|---|---|---|
| --bucket | Name of the bucket for which the sync policy is configured. Used only in a bucket-level sync policy. | Optional |
| --group-id | ID of the sync group. | Required |
| --flow-id | ID of the flow. | Required |
| --flow-type | Type of flow for a sync policy group or for a specific bucket: directional or symmetrical. | Required |
| --source-zone | Source zone from which sync happens; the zone that sends data to the sync group. Required if the flow type of the sync group is directional. | Optional |
| --dest-zone | Destination zone to which sync happens; the zone that receives data from the sync group. Required if the flow type of the sync group is directional. | Optional |
| --zones | Zones that are part of the sync group. Each listed zone is both a sender and a receiver. Separate multiple zones with commas (","). Required if the flow type of the sync group is symmetrical. | Optional |

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root or sudo access.

- The Ceph Object Gateway is installed.

Procedure

Create or update a directional sync flow. To create or update a directional sync flow for a specific bucket, use the --bucket option.

Syntax

radosgw-admin sync group flow create --bucket=BUCKET_NAME --group-id=GROUP_ID --flow-id=FLOW_ID --flow-type=directional --source-zone=SOURCE_ZONE --dest-zone=DESTINATION_ZONE

Create or update a symmetrical sync flow. To specify multiple zones for a symmetrical flow type, use a comma-separated list for the --zones option.

Syntax

radosgw-admin sync group flow create --bucket=BUCKET_NAME --group-id=GROUP_ID --flow-id=FLOW_ID --flow-type=symmetrical --zones=ZONE_NAME
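The following shell sketch shows how the two flow types map to the options in the table above. It is a minimal illustration, not a supported tool: flow_create, run, and DRY_RUN are hypothetical names, and the group ID, flow IDs, and zone names are invented values. The helper only checks that the zone arguments required for each flow type are present, then prints the resulting command (or runs it when DRY_RUN=0).

```shell
# Print each command instead of running it unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Usage: flow_create GROUP_ID FLOW_ID directional SOURCE_ZONE DEST_ZONE
#        flow_create GROUP_ID FLOW_ID symmetrical ZONE1,ZONE2,...
flow_create() {
  group_id="$1"; flow_id="$2"; flow_type="$3"
  case "$flow_type" in
    directional)
      # A directional flow needs both a source zone and a destination zone.
      [ "$#" -eq 5 ] || { echo "directional needs SOURCE_ZONE and DEST_ZONE" >&2; return 1; }
      run radosgw-admin sync group flow create --group-id="$group_id" \
          --flow-id="$flow_id" --flow-type=directional \
          --source-zone="$4" --dest-zone="$5" ;;
    symmetrical)
      # A symmetrical flow needs a comma-separated list of zones.
      [ "$#" -eq 4 ] || { echo "symmetrical needs a comma-separated zone list" >&2; return 1; }
      run radosgw-admin sync group flow create --group-id="$group_id" \
          --flow-id="$flow_id" --flow-type=symmetrical --zones="$4" ;;
    *)
      echo "unknown flow type: $flow_type" >&2; return 1 ;;
  esac
}

# One-way flow from us-east to us-west.
flow_create mygroup1 flow-east-west directional us-east us-west
# Two-way flow in which both zones send and receive.
flow_create mygroup1 flow-mirror symmetrical us-east,us-west
```

Because the flow create command overwrites an existing flow, repeating a call with the same flow ID replaces that flow's settings.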

5.2.9.9. Removing sync flows and zones

The group flow remove command removes sync flows or zones from a sync policy group or bucket.

For sync policy groups or buckets using directional flows, the group flow remove command removes the flow. For sync policy groups or buckets using symmetrical flows, you can use the group flow remove command to remove specified zones from the flow, or to remove the flow.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root or sudo access.

- The Ceph Object Gateway is installed.

Procedure

Remove a directional sync flow. To remove the directional sync flow for a specific bucket, use the --bucket option.

Syntax

radosgw-admin sync group flow remove --bucket=BUCKET_NAME --group-id=GROUP_ID --flow-id=FLOW_ID --flow-type=directional --source-zone=SOURCE_ZONE --dest-zone=DESTINATION_ZONE

Remove specific zones from a symmetrical sync flow. To remove multiple zones from a symmetrical flow, use a comma-separated list for the --zones option.

Syntax

radosgw-admin sync group flow remove --bucket=BUCKET_NAME --group-id=GROUP_ID --flow-id=FLOW_ID --flow-type=symmetrical --zones=ZONE_NAME

Remove a symmetrical sync flow. To remove the sync flow from a bucket, use the --bucket option.

Syntax

radosgw-admin sync group flow remove --bucket=BUCKET_NAME --group-id=GROUP_ID --flow-id=FLOW_ID --flow-type=symmetrical --zones=ZONE_NAME

5.2.9.10. Creating or modifying a sync group pipe

As a storage administrator, you can define pipes to specify which buckets can use your configured data flows and the properties associated with those data flows.

The sync group pipe create command enables you to create pipes, which are custom sync group data flows between specific buckets or groups of buckets, or between specific zones or groups of zones.

This command uses the following options:

| Option | Description | Required/Optional |
|---|---|---|
| --bucket | Name of the bucket for which the sync policy is configured. Used only in a bucket-level sync policy. | Optional |
| --group-id | ID of the sync group. | Required |
| --pipe-id | ID of the pipe. | Required |
| --source-zones | Zones that send data to the sync group. Use single quotes (') for value. Use commas to separate multiple zones. Use the wildcard | Required |
| --source-bucket | Bucket or buckets that send data to the sync group. If bucket name is not mentioned, then | Optional |
| --source-bucket-id | ID of the source bucket. | Optional |
| --dest-zones | Zone or zones that receive the sync data. Use single quotes (') for value. Use commas to separate multiple zones. Use the wildcard | Required |
| --dest-bucket | Bucket or buckets that receive the sync data. If bucket name is not mentioned, then | Optional |
| --dest-bucket-id | ID of the destination bucket. | Optional |
| --prefix | Bucket prefix. Use the wildcard | Optional |
| --prefix-rm | Do not use bucket prefix for filtering. | Optional |
| --tags-add | Comma-separated list of key=value pairs. | Optional |
| --tags-rm | Removes one or more key=value pairs of tags. | Optional |
| --dest-owner | Destination owner of the objects from source. | Optional |
| --storage-class | Destination storage class for the objects from source. | Optional |
| --mode | Use | Optional |
| --uid | Used for permissions validation in user mode. Specifies the user ID under which the sync operation will be issued. | Optional |

To enable or disable sync at the zonegroup level for certain buckets, set the zonegroup-level sync policy to the enabled or disabled state, respectively, and create a pipe for each bucket. Identify the bucket either by name, with --source-bucket and --dest-bucket, or by bucket ID, with --source-bucket-id and --dest-bucket-id.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root or sudo access.

- The Ceph Object Gateway is installed.

Procedure

Create the sync group pipe:

Syntax

radosgw-admin sync group pipe create --bucket=BUCKET_NAME --group-id=GROUP_ID --pipe-id=PIPE_ID --source-zones='ZONE_NAME','ZONE_NAME2'... --source-bucket=SOURCE_BUCKET1 --source-bucket-id=SOURCE_BUCKET_ID --dest-zones='ZONE_NAME','ZONE_NAME2'... --dest-bucket=DESTINATION_BUCKET1 --dest-bucket-id=DESTINATION_BUCKET_ID --prefix=SOURCE_PREFIX --prefix-rm --tags-add=KEY1=VALUE1,KEY2=VALUE2,... --tags-rm=KEY1=VALUE1,KEY2=VALUE2,... --dest-owner=OWNER_ID --storage-class=STORAGE_CLASS --mode=USER --uid=USER_ID
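The full syntax lists every option at once, but a typical pipe uses only a few of them. The sketch below assembles a pipe create command from the required options plus an optional prefix. It is illustrative only: pipe_create, run, and DRY_RUN are hypothetical names, and the group ID, pipe IDs, zone names, bucket names, and prefix are invented values. By default the command is printed rather than executed.

```shell
# Print each command instead of running it unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Usage: pipe_create GROUP_ID PIPE_ID SRC_ZONES SRC_BUCKET DEST_ZONES DEST_BUCKET [PREFIX]
pipe_create() {
  group_id="$1"; pipe_id="$2"
  src_zones="$3"; src_bucket="$4"
  dest_zones="$5"; dest_bucket="$6"
  prefix="${7:-}"

  # Rebuild the positional parameters as the command line to run.
  set -- radosgw-admin sync group pipe create \
      --group-id="$group_id" --pipe-id="$pipe_id" \
      --source-zones="$src_zones" --source-bucket="$src_bucket" \
      --dest-zones="$dest_zones" --dest-bucket="$dest_bucket"

  # Append the prefix filter only when one was given.
  if [ -n "$prefix" ]; then
    set -- "$@" --prefix="$prefix"
  fi
  run "$@"
}

# Pipe that covers every zone and every bucket (wildcards must stay quoted).
pipe_create mygroup1 pipe-all '*' '*' '*' '*'
# Pipe from one bucket to another, limited to keys under an example prefix.
pipe_create mygroup1 pipe-logs us-east logs-src us-west logs-dst 2024/
```

Building the argument list with set -- keeps each option a separate, correctly quoted argument, so a wildcard value reaches radosgw-admin unexpanded when the command is actually run.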

5.2.9.11. Modifying or deleting a sync group pipe

As a storage administrator, you can use the sync group pipe modify command to modify the sync group pipe and sync group pipe remove to remove the sync group pipe.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root or sudo access.

- The Ceph Object Gateway is installed.

Procedure

Modify the sync group pipe options.

Syntax