
Chapter 1. Overview

1.1. Introduction

SAP NetWeaver- or SAP S/4HANA-based systems play an important role in many business processes, so it is critical to ensure that those systems are continuously and reliably available to the business. This can be achieved by using high availability (HA) clustering to manage the instances of such SAP NetWeaver or SAP S/4HANA systems.

The underlying idea of HA clustering is fairly simple: instead of a single large machine bearing all of the load and risk, one or more machines automatically step in as an instant, full replacement for a failed service or machine. In the best case, this replacement process causes no interruption for the users of the systems.

1.2. Audience

Designing and implementing highly available solutions based on SAP NetWeaver or SAP S/4HANA can be very complex. Deep knowledge of each layer of the infrastructure and every aspect of the deployment is needed to ensure that automated actions are reliable, repeatable, accurate, and quick.

This document is intended for SAP and Red Hat certified or trained administrators and consultants who already have experience setting up SAP NetWeaver or S/4HANA application server instances and HA clusters using the RHEL HA add-on or other clustering solutions. Access to both the SAP Support Portal and the Red Hat Customer Portal is required to be able to download software and additional documentation.

Engaging Red Hat Consulting is highly recommended for setting up the cluster and customizing the solution to meet the customer's data center requirements, which are normally more complex than the solution presented in this document.

1.3. Concepts

1.3.1. SAP NetWeaver or S/4HANA High Availability

A typical SAP NetWeaver or S/4HANA environment consists of three distinct components:

- SAP (A)SCS instance
- SAP application server instances (Primary Application Server (PAS) and Additional Application Server (AAS) instances)
- Database instance

The (A)SCS instance and the database instance are single points of failure (SPOF); therefore, it is important to ensure they are protected by an HA solution to avoid data loss or corruption and unnecessary outages of the SAP system. For more information on SPOF, please refer to Single point of failure.

For the application servers, the enqueue lock table that is managed by the enqueue server is the most critical component. To protect it, SAP has developed the “Enqueue Replication Server” (ERS), which maintains a backup copy of the enqueue lock table. While the (A)SCS is running on one server, the ERS always needs to maintain a copy of the current enqueue table on another server.

This document describes how to set up a two-node or three-node HA cluster solution for managing (A)SCS and ERS instances that conforms to the guidelines for high availability that have been established by both SAP and Red Hat. The HA solution can either be used for the “Standalone Enqueue Server” (ENSA1) that is typically used with SAP NetWeaver or the “Standalone Enqueue Server 2” (ENSA2) that is used by SAP S/4HANA.

It also provides guidelines for setting up HA cluster resources for managing other SAP instance types, like Primary Application Server (PAS) or Additional Application Server (AAS) instances, which can be managed either as part of the same HA cluster or on a separate HA cluster.

1.3.2. ENSA1 vs. ENSA2

1.3.2.1. Standalone Enqueue Server (ENSA1)

If there is an issue with the (A)SCS instance, the Standalone Enqueue Server (ENSA1) requires that the (A)SCS instance “follows” the ERS instance. That is, the HA cluster has to start the (A)SCS instance on the host where the ERS instance is currently running. As a result, after the host where the (A)SCS instance was running has been fenced, both instances run on the same node. When the HA cluster node where the (A)SCS instance was previously running is back online, the HA cluster should move the ERS instance to that node so that enqueue replication can resume.
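To make the ENSA1 rule concrete, the following Python sketch models it as two state transitions: the (A)SCS node fails, and later the fenced node rejoins. It is only an illustration under simplifying assumptions; the class `Ensa1Placement` and the node names `node1`/`node2` are hypothetical, and in a real setup this behavior is enforced by Pacemaker constraints, not by code like this.

```python
from dataclasses import dataclass


@dataclass
class Ensa1Placement:
    """Hypothetical, simplified model of where ENSA1 instances run."""

    ascs_node: str
    ers_node: str

    def fail_ascs_node(self) -> None:
        # ENSA1: the (A)SCS must "follow" the ERS, because only the ERS
        # host holds the replicated copy of the enqueue lock table.
        self.ascs_node = self.ers_node

    def node_rejoined(self, node: str) -> None:
        # Once the fenced node is back, move the ERS there so that
        # enqueue replication can resume on a different host.
        if node != self.ascs_node and self.ers_node == self.ascs_node:
            self.ers_node = node


placement = Ensa1Placement(ascs_node="node1", ers_node="node2")
placement.fail_ascs_node()
print(placement)  # Ensa1Placement(ascs_node='node2', ers_node='node2')
placement.node_rejoined("node1")
print(placement)  # Ensa1Placement(ascs_node='node2', ers_node='node1')
```

The intermediate state, with both instances on `node2`, is the window described above in which no enqueue replication takes place.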

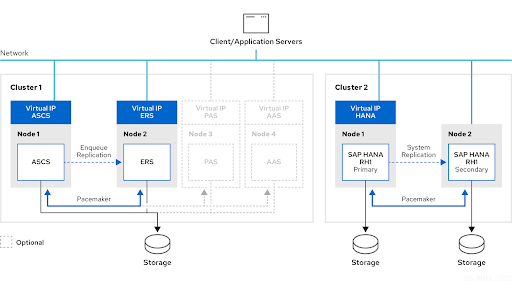

The following diagram shows the typical architecture of a Pacemaker HA cluster for managing SAP NetWeaver setups with the Standalone Enqueue Server (ENSA1).

Although the diagram shows the Primary and Additional Application Server (PAS/AAS) instances optionally running on separate servers, it is also supported to run these instances on the same HA cluster nodes as the (A)SCS and ERS instances and have them managed by the cluster.

Please see the following SAP documentation for more information on how the Standalone Enqueue Server (ENSA1) works: Standalone Enqueue Server.

1.3.2.2. Standalone Enqueue Server 2 (ENSA2)

As described above, with ENSA1 the Standalone Enqueue Server is required to “follow” the Enqueue Replication Server if there is a failover. That is, the HA software has to start the (A)SCS instance on the host where the ERS instance is currently running.

In contrast to the Standalone Enqueue Server (ENSA1), the new Standalone Enqueue Server 2 (ENSA2) and Enqueue Replicator 2 no longer have these restrictions. In case of a failure, the ASCS instance can either be restarted on the same HA cluster node or be moved to another HA cluster node, which does not have to be the node where the ERS instance is running. This makes it possible to use a multi-node HA cluster setup with more than two HA cluster nodes when Standalone Enqueue Server 2 (ENSA2) is used.
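The relaxed ENSA2 placement rule can be sketched as a small Python function. This is a hypothetical, simplified model: the function name `ensa2_ascs_target`, its parameters, and the node names are illustrative assumptions, and the actual decision is made by Pacemaker based on the configured constraints. It reflects only what is stated here: a local restart is allowed, and with more than two nodes the ASCS instance moves to a spare node rather than to the ERS node.

```python
def ensa2_ascs_target(
    nodes: list[str], ascs_node: str, ers_node: str, node_failed: bool
) -> str:
    """Return the node where a failed ENSA2 ASCS instance would be started.

    Hypothetical, simplified model of the ENSA2 placement freedom.
    """
    if not node_failed:
        # ENSA2: the ASCS instance may simply be restarted on the same node.
        return ascs_node
    # Prefer a spare node that runs neither the failed ASCS nor the ERS.
    spares = [n for n in nodes if n not in (ascs_node, ers_node)]
    if spares:
        return spares[0]
    # In a two-node cluster, the only remaining node is the ERS node.
    return ers_node


three_nodes = ["node1", "node2", "node3"]
print(ensa2_ascs_target(three_nodes, "node1", "node2", node_failed=True))   # node3
print(ensa2_ascs_target(["node1", "node2"], "node1", "node2", node_failed=True))  # node2
print(ensa2_ascs_target(three_nodes, "node1", "node2", node_failed=False))  # node1
```

Unlike the ENSA1 case, the ERS node is only the fallback here, so replication is not interrupted when a spare node is available.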

When using more than two HA cluster nodes, the ASCS will failover to a spare node, as illustrated in the following picture:

For more information on ENSA2, please refer to SAP Note 2630416 - Support for Standalone Enqueue Server 2.

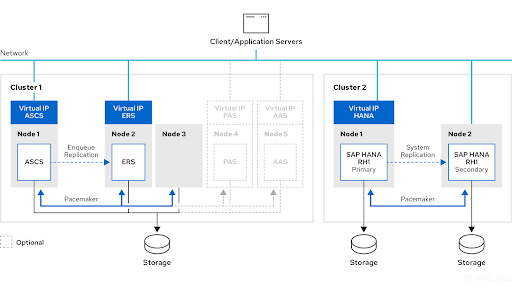

The following diagram shows the architecture of a three-node cluster that can be used for managing SAP S/4HANA setups with the Standalone Enqueue Server 2 (ENSA2).

Although the diagram shows the Primary and Additional Application Server (PAS/AAS) instances optionally running on separate servers, it is also supported to run these instances on the same HA cluster nodes as the ASCS and ERS instances and have them managed by the cluster.

For SAP S/4HANA, it is also possible to use a “cost-optimized” HA cluster setup, where the cluster nodes used for managing the HANA System Replication setup are also used for managing the ASCS and ERS instances.

1.4. Resource Agents

The following resource agents are provided on RHEL 9 for managing different instance types of SAP environments via the resource-agents-sap RPM package.

1.4.1. SAPInstance resource agent

The SAPInstance resource agent can be used for managing SAP application server instances using the SAP Start Service that is part of the SAP Kernel. In addition to the (A)SCS, ERS, PAS, and AAS instances, it can also be used for managing other SAP instance types, like standalone SAP Web Dispatcher or standalone SAP Gateway instances (see How to manage standalone SAP Web Dispatcher instances using the RHEL HA Add-On for information on how to configure a Pacemaker resource for managing such instances).

All operations of the SAPInstance resource agent are done by using commands provided by the SAP Startup Framework, which communicate with the sapstartsrv process of each SAP instance. sapstartsrv reports four status colors:

| Color | Meaning |
|---|---|
| GREEN | Everything is fine. |
| YELLOW | Something is wrong, but the service is still working. |
| RED | The service does not work. |
| GRAY | The service has not been started. |

The SAPInstance resource agent will interpret GREEN and YELLOW as OK, while statuses RED and GRAY are reported as NOT_RUNNING to the cluster.
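The color-to-status mapping can be expressed as a short Python sketch. It is not the resource agent's actual code; it only restates the rule above using the standard OCF return codes `OCF_SUCCESS` (0) and `OCF_NOT_RUNNING` (7). The function name `sapstartsrv_color_to_ocf` is hypothetical.

```python
# Standard OCF resource agent return codes.
OCF_SUCCESS = 0
OCF_NOT_RUNNING = 7


def sapstartsrv_color_to_ocf(color: str) -> int:
    """Map a sapstartsrv status color to the result reported to the cluster."""
    color = color.strip().upper()
    if color in ("GREEN", "YELLOW"):
        # YELLOW still counts as OK: the service is degraded but working.
        return OCF_SUCCESS
    if color in ("RED", "GRAY"):
        # Not working or not started: reported as NOT_RUNNING.
        return OCF_NOT_RUNNING
    raise ValueError(f"unknown sapstartsrv status color: {color!r}")


for c in ("GREEN", "YELLOW", "RED", "GRAY"):
    print(c, sapstartsrv_color_to_ocf(c))
```

Treating YELLOW as OK avoids a failover for an instance that is degraded but still serving requests.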

The versions of the SAPInstance resource agent shipped with RHEL 9 also support SAP instances that are managed by the systemd-enabled SAP Startup Framework (see The Systemd-Based SAP Startup Framework for further details).

1.4.1.1. Important SAPInstance resource agent parameters

| Attribute Name | Required | Default value | Description |
|---|---|---|---|
| | yes | null | The full SAP instance profile name ( |
| | no | null | The full path to the SAP Start Profile (with SAP NetWeaver 7.1 and newer, the SAP Start profile is identical to the instance profile). |
| | no | false | Only used for |
| | no | null | The fully qualified path where to find |
| | no | null | The fully qualified path where to find the SAP Start profile (only needed if the default location for the instance profiles has been changed). |
| | no | false | The SAPInstance resource agent tries to recover a failed start attempt automatically one time. This is done by killing running instance processes, removing the |
| | no | | The list of services of an SAP instance that need to be monitored to determine the health of the instance. To monitor more/less, or other services that |

The full list of parameters can be obtained by running pcs resource describe SAPInstance.

1.4.2. SAPDatabase resource agent

The SAPDatabase resource agent can be used to manage single Oracle, IBM DB2, SAP ASE, or MaxDB database instances as part of an SAP NetWeaver-based HA cluster setup. For the list of supported database versions on RHEL 9, refer to Support Policies for RHEL High Availability Clusters - Management of SAP NetWeaver in a Cluster.

The SAPDatabase resource agent does not run any database commands directly. It uses the SAP Host Agent to control the database. Therefore, the SAP Host Agent must be installed on each cluster node.

Since the SAPDatabase resource agent only provides basic functionality for managing database instances, it is recommended to use the HA features of the databases instead (for example, Oracle RAC and IBM DB2 HA/DR) if more HA capabilities are required for the database instance.

For S/4HANA HA setups, it is recommended to use HANA System Replication to make the HANA instance more robust against failures. The HANA System Replication HA setup can either be done using a separate cluster, or alternatively, it is also possible to use a “cost-optimized” S/4HANA HA setup where the ASCS and ERS instances are managed by the same HA cluster that is used for managing the HANA System Replication setup.

1.4.2.1. Important SAPDatabase resource agent parameters

| Attribute Name | Required | Default value | Description |
|---|---|---|---|
| SID | yes | null | The unique database system identifier (usually identical to the SAP SID). |
| DBTYPE | yes | null | The type of database to manage. Valid values are: |
| DBINSTANCE | no | null | Must be used for special database implementations when the database instance name is not equal to the SID (e.g., Oracle Data Guard). |
| DBOSUSER | no | ADA=taken from | The parameter can be set if the database processes on the operating system level are not executed with the default user of the database type in use. |
| STRICT_MONITORING | no | false | This controls how the resource agent monitors the database. If set to |
| MONITOR_SERVICES | no | | Defines which services are monitored by the |
| AUTOMATIC_RECOVER | no | false | If you set this to |

The full list of parameters can be obtained by running pcs resource describe SAPDatabase.

1.5. Multi-SID Support (optional)

The setup described in this document can also be used to manage the (A)SCS/ERS instances for multiple SAP environments (Multi-SID) within the same HA cluster. For example, SAP products that contain both ABAP and Java application server instances (like SAP Solution Manager) could be candidates for a Multi-SID cluster.

However, some additional considerations need to be taken into account for such setups.

1.5.1. Unique SID and Instance Number

To avoid conflicts, each pair of (A)SCS/ERS instances must use a different SID, and each instance must use a unique Instance Number, even if the instances belong to different SIDs.
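Before installing a Multi-SID setup, the two uniqueness rules can be checked against a planned layout. The following Python sketch assumes a hypothetical input format, a list of `(SID, ASCS instance number, ERS instance number)` tuples, and a hypothetical function name `check_multi_sid`; the SIDs and numbers in the example are illustrative only.

```python
from collections import Counter


def check_multi_sid(pairs: list[tuple[str, str, str]]) -> list[str]:
    """Return rule violations for planned (SID, ASCS_nr, ERS_nr) pairs."""
    problems = []
    # Rule 1: every (A)SCS/ERS pair needs its own SID.
    sid_counts = Counter(sid for sid, _, _ in pairs)
    for sid, n in sid_counts.items():
        if n > 1:
            problems.append(f"SID {sid} is used by {n} (A)SCS/ERS pairs")
    # Rule 2: instance numbers must be unique across all SIDs.
    nr_counts = Counter(nr for _, ascs, ers in pairs for nr in (ascs, ers))
    for nr, n in sorted(nr_counts.items()):
        if n > 1:
            problems.append(f"instance number {nr} is used {n} times")
    return problems


print(check_multi_sid([("S4H", "20", "29"), ("SMP", "21", "30")]))  # []
print(check_multi_sid([("S4H", "20", "29"), ("SMP", "20", "30")]))
# ['instance number 20 is used 2 times']
```

Instance numbers are kept as strings so that two-digit values such as `00` keep their leading zero.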

1.5.2. Sizing

Each HA cluster node must meet the SAP requirements for sizing to support multiple instances.

1.5.3. Installation

For each (A)SCS/ERS pair, please repeat all the steps documented in sections 4.5, 4.6, and 4.7. Each (A)SCS/ERS pair will fail over independently, following the configuration rules.

With the default Pacemaker configuration for RHEL 9, certain failures of resource actions (for example, a failed stop of a resource) will cause the cluster node to be fenced. This means that, for example, if the stop of the resource for one (A)SCS instance on an HA cluster node fails, it causes an outage for all other resources running on the same HA cluster node. Please see the description of the on-fail property for monitoring operations in Configuring and managing high availability clusters - Chapter 21. Resource monitoring operations for options on how to modify this behavior.
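The following Python sketch is a simplified model of why one failed stop can affect other SIDs on the same node. It covers only two of the `on-fail` values, `fence` (which takes down the whole node) and `block` (no further operations on the failed resource), and it ignores the subsequent recovery of resources on other nodes. The function name `resources_affected` and the resource names such as `rsc_S4H_ASCS20` are hypothetical.

```python
def resources_affected(
    placement: dict[str, list[str]], failed_resource: str, on_fail: str = "fence"
) -> list[str]:
    """Simplified model: which resources are affected when a stop fails."""
    node = next(n for n, res in placement.items() if failed_resource in res)
    if on_fail == "fence":
        # Fencing the node interrupts everything that runs on it.
        return list(placement[node])
    if on_fail == "block":
        # No further operations on the failed resource; others are untouched.
        return [failed_resource]
    raise ValueError(f"on-fail value not covered by this sketch: {on_fail!r}")


# Hypothetical Multi-SID layout: two ASCS instances share node1.
layout = {
    "node1": ["rsc_S4H_ASCS20", "rsc_SMP_ASCS30"],
    "node2": ["rsc_S4H_ERS29", "rsc_SMP_ERS31"],
}
print(resources_affected(layout, "rsc_S4H_ASCS20"))  # both ASCS resources
print(resources_affected(layout, "rsc_S4H_ASCS20", on_fail="block"))
```

The trade-off is that `block` limits the blast radius but leaves the failed resource in an unmanaged state that needs manual intervention.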