
Chapter 6. Content distribution with Red Hat Quay

Content distribution features in Red Hat Quay include repository mirroring, geo-replication, and deployment in air-gapped or disconnected environments.

6.1. Repository mirroring

Red Hat Quay repository mirroring lets you mirror images from external container registries, or another local registry, into your Red Hat Quay cluster. Using repository mirroring, you can synchronize images to Red Hat Quay based on repository names and tags.

From your Red Hat Quay cluster with repository mirroring enabled, you can perform the following:

- Choose a repository from an external registry to mirror

- Add credentials to access the external registry

- Identify specific container image repository names and tags to sync

- Set intervals at which a repository is synced

- Check the current state of synchronization

To use the mirroring functionality, you need to perform the following actions:

- Enable repository mirroring in the Red Hat Quay configuration file

- Run a repository mirroring worker

- Create mirrored repositories

All repository mirroring configurations can be performed using the configuration tool UI or by the Red Hat Quay API.

6.1.1. Using repository mirroring

The following list shows features and limitations of Red Hat Quay repository mirroring:

- With repository mirroring, you can mirror an entire repository or selectively limit which images are synced. Filters can be based on a comma-separated list of tags, a range of tags, or other means of identifying tags through Unix shell-style wildcards. For more information, see the documentation for wildcards.

- When a repository is set as mirrored, you cannot manually add other images to that repository.

- Because the mirrored repository is based on the repository and tags you set, it will hold only the content represented by the repository and tag pair. For example, if you change the tag so that some images in the repository no longer match, those images will be deleted.

- Only the designated robot can push images to a mirrored repository, superseding any role-based access control permissions set on the repository.

- Mirroring can be configured to roll back on failure, or to run on a best-effort basis.

- With a mirrored repository, a user with read permissions can pull images from the repository but cannot push images to the repository.

- Changing settings on your mirrored repository can be performed in the Red Hat Quay user interface, using the Repositories → Mirrors tab for the mirrored repository you create.

- Images are synced at set intervals, but can also be synced on demand.
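The tag filtering described in the list above can be sketched in a few lines of Python. This is only an illustration of Unix shell-style wildcard matching using the standard `fnmatch` module; it is not Red Hat Quay's own matching code. The function names, the tag list, and the example rules are hypothetical.

```python
from fnmatch import fnmatchcase


def parse_tag_rule(rule: str) -> list[str]:
    """Split a comma-separated tag rule into individual glob patterns."""
    return [p.strip() for p in rule.split(",") if p.strip()]


def select_tags(tags: list[str], rule: str) -> list[str]:
    """Return the tags that match at least one pattern in the rule."""
    patterns = parse_tag_rule(rule)
    return [t for t in tags if any(fnmatchcase(t, p) for p in patterns)]


# Hypothetical tags present in the external repository.
upstream_tags = ["v1.0", "v1.1", "v2.0", "latest", "nightly-2024"]

# Tags selected by the original rule.
mirrored = select_tags(upstream_tags, "v1.*, latest")

# After narrowing the rule, previously mirrored tags that no longer
# match are the ones that would be removed from the mirrored repository.
narrowed = select_tags(upstream_tags, "latest")
dropped = [t for t in mirrored if t not in narrowed]

print(mirrored)
print(dropped)
```

The `dropped` list corresponds to the behavior noted above: when the tag rule changes, images that no longer match are deleted from the mirrored repository.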

6.1.2. Repository mirroring recommendations

Best practices for repository mirroring include the following:

- Repository mirroring pods can run on any node. This means that you can run mirroring on nodes where Red Hat Quay is already running.

- Repository mirroring is scheduled in the database and runs in batches. As a result, repository workers check each repository mirror configuration and read when the next sync is due. More mirror workers means more repositories can be mirrored at the same time. For example, running 10 mirror workers means that a user can run 10 mirroring operations in parallel. If a user has only 2 workers with 10 mirror configurations, only 2 operations can be performed at a time.

The optimal number of mirroring pods depends on the following conditions:

- The total number of repositories to be mirrored

- The number of images and tags in the repositories and the frequency of changes

- Parallel batching

For example, if a user is mirroring a repository that has 100 tags, the mirror is completed by one worker. Users must therefore consider how many repositories they want to mirror in parallel, and base the number of workers on that.

Multiple tags in the same repository cannot be mirrored in parallel.
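The relationship between workers and parallel mirroring can be sketched as simple arithmetic. The following Python helper is hypothetical and makes a simplifying assumption that every repository sync takes roughly the same time; real sync durations depend on the number and size of images and tags.

```python
import math


def concurrent_syncs(num_workers: int, due_repositories: int) -> int:
    """Number of repositories that can be mirrored at the same time.

    Each worker handles one repository at a time, and a single
    repository is never split across workers, regardless of tag count.
    """
    return min(num_workers, due_repositories)


def sync_rounds(num_workers: int, due_repositories: int) -> int:
    """Rough number of sequential rounds needed to sync all due repositories.

    Assumption: all syncs take about the same time.
    """
    if num_workers <= 0:
        raise ValueError("at least one mirror worker is required")
    return math.ceil(due_repositories / num_workers)


# 2 workers with 10 mirror configurations: 2 at a time, about 5 rounds.
print(concurrent_syncs(2, 10), sync_rounds(2, 10))

# 10 workers but a single repository with 100 tags: still one worker.
print(concurrent_syncs(10, 1), sync_rounds(10, 1))
```

Adding workers therefore helps only when more repositories are due for synchronization than there are workers; it does not speed up a single repository with many tags.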

6.1.3. Event notifications for mirroring

There are three notification events for repository mirroring:

- Repository Mirror Started

- Repository Mirror Success

- Repository Mirror Unsuccessful

The events can be configured inside of the Settings tab for each repository, and all existing notification methods such as email, Slack, Quay UI, and webhooks are supported.

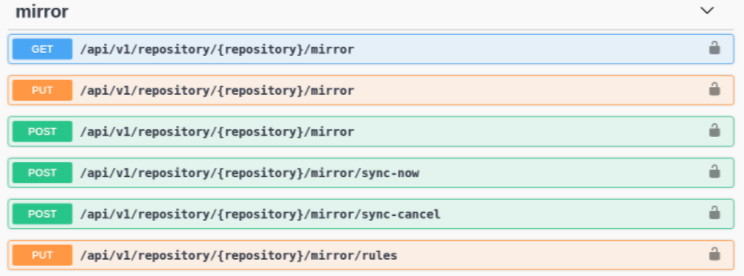

6.1.4. Mirroring API

You can use the Red Hat Quay API to configure repository mirroring:

Mirroring API

More information is available in the Red Hat Quay API Guide.
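As a rough sketch of what an API-driven mirror configuration might look like, the following Python builds (but does not send) an HTTP request. The endpoint path, the JSON field names, and the `tag_glob_csv` rule kind are assumptions to be verified against the Red Hat Quay API Guide for your version; the host name, repository, robot account, and token are placeholders.

```python
import json
import urllib.request


def build_mirror_request(quay_url: str, repository: str, token: str,
                         mirror_config: dict) -> urllib.request.Request:
    """Build a request that would create a mirror configuration.

    Assumption: the mirror endpoint lives under
    /api/v1/repository/<namespace>/<name>/mirror. Check the API Guide.
    """
    url = f"{quay_url.rstrip('/')}/api/v1/repository/{repository}/mirror"
    return urllib.request.Request(
        url,
        data=json.dumps(mirror_config).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# All field names below are assumptions; all values are placeholders.
config = {
    "is_enabled": True,
    "external_reference": "registry.example.com/upstream/app",
    "robot_username": "myorg+mirror_robot",
    "sync_interval": 3600,  # seconds between syncs
    "sync_start_date": "2025-01-01T00:00:00Z",
    "root_rule": {"rule_kind": "tag_glob_csv", "rule_value": ["v1.*", "latest"]},
}

request = build_mirror_request(
    "https://quay.example.com", "myorg/app", "<oauth-token>", config
)
print(request.get_method(), request.full_url)
```

The request is only constructed here so that the payload can be inspected. Sending it with `urllib.request.urlopen(request)` would require a reachable registry and a valid OAuth token.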

6.2. Geo-replication

Geo-replication allows multiple, geographically distributed Red Hat Quay deployments to work as a single registry from the perspective of a client or user. It significantly improves push and pull performance in a globally-distributed Red Hat Quay setup. Image data is asynchronously replicated in the background with transparent failover and redirect for clients.

Geo-replication is supported on both standalone and Operator deployments of Red Hat Quay.

6.2.1. Geo-replication features

- When geo-replication is configured, container image pushes will be written to the preferred storage engine for that Red Hat Quay instance. This is typically the nearest storage backend within the region.

- After the initial push, image data will be replicated in the background to other storage engines.

- The list of replication locations is configurable and those can be different storage backends.

- An image pull will always use the closest available storage engine, to maximize pull performance.

- If replication has not been completed yet, the pull will use the source storage backend instead.
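The pull behavior in the list above can be modeled with a small Python sketch. It is a simplified illustration, not Red Hat Quay's implementation; the storage engine names `usstorage` and `eustorage` and the function name are hypothetical.

```python
def choose_pull_location(blob_locations: set[str], preference: list[str]) -> str:
    """Pick the storage engine that serves a pull.

    blob_locations: engines that already hold a copy of the blob.
    preference: engine IDs for this instance, ordered nearest first.
    """
    for location in preference:
        if location in blob_locations:
            return location
    raise LookupError("blob is not present in any configured storage engine")


# An image is pushed through the US instance, so it lands in the US engine.
blob_locations = {"usstorage"}

# The EU instance prefers its local engine and falls back to the US engine.
eu_preference = ["eustorage", "usstorage"]

# Before background replication completes, the source engine is used.
before = choose_pull_location(blob_locations, eu_preference)

# After replication, the closest engine holds a copy and serves the pull.
blob_locations.add("eustorage")
after = choose_pull_location(blob_locations, eu_preference)

print(before, after)
```

The model shows why a pull never fails merely because replication is still in progress: it is served from the source storage engine, only more slowly.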

6.2.2. Geo-replication requirements and constraints

- In geo-replicated setups, Red Hat Quay requires that all regions are able to read and write to all other regions' object storage. Object storage must be geographically accessible by all other regions.

- In case of an object storage system failure of one geo-replicating site, that site’s Red Hat Quay deployment must be shut down so that clients are redirected to the remaining site with intact storage systems by a global load balancer. Otherwise, clients will experience pull and push failures.

- Red Hat Quay has no internal awareness of the health or availability of the connected object storage system. You must configure a global load balancer (LB) to monitor the health of your distributed system and to route traffic to different sites based on their storage status.

- To check the status of your geo-replication deployment, you must use the `/health/endtoend` checkpoint, which is used for global health monitoring. You must configure the redirect manually using the `/health/endtoend` endpoint. The `/health/instance` endpoint only checks local instance health.

- If the object storage system of one site becomes unavailable, there will be no automatic redirect to the remaining storage system, or systems, of the remaining site, or sites.

- Geo-replication is asynchronous. The permanent loss of a site incurs the loss of the data that has been saved in that site's object storage system but has not yet been replicated to the remaining sites at the time of failure.

- A single database, and therefore all metadata and Red Hat Quay configuration, is shared across all regions. Geo-replication does not replicate the database. In the event of an outage, Red Hat Quay with geo-replication enabled will not fail over to another database.

- A single Redis cache is shared across the entire Red Hat Quay setup and needs to be accessible by all Red Hat Quay pods.

- The exact same configuration should be used across all regions, with the exception of the storage backend, which can be configured explicitly using the `QUAY_DISTRIBUTED_STORAGE_PREFERENCE` environment variable.

- Geo-replication requires object storage in each region. It does not work with local storage.

- Each region must be able to access every storage engine in each region, which requires a network path.

- Alternatively, the storage proxy option can be used.

- The entire storage backend, for example, all blobs, is replicated. Repository mirroring, by contrast, can be limited to a repository, or an image.

- All Red Hat Quay instances must share the same entrypoint, typically through a load balancer.

- All Red Hat Quay instances must have the same set of superusers, as they are defined inside the common configuration file.

- Geo-replication requires your Clair configuration to be set to `unmanaged`. An unmanaged Clair database allows the Red Hat Quay Operator to work in a geo-replicated environment, where multiple instances of the Red Hat Quay Operator must communicate with the same database. For more information, see Advanced Clair configuration.

- Geo-replication requires SSL/TLS certificates and keys. For more information, see Proof of concept deployment using SSL/TLS certificates.

If the above requirements cannot be met, you should instead use two or more distinct Red Hat Quay deployments and take advantage of repository mirroring functions.
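The health monitoring requirement above can be sketched as a probe that a global load balancer, or a script feeding it, might run against each site. This is a minimal sketch under stated assumptions: it treats an HTTP 200 response from `/health/endtoend` as healthy and anything else as unhealthy, and the site names and URLs are placeholders. The example at the end uses a stub instead of real network calls.

```python
import urllib.error
import urllib.request
from typing import Callable


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for url, or 0 if the site is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (for example 503).
        return err.code
    except OSError:
        # Covers URLError, connection failures, and timeouts.
        return 0


def healthy_sites(sites: dict[str, str],
                  fetch: Callable[[str], int] = fetch_status) -> list[str]:
    """Return the names of sites whose end-to-end health check passes.

    Assumption: HTTP 200 means healthy; any other result means the
    site should be taken out of rotation.
    """
    return [
        name
        for name, base_url in sites.items()
        if fetch(base_url.rstrip("/") + "/health/endtoend") == 200
    ]


# Placeholder sites; a stub stands in for the network in this example.
sites = {"us": "https://quay-us.example.com", "eu": "https://quay-eu.example.com/"}
stub_responses = {
    "https://quay-us.example.com/health/endtoend": 503,
    "https://quay-eu.example.com/health/endtoend": 200,
}
in_rotation = healthy_sites(sites, fetch=lambda url: stub_responses.get(url, 0))
print(in_rotation)
```

Probing `/health/endtoend` rather than `/health/instance` matters here because only the end-to-end check is used for global health monitoring; the instance check reports local instance health only.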

6.2.3. Geo-replication using standalone Red Hat Quay

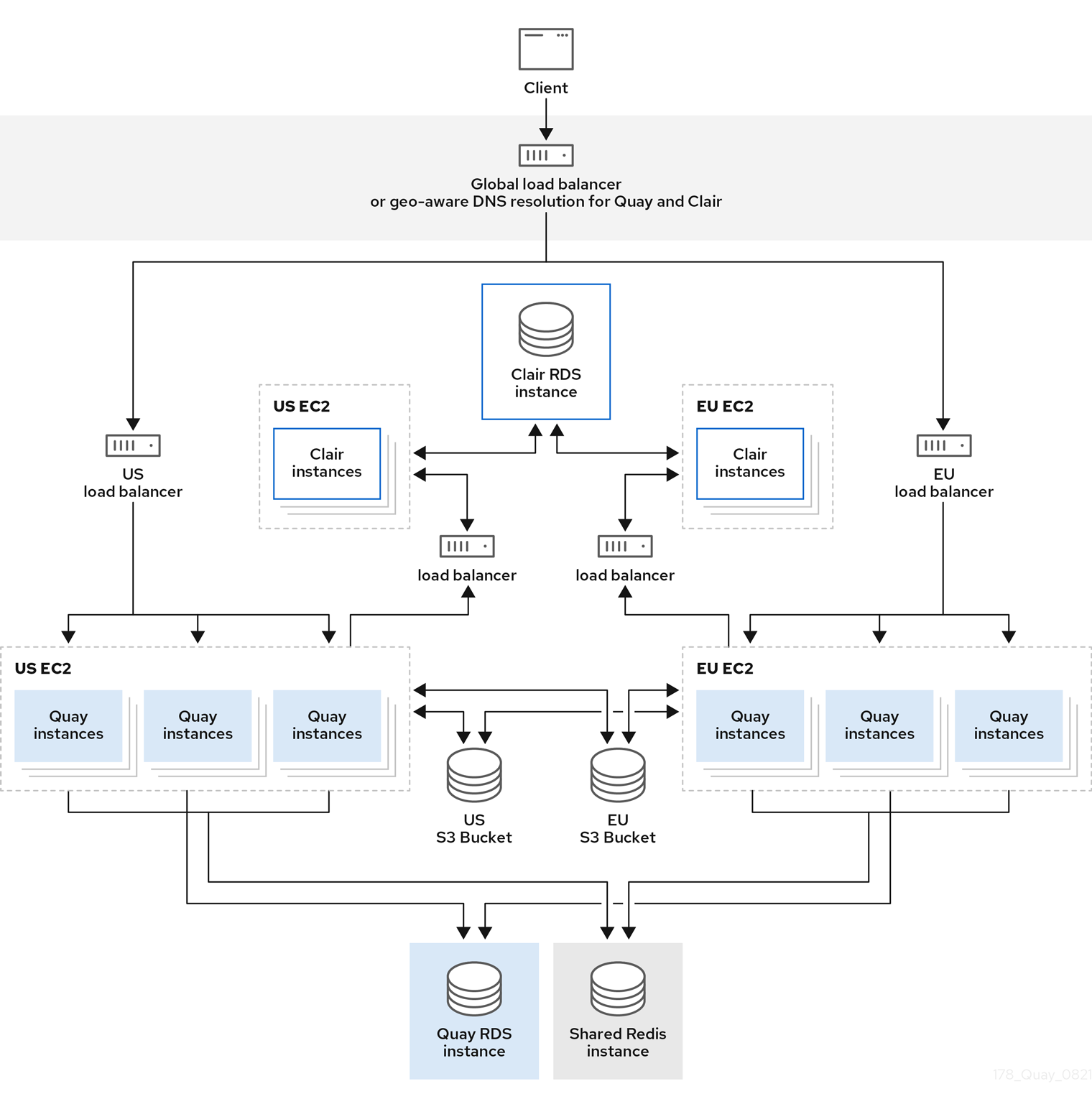

In the following image, Red Hat Quay is running standalone in two separate regions, with a common database and a common Redis instance. Localized image storage is provided in each region and image pulls are served from the closest available storage engine. Container image pushes are written to the preferred storage engine for the Red Hat Quay instance, and will then be replicated, in the background, to the other storage engines.

If Clair fails in one cluster, for example, the US cluster, US users would not see vulnerability reports in Red Hat Quay for the second cluster (EU). This is because all Clair instances have the same state. When Clair fails, it is usually because of a problem within the cluster.

Geo-replication architecture

6.2.4. Geo-replication using the Red Hat Quay Operator

In the example shown above, the Red Hat Quay Operator is deployed in two separate regions, with a common database and a common Redis instance. Localized image storage is provided in each region and image pulls are served from the closest available storage engine. Container image pushes are written to the preferred storage engine for the Quay instance, and will then be replicated, in the background, to the other storage engines.

Because the Operator now manages the Clair security scanner and its database separately, geo-replication setups can be leveraged so that they do not manage the Clair database. Instead, an external shared database would be used. Red Hat Quay and Clair support several providers and vendors of PostgreSQL, which can be found in the Red Hat Quay 3.x test matrix. Additionally, the Operator also supports custom Clair configurations that can be injected into the deployment, which allows users to configure Clair with the connection credentials for the external database.

6.2.5. Mixed storage for geo-replication

Red Hat Quay geo-replication supports the use of different and multiple replication targets, for example, using AWS S3 storage on public cloud and using Ceph storage on premises. This complicates the key requirement of granting access to all storage backends from all Red Hat Quay pods and cluster nodes. As a result, it is recommended that you use the following:

- A VPN to prevent visibility of the internal storage, or

- A token pair that only allows access to the specified bucket used by Red Hat Quay

This results in the public cloud instance of Red Hat Quay having access to on-premises storage, but the network will be encrypted, protected, and will use ACLs, thereby meeting security requirements.

If you cannot implement these security measures, it might be preferable to deploy two distinct Red Hat Quay registries and to use repository mirroring as an alternative to geo-replication.

6.3. Repository mirroring compared to geo-replication

Red Hat Quay geo-replication mirrors the entire image storage backend data between two or more different storage backends while the database is shared. An example is one Red Hat Quay registry with two different blob storage endpoints. The primary use cases for geo-replication include the following:

- Speeding up access to the binary blobs for geographically dispersed setups

- Guaranteeing that the image content is the same across regions

Repository mirroring synchronizes selected repositories, or subsets of repositories, from one registry to another. The registries are distinct, with each registry having a separate database and separate image storage.

The primary use cases for mirroring include the following:

- Independent registry deployments in different data centers or regions, where a certain subset of the overall content is supposed to be shared across the data centers and regions

- Automatic synchronization or mirroring of selected (allowlisted) upstream repositories from external registries into a local Red Hat Quay deployment

Repository mirroring and geo-replication can be used simultaneously.

| Feature / Capability | Geo-replication | Repository mirroring |
|---|---|---|
| What is the feature designed to do? | A shared, global registry | Distinct, different registries |
| What happens if replication or mirroring has not been completed yet? | The remote copy is used (slower) | No image is served |
| Is access to all storage backends in both regions required? | Yes (all Red Hat Quay nodes) | No (distinct storage) |
| Can users push images from both sites to the same repository? | Yes | No |
| Is all registry content and configuration identical across all regions (shared database)? | Yes | No |
| Can users select individual namespaces or repositories to be mirrored? | No | Yes |
| Can users apply filters to synchronization rules? | No | Yes |
| Are individual / different role-based access control configurations allowed in each region? | No | Yes |

6.4. Air-gapped or disconnected deployments

The following diagram shows how Red Hat Quay and Clair can be deployed in air-gapped or disconnected environments.

The upper deployment in the diagram shows Red Hat Quay and Clair connected to the internet, with an air-gapped OpenShift Container Platform cluster accessing the Red Hat Quay registry through an explicit, allowlisted hole in the firewall.

The lower deployment in the diagram shows Red Hat Quay and Clair running inside of the firewall, with image and CVE data transferred to the target system using offline media. The data is exported from a separate Red Hat Quay and Clair deployment that is connected to the internet.

Red Hat Quay and Clair in disconnected, or air-gapped, environments