Chapter 3. Clair security scanner

3.1. Clair vulnerability databases

Clair uses the following vulnerability databases to report issues in your images:

- Ubuntu OVAL database

- Debian Security Tracker

- Red Hat Enterprise Linux (RHEL) OVAL database

- SUSE OVAL database

- Oracle OVAL database

- Alpine SecDB database

- VMware Photon OS database

- Amazon Web Services (AWS) UpdateInfo

- Open Source Vulnerability (OSV) Database

For information about how Clair does security mapping with the different databases, see Claircore Severity Mapping.

3.1.1. Information about Open Source Vulnerability (OSV) database for Clair

Open Source Vulnerability (OSV) is a vulnerability database and monitoring service that focuses on tracking and managing security vulnerabilities in open source software.

OSV provides a comprehensive and up-to-date database of known security vulnerabilities in open source projects. It covers a wide range of open source software, including libraries, frameworks, and other components that are used in software development. For a full list of included ecosystems, see defined ecosystems.

Clair also reports vulnerability and security information for golang, java, and ruby ecosystems through the Open Source Vulnerability (OSV) database.

By leveraging OSV, developers and organizations can proactively monitor and address security vulnerabilities in open source components that they use, which helps to reduce the risk of security breaches and data compromises in projects.

For more information about OSV, see the OSV website.

3.2. Clair on OpenShift Container Platform

To set up Clair v4 (Clair) on a Red Hat Quay deployment on OpenShift Container Platform, it is recommended to use the Red Hat Quay Operator. By default, the Red Hat Quay Operator installs or upgrades a Clair deployment along with your Red Hat Quay deployment and configures Clair automatically.

3.3. Testing Clair

Use the following procedure to test Clair on either a standalone Red Hat Quay deployment, or on an OpenShift Container Platform Operator-based deployment.

Prerequisites

- You have deployed the Clair container image.

Procedure

Pull a sample image by entering the following command:

$ podman pull ubuntu:20.04

Tag the image to your registry by entering the following command:

$ sudo podman tag docker.io/library/ubuntu:20.04 <quay-server.example.com>/<user-name>/ubuntu:20.04

Push the image to your Red Hat Quay registry by entering the following command:

$ sudo podman push --tls-verify=false quay-server.example.com/quayadmin/ubuntu:20.04

- Log in to your Red Hat Quay deployment through the UI.

- Click the repository name, for example, quayadmin/ubuntu.

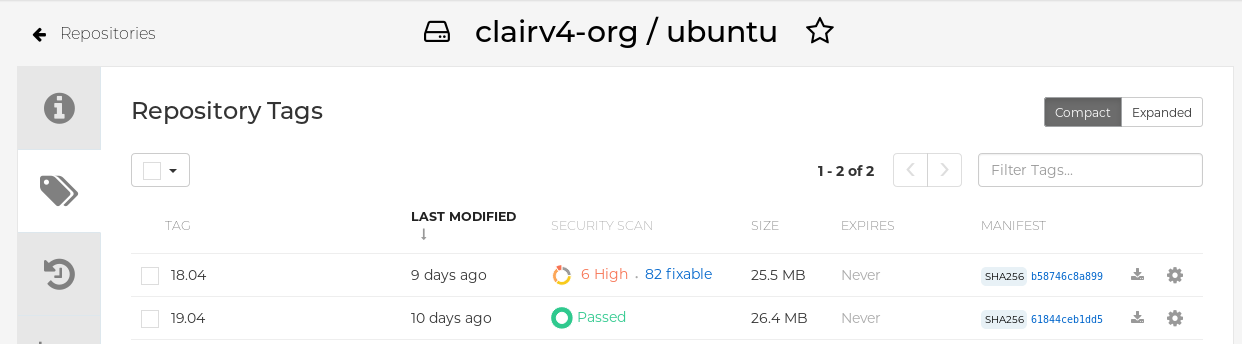

In the navigation pane, click Tags.

Report summary

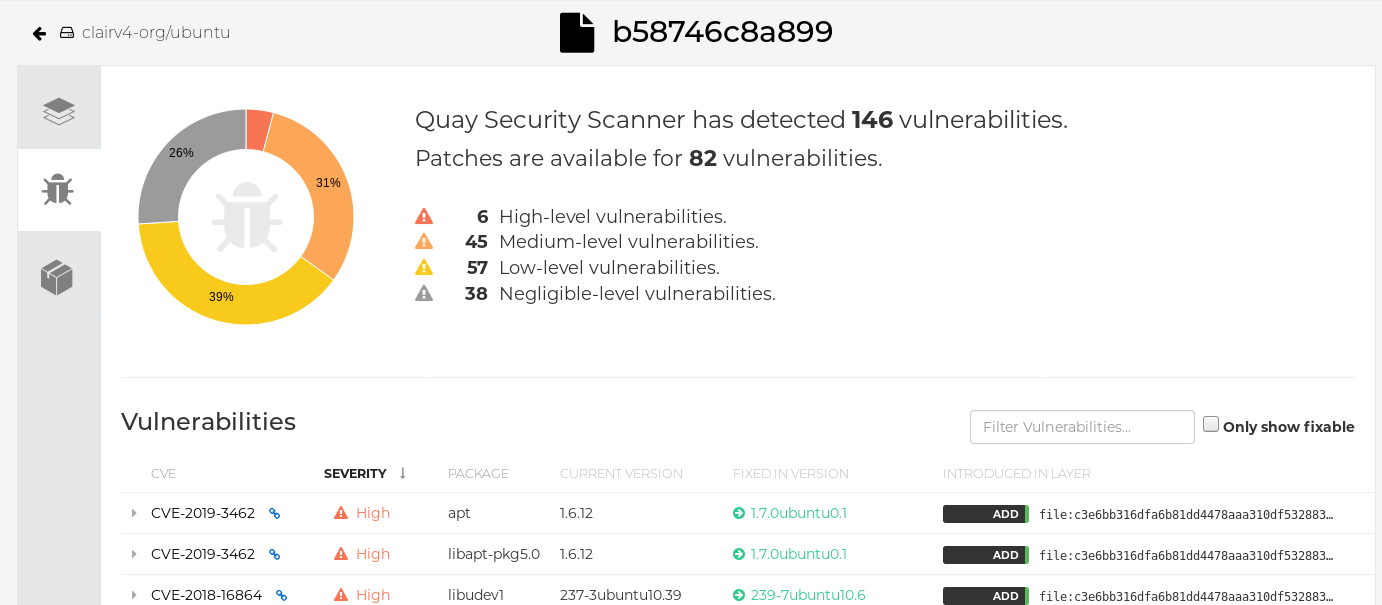

Click the image report, for example, 45 medium, to show a more detailed report:

Report details

Note

In some cases, Clair shows duplicate reports on images, for example, ubi8/nodejs-12 or ubi8/nodejs-16. This occurs because vulnerabilities with the same name are for different packages. This behavior is expected with Clair vulnerability reporting and will not be addressed as a bug.

3.4. Advanced Clair configuration

Use the procedures in the following sections to configure advanced Clair settings.

3.4.1. Unmanaged Clair configuration

Red Hat Quay users can run an unmanaged Clair configuration with the Red Hat Quay OpenShift Container Platform Operator. This feature allows users to create an unmanaged Clair database, or run their custom Clair configuration without an unmanaged database.

An unmanaged Clair database allows the Red Hat Quay Operator to work in a geo-replicated environment, where multiple instances of the Operator must communicate with the same database. An unmanaged Clair database can also be used when a user requires a highly-available (HA) Clair database that exists outside of a cluster.

3.4.1.1. Running a custom Clair configuration with an unmanaged Clair database

Use the following procedure to set your Clair database to unmanaged.

You must not use the same externally managed PostgreSQL database for both Red Hat Quay and Clair deployments. Your PostgreSQL database must also not be shared with other workloads, as it might exhaust the natural connection limit on the PostgreSQL side when connection-intensive workloads, like Red Hat Quay or Clair, contend for resources. Additionally, pgBouncer is not supported with Red Hat Quay or Clair, so it is not an option to resolve this issue.

Procedure

In the Quay Operator, set the clairpostgres component of the QuayRegistry custom resource to managed: false.
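As a minimal sketch of what this setting can look like, the shell function below writes a QuayRegistry manifest whose clairpostgres component carries the requested managed value. The function name, the output path, and the metadata values (example-registry, quay-enterprise) are illustrative assumptions. Only the clairpostgres entry is shown, on the assumption that the remaining components are already declared in your own resource.

```shell
#!/bin/sh
# Sketch: write a QuayRegistry manifest that sets the managed state of
# the Clair database component. Name and namespace are placeholders.
write_quayregistry() {
  out_file="$1"   # where to write the manifest
  managed="$2"    # "true" or "false" for the clairpostgres component
  cat > "$out_file" <<EOF
apiVersion: quay.redhat.com/v1
kind: QuayRegistry
metadata:
  name: example-registry
  namespace: quay-enterprise
spec:
  configBundleSecret: config-bundle-secret
  components:
    - kind: clairpostgres
      managed: ${managed}
EOF
}

# Usage:
#   write_quayregistry quayregistry.yaml false
```

The generated file is meant for review. Merge the clairpostgres entry into your existing QuayRegistry resource rather than replacing the whole components list.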

3.4.1.2. Configuring a custom Clair database with an unmanaged Clair database

Red Hat Quay on OpenShift Container Platform allows users to provide their own Clair database.

Use the following procedure to create a custom Clair database.

The following procedure sets up Clair with SSL/TLS certificates. To view a similar procedure that does not set up Clair with SSL/TLS certificates, see "Configuring a custom Clair database with a managed Clair configuration".

Procedure

Create a Quay configuration bundle secret that includes the clair-config.yaml by entering the following command:

$ oc create secret generic --from-file config.yaml=./config.yaml --from-file extra_ca_cert_rds-ca-2019-root.pem=./rds-ca-2019-root.pem --from-file clair-config.yaml=./clair-config.yaml --from-file ssl.cert=./ssl.cert --from-file ssl.key=./ssl.key config-bundle-secret

Note

- The database certificate is mounted under /run/certs/rds-ca-2019-root.pem on the Clair application pod in the clair-config.yaml. It must be specified when configuring your clair-config.yaml.

- An example clair-config.yaml can be found at Clair on OpenShift config.

Add the clair-config.yaml file to your bundle secret.

Note

When updated, the provided clair-config.yaml file is mounted into the Clair pod. Any fields not provided are automatically populated with defaults using the Clair configuration module.

You can check the status of your Clair pod by clicking the commit in the Build History page, or by running oc get pods -n <namespace>. For example:

$ oc get pods -n <namespace>

Example output

NAME                                               READY   STATUS    RESTARTS   AGE
f192fe4a-c802-4275-bcce-d2031e635126-9l2b5-25lg2   1/1     Running   0          7s
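The status check above can also be scripted. The following is a minimal sketch, assuming the default oc get pods column order (NAME, READY, STATUS, RESTARTS, AGE); the function name pods_not_running is hypothetical.

```shell
#!/bin/sh
# Sketch: read `oc get pods` output on stdin and print the names of pods
# whose STATUS column is anything other than "Running".
# Assumes the default column order NAME READY STATUS RESTARTS AGE.
pods_not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1 }'
}

# Usage (assumes a logged-in oc session):
#   oc get pods -n <namespace> | pods_not_running
```

An empty result means every listed pod reports Running. Pods in other states, including Completed, are printed by name.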

3.4.2. Running a custom Clair configuration with a managed Clair database

In some cases, users might want to run a custom Clair configuration with a managed Clair database. This is useful in the following scenarios:

- When a user wants to disable specific updater resources.

- When a user is running Red Hat Quay in a disconnected environment. For more information about running Clair in a disconnected environment, see Clair in disconnected environments.

Note

- If you are running Red Hat Quay in a disconnected environment, the airgap parameter of your clair-config.yaml must be set to True.

- If you are running Red Hat Quay in a disconnected environment, you should disable all updater components.

3.4.2.1. Setting a Clair database to managed

Use the following procedure to set your Clair database to managed.

Procedure

In the Quay Operator, set the clairpostgres component of the QuayRegistry custom resource to managed: true.

3.4.2.2. Configuring a custom Clair database with a managed Clair configuration

Red Hat Quay on OpenShift Container Platform allows users to provide their own Clair database.

Use the following procedure to create a custom Clair database.

Procedure

Create a Quay configuration bundle secret that includes the clair-config.yaml by entering the following command:

$ oc create secret generic --from-file config.yaml=./config.yaml --from-file extra_ca_cert_rds-ca-2019-root.pem=./rds-ca-2019-root.pem --from-file clair-config.yaml=./clair-config.yaml config-bundle-secret

Note

- The database certificate is mounted under /run/certs/rds-ca-2019-root.pem on the Clair application pod in the clair-config.yaml. It must be specified when configuring your clair-config.yaml.

- An example clair-config.yaml can be found at Clair on OpenShift config.

Add the clair-config.yaml file to your bundle secret.

Note

When updated, the provided clair-config.yaml file is mounted into the Clair pod. Any fields not provided are automatically populated with defaults using the Clair configuration module.

You can check the status of your Clair pod by clicking the commit in the Build History page, or by running oc get pods -n <namespace>. For example:

$ oc get pods -n <namespace>

Example output

NAME                                               READY   STATUS    RESTARTS   AGE
f192fe4a-c802-4275-bcce-d2031e635126-9l2b5-25lg2   1/1     Running   0          7s

3.4.3. Clair in disconnected environments

Currently, deploying Clair in disconnected environments is not supported on IBM Power and IBM Z.

Clair uses a set of components called updaters to handle the fetching and parsing of data from various vulnerability databases. Updaters are set up by default to pull vulnerability data directly from the internet and work for immediate use. However, some users might require Red Hat Quay to run in a disconnected environment, or an environment without direct access to the internet. Clair supports disconnected environments by working with different types of update workflows that take network isolation into consideration. The clairctl command line interface tool supports this workflow: updater data is obtained from the internet by using an open host, securely transferred to an isolated host, and then imported into Clair on the isolated host.

Use this guide to deploy Clair in a disconnected environment.

Currently, Clair enrichment data is CVSS data. Enrichment data is currently unsupported in disconnected environments.

For more information about Clair updaters, see "Clair updaters".

3.4.3.1. Setting up Clair in a disconnected OpenShift Container Platform cluster

Use the following procedures to set up an OpenShift Container Platform provisioned Clair pod in a disconnected OpenShift Container Platform cluster.

3.4.3.1.1. Installing the clairctl command line utility tool for OpenShift Container Platform deployments

Use the following procedure to install the clairctl CLI tool for OpenShift Container Platform deployments.

Procedure

Install the clairctl program for a Clair deployment in an OpenShift Container Platform cluster by entering the following command:

$ oc -n quay-enterprise exec example-registry-clair-app-64dd48f866-6ptgw -- cat /usr/bin/clairctl > clairctl

Note

Unofficially, the clairctl tool can be downloaded.

Set the permissions of the clairctl file so that it can be executed and run by the user, for example:

$ chmod u+x ./clairctl

3.4.3.1.2. Retrieving and decoding the Clair configuration secret for Clair deployments on OpenShift Container Platform

Use the following procedure to retrieve and decode the configuration secret for an OpenShift Container Platform provisioned Clair instance on OpenShift Container Platform.

Prerequisites

- You have installed the clairctl command line utility tool.

Procedure

Enter the following command to retrieve and decode the configuration secret, and then save it to a Clair configuration YAML file:

$ oc get secret -n quay-enterprise example-registry-clair-config-secret -o "jsonpath={$.data['config\.yaml']}" | base64 -d > clair-config.yaml

Update the clair-config.yaml file so that the disable_updaters and airgap parameters are set to True.
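After editing the file, a quick text check can confirm that both parameters are set. The following is a minimal sketch, not a YAML parser: it only looks for lines of the form airgap: true and disable_updaters: true (case-insensitive) and does not verify where in the file they are nested. The function name is_airgap_ready is hypothetical.

```shell
#!/bin/sh
# Sketch: succeed only when a Clair configuration file contains both
# "airgap: true" and "disable_updaters: true" (any letter case).
# This is a plain-text check and ignores the nesting of the keys.
is_airgap_ready() {
  cfg="$1"
  grep -Eiq '^[[:space:]]*airgap:[[:space:]]*true[[:space:]]*$' "$cfg" &&
  grep -Eiq '^[[:space:]]*disable_updaters:[[:space:]]*true[[:space:]]*$' "$cfg"
}

# Usage:
#   is_airgap_ready clair-config.yaml && echo "both parameters are set"
```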

3.4.3.1.3. Exporting the updaters bundle from a connected Clair instance

Use the following procedure to export the updaters bundle from a Clair instance that has access to the internet.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have retrieved and decoded the Clair configuration secret, and saved it to a Clair config.yaml file.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

Procedure

From a Clair instance that has access to the internet, use the clairctl CLI tool with your configuration file to export the updaters bundle. For example:

$ ./clairctl --config ./config.yaml export-updaters updates.gz
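Because the exported bundle is then moved to an isolated host, it can be useful to confirm that the file arrived unchanged. The following is a minimal sketch of one way to do that, assuming the GNU coreutils sha256sum command is available on both hosts. The function names are hypothetical, and this step is not part of the documented procedure.

```shell
#!/bin/sh
# Sketch: record a SHA-256 checksum next to the exported bundle, then
# verify it again after the bundle and its .sha256 file have been copied
# to the isolated host. Assumes GNU coreutils sha256sum.
record_checksum() {
  # Work from the bundle's directory so the recorded path stays relative.
  ( cd "$(dirname "$1")" && sha256sum "$(basename "$1")" > "$(basename "$1").sha256" )
}

verify_checksum() {
  # Returns non-zero if the bundle no longer matches the recorded checksum.
  ( cd "$(dirname "$1")" && sha256sum -c "$(basename "$1").sha256" >/dev/null 2>&1 )
}

# Usage:
#   record_checksum ./updates.gz      # on the connected host
#   verify_checksum ./updates.gz      # on the isolated host
```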

3.4.3.1.4. Configuring access to the Clair database in the disconnected OpenShift Container Platform cluster

Use the following procedure to configure access to the Clair database in your disconnected OpenShift Container Platform cluster.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have retrieved and decoded the Clair configuration secret, and saved it to a Clair config.yaml file.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

- You have exported the updaters bundle from a Clair instance that has access to the internet.

Procedure

Determine your Clair database service by using the oc CLI tool, for example:

$ oc get svc -n quay-enterprise

Example output

NAME                              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)           AGE
example-registry-clair-app        ClusterIP   172.30.224.93   <none>        80/TCP,8089/TCP   4d21h
example-registry-clair-postgres   ClusterIP   172.30.246.88   <none>        5432/TCP          4d21h
...

Forward the Clair database port so that it is accessible from the local machine. For example:

$ oc port-forward -n quay-enterprise service/example-registry-clair-postgres 5432:5432

Update your Clair config.yaml file as follows:

- Replace the value of the host in the multiple connstring fields with localhost.

- For more information about the rhel-repository-scanner parameter, see "Mapping repositories to Common Product Enumeration information".

- For more information about the rhel_containerscanner parameter, see "Mapping repositories to Common Product Enumeration information".
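The host replacement can be sketched with sed. This example assumes that each connection string is written in key/value form on a single line, such as connstring: host=<name> port=5432 ..., which may not match every configuration. The function name connstrings_to_localhost is hypothetical.

```shell
#!/bin/sh
# Sketch: print a copy of a Clair configuration with the host value in
# every connstring line replaced by localhost. Assumes key/value
# connection strings of the form "connstring: host=<name> port=5432 ...".
connstrings_to_localhost() {
  sed -E 's/^([[:space:]]*connstring:.*host=)[^[:space:]]+/\1localhost/' "$1"
}

# Usage:
#   connstrings_to_localhost clair-config.yaml > clair-config.local.yaml
```

Writing to a new file instead of editing in place keeps the original configuration available for comparison.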

3.4.3.1.5. Importing the updaters bundle into the disconnected OpenShift Container Platform cluster

Use the following procedure to import the updaters bundle into your disconnected OpenShift Container Platform cluster.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have retrieved and decoded the Clair configuration secret, and saved it to a Clair config.yaml file.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

- You have exported the updaters bundle from a Clair instance that has access to the internet.

- You have transferred the updaters bundle into your disconnected environment.

Procedure

Use the clairctl CLI tool to import the updaters bundle into the Clair database that is deployed by OpenShift Container Platform. For example:

$ ./clairctl --config ./clair-config.yaml import-updaters updates.gz

3.4.3.2. Setting up a self-managed deployment of Clair for a disconnected OpenShift Container Platform cluster

Use the following procedures to set up a self-managed deployment of Clair for a disconnected OpenShift Container Platform cluster.

3.4.3.2.1. Installing the clairctl command line utility tool for a self-managed Clair deployment on OpenShift Container Platform

Use the following procedure to install the clairctl CLI tool for self-managed Clair deployments on OpenShift Container Platform.

Procedure

Install the clairctl program for a self-managed Clair deployment by using the podman cp command, for example:

$ sudo podman cp clairv4:/usr/bin/clairctl ./clairctl

Set the permissions of the clairctl file so that it can be executed and run by the user, for example:

$ chmod u+x ./clairctl

3.4.3.2.2. Deploying a self-managed Clair container for disconnected OpenShift Container Platform clusters

Use the following procedure to deploy a self-managed Clair container for disconnected OpenShift Container Platform clusters.

Prerequisites

- You have installed the clairctl command line utility tool.

Procedure

Create a folder for your Clair configuration file, for example:

$ mkdir /etc/clairv4/config/

Create a Clair configuration file with the disable_updaters parameter set to True.

Start Clair by using the container image, mounting in the configuration from the file you created.

3.4.3.2.3. Exporting the updaters bundle from a connected Clair instance

Use the following procedure to export the updaters bundle from a Clair instance that has access to the internet.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have deployed Clair.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

Procedure

From a Clair instance that has access to the internet, use the clairctl CLI tool with your configuration file to export the updaters bundle. For example:

$ ./clairctl --config ./config.yaml export-updaters updates.gz

3.4.3.2.4. Configuring access to the Clair database in the disconnected OpenShift Container Platform cluster

Use the following procedure to configure access to the Clair database in your disconnected OpenShift Container Platform cluster.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have deployed Clair.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

- You have exported the updaters bundle from a Clair instance that has access to the internet.

Procedure

Determine your Clair database service by using the oc CLI tool, for example:

$ oc get svc -n quay-enterprise

Example output

NAME                              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)           AGE
example-registry-clair-app        ClusterIP   172.30.224.93   <none>        80/TCP,8089/TCP   4d21h
example-registry-clair-postgres   ClusterIP   172.30.246.88   <none>        5432/TCP          4d21h
...

Forward the Clair database port so that it is accessible from the local machine. For example:

$ oc port-forward -n quay-enterprise service/example-registry-clair-postgres 5432:5432

Update your Clair config.yaml file as follows:

- Replace the value of the host in the multiple connstring fields with localhost.

- For more information about the rhel-repository-scanner parameter, see "Mapping repositories to Common Product Enumeration information".

- For more information about the rhel_containerscanner parameter, see "Mapping repositories to Common Product Enumeration information".

3.4.3.2.5. Importing the updaters bundle into the disconnected OpenShift Container Platform cluster

Use the following procedure to import the updaters bundle into your disconnected OpenShift Container Platform cluster.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have deployed Clair.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

- You have exported the updaters bundle from a Clair instance that has access to the internet.

- You have transferred the updaters bundle into your disconnected environment.

Procedure

Use the clairctl CLI tool to import the updaters bundle into the Clair database that is deployed by OpenShift Container Platform:

$ ./clairctl --config ./clair-config.yaml import-updaters updates.gz

3.4.4. Mapping repositories to Common Product Enumeration information

Currently, mapping repositories to Common Product Enumeration information is not supported on IBM Power and IBM Z.

Clair’s Red Hat Enterprise Linux (RHEL) scanner relies on a Common Product Enumeration (CPE) file to map RPM packages to the corresponding security data to produce matching results. These files are owned by product security and updated daily.

The CPE file must be present, or access to the file must be allowed, for the scanner to properly process RPM packages. If the file is not present, RPM packages installed in the container image will not be scanned.

| CPE | Link to JSON mapping file |
|---|---|
| | |
| | |

In addition to uploading CVE information to the database for disconnected Clair installations, you must also make the mapping file available locally:

- For standalone Red Hat Quay and Clair deployments, the mapping file must be loaded into the Clair pod.

- For Red Hat Quay on OpenShift Container Platform deployments, you must set the Clair component to unmanaged. Then, Clair must be deployed manually, setting the configuration to load a local copy of the mapping file.

3.4.4.1. Mapping repositories to Common Product Enumeration example configuration

Use the repo2cpe_mapping_file and name2repos_mapping_file fields in your Clair configuration to include the CPE JSON mapping files.
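As a rough sketch, the function below writes a configuration fragment that sets these two fields. The nesting under indexer and scanner, with rhel-repository-scanner as a repo scanner and rhel_containerscanner as a package scanner, is an assumption about the Clair configuration layout and should be checked against your Clair version. The function name and the file paths passed to it are hypothetical.

```shell
#!/bin/sh
# Sketch: write a Clair configuration fragment that points the RHEL
# scanners at local copies of the CPE mapping files. The key nesting is
# an assumed layout; the two paths are placeholders from the caller.
write_cpe_mapping_fragment() {
  out_file="$1"     # where to write the fragment
  repo2cpe="$2"     # local path to the repository-to-CPE JSON file
  name2repos="$3"   # local path to the name-to-repositories JSON file
  cat > "$out_file" <<EOF
indexer:
  scanner:
    repo:
      rhel-repository-scanner:
        repo2cpe_mapping_file: ${repo2cpe}
    package:
      rhel_containerscanner:
        name2repos_mapping_file: ${name2repos}
EOF
}

# Usage:
#   write_cpe_mapping_fragment cpe-fragment.yaml /data/cpe-map.json /data/repo-map.json
```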

For more information, see How to accurately match OVAL security data to installed RPMs.

3.5. Resizing Managed Storage

When deploying Red Hat Quay on OpenShift Container Platform, three distinct persistent volume claims (PVCs) are deployed:

- One for the PostgreSQL 13 registry.

- One for the Clair PostgreSQL 13 registry.

- One that uses NooBaa as a backend storage.

The connection between Red Hat Quay and NooBaa is done through the S3 API and ObjectBucketClaim API in OpenShift Container Platform. Red Hat Quay leverages that API group to create a bucket in NooBaa, obtain access keys, and automatically set everything up. On the backend (NooBaa) side, that bucket is created inside the backing store. As a result, NooBaa PVCs are not mounted or connected to Red Hat Quay pods.

The default size for the PostgreSQL 13 and Clair PostgreSQL 13 PVCs is set to 50 GiB. You can expand storage for these PVCs on the OpenShift Container Platform console by using the following procedure.

The following procedure shares commonality with Expanding Persistent Volume Claims on Red Hat OpenShift Data Foundation.

3.5.1. Resizing PostgreSQL 13 PVCs on Red Hat Quay

Use the following procedure to resize the PostgreSQL 13 and Clair PostgreSQL 13 PVCs.

Prerequisites

- You have cluster admin privileges on OpenShift Container Platform.

Procedure

- Log in to the OpenShift Container Platform console and select Storage → Persistent Volume Claims.

- Select the desired PersistentVolumeClaim for either PostgreSQL 13 or Clair PostgreSQL 13, for example, example-registry-quay-postgres-13.

- From the Action menu, select Expand PVC.

- Enter the new size of the Persistent Volume Claim and select Expand.

After a few minutes, the expanded size is reflected in the PVC’s Capacity field.

3.6. Customizing Default Operator Images

Currently, customizing default Operator images is not supported on IBM Power and IBM Z.

In certain circumstances, it might be useful to override the default images used by the Red Hat Quay Operator. This can be done by setting one or more environment variables in the Red Hat Quay Operator ClusterServiceVersion.

Using this mechanism is not supported for production Red Hat Quay environments and is strongly encouraged only for development or testing purposes. There is no guarantee your deployment will work correctly when using non-default images with the Red Hat Quay Operator.

3.6.1. Environment Variables

The following environment variables are used in the Red Hat Quay Operator to override component images:

| Environment Variable | Component |
|---|---|
| | |
| | |
| | |
| | |

Overridden images must be referenced by manifest (@sha256:) and not by tag (:latest).
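A quick way to catch tag-based references before applying an override is to test the reference string itself. The following is a minimal sketch: the function name is_digest_ref and the image names in the usage comment are hypothetical, and the check only looks for a trailing @sha256: followed by 64 lowercase hexadecimal characters.

```shell
#!/bin/sh
# Sketch: succeed only when an image reference is pinned by manifest
# digest (@sha256:<64 hex characters>) rather than by tag.
is_digest_ref() {
  printf '%s\n' "$1" | grep -Eq '@sha256:[0-9a-f]{64}$'
}

# Usage:
#   is_digest_ref "quay.io/example/quay@sha256:<digest>" || echo "not pinned by digest"
#   is_digest_ref "quay.io/example/quay:latest"          || echo "not pinned by digest"
```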

3.6.2. Applying overrides to a running Operator

When the Red Hat Quay Operator is installed in a cluster through the Operator Lifecycle Manager (OLM), the managed component container images can be easily overridden by modifying the ClusterServiceVersion object.

Use the following procedure to apply overrides to a running Red Hat Quay Operator.

Procedure

The ClusterServiceVersion object is Operator Lifecycle Manager’s representation of a running Operator in the cluster. Find the Red Hat Quay Operator’s ClusterServiceVersion by using a Kubernetes UI or the kubectl/oc CLI tool. For example:

$ oc get clusterserviceversions -n <your-namespace>

Using the UI, oc edit, or another method, modify the Red Hat Quay ClusterServiceVersion to include the environment variables outlined above to point to the override images:

JSONPath: spec.install.spec.deployments[0].spec.template.spec.containers[0].env

This is done at the Operator level, so every QuayRegistry will be deployed using these same overrides.

3.7. AWS S3 CloudFront

Currently, using AWS S3 CloudFront is not supported on IBM Power and IBM Z.

Use the following procedure if you are using AWS S3 CloudFront for your backend registry storage.

Procedure

Enter the following command to specify the registry key:

$ oc create secret generic --from-file config.yaml=./config_awss3cloudfront.yaml --from-file default-cloudfront-signing-key.pem=./default-cloudfront-signing-key.pem test-config-bundle