This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Chapter 5. Developing Operators

5.1. About the Operator SDK

The Operator Framework is an open source toolkit to manage Kubernetes native applications, called Operators, in an effective, automated, and scalable way. Operators take advantage of Kubernetes extensibility to deliver the automation advantages of cloud services, like provisioning, scaling, and backup and restore, while being able to run anywhere that Kubernetes can run.

Operators make it easy to manage complex, stateful applications on top of Kubernetes. However, writing an Operator today can be difficult because of challenges such as using low-level APIs, writing boilerplate, and a lack of modularity, which leads to duplication.

The Operator SDK, a component of the Operator Framework, provides a command-line interface (CLI) tool that Operator developers can use to build, test, and deploy an Operator.

Why use the Operator SDK?

The Operator SDK simplifies this process of building Kubernetes-native applications, which can require deep, application-specific operational knowledge. The Operator SDK not only lowers that barrier, but it also helps reduce the amount of boilerplate code required for many common management capabilities, such as metering or monitoring.

The Operator SDK is a framework that uses the controller-runtime library to make writing Operators easier by providing the following features:

- High-level APIs and abstractions to write the operational logic more intuitively

- Tools for scaffolding and code generation to quickly bootstrap a new project

- Integration with Operator Lifecycle Manager (OLM) to streamline packaging, installing, and running Operators on a cluster

- Extensions to cover common Operator use cases

- Metrics set up automatically in any generated Go-based Operator for use on clusters where the Prometheus Operator is deployed

Operator authors with cluster administrator access to a Kubernetes-based cluster (such as OpenShift Container Platform) can use the Operator SDK CLI to develop their own Operators based on Go, Ansible, or Helm. Kubebuilder is embedded into the Operator SDK as the scaffolding solution for Go-based Operators, which means existing Kubebuilder projects can be used as is with the Operator SDK and continue to work.

OpenShift Container Platform 4.8 supports Operator SDK v1.8.0 or later.

5.1.1. What are Operators?

For an overview of basic Operator concepts and terminology, see Understanding Operators.

5.1.2. Development workflow

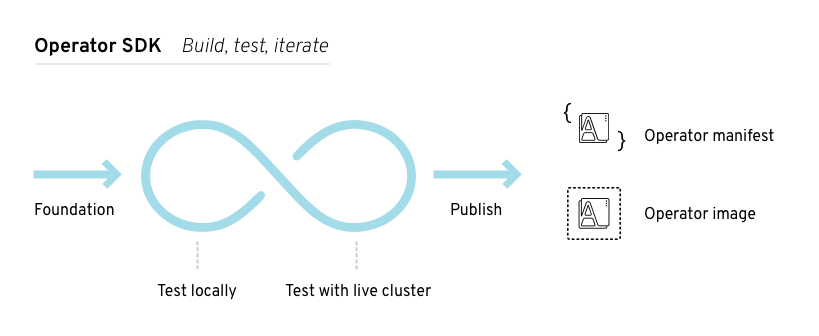

The Operator SDK provides the following workflow to develop a new Operator:

- Create an Operator project by using the Operator SDK command-line interface (CLI).

- Define new resource APIs by adding custom resource definitions (CRDs).

- Specify resources to watch by using the Operator SDK API.

- Define the Operator reconciling logic in a designated handler and use the Operator SDK API to interact with resources.

- Use the Operator SDK CLI to build and generate the Operator deployment manifests.

Figure 5.1. Operator SDK workflow

At a high level, an Operator that uses the Operator SDK processes events for watched resources in an Operator author-defined handler and takes actions to reconcile the state of the application.
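The reconcile idea described above can be modeled without any cluster dependencies. The following Go sketch is hypothetical and uses only the standard library. `desiredState` and `observedState` stand in for a custom resource spec and the live cluster state, and `reconcile` returns the actions a handler would take to converge them. It is not the controller-runtime `Reconcile` API, which reads and writes resources through a client against the API server.

```go
package main

import "fmt"

// desiredState is a hypothetical stand-in for a custom resource's spec.
type desiredState struct {
	Replicas int
}

// observedState is a hypothetical stand-in for what is running in the cluster.
type observedState struct {
	Replicas int
}

// reconcile compares desired and observed state and returns the actions
// needed to converge them. Once the actions are applied, calling it again
// returns no actions, which is what makes a reconcile loop idempotent.
func reconcile(want desiredState, got observedState) []string {
	var actions []string
	switch {
	case got.Replicas < want.Replicas:
		actions = append(actions, fmt.Sprintf("scale up by %d", want.Replicas-got.Replicas))
	case got.Replicas > want.Replicas:
		actions = append(actions, fmt.Sprintf("scale down by %d", got.Replicas-want.Replicas))
	}
	return actions
}

func main() {
	want := desiredState{Replicas: 3}
	got := observedState{Replicas: 1}
	fmt.Println(reconcile(want, got)) // [scale up by 2]

	// After the action is applied, the states match and nothing is left to do.
	got.Replicas = 3
	fmt.Println(len(reconcile(want, got))) // 0
}
```

Because the function returns actions instead of performing them, the comparison logic stays easy to test in isolation. A real handler would also be re-run on every watched event, so the "no actions when converged" property matters.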

5.2. Installing the Operator SDK CLI

The Operator SDK provides a command-line interface (CLI) tool that Operator developers can use to build, test, and deploy an Operator. You can install the Operator SDK CLI on your workstation so that you are prepared to start authoring your own Operators.

OpenShift Container Platform 4.8 supports Operator SDK v1.8.0.

5.2.1. Installing the Operator SDK CLI

You can install the Operator SDK CLI tool on Linux.

Prerequisites

- Go v1.16+

- docker v17.03+, podman v1.9.3+, or buildah v1.7+

Procedure

- Navigate to the OpenShift mirror site.

- From the 4.8.4 directory, download the latest version of the tarball for Linux.

- Unpack the archive:

$ tar xvf operator-sdk-v1.8.0-ocp-linux-x86_64.tar.gz

- Make the file executable:

$ chmod +x operator-sdk

- Move the extracted operator-sdk binary to a directory that is on your PATH:

$ sudo mv ./operator-sdk /usr/local/bin/operator-sdk

Tip: To check your PATH, run:

$ echo $PATH

Verification

After you install the Operator SDK CLI, verify that it is available:

$ operator-sdk version

Example output

operator-sdk version: "v1.8.0-ocp", ...
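If you script the installation, you might want to confirm that the binary on your PATH meets the minimum supported version. The following Go sketch parses the version from output shaped like the example above and compares it with v1.8.0. The output format is assumed from that single example. `parseVersion` and `atLeast` are hypothetical helpers, not part of the Operator SDK.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// versionRe captures major, minor, and patch from a line such as
// `operator-sdk version: "v1.8.0-ocp", ...`. Suffixes such as -ocp are ignored.
var versionRe = regexp.MustCompile(`operator-sdk version: "v(\d+)\.(\d+)\.(\d+)`)

// parseVersion returns [major, minor, patch], or an error if the output does
// not match the assumed format.
func parseVersion(out string) ([3]int, error) {
	var v [3]int
	m := versionRe.FindStringSubmatch(out)
	if m == nil {
		return v, fmt.Errorf("unrecognized version output: %q", out)
	}
	for i := 0; i < 3; i++ {
		n, err := strconv.Atoi(m[i+1])
		if err != nil {
			return v, err
		}
		v[i] = n
	}
	return v, nil
}

// atLeast reports whether v is greater than or equal to want,
// comparing major, then minor, then patch.
func atLeast(v, want [3]int) bool {
	for i := 0; i < 3; i++ {
		if v[i] != want[i] {
			return v[i] > want[i]
		}
	}
	return true
}

func main() {
	v, err := parseVersion(`operator-sdk version: "v1.8.0-ocp", ...`)
	if err != nil {
		panic(err)
	}
	fmt.Println(v, atLeast(v, [3]int{1, 8, 0})) // [1 8 0] true
}
```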

5.3. Upgrading projects for newer Operator SDK versions

OpenShift Container Platform 4.8 supports Operator SDK v1.8.0. If you already have the v1.3.0 CLI installed on your workstation, you can upgrade the CLI to v1.8.0 by installing the latest version.

However, to ensure your existing Operator projects maintain compatibility with Operator SDK v1.8.0, upgrade steps are required for the associated breaking changes introduced since v1.3.0. You must perform the upgrade steps manually in any of your Operator projects that were previously created or maintained with v1.3.0.

5.3.1. Upgrading projects for Operator SDK v1.8.0

The following upgrade steps must be performed to upgrade an existing Operator project for compatibility with v1.8.0.

Prerequisites

- Operator SDK v1.8.0 installed

- Operator project that was previously created or maintained with Operator SDK v1.3.0

Procedure

Make the following changes to your PROJECT file:

- Update the PROJECT file plugins object to use manifests and scorecard objects.

The manifests and scorecard plug-ins that create Operator Lifecycle Manager (OLM) and scorecard manifests now have plug-in objects for running create subcommands to create related files.

For Go-based Operator projects, an existing Go-based plug-in configuration object is already present. While the old configuration is still supported, these new objects will be useful in the future as configuration options are added to their respective plug-ins:

Old configuration

version: 3-alpha
...
plugins:
  go.sdk.operatorframework.io/v2-alpha: {}

New configuration

version: 3-alpha
...
plugins:
  manifests.sdk.operatorframework.io/v2: {}
  scorecard.sdk.operatorframework.io/v2: {}

Optional: For Ansible- and Helm-based Operator projects, the plug-in configuration object previously did not exist. While you are not required to add the plug-in configuration objects, these new objects will be useful in the future as configuration options are added to their respective plug-ins:

version: 3-alpha
...
plugins:
  manifests.sdk.operatorframework.io/v2: {}
  scorecard.sdk.operatorframework.io/v2: {}

- The PROJECT config version 3-alpha must be upgraded to 3. The version key in your PROJECT file represents the PROJECT config version:

Old PROJECT file

version: 3-alpha
resources:
- crdVersion: v1
  ...

Version 3-alpha has been stabilized as version 3 and contains a set of config fields sufficient to fully describe a project. While this change is not technically breaking because the spec at that version was alpha, it was used by default in operator-sdk commands, so it should be marked as breaking and have a convenient upgrade path.

- Run the alpha config-3alpha-to-3 command to convert most of your PROJECT file from version 3-alpha to 3:

$ operator-sdk alpha config-3alpha-to-3

Example output

Your PROJECT config file has been converted from version 3-alpha to 3. Please make sure all config data is correct.

The command also outputs comments with directions where automatic conversion is not possible.

- Verify the change:

New PROJECT file

version: "3"
resources:
- api:
    crdVersion: v1
  ...
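Before running the conversion command across several projects, you might want to find which PROJECT files still declare version 3-alpha. The following Go sketch is a hypothetical line-based check, not a YAML parser. It assumes the top-level version key starts at column 0, as in the examples above, and it strips the quotes that version 3 files use.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// projectVersion returns the value of the top-level version key in a PROJECT
// file, with surrounding quotes removed. Indented keys and list items are
// skipped because they do not start with "version:" at column 0.
func projectVersion(project string) (string, bool) {
	sc := bufio.NewScanner(strings.NewReader(project))
	for sc.Scan() {
		line := sc.Text()
		if strings.HasPrefix(line, "version:") {
			v := strings.TrimSpace(strings.TrimPrefix(line, "version:"))
			return strings.Trim(v, `"'`), true
		}
	}
	return "", false
}

// needsConversion reports whether the PROJECT file still uses version 3-alpha.
func needsConversion(project string) bool {
	v, ok := projectVersion(project)
	return ok && v == "3-alpha"
}

func main() {
	oldProject := "version: 3-alpha\nresources:\n- crdVersion: v1\n"
	newProject := "version: \"3\"\nresources:\n- api:\n    crdVersion: v1\n"
	fmt.Println(needsConversion(oldProject), needsConversion(newProject)) // true false
}
```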

Make the following changes to your config/manager/manager.yaml file:

- For Ansible- and Helm-based Operator projects, add liveness and readiness probes.

New projects built with the Operator SDK have the probes configured by default. The endpoints /healthz and /readyz are now available in the provided base image. You can update your existing projects to use the probes by updating the Dockerfile to use the latest base image, then adding the following to the manager container in the config/manager/manager.yaml file:

Example 5.1. Configuration for Ansible-based Operator projects

Example 5.2. Configuration for Helm-based Operator projects

- For Ansible- and Helm-based Operator projects, add security contexts to your manager’s deployment.

In the config/manager/manager.yaml file, add the following security contexts:

Example 5.3. config/manager/manager.yaml file

Make the following changes to your Makefile:

- For Ansible- and Helm-based Operator projects, update the helm-operator and ansible-operator URLs in the Makefile:

For Ansible-based Operator projects, change:

https://github.com/operator-framework/operator-sdk/releases/download/v1.3.0/ansible-operator-v1.3.0-$(ARCHOPER)-$(OSOPER)

to:

https://github.com/operator-framework/operator-sdk/releases/download/v1.8.0/ansible-operator_$(OS)_$(ARCH)

For Helm-based Operator projects, change:

https://github.com/operator-framework/operator-sdk/releases/download/v1.3.0/helm-operator-v1.3.0-$(ARCHOPER)-$(OSOPER)

to:

https://github.com/operator-framework/operator-sdk/releases/download/v1.8.0/helm-operator_$(OS)_$(ARCH)
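The new URLs follow a `<binary>_<os>_<arch>` naming scheme instead of the old `<binary>-<version>-<arch>-<os>` one. The following Go sketch composes a v1.8.0-style URL. `releaseURL` is a hypothetical helper. It only builds the string and does not check whether a release asset exists for a given OS and architecture pair. In the generated Makefile, OS and ARCH come from `go env GOOS` and `go env GOARCH`. Here, `runtime.GOOS` and `runtime.GOARCH` (the platform the program was built for) are used as a stand-in.

```go
package main

import (
	"fmt"
	"runtime"
)

// releaseURL builds a download URL using the v1.8.0 naming scheme:
// <binary>_<os>_<arch>, for example helm-operator_linux_amd64.
func releaseURL(version, binary, goos, goarch string) string {
	return fmt.Sprintf(
		"https://github.com/operator-framework/operator-sdk/releases/download/%s/%s_%s_%s",
		version, binary, goos, goarch)
}

func main() {
	// runtime.GOOS and runtime.GOARCH normally match `go env GOOS` and
	// `go env GOARCH` unless you are cross-compiling.
	fmt.Println(releaseURL("v1.8.0", "helm-operator", runtime.GOOS, runtime.GOARCH))
}
```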

- For Ansible- and Helm-based Operator projects, update the helm-operator, ansible-operator, and kustomize rules in the Makefile. These rules download a local binary but do not use it if a global binary is present:

Example 5.4. Makefile diff for Ansible-based Operator projects

Example 5.5. Makefile diff for Helm-based Operator projects

- Move the positional directory argument . in the make target for docker-build.

The directory argument . in the docker-build target was moved to the last positional argument to align with podman CLI expectations, which makes substitution cleaner:

Old target

docker-build:
	docker build . -t ${IMG}

New target

docker-build:
	docker build -t ${IMG} .

You can make this change by running the following command:

$ sed -i 's/docker build . -t ${IMG}/docker build -t ${IMG} ./' $(git grep -l 'docker.*build \. ')

- For Ansible- and Helm-based Operator projects, add a help target to the Makefile.

Ansible- and Helm-based projects now provide a help target in the Makefile by default, similar to a --help flag. You can manually add this target to your Makefile using the following lines:

Example 5.6. help target

- Add opm and catalog-build targets. You can use these targets to create your own catalogs for your Operator or add your Operator bundles to an existing catalog:

Add the targets to your Makefile by adding the following lines:

Example 5.7. opm and catalog-build targets

If you are updating a Go-based Operator project, also add the following Makefile variables:

Example 5.8. Makefile variables

OS = $(shell go env GOOS)
ARCH = $(shell go env GOARCH)

- For Go-based Operator projects, set the SHELL variable in your Makefile to the system bash binary.

Importing the setup-envtest.sh script requires bash, so the SHELL variable must be set to bash with error options:

Example 5.9. Makefile diff

- For Go-based Operator projects, upgrade controller-runtime to v0.8.3 and Kubernetes dependencies to v0.20.2 by changing the following entries in your go.mod file, then rebuild your project:

Example 5.10. go.mod file

...
k8s.io/api v0.20.2
k8s.io/apimachinery v0.20.2
k8s.io/client-go v0.20.2
sigs.k8s.io/controller-runtime v0.8.3

- Add a system:controller-manager service account to your project. A non-default service account, controller-manager, is now generated by the operator-sdk init command to improve security for Operators installed in shared namespaces. To add this service account to your existing project, follow these steps:

Create the ServiceAccount definition in a file:

Example 5.11. config/rbac/service_account.yaml file

apiVersion: v1
kind: ServiceAccount
metadata:
  name: controller-manager
  namespace: system

Add the service account to the list of RBAC resources:

$ echo "- service_account.yaml" >> config/rbac/kustomization.yaml

Update all RoleBinding and ClusterRoleBinding objects that reference the Operator’s service account. Quote the -name pattern so that your shell does not expand it before find runs:

$ find config/rbac -name '*_binding.yaml' -exec sed -i -E 's/ name: default/ name: controller-manager/g' {} \;

Add the service account name to the manager deployment’s spec.template.spec.serviceAccountName field:

$ sed -i -E 's/([ ]+)(terminationGracePeriodSeconds:)/\1serviceAccountName: controller-manager\n\1\2/g' config/manager/manager.yaml

Verify the changes look like the following diffs:

Example 5.12. config/manager/manager.yaml file diff

Example 5.13. config/rbac/auth_proxy_role_binding.yaml file diff

Example 5.14. config/rbac/kustomization.yaml file diff

 resources:
+- service_account.yaml
 - role.yaml
 - role_binding.yaml
 - leader_election_role.yaml

Example 5.15. config/rbac/leader_election_role_binding.yaml file diff

Example 5.16. config/rbac/role_binding.yaml file diff

Example 5.17. config/rbac/service_account.yaml file diff

+apiVersion: v1
+kind: ServiceAccount
+metadata:
+  name: controller-manager
+  namespace: system
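The sed command that adds serviceAccountName relies on a capture group. It captures the indentation in front of terminationGracePeriodSeconds and reuses it, so the new key lands at the same level in the pod spec. The following Go sketch performs the same edit with the standard regexp package, which can help when you want to preview the result. It is a hypothetical helper, not part of the Operator SDK, and it makes two deliberate changes from the sed version. First, the pattern is anchored to the start of a line. Second, it skips manifests that already contain serviceAccountName, so running it twice does not add a duplicate line.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// graceRe matches the indentation in front of terminationGracePeriodSeconds.
// (?m) makes ^ match at the start of every line, not only the whole string.
var graceRe = regexp.MustCompile(`(?m)^([ ]+)(terminationGracePeriodSeconds:)`)

// addServiceAccountName inserts "serviceAccountName: <name>" directly above
// terminationGracePeriodSeconds with the same indentation. The name must not
// contain '$', because ReplaceAllString expands $ in the template.
func addServiceAccountName(manifest, name string) string {
	// Skip manifests that already set a service account, so the edit is idempotent.
	if strings.Contains(manifest, "serviceAccountName:") {
		return manifest
	}
	return graceRe.ReplaceAllString(manifest, "${1}serviceAccountName: "+name+"\n${1}${2}")
}

func main() {
	in := "    spec:\n      containers:\n      - name: manager\n      terminationGracePeriodSeconds: 10\n"
	fmt.Print(addServiceAccountName(in, "controller-manager"))
}
```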

Make the following changes to your config/manifests/kustomization.yaml file:

- Add a Kustomize patch to remove the cert-manager volume and volumeMount objects from your cluster service version (CSV).

Because Operator Lifecycle Manager (OLM) does not yet support cert-manager, a JSON patch was added to remove this volume and mount so OLM can create and manage certificates for your Operator.

In the config/manifests/kustomization.yaml file, add the following lines:

Example 5.18. config/manifests/kustomization.yaml file

- Optional: For Ansible- and Helm-based Operator projects, configure ansible-operator and helm-operator with a component config. To add this option, follow these steps:

Create the following file:

Example 5.19. config/default/manager_config_patch.yaml file

Create the following file:

Example 5.20. config/manager/controller_manager_config.yaml file

Update the config/default/kustomization.yaml file by applying the following changes to resources:

Example 5.21. config/default/kustomization.yaml file

resources:
...
- manager_config_patch.yaml

Update the config/manager/kustomization.yaml file by applying the following changes:

Example 5.22. config/manager/kustomization.yaml file

Optional: Add a manager config patch to the config/default/kustomization.yaml file.

The generated --config flag was not added to either the ansible-operator or helm-operator binary when config file support was originally added, so it does not currently work. The --config flag supports configuration of both binaries by file; this method of configuration only applies to the underlying controller manager and not the Operator as a whole.

To optionally configure the Operator’s deployment with a config file, make changes to the config/default/kustomization.yaml file as shown in the following diff:

Example 5.23. config/default/kustomization.yaml file diff

 # If you want your controller-manager to expose the /metrics
 # endpoint w/o any authn/z, please comment the following line.
 - manager_auth_proxy_patch.yaml
+# Mount the controller config file for loading manager configurations
+# through a ComponentConfig type
+- manager_config_patch.yaml

Flags can be used as is or to override config file values.

For Ansible- and Helm-based Operator projects, add role rules for leader election by making the following changes to the config/rbac/leader_election_role.yaml file:

Example 5.24. config/rbac/leader_election_role.yaml file

For Ansible-based Operator projects, update Ansible collections.

In your requirements.yml file, change the version field for community.kubernetes to 1.2.1, and the version field for operator_sdk.util to 0.2.0.

Make the following changes to your config/default/manager_auth_proxy_patch.yaml file:

- For Ansible-based Operator projects, add the --health-probe-bind-address=:6789 argument to the config/default/manager_auth_proxy_patch.yaml file:

Example 5.25. config/default/manager_auth_proxy_patch.yaml file

- For Helm-based Operator projects:

  - Add the --health-probe-bind-address=:8081 argument to the config/default/manager_auth_proxy_patch.yaml file:

    Example 5.26. config/default/manager_auth_proxy_patch.yaml file

  - Replace the deprecated flag --enable-leader-election with --leader-elect, and the deprecated flag --metrics-addr with --metrics-bind-address.
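The flag renames above form a simple mapping, so they can be applied mechanically to a container args list. The following Go sketch is a hypothetical helper that rewrites the two deprecated flags listed above. It handles both the bare form (--flag) and the --flag=value form, and it leaves every other argument unchanged. The sample argument values are illustrative only.

```go
package main

import (
	"fmt"
	"strings"
)

// renamedFlags maps the deprecated manager flags to their replacements.
var renamedFlags = map[string]string{
	"--enable-leader-election": "--leader-elect",
	"--metrics-addr":           "--metrics-bind-address",
}

// migrateArgs returns a copy of args with deprecated flag names replaced.
// Any "=value" suffix is preserved; a value passed as a separate argument
// is also preserved because only the flag name itself is rewritten.
func migrateArgs(args []string) []string {
	out := make([]string, 0, len(args))
	for _, arg := range args {
		name, value := arg, ""
		if i := strings.Index(arg, "="); i >= 0 {
			name, value = arg[:i], arg[i:] // value keeps the leading "="
		}
		if repl, ok := renamedFlags[name]; ok {
			name = repl
		}
		out = append(out, name+value)
	}
	return out
}

func main() {
	args := []string{"--health-probe-bind-address=:8081", "--metrics-addr=127.0.0.1:8080", "--enable-leader-election"}
	fmt.Println(migrateArgs(args))
	// [--health-probe-bind-address=:8081 --metrics-bind-address=127.0.0.1:8080 --leader-elect]
}
```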

Make the following changes to your config/prometheus/monitor.yaml file:

- Add scheme, token, and TLS config to the Prometheus ServiceMonitor metrics endpoint.

The /metrics endpoint, while specifying the https port on the manager pod, was not actually configured to serve over HTTPS because no tlsConfig was set. Because kube-rbac-proxy secures this endpoint as a manager sidecar, using the service account token mounted into the pod by default corrects this problem.

Apply the changes to the config/prometheus/monitor.yaml file as shown in the following diff:

Example 5.27. config/prometheus/monitor.yaml file diff

Note: If you removed kube-rbac-proxy from your project, ensure that you secure the /metrics endpoint using a proper TLS configuration.

Ensure that existing dependent resources have owner annotations.

For Ansible-based Operator projects, owner reference annotations on cluster-scoped dependent resources and dependent resources in other namespaces were not applied correctly. A workaround was to add these annotations manually, which is no longer required as this bug has been fixed.

Deprecate support for package manifests.

The Operator Framework is removing support for the Operator package manifest format in a future release. As part of the ongoing deprecation process, the operator-sdk generate packagemanifests and operator-sdk run packagemanifests commands are now deprecated. To migrate package manifests to bundles, you can use the operator-sdk pkgman-to-bundle command.

Run the operator-sdk pkgman-to-bundle --help command and see "Migrating package manifest projects to bundle format" for more details.

Update the finalizer names for your Operator.

The finalizer name format suggested by Kubernetes documentation is:

<qualified_group>/<finalizer_name>

while the format previously documented for Operator SDK was:

<finalizer_name>.<qualified_group>

If your Operator uses any finalizers with names that match the incorrect format, change them to match the official format. For example, finalizer.cache.example.com must be changed to cache.example.com/finalizer.
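The rename is mechanical as long as the finalizer name is the first dot-separated label, as in the example above. The following Go sketch converts an old-style name under that assumption. It cannot tell where the name ends if the name itself contains a dot. `migrateFinalizer` is a hypothetical helper. Also keep in mind that objects created before the change may still list the old name in metadata.finalizers, and this sketch does not handle that transition.

```go
package main

import (
	"fmt"
	"strings"
)

// migrateFinalizer converts <finalizer_name>.<qualified_group> into
// <qualified_group>/<finalizer_name>, assuming the finalizer name is the
// first dot-separated label. Names that already contain a slash, or that
// have no usable dot, are returned unchanged with ok set to false.
func migrateFinalizer(old string) (string, bool) {
	if strings.Contains(old, "/") {
		return old, false
	}
	i := strings.Index(old, ".")
	if i <= 0 || i == len(old)-1 {
		return old, false
	}
	return old[i+1:] + "/" + old[:i], true
}

func main() {
	newName, ok := migrateFinalizer("finalizer.cache.example.com")
	fmt.Println(newName, ok) // cache.example.com/finalizer true
}
```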

Your Operator project is now compatible with Operator SDK v1.8.0.

5.4. Go-based Operators

5.4.1. Getting started with Operator SDK for Go-based Operators

To demonstrate the basics of setting up and running a Go-based Operator using tools and libraries provided by the Operator SDK, Operator developers can build an example Go-based Operator for Memcached, a distributed key-value store, and deploy it to a cluster.

5.4.1.1. Prerequisites

- Operator SDK CLI installed

- OpenShift CLI (oc) v4.8+ installed

- Logged in to an OpenShift Container Platform 4.8 cluster with oc, using an account that has cluster-admin permissions

- To allow the cluster to pull the image, the repository where you push your image must be set as public, or you must configure an image pull secret

5.4.1.2. Creating and deploying Go-based Operators

You can build and deploy a simple Go-based Operator for Memcached by using the Operator SDK.

Procedure

Create a project.

- Create your project directory:

$ mkdir memcached-operator

- Change into the project directory:

$ cd memcached-operator

- Run the operator-sdk init command to initialize the project:

$ operator-sdk init \
    --domain=example.com \
    --repo=github.com/example-inc/memcached-operator

The command uses the Go plugin by default.

Create an API.

- Create a simple Memcached API:

$ operator-sdk create api \
    --resource=true \
    --controller=true \
    --group=cache \
    --version=v1 \
    --kind=Memcached

Build and push the Operator image.

- Use the default Makefile targets to build and push your Operator. Set IMG with a pull spec for your image that uses a registry you can push to:

$ make docker-build docker-push IMG=<registry>/<user>/<image_name>:<tag>

Run the Operator.

- Install the CRD:

$ make install

- Deploy the project to the cluster. Set IMG to the image that you pushed:

$ make deploy IMG=<registry>/<user>/<image_name>:<tag>

Create a sample custom resource (CR).

- Create a sample CR:

$ oc apply -f config/samples/cache_v1_memcached.yaml \
    -n memcached-operator-system

- Watch the Operator reconcile the CR:

$ oc logs deployment.apps/memcached-operator-controller-manager \
    -c manager \
    -n memcached-operator-system

Clean up.

Run the following command to clean up the resources that have been created as part of this procedure:

$ make undeploy

5.4.1.3. Next steps

- See Operator SDK tutorial for Go-based Operators for a more in-depth walkthrough on building a Go-based Operator.

5.4.2. Operator SDK tutorial for Go-based Operators

Operator developers can take advantage of Go programming language support in the Operator SDK to build an example Go-based Operator for Memcached, a distributed key-value store, and manage its lifecycle.

This process is accomplished using two centerpieces of the Operator Framework:

- Operator SDK

  - The operator-sdk CLI tool and controller-runtime library API

- Operator Lifecycle Manager (OLM)

  - Installation, upgrade, and role-based access control (RBAC) of Operators on a cluster

This tutorial goes into greater detail than Getting started with Operator SDK for Go-based Operators.

5.4.2.1. Prerequisites

- Operator SDK CLI installed

- OpenShift CLI (oc) v4.8+ installed

- Logged in to an OpenShift Container Platform 4.8 cluster with oc, using an account that has cluster-admin permissions

- To allow the cluster to pull the image, the repository where you push your image must be set as public, or you must configure an image pull secret

5.4.2.2. Creating a project

Use the Operator SDK CLI to create a project called memcached-operator.

Procedure

- Create a directory for the project:

$ mkdir -p $HOME/projects/memcached-operator

- Change to the directory:

$ cd $HOME/projects/memcached-operator

- Activate support for Go modules:

$ export GO111MODULE=on

- Run the operator-sdk init command to initialize the project:

$ operator-sdk init \
    --domain=example.com \
    --repo=github.com/example-inc/memcached-operator

Note: The operator-sdk init command uses the Go plugin by default.

The operator-sdk init command generates a go.mod file to be used with Go modules. The --repo flag is required when creating a project outside of $GOPATH/src/, because generated files require a valid module path.

5.4.2.2.1. PROJECT file

Among the files generated by the operator-sdk init command is a Kubebuilder PROJECT file. Subsequent operator-sdk commands, as well as help output, that are run from the project root read this file and are aware that the project type is Go. For example:

5.4.2.2.2. About the Manager

The main program for the Operator is the main.go file, which initializes and runs the Manager. The Manager automatically registers the Scheme for all custom resource (CR) API definitions and sets up and runs controllers and webhooks.

The Manager can restrict the namespace that all controllers watch for resources:

mgr, err := ctrl.NewManager(cfg, manager.Options{Namespace: namespace})

By default, the Manager watches the namespace where the Operator runs. To watch all namespaces, you can leave the namespace option empty:

mgr, err := ctrl.NewManager(cfg, manager.Options{Namespace: ""})

You can also use the MultiNamespacedCacheBuilder function to watch a specific set of namespaces:

var namespaces []string

mgr, err := ctrl.NewManager(cfg, manager.Options{

NewCache: cache.MultiNamespacedCacheBuilder(namespaces),

})
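When the set of namespaces comes from configuration, you can choose between the three options above at startup. The following Go sketch is a hypothetical helper, not part of the Operator SDK or controller-runtime. It assumes a comma-separated value, for example one read from an environment variable such as WATCH_NAMESPACE (an illustrative name). An empty value maps to watching all namespaces, one entry maps to the Namespace option, and several entries map to MultiNamespacedCacheBuilder. Only the parsing and selection are shown. Passing the result to ctrl.NewManager is left to the snippets above.

```go
package main

import (
	"fmt"
	"strings"
)

// watchNamespaces splits a comma-separated list of namespaces, trimming
// spaces and dropping empty entries. An empty input returns nil, which
// corresponds to watching all namespaces (Namespace: "").
func watchNamespaces(value string) []string {
	var namespaces []string
	for _, ns := range strings.Split(value, ",") {
		if ns = strings.TrimSpace(ns); ns != "" {
			namespaces = append(namespaces, ns)
		}
	}
	return namespaces
}

func main() {
	for _, v := range []string{"", "ns1", "ns1, ns2"} {
		ns := watchNamespaces(v)
		switch len(ns) {
		case 0:
			fmt.Println("watch all namespaces")
		case 1:
			fmt.Println("watch one namespace:", ns[0])
		default:
			fmt.Println("use MultiNamespacedCacheBuilder with", ns)
		}
	}
}
```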

5.4.2.2.3. About multi-group APIs

Before you create an API and controller, consider whether your Operator requires multiple API groups. This tutorial covers the default case of a single group API, but to change the layout of your project to support multi-group APIs, you can run the following command:

$ operator-sdk edit --multigroup=true

This command updates the PROJECT file, which should look like the following example:

domain: example.com
layout: go.kubebuilder.io/v3
multigroup: true
...

For multi-group projects, the API Go type files are created in the apis/<group>/<version>/ directory, and the controllers are created in the controllers/<group>/ directory. The Dockerfile is then updated accordingly.

Additional resource

- For more details on migrating to a multi-group project, see the Kubebuilder documentation.

5.4.2.3. Creating an API and controller

Use the Operator SDK CLI to create a custom resource definition (CRD) API and controller.

Procedure

- Run the following command to create an API with group cache, version v1, and kind Memcached:

$ operator-sdk create api \
    --group=cache \
    --version=v1 \
    --kind=Memcached

- When prompted, enter y for creating both the resource and controller:

Create Resource [y/n] y
Create Controller [y/n] y

Example output

Writing scaffold for you to edit...
api/v1/memcached_types.go
controllers/memcached_controller.go
...

This process generates the Memcached resource API at api/v1/memcached_types.go and the controller at controllers/memcached_controller.go.

5.4.2.3.1. Defining the API

Define the API for the Memcached custom resource (CR).

Procedure

Modify the Go type definitions at api/v1/memcached_types.go to define the spec and status of the Memcached resource.

Update the generated code for the resource type:

$ make generate

Tip: After you modify a *_types.go file, you must run the make generate command to update the generated code for that resource type.

The above Makefile target invokes the controller-gen utility to update the api/v1/zz_generated.deepcopy.go file. This ensures your API Go type definitions implement the runtime.Object interface that all Kind types must implement.

5.4.2.3.2. Generating CRD manifests

After the API is defined with spec and status fields and custom resource definition (CRD) validation markers, you can generate CRD manifests.

Procedure

Run the following command to generate and update CRD manifests:

$ make manifests

This Makefile target invokes the controller-gen utility to generate the CRD manifests in the config/crd/bases/cache.example.com_memcacheds.yaml file.

5.4.2.3.2.1. About OpenAPI validation

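OpenAPI v3 schemas are added to CRD manifests when the manifests are generated. For apiextensions.k8s.io/v1 CRDs, the schema is placed in the spec.versions[].schema.openAPIV3Schema block (the older v1beta1 API used spec.validation). This validation block allows Kubernetes to validate the properties in a Memcached custom resource (CR) when it is created or updated.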

Markers, or annotations, are available to configure validations for your API. These markers always have a +kubebuilder:validation prefix.
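As an illustration of what a validation marker means at runtime, the following sketch reads the bound from a +kubebuilder:validation:Minimum marker and applies the same kind of check that the API server performs against the generated schema. Both helpers are hypothetical and the error wording is illustrative; in a real project, controller-gen writes the bound into the CRD schema as a minimum constraint and the API server enforces it, so the Operator never runs this code.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// minimumFromMarker extracts the bound from a marker such as
// "+kubebuilder:validation:Minimum=0". Hypothetical helper.
func minimumFromMarker(marker string) (int64, error) {
	const prefix = "+kubebuilder:validation:Minimum="
	if !strings.HasPrefix(marker, prefix) {
		return 0, fmt.Errorf("not a Minimum marker: %q", marker)
	}
	return strconv.ParseInt(strings.TrimPrefix(marker, prefix), 10, 64)
}

// validateMinimum rejects values below the bound, mimicking the schema
// check applied when a CR is created or updated.
func validateMinimum(field string, value, minimum int64) error {
	if value < minimum {
		return fmt.Errorf("spec.%s: value %d is less than the minimum %d", field, value, minimum)
	}
	return nil
}

func main() {
	minimum, err := minimumFromMarker("+kubebuilder:validation:Minimum=0")
	if err != nil {
		panic(err)
	}
	for _, size := range []int64{3, -1} {
		if err := validateMinimum("size", size, minimum); err != nil {
			fmt.Println("rejected:", err)
		} else {
			fmt.Println("accepted: size =", size)
		}
	}
}
```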

5.4.2.4. Implementing the controller

After creating a new API and controller, you can implement the controller logic.

Procedure

For this example, replace the generated controller file controllers/memcached_controller.go with the following example implementation:

Example 5.28. Example memcached_controller.go

The example controller runs the following reconciliation logic for each Memcached custom resource (CR):

- Create a Memcached deployment if it does not exist.

- Ensure that the deployment size is the same as specified by the Memcached CR spec.

- Update the Memcached CR status with the names of the memcached pods.
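The three reconciliation steps can be sketched against an in-memory stand-in for the cluster. All types here (Memcached, Deployment, Cluster) and the runPods helper are hypothetical simplifications; the example implementation works with the cachev1.Memcached and appsv1.Deployment types through the controller-runtime client instead.

```go
package main

import (
	"fmt"
	"sort"
)

// Hypothetical, simplified stand-ins for the cluster objects.
type Memcached struct {
	Name  string
	Size  int32    // spec.size
	Nodes []string // status: names of the memcached pods
}

type Deployment struct {
	Name     string
	Replicas int32
}

type Cluster struct {
	Deployments map[string]*Deployment
	Pods        map[string][]string // deployment name -> pod names
}

// reconcile applies the three steps to one Memcached CR.
func reconcile(c *Cluster, m *Memcached) {
	// 1. Create the deployment if it does not exist.
	dep, ok := c.Deployments[m.Name]
	if !ok {
		dep = &Deployment{Name: m.Name, Replicas: m.Size}
		c.Deployments[m.Name] = dep
	}
	// 2. Ensure the deployment size matches the CR spec.
	if dep.Replicas != m.Size {
		dep.Replicas = m.Size
	}
	// 3. Update the CR status with the names of the pods that exist now.
	pods := append([]string(nil), c.Pods[m.Name]...)
	sort.Strings(pods)
	m.Nodes = pods
}

// runPods stands in for the Kubernetes deployment controller, which
// creates pods to match each deployment's replica count.
func runPods(c *Cluster) {
	for name, d := range c.Deployments {
		pods := make([]string, 0, d.Replicas)
		for i := int32(0); i < d.Replicas; i++ {
			pods = append(pods, fmt.Sprintf("%s-%d", name, i))
		}
		c.Pods[name] = pods
	}
}

func main() {
	c := &Cluster{Deployments: map[string]*Deployment{}, Pods: map[string][]string{}}
	m := &Memcached{Name: "memcached-sample", Size: 3}
	reconcile(c, m) // creates the deployment; no pods exist yet
	runPods(c)
	reconcile(c, m) // the status now lists the pods
	fmt.Println(c.Deployments[m.Name].Replicas, m.Nodes)
}
```

Because the status is computed from the pods that exist at the time of each reconcile, the first pass reports no pods and a later pass, triggered by events on the owned deployment, fills them in.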

The next subsections explain how the controller in the example implementation watches resources and how the reconcile loop is triggered. You can skip these subsections to go directly to Running the Operator.

5.4.2.4.1. Resources watched by the controller

The SetupWithManager() function in controllers/memcached_controller.go specifies how the controller is built to watch a CR and other resources that are owned and managed by that controller.

NewControllerManagedBy() provides a controller builder that allows various controller configurations.

For(&cachev1.Memcached{}) specifies the Memcached type as the primary resource to watch. For each Add, Update, or Delete event for a Memcached type, the reconcile loop is sent a reconcile Request argument, which consists of a namespace and name key, for that Memcached object.

Owns(&appsv1.Deployment{}) specifies the Deployment type as the secondary resource to watch. For each Deployment type Add, Update, or Delete event, the event handler maps each event to a reconcile request for the owner of the deployment. In this case, the owner is the Memcached object for which the deployment was created.
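The mapping from watch events to reconcile requests can be sketched in plain Go. The Request, OwnerReference, and Object types are simplified, hypothetical stand-ins for the controller-runtime and Kubernetes API types; the real owner handler also matches the owner's API group, not only its kind.

```go
package main

import "fmt"

// Request mirrors the namespace and name key that the reconcile loop receives.
type Request struct {
	Namespace, Name string
}

// OwnerReference is a simplified stand-in for metav1.OwnerReference.
type OwnerReference struct {
	Kind, Name string
	Controller bool
}

// Object is a simplified stand-in for any watched Kubernetes object.
type Object struct {
	Kind, Namespace, Name string
	Owners                []OwnerReference
}

// requestsFor maps an Add, Update, or Delete event on obj to reconcile
// requests for a controller whose primary kind is primaryKind. Events on
// the primary resource enqueue the object itself (For); events on an
// owned resource enqueue its controlling owner (Owns).
func requestsFor(primaryKind string, obj Object) []Request {
	if obj.Kind == primaryKind {
		return []Request{{Namespace: obj.Namespace, Name: obj.Name}}
	}
	for _, ref := range obj.Owners {
		if ref.Controller && ref.Kind == primaryKind {
			// Owner references are namespace-local, so the owner
			// lives in the same namespace as obj.
			return []Request{{Namespace: obj.Namespace, Name: ref.Name}}
		}
	}
	return nil // not related to this controller
}

func main() {
	cr := Object{Kind: "Memcached", Namespace: "default", Name: "memcached-sample"}
	dep := Object{Kind: "Deployment", Namespace: "default", Name: "memcached-sample",
		Owners: []OwnerReference{{Kind: "Memcached", Name: "memcached-sample", Controller: true}}}
	unrelated := Object{Kind: "Deployment", Namespace: "default", Name: "other"}
	for _, o := range []Object{cr, dep, unrelated} {
		fmt.Printf("%s/%s -> %v\n", o.Kind, o.Name, requestsFor("Memcached", o))
	}
}
```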

5.4.2.4.2. Controller configurations

You can initialize a controller by using many other useful configurations. For example:

- Set the maximum number of concurrent reconciles for the controller by using the MaxConcurrentReconciles option, which defaults to 1.

- Filter watch events using predicates.

- Choose the type of EventHandler to change how a watch event translates to reconcile requests for the reconcile loop. For Operator relationships that are more complex than primary and secondary resources, you can use the EnqueueRequestsFromMapFunc handler to transform a watch event into an arbitrary set of reconcile requests.

For more details on these and other configurations, see the upstream Builder and Controller GoDocs.
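The following sketch shows how a predicate and a map function shape the reconcile queue. The event type and helpers are hypothetical: generationChanged is modeled on the behavior of controller-runtime's GenerationChangedPredicate, and the fan-out function plays the role of an EnqueueRequestsFromMapFunc handler. In a real controller, predicates are attached with the builder's WithEventFilter() method, and the concurrency limit is set with WithOptions(controller.Options{MaxConcurrentReconciles: n}).

```go
package main

import "fmt"

// UpdateEvent is a simplified stand-in for an update event on a watched object.
type UpdateEvent struct {
	Name                         string
	OldGeneration, NewGeneration int64
}

// Predicate decides whether an event should reach the reconcile loop.
type Predicate func(UpdateEvent) bool

// MapFunc turns one event into an arbitrary set of request names.
type MapFunc func(UpdateEvent) []string

// generationChanged drops updates that did not change metadata.generation,
// such as status-only updates on resources that use the status subresource.
func generationChanged(e UpdateEvent) bool {
	return e.OldGeneration != e.NewGeneration
}

// dispatch filters events with pred and expands the survivors with mapFn.
func dispatch(events []UpdateEvent, pred Predicate, mapFn MapFunc) []string {
	var queue []string
	for _, e := range events {
		if !pred(e) {
			continue // filtered out by the predicate
		}
		queue = append(queue, mapFn(e)...)
	}
	return queue
}

func main() {
	events := []UpdateEvent{
		{Name: "shared-object", OldGeneration: 1, NewGeneration: 2}, // spec change
		{Name: "shared-object", OldGeneration: 2, NewGeneration: 2}, // no spec change
	}
	// Hypothetical fan-out: two Memcached CRs depend on the shared object.
	fanOut := func(UpdateEvent) []string { return []string{"memcached-a", "memcached-b"} }
	fmt.Println(dispatch(events, generationChanged, fanOut))
}
```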

5.4.2.4.3. Reconcile loop

Every controller has a reconciler object with a Reconcile() method that implements the reconcile loop. The reconcile loop is passed the Request argument, which is a namespace and name key used to find the primary resource object, Memcached, from the cache.

Based on the return values, result and error, the request might be requeued and the reconcile loop might be triggered again. Returning an empty ctrl.Result{} with a nil error ends the reconcile for that request, while returning a non-nil error, or a result with Requeue set to true, requeues the request.

You can also set Result.RequeueAfter to requeue the request after a grace period:

import "time"

// Requeue after 5 seconds for any reason other than an error
return ctrl.Result{RequeueAfter: 5 * time.Second}, nil

You can return Result with RequeueAfter set to periodically reconcile a CR.

For more on reconcilers, clients, and interacting with resource events, see the Controller Runtime Client API documentation.

5.4.2.4.4. Permissions and RBAC manifests

The controller requires certain RBAC permissions to interact with the resources it manages. These are specified by using RBAC markers, which are comments of the form //+kubebuilder:rbac:groups=<group>,resources=<resource>,verbs=<verb>;<verb> placed in the controller source file.

The ClusterRole object manifest at config/rbac/role.yaml is generated from these markers by using the controller-gen utility whenever the make manifests command is run.
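To show how markers become ClusterRole rules, the following sketch parses a single RBAC marker into its groups, resources, and verbs. The parser and the Rule type are hypothetical, since controller-gen uses its own marker parser, and the example markers are illustrative permissions for a controller that manages Memcached CRs and deployments, not a copy of the example controller.

```go
package main

import (
	"fmt"
	"strings"
)

// Rule is a simplified version of a ClusterRole policy rule.
type Rule struct {
	Groups, Resources, Verbs []string
}

// splitValues splits a ";"-separated marker value, dropping empty entries
// such as the one produced by a trailing ";".
func splitValues(s string) []string {
	var out []string
	for _, v := range strings.Split(s, ";") {
		if v != "" {
			out = append(out, v)
		}
	}
	return out
}

// parseRBACMarker turns a "//+kubebuilder:rbac:..." comment into a Rule.
func parseRBACMarker(line string) (Rule, error) {
	const prefix = "+kubebuilder:rbac:"
	s := strings.TrimSpace(strings.TrimPrefix(strings.TrimSpace(line), "//"))
	if !strings.HasPrefix(s, prefix) {
		return Rule{}, fmt.Errorf("not an RBAC marker: %q", line)
	}
	var r Rule
	for _, kv := range strings.Split(strings.TrimPrefix(s, prefix), ",") {
		parts := strings.SplitN(kv, "=", 2)
		if len(parts) != 2 {
			return Rule{}, fmt.Errorf("malformed field %q", kv)
		}
		switch parts[0] {
		case "groups":
			r.Groups = splitValues(parts[1])
		case "resources":
			r.Resources = splitValues(parts[1])
		case "verbs":
			r.Verbs = splitValues(parts[1])
		}
	}
	return r, nil
}

func main() {
	markers := []string{
		"//+kubebuilder:rbac:groups=cache.example.com,resources=memcacheds,verbs=get;list;watch;create;update;patch;delete",
		"//+kubebuilder:rbac:groups=apps,resources=deployments,verbs=get;list;watch;create;update;patch;delete",
	}
	for _, m := range markers {
		r, err := parseRBACMarker(m)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%+v\n", r)
	}
}
```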

5.4.2.5. Running the Operator

There are three ways you can use the Operator SDK CLI to build and run your Operator:

- Run locally outside the cluster as a Go program.

- Run as a deployment on the cluster.

- Bundle your Operator and use Operator Lifecycle Manager (OLM) to deploy on the cluster.

Before running your Go-based Operator as either a deployment on OpenShift Container Platform or as a bundle that uses OLM, ensure that your project has been updated to use supported images.

5.4.2.5.1. Running locally outside the cluster

You can run your Operator project as a Go program outside of the cluster. This is useful for development purposes to speed up deployment and testing.

Procedure

Run the following command to install the custom resource definitions (CRDs) in the cluster configured in your ~/.kube/config file and run the Operator locally:

$ make install run

5.4.2.5.2. Running as a deployment on the cluster

You can run your Operator project as a deployment on your cluster.

Prerequisites

- Prepared your Go-based Operator to run on OpenShift Container Platform by updating the project to use supported images

Procedure

Run the following make commands to build and push the Operator image. Modify the IMG argument in the following steps to reference a repository that you have access to. You can obtain an account for storing containers at repository sites such as Quay.io.

Build the image:

$ make docker-build IMG=<registry>/<user>/<image_name>:<tag>

Note: The Dockerfile generated by the SDK for the Operator explicitly references GOARCH=amd64 for go build. This can be amended to GOARCH=$TARGETARCH for non-AMD64 architectures. Docker automatically sets the environment variable to the value specified by --platform. With Buildah, you must use --build-arg for this purpose. For more information, see Multiple Architectures.

Push the image to a repository:

$ make docker-push IMG=<registry>/<user>/<image_name>:<tag>

Note: The name and tag of the image, for example IMG=<registry>/<user>/<image_name>:<tag>, in both commands can also be set in your Makefile. Modify the IMG ?= controller:latest value to set your default image name.

Run the following command to deploy the Operator:

$ make deploy IMG=<registry>/<user>/<image_name>:<tag>

By default, this command creates a namespace with the name of your Operator project in the form <project_name>-system, which is used for the deployment. This command also installs the RBAC manifests from config/rbac.

Verify that the Operator is running:

$ oc get deployment -n <project_name>-system

Example output

NAME                                READY   UP-TO-DATE   AVAILABLE   AGE
<project_name>-controller-manager   1/1     1            1           8m

5.4.2.5.3. Bundling an Operator and deploying with Operator Lifecycle Manager

5.4.2.5.3.1. Bundling an Operator

The Operator bundle format is the default packaging method for Operator SDK and Operator Lifecycle Manager (OLM). You can get your Operator ready for use on OLM by using the Operator SDK to build and push your Operator project as a bundle image.

Prerequisites

- Operator SDK CLI installed on a development workstation

- OpenShift CLI (oc) v4.8+ installed

- Operator project initialized by using the Operator SDK

- If your Operator is Go-based, your project must be updated to use supported images for running on OpenShift Container Platform

Procedure

Run the following make commands in your Operator project directory to build and push your Operator image. Modify the IMG argument in the following steps to reference a repository that you have access to. You can obtain an account for storing containers at repository sites such as Quay.io.

Build the image:

$ make docker-build IMG=<registry>/<user>/<operator_image_name>:<tag>

Note: The Dockerfile generated by the SDK for the Operator explicitly references GOARCH=amd64 for go build. This can be amended to GOARCH=$TARGETARCH for non-AMD64 architectures. Docker automatically sets the environment variable to the value specified by --platform. With Buildah, you must use --build-arg for this purpose. For more information, see Multiple Architectures.

Push the image to a repository:

$ make docker-push IMG=<registry>/<user>/<operator_image_name>:<tag>

Create your Operator bundle manifest by running the make bundle command, which invokes several commands, including the Operator SDK generate bundle and bundle validate subcommands:

$ make bundle IMG=<registry>/<user>/<operator_image_name>:<tag>

Bundle manifests for an Operator describe how to display, create, and manage an application. The make bundle command creates the following files and directories in your Operator project:

- A bundle manifests directory named bundle/manifests that contains a ClusterServiceVersion object

- A bundle metadata directory named bundle/metadata

- All custom resource definitions (CRDs) in a config/crd directory

- A Dockerfile bundle.Dockerfile

These files are then automatically validated by using operator-sdk bundle validate to ensure the on-disk bundle representation is correct.

Build and push your bundle image by running the following commands. OLM consumes Operator bundles using an index image, which references one or more bundle images.

Build the bundle image. Set BUNDLE_IMG with the details for the registry, user namespace, and image tag where you intend to push the image:

$ make bundle-build BUNDLE_IMG=<registry>/<user>/<bundle_image_name>:<tag>

Push the bundle image:

$ docker push <registry>/<user>/<bundle_image_name>:<tag>

5.4.2.5.3.2. Deploying an Operator with Operator Lifecycle Manager

Operator Lifecycle Manager (OLM) helps you to install, update, and manage the lifecycle of Operators and their associated services on a Kubernetes cluster. OLM is installed by default on OpenShift Container Platform and runs as a Kubernetes extension so that you can use the web console and the OpenShift CLI (oc) for all Operator lifecycle management functions without any additional tools.

The Operator bundle format is the default packaging method for Operator SDK and OLM. You can use the Operator SDK to quickly run a bundle image on OLM to ensure that it runs properly.

Prerequisites

- Operator SDK CLI installed on a development workstation

- Operator bundle image built and pushed to a registry

- OLM installed on a Kubernetes-based cluster (v1.16.0 or later if you use apiextensions.k8s.io/v1 CRDs, for example OpenShift Container Platform 4.8)

- Logged in to the cluster with oc using an account with cluster-admin permissions

- If your Operator is Go-based, your project must be updated to use supported images for running on OpenShift Container Platform

Procedure

Enter the following command to run the Operator on the cluster:

$ operator-sdk run bundle \
    [-n <namespace>] \
    <registry>/<user>/<bundle_image_name>:<tag>

By default, the command installs the Operator in the currently active project in your ~/.kube/config file. You can add the -n flag to set a different namespace scope for the installation.

This command performs the following actions:

- Create an index image referencing your bundle image. The index image is opaque and ephemeral, but accurately reflects how a bundle would be added to a catalog in production.

- Create a catalog source that points to your new index image, which enables OperatorHub to discover your Operator.

- Deploy your Operator to your cluster by creating an OperatorGroup, Subscription, InstallPlan, and all other required objects, including RBAC.

5.4.2.6. Creating a custom resource

After your Operator is installed, you can test it by creating a custom resource (CR) that is now provided on the cluster by the Operator.

Prerequisites

- Example Memcached Operator, which provides the Memcached CR, installed on a cluster

Procedure

Change to the namespace where your Operator is installed. For example, if you deployed the Operator using the make deploy command:

$ oc project memcached-operator-system

Edit the sample Memcached CR manifest at config/samples/cache_v1_memcached.yaml to contain the following specification:

apiVersion: cache.example.com/v1
kind: Memcached
metadata:
  name: memcached-sample
spec:
  size: 3

Create the CR:

$ oc apply -f config/samples/cache_v1_memcached.yaml

Ensure that the Memcached Operator creates the deployment for the sample CR with the correct size:

$ oc get deployments

Example output

NAME                                    READY   UP-TO-DATE   AVAILABLE   AGE
memcached-operator-controller-manager   1/1     1            1           8m
memcached-sample                        3/3     3            3           1m

Check the pods and CR status to confirm the status is updated with the Memcached pod names.

Check the pods:

$ oc get pods

Example output

NAME                               READY   STATUS    RESTARTS   AGE
memcached-sample-6fd7c98d8-7dqdr   1/1     Running   0          1m
memcached-sample-6fd7c98d8-g5k7v   1/1     Running   0          1m
memcached-sample-6fd7c98d8-m7vn7   1/1     Running   0          1m

Check the CR status:

$ oc get memcached/memcached-sample -o yaml

Update the deployment size.

Change the spec.size field in the Memcached CR from 3 to 5 by patching the CR:

$ oc patch memcached memcached-sample \
    -p '{"spec":{"size": 5}}' \
    --type=merge

Confirm that the Operator changes the deployment size:

$ oc get deployments

Example output

NAME                                    READY   UP-TO-DATE   AVAILABLE   AGE
memcached-operator-controller-manager   1/1     1            1           10m
memcached-sample                        5/5     5            5           3m

Clean up the resources that have been created as part of this tutorial.

If you used the make deploy command to test the Operator, run the following command:

$ make undeploy

If you used the operator-sdk run bundle command to test the Operator, run the following command:

$ operator-sdk cleanup <project_name>
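The status check in this procedure can also be scripted. The following sketch reads the JSON output of oc get memcached/memcached-sample -o json from standard input and reports whether the status lists as many pods as spec.size requests. Assumption: the status field that holds the pod names is called nodes; adjust the struct tag to match your MemcachedStatus type.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"os"
)

// memcachedCR holds only the fields this check needs.
type memcachedCR struct {
	Spec struct {
		Size int `json:"size"`
	} `json:"spec"`
	Status struct {
		Nodes []string `json:"nodes"` // assumed field name
	} `json:"status"`
}

// statusMatchesSpec reports whether the status lists spec.size pod names.
func statusMatchesSpec(data []byte) (bool, error) {
	var cr memcachedCR
	if err := json.Unmarshal(data, &cr); err != nil {
		return false, err
	}
	return len(cr.Status.Nodes) == cr.Spec.Size, nil
}

func main() {
	data, err := io.ReadAll(os.Stdin)
	if err != nil {
		fmt.Println("read error:", err)
		return
	}
	ok, err := statusMatchesSpec(data)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Println("status matches spec:", ok)
}
```

For example, with the program saved under the hypothetical name check_status.go: $ oc get memcached/memcached-sample -o json | go run check_status.go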

5.4.3. Project layout for Go-based Operators

The operator-sdk CLI can generate, or scaffold, a number of packages and files for each Operator project.

5.4.3.1. Go-based project layout

Go-based Operator projects, the default type, generated using the operator-sdk init command contain the following files and directories:

| File or directory | Purpose |
|---|---|
| main.go | Main program of the Operator. This instantiates a new manager that registers all custom resource definitions (CRDs) in the … |
| api/ | Directory tree that defines the APIs of the CRDs. You must edit the … |
| controllers/ | Controller implementations. Edit the … |
| config/ | Kubernetes manifests used to deploy your controller on a cluster, including CRDs, RBAC, and certificates. |
| Makefile | Targets used to build and deploy your controller. |
| Dockerfile | Instructions used by a container engine to build your Operator. |
| | Kubernetes manifests for registering CRDs, setting up RBAC, and deploying the Operator as a deployment. |

5.5. Ansible-based Operators

5.5.1. Getting started with Operator SDK for Ansible-based Operators

The Operator SDK includes options for generating an Operator project that leverages existing Ansible playbooks and modules to deploy Kubernetes resources as a unified application, without having to write any Go code.

To demonstrate the basics of setting up and running an Ansible-based Operator using tools and libraries provided by the Operator SDK, Operator developers can build an example Ansible-based Operator for Memcached, a distributed key-value store, and deploy it to a cluster.

5.5.1.1. Prerequisites

- Operator SDK CLI installed

- OpenShift CLI (oc) v4.8+ installed

- Ansible version v2.9.0

- Ansible Runner version v1.1.0+

- Ansible Runner HTTP Event Emitter plugin version v1.0.0+

- OpenShift Python client version v0.11.2+

- Logged in to an OpenShift Container Platform 4.8 cluster with oc with an account that has cluster-admin permissions

- To allow the cluster to pull the image, the repository where you push your image must be set as public, or you must configure an image pull secret

5.5.1.2. Creating and deploying Ansible-based Operators

You can build and deploy a simple Ansible-based Operator for Memcached by using the Operator SDK.

Procedure

Create a project.

Create your project directory:

$ mkdir memcached-operator

Change into the project directory:

$ cd memcached-operator

Run the operator-sdk init command with the ansible plugin to initialize the project:

$ operator-sdk init \
    --plugins=ansible \
    --domain=example.com

Create an API.

Create a simple Memcached API:

$ operator-sdk create api \
    --group cache \
    --version v1 \
    --kind Memcached \
    --generate-role

The --generate-role flag generates an Ansible role for the API.

Build and push the Operator image.

Use the default Makefile targets to build and push your Operator. Set IMG with a pull spec for your image that uses a registry you can push to:

$ make docker-build docker-push IMG=<registry>/<user>/<image_name>:<tag>

Run the Operator.

Install the CRD:

$ make install

Deploy the project to the cluster. Set IMG to the image that you pushed:

$ make deploy IMG=<registry>/<user>/<image_name>:<tag>

Create a sample custom resource (CR).

Create a sample CR:

$ oc apply -f config/samples/cache_v1_memcached.yaml \
    -n memcached-operator-system

Watch the Operator logs as it reconciles the CR:

$ oc logs deployment.apps/memcached-operator-controller-manager \
    -c manager \
    -n memcached-operator-system

Clean up.

Run the following command to clean up the resources that have been created as part of this procedure:

$ make undeploy

5.5.1.3. Next steps

- See Operator SDK tutorial for Ansible-based Operators for a more in-depth walkthrough on building an Ansible-based Operator.

5.5.2. Operator SDK tutorial for Ansible-based Operators

Operator developers can take advantage of Ansible support in the Operator SDK to build an example Ansible-based Operator for Memcached, a distributed key-value store, and manage its lifecycle. This tutorial walks through the following process:

- Create a Memcached deployment

- Ensure that the deployment size is the same as specified by the Memcached custom resource (CR) spec

- Update the Memcached CR status using the status writer with the names of the memcached pods

This process is accomplished by using two centerpieces of the Operator Framework:

- Operator SDK: The operator-sdk CLI tool and controller-runtime library API

- Operator Lifecycle Manager (OLM): Installation, upgrade, and role-based access control (RBAC) of Operators on a cluster

This tutorial goes into greater detail than Getting started with Operator SDK for Ansible-based Operators.

5.5.2.1. Prerequisites

- Operator SDK CLI installed

- OpenShift CLI (oc) v4.8+ installed

- Ansible version v2.9.0

- Ansible Runner version v1.1.0+

- Ansible Runner HTTP Event Emitter plugin version v1.0.0+

- OpenShift Python client version v0.11.2+

- Logged in to an OpenShift Container Platform 4.8 cluster with oc with an account that has cluster-admin permissions

- To allow the cluster to pull the image, the repository where you push your image must be set as public, or you must configure an image pull secret

5.5.2.2. Creating a project

Use the Operator SDK CLI to create a project called memcached-operator.

Procedure

Create a directory for the project:

$ mkdir -p $HOME/projects/memcached-operator

Change to the directory:

$ cd $HOME/projects/memcached-operator

Run the operator-sdk init command with the ansible plugin to initialize the project:

$ operator-sdk init \
    --plugins=ansible \
    --domain=example.com

5.5.2.2.1. PROJECT file

Among the files generated by the operator-sdk init command is a Kubebuilder PROJECT file. Subsequent operator-sdk commands, as well as help output, that are run from the project root read this file and are aware that the project type is Ansible. For example:

domain: example.com
layout: ansible.sdk.operatorframework.io/v1
projectName: memcached-operator
version: 3

5.5.2.3. Creating an API

Use the Operator SDK CLI to create a Memcached API.

Procedure

Run the following command to create an API with group cache, version v1, and kind Memcached:

$ operator-sdk create api \
    --group cache \
    --version v1 \
    --kind Memcached \
    --generate-role

The --generate-role flag generates an Ansible role for the API.

After creating the API, your Operator project updates with the following structure:

- Memcached CRD: Includes a sample Memcached resource

- Manager: Program that reconciles the state of the cluster to the desired state by using:

  - A reconciler, either an Ansible role or playbook

  - A watches.yaml file, which connects the Memcached resource to the memcached Ansible role

5.5.2.4. Modifying the manager

Update your Operator project to provide the reconcile logic, in the form of an Ansible role, which runs every time a Memcached resource is created, updated, or deleted.

Procedure

Update the roles/memcached/tasks/main.yml file with the tasks for the memcached role. This memcached role ensures that a memcached deployment exists and sets the deployment size.

Set default values for variables used in your Ansible role by editing the roles/memcached/defaults/main.yml file:

---
# defaults file for Memcached
size: 1

Update the Memcached sample resource in the config/samples/cache_v1_memcached.yaml file to set the values in its spec. The key-value pairs in the custom resource (CR) spec are passed to Ansible as extra variables.

The names of all variables in the spec field are converted to snake case, meaning lowercase with an underscore, by the Operator before running Ansible. For example, serviceAccount in the spec becomes service_account in Ansible.

You can disable this case conversion by setting the snakeCaseParameters option to false in your watches.yaml file. It is recommended that you perform some type validation in Ansible on the variables to ensure that your application is receiving expected input.
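The conversion and the snakeCaseParameters switch can be sketched in Go. The Watch type holds only a few watches.yaml fields, and toSnakeCase is a simplified, hypothetical converter: it handles camelCase keys such as serviceAccount, converts only top-level keys, and may differ from the Operator's own handling of acronyms and nested fields. The value memcached-sa is a hypothetical example.

```go
package main

import (
	"fmt"
	"strings"
	"unicode"
)

// Watch holds a few of the fields of a watches.yaml entry.
type Watch struct {
	Group, Version, Kind, Role string
	// SnakeCaseParameters mirrors the snakeCaseParameters option;
	// nil means the default, which is to convert.
	SnakeCaseParameters *bool
}

// toSnakeCase converts "serviceAccount" to "service_account". Each
// uppercase letter starts a new word, so runs of capitals are split
// letter by letter in this simplified version.
func toSnakeCase(s string) string {
	var b strings.Builder
	for i, r := range s {
		if unicode.IsUpper(r) {
			if i > 0 {
				b.WriteByte('_')
			}
			b.WriteRune(unicode.ToLower(r))
			continue
		}
		b.WriteRune(r)
	}
	return b.String()
}

// extraVars returns the top-level variables passed to Ansible for a CR spec.
func extraVars(w Watch, spec map[string]interface{}) map[string]interface{} {
	convert := w.SnakeCaseParameters == nil || *w.SnakeCaseParameters
	out := make(map[string]interface{}, len(spec))
	for k, v := range spec {
		if convert {
			k = toSnakeCase(k)
		}
		out[k] = v
	}
	return out
}

func main() {
	w := Watch{Group: "cache.example.com", Version: "v1", Kind: "Memcached", Role: "memcached"}
	spec := map[string]interface{}{"size": 3, "serviceAccount": "memcached-sa"}
	fmt.Println(extraVars(w, spec)) // map[service_account:memcached-sa size:3]

	off := false
	w.SnakeCaseParameters = &off
	fmt.Println(extraVars(w, spec)) // map[serviceAccount:memcached-sa size:3]
}
```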

5.5.2.5. Running the Operator

There are three ways you can use the Operator SDK CLI to build and run your Operator:

- Run locally outside the cluster as a Go program.

- Run as a deployment on the cluster.

- Bundle your Operator and use Operator Lifecycle Manager (OLM) to deploy on the cluster.

5.5.2.5.1. Running locally outside the cluster

You can run your Operator project as a Go program outside of the cluster. This is useful for development purposes to speed up deployment and testing.

Procedure

Run the following command to install the custom resource definitions (CRDs) in the cluster configured in your ~/.kube/config file and run the Operator locally:

$ make install run

5.5.2.5.2. Running as a deployment on the cluster

You can run your Operator project as a deployment on your cluster.

Procedure

Run the following make commands to build and push the Operator image. Modify the IMG argument in the following steps to reference a repository that you have access to. You can obtain an account for storing containers at repository sites such as Quay.io.

Build the image:

$ make docker-build IMG=<registry>/<user>/<image_name>:<tag>

Note: The Dockerfile generated by the SDK for the Operator explicitly references GOARCH=amd64 for go build. This can be amended to GOARCH=$TARGETARCH for non-AMD64 architectures. Docker automatically sets the environment variable to the value specified by --platform. With Buildah, you must use --build-arg for this purpose. For more information, see Multiple Architectures.

Push the image to a repository:

$ make docker-push IMG=<registry>/<user>/<image_name>:<tag>

Note: The name and tag of the image, for example IMG=<registry>/<user>/<image_name>:<tag>, in both commands can also be set in your Makefile. Modify the IMG ?= controller:latest value to set your default image name.

Run the following command to deploy the Operator:

$ make deploy IMG=<registry>/<user>/<image_name>:<tag>

By default, this command creates a namespace with the name of your Operator project in the form <project_name>-system, which is used for the deployment. This command also installs the RBAC manifests from config/rbac.

Verify that the Operator is running:

$ oc get deployment -n <project_name>-system

Example output

NAME                                READY   UP-TO-DATE   AVAILABLE   AGE
<project_name>-controller-manager   1/1     1            1           8m

5.5.2.5.3. Bundling an Operator and deploying with Operator Lifecycle Manager

5.5.2.5.3.1. Bundling an Operator

The Operator bundle format is the default packaging method for Operator SDK and Operator Lifecycle Manager (OLM). You can get your Operator ready for use on OLM by using the Operator SDK to build and push your Operator project as a bundle image.

Prerequisites

- Operator SDK CLI installed on a development workstation

- OpenShift CLI (oc) v4.8+ installed

- Operator project initialized by using the Operator SDK

Procedure

Run the following make commands in your Operator project directory to build and push your Operator image. Modify the IMG argument in the following steps to reference a repository that you have access to. You can obtain an account for storing containers at repository sites such as Quay.io.

Build the image:


$ make docker-build IMG=<registry>/<user>/<operator_image_name>:<tag>

Note: The Dockerfile generated by the SDK for the Operator explicitly references GOARCH=amd64 for go build. You can change this to GOARCH=$TARGETARCH for non-AMD64 architectures. Docker automatically sets this environment variable to the value specified by the --platform option. With Buildah, you must use the --build-arg option for this purpose. For more information, see Multiple Architectures.

Push the image to a repository:


$ make docker-push IMG=<registry>/<user>/<operator_image_name>:<tag>

Create your Operator bundle manifest by running the make bundle command, which invokes several commands, including the Operator SDK generate bundle and bundle validate subcommands:

$ make bundle IMG=<registry>/<user>/<operator_image_name>:<tag>

Bundle manifests for an Operator describe how to display, create, and manage an application. The make bundle command creates the following files and directories in your Operator project:

- A bundle manifests directory named bundle/manifests that contains a ClusterServiceVersion object

- A bundle metadata directory named bundle/metadata

- All custom resource definitions (CRDs) in a config/crd directory

- A Dockerfile named bundle.Dockerfile

These files are then automatically validated by using operator-sdk bundle validate to ensure the on-disk bundle representation is correct.
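The bundle/metadata directory typically holds an annotations.yaml file that tells OLM how to interpret the bundle. The following is a minimal sketch of such a file, assuming a package named memcached-operator published to a single channel named alpha. The package and channel values are hypothetical, and the file that make bundle generates for your project may contain additional keys.

```yaml
annotations:
  # Bundle format understood by OLM.
  operators.operatorframework.io.bundle.mediatype.v1: registry+v1
  # Locations of the manifests and metadata directories, relative to the bundle root.
  operators.operatorframework.io.bundle.manifests.v1: manifests/
  operators.operatorframework.io.bundle.metadata.v1: metadata/
  # Hypothetical package name and channel for this sketch.
  operators.operatorframework.io.bundle.package.v1: memcached-operator
  operators.operatorframework.io.bundle.channels.v1: alpha
```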


Build and push your bundle image by running the following commands. OLM consumes Operator bundles by using an index image, which references one or more bundle images.

Build the bundle image. Set BUNDLE_IMG with the details for the registry, user namespace, and image tag where you intend to push the image:

$ make bundle-build BUNDLE_IMG=<registry>/<user>/<bundle_image_name>:<tag>

Push the bundle image:


$ docker push <registry>/<user>/<bundle_image_name>:<tag>

5.5.2.5.3.2. Deploying an Operator with Operator Lifecycle Manager

Operator Lifecycle Manager (OLM) helps you to install, update, and manage the lifecycle of Operators and their associated services on a Kubernetes cluster. OLM is installed by default on OpenShift Container Platform and runs as a Kubernetes extension so that you can use the web console and the OpenShift CLI (oc) for all Operator lifecycle management functions without any additional tools.

The Operator bundle format is the default packaging method for Operator SDK and OLM. You can use the Operator SDK to quickly run a bundle image on OLM to ensure that it runs properly.

Prerequisites

- Operator SDK CLI installed on a development workstation

- Operator bundle image built and pushed to a registry

- OLM installed on a Kubernetes-based cluster (v1.16.0 or later if you use apiextensions.k8s.io/v1 CRDs, for example OpenShift Container Platform 4.8)

- Logged in to the cluster with oc using an account with cluster-admin permissions

Procedure

Enter the following command to run the Operator on the cluster:

$ operator-sdk run bundle \
    [-n <namespace>] \
    <registry>/<user>/<bundle_image_name>:<tag>

By default, the command installs the Operator in the currently active project in your ~/.kube/config file. You can add the -n flag to set a different namespace scope for the installation.

This command performs the following actions:

- Create an index image referencing your bundle image. The index image is opaque and ephemeral, but accurately reflects how a bundle would be added to a catalog in production.

- Create a catalog source that points to your new index image, which enables OperatorHub to discover your Operator.

- Deploy your Operator to your cluster by creating an OperatorGroup, Subscription, InstallPlan, and all other required objects, including RBAC.
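To make these objects concrete, the following is a hand-written sketch of a catalog source, Operator group, and subscription of the kind OLM uses to install an Operator. It is not necessarily the exact set of objects that operator-sdk run bundle generates. All names, the namespace, the channel, and the index image reference are hypothetical placeholders.

```yaml
# Catalog source that serves an index image to OLM (hypothetical names).
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: memcached-operator-catalog
  namespace: my-namespace
spec:
  sourceType: grpc
  image: <registry>/<user>/<index_image_name>:<tag>
---
# Operator group that scopes the installation to a single namespace.
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: memcached-operator-group
  namespace: my-namespace
spec:
  targetNamespaces:
  - my-namespace
---
# Subscription that asks OLM to install the package from the catalog source.
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: memcached-operator
  namespace: my-namespace
spec:
  channel: alpha
  name: memcached-operator
  source: memcached-operator-catalog
  sourceNamespace: my-namespace
```

When OLM resolves a subscription like this one, it creates an InstallPlan for the bundle; with the default automatic approval, OLM then installs the Operator.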

5.5.2.6. Creating a custom resource

After your Operator is installed, you can test it by creating a custom resource (CR) that is now provided on the cluster by the Operator.
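The rest of this procedure works with a Memcached CR named memcached-sample whose spec.size starts at 3. As a minimal sketch, such a CR might look like the following. The API group and domain (cache.example.com) are assumptions inferred from the cache_v1_memcached.yaml sample file name, so compare the apiVersion with the sample that your project generated.

```yaml
# Assumption: group "cache" under the domain "example.com", version v1.
apiVersion: cache.example.com/v1
kind: Memcached
metadata:
  name: memcached-sample
spec:
  # Number of Memcached pods that the Operator should keep running.
  size: 3
```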

Prerequisites

- Example Memcached Operator, which provides the Memcached CR, installed on a cluster

Procedure

Change to the namespace where your Operator is installed. For example, if you deployed the Operator by using the make deploy command:

$ oc project memcached-operator-system

Edit the sample Memcached CR manifest at config/samples/cache_v1_memcached.yaml so that spec.size is set to 3.

Create the CR:


$ oc apply -f config/samples/cache_v1_memcached.yaml

Ensure that the Memcached Operator creates the deployment for the sample CR with the correct size:

$ oc get deployments

Example output

NAME                                   READY   UP-TO-DATE   AVAILABLE   AGE
memcached-operator-controller-manager  1/1     1            1           8m
memcached-sample                       3/3     3            3           1m

Check the pods and CR status to confirm the status is updated with the Memcached pod names.

Check the pods:


$ oc get pods

Example output

NAME                               READY   STATUS    RESTARTS   AGE
memcached-sample-6fd7c98d8-7dqdr   1/1     Running   0          1m
memcached-sample-6fd7c98d8-g5k7v   1/1     Running   0          1m
memcached-sample-6fd7c98d8-m7vn7   1/1     Running   0          1m

Check the CR status:


$ oc get memcached/memcached-sample -o yaml

Example output


Update the deployment size.

Change the spec.size field in the Memcached CR from 3 to 5 by patching the CR on the cluster:

$ oc patch memcached memcached-sample \
    -p '{"spec":{"size": 5}}' \
    --type=merge

Confirm that the Operator changes the deployment size:


$ oc get deployments

Example output

NAME                                   READY   UP-TO-DATE   AVAILABLE   AGE
memcached-operator-controller-manager  1/1     1            1           10m
memcached-sample                       5/5     5            5           3m


Clean up the resources that have been created as part of this tutorial.

If you used the make deploy command to test the Operator, run the following command:

$ make undeploy

If you used the operator-sdk run bundle command to test the Operator, run the following command:

$ operator-sdk cleanup <project_name>

5.5.3. Project layout for Ansible-based Operators

The operator-sdk CLI can generate, or scaffold, a number of packages and files for each Operator project.

5.5.3.1. Ansible-based project layout

Ansible-based Operator projects generated using the operator-sdk init --plugins ansible command contain the following directories and files:

| File or directory | Purpose |
|---|---|
| | Dockerfile for building the container image for the Operator. |
| | Targets for building, publishing, and deploying the container image that wraps the Operator binary, and targets for installing and uninstalling the custom resource definition (CRD). |
| | YAML file containing metadata information for the Operator. |
| | Base CRD files and the … |
| | Collects all Operator manifests for deployment. Used by the … |
| | Controller manager deployment. |
| | Role and role binding for leader election and authentication proxy. |
| | Sample resources created for the CRDs. |
| | Sample configurations for testing. |
| | A subdirectory for the playbooks to run. |
| | Subdirectory for the roles tree to run. |
| | Group/version/kind (GVK) of the resources to watch, and the Ansible invocation method. New entries are added by using the … |
| | YAML file containing the Ansible collections and role dependencies to install during a build. |
| | Molecule scenarios for end-to-end testing of your role and Operator. |

5.5.4. Ansible support in Operator SDK

5.5.4.1. Custom resource files

Operators use the Kubernetes extension mechanism, custom resource definitions (CRDs), so your custom resource (CR) looks and acts just like the built-in, native Kubernetes objects.
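For orientation, the following is a minimal sketch of an apiextensions.k8s.io/v1 CRD for the Memcached kind used earlier in this chapter. The group cache.example.com is an assumption, and the schema simply preserves unknown fields under spec and status to keep the sketch short. Use the CRD that the Operator SDK scaffolds in config/crd for your project rather than this sketch.

```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  # The name must have the form <plural>.<group>.
  name: memcacheds.cache.example.com
spec:
  group: cache.example.com   # assumption
  names:
    kind: Memcached
    listKind: MemcachedList
    plural: memcacheds
    singular: memcached
  scope: Namespaced
  versions:
  - name: v1
    served: true
    storage: true
    schema:
      openAPIV3Schema:
        type: object
        properties:
          # Accept any keys under spec and status.
          spec:
            type: object
            x-kubernetes-preserve-unknown-fields: true
          status:
            type: object
            x-kubernetes-preserve-unknown-fields: true
    # Enable the status subresource so that status is updated separately from spec.
    subresources:
      status: {}
```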

The CR file format is a Kubernetes resource file. The object has mandatory and optional fields:

| Field | Description |
|---|---|
| | Version of the CR to be created. |
| | Kind of the CR to be created. |
| | Kubernetes-specific metadata to be created. |
| | Key-value list of variables that are passed to Ansible. This field is empty by default. |
| | Summarizes the current state of the object. For Ansible-based Operators, the … |
| | Kubernetes-specific annotations to be appended to the CR. |
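To show where each of these fields sits, the following sketch annotates a CR for a hypothetical Test1 kind in a hypothetical test1.example.com group. In the Kubernetes object layout, annotations are nested under metadata. The status field is omitted because the Operator, not the user, writes it.

```yaml
# Mandatory: API group/version and kind of the CR (hypothetical values).
apiVersion: test1.example.com/v1alpha1
kind: Test1
# Mandatory: Kubernetes metadata.
metadata:
  name: example
  # Optional: annotations are nested under metadata (hypothetical key and value).
  annotations:
    example.com/owner: team-a
# Optional: key-value pairs that the Operator passes to Ansible as variables.
spec:
  size: 3
  message: hello
```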

The following CR annotations modify the behavior of the Operator:

| Annotation | Description |
|---|---|
| | Specifies the reconciliation interval for the CR. This value is parsed using the standard Golang package … |

Example Ansible-based Operator annotation

5.5.4.2. watches.yaml file

A group/version/kind (GVK) is a unique identifier for a Kubernetes API. The watches.yaml file contains a list of mappings from custom resources (CRs), each identified by its GVK, to an Ansible role or playbook. The Operator expects this mapping file in a predefined location at /opt/ansible/watches.yaml.
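As a minimal sketch, a watches.yaml file with two entries might look like the following: one maps a GVK to a role and the other maps a GVK to a playbook. The groups, kinds, and paths are hypothetical; the role and playbook keys select the Ansible content that runs for each GVK.

```yaml
# Entry that reconciles Test1 CRs by running a role (hypothetical path).
- version: v1alpha1
  group: test1.example.com
  kind: Test1
  role: /opt/ansible/roles/Test1

# Entry that reconciles Test2 CRs by running a playbook (hypothetical path).
- version: v1alpha1
  group: test2.example.com
  kind: Test2
  playbook: /opt/ansible/playbook.yml
```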

| Field | Description |
|---|---|
| | Group of the CR to watch. |
| | Version of the CR to watch. |

|