Questo contenuto non è disponibile nella lingua selezionata.

Chapter 3. Ceph Object Gateway and the S3 API

As a developer, you can use a RESTful application programming interface (API) that is compatible with the Amazon S3 data access model. You can manage the buckets and objects stored in a Red Hat Ceph Storage cluster through the Ceph Object Gateway.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- A RESTful client.

3.1. S3 limitations

The following limitations should be used with caution. There are implications related to your hardware selections, so you should always discuss these requirements with your Red Hat account team.

-

Maximum object size when using Amazon S3: Individual Amazon S3 objects can range in size from a minimum of 0B to a maximum of 5TB. The largest object that can be uploaded in a single

PUTis 5GB. For objects larger than 100MB, you should consider using the Multipart Upload capability. - Maximum metadata size when using Amazon S3: There is no defined limit on the total size of user metadata that can be applied to an object, but a single HTTP request is limited to 16,000 bytes.

- The amount of data overhead Red Hat Ceph Storage cluster produces to store S3 objects and metadata: The estimate here is 200-300 bytes plus the length of the object name. Versioned objects consume additional space proportional to the number of versions. Also, transient overhead is produced during multi-part upload and other transactional updates, but these overheads are recovered during garbage collection.

Additional Resources

- See the Red Hat Ceph Storage Developer Guide for details on the unsupported header fields.

3.2. Accessing the Ceph Object Gateway with the S3 API

As a developer, you must configure access to the Ceph Object Gateway and the Secure Token Service (STS) before you can start using the Amazon S3 API.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- A running Ceph Object Gateway.

- A RESTful client.

3.2.1. S3 authentication

Requests to the Ceph Object Gateway can be either authenticated or unauthenticated. Ceph Object Gateway assumes unauthenticated requests are sent by an anonymous user. Ceph Object Gateway supports canned ACLs.

For most use cases, clients use existing open source libraries like the Amazon SDK’s AmazonS3Client for Java, and Python Boto. With open source libraries you simply pass in the access key and secret key and the library builds the request header and authentication signature for you. However, you can create requests and sign them too.

Authenticating a request requires including an access key and a base 64-encoded hash-based Message Authentication Code (HMAC) in the request before it is sent to the Ceph Object Gateway server. Ceph Object Gateway uses an S3-compatible authentication approach.

Example

In the above example, replace ACCESS_KEY with the value for the access key ID followed by a colon (:). Replace HASH_OF_HEADER_AND_SECRET with a hash of a canonicalized header string and the secret corresponding to the access key ID.

Generate hash of header string and secret

To generate the hash of the header string and secret:

- Get the value of the header string.

- Normalize the request header string into canonical form.

- Generate an HMAC using a SHA-1 hashing algorithm.

-

Encode the

hmacresult as base-64.

Normalize header

To normalize the header into canonical form:

-

Get all

content-headers. -

Remove all

content-headers except forcontent-typeandcontent-md5. -

Ensure the

content-header names are lowercase. -

Sort the

content-headers lexicographically. -

Ensure you have a

Dateheader AND ensure the specified date uses GMT and not an offset. -

Get all headers beginning with

x-amz-. -

Ensure that the

x-amz-headers are all lowercase. -

Sort the

x-amz-headers lexicographically. - Combine multiple instances of the same field name into a single field and separate the field values with a comma.

- Replace white space and line breaks in header values with a single space.

- Remove white space before and after colons.

- Append a new line after each header.

- Merge the headers back into the request header.

Replace the HASH_OF_HEADER_AND_SECRET with the base-64 encoded HMAC string.

Additional Resources

- For additional details, consult the Signing and Authenticating REST Requests section of Amazon Simple Storage Service documentation.

3.2.2. S3-server-side encryption

The Ceph Object Gateway supports server-side encryption of uploaded objects for the S3 application programming interface (API). Server-side encryption means that the S3 client sends data over HTTP in its unencrypted form, and the Ceph Object Gateway stores that data in the Red Hat Ceph Storage cluster in encrypted form.

Red Hat does NOT support S3 object encryption of Static Large Object (SLO) or Dynamic Large Object (DLO).

To use encryption, client requests MUST send requests over an SSL connection. Red Hat does not support S3 encryption from a client unless the Ceph Object Gateway uses SSL. However, for testing purposes, administrators can disable SSL during testing by setting the rgw_crypt_require_ssl configuration setting to false at runtime, using the ceph config set client.rgw command, and then restarting the Ceph Object Gateway instance.

In a production environment, it might not be possible to send encrypted requests over SSL. In such a case, send requests using HTTP with server-side encryption.

For information about how to configure HTTP with server-side encryption, see the Additional Resources section below.

There are two options for the management of encryption keys:

Customer-provided Keys

When using customer-provided keys, the S3 client passes an encryption key along with each request to read or write encrypted data. It is the customer’s responsibility to manage those keys. Customers must remember which key the Ceph Object Gateway used to encrypt each object.

Ceph Object Gateway implements the customer-provided key behavior in the S3 API according to the Amazon SSE-C specification.

Since the customer handles the key management and the S3 client passes keys to the Ceph Object Gateway, the Ceph Object Gateway requires no special configuration to support this encryption mode.

Key Management Service

When using a key management service, the secure key management service stores the keys and the Ceph Object Gateway retrieves them on demand to serve requests to encrypt or decrypt data.

Ceph Object Gateway implements the key management service behavior in the S3 API according to the Amazon SSE-KMS specification.

Currently, the only tested key management implementations are HashiCorp Vault, and OpenStack Barbican. However, OpenStack Barbican is a Technology Preview and is not supported for use in production systems.

Additional Resources

3.2.3. S3 access control lists

Ceph Object Gateway supports S3-compatible Access Control Lists (ACL) functionality. An ACL is a list of access grants that specify which operations a user can perform on a bucket or on an object. Each grant has a different meaning when applied to a bucket versus applied to an object:

| Permission | Bucket | Object |

|---|---|---|

|

| Grantee can list the objects in the bucket. | Grantee can read the object. |

|

| Grantee can write or delete objects in the bucket. | N/A |

|

| Grantee can read bucket ACL. | Grantee can read the object ACL. |

|

| Grantee can write bucket ACL. | Grantee can write to the object ACL. |

|

| Grantee has full permissions for object in the bucket. | Grantee can read or write to the object ACL. |

3.2.4. Preparing access to the Ceph Object Gateway using S3

You have to follow some pre-requisites on the Ceph Object Gateway node before attempting to access the gateway server.

Prerequisites

- Installation of the Ceph Object Gateway software.

- Root-level access to the Ceph Object Gateway node.

Procedure

As

root, open port8080on the firewall:firewall-cmd --zone=public --add-port=8080/tcp --permanent firewall-cmd --reload

[root@rgw ~]# firewall-cmd --zone=public --add-port=8080/tcp --permanent [root@rgw ~]# firewall-cmd --reloadCopy to Clipboard Copied! Toggle word wrap Toggle overflow Add a wildcard to the DNS server that you are using for the gateway as mentioned in the Object Gateway Configuration and Administration Guide.

You can also set up the gateway node for local DNS caching. To do so, execute the following steps:

As

root, install and setupdnsmasq:yum install dnsmasq echo "address=/.FQDN_OF_GATEWAY_NODE/IP_OF_GATEWAY_NODE" | tee --append /etc/dnsmasq.conf systemctl start dnsmasq systemctl enable dnsmasq

[root@rgw ~]# yum install dnsmasq [root@rgw ~]# echo "address=/.FQDN_OF_GATEWAY_NODE/IP_OF_GATEWAY_NODE" | tee --append /etc/dnsmasq.conf [root@rgw ~]# systemctl start dnsmasq [root@rgw ~]# systemctl enable dnsmasqCopy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

IP_OF_GATEWAY_NODEandFQDN_OF_GATEWAY_NODEwith the IP address and FQDN of the gateway node.As

root, stop NetworkManager:systemctl stop NetworkManager systemctl disable NetworkManager

[root@rgw ~]# systemctl stop NetworkManager [root@rgw ~]# systemctl disable NetworkManagerCopy to Clipboard Copied! Toggle word wrap Toggle overflow As

root, set the gateway server’s IP as the nameserver:echo "DNS1=IP_OF_GATEWAY_NODE" | tee --append /etc/sysconfig/network-scripts/ifcfg-eth0 echo "IP_OF_GATEWAY_NODE FQDN_OF_GATEWAY_NODE" | tee --append /etc/hosts systemctl restart network systemctl enable network systemctl restart dnsmasq

[root@rgw ~]# echo "DNS1=IP_OF_GATEWAY_NODE" | tee --append /etc/sysconfig/network-scripts/ifcfg-eth0 [root@rgw ~]# echo "IP_OF_GATEWAY_NODE FQDN_OF_GATEWAY_NODE" | tee --append /etc/hosts [root@rgw ~]# systemctl restart network [root@rgw ~]# systemctl enable network [root@rgw ~]# systemctl restart dnsmasqCopy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

IP_OF_GATEWAY_NODEandFQDN_OF_GATEWAY_NODEwith the IP address and FQDN of the gateway node.Verify subdomain requests:

ping mybucket.FQDN_OF_GATEWAY_NODE

[user@rgw ~]$ ping mybucket.FQDN_OF_GATEWAY_NODECopy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

FQDN_OF_GATEWAY_NODEwith the FQDN of the gateway node.WarningSetting up the gateway server for local DNS caching is for testing purposes only. You won’t be able to access the outside network after doing this. It is strongly recommended to use a proper DNS server for the Red Hat Ceph Storage cluster and gateway node.

-

Create the

radosgwuser forS3access carefully as mentioned in the Object Gateway Configuration and Administration Guide and copy the generatedaccess_keyandsecret_key. You will need these keys forS3access and subsequent bucket management tasks.

3.2.5. Accessing the Ceph Object Gateway using Ruby AWS S3

You can use Ruby programming language along with aws-s3 gem for S3 access. Execute the steps mentioned below on the node used for accessing the Ceph Object Gateway server with Ruby AWS::S3.

Prerequisites

- User-level access to Ceph Object Gateway.

- Root-level access to the node accessing the Ceph Object Gateway.

- Internet access.

Procedure

Install the

rubypackage:yum install ruby

[root@dev ~]# yum install rubyCopy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe above command will install

rubyand its essential dependencies likerubygemsandruby-libs. If somehow the command does not install all the dependencies, install them separately.Install the

aws-s3Ruby package:gem install aws-s3

[root@dev ~]# gem install aws-s3Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a project directory:

mkdir ruby_aws_s3 cd ruby_aws_s3

[user@dev ~]$ mkdir ruby_aws_s3 [user@dev ~]$ cd ruby_aws_s3Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the connection file:

vim conn.rb

[user@dev ~]$ vim conn.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the

conn.rbfile:Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

FQDN_OF_GATEWAY_NODEwith the FQDN of the Ceph Object Gateway node. ReplaceMY_ACCESS_KEYandMY_SECRET_KEYwith theaccess_keyandsecret_keythat were generated when you created theradosgwuser forS3access as mentioned in the Red Hat Ceph Storage Object Gateway Configuration and Administration Guide.Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x conn.rb

[user@dev ~]$ chmod +x conn.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./conn.rb | echo $?

[user@dev ~]$ ./conn.rb | echo $?Copy to Clipboard Copied! Toggle word wrap Toggle overflow If you have provided the values correctly in the file, the output of the command will be

0.Create a new file for creating a bucket:

vim create_bucket.rb

[user@dev ~]$ vim create_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

#!/usr/bin/env ruby load 'conn.rb' AWS::S3::Bucket.create('my-new-bucket1')#!/usr/bin/env ruby load 'conn.rb' AWS::S3::Bucket.create('my-new-bucket1')Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x create_bucket.rb

[user@dev ~]$ chmod +x create_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./create_bucket.rb

[user@dev ~]$ ./create_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow If the output of the command is

trueit would mean that bucketmy-new-bucket1was created successfully.Create a new file for listing owned buckets:

vim list_owned_buckets.rb

[user@dev ~]$ vim list_owned_buckets.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following content into the file:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x list_owned_buckets.rb

[user@dev ~]$ chmod +x list_owned_buckets.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./list_owned_buckets.rb

[user@dev ~]$ ./list_owned_buckets.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow The output should look something like this:

my-new-bucket1 2020-01-21 10:33:19 UTC

my-new-bucket1 2020-01-21 10:33:19 UTCCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a new file for creating an object:

vim create_object.rb

[user@dev ~]$ vim create_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x create_object.rb

[user@dev ~]$ chmod +x create_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./create_object.rb

[user@dev ~]$ ./create_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow This will create a file

hello.txtwith the stringHello World!.Create a new file for listing a bucket’s content:

vim list_bucket_content.rb

[user@dev ~]$ vim list_bucket_content.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following content into the file:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable.

chmod +x list_bucket_content.rb

[user@dev ~]$ chmod +x list_bucket_content.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./list_bucket_content.rb

[user@dev ~]$ ./list_bucket_content.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow The output will look something like this:

hello.txt 12 Fri, 22 Jan 2020 15:54:52 GMT

hello.txt 12 Fri, 22 Jan 2020 15:54:52 GMTCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a new file for deleting an empty bucket:

vim del_empty_bucket.rb

[user@dev ~]$ vim del_empty_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

#!/usr/bin/env ruby load 'conn.rb' AWS::S3::Bucket.delete('my-new-bucket1')#!/usr/bin/env ruby load 'conn.rb' AWS::S3::Bucket.delete('my-new-bucket1')Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x del_empty_bucket.rb

[user@dev ~]$ chmod +x del_empty_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./del_empty_bucket.rb | echo $?

[user@dev ~]$ ./del_empty_bucket.rb | echo $?Copy to Clipboard Copied! Toggle word wrap Toggle overflow If the bucket is successfully deleted, the command will return

0as output.NoteEdit the

create_bucket.rbfile to create empty buckets, for example,my-new-bucket4,my-new-bucket5. Next, edit the above-mentioneddel_empty_bucket.rbfile accordingly before trying to delete empty buckets.Create a new file for deleting non-empty buckets:

vim del_non_empty_bucket.rb

[user@dev ~]$ vim del_non_empty_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

#!/usr/bin/env ruby load 'conn.rb' AWS::S3::Bucket.delete('my-new-bucket1', :force => true)#!/usr/bin/env ruby load 'conn.rb' AWS::S3::Bucket.delete('my-new-bucket1', :force => true)Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x del_non_empty_bucket.rb

[user@dev ~]$ chmod +x del_non_empty_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./del_non_empty_bucket.rb | echo $?

[user@dev ~]$ ./del_non_empty_bucket.rb | echo $?Copy to Clipboard Copied! Toggle word wrap Toggle overflow If the bucket is successfully deleted, the command will return

0as output.Create a new file for deleting an object:

vim delete_object.rb

[user@dev ~]$ vim delete_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

#!/usr/bin/env ruby load 'conn.rb' AWS::S3::S3Object.delete('hello.txt', 'my-new-bucket1')#!/usr/bin/env ruby load 'conn.rb' AWS::S3::S3Object.delete('hello.txt', 'my-new-bucket1')Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x delete_object.rb

[user@dev ~]$ chmod +x delete_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./delete_object.rb

[user@dev ~]$ ./delete_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow This will delete the object

hello.txt.

3.2.6. Accessing the Ceph Object Gateway using Ruby AWS SDK

You can use the Ruby programming language along with aws-sdk gem for S3 access. Execute the steps mentioned below on the node used for accessing the Ceph Object Gateway server with Ruby AWS::SDK.

Prerequisites

- User-level access to Ceph Object Gateway.

- Root-level access to the node accessing the Ceph Object Gateway.

- Internet access.

Procedure

Install the

rubypackage:yum install ruby

[root@dev ~]# yum install rubyCopy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe above command will install

rubyand its essential dependencies likerubygemsandruby-libs. If somehow the command does not install all the dependencies, install them separately.Install the

aws-sdkRuby package:gem install aws-sdk

[root@dev ~]# gem install aws-sdkCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a project directory:

mkdir ruby_aws_sdk cd ruby_aws_sdk

[user@dev ~]$ mkdir ruby_aws_sdk [user@dev ~]$ cd ruby_aws_sdkCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create the connection file:

vim conn.rb

[user@dev ~]$ vim conn.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the

conn.rbfile:Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

FQDN_OF_GATEWAY_NODEwith the FQDN of the Ceph Object Gateway node. ReplaceMY_ACCESS_KEYandMY_SECRET_KEYwith theaccess_keyandsecret_keythat were generated when you created theradosgwuser forS3access as mentioned in the Red Hat Ceph Storage Object Gateway Configuration and Administration Guide.Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x conn.rb

[user@dev ~]$ chmod +x conn.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./conn.rb | echo $?

[user@dev ~]$ ./conn.rb | echo $?Copy to Clipboard Copied! Toggle word wrap Toggle overflow If you have provided the values correctly in the file, the output of the command will be

0.Create a new file for creating a bucket:

vim create_bucket.rb

[user@dev ~]$ vim create_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x create_bucket.rb

[user@dev ~]$ chmod +x create_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./create_bucket.rb

[user@dev ~]$ ./create_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow If the output of the command is

true, this means that bucketmy-new-bucket2was created successfully.Create a new file for listing owned buckets:

vim list_owned_buckets.rb

[user@dev ~]$ vim list_owned_buckets.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following content into the file:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x list_owned_buckets.rb

[user@dev ~]$ chmod +x list_owned_buckets.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./list_owned_buckets.rb

[user@dev ~]$ ./list_owned_buckets.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow The output should look something like this:

my-new-bucket2 2020-01-21 10:33:19 UTC

my-new-bucket2 2020-01-21 10:33:19 UTCCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a new file for creating an object:

vim create_object.rb

[user@dev ~]$ vim create_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x create_object.rb

[user@dev ~]$ chmod +x create_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./create_object.rb

[user@dev ~]$ ./create_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow This will create a file

hello.txtwith the stringHello World!.Create a new file for listing a bucket’s content:

vim list_bucket_content.rb

[user@dev ~]$ vim list_bucket_content.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following content into the file:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable.

chmod +x list_bucket_content.rb

[user@dev ~]$ chmod +x list_bucket_content.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./list_bucket_content.rb

[user@dev ~]$ ./list_bucket_content.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow The output will look something like this:

hello.txt 12 Fri, 22 Jan 2020 15:54:52 GMT

hello.txt 12 Fri, 22 Jan 2020 15:54:52 GMTCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a new file for deleting an empty bucket:

vim del_empty_bucket.rb

[user@dev ~]$ vim del_empty_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x del_empty_bucket.rb

[user@dev ~]$ chmod +x del_empty_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./del_empty_bucket.rb | echo $?

[user@dev ~]$ ./del_empty_bucket.rb | echo $?Copy to Clipboard Copied! Toggle word wrap Toggle overflow If the bucket is successfully deleted, the command will return

0as output.NoteEdit the

create_bucket.rbfile to create empty buckets, for example,my-new-bucket6,my-new-bucket7. Next, edit the above-mentioneddel_empty_bucket.rbfile accordingly before trying to delete empty buckets.Create a new file for deleting a non-empty bucket:

vim del_non_empty_bucket.rb

[user@dev ~]$ vim del_non_empty_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x del_non_empty_bucket.rb

[user@dev ~]$ chmod +x del_non_empty_bucket.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./del_non_empty_bucket.rb | echo $?

[user@dev ~]$ ./del_non_empty_bucket.rb | echo $?Copy to Clipboard Copied! Toggle word wrap Toggle overflow If the bucket is successfully deleted, the command will return

0as output.Create a new file for deleting an object:

vim delete_object.rb

[user@dev ~]$ vim delete_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Make the file executable:

chmod +x delete_object.rb

[user@dev ~]$ chmod +x delete_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the file:

./delete_object.rb

[user@dev ~]$ ./delete_object.rbCopy to Clipboard Copied! Toggle word wrap Toggle overflow This will delete the object

hello.txt.

3.2.7. Accessing the Ceph Object Gateway using PHP

You can use PHP scripts for S3 access. This procedure provides some example PHP scripts to do various tasks, such as deleting a bucket or an object.

The examples given below are tested against php v5.4.16 and aws-sdk v2.8.24.

Prerequisites

- Root-level access to a development workstation.

- Internet access.

Procedure

Install the

phppackage:yum install php

[root@dev ~]# yum install phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

Download the zip archive of

aws-sdkfor PHP and extract it. Create a project directory:

mkdir php_s3 cd php_s3

[user@dev ~]$ mkdir php_s3 [user@dev ~]$ cd php_s3Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the extracted

awsdirectory to the project directory. For example:cp -r ~/Downloads/aws/ ~/php_s3/

[user@dev ~]$ cp -r ~/Downloads/aws/ ~/php_s3/Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the connection file:

vim conn.php

[user@dev ~]$ vim conn.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents in the

conn.phpfile:Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

FQDN_OF_GATEWAY_NODEwith the FQDN of the gateway node. ReplaceMY_ACCESS_KEYandMY_SECRET_KEYwith theaccess_keyandsecret_keythat were generated when creating theradosgwuser forS3access as mentioned in the Red Hat Ceph Storage Object Gateway Configuration and Administration Guide. ReplacePATH_TO_AWSwith the absolute path to the extractedawsdirectory that you copied to thephpproject directory.Save the file and exit the editor.

Run the file:

php -f conn.php | echo $?

[user@dev ~]$ php -f conn.php | echo $?Copy to Clipboard Copied! Toggle word wrap Toggle overflow If you have provided the values correctly in the file, the output of the command will be

0.Create a new file for creating a bucket:

vim create_bucket.php

[user@dev ~]$ vim create_bucket.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the new file:

Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Run the file:

php -f create_bucket.php

[user@dev ~]$ php -f create_bucket.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a new file for listing owned buckets:

vim list_owned_buckets.php

[user@dev ~]$ vim list_owned_buckets.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following content into the file:

Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Run the file:

php -f list_owned_buckets.php

[user@dev ~]$ php -f list_owned_buckets.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow The output should look similar to this:

my-new-bucket3 2020-01-21 10:33:19 UTC

my-new-bucket3 2020-01-21 10:33:19 UTCCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create an object by first creating a source file named

hello.txt:echo "Hello World!" > hello.txt

[user@dev ~]$ echo "Hello World!" > hello.txtCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a new php file:

vim create_object.php

[user@dev ~]$ vim create_object.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Run the file:

php -f create_object.php

[user@dev ~]$ php -f create_object.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow This will create the object

hello.txtin bucketmy-new-bucket3.Create a new file for listing a bucket’s content:

vim list_bucket_content.php

[user@dev ~]$ vim list_bucket_content.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following content into the file:

Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Run the file:

php -f list_bucket_content.php

[user@dev ~]$ php -f list_bucket_content.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow The output will look similar to this:

hello.txt 12 Fri, 22 Jan 2020 15:54:52 GMT

hello.txt 12 Fri, 22 Jan 2020 15:54:52 GMTCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a new file for deleting an empty bucket:

vim del_empty_bucket.php

[user@dev ~]$ vim del_empty_bucket.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Run the file:

php -f del_empty_bucket.php | echo $?

[user@dev ~]$ php -f del_empty_bucket.php | echo $?Copy to Clipboard Copied! Toggle word wrap Toggle overflow If the bucket is successfully deleted, the command will return

0as output.NoteEdit the

create_bucket.phpfile to create empty buckets, for example,my-new-bucket4,my-new-bucket5. Next, edit the above-mentioneddel_empty_bucket.phpfile accordingly before trying to delete empty buckets.ImportantDeleting a non-empty bucket is currently not supported in PHP 2 and newer versions of

aws-sdk.Create a new file for deleting an object:

vim delete_object.php

[user@dev ~]$ vim delete_object.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow Paste the following contents into the file:

Syntax

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the file and exit the editor.

Run the file:

php -f delete_object.php

[user@dev ~]$ php -f delete_object.phpCopy to Clipboard Copied! Toggle word wrap Toggle overflow This will delete the object

hello.txt.

3.2.8. Secure Token Service

The Amazon Web Services' Secure Token Service (STS) returns a set of temporary security credentials for authenticating users.

Red Hat Ceph Storage Object Gateway supports a subset of Amazon STS application programming interfaces (APIs) for identity and access management (IAM).

Users first authenticate against STS and receive a short-lived S3 access key and secret key that can be used in subsequent requests.

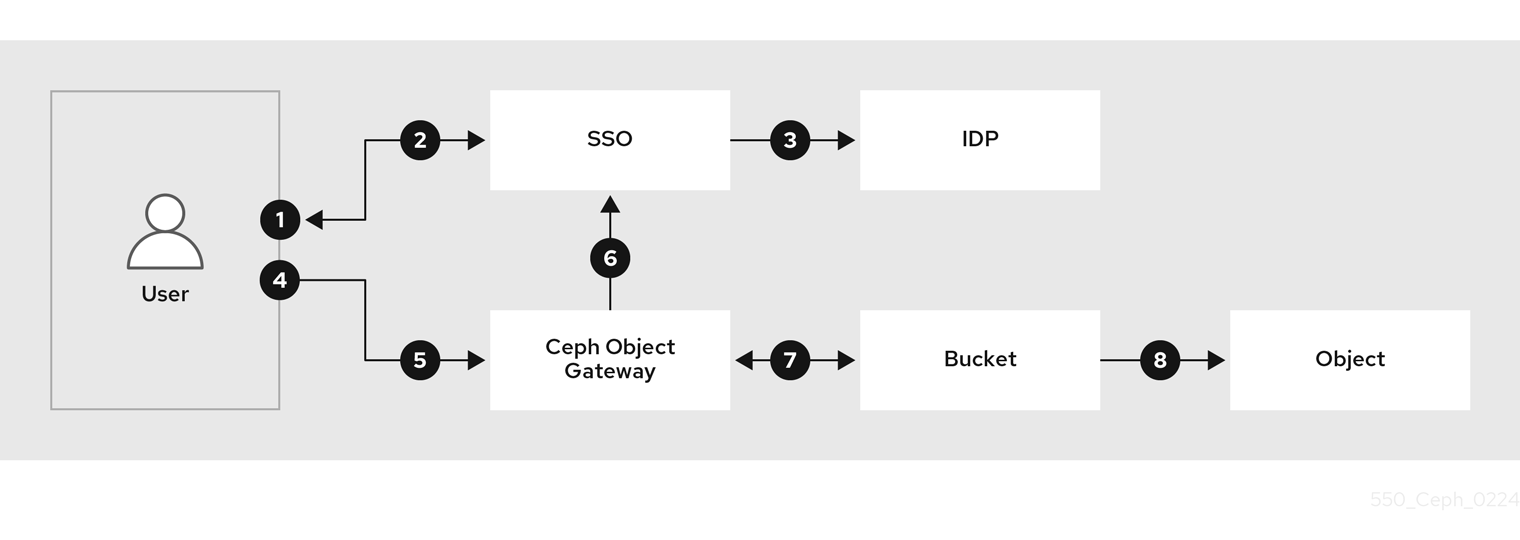

Red Hat Ceph Storage can authenticate S3 users by integrating with a Single Sign-On by configuring an OIDC provider. This feature enables Object Storage users to authenticate against an enterprise identity provider rather than the local Ceph Object Gateway database. For instance, if the SSO is connected to an enterprise IDP in the backend, Object Storage users can use their enterprise credentials to authenticate and get access to the Ceph Object Gateway S3 endpoint.

By using STS along with the IAM role policy feature, you can create finely tuned authorization policies to control access to your data. This enables you to implement either a Role-Based Access Control (RBAC) or Attribute-Based Access Control (ABAC) authorization model for your object storage data, giving you complete control over who can access the data.

Simplifies workflow to access S3 resources with STS

- The user wants access S3 resources in Red Hat Ceph Storage.

- The user needs to authenticate against the SSO provider.

- The SSO provider is federated with an IDP and checks if the user credentials are valid, the user gets authenticated and the SSO provides a Token to the user.

- Using the Token provided by the SSO, the user accesses the Ceph Object Gateway STS endpoint, asking to assume an IAM role that provides the user with access to S3 resources.

- The Red Hat Ceph Storage gateway receives the user token and asks the SSO to validate the token.

- Once the SSO validates the token, the user is allowed to assume the role. Through STS, the user is with temporary access and secret keys that give the user access to the S3 resources.

- Depending on the policies attached to the IAM role the user has assumed, the user can access a set of S3 resources.

- For example, read for bucket A and write to bucket B.

Additional Resources

- Amazon Web Services Secure Token Service welcome page.

- See the Configuring and using STS Lite with Keystone section of the Red Hat Ceph Storage Developer Guide for details on STS Lite and Keystone.

- See the Working around the limitations of using STS Lite with Keystone section of the Red Hat Ceph Storage Developer Guide for details on the limitations of STS Lite and Keystone.

3.2.8.1. The Secure Token Service application programming interfaces

The Ceph Object Gateway implements the following Secure Token Service (STS) application programming interfaces (APIs):

AssumeRole

This API returns a set of temporary credentials for cross-account access. These temporary credentials allow for both, permission policies attached with Role and policies attached with AssumeRole API. The RoleArn and the RoleSessionName request parameters are required, but the other request parameters are optional.

RoleArn- Description

- The role to assume for the Amazon Resource Name (ARN) with a length of 20 to 2048 characters.

- Type

- String

- Required

- Yes

RoleSessionName- Description

-

Identifying the role session name to assume. The role session name can uniquely identify a session when different principals or different reasons assume a role. This parameter’s value has a length of 2 to 64 characters. The

=,,,.,@, and-characters are allowed, but no spaces allowed. - Type

- String

- Required

- Yes

Policy- Description

- An identity and access management policy (IAM) in a JSON format for use in an inline session. This parameter’s value has a length of 1 to 2048 characters.

- Type

- String

- Required

- No

DurationSeconds- Description

-

The duration of the session in seconds, with a minimum value of

900seconds to a maximum value of43200seconds. The default value is3600seconds. - Type

- Integer

- Required

- No

ExternalId- Description

- When assuming a role for another account, provide the unique external identifier if available. This parameter’s value has a length of 2 to 1224 characters.

- Type

- String

- Required

- No

SerialNumber- Description

- A user’s identification number from their associated multi-factor authentication (MFA) device. The parameter’s value can be the serial number of a hardware device or a virtual device, with a length of 9 to 256 characters.

- Type

- String

- Required

- No

TokenCode- Description

- The value generated from the multi-factor authentication (MFA) device, if the trust policy requires MFA. If an MFA device is required, and if this parameter’s value is empty or expired, then AssumeRole call returns an "access denied" error message. This parameter’s value has a fixed length of 6 characters.

- Type

- String

- Required

- No

AssumeRoleWithWebIdentity

This API returns a set of temporary credentials for users who have been authenticated by an application, such as OpenID Connect or OAuth 2.0 Identity Provider. The RoleArn and the RoleSessionName request parameters are required, but the other request parameters are optional.

RoleArn- Description

- The role to assume for the Amazon Resource Name (ARN) with a length of 20 to 2048 characters.

- Type

- String

- Required

- Yes

RoleSessionName- Description

-

Identifying the role session name to assume. The role session name can uniquely identify a session when different principals or different reasons assume a role. This parameter’s value has a length of 2 to 64 characters. The

=,,,.,@, and-characters are allowed, but no spaces are allowed. - Type

- String

- Required

- Yes

Policy- Description

- An identity and access management policy (IAM) in a JSON format for use in an inline session. This parameter’s value has a length of 1 to 2048 characters.

- Type

- String

- Required

- No

DurationSeconds- Description

-

The duration of the session in seconds, with a minimum value of

900seconds to a maximum value of43200seconds. The default value is3600seconds. - Type

- Integer

- Required

- No

ProviderId- Description

- The fully qualified host component of the domain name from the identity provider. This parameter’s value is only valid for OAuth 2.0 access tokens, with a length of 4 to 2048 characters.

- Type

- String

- Required

- No

WebIdentityToken- Description

- The OpenID Connect identity token or OAuth 2.0 access token provided from an identity provider. This parameter’s value has a length of 4 to 2048 characters.

- Type

- String

- Required

- No

Additional Resources

- See the Examples using the Secure Token Service APIs section of the Red Hat Ceph Storage Developer Guide for more details.

- Amazon Web Services Security Token Service, the AssumeRole action.

- Amazon Web Services Security Token Service, the AssumeRoleWithWebIdentity action.

3.2.8.2. Configuring the Secure Token Service

Configure the Secure Token Service (STS) for use with the Ceph Object Gateway by setting the rgw_sts_key, and rgw_s3_auth_use_sts options.

The S3 and STS APIs co-exist in the same namespace, and both can be accessed from the same endpoint in the Ceph Object Gateway.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- A running Ceph Object Gateway.

- Root-level access to a Ceph Manager node.

Procedure

Set the following configuration options for the Ceph Object Gateway client:

Syntax

ceph config set RGW_CLIENT_NAME rgw_sts_key STS_KEY ceph config set RGW_CLIENT_NAME rgw_s3_auth_use_sts true

ceph config set RGW_CLIENT_NAME rgw_sts_key STS_KEY ceph config set RGW_CLIENT_NAME rgw_s3_auth_use_sts trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow The

rgw_sts_keyis the STS key for encrypting or decrypting the session token and is exactly 16 hex characters.ImportantThe STS key needs to be alphanumeric.

Example

ceph config set client.rgw rgw_sts_key 7f8fd8dd4700mnop ceph config set client.rgw rgw_s3_auth_use_sts true

[root@mgr ~]# ceph config set client.rgw rgw_sts_key 7f8fd8dd4700mnop [root@mgr ~]# ceph config set client.rgw rgw_s3_auth_use_sts trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow Restart the Ceph Object Gateway for the added key to take effect.

NoteUse the output from the

ceph orch pscommand, under theNAMEcolumn, to get the SERVICE_TYPE.ID information.To restart the Ceph Object Gateway on an individual node in the storage cluster:

Syntax

systemctl restart ceph-CLUSTER_ID@SERVICE_TYPE.ID.service

systemctl restart ceph-CLUSTER_ID@SERVICE_TYPE.ID.serviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example

systemctl restart ceph-c4b34c6f-8365-11ba-dc31-529020a7702d@rgw.realm.zone.host01.gwasto.service

[root@host01 ~]# systemctl restart ceph-c4b34c6f-8365-11ba-dc31-529020a7702d@rgw.realm.zone.host01.gwasto.serviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow To restart the Ceph Object Gateways on all nodes in the storage cluster:

Syntax

ceph orch restart SERVICE_TYPE

ceph orch restart SERVICE_TYPECopy to Clipboard Copied! Toggle word wrap Toggle overflow Example

[ceph: root@host01 /]# ceph orch restart rgw

[ceph: root@host01 /]# ceph orch restart rgwCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Additional Resources

- See Secure Token Service application programming interfaces section in the Red Hat Ceph Storage Developer Guide for more details on the STS APIs.

- See the The basics of Ceph configuration chapter in the Red Hat Ceph Storage Configuration Guide for more details on using the Ceph configuration database.

3.2.8.3. Creating a user for an OpenID Connect provider

To establish trust between the Ceph Object Gateway and the OpenID Connect Provider create a user entity and a role trust policy.

Prerequisites

- User-level access to the Ceph Object Gateway node.

- Secure Token Service configured.

Procedure

Create a new Ceph user:

Syntax

radosgw-admin --uid USER_NAME --display-name "DISPLAY_NAME" --access_key USER_NAME --secret SECRET user create

radosgw-admin --uid USER_NAME --display-name "DISPLAY_NAME" --access_key USER_NAME --secret SECRET user createCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example

radosgw-admin --uid TESTER --display-name "TestUser" --access_key TESTER --secret test123 user create

[user@rgw ~]$ radosgw-admin --uid TESTER --display-name "TestUser" --access_key TESTER --secret test123 user createCopy to Clipboard Copied! Toggle word wrap Toggle overflow Configure the Ceph user capabilities:

Syntax

radosgw-admin caps add --uid="USER_NAME" --caps="oidc-provider=*"

radosgw-admin caps add --uid="USER_NAME" --caps="oidc-provider=*"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example

radosgw-admin caps add --uid="TESTER" --caps="oidc-provider=*"

[user@rgw ~]$ radosgw-admin caps add --uid="TESTER" --caps="oidc-provider=*"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Add a condition to the role trust policy using the Secure Token Service (STS) API:

Syntax

"{\"Version\":\"2020-01-17\",\"Statement\":[{\"Effect\":\"Allow\",\"Principal\":{\"Federated\":[\"arn:aws:iam:::oidc-provider/IDP_URL\"]},\"Action\":[\"sts:AssumeRoleWithWebIdentity\"],\"Condition\":{\"StringEquals\":{\"IDP_URL:app_id\":\"AUD_FIELD\"\}\}\}\]\}""{\"Version\":\"2020-01-17\",\"Statement\":[{\"Effect\":\"Allow\",\"Principal\":{\"Federated\":[\"arn:aws:iam:::oidc-provider/IDP_URL\"]},\"Action\":[\"sts:AssumeRoleWithWebIdentity\"],\"Condition\":{\"StringEquals\":{\"IDP_URL:app_id\":\"AUD_FIELD\"\}\}\}\]\}"Copy to Clipboard Copied! Toggle word wrap Toggle overflow ImportantThe

app_idin the syntax example above must match theAUD_FIELDfield of the incoming token.

Additional Resources

- See the Obtaining the Root CA Thumbprint for an OpenID Connect Identity Provider article on Amazon’s website.

- See the Secure Token Service application programming interfaces section in the Red Hat Ceph Storage Developer Guide for more details on the STS APIs.

- See the Examples using the Secure Token Service APIs section of the Red Hat Ceph Storage Developer Guide for more details.

3.2.8.4. Obtaining a thumbprint of an OpenID Connect provider

Get the OpenID Connect provider’s (IDP) configuration document.

Any SSO that follows the OIDC protocol standards is expected to work with the Ceph Object Gateway. Red Hat has tested with the following SSO providers:

- Red Hat Single Sing-on

- Keycloak

Prerequisites

-

Installation of the

opensslandcurlpackages.

Procedure

Get the configuration document from the IDP’s URL:

Syntax

curl -k -v \ -X GET \ -H "Content-Type: application/x-www-form-urlencoded" \ "IDP_URL:8000/CONTEXT/realms/REALM/.well-known/openid-configuration" \ | jq .curl -k -v \ -X GET \ -H "Content-Type: application/x-www-form-urlencoded" \ "IDP_URL:8000/CONTEXT/realms/REALM/.well-known/openid-configuration" \ | jq .Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example

curl -k -v \ -X GET \ -H "Content-Type: application/x-www-form-urlencoded" \ "http://www.example.com:8000/auth/realms/quickstart/.well-known/openid-configuration" \ | jq .[user@client ~]$ curl -k -v \ -X GET \ -H "Content-Type: application/x-www-form-urlencoded" \ "http://www.example.com:8000/auth/realms/quickstart/.well-known/openid-configuration" \ | jq .Copy to Clipboard Copied! Toggle word wrap Toggle overflow Get the IDP certificate:

Syntax

curl -k -v \ -X GET \ -H "Content-Type: application/x-www-form-urlencoded" \ "IDP_URL/CONTEXT/realms/REALM/protocol/openid-connect/certs" \ | jq .curl -k -v \ -X GET \ -H "Content-Type: application/x-www-form-urlencoded" \ "IDP_URL/CONTEXT/realms/REALM/protocol/openid-connect/certs" \ | jq .Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example

curl -k -v \ -X GET \ -H "Content-Type: application/x-www-form-urlencoded" \ "http://www.example.com/auth/realms/quickstart/protocol/openid-connect/certs" \ | jq .[user@client ~]$ curl -k -v \ -X GET \ -H "Content-Type: application/x-www-form-urlencoded" \ "http://www.example.com/auth/realms/quickstart/protocol/openid-connect/certs" \ | jq .Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe

x5ccert can be available on the/certspath or in the/jwkspath depending on the SSO provider.Copy the result of the "x5c" response from the previous command and paste it into the

certificate.crtfile. Include—–BEGIN CERTIFICATE—–at the beginning and—–END CERTIFICATE—–at the end.Example

-----BEGIN CERTIFICATE----- MIIDYjCCAkqgAwIBAgIEEEd2CDANBgkqhkiG9w0BAQsFADBzMQkwBwYDVQQGEwAxCTAHBgNVBAgTADEJMAcGA1UEBxMAMQkwBwYDVQQKEwAxCTAHBgNVBAsTADE6MDgGA1UEAxMxYXV0aHN2Yy1pbmxpbmVtZmEuZGV2LnZlcmlmeS5pYm1jbG91ZHNlY3VyaXR5LmNvbTAeFw0yMTA3MDUxMzU2MzZaFw0zMTA3MDMxMzU2MzZaMHMxCTAHBgNVBAYTADEJMAcGA1UECBMAMQkwBwYDVQQHEwAxCTAHBgNVBAoTADEJMAcGA1UECxMAMTowOAYDVQQDEzFhdXRoc3ZjLWlubGluZW1mYS5kZXYudmVyaWZ5LmlibWNsb3Vkc2VjdXJpdHkuY29tMIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAphyu3HaAZ14JH/EXetZxtNnerNuqcnfxcmLhBz9SsTlFD59ta+BOVlRnK5SdYEqO3ws2iGEzTvC55rczF+hDVHFZEBJLVLQe8ABmi22RAtG1P0dA/Bq8ReFxpOFVWJUBc31QM+ummW0T4yw44wQJI51LZTMz7PznB0ScpObxKe+frFKd1TCMXPlWOSzmTeFYKzR83Fg9hsnz7Y8SKGxi+RoBbTLT+ektfWpR7O+oWZIf4INe1VYJRxZvn+qWcwI5uMRCtQkiMknc3Rj6Eupiqq6FlAjDs0p//EzsHAlW244jMYnHCGq0UP3oE7vViLJyiOmZw7J3rvs3m9mOQiPLoQIDAQABMA0GCSqGSIb3DQEBCwUAA4IBAQCeVqAzSh7Tp8LgaTIFUuRbdjBAKXC9Nw3+pRBHoiUTdhqO3ualyGih9m/js/clb8Vq/39zl0VPeaslWl2NNX9zaK7xo+ckVIOY3ucCaTC04ZUn1KzZu/7azlN0C5XSWg/CfXgU2P3BeMNzc1UNY1BASGyWn2lEplIVWKLaDZpNdSyyGyaoQAIBdzxeNCyzDfPCa2oSO8WH1czmFiNPqR5kdknHI96CmsQdi+DT4jwzVsYgrLfcHXmiWyIAb883hR3Pobp+Bsw7LUnxebQ5ewccjYmrJzOk5Wb5FpXBhaJH1B3AEd6RGalRUyc/zUKdvEy0nIRMDS9x2BP3NVvZSADD -----END CERTIFICATE-----

-----BEGIN CERTIFICATE----- MIIDYjCCAkqgAwIBAgIEEEd2CDANBgkqhkiG9w0BAQsFADBzMQkwBwYDVQQGEwAxCTAHBgNVBAgTADEJMAcGA1UEBxMAMQkwBwYDVQQKEwAxCTAHBgNVBAsTADE6MDgGA1UEAxMxYXV0aHN2Yy1pbmxpbmVtZmEuZGV2LnZlcmlmeS5pYm1jbG91ZHNlY3VyaXR5LmNvbTAeFw0yMTA3MDUxMzU2MzZaFw0zMTA3MDMxMzU2MzZaMHMxCTAHBgNVBAYTADEJMAcGA1UECBMAMQkwBwYDVQQHEwAxCTAHBgNVBAoTADEJMAcGA1UECxMAMTowOAYDVQQDEzFhdXRoc3ZjLWlubGluZW1mYS5kZXYudmVyaWZ5LmlibWNsb3Vkc2VjdXJpdHkuY29tMIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAphyu3HaAZ14JH/EXetZxtNnerNuqcnfxcmLhBz9SsTlFD59ta+BOVlRnK5SdYEqO3ws2iGEzTvC55rczF+hDVHFZEBJLVLQe8ABmi22RAtG1P0dA/Bq8ReFxpOFVWJUBc31QM+ummW0T4yw44wQJI51LZTMz7PznB0ScpObxKe+frFKd1TCMXPlWOSzmTeFYKzR83Fg9hsnz7Y8SKGxi+RoBbTLT+ektfWpR7O+oWZIf4INe1VYJRxZvn+qWcwI5uMRCtQkiMknc3Rj6Eupiqq6FlAjDs0p//EzsHAlW244jMYnHCGq0UP3oE7vViLJyiOmZw7J3rvs3m9mOQiPLoQIDAQABMA0GCSqGSIb3DQEBCwUAA4IBAQCeVqAzSh7Tp8LgaTIFUuRbdjBAKXC9Nw3+pRBHoiUTdhqO3ualyGih9m/js/clb8Vq/39zl0VPeaslWl2NNX9zaK7xo+ckVIOY3ucCaTC04ZUn1KzZu/7azlN0C5XSWg/CfXgU2P3BeMNzc1UNY1BASGyWn2lEplIVWKLaDZpNdSyyGyaoQAIBdzxeNCyzDfPCa2oSO8WH1czmFiNPqR5kdknHI96CmsQdi+DT4jwzVsYgrLfcHXmiWyIAb883hR3Pobp+Bsw7LUnxebQ5ewccjYmrJzOk5Wb5FpXBhaJH1B3AEd6RGalRUyc/zUKdvEy0nIRMDS9x2BP3NVvZSADD -----END CERTIFICATE-----Copy to Clipboard Copied! Toggle word wrap Toggle overflow Get the certificate thumbprint:

Syntax

openssl x509 -in CERT_FILE -fingerprint -noout

openssl x509 -in CERT_FILE -fingerprint -nooutCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example

openssl x509 -in certificate.crt -fingerprint -noout SHA1 Fingerprint=F7:D7:B3:51:5D:D0:D3:19:DD:21:9A:43:A9:EA:72:7A:D6:06:52:87

[user@client ~]$ openssl x509 -in certificate.crt -fingerprint -noout SHA1 Fingerprint=F7:D7:B3:51:5D:D0:D3:19:DD:21:9A:43:A9:EA:72:7A:D6:06:52:87Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Remove all the colons from the SHA1 fingerprint and use this as the input for creating the IDP entity in the IAM request.

Additional Resources

- See the Obtaining the Root CA Thumbprint for an OpenID Connect Identity Provider article on Amazon’s website.

- See the Secure Token Service application programming interfaces section in the Red Hat Ceph Storage Developer Guide for more details on the STS APIs.

- See the Examples using the Secure Token Service APIs section of the Red Hat Ceph Storage Developer Guide for more details.

3.2.8.5. Registering the OpenID Connect provider

Register the OpenID Connect provider’s (IDP) configuration document.

Prerequisites

-

Installation of the

opensslandcurlpackages. - Secure Token Service configured.

- User created for an OIDC provider.

- Thumbprint of an OIDC obtained.

Procedure

Extract URL from the token.

Example

bash check_token_isv.sh | jq .iss "https://keycloak-sso.apps.ocp.example.com/auth/realms/ceph"

[root@host01 ~]# bash check_token_isv.sh | jq .iss "https://keycloak-sso.apps.ocp.example.com/auth/realms/ceph"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Register the OIDC provider with Ceph Object Gateway.

Example

aws --endpoint https://cephproxy1.example.com:8443 iam create-open-id-connect-provider --url https://keycloak-sso.apps.ocp.example.com/auth/realms/ceph --thumbprint-list 00E9CFD697E0B16DD13C86B0FFDC29957E5D24DF

[root@host01 ~]# aws --endpoint https://cephproxy1.example.com:8443 iam create-open-id-connect-provider --url https://keycloak-sso.apps.ocp.example.com/auth/realms/ceph --thumbprint-list 00E9CFD697E0B16DD13C86B0FFDC29957E5D24DFCopy to Clipboard Copied! Toggle word wrap Toggle overflow Verify that the OIDC provider is added to the Ceph Object Gateway.

Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

3.2.8.6. Creating IAM roles and policies

Create IAM roles and policies.

Prerequisites

-

Installation of the

opensslandcurlpackages. - Secure Token Service configured.

- User created for an OIDC provider.

- Thumbprint of an OIDC obtained.

- The OIDC provider in Ceph Object Gateway registered.

Procedure

Retrieve and validate JWT token.

Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Verify the token.

Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow In this example, the jq filter is used by the subfield in the token and is set to ceph.

Create a JSON file with role properties. Set

StatementtoAllowand theActionasAssumeRoleWithWebIdentity. Allow access to any user with the JWT token that matches the condition withsub:ceph.Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a Ceph Object Gateway role using the JSON file.

Example

radosgw-admin role create --role-name rgwadmins \ --assume-role-policy-doc=$(jq -rc . /root/role-rgwadmins.json)

[root@host01 ~]# radosgw-admin role create --role-name rgwadmins \ --assume-role-policy-doc=$(jq -rc . /root/role-rgwadmins.json)Copy to Clipboard Copied! Toggle word wrap Toggle overflow

.

3.2.8.7. Accessing S3 resources

Verify the Assume Role with STS credentials to access S3 resources.

Prerequisites

-

Installation of the

opensslandcurlpackages. - Secure Token Service configured.

- User created for an OIDC provider.

- Thumbprint of an OIDC obtained.

- The OIDC provider in Ceph Object Gateway registered.

- IAM roles and policies created

Procedure

Following is an example of assume Role with STS to get temporary access and secret key to access S3 resources.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Run the script.

Example

source ./test-assume-role.sh s3admin passw0rd rgwadmins aws s3 mb s3://testbucket aws s3 ls

[root@host01 ~]# source ./test-assume-role.sh s3admin passw0rd rgwadmins [root@host01 ~]# aws s3 mb s3://testbucket [root@host01 ~]# aws s3 lsCopy to Clipboard Copied! Toggle word wrap Toggle overflow

3.2.9. Configuring and using STS Lite with Keystone (Technology Preview)

The Amazon Secure Token Service (STS) and S3 APIs co-exist in the same namespace. The STS options can be configured in conjunction with the Keystone options.

Both S3 and STS APIs can be accessed using the same endpoint in Ceph Object Gateway.

Prerequisites

- Red Hat Ceph Storage 5.0 or higher.

- A running Ceph Object Gateway.

- Installation of the Boto Python module, version 3 or higher.

- Root-level access to a Ceph Manager node.

- User-level access to an OpenStack node.

Procedure

Set the following configuration options for the Ceph Object Gateway client:

Syntax

ceph config set RGW_CLIENT_NAME rgw_sts_key STS_KEY ceph config set RGW_CLIENT_NAME rgw_s3_auth_use_sts true

ceph config set RGW_CLIENT_NAME rgw_sts_key STS_KEY ceph config set RGW_CLIENT_NAME rgw_s3_auth_use_sts trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow The

rgw_sts_keyis the STS key for encrypting or decrypting the session token and is exactly 16 hex characters.ImportantThe STS key needs to be alphanumeric.

Example

ceph config set client.rgw rgw_sts_key 7f8fd8dd4700mnop ceph config set client.rgw rgw_s3_auth_use_sts true

[root@mgr ~]# ceph config set client.rgw rgw_sts_key 7f8fd8dd4700mnop [root@mgr ~]# ceph config set client.rgw rgw_s3_auth_use_sts trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow Generate the EC2 credentials on the OpenStack node:

Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Use the generated credentials to get back a set of temporary security credentials using GetSessionToken API:

Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Obtaining the temporary credentials can be used for making S3 calls:

Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a new S3Access role and configure a policy.

Assign a user with administrative CAPS:

Syntax

radosgw-admin caps add --uid="USER" --caps="roles=*"

radosgw-admin caps add --uid="USER" --caps="roles=*"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example

radosgw-admin caps add --uid="gwadmin" --caps="roles=*"

[root@mgr ~]# radosgw-admin caps add --uid="gwadmin" --caps="roles=*"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the S3Access role:

Syntax

radosgw-admin role create --role-name=ROLE_NAME --path=PATH --assume-role-policy-doc=TRUST_POLICY_DOC

radosgw-admin role create --role-name=ROLE_NAME --path=PATH --assume-role-policy-doc=TRUST_POLICY_DOCCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example

radosgw-admin role create --role-name=S3Access --path=/application_abc/component_xyz/ --assume-role-policy-doc=\{\"Version\":\"2012-10-17\",\"Statement\":\[\{\"Effect\":\"Allow\",\"Principal\":\{\"AWS\":\[\"arn:aws:iam:::user/TESTER\"\]\},\"Action\":\[\"sts:AssumeRole\"\]\}\]\}[root@mgr ~]# radosgw-admin role create --role-name=S3Access --path=/application_abc/component_xyz/ --assume-role-policy-doc=\{\"Version\":\"2012-10-17\",\"Statement\":\[\{\"Effect\":\"Allow\",\"Principal\":\{\"AWS\":\[\"arn:aws:iam:::user/TESTER\"\]\},\"Action\":\[\"sts:AssumeRole\"\]\}\]\}Copy to Clipboard Copied! Toggle word wrap Toggle overflow Attach a permission policy to the S3Access role:

Syntax

radosgw-admin role-policy put --role-name=ROLE_NAME --policy-name=POLICY_NAME --policy-doc=PERMISSION_POLICY_DOC

radosgw-admin role-policy put --role-name=ROLE_NAME --policy-name=POLICY_NAME --policy-doc=PERMISSION_POLICY_DOCCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example

radosgw-admin role-policy put --role-name=S3Access --policy-name=Policy --policy-doc=\{\"Version\":\"2012-10-17\",\"Statement\":\[\{\"Effect\":\"Allow\",\"Action\":\[\"s3:*\"\],\"Resource\":\"arn:aws:s3:::example_bucket\"\}\]\}[root@mgr ~]# radosgw-admin role-policy put --role-name=S3Access --policy-name=Policy --policy-doc=\{\"Version\":\"2012-10-17\",\"Statement\":\[\{\"Effect\":\"Allow\",\"Action\":\[\"s3:*\"\],\"Resource\":\"arn:aws:s3:::example_bucket\"\}\]\}Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

Now another user can assume the role of the

gwadminuser. For example, thegwuseruser can assume the permissions of thegwadminuser. Make a note of the assuming user’s

access_keyandsecret_keyvalues.Example

radosgw-admin user info --uid=gwuser | grep -A1 access_key

[root@mgr ~]# radosgw-admin user info --uid=gwuser | grep -A1 access_keyCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Use the AssumeRole API call, providing the

access_keyandsecret_keyvalues from the assuming user:Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow ImportantThe AssumeRole API requires the S3Access role.

Additional Resources

- See the Test S3 Access section in the Red Hat Ceph Storage Object Gateway Guide for more information on installing the Boto Python module.

- See the Create a User section in the Red Hat Ceph Storage Object Gateway Guide for more information.

3.2.10. Working around the limitations of using STS Lite with Keystone (Technology Preview)

A limitation with Keystone is that it does not supports Secure Token Service (STS) requests. Another limitation is the payload hash is not included with the request. To work around these two limitations the Boto authentication code must be modified.

Prerequisites

- A running Red Hat Ceph Storage cluster, version 5.0 or higher.

- A running Ceph Object Gateway.

- Installation of Boto Python module, version 3 or higher.

Procedure

Open and edit Boto’s

auth.pyfile.Add the following four lines to the code block:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Add the following two lines to the code block:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Additional Resources

- See the Test S3 Access section in the Red Hat Ceph Storage Object Gateway Guide for more information on installing the Boto Python module.

3.3. S3 bucket operations

As a developer, you can perform bucket operations with the Amazon S3 application programming interface (API) through the Ceph Object Gateway.

The following table list the Amazon S3 functional operations for buckets, along with the function’s support status.

| Feature | Status | Notes |

|---|---|---|

| Supported | ||

| Supported | Different set of canned ACLs. | |

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Partially Supported |

| |

| Partially Supported |

| |

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | Different set of canned ACLs | |

| Supported | Different set of canned ACLs | |

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Partially Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported | ||

| Supported |

Prerequisites

- A running Red Hat Ceph Storage cluster.

- A RESTful client.

3.3.1. S3 create bucket notifications

Create bucket notifications at the bucket level. The notification configuration has the Red Hat Ceph Storage Object Gateway S3 events, ObjectCreated, ObjectRemoved, and ObjectLifecycle:Expiration. These need to be published and the destination to send the bucket notifications. Bucket notifications are S3 operations.

To create a bucket notification for s3:objectCreate, s3:objectRemove and s3:ObjectLifecycle:Expiration events, use PUT:

Example

Red Hat supports ObjectCreate events, such as put, post, multipartUpload, and copy. Red Hat also supports ObjectRemove events, such as object_delete and s3_multi_object_delete.

Request Entities

NotificationConfiguration- Description

-

list of

TopicConfigurationentities. - Type

- Container

- Required

- Yes

TopicConfiguration- Description

-

Id,Topic, andlistof Event entities. - Type

- Container

- Required

- Yes

id- Description

- Name of the notification.

- Type

- String

- Required

- Yes

Topic- Description

Topic Amazon Resource Name(ARN)

NoteThe topic must be created beforehand.

- Type

- String

- Required

- Yes

Event- Description

- List of supported events. Multiple event entities can be used. If omitted, all events are handled.

- Type

- String

- Required

- No

Filter- Description

-

S3Key,S3MetadataandS3Tagsentities. - Type

- Container

- Required

- No

S3Key- Description

-

A list of

FilterRuleentities, for filtering based on the object key. At most, 3 entities may be in the list, for exampleNamewould beprefix,suffix, orregex. All filter rules in the list must match for the filter to match. - Type

- Container

- Required

- No

S3Metadata- Description

-

A list of

FilterRuleentities, for filtering based on object metadata. All filter rules in the list must match the metadata defined on the object. However, the object still matches if it has other metadata entries not listed in the filter. - Type

- Container

- Required

- No

S3Tags- Description

-

A list of

FilterRuleentities, for filtering based on object tags. All filter rules in the list must match the tags defined on the object. However, the object still matches if it has other tags not listed in the filter. - Type

- Container

- Required

- No

S3Key.FilterRule- Description

-

NameandValueentities. Name is :prefix,suffix, orregex. TheValuewould hold the key prefix, key suffix, or a regular expression for matching the key, accordingly. - Type

- Container

- Required

- Yes

S3Metadata.FilterRule- Description

-

NameandValueentities. Name is the name of the metadata attribute for examplex-amz-meta-xxx. The value is the expected value for this attribute. - Type

- Container

- Required

- Yes

S3Tags.FilterRule- Description

-

NameandValueentities. Name is the tag key, and the value is the tag value. - Type

- Container

- Required

- Yes

HTTP response

400- Status Code

-

MalformedXML - Description

- The XML is not well-formed.

400- Status Code

-

InvalidArgument - Description

- Missing Id or missing or invalid topic ARN or invalid event.

404- Status Code

-

NoSuchBucket - Description

- The bucket does not exist.

404- Status Code

-

NoSuchKey - Description

- The topic does not exist.

3.3.2. S3 get bucket notifications

Get a specific notification or list all the notifications configured on a bucket.

Syntax

Get /BUCKET?notification=NOTIFICATION_ID HTTP/1.1 Host: cname.domain.com Date: date Authorization: AWS ACCESS_KEY:HASH_OF_HEADER_AND_SECRET

Get /BUCKET?notification=NOTIFICATION_ID HTTP/1.1

Host: cname.domain.com

Date: date

Authorization: AWS ACCESS_KEY:HASH_OF_HEADER_AND_SECRETExample

Get /testbucket?notification=testnotificationID HTTP/1.1 Host: cname.domain.com Date: date Authorization: AWS ACCESS_KEY:HASH_OF_HEADER_AND_SECRET

Get /testbucket?notification=testnotificationID HTTP/1.1

Host: cname.domain.com

Date: date

Authorization: AWS ACCESS_KEY:HASH_OF_HEADER_AND_SECRETExample Response

The notification subresource returns the bucket notification configuration or an empty NotificationConfiguration element. The caller must be the bucket owner.

Request Entities

notification-id- Description

- Name of the notification. All notifications are listed if the ID is not provided.

- Type

- String

NotificationConfiguration- Description

-

list of

TopicConfigurationentities. - Type

- Container

- Required

- Yes

TopicConfiguration- Description

-

Id,Topic, andlistof Event entities. - Type

- Container

- Required

- Yes

id- Description

- Name of the notification.

- Type

- String

- Required

- Yes

Topic- Description

Topic Amazon Resource Name(ARN)

NoteThe topic must be created beforehand.

- Type

- String

- Required

- Yes

Event- Description

- Handled event. Multiple event entities may exist.

- Type

- String

- Required

- Yes

Filter- Description

- The filters for the specified configuration.

- Type

- Container

- Required

- No

HTTP response

404- Status Code

-

NoSuchBucket - Description

- The bucket does not exist.

404- Status Code

-

NoSuchKey - Description

- The notification does not exist if it has been provided.

3.3.3. S3 delete bucket notifications

Delete a specific or all notifications from a bucket.

Notification deletion is an extension to the S3 notification API. Any defined notifications on a bucket are deleted when the bucket is deleted. Deleting an unknown notification for example double delete, is not considered an error.

To delete a specific or all notifications use DELETE:

Syntax

DELETE /BUCKET?notification=NOTIFICATION_ID HTTP/1.1

DELETE /BUCKET?notification=NOTIFICATION_ID HTTP/1.1Example

DELETE /testbucket?notification=testnotificationID HTTP/1.1

DELETE /testbucket?notification=testnotificationID HTTP/1.1Request Entities

notification-id- Description

- Name of the notification. All notifications on the bucket are deleted if the notification ID is not provided.

- Type

- String

HTTP response

404- Status Code

-

NoSuchBucket - Description

- The bucket does not exist.

3.3.4. Accessing bucket host names

There are two different modes of accessing the buckets. The first, and preferred method identifies the bucket as the top-level directory in the URI.

Example

GET /mybucket HTTP/1.1 Host: cname.domain.com

GET /mybucket HTTP/1.1

Host: cname.domain.comThe second method identifies the bucket via a virtual bucket host name.

Example

GET / HTTP/1.1 Host: mybucket.cname.domain.com

GET / HTTP/1.1

Host: mybucket.cname.domain.comRed Hat prefers the first method, because the second method requires expensive domain certification and DNS wild cards.

3.3.5. S3 list buckets

GET / returns a list of buckets created by the user making the request. GET / only returns buckets created by an authenticated user. You cannot make an anonymous request.

Syntax

GET / HTTP/1.1 Host: cname.domain.com Authorization: AWS ACCESS_KEY:HASH_OF_HEADER_AND_SECRET

GET / HTTP/1.1

Host: cname.domain.com

Authorization: AWS ACCESS_KEY:HASH_OF_HEADER_AND_SECRETResponse Entities

Buckets- Description

- Container for list of buckets.

- Type

- Container

Bucket- Description

- Container for bucket information.

- Type

- Container

Name- Description

- Bucket name.

- Type

- String

CreationDate- Description

- UTC time when the bucket was created.

- Type

- Date

ListAllMyBucketsResult- Description

- A container for the result.

- Type

- Container

Owner- Description

-

A container for the bucket owner’s

IDandDisplayName. - Type

- Container

ID- Description

- The bucket owner’s ID.

- Type

- String

DisplayName- Description

- The bucket owner’s display name.

- Type

- String

3.3.6. S3 return a list of bucket objects

Returns a list of bucket objects.

Syntax

GET /BUCKET?max-keys=25 HTTP/1.1 Host: cname.domain.com

GET /BUCKET?max-keys=25 HTTP/1.1

Host: cname.domain.comParameters

prefix- Description

- Only returns objects that contain the specified prefix.

- Type

- String

delimiter- Description

- The delimiter between the prefix and the rest of the object name.

- Type

- String

marker- Description

- A beginning index for the list of objects returned.

- Type

- String

max-keys- Description

- The maximum number of keys to return. Default is 1000.

- Type

- Integer

HTTP Response

200- Status Code

-

OK - Description

- Buckets retrieved.

GET /BUCKET returns a container for buckets with the following fields:

Bucket Response Entities

ListBucketResult- Description

- The container for the list of objects.

- Type

- Entity

Name- Description

- The name of the bucket whose contents will be returned.

- Type

- String

Prefix- Description

- A prefix for the object keys.

- Type

- String

Marker- Description

- A beginning index for the list of objects returned.

- Type

- String

MaxKeys- Description

- The maximum number of keys returned.

- Type

- Integer

Delimiter- Description

-

If set, objects with the same prefix will appear in the

CommonPrefixeslist. - Type

- String

IsTruncated- Description

-

If

true, only a subset of the bucket’s contents were returned. - Type

- Boolean

CommonPrefixes- Description

- If multiple objects contain the same prefix, they will appear in this list.

- Type

- Container

The ListBucketResult contains objects, where each object is within a Contents container.

Object Response Entities

Contents- Description

- A container for the object.

- Type

- Object

Key- Description

- The object’s key.

- Type

- String

LastModified- Description

- The object’s last-modified date and time.

- Type

- Date

ETag- Description

- An MD-5 hash of the object. Etag is an entity tag.

- Type

- String

Size- Description

- The object’s size.

- Type

- Integer

StorageClass- Description

-

Should always return

STANDARD. - Type

- String

3.3.7. S3 create a new bucket

Creates a new bucket. To create a bucket, you must have a user ID and a valid AWS Access Key ID to authenticate requests. You can not create buckets as an anonymous user.

Constraints

In general, bucket names should follow domain name constraints.

- Bucket names must be unique.

- Bucket names cannot be formatted as IP address.

- Bucket names can be between 3 and 63 characters long.

- Bucket names must not contain uppercase characters or underscores.

- Bucket names must start with a lowercase letter or number.

- Bucket names can contain a dash (-).

- Bucket names must be a series of one or more labels. Adjacent labels are separated by a single period (.). Bucket names can contain lowercase letters, numbers, and hyphens. Each label must start and end with a lowercase letter or a number.

The above constraints are relaxed if rgw_relaxed_s3_bucket_names is set to true. The bucket names must still be unique, cannot be formatted as IP address, and can contain letters, numbers, periods, dashes, and underscores of up to 255 characters long.

Syntax

PUT /BUCKET HTTP/1.1 Host: cname.domain.com x-amz-acl: public-read-write Authorization: AWS ACCESS_KEY:HASH_OF_HEADER_AND_SECRET

PUT /BUCKET HTTP/1.1

Host: cname.domain.com

x-amz-acl: public-read-write

Authorization: AWS ACCESS_KEY:HASH_OF_HEADER_AND_SECRETParameters

x-amz-acl- Description

- Canned ACLs.

- Valid Values

-

private,public-read,public-read-write,authenticated-read - Required

- No

HTTP Response

If the bucket name is unique, within constraints, and unused, the operation will succeed. If a bucket with the same name already exists and the user is the bucket owner, the operation will succeed. If the bucket name is already in use, the operation will fail.

409- Status Code

-

BucketAlreadyExists - Description

- Bucket already exists under different user’s ownership.

3.3.8. S3 put bucket website

The put bucket website API sets the configuration of the website that is specified in the website subresource. To configure a bucket as a website, the website subresource can be added on the bucket.

Put operation requires S3:PutBucketWebsite permission. By default, only the bucket owner can configure the website attached to a bucket.

Syntax

PUT /BUCKET?website-configuration=HTTP/1.1

PUT /BUCKET?website-configuration=HTTP/1.1Example

PUT /testbucket?website-configuration=HTTP/1.1

PUT /testbucket?website-configuration=HTTP/1.13.3.9. S3 get bucket website

The get bucket website API retrieves the configuration of the website that is specified in the website subresource.

Get operation requires the S3:GetBucketWebsite permission. By default, only the bucket owner can read the bucket website configuration.

Syntax

GET /BUCKET?website-configuration=HTTP/1.1

GET /BUCKET?website-configuration=HTTP/1.1Example

GET /testbucket?website-configuration=HTTP/1.1

GET /testbucket?website-configuration=HTTP/1.13.3.10. S3 delete bucket website

The delete bucket website API removes the website configuration for a bucket.

Syntax

DELETE /BUCKET?website-configuration=HTTP/1.1

DELETE /BUCKET?website-configuration=HTTP/1.1Example

DELETE /testbucket?website-configuration=HTTP/1.1

DELETE /testbucket?website-configuration=HTTP/1.13.3.11. S3 put bucket replication

The put bucket replication API configures replication configuration for a bucket or replaces an existing one.

Syntax

PUT /BUCKET?replication HTTP/1.1

PUT /BUCKET?replication HTTP/1.1Example

PUT /testbucket?replication HTTP/1.1

PUT /testbucket?replication HTTP/1.13.3.12. S3 get bucket replication

The get bucket replication API returns the replication configuration of a bucket.

Syntax

GET /BUCKET?replication HTTP/1.1

GET /BUCKET?replication HTTP/1.1Example

GET /testbucket?replication HTTP/1.1

GET /testbucket?replication HTTP/1.13.3.13. S3 delete bucket replication

The delete bucket replication API deletes the replication configuration from a bucket.

Syntax

DELETE /BUCKET?replication HTTP/1.1

DELETE /BUCKET?replication HTTP/1.1Example

DELETE /testbucket?replication HTTP/1.1

DELETE /testbucket?replication HTTP/1.13.3.14. S3 delete a bucket

Deletes a bucket. You can reuse bucket names following a successful bucket removal.

Syntax

DELETE /BUCKET HTTP/1.1 Host: cname.domain.com Authorization: AWS ACCESS_KEY:HASH_OF_HEADER_AND_SECRET