
Chapter 45. Set Up Cross-Datacenter Replication

45.1. Cross-Datacenter Replication

In Red Hat JBoss Data Grid, Cross-Datacenter Replication allows the administrator to create data backups in multiple clusters. These clusters can be at the same physical location or different ones. JBoss Data Grid’s Cross-Site Replication implementation is based on JGroups' RELAY2 protocol.

Cross-Datacenter Replication ensures data redundancy across clusters. In addition to creating backups for data restoration, these datasets may also be used in an active-active mode. When configured in this manner, systems in separate environments can handle sessions if one cluster fails. Ideally, each of these clusters should be in a different physical location than the others.

45.2. Cross-Datacenter Replication Operations

Red Hat JBoss Data Grid’s Cross-Datacenter Replication operation is explained through the use of an example, as follows:

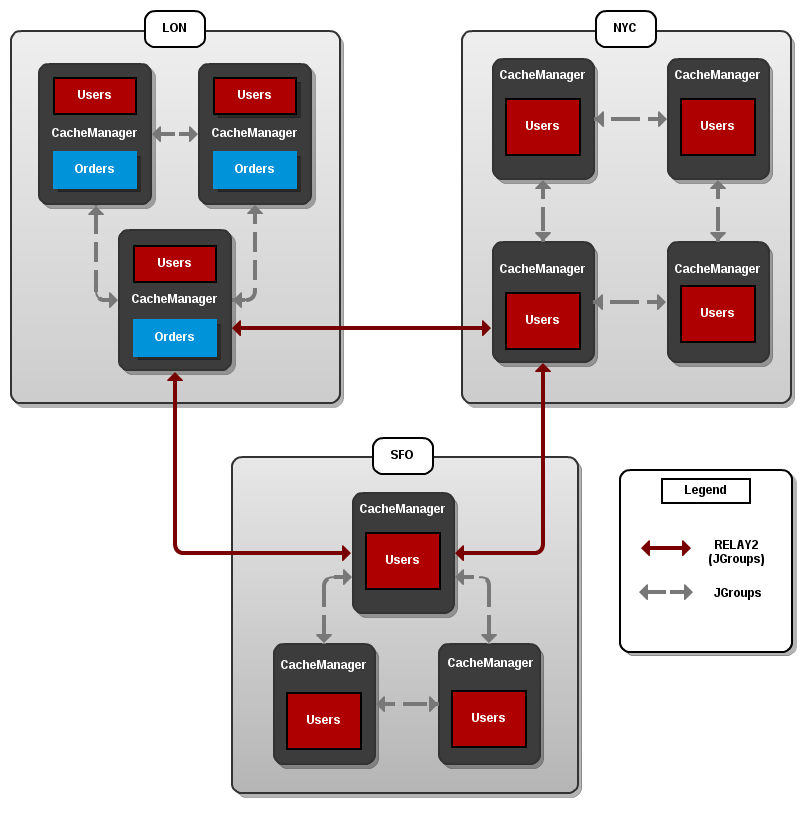

Figure 45.1. Cross-Datacenter Replication Example

Three sites are configured in this example: LON, NYC and SFO. Each site hosts a running JBoss Data Grid cluster made up of three to four physical nodes.

The Users cache is active in all three sites: LON, NYC and SFO. Changes to the Users cache at any one of these sites are replicated to the other two, as long as the cache defines the other two sites as its backups through configuration. The Orders cache, however, is only available locally at the LON site because it is not replicated to the other sites.

The Users cache can use a different replication mechanism in each site. For example, it can back up data synchronously to SFO and asynchronously to NYC and LON.

The Users cache can also have a different configuration from one site to another. For example, it can be configured as a distributed cache with owners set to 2 in the LON site, as a replicated cache in the NYC site and as a distributed cache with owners set to 1 in the SFO site.

JGroups is used for communication within each site as well as inter-site communication. Specifically, a JGroups protocol called RELAY2 facilitates communication between sites. For more information, refer to the RELAY2 section in the JBoss Data Grid Administration Guide.

45.3. Configure Cross-Datacenter Replication Programmatically

The programmatic method to configure cross-datacenter replication in Red Hat JBoss Data Grid is as follows:

Configure Cross-Datacenter Replication Programmatically

Identify the Node Location

Declare the site the node resides in:

globalConfiguration.site().localSite("LON");

Configure JGroups

Configure JGroups to use the RELAY protocol:

globalConfiguration.transport().addProperty("configurationFile", "jgroups-with-relay.xml");

Set Up the Remote Site

Set up JBoss Data Grid caches to replicate to the remote site:

Optional: Configure the Backup Caches

JBoss Data Grid implicitly replicates data to the cache on the remote site that has the same name as the local cache. If a backup cache on the remote site has a different name, users must specify a backupFor cache to ensure data is replicated to the correct cache.

Note: This step is optional and only required if the remote site’s caches are named differently from the original caches.

Configure the cache in site NYC to receive backup data from LON:

ConfigurationBuilder NYCbackupOfLon = new ConfigurationBuilder();
NYCbackupOfLon.sites().backupFor().remoteCache("lon").remoteSite("LON");

Configure the cache in site SFO to receive backup data from LON:

ConfigurationBuilder SFObackupOfLon = new ConfigurationBuilder();
SFObackupOfLon.sites().backupFor().remoteCache("lon").remoteSite("LON");

Add the Contents of the Configuration File

As a default, Red Hat JBoss Data Grid includes JGroups configuration files such as default-configs/default-jgroups-tcp.xml and default-configs/default-jgroups-udp.xml in the infinispan-embedded-{VERSION}.jar package.

Copy the JGroups configuration to a new file (in this example, it is named jgroups-with-relay.xml) and add the provided configuration information to this file. Note that the relay.RELAY2 protocol configuration must be the last protocol in the configuration stack.

Configure the relay.xml File

Set up the relay.RELAY2 configuration in the relay.xml file. This file describes the global cluster configuration.

Configure the Global Cluster

The file jgroups-global.xml referenced in relay.xml contains another JGroups configuration which is used for the global cluster: communication between sites.

The global cluster configuration is usually TCP-based and uses the TCPPING protocol (instead of PING or MPING) to discover members. Copy the contents of default-configs/default-jgroups-tcp.xml into jgroups-global.xml and add the following configuration in order to configure TCPPING:

Replace the hostnames (or IP addresses) in TCPPING.initial_hosts with those used for your site masters. The ports (7800 in this example) must match the TCP.bind_port.

For more information about the TCPPING protocol, refer to the JBoss Data Grid Administration and Configuration Guide.
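As a small illustration of the TCPPING.initial_hosts value, the sketch below builds the comma-separated host[port] list from a set of site-master hostnames. The class name InitialHosts, the method format, and the example.com hostnames are hypothetical and used only for illustration. They are not part of JGroups or JBoss Data Grid.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper for illustration only; not part of JGroups or JBoss Data Grid. */
final class InitialHosts {

    /** Formats site-master hosts as "host1[port],host2[port]" for TCPPING.initial_hosts. */
    static String format(List<String> siteMasters, int bindPort) {
        if (siteMasters.isEmpty()) {
            throw new IllegalArgumentException("at least one site master is required");
        }
        return siteMasters.stream()
                .map(host -> host + "[" + bindPort + "]")
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // Placeholder hostnames; the port must equal TCP.bind_port in jgroups-global.xml.
        List<String> masters = List.of("lon.example.com", "nyc.example.com", "sfo.example.com");
        System.out.println(format(masters, 7800));
    }
}
```

Generating the value from a single port argument keeps every entry aligned with the one TCP.bind_port setting, which is the constraint stated above.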

45.4. Taking a Site Offline

In Red Hat JBoss Data Grid’s Cross-Datacenter Replication configuration, if backing up to one site fails a certain number of times during a time interval, that site can be marked as offline automatically. This feature removes the need for manual intervention by an administrator to mark the site as offline.
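The rule described above can be pictured with a simplified model. This is a sketch, not JBoss Data Grid code. It assumes that a site is marked offline once a configured number of consecutive backup failures has been reached and a minimum time has elapsed since the first of those failures. The class TakeOfflineModel and the parameters failureThreshold and minWaitMillis are hypothetical names chosen for this illustration.

```java
/** Simplified, hypothetical model of an automatic take-offline rule; not JBoss Data Grid code. */
final class TakeOfflineModel {
    private final int failureThreshold; // failures required before the site can go offline
    private final long minWaitMillis;   // minimum time that must pass since the first failure
    private int failures;
    private long firstFailureAt;
    private boolean offline;

    TakeOfflineModel(int failureThreshold, long minWaitMillis) {
        this.failureThreshold = failureThreshold;
        this.minWaitMillis = minWaitMillis;
    }

    /** Records a failed backup attempt observed at the given timestamp (milliseconds). */
    void backupFailed(long nowMillis) {
        if (failures == 0) {
            firstFailureAt = nowMillis; // start of the current failure streak
        }
        failures++;
        if (failures >= failureThreshold && nowMillis - firstFailureAt >= minWaitMillis) {
            offline = true;
        }
    }

    /** A successful backup ends the failure streak; a site already marked offline stays offline. */
    void backupSucceeded() {
        failures = 0;
    }

    boolean isOffline() {
        return offline;
    }

    public static void main(String[] args) {
        TakeOfflineModel nyc = new TakeOfflineModel(3, 1_000);
        nyc.backupFailed(0);
        nyc.backupFailed(200);
        nyc.backupFailed(400);               // threshold reached, but only 400 ms have elapsed
        System.out.println(nyc.isOffline()); // false
        nyc.backupFailed(1_200);             // 1200 ms since the first failure
        System.out.println(nyc.isOffline()); // true
    }
}
```

Requiring both conditions means a short burst of failures does not immediately take a site offline, while a sustained outage does.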

To configure a Cross-Datacenter Replication site to be taken offline automatically using the programmatic method in Red Hat JBoss Data Grid:

Taking a Site Offline Programmatically

45.5. Hot Rod Cross Site Cluster Failover

Besides in-cluster failover, Hot Rod clients can fail over to different clusters, each representing an independent site. Hot Rod Cross Site cluster failover is available in both automatic and manual modes.

Automatic Cross Site Cluster Failover

If the main/primary cluster nodes are unavailable, the client application checks for alternatively defined clusters and attempts to fail over to them. Upon successful failover, the client remains connected to the alternative cluster until it becomes unavailable. After that, the client tries to fail over to the other defined clusters and finally switches back to the main/primary cluster with the original server settings if connectivity is restored.
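One possible reading of this failover order is sketched below as a plain Java model. It is not Hot Rod client code and makes several assumptions: clusters are tried in the order they are defined, the main/primary cluster is the first entry, and the search wraps around so that the main cluster is reconsidered after the remaining alternatives. The class FailoverOrderModel, the method next, and the cluster names are hypothetical.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

/** Hypothetical model of the failover order described above; not Hot Rod client code. */
final class FailoverOrderModel {

    /**
     * Returns the next reachable cluster after {@code current}, scanning the other
     * clusters in definition order and wrapping around to the start of the list.
     * Index 0 is assumed to be the main/primary cluster.
     */
    static Optional<String> next(List<String> clusters, String current, Predicate<String> reachable) {
        int start = clusters.indexOf(current);
        if (start < 0) {
            throw new IllegalArgumentException("unknown cluster: " + current);
        }
        for (int step = 1; step < clusters.size(); step++) {
            String candidate = clusters.get((start + step) % clusters.size());
            if (reachable.test(candidate)) {
                return Optional.of(candidate);
            }
        }
        return Optional.empty(); // no other cluster is reachable
    }

    public static void main(String[] args) {
        List<String> clusters = List.of("main", "nyc", "sfo"); // placeholder cluster names
        // The client is on "nyc", "sfo" is down, and "main" is reachable again.
        System.out.println(next(clusters, "nyc", c -> c.equals("main")));
    }
}
```

In this model the client connected to the second cluster first considers the third cluster and only then returns to the main cluster, which mirrors the sequence in the paragraph above.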

To configure an alternative cluster in the Hot Rod client, provide details of at least one host/port pair for each of the clusters configured as shown in the following example.

Configure Alternate Cluster

org.infinispan.client.hotrod.configuration.ConfigurationBuilder cb
      = new org.infinispan.client.hotrod.configuration.ConfigurationBuilder();
cb.addCluster("remote-cluster").addClusterNode("remote-cluster-host", 11222);
RemoteCacheManager rcm = new RemoteCacheManager(cb.build());

Regardless of the cluster definitions, the initial server(s) configuration must be provided unless the initial servers can be resolved using the default server host and port details.

Manual Cross Site Cluster Failover

For manual site cluster switchover, call RemoteCacheManager’s switchToCluster(clusterName) or switchToDefaultCluster().

Using switchToCluster(clusterName), users can force a client to switch to one of the clusters predefined in the Hot Rod client configuration. To switch to the default cluster use switchToDefaultCluster() instead.