Resource Management Guide

Managing system resources on Red Hat Enterprise Linux 6

Edition 6

Abstract

Chapter 1. Introduction to Control Groups (Cgroups)

The cgconfig (control group config) service can be configured to start up at boot time and reestablish your predefined cgroups, thus making them persistent across reboots.

1.1. How Control Groups Are Organized

The Linux Process Model

All processes on a Linux system are child processes of a common parent: the init process, which is executed by the kernel at boot time and starts other processes (which may in turn start child processes of their own). Because all processes descend from a single parent, the Linux process model is a single hierarchy, or tree.

Additionally, every Linux process except init inherits the environment (such as the PATH variable)[1] and certain other attributes (such as open file descriptors) of its parent process.

The Cgroup Model

Cgroups are similar to processes in that:

- they are hierarchical, and

- child cgroups inherit certain attributes from their parent cgroup.

Available Subsystems in Red Hat Enterprise Linux

- blkio: this subsystem sets limits on input/output access to and from block devices such as physical drives (disk, solid state, or USB).

- cpu: this subsystem uses the scheduler to provide cgroup tasks access to the CPU.

- cpuacct: this subsystem generates automatic reports on CPU resources used by tasks in a cgroup.

- cpuset: this subsystem assigns individual CPUs (on a multicore system) and memory nodes to tasks in a cgroup.

- devices: this subsystem allows or denies access to devices by tasks in a cgroup.

- freezer: this subsystem suspends or resumes tasks in a cgroup.

- memory: this subsystem sets limits on memory use by tasks in a cgroup and generates automatic reports on memory resources used by those tasks.

- net_cls: this subsystem tags network packets with a class identifier (classid) that allows the Linux traffic controller (tc) to identify packets originating from a particular cgroup task.

- net_prio: this subsystem provides a way to dynamically set the priority of network traffic per network interface.

- ns: the namespace subsystem.

- perf_event: this subsystem identifies cgroup membership of tasks and can be used for performance analysis.

Note

1.2. Relationships Between Subsystems, Hierarchies, Control Groups and Tasks

Rule 1

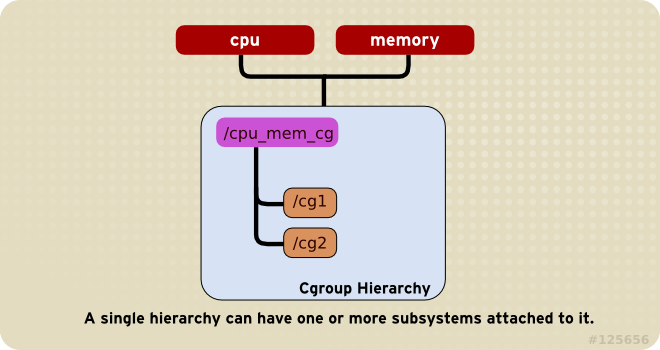

A single hierarchy can have one or more subsystems attached to it. As a consequence, the cpu and memory subsystems (or any number of subsystems) can be attached to a single hierarchy, as long as each one is not attached to any other hierarchy which has any other subsystems attached to it already (see Rule 2).

Figure 1.1. Rule 1

Rule 2

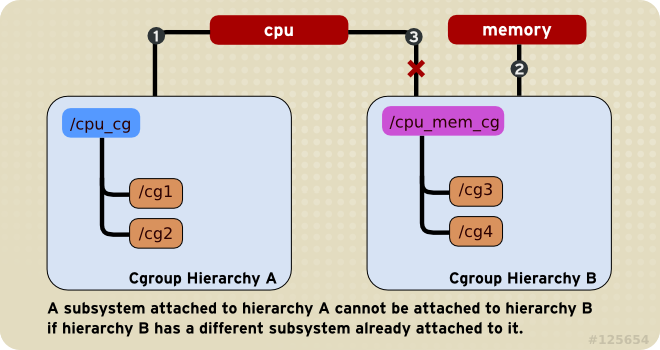

Any single subsystem (such as cpu) cannot be attached to more than one hierarchy if one of those hierarchies has a different subsystem attached to it already.

As a consequence, the cpu subsystem can never be attached to two different hierarchies if one of those hierarchies already has the memory subsystem attached to it. However, a single subsystem can be attached to two hierarchies if both of those hierarchies have only that subsystem attached.

Figure 1.2. Rule 2—The numbered bullets represent a time sequence in which the subsystems are attached.

Rule 3

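A single task can be a member of only one cgroup in any given hierarchy, but it can be a member of several cgroups as long as each of them is in a different hierarchy. As a consequence, if the cpu and memory subsystems are attached to a hierarchy named cpu_mem_cg, and the net_cls subsystem is attached to a hierarchy named net, then a running httpd process could be a member of any one cgroup in cpu_mem_cg, and any one cgroup in net.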

For example, the cgroup in cpu_mem_cg that the httpd process is a member of might restrict its CPU time to half of that allotted to other processes, and limit its memory usage to a maximum of 1024 MB. Additionally, the cgroup in net that the httpd process is a member of might limit its transmission rate to 30 MB/s (megabytes per second).

Figure 1.3. Rule 3

Rule 4

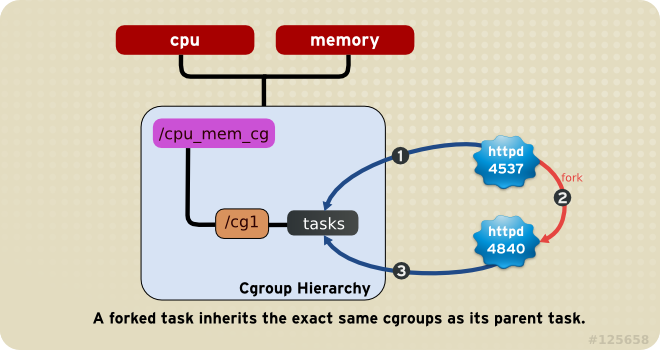

Any process (task) on the system that forks itself creates a child task, which automatically inherits the cgroup membership of its parent. For example, consider an httpd task that is a member of the cgroup named half_cpu_1gb_max in the cpu_and_mem hierarchy, and a member of the cgroup trans_rate_30 in the net hierarchy. When that httpd process forks itself, its child process automatically becomes a member of the half_cpu_1gb_max cgroup and the trans_rate_30 cgroup; it inherits the exact same cgroups its parent task belongs to.

Figure 1.4. Rule 4—The numbered bullets represent a time sequence in which the task forks.
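Rules 3 and 4 can be observed from user space: /proc/PID/cgroup (see Section 2.11.1) contains one line per hierarchy in the form hierarchy-ID:subsystems:path. The following minimal shell sketch uses a hypothetical helper named cgroup_of to print the cgroup a task occupies in the hierarchy that carries a given subsystem. Because a task is in exactly one cgroup per hierarchy (Rule 3), there is one path per hierarchy, and a freshly forked child reports the same paths as its parent (Rule 4) until it is moved.

```shell
# cgroup_of SUBSYSTEM [FILE]
# Print the cgroup path that a task occupies in the hierarchy to which
# SUBSYSTEM is attached. FILE must use the /proc/PID/cgroup format
# ("hierarchy-ID:subsystem,list:/path"); it defaults to /proc/self/cgroup.
cgroup_of() {
  awk -v want="$1" '{
    first = index($0, ":")                  # end of the hierarchy ID
    rest = substr($0, first + 1)
    second = index(rest, ":")               # end of the subsystem list
    n = split(substr(rest, 1, second - 1), subs, ",")
    for (i = 1; i <= n; i++)
      if (subs[i] == want) { print substr(rest, second + 1); exit }
  }' "${2:-/proc/self/cgroup}"
}

# Usage:
#   cgroup_of memory                     # current shell
#   cgroup_of memory /proc/1701/cgroup   # another process
```

Running it against the parent and a child process right after a fork should print identical paths for every subsystem.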

1.3. Implications for Resource Management

- Because a task can belong to only a single cgroup in any one hierarchy, there is only one way that a task can be limited or affected by any single subsystem. This is logical: a feature, not a limitation.

- You can group several subsystems together so that they affect all tasks in a single hierarchy. Because cgroups in that hierarchy have different parameters set, those tasks will be affected differently.

- It may sometimes be necessary to refactor a hierarchy. An example would be removing a subsystem from a hierarchy that has several subsystems attached, and attaching it to a new, separate hierarchy.

- Conversely, if the need for splitting subsystems among separate hierarchies is reduced, you can remove a hierarchy and attach its subsystems to an existing one.

- The design allows for simple cgroup usage, such as setting a few parameters for specific tasks in a single hierarchy, such as one with just the cpu and memory subsystems attached.

- The design also allows for highly specific configuration: each task (process) on a system could be a member of each hierarchy, each of which has a single attached subsystem. Such a configuration would give the system administrator absolute control over all parameters for every single task.

Chapter 2. Using Control Groups

Note

To use cgroups, first install the libcgroup package by running the following command as root:

~]# yum install libcgroup

2.1. The cgconfig Service

The cgconfig service installed with the libcgroup package provides a convenient way to create hierarchies, attach subsystems to hierarchies, and manage cgroups within those hierarchies. It is recommended that you use cgconfig to manage hierarchies and cgroups on your system.

The cgconfig service is not started by default on Red Hat Enterprise Linux 6. When the service starts (at boot time after you enable it with chkconfig, or when you run service cgconfig start), it reads the cgroup configuration file, /etc/cgconfig.conf. Cgroups are therefore recreated from session to session and remain persistent. Depending on the contents of the configuration file, cgconfig can create hierarchies, mount necessary file systems, create cgroups, and set subsystem parameters for each group.

The default /etc/cgconfig.conf file installed with the libcgroup package creates and mounts an individual hierarchy for each subsystem, and attaches the subsystems to these hierarchies. The cgconfig service also allows you to create configuration files in the /etc/cgconfig.d/ directory and to invoke them from /etc/cgconfig.conf.

If you stop the cgconfig service (with the service cgconfig stop command), it unmounts all the hierarchies that it mounted.

2.1.1. The /etc/cgconfig.conf File

The /etc/cgconfig.conf file contains two major types of entries: mount and group. Mount entries create and mount hierarchies as virtual file systems, and attach subsystems to those hierarchies. Mount entries are defined using the following syntax:

mount {

subsystem = /cgroup/hierarchy;

…

}

The libcgroup package automatically creates a default /etc/cgconfig.conf file when it is installed. The default configuration file looks as follows:

The subsystems listed in the default configuration are automatically mounted to their respective hierarchies under the /cgroup/ directory. It is recommended to use these default hierarchies for specifying control groups. However, in certain cases you may need to create hierarchies manually, for example when they were deleted before, or when it is beneficial to have a single hierarchy for multiple subsystems (as in Section 4.3, “Per-group Division of CPU and Memory Resources”). Note that multiple subsystems can be mounted to a single hierarchy, but each subsystem can be mounted only once. See Example 2.1, “Creating a mount entry” for an example of creating a hierarchy.

Example 2.1. Creating a mount entry

The following example creates a hierarchy for the cpuset subsystem:

mount {

cpuset = /cgroup/red;

}

This mount entry is equivalent to the following shell commands:

~]# mkdir /cgroup/red

~]# mount -t cgroup -o cpuset red /cgroup/red

Since each subsystem can be mounted only once, the above commands fail if cpuset is already mounted.
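The correspondence between a mount entry and the manual commands is mechanical enough to script. As a minimal sketch (the helper name mount_commands_from_conf is hypothetical, and this is not how cgconfigparser works internally), the following prints, without running them, the mkdir and mount commands that correspond to the mount section of a cgconfig.conf-style file. It assumes that the mount keyword and its opening brace are on the same line, and it uses the last path component as the hierarchy name, as Example 2.1 does.

```shell
# mount_commands_from_conf FILE
# Print (but do not run) the mkdir and mount commands equivalent to the
# "mount" section of a cgconfig.conf-style FILE. Subsystems that share a
# mount point are combined into one "mount -o a,b" command.
mount_commands_from_conf() {
  awk '
    /^[ \t]*mount[ \t]*[{]/ { in_mount = 1; next }   # start of mount section
    in_mount && /[}]/ { in_mount = 0; next }         # end of mount section
    in_mount && /=/ {
      line = $0
      gsub(/[ \t;]/, "", line)              # strip blanks and the trailing ";"
      split(line, kv, "=")                  # kv[1] = subsystem, kv[2] = path
      if (kv[2] in opts) opts[kv[2]] = opts[kv[2]] "," kv[1]
      else { order[++n] = kv[2]; opts[kv[2]] = kv[1] }
    }
    END {
      for (i = 1; i <= n; i++) {
        path = order[i]
        name = path; sub(/.*\//, "", name)   # hierarchy name = last component
        print "mkdir -p " path
        print "mount -t cgroup -o " opts[path] " " name " " path
      }
    }' "$1"
}
```

For example, mount_commands_from_conf /etc/cgconfig.conf shows what the configured mount entries amount to; review the output before running any of it, since the warning in Section 2.2 applies to these commands as well.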

Group entries create cgroups and set subsystem parameters. The permissions section of a group entry is optional. To define permissions for a group entry, use the following syntax:

Example 2.2. Creating a group entry

The following example creates a cgroup with permissions for users in the sqladmin group to add tasks to the cgroup and for the root user to modify subsystem parameters:

Note

You must restart the cgconfig service for the changes in the /etc/cgconfig.conf file to take effect. However, note that restarting this service causes the entire cgroup hierarchy to be rebuilt, which removes any previously existing cgroups (for example, any existing cgroups used by libvirtd). To restart the cgconfig service, use the following command:

~]# service cgconfig restart

A sample configuration file is written to /etc/cgconfig.conf when the libcgroup package is installed. The hash symbols ('#') at the start of each line comment that line out and make it invisible to the cgconfig service.

2.1.2. The /etc/cgconfig.d/ Directory

The /etc/cgconfig.d/ directory is reserved for storing configuration files for specific applications and use cases. These files should be created with the .conf suffix and adhere to the same syntax rules as /etc/cgconfig.conf.

The cgconfig service first parses the /etc/cgconfig.conf file and then continues with files in the /etc/cgconfig.d/ directory. Note that the order of file parsing is not defined, because it does not make a difference provided that each configuration file is unique. Therefore, do not define the same group or template in multiple configuration files; otherwise they would interfere with each other.

Keep the groups and templates of each application in their own file in /etc/cgconfig.d/.

2.2. Creating a Hierarchy and Attaching Subsystems

Warning

If cgroups are already configured on your system (either manually or by the cgconfig service), these commands fail unless you first unmount existing hierarchies, which affects the operation of the system. Do not experiment with these instructions on production systems.

To create a hierarchy and attach subsystems to it, edit the mount section of the /etc/cgconfig.conf file as root. Entries in the mount section have the following format:

subsystem = /cgroup/hierarchy;

When cgconfig next starts, it will create the hierarchy and attach the subsystems to it.

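For example, the following entry creates a hierarchy called cpu_and_mem and attaches the cpu, cpuset, cpuacct, and memory subsystems to it:

mount {

cpuset = /cgroup/cpu_and_mem;

cpu = /cgroup/cpu_and_mem;

cpuacct = /cgroup/cpu_and_mem;

memory = /cgroup/cpu_and_mem;

}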

Alternative method

To create a hierarchy manually, first create a mount point for it:

~]# mkdir /cgroup/name

For example:

~]# mkdir /cgroup/cpu_and_mem

Next, use the mount command to mount the hierarchy and simultaneously attach one or more subsystems:

~]# mount -t cgroup -o subsystems name /cgroup/name

where subsystems is a comma-separated list of subsystems.

Example 2.3. Using the mount command to attach subsystems

In this example, a directory named /cgroup/cpu_and_mem already exists and will serve as the mount point for the hierarchy that you create. Attach the cpu, cpuset, and memory subsystems to a hierarchy named cpu_and_mem, and mount the cpu_and_mem hierarchy on /cgroup/cpu_and_mem:

~]# mount -t cgroup -o cpu,cpuset,memory cpu_and_mem /cgroup/cpu_and_mem

The lssubsys [3] command lists all available subsystems along with the mount points of the hierarchies they are attached to. After the command above, its output indicates that:

- the cpu, cpuset, and memory subsystems are attached to a hierarchy mounted on /cgroup/cpu_and_mem, and

- the net_cls, ns, cpuacct, devices, freezer, and blkio subsystems are as yet unattached to any hierarchy, as illustrated by the lack of a corresponding mount point.

2.3. Attaching Subsystems to, and Detaching Them from, an Existing Hierarchy

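To add a subsystem to an existing hierarchy, detach it from an existing hierarchy, or move it to a different hierarchy, edit the mount section of the /etc/cgconfig.conf file as root, using the same syntax described in Section 2.2, “Creating a Hierarchy and Attaching Subsystems”. When cgconfig next starts, it will reorganize the subsystems according to the hierarchies that you specify.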

Alternative method

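To add an unattached subsystem to an existing hierarchy, remount the hierarchy: include the extra subsystem in the mount command, together with the remount option.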

Example 2.4. Remounting a hierarchy to add a subsystem

Suppose the lssubsys command shows the cpu, cpuset, and memory subsystems attached to the cpu_and_mem hierarchy. To add the cpuacct subsystem, remount the cpu_and_mem hierarchy using the remount option, and include cpuacct in the list of subsystems:

~]# mount -t cgroup -o remount,cpu,cpuset,cpuacct,memory cpu_and_mem /cgroup/cpu_and_mem

The lssubsys command now shows cpuacct attached to the cpu_and_mem hierarchy.

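Analogously, you can detach a subsystem by remounting the hierarchy and omitting the subsystem name from the -o options. For example, to then detach the cpuacct subsystem, remount and omit it: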

~]# mount -t cgroup -o remount,cpu,cpuset,memory cpu_and_mem /cgroup/cpu_and_mem

2.4. Unmounting a Hierarchy

You can unmount a hierarchy of cgroups with the umount command:

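~]# umount /cgroup/name

For example:

~]# umount /cgroup/cpu_and_mem

If the hierarchy contains any cgroups other than the root cgroup, it remains active in the kernel even though it is no longer mounted. To remove such a hierarchy, remove all child cgroups before you unmount it, or use the cgclear command, which can deactivate a hierarchy even when it is not empty; refer to Section 2.12, “Unloading Control Groups”.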

2.5. Creating Control Groups

Use the cgcreate command to create cgroups. The syntax for cgcreate is:

cgcreate -t uid:gid -a uid:gid -g subsystems:path

where:

- -t (optional) specifies a user (by user ID, uid) and a group (by group ID, gid) to own the tasks pseudofile for this cgroup. This user can add tasks to the cgroup.

Note

The only way to remove a task from a cgroup is to move it to a different cgroup. To move a task, the user has to have write access to the destination cgroup; write access to the source cgroup is not necessary.

- -a (optional) specifies a user (by user ID, uid) and a group (by group ID, gid) to own all pseudofiles other than tasks for this cgroup. This user can modify access of the tasks in this cgroup to system resources.

- -g specifies the hierarchy in which the cgroup should be created, as a comma-separated list of subsystems associated with hierarchies. If the subsystems in this list are in different hierarchies, the group is created in each of these hierarchies. The list of hierarchies is followed by a colon and the path to the child group relative to the hierarchy. Do not include the hierarchy mount point in the path.

For example, the cgroup located in the directory /cgroup/cpu_and_mem/lab1/ is called just lab1; its path is already uniquely determined because there is at most one hierarchy for a given subsystem. Note also that the group is controlled by all the subsystems that exist in the hierarchies in which the cgroup is created, even though these subsystems have not been specified in the cgcreate command; refer to Example 2.5, “cgcreate usage”.

Example 2.5. cgcreate usage

Suppose the cpu and memory subsystems are mounted together in the cpu_and_mem hierarchy, and the net_cls controller is mounted in a separate hierarchy called net. Run the following command:

~]# cgcreate -g cpu,net_cls:/test-subgroup

The cgcreate command creates two groups named test-subgroup, one in the cpu_and_mem hierarchy and one in the net hierarchy. The test-subgroup group in the cpu_and_mem hierarchy is controlled by the memory subsystem, even though it was not specified in the cgcreate command.
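In terms of the file system, the result of Example 2.5 is one new directory in each affected hierarchy. The following minimal shell sketch, using a hypothetical helper named cg_create_in, creates such directories directly. Unlike cgcreate, it does not resolve subsystem names to hierarchies or set ownership with -t and -a, so you pass the hierarchy mount points yourself (for example /cgroup/cpu_and_mem and, as an assumption, /cgroup/net for the net hierarchy).

```shell
# cg_create_in GROUP MOUNT_POINT...
# Create the cgroup GROUP (a path relative to the hierarchy root) in each
# hierarchy mounted at MOUNT_POINT: one directory per hierarchy, each of
# which is controlled by all subsystems attached to that hierarchy.
cg_create_in() {
  local group="${1#/}" root status=0       # "/test-subgroup" -> "test-subgroup"
  shift
  for root in "$@"; do
    mkdir -p "$root/$group" || status=1     # each directory level is one cgroup
  done
  return $status
}
```

Usage: cg_create_in /test-subgroup /cgroup/cpu_and_mem /cgroup/net. The kernel populates each new directory with the pseudofiles of the subsystems attached to that hierarchy.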

Alternative method

To create a child of a cgroup directly, use the mkdir command:

~]# mkdir /cgroup/hierarchy/name/child_name

For example:

~]# mkdir /cgroup/cpu_and_mem/group1

2.6. Removing Control Groups

Remove cgroups with the cgdelete command, which has a syntax similar to that of cgcreate. Run the following command:

cgdelete subsystems:path

where:

- subsystems is a comma‑separated list of subsystems.

- path is the path to the cgroup relative to the root of the hierarchy.

For example:

~]# cgdelete cpu,net_cls:/test-subgroup

When the -r option is specified, cgdelete also recursively removes all subgroups.
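A cgroup cannot be removed while it still has child cgroups, so a recursive removal has to work from the deepest subgroup upward. The following minimal shell sketch shows that order with a hypothetical helper named cg_delete_recursive; it illustrates the idea behind cgdelete -r and is not how cgdelete is implemented. It assumes all tasks have already been moved out, because rmdir fails on a cgroup that still has tasks.

```shell
# cg_delete_recursive CGROUP_DIR
# Remove a cgroup directory and all of its subgroups, deepest first.
# Cgroup directories are removed with rmdir; their pseudofiles are not
# deleted individually.
cg_delete_recursive() {
  [ -d "$1" ] || { echo "cg_delete_recursive: $1: no such cgroup" >&2; return 1; }
  # -depth lists children before their parents, so each rmdir sees a
  # directory whose child cgroups are already gone.
  find "$1" -depth -type d -exec rmdir {} +
}
```

Usage: cg_delete_recursive /cgroup/cpu_and_mem/test-subgroup. A nonzero exit status means at least one cgroup could not be removed, typically because it still contains tasks.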

2.7. Setting Parameters

Set subsystem parameters by running the cgset command from a user account with permission to modify the relevant cgroup. For example, if cpuset is mounted to /cgroup/cpu_and_mem/ and the /cgroup/cpu_and_mem/group1 subdirectory exists, specify the CPUs to which this group has access with the following command:

cpu_and_mem]# cgset -r cpuset.cpus=0-1 group1

The syntax for cgset is:

cgset -r parameter=value path_to_cgroup

where:

- parameter is the parameter to be set, which corresponds to the file in the directory of the given cgroup.

- value is the value for the parameter.

- path_to_cgroup is the path to the cgroup relative to the root of the hierarchy. For example, to set the parameter of the root group (if the cpuacct subsystem is mounted to /cgroup/cpu_and_mem/), change to the /cgroup/cpu_and_mem/ directory, and run:

cpu_and_mem]# cgset -r cpuacct.usage=0 /

Alternatively, because . is relative to the root group (that is, the root group itself), you could also run:

cpu_and_mem]# cgset -r cpuacct.usage=0 .

Note, however, that / is the preferred syntax.

Note

Only a small number of parameters can be set for the root group (such as the cpuacct.usage parameter shown in the examples above). This is because a root group owns all of the existing resources; therefore, it would make no sense to limit all existing processes by defining certain parameters, for example the cpuset.cpus parameter.

To set the parameter of group1, which is a subgroup of the root group, run:

cpu_and_mem]# cgset -r cpuacct.usage=0 group1

A trailing slash after the name of the group (for example, cpuacct.usage=0 group1/) is optional.

The values that you can set with cgset might depend on values set higher in a particular hierarchy. For example, if group1 is limited to use only CPU 0 on a system, you cannot set group1/subgroup1 to use CPUs 0 and 1, or to use only CPU 1.
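When a value conflicts with the parent cgroup, the kernel rejects the write to the pseudofile, whether the write comes from cgset or from the echo-based alternative method described later in this section. In a script, the exit status of that write is therefore the signal to check. The following minimal sketch uses hypothetical helpers named cg_set_param and cg_get_param; the cgroup directory (for example /cgroup/cpu_and_mem/group1) is an assumption about your layout, and a value read back may be normalized by the kernel rather than echoed verbatim.

```shell
# cg_set_param CGROUP_DIR PARAMETER VALUE
# Write VALUE into the PARAMETER pseudofile of a cgroup, as
# "echo VALUE > CGROUP_DIR/PARAMETER" does, and report failure if the
# pseudofile is missing or the write is rejected.
cg_set_param() {
  local file="$1/$2"
  if [ ! -f "$file" ]; then
    # Pseudofiles exist for every attached subsystem; a missing file usually
    # means the subsystem is not attached to this hierarchy.
    echo "cg_set_param: $file does not exist" >&2
    return 1
  fi
  if ! printf '%s\n' "$3" > "$file"; then
    echo "cg_set_param: cannot set $2=$3 in $1" >&2
    return 1
  fi
}

# cg_get_param CGROUP_DIR PARAMETER: print the current value.
cg_get_param() {
  cat "$1/$2"
}
```

Usage: cg_set_param /cgroup/cpu_and_mem/group1/subgroup1 cpuset.cpus 0-1 fails, as described above, when group1 is limited to CPU 0.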

You can also use cgset to copy the parameters of one cgroup into another existing cgroup. For example:

cpu_and_mem]# cgset --copy-from group1/ group2/

The syntax to copy parameters with cgset is:

cgset --copy-from path_to_source_cgroup path_to_target_cgroup

where:

- path_to_source_cgroup is the path to the cgroup whose parameters are to be copied, relative to the root group of the hierarchy.

- path_to_target_cgroup is the path to the destination cgroup, relative to the root group of the hierarchy.

Alternative method

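To set parameters in a cgroup directly, insert values into the relevant subsystem pseudofile using the echo command. In the following example, the echo command inserts the value of 0-1 into the cpuset.cpus pseudofile of the cgroup group1: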

~]# echo 0-1 > /cgroup/cpu_and_mem/group1/cpuset.cpus

2.8. Moving a Process to a Control Group

Move a process into a cgroup by running the cgclassify command, for example:

~]# cgclassify -g cpu,memory:group1 1701

The syntax for cgclassify is:

cgclassify -g subsystems:path_to_cgroup pidlist

where:

- subsystems is a comma‑separated list of subsystems, or * to move the process into the hierarchies associated with all available subsystems. Note that if cgroups of the same name exist in multiple hierarchies, the -g option moves the processes in each of those groups. Ensure that the cgroup exists within each of the hierarchies whose subsystems you specify here.

- path_to_cgroup is the path to the cgroup within its hierarchies.

- pidlist is a space-separated list of process identifiers (PIDs).
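cgclassify accepts a whole pidlist, but the kernel's tasks pseudofile accepts a single PID per write() call, so attaching several processes directly means writing each PID separately. The following minimal shell sketch uses a hypothetical helper named cg_move_pids and an assumed tasks file such as /cgroup/cpu_and_mem/group1/tasks.

```shell
# cg_move_pids TASKS_FILE PID...
# Attach each PID to the cgroup whose tasks file is TASKS_FILE, issuing
# one printf (one write) per PID.
cg_move_pids() {
  local tasks="$1" pid failed=0
  shift
  for pid in "$@"; do
    case $pid in
      ''|*[!0-9]*) echo "cg_move_pids: not a PID: $pid" >&2; failed=1; continue ;;
    esac
    # On a tasks file, ">>" attaches the PID just as ">" does; appending also
    # lets the function be exercised on an ordinary file.
    printf '%s\n' "$pid" >> "$tasks" || failed=1
  done
  return $failed
}
```

Usage: cg_move_pids /cgroup/cpu_and_mem/group1/tasks 1701 1138 corresponds to the two-PID cgclassify example in this section.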

If the -g option is not specified, cgclassify automatically searches the /etc/cgrules.conf file (see Section 2.8.1, “The cgred Service”) and uses the first applicable configuration line. According to this line, cgclassify determines the hierarchies and cgroups to move the process under. Note that for the move to be successful, the destination hierarchies must exist. The subsystems specified in /etc/cgrules.conf also have to be properly configured for the corresponding hierarchy in /etc/cgconfig.conf.

You can also add the --sticky option before the pid to keep any child processes in the same cgroup. If you do not set this option and the cgred service is running, child processes are allocated to cgroups based on the settings found in /etc/cgrules.conf. However, the parent process remains in the cgroup in which it was first started.

When using cgclassify, you can move several processes simultaneously. For example, this command moves the processes with PIDs 1701 and 1138 into cgroup group1/:

~]# cgclassify -g cpu,memory:group1 1701 1138

Alternative method

To move a process into a cgroup directly, write its PID to the tasks file of the cgroup. For example, to move a process with the PID 1701 into a cgroup at /cgroup/cpu_and_mem/group1/:

~]# echo 1701 > /cgroup/cpu_and_mem/group1/tasks

2.8.1. The cgred Service

Cgred is a service (which starts the cgrulesengd daemon) that moves tasks into cgroups according to parameters set in the /etc/cgrules.conf file. Entries in the /etc/cgrules.conf file can take one of these two forms:

user subsystems control_group

user:command subsystems control_group

For example:

maria devices /usergroup/staff

This entry specifies that any processes that belong to the user named maria access the devices subsystem according to the parameters specified in the /usergroup/staff cgroup. To associate particular commands with particular cgroups, add the command parameter, as follows:

maria:ftp devices /usergroup/staff/ftp

The entry now specifies that when the user named maria uses the ftp command, the process is automatically moved to the /usergroup/staff/ftp cgroup in the hierarchy that contains the devices subsystem. Note, however, that the daemon moves the process to the cgroup only after the appropriate condition is fulfilled. Therefore, the ftp process might run for a short time in the wrong group. Furthermore, if the process quickly spawns children while in the wrong group, these children might not be moved.

Rules in the /etc/cgrules.conf file can include the following extra notation:

- @: indicates a group instead of an individual user. For example, @admins are all users in the admins group.

- *: represents "all". For example, * in the subsystem field represents all mounted subsystems.

- %: represents an item that is the same as the item on the line above.

For example, a fragment of the /etc/cgrules.conf file can have the following form:

@adminstaff devices /admingroup

@labstaff % %

The above lines specify that the users in the groups adminstaff and labstaff access the devices subsystem according to the limits set in the admingroup cgroup.
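The first-match lookup that cgclassify applies to /etc/cgrules.conf can be approximated in a few lines of awk, which is handy for reasoning about which rule a given process would hit. The sketch below uses a hypothetical helper named cgrules_match and is deliberately simplified: it handles plain user names, @group, *, the user:command form, and % in the subsystem and destination fields, but not % in the user field or template variables, and it compares the command by exact name. It is not a replacement for cgrulesengd.

```shell
# cgrules_match RULES_FILE USER GROUP COMMAND
# Print "subsystems destination" from the first rule in a cgrules.conf-style
# file that applies to a process of USER (primary group GROUP) running
# COMMAND. Prints nothing if no rule applies.
cgrules_match() {
  awk -v user="$2" -v group="$3" -v cmd="$4" '
    /^[ \t]*#/ { next }                      # skip comments
    NF == 0 { next }                         # skip blank lines
    {
      who = $1; subs = $2; dest = $3
      if (subs == "%") subs = prev_subs      # "%" repeats the item above
      if (dest == "%") dest = prev_dest
      prev_subs = subs; prev_dest = dest
      rule_cmd = ""
      colon = index(who, ":")
      if (colon > 0) {                       # "user:command" form
        rule_cmd = substr(who, colon + 1)
        who = substr(who, 1, colon - 1)
      }
      if (who != "*" && who != user && who != ("@" group)) next
      if (rule_cmd != "" && rule_cmd != "*" && rule_cmd != cmd) next
      print subs, dest
      exit
    }' "$1"
}
```

With the maria, adminstaff, and labstaff rules from this section in one file, cgrules_match FILE maria staff ftp selects /usergroup/staff/ftp, while a labstaff member resolves to /admingroup through the % continuation.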

Rules specified in /etc/cgrules.conf can be linked to templates configured either in the /etc/cgconfig.conf file or in configuration files stored in the /etc/cgconfig.d/ directory, allowing for flexible cgroup assignment and creation.

For example, suppose a template named users/%g/%u is specified in /etc/cgconfig.conf.

The template can then be used in an /etc/cgrules.conf entry, which can look as follows:

peter:ftp cpu users/%g/%u

The %g and %u variables used above are automatically replaced with the group and user name depending on the owner of the ftp process. If the process belongs to peter from the adminstaff group, the above path is translated to users/adminstaff/peter. The cgred service then searches for this directory, and if it does not exist, cgred creates it and assigns the process to users/adminstaff/peter/tasks. Note that template rules apply only to definitions of templates in configuration files, so even if "group users/adminstaff/peter" was defined in /etc/cgconfig.conf, it would be ignored in favor of "template users/%g/%u".

- %u: is replaced with the name of the user who owns the current process. If name resolution fails, UID is used instead.

- %U: is replaced with the UID of the user who owns the current process.

- %g: is replaced with the name of the user group that owns the current process, or with the GID if name resolution fails.

- %G: is replaced with the GID of the user group that owns the current process.

- %p: is replaced with the name of the current process. PID is used in case of name resolution failure.

- %P: is replaced with the PID of the current process.
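The substitution itself is plain text replacement. The following minimal shell sketch, with a hypothetical helper named expand_template, reproduces the peter example above; the numeric IDs in the usage line are made up for illustration, and the name-resolution fallbacks are left to the caller (pass the number instead of the name when a name cannot be resolved).

```shell
# expand_template TEMPLATE USER UID GROUP GID PROCESS PID
# Substitute the cgrules.conf template variables in TEMPLATE.
# Values are assumed not to contain the characters |, & or \, which sed
# would interpret in the replacement text.
expand_template() {
  printf '%s\n' "$1" | sed \
    -e "s|%u|$2|g" -e "s|%U|$3|g" \
    -e "s|%g|$4|g" -e "s|%G|$5|g" \
    -e "s|%p|$6|g" -e "s|%P|$7|g"
}
```

Usage: expand_template 'users/%g/%u' peter 1001 adminstaff 1002 ftp 4242 prints users/adminstaff/peter.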

2.9. Starting a Process in a Control Group

Important

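Some subsystems have mandatory parameters. For example, before you start a task in a cgroup that uses the cpuset subsystem, the cpuset.cpus and cpuset.mems parameters must be defined for that cgroup.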

You can also launch processes in a cgroup by running the cgexec command. For example, this command launches the firefox web browser within the group1 cgroup, subject to the limitations imposed on that group by the cpu subsystem:

~]# cgexec -g cpu:group1 firefox http://www.redhat.com

The syntax for cgexec is:

cgexec -g subsystems:path_to_cgroup command arguments

where:

- subsystems is a comma‑separated list of subsystems, or * to launch the process in the hierarchies associated with all available subsystems. Note that, as with cgclassify described in Section 2.8, “Moving a Process to a Control Group”, if cgroups of the same name exist in multiple hierarchies, the -g option places the process in each of those groups. Ensure that the cgroup exists within each of the hierarchies whose subsystems you specify here.

- path_to_cgroup is the path to the cgroup relative to the hierarchy.

- command is the command to run.

- arguments are any arguments for the command.

You can also add the --sticky option before the command to keep any child processes in the same cgroup. If you do not set this option and the cgred service is running, child processes will be allocated to cgroups based on the settings found in /etc/cgrules.conf. The process itself, however, will remain in the cgroup in which you started it.
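cgexec does this through libcgroup. The following minimal shell sketch, with a hypothetical helper named run_in_cgroup, applies the same idea as the sh -c alternative method in this section: a helper shell writes its own PID into the target tasks file and then replaces itself with the command through exec, so the command keeps that PID and starts inside the cgroup, while the calling shell is never moved. The tasks file path, such as /cgroup/cpu_and_mem/group1/tasks, is an assumption about your mount layout.

```shell
# run_in_cgroup TASKS_FILE COMMAND [ARGUMENT...]
# Start COMMAND as a member of the cgroup whose tasks file is TASKS_FILE.
# Inside the helper shell, $1 is the tasks file and the remaining
# arguments are the command; "$$" is the helper shell's own PID.
run_in_cgroup() {
  sh -c 'echo "$$" >> "$1" && shift && exec "$@"' run_in_cgroup "$@"
}
```

Usage: run_in_cgroup /cgroup/cpu_and_mem/group1/tasks firefox http://www.redhat.com. Because the PID is written before exec, there is no window in which the command runs outside the cgroup.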

Alternative method

A new process inherits the cgroups of its parent process (see Rule 4 in Section 1.2). Therefore, you can also move your current shell into a cgroup and then launch the process from that shell:

~]# echo $$ > /cgroup/cpu_and_mem/group1/tasks

~]# firefox

Note that after you exit firefox, your shell is still in the group1 cgroup. Therefore, an even better way would be:

~]# sh -c "echo \$$ > /cgroup/cpu_and_mem/group1/tasks && firefox"

2.9.1. Starting a Service in a Control Group

Services that can be started in a cgroup must:

- use a /etc/sysconfig/servicename file, and

- use the daemon() function from /etc/init.d/functions to start the service.

To make an eligible service start in a cgroup, edit its file in the /etc/sysconfig directory to include an entry in the form CGROUP_DAEMON="subsystem:control_group" where subsystem is a subsystem associated with a particular hierarchy, and control_group is a cgroup in that hierarchy. For example:

CGROUP_DAEMON="cpuset:group1"

If cpuset is mounted to /cgroup/cpu_and_mem/, the above configuration translates to /cgroup/cpu_and_mem/group1.
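To check what a CGROUP_DAEMON value resolves to on a particular system, the subsystem's mount point can be read from /proc/mounts, which Section 2.12 names as the accurate listing of mounted cgroups. The following minimal sketch uses hypothetical helpers named cg_mount_point and cg_daemon_path; it assumes cgroup entries whose mount options list the attached subsystems, as they do after mount -t cgroup -o cpu,cpuset,memory.

```shell
# cg_mount_point SUBSYSTEM [MOUNTS_FILE]
# Print the mount point of the hierarchy to which SUBSYSTEM is attached,
# read from a file in /proc/mounts format (default: /proc/mounts).
cg_mount_point() {
  awk -v want="$1" '$3 == "cgroup" {
    n = split($4, opts, ",")                 # mount options include the subsystems
    for (i = 1; i <= n; i++)
      if (opts[i] == want) { print $2; exit }
  }' "${2:-/proc/mounts}"
}

# cg_daemon_path "SUBSYSTEM:CONTROL_GROUP" [MOUNTS_FILE]
# Translate a CGROUP_DAEMON value into the cgroup directory it refers to.
cg_daemon_path() {
  local subsys="${1%%:*}" group="${1#*:}" mp
  mp=$(cg_mount_point "$subsys" "${2:-/proc/mounts}")
  [ -n "$mp" ] || return 1                  # subsystem is not mounted
  printf '%s/%s\n' "${mp%/}" "${group#/}"
}
```

Usage: cg_daemon_path cpuset:group1 prints /cgroup/cpu_and_mem/group1 on a system where cpuset is attached to the hierarchy mounted on /cgroup/cpu_and_mem.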

2.9.2. Process Behavior in the Root Control Group

The blkio and cpu configuration options affect processes (tasks) running in the root cgroup differently from those in a subgroup. Consider the following example:

- Create two subgroups under one root group: /rootgroup/red/ and /rootgroup/blue/

- In each subgroup and in the root group, define the cpu.shares configuration option and set it to 1.

If there is one process in each of these groups (that is, one task listed in each of /rootgroup/tasks, /rootgroup/red/tasks and /rootgroup/blue/tasks), each process consumes 33.33% of the CPU:

/rootgroup/ process: 33.33%

/rootgroup/blue/ process: 33.33%

/rootgroup/red/ process: 33.33%

Any other processes placed in the blue and red subgroups cause the 33.33% of the CPU assigned to that subgroup to be split among the multiple processes in it.

However, if /rootgroup/ contains three processes, /rootgroup/red/ contains one process and /rootgroup/blue/ contains one process, and the cpu.shares option is set to 1 in all groups, the CPU resource is divided as follows:

/rootgroup/ processes: 20% + 20% + 20%

/rootgroup/blue/ process: 20%

/rootgroup/red/ process: 20%
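The arithmetic in both examples is a proportional split: with equal weights, each of N competing entities receives 100/N percent, so three entities get 33.33% each, and five entities (three root-group processes plus the red and blue subgroups) get 20% each. The following minimal shell sketch of that calculation uses a hypothetical helper named share_percent; it assumes every entity is busy and, as the example does, counts each root-group process as one entity.

```shell
# share_percent WEIGHT...
# Print the percentage of CPU time each competing entity receives when
# the CPU is divided in proportion to WEIGHT (one line per entity).
share_percent() {
  awk 'BEGIN {
    for (i = 1; i < ARGC; i++) total += ARGV[i]
    for (i = 1; i < ARGC; i++) printf "%.2f\n", 100 * ARGV[i] / total
  }' "$@"
}
```

Usage: share_percent 1 1 1 prints 33.33 three times, and share_percent 1 1 1 1 1 prints 20.00 five times, matching the two cases above.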

It is therefore recommended to move all processes from the root group to a specific subgroup when using the blkio and cpu configuration options which divide an available resource based on a weight or a share (for example, cpu.shares or blkio.weight). Because the tasks file accepts only one PID per write, move the tasks one at a time, for example:

rootgroup]# for pid in $(cat tasks); do echo $pid > red/tasks; done

2.10. Generating the /etc/cgconfig.conf File

The /etc/cgconfig.conf file can be generated from the current cgroup configuration using the cgsnapshot utility. This utility takes a snapshot of the current state of all subsystems and their cgroups and returns their configuration as it would appear in the /etc/cgconfig.conf file. Example 2.6, “Using the cgsnapshot utility” shows an example usage of the cgsnapshot utility.

Example 2.6. Using the cgsnapshot utility

Suppose several cgroups are configured in the hierarchy of the cpu subsystem, with specific values for some of their parameters. Executing the cgsnapshot command (with the -s option and an empty /etc/cgsnapshot_blacklist.conf file[4]) then produces the following output:

The -s option used in the example above tells cgsnapshot to ignore all warnings in the output file caused by parameters not being defined in the blacklist or whitelist of the cgsnapshot utility. For more information on parameter blacklisting, refer to Section 2.10.1, “Blacklisting Parameters”. For more information on parameter whitelisting, refer to Section 2.10.2, “Whitelisting Parameters”.

By default, the output is printed to the standard output. Use the -f option to specify a file to which the output should be redirected. For example:

~]$ cgsnapshot -f ~/test/cgconfig_test.conf

Warning

When using the -f option, note that it overwrites any content in the file you specify. Therefore, it is recommended not to direct the output straight to the /etc/cgconfig.conf file.

2.10.1. Blacklisting Parameters

If a parameter is blacklisted, it does not appear in the output generated by cgsnapshot. By default, the /etc/cgsnapshot_blacklist.conf file is checked for blacklisted parameters. If a parameter is not present in the blacklist, the whitelist is checked. To specify a different blacklist, use the -b option. For example:

~]$ cgsnapshot -b ~/test/my_blacklist.conf

2.10.2. Whitelisting Parameters

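If a parameter is whitelisted, it appears in the output generated by cgsnapshot. If a parameter is neither blacklisted nor whitelisted, a warning informs you of this:

~]$ cgsnapshot -f ~/test/cgconfig_test.conf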

WARNING: variable cpu.rt_period_us is neither blacklisted nor whitelisted

WARNING: variable cpu.rt_runtime_us is neither blacklisted nor whitelisted

To specify a file to use as a whitelist, use the -w option. For example:

~]$ cgsnapshot -w ~/test/my_whitelist.conf

Specifying the -t option tells cgsnapshot to generate a configuration with parameters from the whitelist only.

2.11. Obtaining Information About Control Groups

2.11.1. Finding a Process

To find the cgroups to which a process belongs, run:

~]$ ps -O cgroup

Or, if you know the PID of the process, run:

~]$ cat /proc/PID/cgroup

2.11.2. Finding a Subsystem

To find the subsystems that are available in your kernel and how they are grouped into hierarchies, run:

~]$ cat /proc/cgroups

In the output, the hierarchy column lists IDs of the existing hierarchies on the system. Subsystems with the same hierarchy ID are attached to the same hierarchy. The num_cgroup column lists the number of existing cgroups in the hierarchy that uses a particular subsystem. The enabled column reports the value of 1 if a particular subsystem is enabled, or 0 if it is not.
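For scripting, the same information can be condensed into one line per hierarchy. The following minimal sketch uses a hypothetical helper named subsystems_by_hierarchy; it assumes the four-column layout described above (subsystem name, hierarchy, num_cgroups, enabled) and skips subsystems that are disabled or not attached to any hierarchy (hierarchy ID 0).

```shell
# subsystems_by_hierarchy [FILE]
# Group the enabled, attached subsystems listed in a /proc/cgroups-format
# FILE (default: /proc/cgroups) by hierarchy ID, one hierarchy per line.
subsystems_by_hierarchy() {
  awk '/^#/ { next }                          # header line
    $4 == 1 && $2 != 0 {
      if ($2 in list) list[$2] = list[$2] "," $1
      else list[$2] = $1
    }
    END { for (h in list) print h, list[h] }' "${1:-/proc/cgroups}" | sort -n
}
```

On a system configured as in Example 2.3, the cpu, cpuset, and memory subsystems would appear together on one line.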

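To find the mount points of particular subsystems, run:

~]$ lssubsys -m subsystems

where subsystems is a list of the subsystems in which you are interested. Note that the lssubsys -m command returns only the top-level mount point per hierarchy.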

2.11.3. Finding Hierarchies

It is recommended that you mount hierarchies under the /cgroup/ directory. Assuming this is the case on your system, list or browse the contents of that directory to obtain a list of hierarchies. If the tree utility is installed on your system, run it to obtain an overview of all hierarchies and the cgroups within them:

~]$ tree /cgroup

2.11.4. Finding Control Groups

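To list the cgroups on a system, run:

~]$ lscgroup

You can restrict the output to a specific hierarchy by specifying a controller and path in the format controller:path. For example: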

~]$ lscgroup cpuset:group1

The above command lists only subgroups of the group1 cgroup in the hierarchy to which the cpuset subsystem is attached.

2.11.5. Displaying Parameters of Control Groups

To display the parameters of specific cgroups, run:

~]$ cgget -r parameter list_of_cgroups

where parameter is a pseudofile that contains values for a subsystem, and list_of_cgroups is a list of cgroups separated with spaces. For example:

~]$ cgget -r cpuset.cpus -r memory.limit_in_bytes group1 group2

displays the values of cpuset.cpus and memory.limit_in_bytes for cgroups group1 and group2.

To display all parameters of a given subsystem for a cgroup, use the -g option, for example:

~]$ cgget -g cpuset /

2.12. Unloading Control Groups

Warning

The cgclear command destroys all cgroups in all hierarchies. If you do not have these hierarchies stored in a configuration file, you will not be able to readily reconstruct them.

To clear an entire cgroup file system, use the cgclear command.

Note

Using the mount command to create cgroups (as opposed to creating them using the cgconfig service) results in the creation of an entry in the /etc/mtab file (the mounted file systems table). This change is also reflected in the /proc/mounts file. However, the unloading of cgroups with the cgclear command, along with other cgconfig commands, uses a direct kernel interface which does not reflect its changes into the /etc/mtab file and only writes the new information into the /proc/mounts file. After unloading cgroups with the cgclear command, the unmounted cgroups can still be visible in the /etc/mtab file, and, consequently, displayed when the mount command is executed. Refer to the /proc/mounts file for an accurate listing of all mounted cgroups.

2.13. Using the Notification API

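The cgroups notification API allows user space applications to receive notifications about changes in the status of a cgroup. This section describes how to monitor the Out of Memory (OOM) control file, memory.oom_control. To create a notification handler, write a C program using the following instructions: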

- Using the eventfd() function, create a file descriptor for event notifications. For more information, refer to the eventfd(2) man page.
- To monitor the memory.oom_control file, open it using the open() function. For more information, refer to the open(2) man page.
- Use the write() function to write the following arguments to the cgroup.event_control file of the cgroup whose memory.oom_control file you are monitoring:

<event_file_descriptor> <OOM_control_file_descriptor>

where:

- event_file_descriptor is the file descriptor returned by the eventfd() function in the first step, and
- OOM_control_file_descriptor is the file descriptor of the respective memory.oom_control file, opened in the second step.

For more information on writing to a file, refer to the write(2) man page.

For more information about the memory.oom_control tunable parameter, refer to Section 3.7, “memory”. For more information on configuring notifications for OOM control, refer to Example 3.3, “OOM Control and Notifications”.
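The three steps above can be combined into a minimal C sketch. It assumes that the memory subsystem is mounted and that the caller passes the directory of an existing cgroup (for example /cgroup/memory/blue, a hypothetical path). The instructions above do not list a final step: after registration, an 8-byte read(2) from the eventfd blocks until the kernel signals an event, as described in eventfd(2). The function names are illustrative only.

```c
#include <assert.h>
#include <fcntl.h>
#include <stdint.h>
#include <stdio.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Format "<event_file_descriptor> <OOM_control_file_descriptor>".
 * Returns the string length, or -1 if the buffer is too small. */
int format_event_control(char *buf, size_t len, int efd, int oom_fd)
{
    int n;

    assert(buf != NULL);
    n = snprintf(buf, len, "%d %d", efd, oom_fd);
    return (n < 0 || (size_t)n >= len) ? -1 : n;
}

/* Register for OOM notifications on the cgroup in `cgroup_dir`.
 * On success, returns the eventfd and stores the memory.oom_control
 * descriptor in *oom_fd_out; close both when notifications are no longer
 * needed. Returns -1 on failure and leaves *oom_fd_out unchanged. */
int register_oom_notifier(const char *cgroup_dir, int *oom_fd_out)
{
    char path[4096], arg[64];
    int efd, oom_fd, ctl_fd, n;

    assert(cgroup_dir != NULL && oom_fd_out != NULL);

    /* Step 1: create a file descriptor for event notifications. */
    efd = eventfd(0, 0);
    if (efd < 0)
        return -1;

    /* Step 2: open the file to be monitored. */
    snprintf(path, sizeof path, "%s/memory.oom_control", cgroup_dir);
    oom_fd = open(path, O_RDONLY);
    if (oom_fd < 0) {
        close(efd);
        return -1;
    }

    /* Step 3: write both descriptors to cgroup.event_control. */
    snprintf(path, sizeof path, "%s/cgroup.event_control", cgroup_dir);
    ctl_fd = open(path, O_WRONLY);
    n = format_event_control(arg, sizeof arg, efd, oom_fd);
    if (ctl_fd < 0 || n < 0 || write(ctl_fd, arg, (size_t)n) != n) {
        if (ctl_fd >= 0)
            close(ctl_fd);
        close(oom_fd);
        close(efd);
        return -1;
    }
    close(ctl_fd);

    *oom_fd_out = oom_fd;
    return efd;
}

/* Block until an event arrives and store the eventfd counter in *count. */
int wait_for_oom(int efd, uint64_t *count)
{
    return read(efd, count, sizeof *count) == (ssize_t)sizeof *count ? 0 : -1;
}
```

A handler would typically call register_oom_notifier() once and then loop on wait_for_oom(), reacting to each event it returns.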

2.14. Additional Resources

The libcgroup Man Pages

- man 1 cgclassify — the cgclassify command is used to move running tasks to one or more cgroups.
- man 1 cgclear — the cgclear command is used to delete all cgroups in a hierarchy.
- man 5 cgconfig.conf — cgroups are defined in the cgconfig.conf file.
- man 8 cgconfigparser — the cgconfigparser command parses the cgconfig.conf file and mounts hierarchies.
- man 1 cgcreate — the cgcreate command creates new cgroups in hierarchies.
- man 1 cgdelete — the cgdelete command removes specified cgroups.
- man 1 cgexec — the cgexec command runs tasks in specified cgroups.
- man 1 cgget — the cgget command displays cgroup parameters.
- man 1 cgsnapshot — the cgsnapshot command generates a configuration file from existing subsystems.
- man 5 cgred.conf — cgred.conf is the configuration file for the cgred service.
- man 5 cgrules.conf — cgrules.conf contains the rules used for determining when tasks belong to certain cgroups.
- man 8 cgrulesengd — the cgrulesengd service distributes tasks to cgroups.
- man 1 cgset — the cgset command sets parameters for a cgroup.
- man 1 lscgroup — the lscgroup command lists the cgroups in a hierarchy.
- man 1 lssubsys — the lssubsys command lists the hierarchies containing the specified subsystems.

The lssubsys command is one of the utilities provided by the libcgroup package. You must install libcgroup to use it; refer to Chapter 2, Using Control Groups, if you are unable to run lssubsys.

Chapter 3. Subsystems and Tunable Parameters

For more detailed information about cgroups, refer to cgroups.txt in the kernel documentation, installed on your system at /usr/share/doc/kernel-doc-kernel-version/Documentation/cgroups/ (provided by the kernel-doc package). The latest version of the cgroups documentation is also available online at http://www.kernel.org/doc/Documentation/cgroup-v1/cgroups.txt. Note, however, that the features in the latest documentation might not match those available in the kernel installed on your system.

For example, cpuset.cpus is a pseudofile that specifies which CPUs a cgroup is permitted to access. If /cgroup/cpuset/webserver is a cgroup for the web server that runs on a system, and the following command is executed,

echo 0,2 > /cgroup/cpuset/webserver/cpuset.cpus

then the value 0,2 is written to the cpuset.cpus pseudofile, which limits any tasks whose PIDs are listed in /cgroup/cpuset/webserver/tasks to using only CPU 0 and CPU 2 on the system.

3.1. blkio

The Block I/O (blkio) subsystem controls and monitors access to I/O on block devices by tasks in cgroups. Writing values to some of these pseudofiles limits access or bandwidth, and reading values from some of these pseudofiles provides information on I/O operations.

The blkio subsystem offers two policies for controlling access to I/O:

- Proportional weight division — implemented in the Completely Fair Queuing (CFQ) I/O scheduler, this policy allows you to assign weights to specific cgroups. Each cgroup then has a share of all I/O operations reserved in proportion to its weight. For more information, refer to Section 3.1.1, “Proportional Weight Division Tunable Parameters”.

- I/O throttling (Upper limit) — this policy is used to set an upper limit on the number of I/O operations that a cgroup can perform on a specific device, so that access to the device is limited to a given rate of read or write operations. For more information, refer to Section 3.1.2, “I/O Throttling Tunable Parameters”.

Important

3.1.1. Proportional Weight Division Tunable Parameters

- blkio.weight

- specifies the relative proportion (weight) of block I/O access available by default to a cgroup, in the range from 100 to 1000. This value is overridden for specific devices by the blkio.weight_device parameter. For example, to assign a default weight of 500 to a cgroup for access to block devices, run:

echo 500 > blkio.weight

- blkio.weight_device

- specifies the relative proportion (weight) of I/O access on specific devices available to a cgroup, in the range from 100 to 1000. The value of this parameter overrides the value of the blkio.weight parameter for the devices specified. Values take the format of major:minor weight, where major and minor are device types and node numbers specified in Linux Allocated Devices, otherwise known as the Linux Devices List and available from https://www.kernel.org/doc/html/v4.11/admin-guide/devices.html. For example, to assign a weight of 500 to a cgroup for access to /dev/sda, run:

echo 8:0 500 > blkio.weight_device

In the Linux Allocated Devices notation, 8:0 represents /dev/sda.
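The major:minor numbers used by blkio.weight_device, and by the throttling parameters that follow, can be read from the device node itself. The following C sketch looks up a block device's number with stat(2) and formats a "major:minor value" entry. It assumes a glibc-based system, where major(), minor(), and makedev() are provided by <sys/sysmacros.h>. The helper names are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <sys/stat.h>
#include <sys/sysmacros.h>  /* major(), minor(), makedev() on glibc */
#include <sys/types.h>

/* Store the device number of a block device node such as "/dev/sda".
 * Returns 0 on success, -1 if the node is missing or not a block device. */
int block_dev_number(const char *node, dev_t *dev)
{
    struct stat st;

    assert(node != NULL && dev != NULL);
    if (stat(node, &st) != 0 || !S_ISBLK(st.st_mode))
        return -1;
    *dev = st.st_rdev;
    return 0;
}

/* Format a "major:minor value" entry, for example "8:0 500" for
 * blkio.weight_device or "8:0 10485760" for a bytes-per-second limit.
 * Returns the string length, or -1 if the buffer is too small. */
int format_dev_entry(char *buf, size_t len, dev_t dev,
                     unsigned long long value)
{
    int n;

    assert(buf != NULL);
    n = snprintf(buf, len, "%u:%u %llu", major(dev), minor(dev), value);
    return (n < 0 || (size_t)n >= len) ? -1 : n;
}
```

Writing the string produced for /dev/sda with the value 500 to blkio.weight_device is equivalent to the echo 8:0 500 command above.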

3.1.2. I/O Throttling Tunable Parameters

- blkio.throttle.read_bps_device

- specifies the upper limit on the rate of read operations that tasks in a cgroup can perform on a device, in bytes per second. Entries have three fields: major, minor, and bytes_per_second. Major and minor are device types and node numbers specified in Linux Allocated Devices, and bytes_per_second is the upper limit rate at which read operations can be performed. For example, to allow tasks in the test cgroup to read from the /dev/sda device at a maximum of 10 MBps (10 × 1024 × 1024 = 10485760 bytes per second), run:

echo "8:0 10485760" > /cgroup/blkio/test/blkio.throttle.read_bps_device

- blkio.throttle.read_iops_device

- specifies the upper limit on the number of read operations per second that tasks in a cgroup can perform on a device. Entries have three fields: major, minor, and operations_per_second. Major and minor are device types and node numbers specified in Linux Allocated Devices, and operations_per_second is the upper limit rate at which read operations can be performed. For example, to allow tasks in the test cgroup to perform a maximum of 10 read operations per second on the /dev/sda device, run:

echo "8:0 10" > /cgroup/blkio/test/blkio.throttle.read_iops_device

- blkio.throttle.write_bps_device

- specifies the upper limit on the rate of write operations that tasks in a cgroup can perform on a device, in bytes per second. Entries have three fields: major, minor, and bytes_per_second. Major and minor are device types and node numbers specified in Linux Allocated Devices, and bytes_per_second is the upper limit rate at which write operations can be performed. For example, to allow tasks in the test cgroup to write to the /dev/sda device at a maximum of 10 MBps, run:

echo "8:0 10485760" > /cgroup/blkio/test/blkio.throttle.write_bps_device

- blkio.throttle.write_iops_device

- specifies the upper limit on the number of write operations per second that tasks in a cgroup can perform on a device. Entries have three fields: major, minor, and operations_per_second. Major and minor are device types and node numbers specified in Linux Allocated Devices, and operations_per_second is the upper limit rate at which write operations can be performed. For example, to allow tasks in the test cgroup to perform a maximum of 10 write operations per second on the /dev/sda device, run:

echo "8:0 10" > /cgroup/blkio/test/blkio.throttle.write_iops_device

- blkio.throttle.io_serviced

- reports the number of I/O operations performed on specific devices by a cgroup as seen by the throttling policy. Entries have four fields: major, minor, operation, and number. Major and minor are device types and node numbers specified in Linux Allocated Devices, operation represents the type of operation (read, write, sync, or async), and number represents the number of operations.

- blkio.throttle.io_service_bytes

- reports the number of bytes transferred to or from specific devices by a cgroup as seen by the throttling policy. The only difference between blkio.io_service_bytes and blkio.throttle.io_service_bytes is that the former is maintained by the CFQ scheduler and is therefore not updated when CFQ is not operating on the request queue. Entries have four fields: major, minor, operation, and bytes. Major and minor are device types and node numbers specified in Linux Allocated Devices, operation represents the type of operation (read, write, sync, or async), and bytes is the number of transferred bytes.
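The reporting files in this section use a line-oriented "major:minor operation value" layout. The following C sketch parses such lines and sums one operation type across all devices. It assumes the operation names may be capitalized (kernels typically print Read, Write, Sync, Async, and Total), so it compares them case-insensitively. It also skips lines that do not start with a device number, such as a summary Total line. The names struct blkio_stat, parse_blkio_stat(), and blkio_sum_op() are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <strings.h>   /* strcasecmp() */

struct blkio_stat {
    unsigned major, minor;
    char op[16];               /* e.g. "Read", "Write", "Sync", "Async" */
    unsigned long long value;  /* operations or bytes, depending on file */
};

/* Parse one "major:minor operation value" line.
 * Returns 0 on success, -1 if the line has a different shape. */
int parse_blkio_stat(const char *line, struct blkio_stat *out)
{
    assert(line != NULL && out != NULL);
    if (sscanf(line, "%u:%u %15s %llu", &out->major, &out->minor,
               out->op, &out->value) != 4)
        return -1;
    return 0;
}

/* Sum the values of all lines whose operation matches `op`
 * (case-insensitively) in the full contents of a reporting file. */
unsigned long long blkio_sum_op(const char *text, const char *op)
{
    unsigned long long total = 0;
    struct blkio_stat s;
    char line[128];

    assert(text != NULL && op != NULL);
    while (*text != '\0') {
        const char *nl = strchr(text, '\n');
        size_t n = nl != NULL ? (size_t)(nl - text) : strlen(text);

        /* Copy one line so that sscanf cannot read past its end. */
        if (n < sizeof line) {
            memcpy(line, text, n);
            line[n] = '\0';
            if (parse_blkio_stat(line, &s) == 0 && strcasecmp(s.op, op) == 0)
                total += s.value;
        }
        text += n;
        if (*text == '\n')
            text++;
    }
    return total;
}
```

For example, passing the contents of blkio.throttle.io_service_bytes and "read" to blkio_sum_op() gives the total number of bytes the cgroup read from all devices.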

3.1.3. blkio Common Tunable Parameters

- blkio.reset_stats

- resets the statistics recorded in the other pseudofiles. Write an integer to this file to reset the statistics for this cgroup.

- blkio.time

- reports the time that a cgroup had I/O access to specific devices. Entries have three fields: major, minor, and time. Major and minor are device types and node numbers specified in Linux Allocated Devices, and time is the length of time in milliseconds (ms).

- blkio.sectors

- reports the number of sectors transferred to or from specific devices by a cgroup. Entries have three fields: major, minor, and sectors. Major and minor are device types and node numbers specified in Linux Allocated Devices, and sectors is the number of disk sectors.

- blkio.avg_queue_size

- reports the average queue size for I/O operations by a cgroup, over the entire length of time of the group's existence. The queue size is sampled every time a queue for this cgroup receives a timeslice. Note that this report is available only if CONFIG_DEBUG_BLK_CGROUP=y is set on the system.

- blkio.group_wait_time

- reports the total time (in nanoseconds, ns) a cgroup spent waiting for a timeslice for one of its queues. The report is updated every time a queue for this cgroup gets a timeslice, so if you read this pseudofile while the cgroup is waiting for a timeslice, the report will not contain time spent waiting for the operation currently queued. Note that this report is available only if CONFIG_DEBUG_BLK_CGROUP=y is set on the system.

- blkio.empty_time

- reports the total time (in nanoseconds, ns) a cgroup spent without any pending requests. The report is updated every time a queue for this cgroup has a pending request, so if you read this pseudofile while the cgroup has no pending requests, the report will not contain time spent in the current empty state. Note that this report is available only if CONFIG_DEBUG_BLK_CGROUP=y is set on the system.

- blkio.idle_time

- reports the total time (in nanoseconds, ns) the scheduler spent idling for a cgroup in anticipation of a better request than the requests already in other queues or from other groups. The report is updated every time the group is no longer idling, so if you read this pseudofile while the cgroup is idling, the report will not contain time spent in the current idling state. Note that this report is available only if CONFIG_DEBUG_BLK_CGROUP=y is set on the system.

- blkio.dequeue

- reports the number of times requests for I/O operations by a cgroup were dequeued by specific devices. Entries have three fields: major, minor, and number. Major and minor are device types and node numbers specified in Linux Allocated Devices, and number is the number of times requests by the group were dequeued. Note that this report is available only if CONFIG_DEBUG_BLK_CGROUP=y is set on the system.

- blkio.io_serviced

- reports the number of I/O operations performed on specific devices by a cgroup as seen by the CFQ scheduler. Entries have four fields: major, minor, operation, and number. Major and minor are device types and node numbers specified in Linux Allocated Devices, operation represents the type of operation (read, write, sync, or async), and number represents the number of operations.

- blkio.io_service_bytes

- reports the number of bytes transferred to or from specific devices by a cgroup as seen by the CFQ scheduler. Entries have four fields: major, minor, operation, and bytes. Major and minor are device types and node numbers specified in Linux Allocated Devices, operation represents the type of operation (read, write, sync, or async), and bytes is the number of transferred bytes.

- blkio.io_service_time

- reports the total time between request dispatch and request completion for I/O operations on specific devices by a cgroup as seen by the CFQ scheduler. Entries have four fields: major, minor, operation, and time. Major and minor are device types and node numbers specified in Linux Allocated Devices, operation represents the type of operation (read, write, sync, or async), and time is the length of time in nanoseconds (ns). The time is reported in nanoseconds rather than a larger unit so that this report is meaningful even for solid-state devices.

- blkio.io_wait_time

- reports the total time I/O operations on specific devices by a cgroup spent waiting for service in the scheduler queues. When you interpret this report, note:

- the time reported can be greater than the total time elapsed, because the time reported is the cumulative total of all I/O operations for the cgroup rather than the time that the cgroup itself spent waiting for I/O operations. To find the time that the group as a whole has spent waiting, use the blkio.group_wait_time parameter.
- if the device has a queue_depth > 1, the time reported only includes the time until the request is dispatched to the device, not any time spent waiting for service while the device reorders requests.

Entries have four fields: major, minor, operation, and time. Major and minor are device types and node numbers specified in Linux Allocated Devices, operation represents the type of operation (read, write, sync, or async), and time is the length of time in nanoseconds (ns). The time is reported in nanoseconds rather than a larger unit so that this report is meaningful even for solid-state devices.

- blkio.io_merged

- reports the number of BIOs (block I/O structures) and requests merged into requests for I/O operations by a cgroup. Entries have two fields: number and operation. Number is the number of requests, and operation represents the type of operation (read, write, sync, or async).

- blkio.io_queued

- reports the number of requests queued for I/O operations by a cgroup. Entries have two fields: number and operation. Number is the number of requests, and operation represents the type of operation (read, write, sync, or async).

3.1.4. Example Usage

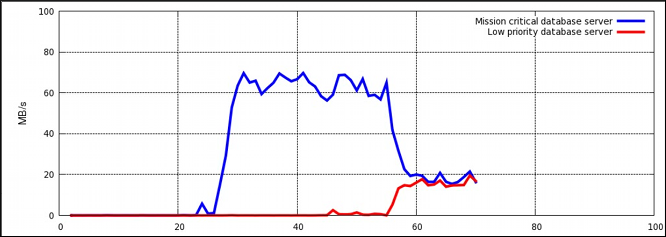

The following example runs two dd threads in two different cgroups with different blkio.weight values.

Example 3.1. blkio proportional weight division

- Mount the blkio subsystem:

mount -t cgroup -o blkio blkio /cgroup/blkio/

- Create two cgroups for the blkio subsystem:

mkdir /cgroup/blkio/test1/
mkdir /cgroup/blkio/test2/

- Set blkio weights in the previously created cgroups:

echo 1000 > /cgroup/blkio/test1/blkio.weight
echo 500 > /cgroup/blkio/test2/blkio.weight

- Create two large files:

dd if=/dev/zero of=file_1 bs=1M count=4000
dd if=/dev/zero of=file_2 bs=1M count=4000

The above commands create two files (file_1 and file_2) of size 4 GB.

- For each of the test cgroups, execute a dd command (which reads the contents of a file and outputs it to the null device) on one of the large files. Run both commands in the background (note the trailing &) so that they compete for the disk at the same time:

cgexec -g blkio:test1 time dd if=file_1 of=/dev/null &
cgexec -g blkio:test2 time dd if=file_2 of=/dev/null &

Both commands will output their completion time once they have finished.

- While the two dd threads are running, you can monitor their performance in real time by using the iotop utility. To install the iotop utility, execute, as root, the yum install iotop command. The following is an example of the output as seen in the iotop utility while running the previously started dd threads:

Total DISK READ: 83.16 M/s | Total DISK WRITE: 0.00 B/s
TIME      TID    PRIO  USER  DISK READ  DISK WRITE  SWAPIN  IO       COMMAND
15:18:04  15071  be/4  root  27.64 M/s  0.00 B/s    0.00 %  92.30 %  dd if=file_2 of=/dev/null
15:18:04  15069  be/4  root  55.52 M/s  0.00 B/s    0.00 %  88.48 %  dd if=file_1 of=/dev/null

The dd thread in test1 (weight 1000) reads at roughly twice the rate of the dd thread in test2 (weight 500), matching the 2:1 ratio of their weights.

To get the most accurate results, flush all file system buffers and free pagecache, dentries and inodes before starting the dd commands, using the following commands:

sync
echo 3 > /proc/sys/vm/drop_caches

You can also enable group isolation for the disk device, which provides stronger isolation between cgroups at the expense of throughput:

echo 1 > /sys/block/<disk_device>/queue/iosched/group_isolation

where <disk_device> stands for the name of the device, for example sda.

3.2. cpu

The cpu subsystem schedules CPU access to cgroups. Access to CPU resources can be scheduled using two schedulers:

- Completely Fair Scheduler (CFS) — a proportional share scheduler which divides the CPU time (CPU bandwidth) proportionately between groups of tasks (cgroups) depending on the priority/weight of the task or shares assigned to cgroups. For more information about resource limiting using CFS, refer to Section 3.2.1, “CFS Tunable Parameters”.

- Real-Time scheduler (RT) — a task scheduler that provides a way to specify the amount of CPU time that real-time tasks can use. For more information about resource limiting of real-time tasks, refer to Section 3.2.2, “RT Tunable Parameters”.

3.2.1. CFS Tunable Parameters

Ceiling Enforcement Tunable Parameters

- cpu.cfs_period_us

- specifies a period of time in microseconds (µs, represented here as "us") for how regularly a cgroup's access to CPU resources should be reallocated. If tasks in a cgroup should be able to access a single CPU for 0.2 seconds out of every 1 second, set cpu.cfs_quota_us to 200000 and cpu.cfs_period_us to 1000000. The upper limit of the cpu.cfs_period_us parameter is 1 second and the lower limit is 1000 microseconds.

- cpu.cfs_quota_us

- specifies the total amount of time in microseconds (µs, represented here as "us") for which all tasks in a cgroup can run during one period (as defined by cpu.cfs_period_us). As soon as tasks in a cgroup use up all the time specified by the quota, they are throttled for the remainder of the time specified by the period and not allowed to run until the next period. If tasks in a cgroup should be able to access a single CPU for 0.2 seconds out of every 1 second, set cpu.cfs_quota_us to 200000 and cpu.cfs_period_us to 1000000. Note that the quota and period parameters operate on a CPU basis. To allow a process to fully utilize two CPUs, for example, set cpu.cfs_quota_us to 200000 and cpu.cfs_period_us to 100000.

Setting the value in cpu.cfs_quota_us to -1 indicates that the cgroup does not adhere to any CPU time restrictions. This is also the default value for every cgroup (except the root cgroup).

- cpu.stat

- reports CPU time statistics using the following values:

- nr_periods — number of period intervals (as specified in cpu.cfs_period_us) that have elapsed.
- nr_throttled — number of times tasks in a cgroup have been throttled (that is, not allowed to run because they have exhausted all of the available time as specified by their quota).
- throttled_time — the total time duration (in nanoseconds) for which tasks in a cgroup have been throttled.
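The quota and period arithmetic above can be expressed as a small C helper. This sketch computes cpu.cfs_quota_us from a period and a target share of one CPU, given in percent (so 200 means two full CPUs). It rejects periods outside the range of 1000 µs to 1 second given for cpu.cfs_period_us. It also rejects quotas below 1000 µs; that lower bound is an assumption based on the kernel's minimum quota. The function name is hypothetical.

```c
#include <assert.h>

/* Limits given above for cpu.cfs_period_us: 1000 us to 1 second. */
#define CFS_PERIOD_MIN_US 1000LL
#define CFS_PERIOD_MAX_US 1000000LL
/* Assumed minimum for cpu.cfs_quota_us (1 ms). */
#define CFS_QUOTA_MIN_US 1000LL

/* Return the cpu.cfs_quota_us value that allows a cgroup to use
 * `percent_of_one_cpu` percent of one CPU in every period of
 * `period_us` microseconds (100 = one full CPU, 200 = two CPUs).
 * Returns -1 if the period or the resulting quota is out of range. */
long long cfs_quota_for(long long period_us, long long percent_of_one_cpu)
{
    long long quota;

    assert(percent_of_one_cpu > 0);
    if (period_us < CFS_PERIOD_MIN_US || period_us > CFS_PERIOD_MAX_US)
        return -1;
    quota = period_us * percent_of_one_cpu / 100;
    return quota < CFS_QUOTA_MIN_US ? -1 : quota;
}
```

cfs_quota_for(1000000, 20) yields 200000, the 0.2-seconds-per-second setting described above. cfs_quota_for(100000, 10) yields 10000, the value used in the Example Usage section to limit the red cgroup to 10% of a single CPU.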

Relative Shares Tunable Parameters

- cpu.shares

- contains an integer value that specifies a relative share of CPU time available to the tasks in a cgroup. For example, tasks in two cgroups that have cpu.shares set to 100 will receive equal CPU time, but tasks in a cgroup that has cpu.shares set to 200 receive twice the CPU time of tasks in a cgroup where cpu.shares is set to 100. The value specified in the cpu.shares file must be 2 or higher.

Note that shares of CPU time are distributed across all CPU cores on multi-core systems. Even if a cgroup is limited to less than 100% of CPU on a multi-core system, it may use 100% of each individual CPU core. Consider the following example: if cgroup A is configured to use 25% and cgroup B 75% of the CPU, starting four CPU-intensive processes (one in A and three in B) on a system with four cores results in the following division of CPU shares:

Table 3.1. CPU share division

| PID | cgroup | CPU | CPU share |
|---|---|---|---|
| 100 | A | 0 | 100% of CPU0 |
| 101 | B | 1 | 100% of CPU1 |
| 102 | B | 2 | 100% of CPU2 |
| 103 | B | 3 | 100% of CPU3 |

Using relative shares to specify CPU access has two implications for resource management that should be considered:

- Because the CFS does not demand equal usage of CPU, it is hard to predict how much CPU time a cgroup will be allowed to utilize. When tasks in one cgroup are idle and are not using any CPU time, the leftover time is collected in a global pool of unused CPU cycles. Other cgroups are allowed to borrow CPU cycles from this pool.

- The actual amount of CPU time that is available to a cgroup can vary depending on the number of cgroups that exist on the system. If a cgroup has a relative share of 1000 and two other cgroups have a relative share of 500, the first cgroup receives 50% of all CPU time in cases when processes in all cgroups attempt to use 100% of the CPU. However, if another cgroup is added with a relative share of 1000, the first cgroup is only allowed about 33% of the CPU (the rest of the cgroups receive about 16.7%, 16.7%, and 33.3% of CPU).
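The percentages in the last example follow from dividing each cgroup's cpu.shares value by the sum of the values of all busy cgroups. The following C sketch computes that share in tenths of a percent using integer arithmetic, so one third is truncated to 333. It models only the fully contended case described above. It does not account for idle cgroups lending unused time, or for the per-core effect shown in Table 3.1. The function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Share of CPU time, in tenths of a percent, that cgroup `i` receives
 * when every cgroup listed in `shares` tries to use 100% of the CPU:
 * shares[i] / sum(shares), truncated to an integer. */
unsigned cpu_share_permille(const unsigned *shares, size_t n, size_t i)
{
    unsigned long long total = 0;
    size_t k;

    assert(shares != NULL && i < n);
    for (k = 0; k < n; k++)
        total += shares[k];
    assert(total > 0);
    return (unsigned)(shares[i] * 1000ULL / total);
}
```

With shares {1000, 500, 500}, the first cgroup gets 500 (50%). After a fourth cgroup with 1000 is added, it gets 333 (about 33%) and each 500-share cgroup gets 166 (about 16.7%).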

3.2.2. RT Tunable Parameters

- cpu.rt_period_us

- applicable to real-time scheduling tasks only, this parameter specifies a period of time in microseconds (µs, represented here as "us") for how regularly a cgroup's access to CPU resources is reallocated.

- cpu.rt_runtime_us

- applicable to real-time scheduling tasks only, this parameter specifies a period of time in microseconds (µs, represented here as "us") for the longest continuous period in which the tasks in a cgroup have access to CPU resources. Establishing this limit prevents tasks in one cgroup from monopolizing CPU time. Note that the access times are multiplied by the number of logical CPUs. For example, setting cpu.rt_runtime_us to 200000 and cpu.rt_period_us to 1000000 allows the tasks in the cgroup to use a total of 0.4 seconds of CPU time out of every 1 second on systems with two CPUs (0.2 x 2), or 0.8 seconds on systems with four CPUs (0.2 x 4).
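Following the rule above, the total real-time CPU time a cgroup may use in each cpu.rt_period_us is cpu.rt_runtime_us multiplied by the number of logical CPUs. The C sketch below computes that total. It assumes that sysconf(_SC_NPROCESSORS_ONLN), which reports the number of online processors, is an acceptable stand-in for the number of logical CPUs. The function names are hypothetical.

```c
#include <assert.h>
#include <unistd.h>

/* Total RT runtime (in us) per cpu.rt_period_us across `logical_cpus`. */
long long rt_total_runtime_us(long long rt_runtime_us, long logical_cpus)
{
    assert(rt_runtime_us >= 0 && logical_cpus > 0);
    return rt_runtime_us * logical_cpus;
}

/* Same calculation, using the number of processors currently online.
 * Returns -1 if the processor count cannot be determined. */
long long rt_total_runtime_online_us(long long rt_runtime_us)
{
    long cpus = sysconf(_SC_NPROCESSORS_ONLN);

    return cpus > 0 ? rt_total_runtime_us(rt_runtime_us, cpus) : -1;
}
```

rt_total_runtime_us(200000, 2) gives 400000 µs (0.4 seconds), and rt_total_runtime_us(200000, 4) gives 800000 µs, matching the example above.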

3.2.3. Example Usage

Example 3.2. Limiting CPU access

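The following examples assume that the cpu subsystem is mounted at /cgroup/cpu/ on your system and that the blue and red cgroups already exist: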

- To allow one cgroup to use 25% of a single CPU and a different cgroup to use 75% of that same CPU, use the following commands:

echo 250 > /cgroup/cpu/blue/cpu.shares
echo 750 > /cgroup/cpu/red/cpu.shares

- To limit a cgroup to the full use of a single CPU, use the following commands:

echo 10000 > /cgroup/cpu/red/cpu.cfs_quota_us
echo 10000 > /cgroup/cpu/red/cpu.cfs_period_us

- To limit a cgroup to 10% of a single CPU, use the following commands:

echo 10000 > /cgroup/cpu/red/cpu.cfs_quota_us
echo 100000 > /cgroup/cpu/red/cpu.cfs_period_us

- On a multi-core system, to allow a cgroup to fully utilize two CPU cores, use the following commands:

echo 200000 > /cgroup/cpu/red/cpu.cfs_quota_us
echo 100000 > /cgroup/cpu/red/cpu.cfs_period_us

3.3. cpuacct

The CPU Accounting (cpuacct) subsystem generates automatic reports on CPU resources used by the tasks in a cgroup, including tasks in child groups. Three reports are available:

- cpuacct.usage

- reports the total CPU time (in nanoseconds) consumed by all tasks in this cgroup (including tasks lower in the hierarchy).

Note

To reset the value in cpuacct.usage, execute the following command:

echo 0 > /cgroup/cpuacct/cpuacct.usage

The above command also resets values in cpuacct.usage_percpu.

- cpuacct.stat

- reports the user and system CPU time consumed by all tasks in this cgroup (including tasks lower in the hierarchy) in the following way:

- user — CPU time consumed by tasks in user mode.
- system — CPU time consumed by tasks in system (kernel) mode.

CPU time is reported in the units defined by the USER_HZ variable.

- cpuacct.usage_percpu

- reports the CPU time (in nanoseconds) consumed on each CPU by all tasks in this cgroup (including tasks lower in the hierarchy).
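Because cpuacct.stat reports CPU time in USER_HZ units while cpuacct.usage reports nanoseconds, converting the former to seconds makes the two easier to compare. The C sketch below parses the user and system lines of cpuacct.stat and converts ticks to seconds. It assumes that sysconf(_SC_CLK_TCK), the number of clock ticks per second reported to user space, equals USER_HZ. The function names are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Parse the contents of cpuacct.stat ("user N\nsystem M\n") into tick
 * counts. Returns 0 if both fields were found, -1 otherwise. */
int parse_cpuacct_stat(const char *text, unsigned long long *user_ticks,
                       unsigned long long *sys_ticks)
{
    const char *p;
    int found = 0;

    assert(text != NULL && user_ticks != NULL && sys_ticks != NULL);
    p = strstr(text, "user ");
    if (p != NULL && sscanf(p, "user %llu", user_ticks) == 1)
        found++;
    p = strstr(text, "system ");
    if (p != NULL && sscanf(p, "system %llu", sys_ticks) == 1)
        found++;
    return found == 2 ? 0 : -1;
}

/* Convert a tick count to seconds for a given ticks-per-second rate. */
double ticks_to_seconds(unsigned long long ticks, long hz)
{
    assert(hz > 0);
    return (double)ticks / (double)hz;
}

/* Convert using the rate reported by sysconf(_SC_CLK_TCK). */
double cpuacct_ticks_to_seconds(unsigned long long ticks)
{
    return ticks_to_seconds(ticks, sysconf(_SC_CLK_TCK));
}
```

With a tick rate of 100 per second, a user value of 250 corresponds to 2.5 seconds of user-mode CPU time.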

3.4. cpuset

The cpuset subsystem assigns individual CPUs and memory nodes to cgroups. Each cpuset can be specified according to the following parameters, each one in a separate pseudofile within the cgroup virtual file system:

Important

Before you move a task into a cgroup that uses the cpuset subsystem, the cpuset.cpus and cpuset.mems parameters must be defined for that cgroup.

- cpuset.cpus (mandatory)

- specifies the CPUs that tasks in this cgroup are permitted to access. This is a comma-separated list, with dashes ("-") to represent ranges. For example,

0-2,16

represents CPUs 0, 1, 2, and 16.

- cpuset.mems (mandatory)

- specifies the memory nodes that tasks in this cgroup are permitted to access. This is a comma-separated list in the ASCII format, with dashes ("-") to represent ranges. For example,

0-2,16

represents memory nodes 0, 1, 2, and 16.

- cpuset.memory_migrate

- contains a flag (0 or 1) that specifies whether a page in memory should migrate to a new node if the values in cpuset.mems change. By default, memory migration is disabled (0) and pages stay on the node to which they were originally allocated, even if the node is no longer among the nodes specified in cpuset.mems. If enabled (1), the system migrates pages to memory nodes within the new parameters specified by cpuset.mems, maintaining their relative placement if possible; for example, pages on the second node on the list originally specified by cpuset.mems are allocated to the second node on the new list specified by cpuset.mems, if the place is available.

- cpuset.cpu_exclusive

- contains a flag (0 or 1) that specifies whether cpusets other than this one and its parents and children can share the CPUs specified for this cpuset. By default (0), CPUs are not allocated exclusively to one cpuset.

- cpuset.mem_exclusive

- contains a flag (0 or 1) that specifies whether other cpusets can share the memory nodes specified for the cpuset. By default (0), memory nodes are not allocated exclusively to one cpuset. Reserving memory nodes for the exclusive use of a cpuset (1) is functionally the same as enabling a memory hardwall with the cpuset.mem_hardwall parameter.

- cpuset.mem_hardwall

- contains a flag (0 or 1) that specifies whether kernel allocations of memory page and buffer data should be restricted to the memory nodes specified for the cpuset. By default (0), page and buffer data is shared across processes belonging to multiple users. With a hardwall enabled (1), each task's user allocation can be kept separate.

- cpuset.memory_pressure

- a read-only file that contains a running average of the memory pressure created by the processes in the cpuset. The value in this pseudofile is automatically updated when cpuset.memory_pressure_enabled is enabled; otherwise, the pseudofile contains the value 0.

- cpuset.memory_pressure_enabled

- contains a flag (0 or 1) that specifies whether the system should compute the memory pressure created by the processes in the cgroup. Computed values are output to cpuset.memory_pressure and represent the rate at which processes attempt to free in-use memory, reported as an integer value of attempts to reclaim memory per second, multiplied by 1000.

- cpuset.memory_spread_page

- contains a flag (0 or 1) that specifies whether file system buffers should be spread evenly across the memory nodes allocated to the cpuset. By default (0), no attempt is made to spread memory pages for these buffers evenly, and buffers are placed on the same node on which the process that created them is running.

- cpuset.memory_spread_slab

- contains a flag (0 or 1) that specifies whether kernel slab caches for file input/output operations should be spread evenly across the cpuset. By default (0), no attempt is made to spread kernel slab caches evenly, and slab caches are placed on the same node on which the process that created them is running.

- cpuset.sched_load_balance

- contains a flag (0 or 1) that specifies whether the kernel will balance loads across the CPUs in the cpuset. By default (1), the kernel balances loads by moving processes from overloaded CPUs to less heavily used CPUs.

Note, however, that setting this flag in a cgroup has no effect if load balancing is enabled in any parent cgroup, as load balancing is already being carried out at a higher level. Therefore, to disable load balancing in a cgroup, disable load balancing also in each of its parents in the hierarchy. In this case, you should also consider whether load balancing should be enabled for any siblings of the cgroup in question.

- cpuset.sched_relax_domain_level

- contains an integer between -1 and a small positive value, which represents the width of the range of CPUs across which the kernel should attempt to balance loads. This value is meaningless if cpuset.sched_load_balance is disabled.

The precise effect of this value varies according to system architecture, but the following values are typical:

Values of cpuset.sched_relax_domain_level

| Value | Effect |
|---|---|
| -1 | Use the system default value for load balancing |
| 0 | Do not perform immediate load balancing; balance loads only periodically |
| 1 | Immediately balance loads across threads on the same core |
| 2 | Immediately balance loads across cores in the same package or book (in case of s390x architectures) |
| 3 | Immediately balance loads across books in the same package (available only for s390x architectures) |
| 4 | Immediately balance loads across CPUs on the same node or blade |
| 5 | Immediately balance loads across several CPUs on architectures with non-uniform memory access (NUMA) |
| 6 | Immediately balance loads across all CPUs on architectures with NUMA |

Note

With the release of Red Hat Enterprise Linux 6.1, the BOOK scheduling domain was added to the list of supported domain levels. This change affected the meaning of cpuset.sched_relax_domain_level values: the effect of values 3 to 5 changed. For example, to get the old effect of value 3, "Immediately balance loads across CPUs on the same node or blade", select the value 4. Similarly, the old 4 is now 5, and the old 5 is now 6.
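Because clearing cpuset.sched_load_balance in a cgroup has no effect while any parent cgroup still balances loads, disabling it means walking from the cgroup up to the root of the hierarchy. The following is a minimal sketch of that walk, assuming the cpuset hierarchy is mounted at /cgroup/cpuset and that the cgroup is given as a path relative to the mount point without leading or trailing slashes; the function name disable_load_balance is hypothetical.

```shell
# disable_load_balance MOUNT RELPATH
# Hypothetical helper: writes 0 to cpuset.sched_load_balance in MOUNT/RELPATH
# and in every parent cgroup up to and including the hierarchy root (MOUNT),
# because a child's setting has no effect while a parent still balances loads.
disable_load_balance() (
    mount=$1
    path=$2
    while :; do
        dir=$mount${path:+/$path}
        echo 0 > "$dir/cpuset.sched_load_balance" || exit 1
        [ -z "$path" ] && break        # the hierarchy root has been handled
        case $path in
            */*) path=${path%/*} ;;   # strip the last path component
            *)   path= ;;             # next iteration handles the root
        esac
    done
)
```

For example, `disable_load_balance /cgroup/cpuset group1/subgroup` clears the flag in subgroup, group1, and the root cgroup. Any siblings that should keep load balancing still need to be reviewed separately, as noted above.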

3.5. devices

The devices subsystem allows or denies access to devices by tasks in a cgroup.

Important

The Device Whitelist (devices) subsystem is considered to be a Technology Preview in Red Hat Enterprise Linux 6.

- devices.allow

- specifies devices to which tasks in a cgroup have access. Each entry has four fields: type, major, minor, and access. The values used in the type, major, and minor fields correspond to device types and node numbers specified in Linux Allocated Devices, otherwise known as the Linux Devices List and available from https://www.kernel.org/doc/html/v4.11/admin-guide/devices.html.

- type

- type can have one of the following three values:

  - a: applies to all devices, both character devices and block devices
  - b: specifies a block device
  - c: specifies a character device

- major, minor

- major and minor are device node numbers specified by Linux Allocated Devices. The major and minor numbers are separated by a colon. For example, 8 is the major number that specifies Small Computer System Interface (SCSI) disk drives, and the minor number 1 specifies the first partition on the first SCSI disk drive; therefore 8:1 fully specifies this partition, corresponding to a file system location of /dev/sda1.

An asterisk (*) can stand for all major or all minor device nodes, for example 9:* (all RAID devices) or *:* (all devices).

- access

- access is a sequence of one or more of the following letters:

  - r: allows tasks to read from the specified device
  - w: allows tasks to write to the specified device
  - m: allows tasks to create device files that do not yet exist

For example, when access is specified as r, tasks can only read from the specified device, but when access is specified as rw, tasks can read from and write to the device.

- devices.deny

- specifies devices that tasks in a cgroup cannot access. The syntax of entries is identical to that of devices.allow.

- devices.list

- reports the devices for which access controls have been set for tasks in this cgroup.
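The entry syntax described above (type, major:minor, access) lends itself to a quick check before anything is written to the control files. The following minimal sketch validates entries and then restricts a cgroup to an explicit whitelist by denying all devices first. It assumes CGROUP_DIR is a non-root cgroup in a hierarchy with the devices subsystem attached (for example, /cgroup/devices/group1) and that the kernel accepts the full "a *:* rwm" form for denying everything; the function names are hypothetical.

```shell
# valid_device_entry ENTRY
# Succeeds if ENTRY has the form "type major:minor access": type is a, b,
# or c; major and minor are decimal numbers or *; access is one or more of
# r, w, and m.
valid_device_entry() {
    printf '%s\n' "$1" | grep -Eq '^[abc] ([0-9]+|\*):([0-9]+|\*) [rwm]+$'
}

# restrict_devices CGROUP_DIR ENTRY...
# Hypothetical helper: validates every entry, denies access to all devices,
# then allows each listed entry in turn.
restrict_devices() (
    dir=$1
    shift
    for entry in "$@"; do
        valid_device_entry "$entry" || { echo "invalid entry: $entry" >&2; exit 1; }
    done
    echo "a *:* rwm" > "$dir/devices.deny" || exit 1
    for entry in "$@"; do
        echo "$entry" > "$dir/devices.allow" || exit 1
    done
)
```

For example, `restrict_devices /cgroup/devices/group1 'c 1:3 rw' 'b 8:1 r'` leaves tasks in group1 with read/write access to /dev/null (character device 1:3) and read-only access to /dev/sda1. Reading devices.list afterwards shows the resulting access controls.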

3.6. freezer

The freezer subsystem suspends or resumes tasks in a cgroup.

- freezer.state

- freezer.state is only available in non-root cgroups and has three possible values:

  - FROZEN: tasks in the cgroup are suspended.
  - FREEZING: the system is in the process of suspending tasks in the cgroup.
  - THAWED: tasks in the cgroup have resumed.

To suspend a specific process:

- Move that process to a cgroup in a hierarchy which has the freezer subsystem attached to it.
- Freeze that particular cgroup to suspend the process contained in it.

Note that the FROZEN and THAWED values can be written to freezer.state, whereas FREEZING can only be read, not written.
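The two steps above, together with the read-only FREEZING state, can be sketched as a few shell functions. This is a minimal sketch assuming a non-root cgroup such as /cgroup/freezer/group1 in a hierarchy with the freezer subsystem attached; the function names and the one-second polling interval are hypothetical choices.

```shell
# freeze_pid CGROUP_DIR PID
# Moves PID into the cgroup, then freezes the cgroup.
freeze_pid() {
    echo "$2" > "$1/tasks" && echo FROZEN > "$1/freezer.state"
}

# wait_frozen CGROUP_DIR [TRIES]
# Polls freezer.state once per second while it reads FREEZING (at most TRIES
# times, default 10); succeeds only if the state ends up as FROZEN.
wait_frozen() (
    tries=${2:-10}
    while [ "$(cat "$1/freezer.state")" = FREEZING ] && [ "$tries" -gt 0 ]; do
        sleep 1
        tries=$((tries - 1))
    done
    [ "$(cat "$1/freezer.state")" = FROZEN ]
)

# thaw_cgroup CGROUP_DIR
# Resumes all tasks in the cgroup.
thaw_cgroup() {
    echo THAWED > "$1/freezer.state"
}
```

For example, `freeze_pid /cgroup/freezer/group1 1234 && wait_frozen /cgroup/freezer/group1` suspends process 1234, and `thaw_cgroup /cgroup/freezer/group1` resumes it later.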

3.7. memory

The memory subsystem generates automatic reports on memory resources used by the tasks in a cgroup and sets limits on memory use of those tasks.

Note

The memory subsystem uses 40 bytes of memory per physical page on x86_64 systems. These resources are consumed even if memory is not used in any hierarchy. If you do not plan to use the memory subsystem, you can disable it to reduce the resource consumption of the kernel.

To permanently disable the memory subsystem, open the /boot/grub/grub.conf configuration file as root and append the following text to the line that starts with the kernel keyword:

cgroup_disable=memory

For more information about working with /boot/grub/grub.conf, see the Configuring the GRUB Boot Loader chapter in the Red Hat Enterprise Linux 6 Deployment Guide.
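The manual edit above can also be scripted. The following is a minimal sketch using sed, assuming the GRUB Legacy layout of /boot/grub/grub.conf in which each boot entry has a line beginning (after optional indentation) with the kernel keyword; the function name add_cgroup_disable_memory is hypothetical. It skips lines that already carry the parameter, so running it twice does not duplicate it.

```shell
# add_cgroup_disable_memory FILE
# Hypothetical helper: appends " cgroup_disable=memory" to every line of FILE
# whose first word is "kernel", unless the line already contains it.
add_cgroup_disable_memory() (
    file=$1
    tmp=$(mktemp) || exit 1
    sed '/^[[:space:]]*kernel[[:space:]]/{/cgroup_disable=memory/!s/$/ cgroup_disable=memory/;}' \
        "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the original file's inode and mode
    status=$?
    rm -f "$tmp"
    exit "$status"
)
```

Run it as root after making a backup, for example `cp /boot/grub/grub.conf /boot/grub/grub.conf.bak` followed by `add_cgroup_disable_memory /boot/grub/grub.conf`. The change takes effect at the next boot.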

To disable the memory subsystem for a single session only, perform the following steps when starting the system:

- At the GRUB boot screen, press any key to enter the GRUB interactive menu.

- Select Red Hat Enterprise Linux with the version of the kernel that you want to boot and press the a key to modify the kernel parameters.

- Type cgroup_disable=memory at the end of the line and press Enter to exit GRUB edit mode.

With cgroup_disable=memory enabled, memory is not visible as an individually mountable subsystem, and it is not automatically mounted when mounting all cgroups in a single hierarchy. Note that memory is currently the only subsystem that can be effectively disabled with cgroup_disable to save resources. Using this option with other subsystems only disables their usage but does not reduce their resource consumption. However, other subsystems do not consume as many resources as the memory subsystem.

The following parameters are available for the memory subsystem:

- memory.stat

- reports a wide range of memory statistics, as described in the following table:

Table 3.2. Values reported by memory.stat

Statistic                   Description
cache                       page cache, including tmpfs (shmem), in bytes
rss                         anonymous and swap cache, not including tmpfs (shmem), in bytes
mapped_file                 size of memory-mapped files, including tmpfs (shmem), in bytes
pgpgin                      number of pages paged into memory
pgpgout                     number of pages paged out of memory
swap                        swap usage, in bytes
active_anon                 anonymous and swap cache on active least-recently-used (LRU) list, including tmpfs (shmem), in bytes
inactive_anon               anonymous and swap cache on inactive LRU list, including tmpfs (shmem), in bytes
active_file                 file-backed memory on active LRU list, in bytes
inactive_file               file-backed memory on inactive LRU list, in bytes
unevictable                 memory that cannot be reclaimed, in bytes
hierarchical_memory_limit   memory limit for the hierarchy that contains the memory cgroup, in bytes
hierarchical_memsw_limit    memory plus swap limit for the hierarchy that contains the memory cgroup, in bytes

Additionally, each of these statistics other than hierarchical_memory_limit and hierarchical_memsw_limit has a counterpart prefixed total_ that reports not only on the cgroup, but on all its children as well. For example, swap reports the swap usage by a cgroup and total_swap reports the total swap usage by the cgroup and all its child groups.

When you interpret the values reported by memory.stat, note how the various statistics inter-relate:

- active_anon + inactive_anon = anonymous memory + file cache for tmpfs + swap cache

  Therefore, active_anon + inactive_anon ≠ rss, because rss does not include tmpfs.

- active_file + inactive_file = cache - size of tmpfs
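Since memory.stat consists of "name value" lines, individual statistics are easy to extract with awk, and the relationships above can be computed directly. The following minimal sketch uses hypothetical function names; memstat_summary reports active_anon + inactive_anon (anonymous memory plus tmpfs and swap cache) as anon_lru and active_file + inactive_file (page cache excluding tmpfs) as file_lru.

```shell
# memstat_get FILE KEY
# Prints the value of the statistic KEY from a memory.stat file; fails if
# the key is absent. Matches the name exactly, so "swap" does not match
# "total_swap".
memstat_get() {
    awk -v k="$2" '$1 == k { print $2; found = 1 } END { exit !found }' "$1"
}

# memstat_summary FILE
# Prints the two LRU sums discussed above, in bytes.
memstat_summary() (
    f=$1
    aa=$(memstat_get "$f" active_anon) || exit 1
    ia=$(memstat_get "$f" inactive_anon) || exit 1
    af=$(memstat_get "$f" active_file) || exit 1
    inf=$(memstat_get "$f" inactive_file) || exit 1
    echo "anon_lru=$((aa + ia)) file_lru=$((af + inf))"
)
```

For example, `memstat_summary /cgroup/memory/lab1/memory.stat` prints both sums for the lab1 cgroup; comparing anon_lru with the rss value from `memstat_get` shows how much of it is tmpfs.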

- memory.usage_in_bytes

- reports the total current memory usage by processes in the cgroup (in bytes).

- memory.memsw.usage_in_bytes

- reports the sum of current memory usage plus swap space used by processes in the cgroup (in bytes).

- memory.max_usage_in_bytes

- reports the maximum memory used by processes in the cgroup (in bytes).

- memory.memsw.max_usage_in_bytes

- reports the maximum amount of memory and swap space used by processes in the cgroup (in bytes).
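The four usage counters above all report plain byte counts, so a short report is a matter of reading and scaling them. The following minimal sketch (function name hypothetical) prints each counter in MiB using integer division and silently skips the memory.memsw.* files when they are not present in the cgroup directory.

```shell
# mem_usage_report CGROUP_DIR
# Prints current and peak usage counters of a memory cgroup in MiB
# (integer division), skipping any counter file that does not exist.
mem_usage_report() (
    dir=$1
    for name in usage_in_bytes max_usage_in_bytes \
                memsw.usage_in_bytes memsw.max_usage_in_bytes; do
        f=$dir/memory.$name
        [ -r "$f" ] || continue
        echo "memory.$name: $(( $(cat "$f") / 1048576 )) MiB"
    done
)
```

For example, `mem_usage_report /cgroup/memory/lab1` shows how close the lab1 cgroup's peak usage has come to its configured limits.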

- memory.limit_in_bytes

- sets the maximum amount of user memory (including file cache). If no units are specified, the value is interpreted as bytes. However, it is possible to use suffixes to represent larger units: k or K for kilobytes, m or M for megabytes, and g or G for gigabytes. For example, to set the limit to 1 gigabyte, execute:

~]# echo 1G > /cgroup/memory/lab1/memory.limit_in_bytes

You cannot use memory.limit_in_bytes to limit the root cgroup; you can only apply values to groups lower in the hierarchy.

Write -1 to memory.limit_in_bytes to remove any existing limits.

- memory.memsw.limit_in_bytes

- sets the maximum amount for the sum of memory and swap usage. If no units are specified, the value is interpreted as bytes. However, it is possible to use suffixes to represent larger units: k or K for kilobytes, m or M for megabytes, and g or G for gigabytes.

You cannot use memory.memsw.limit_in_bytes to limit the root cgroup; you can only apply values to groups lower in the hierarchy.

Write -1 to memory.memsw.limit_in_bytes to remove any existing limits.

Important

It is important to set the memory.limit_in_bytes parameter before setting the memory.memsw.limit_in_bytes parameter: attempting to do so in the reverse order results in an error. This is because memory.memsw.limit_in_bytes becomes available only after all memory limitations (previously set in memory.limit_in_bytes) are exhausted.

Consider the following example: setting memory.limit_in_bytes = 2G and memory.memsw.limit_in_bytes = 4G for a certain cgroup will allow processes in that cgroup to allocate 2 GB of memory and, once exhausted, allocate another 2 GB of swap only. The memory.memsw.limit_in_bytes parameter represents the sum of memory and swap. Processes in a cgroup that does not have the memory.memsw.limit_in_bytes parameter set can potentially use up all the available swap (after exhausting the set memory limitation) and trigger an Out Of Memory situation caused by the lack of available swap.

The order in which the memory.limit_in_bytes and memory.memsw.limit_in_bytes parameters are set in the /etc/cgconfig.conf file is important as well. The following is a correct example of such a configuration:

memory {
    memory.limit_in_bytes = 1G;
    memory.memsw.limit_in_bytes = 1G;
}

- memory.failcnt

- reports the number of times that the memory limit has reached the value set in memory.limit_in_bytes.

- memory.memsw.failcnt

- reports the number of times that the memory plus swap space limit has reached the value set in memory.memsw.limit_in_bytes.

- memory.soft_limit_in_bytes

- enables flexible sharing of memory. Under normal circumstances, control groups are allowed to use as much of the memory as needed, constrained only by their hard limits set with the memory.limit_in_bytes parameter. However, when the system detects memory contention or low memory, control groups are forced to restrict their consumption to their soft limits. For example, to set the soft limit to 256 MB, execute:

~]# echo 256M > /cgroup/memory/lab1/memory.soft_limit_in_bytes

This parameter accepts the same suffixes as memory.limit_in_bytes to represent units. To have any effect, the soft limit must be set below the hard limit. If lowering the memory usage to the soft limit does not solve the contention, cgroups are pushed back as much as possible to make sure that one control group does not starve the others of memory. Note that soft limits take effect over a long period of time, since they involve reclaiming memory for balancing between memory cgroups.

- memory.force_empty

- when set to 0, empties memory of all pages used by tasks in the cgroup. This interface can only be used when the cgroup has no tasks. If memory cannot be freed, it is moved to a parent cgroup if possible. Use the memory.force_empty parameter before removing a cgroup to avoid moving out-of-use page caches to its parent cgroup.

- memory.swappiness

- sets the tendency of the kernel to swap out process memory used by tasks in this cgroup instead of reclaiming pages from the page cache. This is the same tendency, calculated the same way, as set in /proc/sys/vm/swappiness for the system as a whole. The default value is 60. Values lower than 60 decrease the kernel's tendency to swap out process memory, values greater than 60 increase the kernel's tendency to swap out process memory, and values greater than 100 permit the kernel to swap out pages that are part of the address space of the processes in this cgroup.

Note that a value of 0 does not prevent process memory being swapped out; swap out might still happen when there is a shortage of system memory because the global virtual memory management logic does not read the cgroup value. To lock pages completely, use mlock() instead of cgroups.

You cannot change the swappiness of the following groups:

  - the root cgroup, which uses the swappiness set in /proc/sys/vm/swappiness.
  - a cgroup that has child groups below it.
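The rules described above for the writable memory parameters (write memory.limit_in_bytes before memory.memsw.limit_in_bytes, keep the soft limit below the hard limit, empty a cgroup only when it has no tasks, and leave the swappiness of the root cgroup and of cgroups with children alone) can be encoded as small guard functions. The following is a minimal sketch, not a complete tool: all function names are hypothetical, CG stands for a non-root cgroup such as /cgroup/memory/lab1, and to_bytes assumes that the k, m, and g suffixes denote binary multiples (1024, 1024², 1024³), which is how the kernel's memparse() parser treats them. set_memory_limits assumes the limits are being set for the first time, in the order the Important note requires.

```shell
# to_bytes VALUE: converts 512, 256M, 1G, ... to bytes (binary multiples).
to_bytes() (
    case $1 in
        *[kK]) n=${1%?}; m=1024 ;;
        *[mM]) n=${1%?}; m=1048576 ;;
        *[gG]) n=${1%?}; m=1073741824 ;;
        *)     n=$1;     m=1 ;;
    esac
    case $n in
        ''|*[!0-9]*) echo "to_bytes: invalid value: $1" >&2; exit 1 ;;
    esac
    echo $((n * m))
)

# set_memory_limits CG MEM MEMSW: writes memory.limit_in_bytes first and
# only then memory.memsw.limit_in_bytes, skipping the second write if the
# first one fails.
set_memory_limits() {
    echo "$2" > "$1/memory.limit_in_bytes" &&
        echo "$3" > "$1/memory.memsw.limit_in_bytes"
}

# set_soft_limit CG VALUE: refuses soft limits that are not below the hard
# limit. Assumes memory.limit_in_bytes reads back as a number of bytes.
set_soft_limit() (
    soft=$(to_bytes "$2") || exit 1
    hard=$(cat "$1/memory.limit_in_bytes") || exit 1
    if [ "$soft" -ge "$hard" ]; then
        echo "set_soft_limit: $2 is not below the hard limit ($hard bytes)" >&2
        exit 1
    fi
    echo "$soft" > "$1/memory.soft_limit_in_bytes"
)

# force_empty_cgroup CG: memory.force_empty only works when the cgroup has
# no tasks, so the content of the tasks file is checked first.
force_empty_cgroup() {
    if [ -n "$(cat "$1/tasks")" ]; then
        echo "force_empty_cgroup: $1 still has tasks" >&2
        return 1
    fi
    echo 0 > "$1/memory.force_empty"
}

# set_swappiness MOUNT RELPATH VALUE: refuses the root cgroup (empty
# RELPATH) and any cgroup that has child groups (subdirectories).
set_swappiness() (
    [ -n "$2" ] || { echo "set_swappiness: refusing the root cgroup" >&2; exit 1; }
    dir=$1/$2
    for entry in "$dir"/*; do
        if [ -d "$entry" ]; then
            echo "set_swappiness: $dir has child groups" >&2
            exit 1
        fi
    done
    echo "$3" > "$dir/memory.swappiness"
)
```

For example, `set_memory_limits /cgroup/memory/lab1 2G 4G` reproduces the 2 GB memory plus 2 GB swap setup from the Important note, and `force_empty_cgroup /cgroup/memory/lab1 && rmdir /cgroup/memory/lab1` empties the cgroup before removing it.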

- memory.move_charge_at_immigrate

- allows moving charges associated with a task along with task migration. Charging is a way of giving a penalty to cgroups which access shared pages too often. These penalties, also called charges, are by default not moved when a task migrates from one cgroup to another. The pages allocated from the original cgroup still remain charged to it; the charge is dropped when the page is freed or reclaimed.With