このコンテンツは選択した言語では利用できません。

Chapter 2. Installing Change Data Capture connectors

Install Change Data Capture connectors through AMQ Streams by extending Kafka Connect with connector plugins. Following a deployment of AMQ Streams, you can deploy Change Data Capture as a connector configuration through Kafka Connect.

2.1. Prerequisites

A Change Data Capture installation requires the following:

- An OpenShift cluster

- A deployment of AMQ Streams with Kafka Connect S2I

-

A user on the OpenShift cluster with

cluster-adminpermissions to set up the required cluster roles and API services

Java 8 or later is required to run the Change Data Capture connectors.

To install Change Data Capture, the OpenShift Container Platform command-line interface (CLI) is required.

- For more information about how to install the CLI for OpenShift 3.11, see the OpenShift Container Platform 3.11 documentation.

- For more information about how to install the CLI for OpenShift 4.2, see the OpenShift Container Platform 4.2 documentation.

Additional resources

- For more information about how to install AMQ Streams, see Using AMQ Streams 1.3 on OpenShift.

- AMQ Streams includes a Cluster Operator to deploy and manage Kafka components. For more information about how to install Kafka components using the AMQ Streams Cluster Operator, see Deploying Kafka Connect to your cluster.

2.2. Kafka topic creation recommendations

Change Data Capture uses multiple Kafka topics for storing data. The topics have to be either created by an administrator, or by Kafka itself by enabling auto-creation for topics using the auto.create.topics.enable broker configuration.

The following list describes limitations and recommendations to consider when creating topics:

- Replication, a factor of at least 3 in production

- Single partition

- Infinite (or very long) retention if topic compaction is disabled

- Log compaction enabled, if you wish to only keep the last change event for a given record

Do not enable topic compaction for the database history topics used by the MySQL and SQL Server connectors.

If you relax the single partition rule, your application must be able to handle out-of-order events for different rows in the database (events for a single row are still fully ordered). If multiple partitions are used, Kafka will determine the partition by hashing the key by default. Other partition strategies require using Simple Message Transforms (SMTs) to set the key for each record.

For log compaction, configure the min.compaction.lag.ms and delete.retention.ms topic-level settings in Apache Kafka so that consumers have enough time to receive all events and delete markers. Specifically, these values should be larger than the maximum downtime you anticipate for the sink connectors, such as when you are updating them.

2.3. Deploying Change Data Capture with AMQ Streams

This procedure describes how to set up connectors for Change Data Capture on Red Hat OpenShift container platform.

Before you begin

For setting up Apache Kafka and Kafka Connect on OpenShift, Red Hat AMQ Streams is used. AMQ Streams offers operators and images that bring Kafka to OpenShift.

Here we deploy and use Kafka Connect S2I (Source to Image). S2I is a framework to build images that take application source code as an input and produce a new image that runs the assembled application as output.

A Kafka Connect builder image with S2I support is provided on the Red Hat Container Catalog as part of the registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0 image. The S2I process takes your binaries (with plugins and connectors) and stores them in the /tmp/kafka-plugins/s2i directory. It creates a new Kafka Connect image from this directory, which can then be used with the Kafka Connect deployment. When started using the enhanced image, Kafka Connect loads any third-party plug-ins from the /tmp/kafka-plugins/s2i directory.

Instead of deploying and using the Kafka Connect S2I, you can create a new Dockerfile based on an AMQ Streams Kafka image to include the connectors.

See Chapter 3, Creating a Docker image from the Kafka Connect base image.

In this procedure, we:

- Deploy a Kafka cluster to OpenShift

- Download and configure the Change Data Capture connectors

- Deploy Kafka Connect with the connectors

If you have a Kafka cluster deployed already, you can skip the first step.

The pod names must correspond with your AMQ Streams deployment.

Procedure

Deploy your Kafka cluster.

- Install the AMQ Streams operator by following the steps in AMQ Streams documentation.

- Select the desired configuration and deploy your Kafka Cluster.

- Deploy Kafka Connect s2i.

We now have a working Kafka cluster running in OpenShift with Kafka Connect S2I.

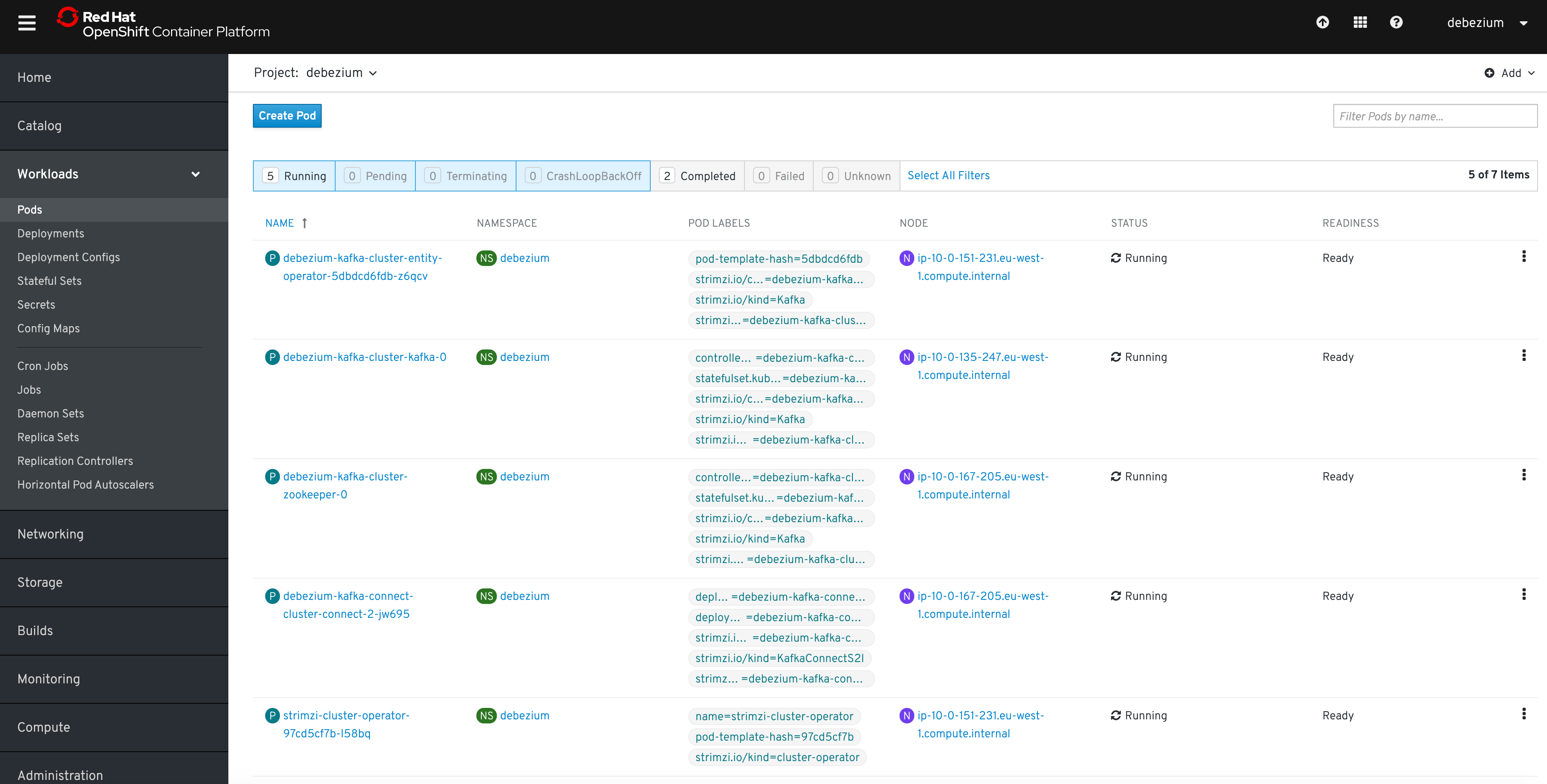

Check your pods are running:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow In addition to running pods you should have a DeploymentConfig associated with your Connect S2I.

- Select release 1.0, and download the Debezium connector archive for your database from the AMQ Streams download site.

Extract the archive to create a directory structure for the connector plugins.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Now we trigger the Kafka Connect S2I build.

Check the name of the build config.

oc get buildconfigs

$ oc get buildconfigs NAME TYPE FROM LATEST <cluster-name>-cluster-connect Source Binary 2Copy to Clipboard Copied! Toggle word wrap Toggle overflow Use the

oc start-buildcommand to start a new build of the Kafka Connect image using the Change Data Capture directory:oc start-build <cluster-name>-cluster-connect --from-dir ./my-plugin/

oc start-build <cluster-name>-cluster-connect --from-dir ./my-plugin/Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe name of the build is the same as the name of the deployed Kafka Connect cluster.

Check the updated deployment is running:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Alternatively, you can go to the Pods view of your OpenShift Web Console to confirm the pods are running:

Updating Kafka Connect

If you need to update your deployment, amend your JAR files in the Change Data Capture directory and rebuild Kafka Connect.

Verifying the Deployment

Once the build has finished, the new image is used automatically by the Kafka Connect deployment.

When the connector starts, it will connect to the source and produce events for each inserted, updated, and deleted row or document.

Verify whether the deployment is correct by emulating the Debezium tutorial by following the steps to verify the deployment.