Chapter 7. Assessing and analyzing applications with MTA

You can use the Migration Toolkit for Applications (MTA) user interface to assess and analyze applications:

- When adding to or editing the Application Inventory, MTA automatically spawns programming language and technology discovery tasks. The tasks apply appropriate tags to the application, reducing the time you spend tagging the application manually.

- When assessing applications, MTA estimates the risks and costs involved in preparing applications for containerization, including time, personnel, and other factors. You can use the results of an assessment for discussions between stakeholders to determine whether applications are suitable for containerization.

- When analyzing applications, MTA uses rules to determine which specific lines in an application must be modified before the application can be migrated or modernized.

7.1. The Assessment module features

The Migration Toolkit for Applications (MTA) Assessment module offers the following features for assessing and analyzing applications:

- Assessment hub

- The Assessment hub integrates with the Application inventory.

- Enhanced assessment questionnaire capabilities

In MTA 7.0, you can import and export assessment questionnaires. You can also design custom questionnaires with a downloadable template by using the YAML syntax, which includes the following features:

- Conditional questions: You can include or exclude questions based on the application or archetype if a certain tag is present on this application or archetype.

- Application auto-tagging based on answers: You can define tags to be applied to applications or archetypes if a certain answer was provided.

- Automated answers from tags in applications or archetypes.

For more information, see The custom assessment questionnaire.

You can customize and save the default questionnaire. For more information, see The default assessment questionnaire.

- Multiple assessment questionnaires

- The Assessment module supports multiple questionnaires, relevant to one or more applications.

- Archetypes

You can group applications with similar characteristics into archetypes. This allows you to assess multiple applications at once. Each archetype has a shared taxonomy of tags, stakeholders, and stakeholder groups. All applications inherit assessment and review from their assigned archetypes.

For more information, see Working with archetypes.

7.2. MTA assessment questionnaires

The Migration Toolkit for Applications (MTA) uses an assessment questionnaire, either default or custom, to assess the risks involved in containerizing an application.

The assessment report provides information about applications and risks associated with migration. The report also generates an adoption plan informed by the prioritization, business criticality, and dependencies of the applications submitted for assessment.

7.2.1. The default assessment questionnaire

Legacy Pathfinder is the default Migration Toolkit for Applications (MTA) questionnaire. Pathfinder is a questionnaire-based tool that you can use to evaluate the suitability of applications for modernization in containers on an enterprise Kubernetes platform.

Through interaction with the default questionnaire and the review process, the system is enriched with application knowledge exposed through the collection of assessment reports.

You can export the default questionnaire to a YAML file:

Example 7.1. The Legacy Pathfinder YAML file

7.2.2. The custom assessment questionnaire

You can use the Migration Toolkit for Applications (MTA) to import a custom assessment questionnaire by using a custom YAML syntax to define the questionnaire. The YAML syntax supports the following features:

- Conditional questions

The YAML syntax supports including or excluding questions based on tags existing on the application or archetype, for example:

If the application or archetype has the Language/Java tag, the "What is the main JAVA framework used in your application?" question is included in the questionnaire.

If the application or archetype has the Deployment/Serverless and Architecture/Monolith tag, the "Are you currently using any form of container orchestration?" question is excluded from the questionnaire.

- Automated answers based on tags present on the assessed application or archetype

Automated answers are selected based on the tags existing on the application or archetype. For example, if an application or archetype has the Runtime/Quarkus tag, the Quarkus answer is automatically selected, and if an application or archetype has the Runtime/Spring Boot tag, the Spring Boot answer is automatically selected.

- Automatic tagging of applications based on answers

During the assessment, tags are automatically applied to the application or archetype based on the answer if this answer is selected. Note that the tags are transitive. Therefore, the tags are removed if the assessment is discarded. Each tag is defined by the following elements:

- category: Category of the target tag (String).

- tag: Definition for the target tag (String).

For example, if the selected answer is Quarkus, the Runtime/Quarkus tag is applied to the assessed application or archetype. If the selected answer is Spring Boot, the Runtime/Spring Boot tag is applied to the assessed application or archetype.
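The three tag-driven behaviors described in this section can be sketched in Python as follows. This is a minimal illustration, not the MTA implementation: the class and attribute names (`Question`, `Answer`, `include_for`, `exclude_for`, `auto_answer_for`, `apply_tags`) are hypothetical, tags are written as plain "Category/Name" strings, and the matching rules (exclusion when all listed tags are present, inclusion when at least one listed tag is present) are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Answer:
    text: str
    # Hypothetical: tags that cause this answer to be preselected.
    auto_answer_for: set[str] = field(default_factory=set)
    # Hypothetical: tags applied to the application when this answer is selected.
    apply_tags: set[str] = field(default_factory=set)


@dataclass
class Question:
    text: str
    include_for: set[str] = field(default_factory=set)
    exclude_for: set[str] = field(default_factory=set)
    answers: list[Answer] = field(default_factory=list)


def is_included(question: Question, tags: set[str]) -> bool:
    """Decide whether a conditional question appears in the questionnaire."""
    # Assumption: a question is excluded only when all exclusion tags are present.
    if question.exclude_for and question.exclude_for <= tags:
        return False
    # Assumption: a question with inclusion tags needs at least one of them.
    if question.include_for and not (question.include_for & tags):
        return False
    return True


def preselected_answers(question: Question, tags: set[str]) -> list[str]:
    """Return the answers that would be selected automatically from tags."""
    return [a.text for a in question.answers if a.auto_answer_for & tags]


def tags_from_answer(answer: Answer) -> set[str]:
    """Return the tags to apply when the given answer is selected."""
    return set(answer.apply_tags)
```

For example, with the tags Language/Java and Runtime/Quarkus, a question restricted to Language/Java is included and its Quarkus answer is preselected.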

7.2.2.1. The YAML template for the custom questionnaire

You can use the following YAML template to build your custom questionnaire. You can download this template by clicking Download YAML template on the Assessment questionnaires page.

Example 7.2. The YAML template for the custom questionnaire

7.2.2.2. The custom questionnaire fields

Every custom questionnaire field marked as required is mandatory and must be completed. Otherwise, the YAML syntax will not validate on upload. Each subsection of the field defines a new structure or object in YAML, for example:

| Questionnaire field | Description |
|---|---|
| name | The name of the questionnaire. This field must be unique for the entire MTA instance. |
|  | A short description of the questionnaire. |
|  | The definition of a threshold for each risk category of the application or archetype that is considered to be affected by that risk level. The threshold values can be the following: The higher risk level always takes precedence. For example, if the |
| risk_messages | Messages to be displayed in reports for each risk category. The risk_messages map is defined by the following fields: |
|  | A list of sections that the questionnaire must include. |

7.3. Managing assessment questionnaires

By using the MTA user interface, you can perform the following actions on assessment questionnaires:

- Display the questionnaire. You can also display the answer choices and their associated risk weight.

- Export the questionnaire to the desired location on your system.

- Import the questionnaire from your system.

Warning: The name of the imported questionnaire must be unique. If the name, which is defined in the YAML syntax (name: <name of questionnaire>), is duplicated, the import fails with the following error message: UNIQUE constraint failed: Questionnaire.Name.

- Delete an assessment questionnaire.

Warning: When you delete the questionnaire, its answers for all applications that use it in all archetypes are also deleted.

Important: You cannot delete the Legacy Pathfinder default questionnaire.
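Because a duplicate name makes the import fail, it can help to check the name before uploading. The following Python sketch is one possible pre-check and is not part of MTA. It assumes that the questionnaire name is a top-level `name:` key written on a single unindented line, and that names are compared as exact strings. The function names and the `existing_names` list (which you would compile yourself from the Assessment questionnaires page) are hypothetical.

```python
import re
from typing import Optional


def questionnaire_name(yaml_text: str) -> Optional[str]:
    """Extract the top-level questionnaire name from YAML text.

    Simplification: only an unindented, single-line "name:" key is recognized.
    """
    match = re.search(r"^name:[ \t]*(.+?)[ \t]*$", yaml_text, flags=re.MULTILINE)
    if match is None:
        return None
    # Drop optional surrounding quotes.
    return match.group(1).strip("\"'")


def is_name_available(yaml_text: str, existing_names: list[str]) -> bool:
    """Return True if the questionnaire has a name that is not already in use."""
    name = questionnaire_name(yaml_text)
    # Assumption: exact, case-sensitive comparison.
    return name is not None and name not in existing_names
```

A full YAML parser would be more robust than the regular expression used here; the sketch avoids one only to stay within the Python standard library.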

Procedure

Depending on your scenario, perform one of the following actions:

Display the assessment questionnaire:

- In the Administration view, select Assessment questionnaires.

- Click the Options menu.

- Select View for the questionnaire you want to display.

- Optional: Click the arrow to the left from the question to display the answer choices and their risk weight.

Export the assessment questionnaire:

- In the Administration view, select Assessment questionnaires.

- Select the desired questionnaire.

- Click the Options menu.

- Select Export.

- Select the location of the download.

- Click Save.

Import the assessment questionnaire:

- In the Administration view, select Assessment questionnaires.

- Click Import questionnaire.

- Click Upload.

- Navigate to the location of your questionnaire.

- Click Open.

- Import the desired questionnaire by clicking Import.

Delete the assessment questionnaire:

- In the Administration view, select Assessment questionnaires.

- Select the questionnaire you want to delete.

- Click the Options menu.

- Select Delete.

- Confirm the deletion by entering the name of the questionnaire.

7.4. Assessing an application

You can estimate the risks and costs involved in preparing applications for containerization by performing application assessment. You can assess an application and display the currently saved assessments by using the Assessment module.

The Migration Toolkit for Applications (MTA) assesses applications according to a set of questions relevant to the application, such as dependencies.

To assess the application, you can use the default Legacy Pathfinder MTA questionnaire or import your custom questionnaires.

You can assess only one application at a time.

Prerequisites

- You are logged in to an MTA server.

Procedure

- In the MTA user interface, select the Migration view.

- Click Application inventory in the left menu bar. A list of the available applications appears in the main pane.

- Select the application you want to assess.

- Click the Options menu at the right end of the row and select Assess from the drop-down menu.

- From the list of available questionnaires, click Take for the desired questionnaire.

- Select Stakeholders and Stakeholder groups from the lists to track who contributed to the assessment for future reference.

Note: You can also add Stakeholder Groups or Stakeholders in the Controls pane of the Migration view. For more information, see Seeding an instance.

- Click Next.

- Answer each Application assessment question and click Next.

- Click Save to review the assessment and proceed with the steps in Reviewing an application.

If you see false positives in an application that is not fully resolvable, this is not entirely unexpected.

The reason is that MTA cannot discover the class that is being called. Therefore, MTA cannot determine whether it is a valid match.

When this happens, MTA defaults to exposing more information rather than less.

In this situation, the following solutions are suggested:

- Ensure that the Maven settings can get all the dependencies.

- Ensure the application can be fully compiled.

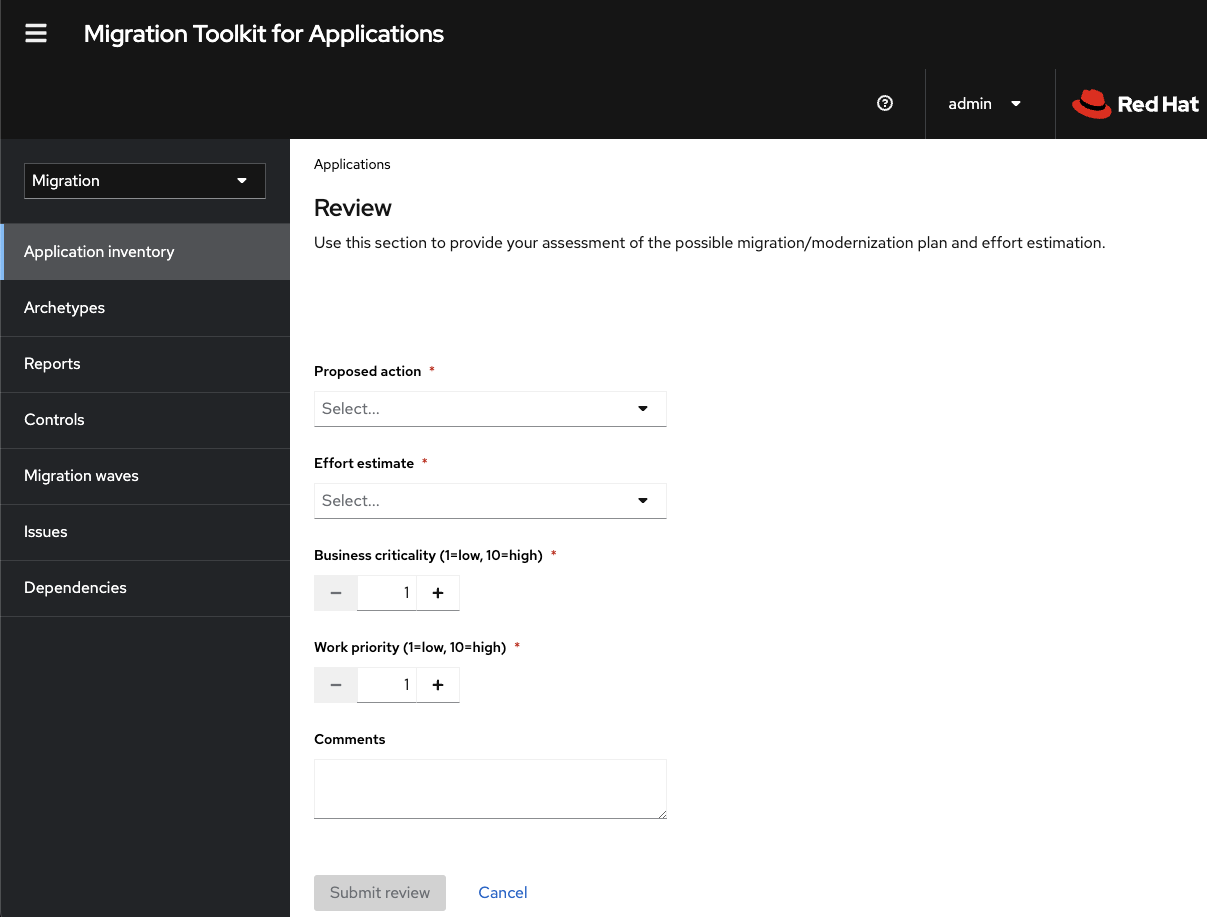

7.5. Reviewing an application

You can use the Migration Toolkit for Applications (MTA) user interface to determine the migration strategy and work priority for each application.

You can review only one application at a time.

Procedure

- In the Migration view, click Application inventory.

- Select the application you want to review.

Review the application by performing either of the following actions:

- Click Save and Review while assessing the application. For more information, see Assessing an application.

- Click the Options menu at the right end of the row and select Review from the drop-down list. The application Review parameters appear in the main pane.

- Click Proposed action and select the action.

- Click Effort estimate and set the level of effort required to perform the assessment with the selected questionnaire.

- In the Business criticality field, enter how critical the application is to the business.

- In the Work priority field, enter the application’s priority.

- Optional: Enter the assessment questionnaire comments in the Comments field.

Click Submit review.

The fields from Review are now populated on the Application details page.

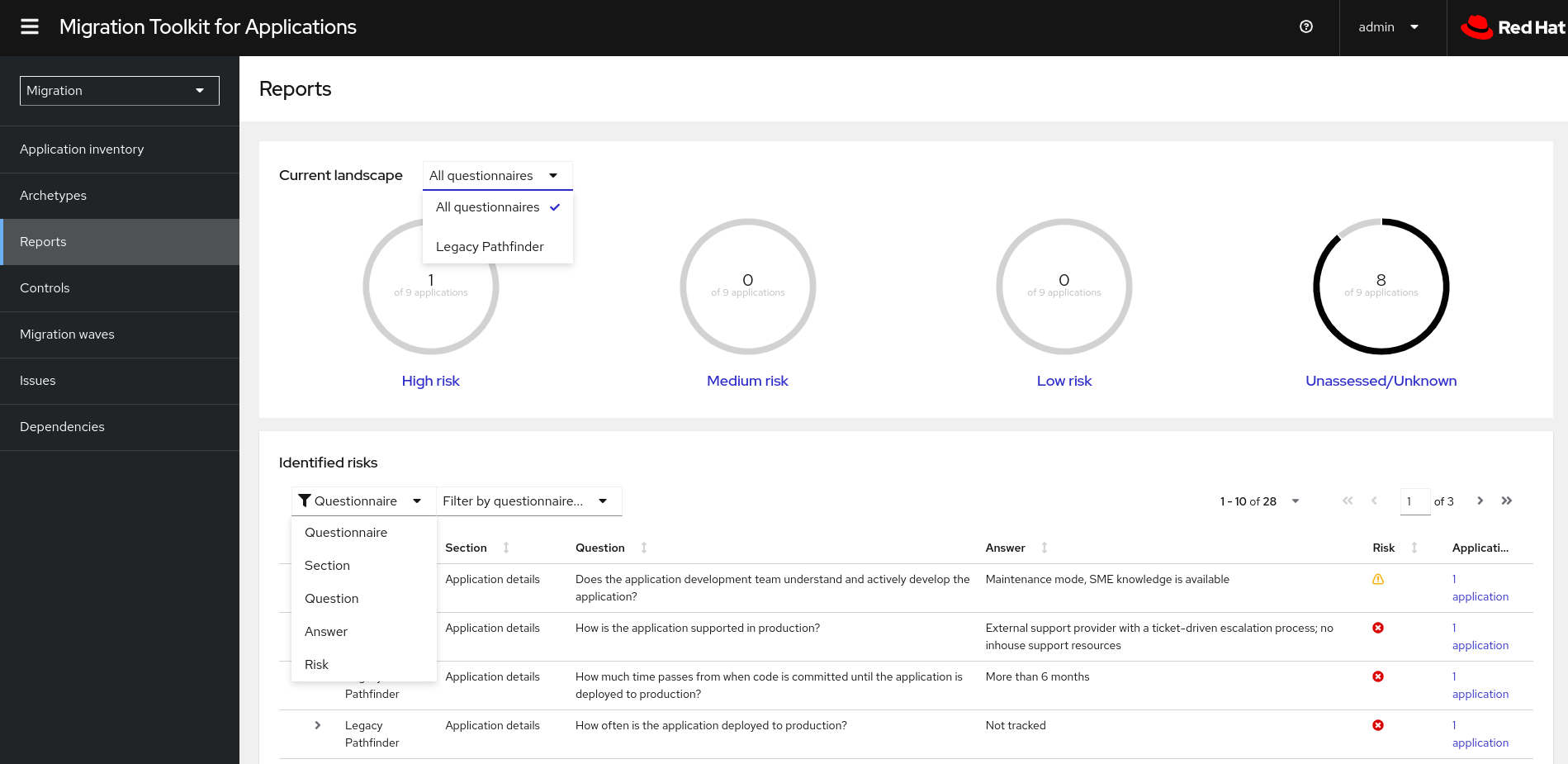

7.6. Reviewing an assessment report

An MTA assessment report displays an aggregated assessment of the data obtained from multiple questionnaires for multiple applications.

Procedure

In the Migration view, click Reports. The aggregated assessment report for all applications is displayed.

Depending on your scenario, perform one of the following actions:

Display a report on the data from a particular questionnaire:

- Select the required questionnaire from a drop-down list of all questionnaires in the Current landscape pane of the report. By default, all questionnaires are selected.

- In the Identified risks pane of the report, sort the displayed list by application name, level of risk, questionnaire, questionnaire section, question, and answer.

Display a report for a specific application:

- Click the link in the Applications column in the Identified risks pane of the report. The Application inventory page opens. The applications included in the link are displayed as a list.

Click the required application. The Assessment side pane opens.

- To see the assessed risk level for the application, open the Details tab.

- To see the details of the assessment, open the Reviews tab.

7.7. Tagging an application

You can attach various tags to the application that you are analyzing. You can use tags to classify applications and instantly identify application information, for example, an application type, data center location, and technologies used within the application. You can also use tagging to associate archetypes to applications for automatic assessment. For more information about archetypes, see Working with archetypes.

Tagging can be done automatically during the analysis or manually at any time.

Not all tags can be assigned automatically. For example, an analysis can only tag the application based on its technologies. If you want to tag the application also with the location of the data center where it is deployed, you need to tag the application manually.

7.7.1. Creating application tags

You can create custom tags for applications that MTA assesses or analyzes.

Procedure

- In the Migration view, click Controls.

- Click the Tags tab.

- Click Create tag.

- In the Name field in the opened dialogue, enter a unique name for the tag.

- Click the Tag category field and select the tag category to associate with the tag.

- Click Create.

Optional: Edit the created tag or tag category:

Edit the tag:

- In the list of tag categories under the Tags tab, open the list of tags in the desired category.

- Select Edit from the drop-down menu and edit the tag name in the Name field.

- Click the Tag category field and select the tag category to associate with the tag.

- Click Save.

Edit the tag category:

- Under the Tags tab, select a defined tag category and click Edit.

- Edit the tag category’s name in the Name field.

- Edit the category’s Rank value.

- Click the Color field and select a color for the tag category.

- Click Save.

7.7.2. Manually tagging an application

You can tag an application manually, either before or after you run an application analysis.

Procedure

- In the Migration view, click Application inventory.

- In the row of the required application, click Edit. The Update application window opens.

- Select the desired tags from the Select a tag(s) drop-down list.

- Click Save.

7.7.3. Automatic tagging

MTA automatically spawns language discovery and technology discovery tasks when adding an application to the Application Inventory. When the language discovery task is running, the technology discovery and analysis tasks wait until the language discovery task is finished. These tasks automatically add tags to the application. MTA can automatically add tags to the application based on the application analysis. Automatic tagging is especially useful when dealing with large portfolios of applications.

Automatic tagging of applications based on application analysis is enabled by default. You can disable automatic tagging during application analysis by deselecting the Enable automated tagging checkbox in the Advanced section of the Analysis configuration wizard.

To tag an application automatically, make sure that the Enable automated tagging checkbox is selected before you run an application analysis.

7.7.4. Displaying application tags

You can display the tags attached to a particular application.

You can display the tags that were attached automatically only after you have run an application analysis.

Procedure

- In the Migration view, click Application inventory.

- Click the name of the required application. A side pane opens.

- Click the Tags tab. The tags attached to the application are displayed.

7.8. Working with archetypes

An archetype is a group of applications with common characteristics. You can use archetypes to assess multiple applications at once.

Application archetypes are defined by criteria tags and the application taxonomy. Each archetype defines how the assessment module assesses the application according to the characteristics defined in that archetype. If the tags of an application match the criteria tags of an archetype, the application is associated with the archetype.

Creation of an archetype is defined by a series of tags, stakeholders, and stakeholder groups. The tags include the following types:

- Criteria tags are tags that the archetype requires to include an application as a member.

Note: If the archetype criteria tags match an application only partially, this application cannot be a member of the archetype. For example, if the application a only has tag a, but the archetype a criteria tags include tags a AND b, the application a will not be a member of the archetype a.

- Archetype tags are tags that are applied to the archetype entity.

All applications associated with the archetype inherit the assessment and review from the archetype groups to which these applications belong. This is the default setting. You can override inheritance for the application by completing an individual assessment and review.
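The membership rule for criteria tags amounts to a subset check: every criteria tag of the archetype must be present on the application. The following Python sketch illustrates the rule only. The function names are hypothetical, and treating an archetype without criteria tags as matching nothing is an assumption based on criteria tags being mandatory.

```python
def is_member(app_tags: set[str], criteria_tags: set[str]) -> bool:
    """Return True if the application carries every criteria tag of the archetype."""
    # Assumption: an archetype without criteria tags matches no application.
    return bool(criteria_tags) and criteria_tags <= app_tags


def matching_archetypes(app_tags: set[str], archetypes: dict[str, set[str]]) -> list[str]:
    """Return the names of all archetypes that the application is a member of."""
    return sorted(
        name for name, criteria in archetypes.items() if is_member(app_tags, criteria)
    )
```

With this rule, an application that has only tag a is not a member of an archetype whose criteria tags are a and b, while an application with tags a, b, and c is.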

7.8.1. Creating an archetype

When you create an archetype, an application in the inventory is automatically associated with that archetype if this application has the tags that match the criteria tags of the archetype.

Procedure

- Open the MTA web console.

- In the left menu, click Archetypes.

- Click Create new archetype.

In the form that opens, enter the following information for the new archetype:

- Name: A name of the new archetype (mandatory).

- Description: A description of the new archetype (optional).

- Criteria Tags: Tags that associate the assessed applications with the archetype (mandatory). If you update the criteria tags, MTA recalculates which applications the archetype is associated with.

- Archetype Tags: Tags that the archetype assesses in the application (mandatory).

- Stakeholder(s): Specific stakeholders involved in the application development and migration (optional).

- Stakeholders Group(s): Groups of stakeholders involved in the application development and migration (optional).

- Click Create.

7.8.2. Assessing an archetype

An archetype is considered assessed when all required questionnaires have been answered.

If an application is associated with several archetypes, this application is considered assessed when all associated archetypes have been assessed.
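The two rules above can be expressed as a short Python sketch. It is an illustration under stated assumptions, not MTA code: the function names are hypothetical, questionnaires are identified by name, and an archetype with no required questionnaires is treated as not assessed. The sketch covers only the status inherited from archetypes, not an individual assessment of the application.

```python
def archetype_is_assessed(required: set[str], answered: set[str]) -> bool:
    """An archetype is assessed when every required questionnaire is answered."""
    # Assumption: with no required questionnaires, nothing has been assessed.
    return bool(required) and required <= answered


def application_is_assessed(archetypes: dict[str, tuple[set[str], set[str]]]) -> bool:
    """An application is assessed when all of its associated archetypes are.

    `archetypes` maps an archetype name to a pair of
    (required questionnaires, answered questionnaires).
    """
    return bool(archetypes) and all(
        archetype_is_assessed(required, answered)
        for required, answered in archetypes.values()
    )
```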

Prerequisites

- You are logged in to an MTA server.

Procedure

- Open the MTA web console.

- Select the Migration view and click Archetype.

- Click the Options menu and select Assess from the drop-down menu.

- From the list of available questionnaires, click Take to select the desired questionnaire.

- In the Assessment menu, answer the required questions.

- Click Save.

7.8.3. Reviewing an archetype

An archetype is considered reviewed when it has been reviewed once even if multiple questionnaires have been marked as required.

If an application is associated with several archetypes, this application is considered reviewed when all associated archetypes have been reviewed.

Prerequisites

- You are logged in to an MTA server.

Procedure

- Open the MTA web console.

- Select the Migration view and click Archetype.

- Click the Options menu and select Review from the drop-down menu.

- From the list of available questionnaires, click Take to select the desired assessment questionnaire.

- In the Assessment menu, answer the required questions.

- Select Save and Review. You will automatically be redirected to the Review tab.

Enter the following information:

- Proposed Action: Proposed action required to complete the migration or modernization of the archetype.

- Effort estimate: The level of effort required to perform the modernization or migration of the selected archetype.

- Business criticality: The level of criticality of the application to the business.

- Work Priority: The archetype’s priority.

- Click Submit review.

7.8.4. Deleting an archetype

Deleting an archetype deletes any associated assessment and review. All associated applications move to the Unassessed and Unreviewed state.

7.9. Analyzing an application

You can use the Migration Toolkit for Applications (MTA) user interface to configure and run an application analysis. The analysis determines which specific lines in the application must be modified before the application can be migrated or modernized.

7.9.1. Configuring and running an application analysis

You can analyze more than one application at a time against more than one transformation target in the same analysis.

Procedure

- In the Migration view, click Application inventory.

- Select an application that you want to analyze.

- Review the credentials assigned to the application.

- Click Analyze.

Select the Analysis mode from the list:

- Binary

- Source code

- Source code and dependencies

- Upload a local binary. This option only appears if you are analyzing a single application. If you choose this option, you are prompted to Upload a local binary. Either drag a file into the area provided or click Upload and select the file to upload.

- Click Next.

Select one or more target options for the analysis:

- Application server migration to either of the following platforms:

  - JBoss EAP 7

  - JBoss EAP 8

- Containerization

- Quarkus

- OracleJDK to OpenJDK

- OpenJDK. Use this option to upgrade to one of the following JDK versions:

  - OpenJDK 11

  - OpenJDK 17

  - OpenJDK 21

- Linux. Use this option to ensure that there are no Microsoft Windows paths hard-coded into your applications.

- Jakarta EE 9. Use this option to migrate from Java EE 8.

- Spring Boot on Red Hat Runtimes

- Open Liberty

- Camel. Use this option to migrate from Apache Camel 2 to Apache Camel 3 or from Apache Camel 3 to Apache Camel 4.

- Azure App Service

- Click Next.

Select one of the following Scope options to better focus the analysis:

- Application and internal dependencies only.

- Application and all dependencies, including known Open Source libraries.

- Select the list of packages to be analyzed manually. If you choose this option, type the file name and click Add.

- Exclude packages. If you choose this option, type the name of the package and click Add.

- Click Next.

In Advanced, you can attach additional custom rules to the analysis by selecting the Manual or Repository mode:

- In the Manual mode, click Add Rules. Drag the relevant files or select the files from their directory and click Add.

- In the Repository mode, you can add rule files from a Git or Subversion repository.

Important: Attaching custom rules is optional if you have already attached a migration target to the analysis. If you have not attached any migration target, you must attach rules.

Optional: Set any of the following options:

- Target

- Source(s)

- Excluded rules tags. Rules with these tags are not processed. Add or delete as needed.

- Enable automated tagging. Select the checkbox to automatically attach tags to the application. This checkbox is selected by default.

Note: Automatically attached tags are displayed only after you run the analysis.

You can attach tags to the application manually instead of enabling automated tagging or in addition to it.

Note: Analysis engines use standard rules for a comprehensive set of migration targets. However, if the target is not included, is a customized framework, or the application is written in a language that is not supported (for example, Node.js, Python), you can add custom rules by skipping the target selection in the Set Target tab and uploading custom rule files in the Custom Rules tab. Only custom rule files that are uploaded manually are validated.

- Click Next.

- In Review, verify the analysis parameters.

Click Run.

The analysis status is Scheduled as MTA downloads the image for the container to execute. When the image is downloaded, the status changes to In-progress.

Analysis takes minutes to hours to run depending on the size of the application and the capacity and resources of the cluster.

MTA relies on Kubernetes scheduling capabilities to determine how many analyzer instances are created based on cluster capacity. If several applications are selected for analysis, by default, only one analyzer can be provisioned at a time. With more cluster capacity, more analysis processes can be executed in parallel.

Optional: To track the status of your active analysis task, open the Task Manager drawer by clicking the notifications button.

Alternatively, hover over the application name to display the pop-over window.

- When analysis is complete, to see its results, open the application drawer by clicking on the application name.

After creating an application instance on the Application Inventory page, the language discovery task starts, automatically pre-selecting the target filter option. However, you can choose a different language that you prefer.

7.9.2. Reviewing analysis details

You can display the activity log of the analysis. The activity log contains analysis details, such as the analysis steps.

Procedure

- In the Migration view, click Application inventory.

- Click on the application row to open the application drawer.

- Click the Reports tab.

- Click View analysis details for the activity log of the analysis.

Optional: For issues and dependencies found during the analysis, click the Details tab in the application drawer and click Issues or Dependencies.

Alternatively, open the Issues or Dependencies page in the Migration view.

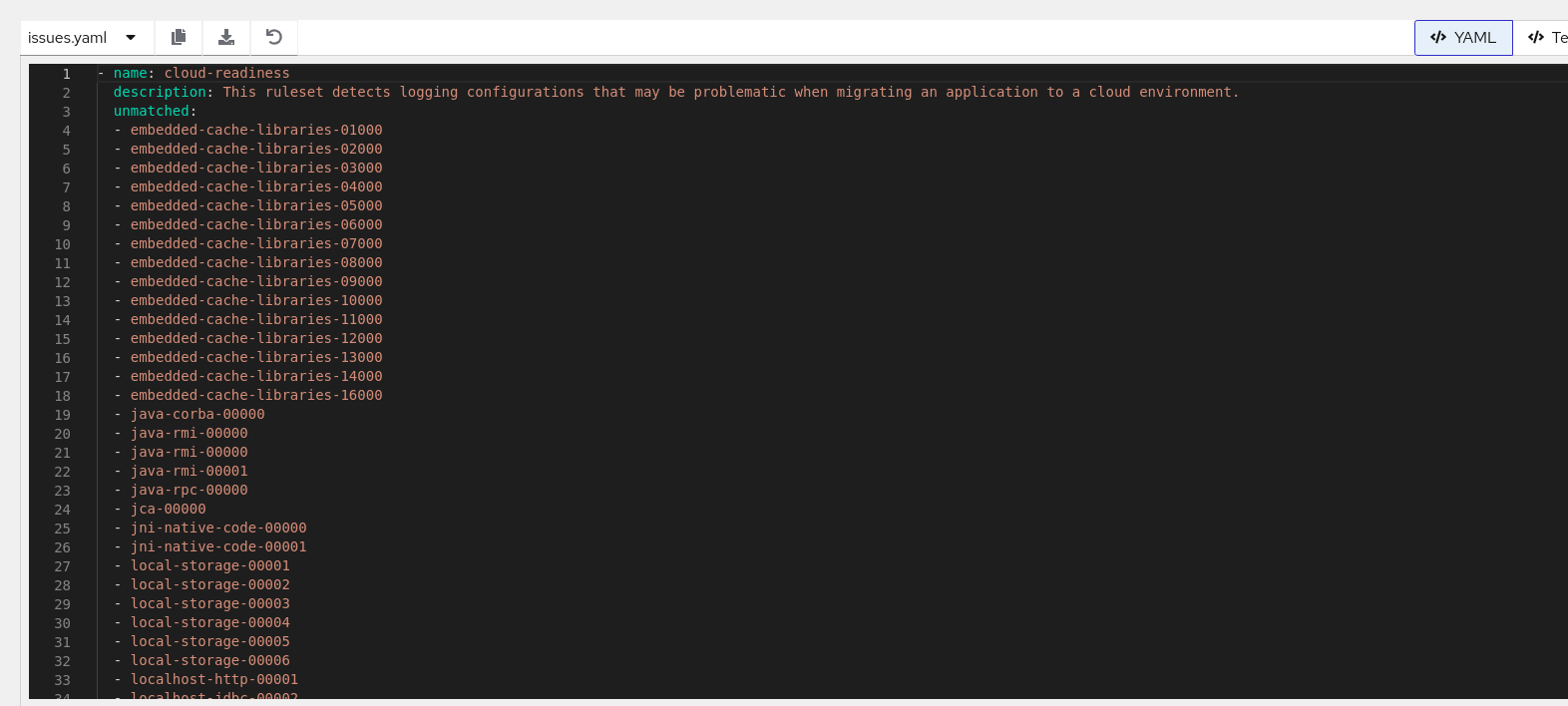

7.9.3. Accessing unmatched rules

To access unmatched rules, you must run the analysis with enhanced logging enabled.

- Navigate to Advanced under Application analysis.

- Select Options.

- Check Enhanced advanced analysis details.

When you run an analysis:

- Navigate to Reports in the side drawer.

- Click View analysis details, which opens the YAML/JSON format log view.

- Select the issues.yaml file. For each ruleset, there is an unmatched section that lists the IDs of the rules that did not match.

7.9.4. Downloading an analysis report

An MTA analysis report contains a number of sections, including a listing of the technologies used by the application, the dependencies of the application, and the lines of code that must be changed to successfully migrate or modernize the application.

For more information about the contents of an MTA analysis report, see Reviewing the reports.

For your convenience, you can download analysis reports. Note that by default this option is disabled.

Procedure

- In Administration view, click General.

- Toggle the Allow reports to be downloaded after running an analysis. switch.

- Go to the Migration view and click Application inventory.

- Click on the application row to open the application drawer.

- Click the Reports tab.

Click either the HTML or YAML link:

- By clicking the HTML link, you download the compressed analysis-report-app-<application_name>.tar file. Extracting this file creates a folder with the same name as the application.

- By clicking the YAML link, you download the uncompressed analysis-report-app-<application_name>.yaml file.
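If you prefer to unpack the downloaded HTML report with a script, the following Python sketch shows one way to do it with the standard `tarfile` module. The function name `extract_report` and the paths in the usage note are hypothetical. The sketch assumes only that the download is a tar archive, optionally compressed, as described above.

```python
import tarfile
from pathlib import Path


def extract_report(archive: Path, dest: Path) -> list[str]:
    """Extract a downloaded analysis report archive into dest.

    Returns the member names contained in the archive.
    """
    dest.mkdir(parents=True, exist_ok=True)
    # "r:*" lets tarfile detect the compression (if any) automatically.
    with tarfile.open(archive, mode="r:*") as tar:
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions support extraction filters that
            # reject unsafe members such as absolute paths.
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    return names
```

For example, `extract_report(Path("analysis-report-app-myapp.tar"), Path("reports"))` would unpack a report for a hypothetical application named myapp into the reports directory.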

7.10. Controlling MTA tasks by using Task Manager

Task Manager provides precise information about the Migration Toolkit for Applications (MTA) tasks queued for execution. Task Manager handles the following types of tasks:

- Application analysis

- Language discovery

- Technology discovery

You can display task-related information in either of the following ways:

- To display active tasks, open the Task Manager drawer by clicking the notifications button.

- To display all tasks, open the Task Manager page in the Migration view.

There are no multi-user access restrictions on resources. For example, an analyzer task created by a user can be canceled by any other user.

7.10.1. Reviewing a task log

To find details and logs of a particular Migration Toolkit for Applications (MTA) task, you can use the Task Manager page.

Procedure

- In the Migration view, click Task Manager.

- Click the Options menu for the selected task.

Click Task details.

Alternatively, click on the task status in the Status column.

7.10.2. Controlling the order of task execution

You can use Task Manager to preempt a Migration Toolkit for Applications (MTA) task you have scheduled for execution.

You can enable Preemption on any scheduled task (not in the status of Running, Succeeded, or Failed). However, only lower-priority tasks are candidates to be preempted. When a higher-priority task is blocked by lower-priority tasks and has Preemption enabled, the low-priority tasks might be rescheduled so that the blocked higher-priority task might run. Therefore, it is only useful to enable Preemption on higher-priority tasks, for example, application analysis.
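The preemption rules above can be modeled roughly as follows. This Python sketch is a simplified illustration and does not reflect how the MTA task scheduler is implemented. The `Task` class, the numeric `priority` field (higher number means higher priority), and the function names are all hypothetical.

```python
from dataclasses import dataclass

# Statuses for which Preemption cannot be enabled, as listed above.
NOT_PREEMPTABLE_STATUSES = {"Running", "Succeeded", "Failed"}


@dataclass
class Task:
    name: str
    priority: int  # Assumption: a higher number means a higher priority.
    status: str
    preemption: bool = False


def can_enable_preemption(task: Task) -> bool:
    """Preemption can be enabled only on tasks that have not started or finished."""
    return task.status not in NOT_PREEMPTABLE_STATUSES


def preemption_candidates(blocked: Task, blocking: list[Task]) -> list[Task]:
    """Return the blocking tasks that might be rescheduled for the blocked task.

    Only lower-priority tasks are candidates, and only when the blocked
    task has Preemption enabled.
    """
    if not blocked.preemption:
        return []
    return [task for task in blocking if task.priority < blocked.priority]
```

The sketch also shows why enabling Preemption on a low-priority task has little effect: no blocking task has a lower priority, so the candidate list stays empty.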

Procedure

- In the Migration view, click Task Manager.

- Click the Options menu for the selected task.

Depending on your scenario, complete one of the following steps:

- To enable Preemption for the task, select Enable preemption.

- To disable Preemption for the task with enabled Preemption, select Disable preemption.

Revised on 2025-02-24 15:54:52 UTC