
Chapter 10. Using AMQ Streams with MirrorMaker 2.0

MirrorMaker 2.0 is used to replicate data between two or more active Kafka clusters, within or across data centers.

Data replication across clusters supports scenarios that require:

- Recovery of data in the event of a system failure

- Aggregation of data for analysis

- Restriction of data access to a specific cluster

- Provision of data at a specific location to improve latency

MirrorMaker 2.0 has features not supported by the previous version of MirrorMaker. However, you can configure MirrorMaker 2.0 to be used in legacy mode.


10.1. MirrorMaker 2.0 data replication

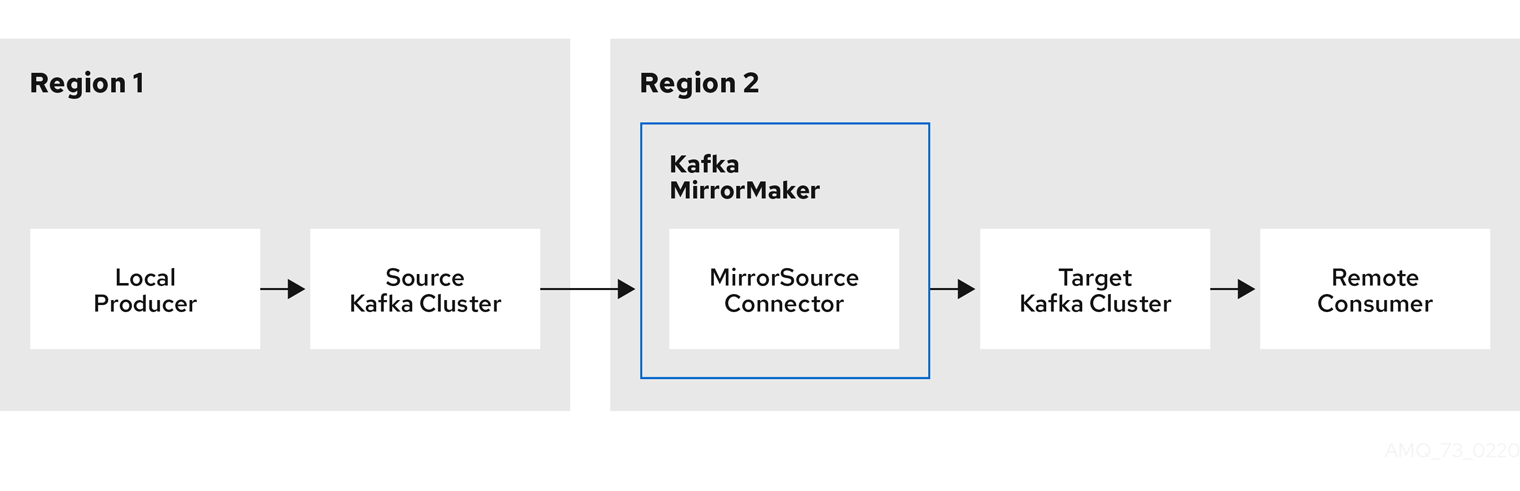

MirrorMaker 2.0 consumes messages from a source Kafka cluster and writes them to a target Kafka cluster.

MirrorMaker 2.0 uses:

- Source cluster configuration to consume data from the source cluster

- Target cluster configuration to output data to the target cluster

MirrorMaker 2.0 is based on the Kafka Connect framework, with connectors managing the transfer of data between clusters. A MirrorMaker 2.0 MirrorSourceConnector replicates topics from a source cluster to a target cluster.

The process of mirroring data from one cluster to another cluster is asynchronous. The recommended pattern is for messages to be produced locally alongside the source Kafka cluster, then consumed remotely close to the target Kafka cluster.

MirrorMaker 2.0 can be used with more than one source cluster.

Figure 10.1. Replication across two clusters

By default, a check for new topics in the source cluster is made every 10 minutes. You can change the frequency by adding refresh.topics.interval.seconds to the source connector configuration of the KafkaMirrorMaker2 resource. However, increasing the frequency of the operation might affect overall performance.
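In the properties-file format used later in this chapter, the setting might look like the following fragment. The value of 300 seconds is an arbitrary example chosen for illustration, not a recommendation.

```properties
# Illustrative value only: check the source cluster for new topics
# every 5 minutes instead of the default 10 minutes (600 seconds).
refresh.topics.interval.seconds=300
```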

10.2. Cluster configuration

You can use MirrorMaker 2.0 in active/passive or active/active cluster configurations.

- In an active/active configuration, both clusters are active and provide the same data simultaneously, which is useful if you want to make the same data available locally in different geographical locations.

- In an active/passive configuration, the data from an active cluster is replicated in a passive cluster, which remains on standby, for example, for data recovery in the event of system failure.

The expectation is that producers and consumers connect to active clusters only.

A MirrorMaker 2.0 cluster is required at each target destination.

10.2.1. Bidirectional replication (active/active)

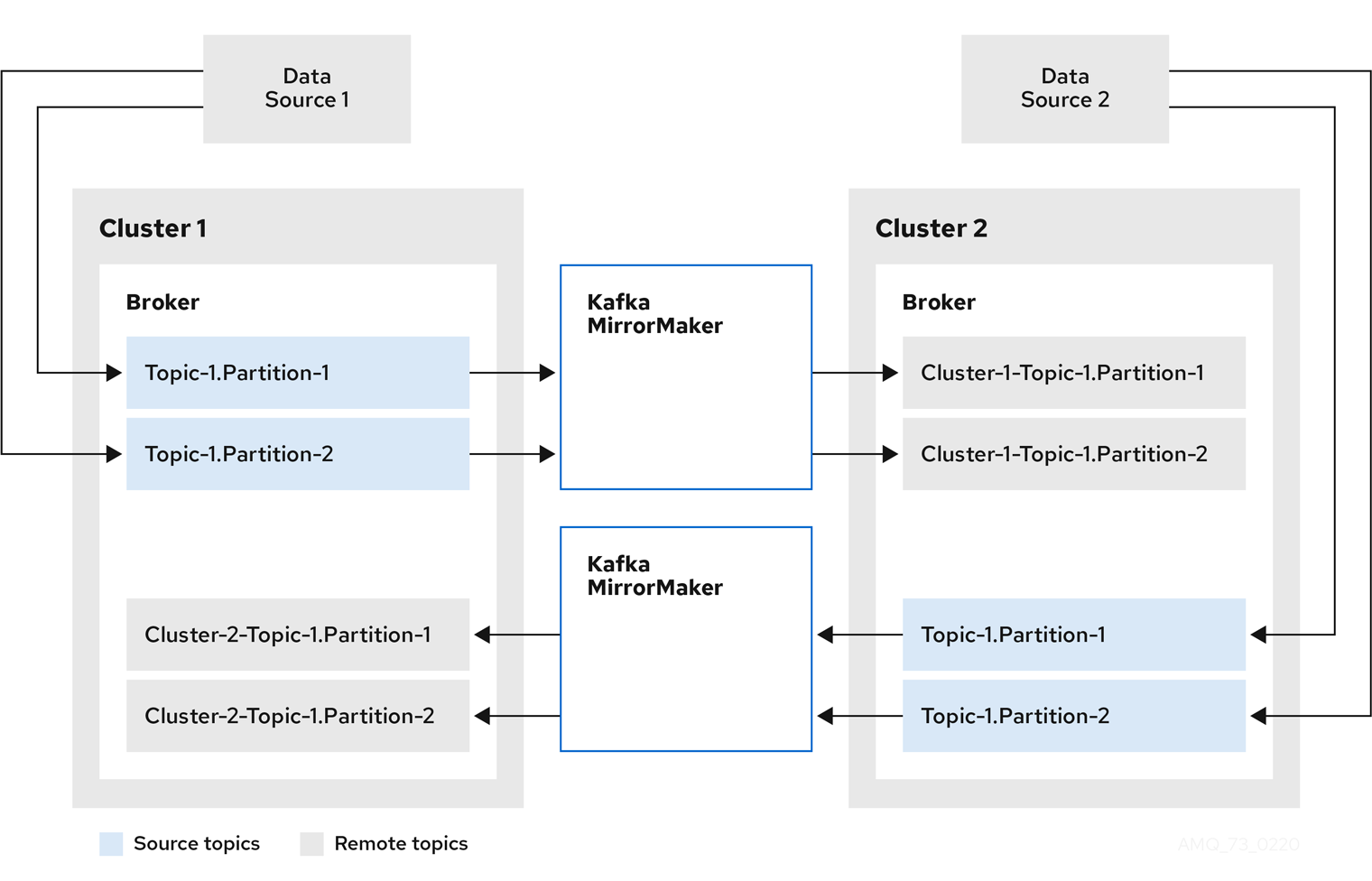

The MirrorMaker 2.0 architecture supports bidirectional replication in an active/active cluster configuration.

Each cluster replicates the data of the other cluster using the concept of source and remote topics. As the same topics are stored in each cluster, remote topics are automatically renamed by MirrorMaker 2.0 to represent the source cluster. The name of the originating cluster is prepended to the name of the topic.

Figure 10.2. Topic renaming

By flagging the originating cluster, topics are not replicated back to that cluster.
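As an illustration of the renaming, the fragment below sketches a one-way flow in the properties-file format used later in this chapter. The cluster aliases and the topic name are assumptions chosen for the example, and connection settings are omitted for brevity.

```properties
# Assumed cluster aliases and topic name, for illustration only.
clusters=cluster-1,cluster-2
cluster-1->cluster-2.enabled=true
cluster-1->cluster-2.topics=topic-1

# With the default replication policy and the default "." separator,
# topic-1 from cluster-1 is expected to appear on cluster-2
# as the remote topic cluster-1.topic-1.
```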

The concept of replication through remote topics is useful when configuring an architecture that requires data aggregation. Consumers can subscribe to source and remote topics within the same cluster, without the need for a separate aggregation cluster.

10.2.2. Unidirectional replication (active/passive)

The MirrorMaker 2.0 architecture supports unidirectional replication in an active/passive cluster configuration.

You can use an active/passive cluster configuration to make backups or migrate data to another cluster. In this situation, you might not want automatic renaming of remote topics.

You can override automatic renaming by adding IdentityReplicationPolicy to the source connector configuration of the KafkaMirrorMaker2 resource. With this configuration applied, topics retain their original names.

10.2.3. Topic configuration synchronization

Topic configuration is automatically synchronized between source and target clusters. By synchronizing configuration properties, the need for rebalancing is reduced.

10.2.4. Data integrity

MirrorMaker 2.0 monitors source topics and propagates any configuration changes to remote topics, checking for and creating missing partitions. Only MirrorMaker 2.0 can write to remote topics.

10.2.5. Offset tracking

MirrorMaker 2.0 tracks offsets for consumer groups using internal topics.

- The offset sync topic maps the source and target offsets for replicated topic partitions from record metadata

- The checkpoint topic maps the last committed offset in the source and target cluster for replicated topic partitions in each consumer group

Offsets for the checkpoint topic are tracked at predetermined intervals through configuration. Both topics enable replication to be fully restored from the correct offset position on failover.

MirrorMaker 2.0 uses its MirrorCheckpointConnector to emit checkpoints for offset tracking.

10.2.6. Synchronizing consumer group offsets

The __consumer_offsets topic stores information on committed offsets, for each consumer group. Offset synchronization periodically transfers the consumer offsets for the consumer groups of a source cluster into the consumer offsets topic of a target cluster.

Offset synchronization is particularly useful in an active/passive configuration. If the active cluster goes down, consumer applications can switch to the passive (standby) cluster and pick up from the last transferred offset position.

To use topic offset synchronization:

- Enable the synchronization by adding sync.group.offsets.enabled to the checkpoint connector configuration of the KafkaMirrorMaker2 resource, and setting the property to true. Synchronization is disabled by default.

- Add the IdentityReplicationPolicy to the source and checkpoint connector configuration so that topics in the target cluster retain their original names.

For topic offset synchronization to work, consumer groups in the target cluster cannot use the same IDs as groups in the source cluster.

If enabled, offsets from the source cluster are synchronized periodically. You can change the frequency by adding sync.group.offsets.interval.seconds and emit.checkpoints.interval.seconds to the checkpoint connector configuration. The properties specify, in seconds, how often the consumer group offsets are synchronized and how often checkpoints are emitted for offset tracking. The default for both properties is 60 seconds. Checks for new consumer groups are performed every 10 minutes by default; you can change this frequency using the refresh.groups.interval.seconds property.

Because the synchronization is time-based, any switchover by consumers to a passive cluster will likely result in some duplication of messages.

10.2.7. Connectivity checks

A heartbeat internal topic checks connectivity between clusters.

The heartbeat topic is replicated from the source cluster.

Target clusters use the topic to check:

- The connector managing connectivity between clusters is running

- The source cluster is available

MirrorMaker 2.0 uses its MirrorHeartbeatConnector to emit heartbeats that perform these checks.

10.3. ACL rules synchronization

If AclAuthorizer is being used, ACL rules that manage access to brokers also apply to remote topics. Users that can read a source topic can read its remote equivalent.

OAuth 2.0 authorization does not support access to remote topics in this way.

10.4. Synchronizing data between Kafka clusters using MirrorMaker 2.0

Use MirrorMaker 2.0 to synchronize data between Kafka clusters through configuration.

The previous version of MirrorMaker continues to be supported, by running MirrorMaker 2.0 in legacy mode.

The configuration must specify:

- Each Kafka cluster

- Connection information for each cluster, including TLS authentication

- The replication flow and direction

  - Cluster to cluster

  - Topic to topic

- Replication rules

- Committed offset tracking intervals

This procedure describes how to implement MirrorMaker 2.0 by creating the configuration in a properties file, then passing the properties when using the MirrorMaker script file to set up the connections.

MirrorMaker 2.0 uses Kafka Connect to make the connections to transfer data between clusters. Kafka provides MirrorMaker sink and source connectors for data replication. If you wish to use the connectors instead of the MirrorMaker script, the connectors must be configured in the Kafka Connect cluster. For more information, refer to the Apache Kafka documentation.

Before you begin

A sample configuration properties file is provided in ./config/connect-mirror-maker.properties.

Prerequisites

- You need AMQ Streams installed on the hosts of each Kafka cluster node you are replicating.

Procedure

Open the sample properties file in a text editor, or create a new one, and edit the file to include connection information and the replication flows for each Kafka cluster.

The example configuration connects two clusters, cluster-1 and cluster-2, bidirectionally. Cluster names are configurable through the clusters property. The numbered callouts describe the settings in the example:

- (1) Each Kafka cluster is identified with its alias.

- (2) Connection information for cluster-1, using the bootstrap address and port 443. Both clusters use port 443 to connect to Kafka using OpenShift Routes.

- (3) The ssl.* properties define the TLS configuration for cluster-1.

- (4) Connection information for cluster-2.

- (5) The ssl.* properties define the TLS configuration for cluster-2.

- (6) Replication flow enabled from the cluster-1 cluster to the cluster-2 cluster.

- (7) Replicates all topics from the cluster-1 cluster to the cluster-2 cluster.

- (8) Replication flow enabled from the cluster-2 cluster to the cluster-1 cluster.

- (9) Replicates specific topics from the cluster-2 cluster to the cluster-1 cluster.

- (10) Defines the separator used for the renaming of remote topics.

- (11) When enabled, ACLs are applied to synchronized topics. The default is true.

- (12) The period between checks for new topics to synchronize.

- (13) The period between checks for new consumer groups to synchronize.
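The settings described by the callouts might be laid out in a properties file along the following lines. This is a minimal sketch under stated assumptions rather than a definitive configuration: every value in angle brackets is a placeholder, and the topic names, separator, and interval values are illustrative choices.

```properties
# Sketch only: addresses, file locations, passwords, and topic names are placeholders.

# (1) Aliases that identify each Kafka cluster
clusters=cluster-1,cluster-2

# (2) Connection information for cluster-1 (placeholder address, port 443)
cluster-1.bootstrap.servers=<cluster-1-bootstrap-address>:443
# (3) TLS configuration for cluster-1
cluster-1.security.protocol=SSL
cluster-1.ssl.truststore.location=<path-to-cluster-1-truststore>
cluster-1.ssl.truststore.password=<cluster-1-truststore-password>
cluster-1.ssl.keystore.location=<path-to-cluster-1-keystore>
cluster-1.ssl.keystore.password=<cluster-1-keystore-password>

# (4) Connection information for cluster-2
cluster-2.bootstrap.servers=<cluster-2-bootstrap-address>:443
# (5) TLS configuration for cluster-2
cluster-2.security.protocol=SSL
cluster-2.ssl.truststore.location=<path-to-cluster-2-truststore>
cluster-2.ssl.truststore.password=<cluster-2-truststore-password>
cluster-2.ssl.keystore.location=<path-to-cluster-2-keystore>
cluster-2.ssl.keystore.password=<cluster-2-keystore-password>

# (6) Enable the replication flow from cluster-1 to cluster-2
cluster-1->cluster-2.enabled=true
# (7) Replicate all topics in that direction
cluster-1->cluster-2.topics=.*

# (8) Enable the replication flow from cluster-2 to cluster-1
cluster-2->cluster-1.enabled=true
# (9) Replicate only specific topics in that direction (example names)
cluster-2->cluster-1.topics=topic-1, topic-2

# (10) Separator used when renaming remote topics ("." is the Kafka default)
replication.policy.separator=.
# (11) Whether ACLs are applied to synchronized topics
sync.topic.acls.enabled=false
# (12) Period, in seconds, between checks for new topics
refresh.topics.interval.seconds=60
# (13) Period, in seconds, between checks for new consumer groups
refresh.groups.interval.seconds=60
```

Comments sit on their own lines because a properties file treats anything after the equals sign as part of the value.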

OPTION: If required, add a policy that overrides the automatic renaming of remote topics. Instead of prepending the name with the name of the source cluster, the topic retains its original name.

This optional setting is used for active/passive backups and data migration.

replication.policy.class=io.strimzi.kafka.connect.mirror.IdentityReplicationPolicy

OPTION: If you want to synchronize consumer group offsets, add configuration to enable and manage the synchronization:

refresh.groups.interval.seconds=60

sync.group.offsets.enabled=true

sync.group.offsets.interval.seconds=60

emit.checkpoints.interval.seconds=60

- sync.group.offsets.enabled: Optional setting to synchronize consumer group offsets, which is useful for recovery in an active/passive configuration. Synchronization is not enabled by default.

- sync.group.offsets.interval.seconds: If the synchronization of consumer group offsets is enabled, you can adjust the frequency of the synchronization.

- emit.checkpoints.interval.seconds: Adjusts the frequency of checks for offset tracking. If you change the frequency of offset synchronization, you might also need to adjust the frequency of these checks.

Start ZooKeeper and Kafka in the target clusters:

su - kafka

/opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

/opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Start MirrorMaker with the cluster connection configuration and replication policies you defined in your properties file:

/opt/kafka/bin/connect-mirror-maker.sh /config/connect-mirror-maker.properties

MirrorMaker sets up connections between the clusters.

For each target cluster, verify that the topics are being replicated:

/opt/kafka/bin/kafka-topics.sh --bootstrap-server <BrokerAddress> --list


10.5. Using MirrorMaker 2.0 in legacy mode

This procedure describes how to configure MirrorMaker 2.0 to use it in legacy mode. Legacy mode supports the previous version of MirrorMaker.

The MirrorMaker script /opt/kafka/bin/kafka-mirror-maker.sh can run MirrorMaker 2.0 in legacy mode.

Prerequisites

- You need the properties files you currently use with the legacy version of MirrorMaker:

  - /opt/kafka/config/consumer.properties

  - /opt/kafka/config/producer.properties

Procedure

Edit the MirrorMaker consumer.properties and producer.properties files to turn off MirrorMaker 2.0 features.
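A minimal sketch of settings that switch off MirrorMaker 2.0 features, assuming the standard MirrorMaker 2.0 feature switches are the ones intended here. Which of the two files each setting belongs in, and whether further settings are needed, is not stated in this section, so treat the fragment as illustrative only.

```properties
# Assumption: these standard MirrorMaker 2.0 switches are the features to turn off.
# Stop periodic checks for new topics and new consumer groups.
refresh.topics.enabled=false
refresh.groups.enabled=false
# Stop emitting to the internal checkpoint and heartbeat topics.
emit.checkpoints.enabled=false
emit.heartbeats.enabled=false
# Stop synchronizing topic configuration and ACLs to the target cluster.
sync.topic.configs.enabled=false
sync.topic.acls.enabled=false
```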

Save the changes and restart MirrorMaker with the properties files you used with the previous version of MirrorMaker:

su - kafka

/opt/kafka/bin/kafka-mirror-maker.sh \
  --consumer.config /opt/kafka/config/consumer.properties \
  --producer.config /opt/kafka/config/producer.properties \
  --num.streams=2

The consumer properties provide the configuration for the source cluster and the producer properties provide the target cluster configuration.

MirrorMaker sets up connections between the clusters.

Start ZooKeeper and Kafka in the target cluster:

su - kafka

/opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

su - kafka

/opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

For the target cluster, verify that the topics are being replicated:

/opt/kafka/bin/kafka-topics.sh --bootstrap-server <BrokerAddress> --list
