Chapter 1. Introduction to Ceph File System

This chapter explains what the Ceph File System (CephFS) is and how it works.

1.1. About the Ceph File System

The Ceph File System (CephFS) is a POSIX-compatible file system that provides file access to a Ceph Storage Cluster.

CephFS requires at least one Metadata Server (MDS) daemon (ceph-mds) to run. The MDS daemon manages metadata related to files stored on the Ceph File System and also coordinates access to the shared Ceph Storage Cluster.

CephFS uses POSIX semantics wherever possible. For example, in contrast to many other common network file systems like NFS, CephFS maintains strong cache coherency across clients. The goal is for processes using the file system to behave the same when they are on different hosts as when they are on the same host. However, in some cases, CephFS diverges from strict POSIX semantics. For details, see Section 1.4, “Differences from POSIX Compliance in the Ceph File System”.

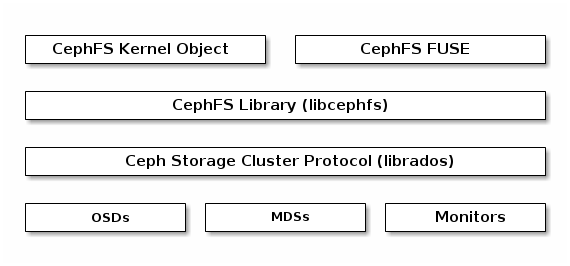

The Ceph File System Components

This picture shows various layers of the Ceph File System.

The bottom layer represents the underlying core cluster that includes:

- OSDs (ceph-osd) where the Ceph File System data and metadata are stored

- Metadata Servers (ceph-mds) that manage Ceph File System metadata

- Monitors (ceph-mon) that manage the master copy of the cluster map

The Ceph Storage Cluster Protocol layer represents the Ceph native librados library for interacting with the core cluster.

The CephFS library layer includes the CephFS libcephfs library that works on top of librados and represents the Ceph File System.

The upper layer represents the two types of clients that can access the Ceph File System.

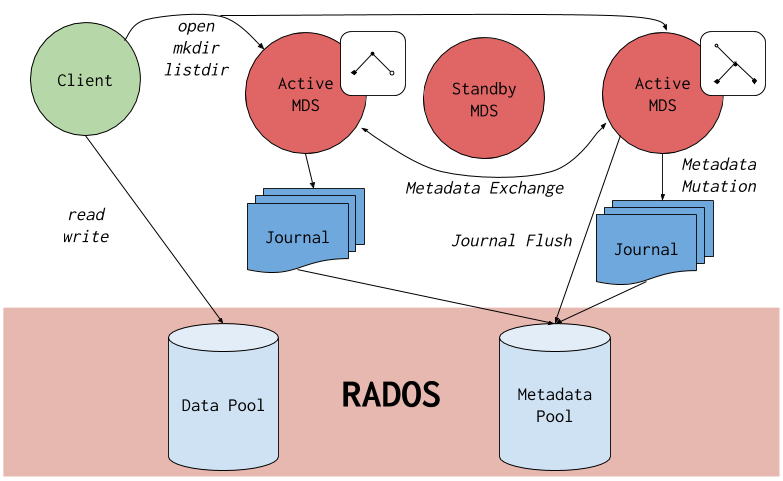

This picture shows in more detail how the Ceph File System components interact with each other.

The Ceph File System has the following primary components:

- Clients represent the entities performing I/O operations on behalf of applications using CephFS (ceph-fuse for FUSE clients and kcephfs for kernel clients). Clients send metadata requests to an active MDS. In return, the client learns of file metadata and can begin safely caching both metadata and file data.

- Metadata Servers serve metadata to clients, cache hot metadata to reduce requests to the backing metadata pool store, manage client caches to maintain cache coherency, replicate hot metadata between active MDS daemons, and coalesce metadata mutations to a compact journal with regular flushes to the backing metadata pool.
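The role of the MDS cache described above (serving hot metadata from memory so that fewer requests reach the backing metadata pool) can be illustrated with a toy model. The following is a minimal sketch, not Ceph code: the class name `ToyMetadataCache`, the dictionary standing in for the metadata pool, and the least-recently-used eviction policy are all assumptions made for illustration only.

```python
from collections import OrderedDict


class ToyMetadataCache:
    """Toy LRU cache in front of a backing store (illustration, not Ceph code)."""

    def __init__(self, backing, capacity):
        self.backing = backing        # dict standing in for the metadata pool
        self.capacity = capacity      # maximum number of cached entries
        self.cache = OrderedDict()    # path -> metadata, least recently used first
        self.backing_reads = 0        # number of reads that reached the pool

    def lookup(self, path):
        if path in self.cache:
            self.cache.move_to_end(path)    # mark as most recently used
            return self.cache[path]
        self.backing_reads += 1             # cache miss: read from the pool
        meta = self.backing[path]
        self.cache[path] = meta
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used entry
        return meta


pool = {"/a": {"size": 1}, "/b": {"size": 2}, "/c": {"size": 3}}
mds = ToyMetadataCache(pool, capacity=2)
for path in ["/a", "/a", "/b", "/a", "/c", "/a"]:
    mds.lookup(path)
print(mds.backing_reads)  # 3 pool reads for 6 lookups
```

In this run the frequently requested path stays cached, so only half of the lookups reach the simulated pool.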

1.2. Main CephFS Features

The Ceph File System introduces the following features and enhancements:

- Scalability

- The Ceph File System is highly scalable due to horizontal scaling of metadata servers and direct client reads and writes with individual OSD nodes.

- Shared File System

- The Ceph File System is a shared file system so multiple clients can work on the same file system at once.

- High Availability

- The Ceph File System provides a cluster of Ceph Metadata Servers (MDS). One is active and others are in standby mode. If the active MDS terminates unexpectedly, one of the standby MDS becomes active. As a result, client mounts continue working through a server failure. This behavior makes the Ceph File System highly available. In addition, you can configure multiple active metadata servers. See Section 2.6, “Configuring Multiple Active Metadata Server Daemons” for details.

- Configurable File and Directory Layouts

- The Ceph File System allows users to configure file and directory layouts to use multiple pools, pool namespaces, and file striping modes across objects. See Section 4.4, “Working with File and Directory Layouts” for details.

- POSIX Access Control Lists (ACL)

- The Ceph File System supports POSIX Access Control Lists (ACL). ACLs are enabled by default for Ceph File Systems mounted as kernel clients with kernel version kernel-3.10.0-327.18.2.el7. To use ACLs with Ceph File Systems mounted as FUSE clients, you must enable them. See Section 1.3, “CephFS Limitations” for details.

- Client Quotas

- The Ceph File System FUSE client supports setting quotas on any directory in a system. The quota can restrict the number of bytes or the number of files stored beneath that point in the directory hierarchy. Client quotas are enabled by default.
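As an illustration of the client quotas feature listed above, the sketch below builds and applies a directory quota. It relies on the upstream Ceph convention, not stated in this chapter, that quotas are set through the virtual extended attributes `ceph.quota.max_bytes` and `ceph.quota.max_files`. The helper names `quota_xattrs` and `apply_quota` are hypothetical, and `apply_quota` only works on Linux against a directory of a mounted Ceph File System.

```python
import os


def quota_xattrs(max_bytes=None, max_files=None):
    """Build the extended attributes that describe a CephFS directory quota."""
    attrs = {}
    if max_bytes is not None:
        attrs["ceph.quota.max_bytes"] = str(max_bytes).encode()
    if max_files is not None:
        attrs["ceph.quota.max_files"] = str(max_files).encode()
    return attrs


def apply_quota(directory, max_bytes=None, max_files=None):
    """Set the quota on a directory of a mounted CephFS (Linux only)."""
    for name, value in quota_xattrs(max_bytes, max_files).items():
        os.setxattr(directory, name, value)


# Only builds the attributes; nothing is written to any file system here.
print(quota_xattrs(max_bytes=100 * 1024**2, max_files=10000))
```

Calling `apply_quota` with a directory path on a CephFS mount point of your own would then restrict the bytes and files stored beneath that directory.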

1.3. CephFS Limitations

- Access Control Lists (ACL) support in FUSE clients

To use the ACL feature with the Ceph File System mounted as a FUSE client, you must enable it. To do so, add the following options to the Ceph configuration file:

    [client]
    client_acl_type=posix_acl

Then restart the Ceph client.

- Snapshots

Creating snapshots is not enabled by default because this feature is still experimental and it can cause the MDS or client nodes to terminate unexpectedly.

If you understand the risks and still wish to enable snapshots, use:

    ceph mds set allow_new_snaps true --yes-i-really-mean-it

- Multiple Ceph File Systems

By default, creation of multiple Ceph File Systems in one cluster is disabled. An attempt to create an additional Ceph File System fails with the following error:

    Error EINVAL: Creation of multiple filesystems is disabled.

Creating multiple Ceph File Systems in one cluster is not fully supported yet and can cause the MDS or client nodes to terminate unexpectedly.

If you understand the risks and still wish to enable multiple Ceph file systems, use:

    ceph fs flag set enable_multiple true --yes-i-really-mean-it
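Before restarting a FUSE client, it can be useful to confirm that the ACL option described in this section is actually present in the configuration file. The following is a minimal sketch that parses configuration text with Python's standard `configparser` module. The function name `fuse_acl_enabled` is hypothetical, and treating spaces and hyphens in option names as equivalent to underscores is an assumption. A real Ceph configuration file may contain constructs that `configparser` does not accept.

```python
import configparser


def fuse_acl_enabled(conf_text):
    """Return True if the [client] section sets client_acl_type to posix_acl."""
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read_string(conf_text)
    if not parser.has_section("client"):
        return False
    for key, value in parser.items("client"):
        # Assumption: spaces and hyphens in option names act like underscores.
        if key.replace(" ", "_").replace("-", "_") == "client_acl_type":
            return value.strip() == "posix_acl"
    return False


sample = """
[client]
client_acl_type=posix_acl
"""
print(fuse_acl_enabled(sample))  # True
```

To check a real file, read its contents and pass them to the function in place of `sample`.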

1.4. Differences from POSIX Compliance in the Ceph File System

This section lists situations where the Ceph File System (CephFS) diverges from the strict POSIX semantics.

- If a client’s attempt to write a file fails, the write operations are not necessarily atomic. That is, the client might call the write() system call on a file opened with the O_SYNC flag with an 8 MB buffer and then terminate unexpectedly, in which case the write operation can be only partially applied. Almost all file systems, even local file systems, have this behavior.

- When write operations occur simultaneously, a write operation that exceeds object boundaries is not necessarily atomic. For example, writer A writes "aa|aa" and writer B writes "bb|bb" simultaneously (where "|" is the object boundary) and "aa|bb" is written rather than the proper "aa|aa" or "bb|bb".

- POSIX includes the telldir() and seekdir() system calls that allow you to obtain the current directory offset and seek back to it. Because CephFS can fragment directories at any time, it is difficult to return a stable integer offset for a directory. As such, calling the seekdir() system call with a non-zero offset might often work but is not guaranteed to do so. Calling seekdir() with offset 0 always works. This is equivalent to the rewinddir() system call.

- Sparse files propagate incorrectly to the st_blocks field of the stat() system call. Because CephFS does not explicitly track which parts of a file are allocated or written, the st_blocks field is always populated by the file size divided by the block size. This behavior causes utilities, such as du, to overestimate consumed space.

- When the mmap() system call maps a file into memory on multiple hosts, write operations are not coherently propagated to the caches of other hosts. That is, if a page is cached on host A and then updated on host B, the page on host A is not coherently invalidated.

- CephFS clients present a hidden .snap directory that is used to access, create, delete, and rename snapshots. Although this directory is excluded from the readdir() system call, any process that tries to create a file or directory with the same name receives an error. You can change the name of this hidden directory at mount time with the -o snapdirname=.<new_name> option or by using the client_snapdir configuration option.
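The "aa|bb" outcome described in the list above, for simultaneous writes that cross an object boundary, can be reproduced with a small simulation. This is a minimal sketch under stated assumptions, not a model of the actual CephFS write path: it assumes each logical write is split into one write per object, that each per-object write is atomic on its own, and that the last writer to an object wins. The function name `apply_writes` is hypothetical.

```python
def apply_writes(schedule, num_objects=2):
    """Apply per-object writes in order; each one is atomic on its own object."""
    objects = [""] * num_objects
    for obj_index, data in schedule:
        objects[obj_index] = data  # the last writer to an object wins
    return "|".join(objects)


# Each logical write spans two objects, so it becomes two per-object writes.
a_first, a_second = (0, "aa"), (1, "aa")  # writer A
b_first, b_second = (0, "bb"), (1, "bb")  # writer B

# Writer B runs entirely after writer A: the result is consistent.
print(apply_writes([a_first, a_second, b_first, b_second]))  # bb|bb

# The per-object writes interleave: each object holds a different writer's data.
print(apply_writes([b_first, a_first, a_second, b_second]))  # aa|bb
```

Applications that need such a write to appear atomic have to coordinate between writers themselves, for example with file locking.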

1.5. Additional Resources

- If you want to use NFS Ganesha as an interface to the Ceph File System with Red Hat OpenStack Platform, see the CephFS with NFS-Ganesha deployment section in the CephFS via NFS Back End Guide for the Shared File System Service for instructions on how to deploy such an environment.