Chapter 1. Cross-site replication

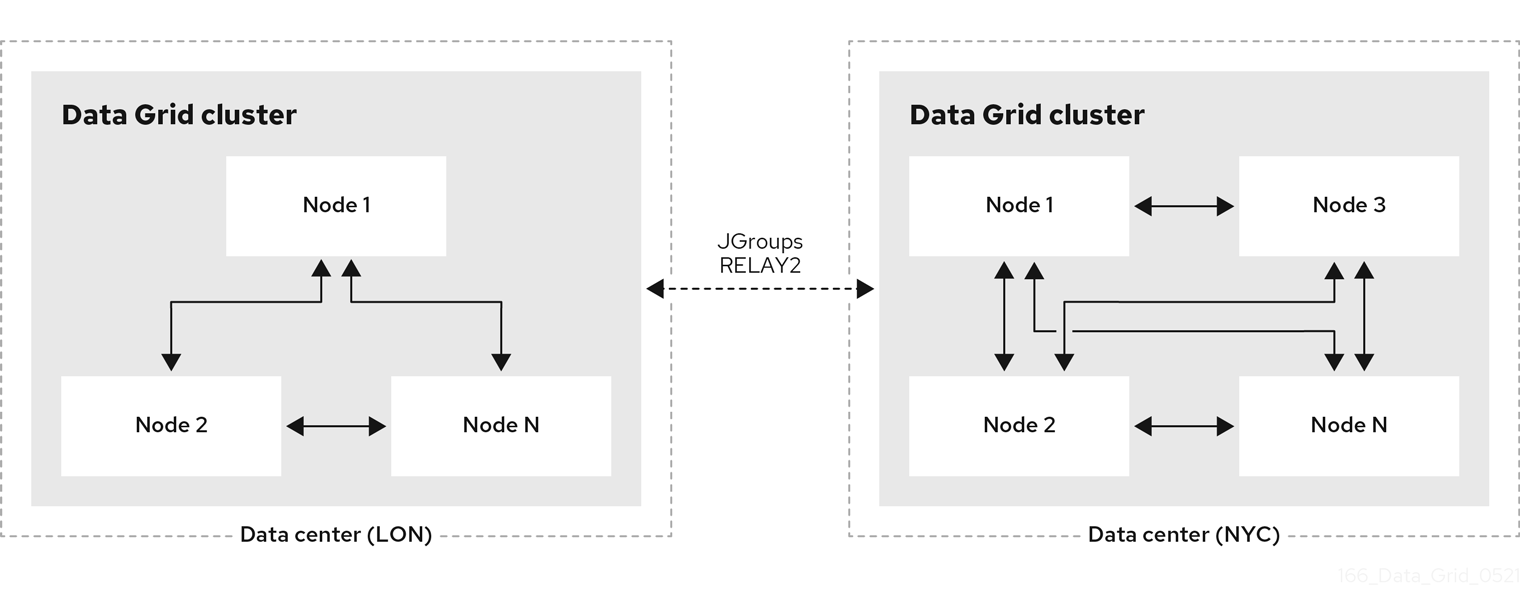

This section explains Data Grid cross-site replication capabilities, including details about relay nodes, state transfer, and client connections for remote caches.

1.1. Cross-site replication

Data Grid can back up data between clusters running in geographically dispersed data centers and across different cloud providers. Cross-site replication provides Data Grid with a global cluster view and:

- Guarantees service continuity in the event of outages or disasters.

- Presents client applications with a single point of access to data in globally distributed caches.

Figure 1.1. Cross-site replication

1.2. Relay nodes

Relay nodes are the nodes in Data Grid clusters that are responsible for sending requests to, and receiving requests from, backup locations.

If a node is not a relay node, it must forward backup requests to a local relay node. Only relay nodes can send requests to backup locations.

For optimal performance, you should configure all nodes as relay nodes. This increases the speed of backup requests because each node in the cluster can back up to remote sites directly without having to forward backup requests to local relay nodes.

Diagrams in this document illustrate Data Grid clusters with one relay node because this is the default for the JGroups RELAY2 protocol. Likewise, a single relay node is easier to illustrate because each relay node in a cluster communicates with each relay node in the remote cluster.

JGroups configuration refers to relay nodes as "site master" nodes. Data Grid uses the term "relay node" instead because it is more descriptive and more intuitive for users.

1.3. Data Grid cache backups

Data Grid caches include a backups configuration that lets you name remote sites as backup locations.

For example, the following diagram shows three caches, "customers", "eu-orders", and "us-orders":

- In LON, "customers" names NYC as a backup location.

- In NYC, "customers" names LON as a backup location.

- "eu-orders" and "us-orders" do not have backups and are local to the respective cluster.

1.4. Backup strategies

Data Grid replicates data between clusters at the same time that writes to caches occur. For example, if a client writes "k1" to LON, Data Grid backs up "k1" to NYC at the same time.

To back up data to a different cluster, Data Grid can use either a synchronous or asynchronous strategy.

Synchronous strategy

When Data Grid replicates data to backup locations, it writes to the cache on the local cluster and the cache on the remote cluster concurrently. With the synchronous strategy, Data Grid waits for both write operations to complete before returning.

You can control how Data Grid handles writes to the cache on the local cluster if backup operations fail. Data Grid can do the following:

- Ignore the failed backup and silently continue the write to the local cluster.

- Log a warning message or throw an exception and continue the write to the local cluster.

- Handle failed backup operations with custom logic.

Synchronous backups also support two-phase commits with caches that participate in optimistic transactions. The first phase of the backup acquires a lock. The second phase commits the modification.

Two-phase commit with cross-site replication has a significant performance impact because it requires two round-trips across the network.

Asynchronous strategy

When Data Grid replicates data to backup locations, it does not wait until the operation completes before writing to the local cache.

Asynchronous backup operations and writes to the local cache are independent of each other. If backup operations fail, write operations to the local cache continue and no exceptions occur. When this happens Data Grid also retries the write operation until the remote cluster disconnects from the cross-site view.

Synchronous vs asynchronous backups

Synchronous backups offer the strongest guarantee of data consistency across sites. If strategy=sync, when cache.put() calls return you know the value is up to date in the local cache and in the backup locations.

The trade-off for this consistency is performance. Synchronous backups have much greater latency in comparison to asynchronous backups.

Asynchronous backups, on the other hand, do not add latency to client requests so they have no performance impact. However, if strategy=async, when cache.put() calls return you cannot be sure that the value in the backup location is the same as in the local cache.
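The difference between the two strategies can be sketched in plain Java. The following is a minimal in-memory model, not the Data Grid API: the class BackupStrategySketch, its two maps, and the putSync() and putAsync() methods are hypothetical names introduced only for illustration, and a background thread stands in for the cross-site request.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory model of a local site and a backup site; not the Data Grid API.
final class BackupStrategySketch {
    final Map<String, String> localCache = new ConcurrentHashMap<>();
    final Map<String, String> remoteCache = new ConcurrentHashMap<>();

    // Starts the backup write on another thread, standing in for the cross-site request.
    private CompletableFuture<Void> backup(String key, String value) {
        return CompletableFuture.runAsync(() -> {
            remoteCache.put(key, value);
        });
    }

    // Models strategy=sync: both writes run concurrently and the call
    // returns only after the local write and the backup write complete.
    void putSync(String key, String value) {
        CompletableFuture<Void> remoteWrite = backup(key, value);
        localCache.put(key, value);
        remoteWrite.join();
    }

    // Models strategy=async: the call returns after the local write.
    // The future is returned only so this demo can observe the backup finishing.
    CompletableFuture<Void> putAsync(String key, String value) {
        CompletableFuture<Void> remoteWrite = backup(key, value);
        localCache.put(key, value);
        return remoteWrite;
    }

    public static void main(String[] args) {
        BackupStrategySketch sites = new BackupStrategySketch();

        sites.putSync("k1", "v1");
        // The backup site is guaranteed to hold k1 here.
        System.out.println(sites.remoteCache.get("k1")); // prints "v1"

        CompletableFuture<Void> pending = sites.putAsync("k2", "v2");
        // At this point the backup site may or may not hold k2 yet.
        pending.join();
        System.out.println(sites.remoteCache.get("k2")); // prints "v2"
    }
}
```

In this model, the only observable difference is the point at which the caller regains control, which mirrors the consistency and latency trade-off described above.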

1.5. Automatic offline parameters for backup locations

Operations to replicate data across clusters are resource intensive, using excessive RAM and CPU. To avoid wasting resources, Data Grid can take backup locations offline when they stop accepting requests for a specific period of time.

Data Grid takes remote sites offline based on the number of failed sequential requests and the time interval since the first failure. Requests fail when the target cluster does not have any nodes in the cross-site view (JGroups bridge) or when a timeout expires before the target cluster acknowledges the request.

Backup timeouts

Backup configurations include timeout values for operations to replicate data between clusters. If operations do not complete before the timeout expires, Data Grid records them as failures.

For example, if the backup configuration for NYC sets a timeout of 10 seconds, operations to replicate data to NYC are recorded as failures if they do not complete within 10 seconds.

Number of failures

You can specify the number of consecutive failures that can occur before backup locations go offline.

For example, with the number of failures set to five, if a cluster attempts to replicate data to NYC and five consecutive operations fail, NYC automatically goes offline.

Time to wait

You can also specify how long to wait before taking sites offline when backup operations fail. If a backup request succeeds before the wait time runs out, Data Grid does not take the site offline.

One or two minutes is generally a suitable time to wait before automatically taking backup locations offline. If the wait period is too short then backup locations go offline too soon. You then need to bring clusters back online and perform state transfer operations to ensure data is in sync between the clusters.

A negative or zero value for the number of failures is equivalent to a value of 1. Data Grid then uses only the minimum time to wait to take backup locations offline after a failure occurs, for example:

<take-offline after-failures="-1" min-wait="10000"/>

If you instead set after-failures="5" and min-wait="15000", NYC automatically goes offline when a cluster attempts to replicate data to NYC, at least five consecutive operations fail, and 15 seconds elapse after the first failed operation.
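The interaction between the number of failures and the time to wait can be sketched as a small state machine. This is a hypothetical model of the rules described in this section, not Data Grid's implementation: the class TakeOfflineSketch and its methods are illustrative names, timestamps are passed in explicitly to keep the example deterministic, and it assumes that a site goes offline once the failure count reaches the configured number.

```java
// Hypothetical model of the take-offline decision; not Data Grid's implementation.
final class TakeOfflineSketch {
    private final int afterFailures;   // consecutive failures required
    private final long minWaitMillis;  // minimum time since the first failure
    private int consecutiveFailures;
    private long firstFailureAt;
    private boolean offline;

    TakeOfflineSketch(int afterFailures, long minWaitMillis) {
        // A zero or negative number of failures is treated as 1, as the text states.
        this.afterFailures = Math.max(1, afterFailures);
        this.minWaitMillis = Math.max(0L, minWaitMillis);
    }

    // A successful backup request resets the failure tracking.
    void recordSuccess() {
        consecutiveFailures = 0;
    }

    // Records a failed backup request observed at the given time.
    void recordFailure(long nowMillis) {
        if (consecutiveFailures == 0) {
            firstFailureAt = nowMillis;
        }
        consecutiveFailures++;
        boolean enoughFailures = consecutiveFailures >= afterFailures;
        boolean waitedLongEnough = nowMillis - firstFailureAt >= minWaitMillis;
        if (enoughFailures && waitedLongEnough) {
            offline = true;
        }
    }

    boolean isOffline() {
        return offline;
    }

    public static void main(String[] args) {
        TakeOfflineSketch nyc = new TakeOfflineSketch(5, 15000);
        for (int i = 0; i < 5; i++) {
            nyc.recordFailure(i * 1000L);    // five failures within 4 seconds
        }
        System.out.println(nyc.isOffline()); // prints "false": min-wait has not elapsed
        nyc.recordFailure(15000L);           // another failure, 15 seconds after the first
        System.out.println(nyc.isOffline()); // prints "true"
    }
}
```

Because a success resets the counter in this model, a backup request that succeeds before the wait time runs out keeps the site online.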

1.6. State transfer

State transfer is an administrative operation that synchronizes data between sites.

For example, LON goes offline and NYC starts handling client requests. When you bring LON back online, the Data Grid cluster in LON does not have the same data as the cluster in NYC.

To ensure the data is consistent between LON and NYC, you can push state from NYC to LON.

- State transfer is bidirectional. For example, you can push state from NYC to LON or from LON to NYC.

- Pushing state to offline sites brings them back online.

State transfer overwrites only data that exists on both sites, the originating site and the receiving site. Data Grid does not delete data.

For example, "k2" exists on LON and NYC. "k2" is removed from NYC while LON is offline. When you bring LON back online, "k2" still exists at that location. If you push state from NYC to LON, the transfer does not affect "k2" on LON.

To ensure contents of the cache are identical after state transfer, remove all data from the cache on the receiving site before pushing state.

Use the clear() method or the clearcache command from the CLI.
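The "k2" example can be reproduced with two ordinary maps. This is a minimal sketch, assuming that a push copies every entry from the sending site to the receiving site and never deletes anything; the class StatePushSketch and the pushState() method are hypothetical and stand in for the administrative operation. The rule about updates made after the push starts is not modeled here.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of pushing state between two sites; not the Data Grid API.
final class StatePushSketch {
    // Copies entries from the sending site to the receiving site.
    // Existing keys are overwritten; keys missing on the sender are left alone.
    static void pushState(Map<String, String> from, Map<String, String> to) {
        to.putAll(from);
    }

    public static void main(String[] args) {
        Map<String, String> nyc = new HashMap<>();
        Map<String, String> lon = new HashMap<>();

        // Before LON goes offline, both sites hold k1 and k2.
        lon.put("k1", "v1");
        lon.put("k2", "v2");
        nyc.putAll(lon);

        // While LON is offline, NYC updates k1 and removes k2.
        nyc.put("k1", "v1-updated");
        nyc.remove("k2");

        pushState(nyc, lon);
        System.out.println(lon.containsKey("k2")); // prints "true": k2 was not deleted

        // Clearing the receiving cache first makes the contents identical.
        lon.clear();
        pushState(nyc, lon);
        System.out.println(lon.equals(nyc));       // prints "true"
    }
}
```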

State transfer does not overwrite updates to data that occur after you initiate the push.

For example, "k1,v1" exists on LON and NYC. LON goes offline so you push state to LON from NYC, which brings LON back online. Before state transfer completes, a client puts "k1,v2" on LON.

In this case the state transfer from NYC does not overwrite "k1,v2" because that modification happened after you initiated the push.

Automatic state transfer

By default, you must manually perform cross-site state transfer operations with the CLI or via JMX or REST.

However, when using the asynchronous backup strategy, Data Grid can automatically perform cross-site state transfer operations.

When a backup location comes back online, and the network connection is stable, Data Grid initiates bidirectional state transfer between backup locations. For example, Data Grid simultaneously transfers state from LON to NYC and NYC to LON.

To avoid temporary network disconnects triggering state transfer operations, there are two conditions that backup locations must meet to go offline. The status of a backup location must be offline and it must not be included in the cross-site view with JGroups RELAY2.

The automatic state transfer is also triggered when a cache starts.

In the scenario where LON is starting up, after a cache starts, it sends a notification to NYC. Following this, NYC starts a unidirectional state transfer to LON.

1.7. Client connections across sites

Clients can write to Data Grid clusters in either an Active/Passive or Active/Active configuration.

Active/Passive

The following diagram illustrates Active/Passive where Data Grid handles client requests from one site only:

In the preceding image:

- Client connects to the Data Grid cluster at LON.

- Client writes "k1" to the cache.

- The relay node at LON, "n1", sends the request to replicate "k1" to the relay node at NYC, "nA".

With Active/Passive, NYC provides data redundancy. If the Data Grid cluster at LON goes offline for any reason, clients can start sending requests to NYC. When you bring LON back online you can synchronize data with NYC and then switch clients back to LON.

Active/Active

The following diagram illustrates Active/Active where Data Grid handles client requests at two sites:

In the preceding image:

- Client A connects to the Data Grid cluster at LON.

- Client A writes "k1" to the cache.

- Client B connects to the Data Grid cluster at NYC.

- Client B writes "k2" to the cache.

- Relay nodes at LON and NYC send requests so that "k1" is replicated to NYC and "k2" is replicated to LON.

With Active/Active, both NYC and LON replicate data to remote caches while handling client requests. If either NYC or LON goes offline, clients can start sending requests to the online site. You can then bring offline sites back online, push state to synchronize data, and switch clients as required.

Backup strategies and client connections

An asynchronous backup strategy (strategy=async) is recommended with Active/Active configurations.

If multiple clients attempt to write to the same entry concurrently, and the backup strategy is synchronous (strategy=sync), then deadlocks occur. However, you can use the synchronous backup strategy with an Active/Passive configuration, or with an Active/Active configuration if both sites access different data sets, because in those cases there is no risk of deadlocks from concurrent writes.

1.7.1. Concurrent writes and conflicting entries

Conflicting entries can occur with Active/Active site configurations if clients write to the same entries at the same time but at different sites.

For example, client A writes to "k1" in LON at the same time that client B writes to "k1" in NYC. In this case, "k1" has a different value in LON than in NYC. After replication occurs, there is no guarantee which value for "k1" exists at which site.

To ensure data consistency, Data Grid uses a vector clock algorithm to detect conflicting entries during backup operations, as in the following illustration:

Vector clocks are timestamp metadata that increment with each write to an entry. In the preceding example, 0,0 represents the initial value for the vector clock on "k1".

A client puts "k1=2" in LON and the vector clock is 1,0, which Data Grid replicates to NYC. A client then puts "k1=3" in NYC and the vector clock updates to 1,1, which Data Grid replicates to LON.

However, if a client puts "k1=5" in LON at the same time that a client puts "k1=8" in NYC, Data Grid detects a conflicting entry because neither vector clock value for "k1" is strictly greater than the other.

When it finds conflicting entries, Data Grid uses the Java compareTo(String anotherString) method to compare site names. To determine which key takes priority, Data Grid selects the site name that is lexicographically less than the other. Keys from a site named AAA take priority over keys from a site named AAB and so on.

Following the same example, to resolve the conflict for "k1", Data Grid uses the value for "k1" that originates from LON. This results in "k1=5" in both LON and NYC after Data Grid resolves the conflict and replicates the value.

Prepend site names with numbers as a simple way to represent the order of priority for resolving conflicting entries; for example, 1LON and 2NYC.
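The detection and resolution steps above can be sketched as follows. This is an illustrative model under stated assumptions, not Data Grid's internal code: the class VectorClockSketch, the Update type, and the clock values 2,1 and 1,2 for the concurrent writes are assumptions that extend the example. Only String.compareTo is taken from the description above.

```java
// Hypothetical model of vector clock comparison and site-name tie-breaking.
final class VectorClockSketch {
    enum Order { BEFORE, AFTER, EQUAL, CONCURRENT }

    // A value written at a site, tagged with the vector clock after the write.
    static final class Update {
        final String site;
        final String value;
        final int[] clock;

        Update(String site, String value, int[] clock) {
            this.site = site;
            this.value = value;
            this.clock = clock;
        }
    }

    // Compares two clocks of equal length, one component per site.
    static Order compare(int[] a, int[] b) {
        boolean less = false;
        boolean greater = false;
        for (int i = 0; i < a.length; i++) {
            if (a[i] < b[i]) less = true;
            if (a[i] > b[i]) greater = true;
        }
        if (less && greater) return Order.CONCURRENT; // neither clock dominates
        if (less) return Order.BEFORE;
        if (greater) return Order.AFTER;
        return Order.EQUAL;
    }

    // Picks the update that both sites should keep.
    static Update resolve(Update local, Update remote) {
        Order order = compare(local.clock, remote.clock);
        if (order == Order.BEFORE) return remote;
        if (order == Order.CONCURRENT) {
            // Conflict: the lexicographically lower site name takes priority.
            return local.site.compareTo(remote.site) <= 0 ? local : remote;
        }
        return local;
    }

    public static void main(String[] args) {
        Update lon = new Update("LON", "5", new int[] {2, 1});
        Update nyc = new Update("NYC", "8", new int[] {1, 2});
        System.out.println(compare(lon.clock, nyc.clock)); // prints "CONCURRENT"
        System.out.println(resolve(nyc, lon).value);       // prints "5"
    }
}
```

Both sites reach the same result regardless of which update each one treats as local, which is what lets the caches converge on "k1=5".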

Backup strategies

Data Grid performs conflict resolution with the asynchronous backup strategy (strategy=async) only.

You should never use the synchronous backup strategy with an Active/Active configuration in which both sites write to the same entries. In this configuration, concurrent writes result in deadlocks and you lose data. However, you can use the synchronous backup strategy with an Active/Active configuration if both sites access different data sets, in which case there is no risk of deadlocks from concurrent writes.

Cross-site merge policies

Data Grid provides an XSiteEntryMergePolicy SPI in addition to cross-site merge policies that configure Data Grid to do the following:

- Always remove conflicting entries.

- Apply write operations when write/remove conflicts occur.

- Remove entries when write/remove conflicts occur.
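The behaviors in this list can be illustrated with a small model. The enum constants, the SiteUpdate type, and the merge() method below are hypothetical names chosen for this sketch and are not the XSiteEntryMergePolicy SPI. The sketch also assumes that a null value represents a remove operation and that conflicts not covered by a policy fall back to site-name ordering.

```java
// Hypothetical model of cross-site merge policies; names are illustrative only.
final class MergePolicySketch {
    enum Policy { SITE_NAME_ORDER, ALWAYS_REMOVE, KEEP_WRITE, KEEP_REMOVE }

    // One side of a conflict. A null value stands for a remove operation.
    static final class SiteUpdate {
        final String site;
        final String value;

        SiteUpdate(String site, String value) {
            this.site = site;
            this.value = value;
        }
    }

    // Returns the value both sites should keep; null means the entry is removed.
    static String merge(Policy policy, SiteUpdate a, SiteUpdate b) {
        boolean writeRemoveConflict = (a.value == null) != (b.value == null);
        switch (policy) {
            case ALWAYS_REMOVE:
                return null;
            case KEEP_WRITE:
                if (writeRemoveConflict) return a.value != null ? a.value : b.value;
                break;
            case KEEP_REMOVE:
                if (writeRemoveConflict) return null;
                break;
            default:
                break;
        }
        // Assumed fallback: the lexicographically lower site name takes priority.
        return a.site.compareTo(b.site) <= 0 ? a.value : b.value;
    }

    public static void main(String[] args) {
        SiteUpdate lonRemove = new SiteUpdate("LON", null);
        SiteUpdate nycWrite = new SiteUpdate("NYC", "8");
        System.out.println(merge(Policy.KEEP_WRITE, lonRemove, nycWrite));  // prints "8"
        System.out.println(merge(Policy.KEEP_REMOVE, lonRemove, nycWrite)); // prints "null"
    }
}
```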

1.8. Expiration with cross-site replication

Expiration removes cache entries based on time. Data Grid provides two ways to configure expiration for entries:

Lifespan

The lifespan attribute sets the maximum amount of time that entries can exist. When you set lifespan with cross-site replication, Data Grid clusters expire entries independently of remote sites.

Maximum idle

The max-idle attribute specifies how long entries can exist based on read or write operations in a given time period. When you set a max-idle with cross-site replication, Data Grid clusters send touch commands to coordinate idle timeout values with remote sites.

Using maximum idle expiration in cross-site deployments can impact performance because the additional processing to keep max-idle values synchronized means some operations take longer to complete.