1.3. Cluster Infrastructure

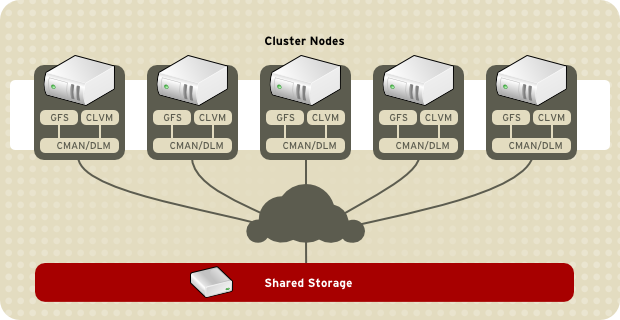

The Red Hat Cluster Suite cluster infrastructure provides the basic functions for a group of computers (called nodes or members) to work together as a cluster. Once a cluster is formed using the cluster infrastructure, you can use other Red Hat Cluster Suite components to suit your clustering needs (for example, setting up a cluster for sharing files on a GFS file system or setting up service failover). The cluster infrastructure performs the following functions:

- Cluster management

- Lock management

- Fencing

- Cluster configuration management

1.3.1. Cluster Management

Cluster management manages cluster quorum and cluster membership. One of the following Red Hat Cluster Suite components performs cluster management: CMAN (an abbreviation for cluster manager) or GULM (Grand Unified Lock Manager). CMAN operates as the cluster manager if a cluster is configured to use DLM (Distributed Lock Manager) as the lock manager. GULM operates as the cluster manager if a cluster is configured to use GULM as the lock manager. The major difference between the two cluster managers is that CMAN is a distributed cluster manager and GULM is a client-server cluster manager. CMAN runs in each cluster node; cluster management is distributed across all nodes in the cluster (refer to Figure 1.2, “CMAN/DLM Overview”). GULM runs in nodes designated as GULM server nodes; cluster management is centralized in the nodes designated as GULM server nodes (refer to Figure 1.3, “GULM Overview”). GULM server nodes manage the cluster through GULM clients in the cluster nodes. With GULM, cluster management operates in a limited number of nodes: either one, three, or five nodes configured as GULM servers.

The cluster manager keeps track of cluster quorum by counting the cluster nodes that run the cluster manager. (In a CMAN cluster, all cluster nodes run the cluster manager; in a GULM cluster, only the GULM servers do.) If more than half of the nodes that run the cluster manager are active, the cluster has quorum. If half of those nodes (or fewer) are active, the cluster does not have quorum, and all cluster activity is stopped. Cluster quorum prevents a "split-brain" condition, in which two instances of the same cluster run at the same time. A split-brain condition would allow each cluster instance to access cluster resources without knowledge of the other instance, corrupting cluster integrity.

In a CMAN cluster, quorum is determined by communication of heartbeats among cluster nodes via Ethernet. Optionally, quorum can be determined by a combination of communicating heartbeats via Ethernet and through a quorum disk. For quorum via Ethernet, quorum consists of 50 percent of the node votes plus 1. For quorum via quorum disk, quorum consists of user-specified conditions.

Note

In a CMAN cluster, by default each node has one quorum vote for establishing quorum. Optionally, you can configure each node to have more than one vote.
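The vote arithmetic described above can be sketched in a few lines of Python. This is an illustration only, not CMAN code: the function names and node names are hypothetical, and the sketch assumes that "50 percent of the node votes plus 1" is computed with integer division.

```python
def quorum_threshold(votes_by_node):
    """Votes needed for quorum: half of all configured votes (rounded down) plus one.

    Assumption: integer division is used for "50 percent of the node votes".
    """
    total_votes = sum(votes_by_node.values())
    return total_votes // 2 + 1


def has_quorum(votes_by_node, active_nodes):
    """Return True if the votes of the active nodes reach the quorum threshold."""
    active_votes = sum(votes_by_node[node] for node in active_nodes)
    return active_votes >= quorum_threshold(votes_by_node)


# Hypothetical four-node cluster with the default of one vote per node.
votes = {"node-a": 1, "node-b": 1, "node-c": 1, "node-d": 1}

# An even split leaves neither half with quorum, so no split-brain can form.
left = {"node-a", "node-b"}
right = {"node-c", "node-d"}
print(quorum_threshold(votes), has_quorum(votes, left), has_quorum(votes, right))

# Giving one node an extra vote changes which partition keeps quorum.
weighted = dict(votes, **{"node-a": 2})
print(quorum_threshold(weighted), has_quorum(weighted, left), has_quorum(weighted, right))
```

Because the threshold is always more than half of the total votes, two disjoint groups of nodes can never both reach it at the same time.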

In a GULM cluster, the quorum consists of a majority of nodes designated as GULM servers according to the number of GULM servers configured:

- Configured with one GULM server — Quorum equals one GULM server.

- Configured with three GULM servers — Quorum equals two GULM servers.

- Configured with five GULM servers — Quorum equals three GULM servers.
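The three cases above follow the same majority rule. As a minimal sketch (the function and constant names are hypothetical and not part of GULM), the quorum for each supported server count can be derived like this:

```python
# Server counts the text lists as valid GULM configurations.
VALID_GULM_SERVER_COUNTS = (1, 3, 5)


def gulm_quorum(server_count):
    """Return the number of GULM servers that must be active for quorum."""
    if server_count not in VALID_GULM_SERVER_COUNTS:
        raise ValueError("GULM expects one, three, or five servers")
    # A majority of an odd number of servers.
    return server_count // 2 + 1


for count in VALID_GULM_SERVER_COUNTS:
    print(count, "server(s) configured: quorum is", gulm_quorum(count))
```

With an odd number of servers, a majority always exists on at most one side of any network partition, which is one reason to reject even counts in a sketch like this.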

The cluster manager keeps track of membership by monitoring heartbeat messages from other cluster nodes. When cluster membership changes, the cluster manager notifies the other infrastructure components, which then take appropriate action. For example, if node A joins a cluster and mounts a GFS file system that nodes B and C have already mounted, then an additional journal and additional lock management are required for node A to use that GFS file system. If a cluster node does not transmit a heartbeat message within a prescribed amount of time, the cluster manager removes the node from the cluster and tells the other cluster infrastructure components that the node is no longer a member. Again, those components determine what actions to take upon notification that the node is no longer a cluster member. For example, the fencing component would fence the node that is no longer a member.
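The heartbeat-based membership tracking described above can be illustrated with a small Python sketch. It is a simplified model, not the CMAN or GULM implementation: the `MembershipTracker` class, its callback, and the 10-second timeout are all hypothetical, and timestamps are passed in explicitly so the behavior is easy to follow.

```python
class MembershipTracker:
    """Toy model of a cluster manager tracking members by heartbeat."""

    def __init__(self, timeout_seconds, on_change):
        self.timeout_seconds = timeout_seconds
        # on_change(event, node) stands in for notifying other
        # infrastructure components (for example, fencing).
        self.on_change = on_change
        self.last_seen = {}

    def heartbeat(self, node, now):
        """Record a heartbeat; a node seen for the first time joins the cluster."""
        is_new = node not in self.last_seen
        self.last_seen[node] = now
        if is_new:
            self.on_change("joined", node)

    def expire(self, now):
        """Remove nodes whose last heartbeat is older than the timeout."""
        expired = [
            node
            for node, seen in self.last_seen.items()
            if now - seen > self.timeout_seconds
        ]
        for node in expired:
            del self.last_seen[node]
            self.on_change("removed", node)
        return expired

    def members(self):
        return sorted(self.last_seen)


events = []
tracker = MembershipTracker(10.0, lambda event, node: events.append((event, node)))
tracker.heartbeat("A", now=0.0)
tracker.heartbeat("B", now=0.0)
tracker.heartbeat("A", now=8.0)   # A keeps sending heartbeats
tracker.expire(now=12.0)          # B has been silent for 12 seconds
print(tracker.members(), events)
```

In this model, the "removed" event is the point at which a component such as fencing would act on the node that stopped sending heartbeats.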

Figure 1.2. CMAN/DLM Overview

Figure 1.3. GULM Overview