Chapter 1. High level overview of Debezium

Debezium is a set of distributed services that capture changes in your databases. Your applications can consume and respond to those changes. Debezium captures each row-level change in each database table in a change event record and streams these records to Kafka topics. Applications read these streams, which provide the change event records in the same order in which they were generated.

More details are in the following sections:

1.1. Debezium Features

Debezium is a set of source connectors for Apache Kafka Connect, ingesting changes from different databases using change data capture (CDC). Unlike other approaches such as polling or dual writes, log-based CDC as implemented by Debezium:

- makes sure that all data changes are captured

- produces change events with very low delay (for example, in the millisecond range for MySQL or PostgreSQL) while avoiding the increased CPU usage of frequent polling

- requires no changes to your data model (such as adding a "Last Updated" column)

- can capture deletes

- can capture old record state and further metadata such as transaction id and causing query (depending on the database’s capabilities and configuration)

To learn more about the advantages of log-based CDC, refer to this blog post.
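To make the points about deletes and old record state concrete, the following minimal Python sketch applies a short stream of change events to an in-memory copy of a table. It assumes a simplified event shape that has only the `op`, `before`, and `after` fields; the `apply_change_event` helper, the `id` key column, and the sample rows are hypothetical and exist only for illustration.

```python
from typing import Any, Dict

Row = Dict[str, Any]


def apply_change_event(replica: Dict[int, Row], event: Dict[str, Any]) -> None:
    """Apply one simplified change event to an in-memory replica keyed by `id`."""
    op = event["op"]
    if op in ("c", "r", "u"):
        # Create, snapshot read, and update events carry the new row state in `after`.
        row = event["after"]
        replica[row["id"]] = row
    elif op == "d":
        # Delete events carry the old row state in `before`; `after` is empty.
        replica.pop(event["before"]["id"], None)
    else:
        raise ValueError(f"unexpected operation: {op!r}")


# Hypothetical events, listed in the order in which they were generated.
events = [
    {"op": "c", "before": None, "after": {"id": 1, "email": "a@example.com"}},
    {"op": "u", "before": {"id": 1, "email": "a@example.com"},
     "after": {"id": 1, "email": "b@example.com"}},
    {"op": "c", "before": None, "after": {"id": 2, "email": "c@example.com"}},
    {"op": "d", "before": {"id": 2, "email": "c@example.com"}, "after": None},
]

replica: Dict[int, Row] = {}
for event in events:
    apply_change_event(replica, event)

print(replica)
```

Because the events arrive in the order in which they were generated, replaying them leaves the replica with the updated row 1 and without the deleted row 2. A polling query against the source table would never observe the deleted row at all.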

The core change data capture feature of Debezium is complemented by a range of related capabilities and options:

- Snapshots: optionally, a connector can take an initial snapshot of a database’s current state when it starts up and not all logs still exist. This is typically the case when the database has been running for some time and has discarded transaction logs that are no longer needed for transaction recovery or replication. Different snapshot modes exist; refer to the documentation of the specific connector that you are using to learn more

- Filters: the set of captured schemas, tables and columns can be configured via whitelist/blacklist filters

- Masking: the values from specific columns can be masked, e.g. for sensitive data

- Monitoring: most connectors can be monitored using JMX

- Different ready-to-use message transformations: e.g. for message routing, extraction of new record state (relational connectors, MongoDB) and routing of events from a transactional outbox table

Refer to the connector documentation for a list of all supported databases and detailed information about the features and configuration options of each connector.
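As a rough illustration of the filtering and masking capabilities listed above, the following Python sketch simulates both ideas outside of Debezium. It is not connector code: the `is_captured` and `mask_columns` helpers, the table names, and the mask length are assumptions made for this example, and accepting both filter lists at once is a simplification. The actual property names and matching rules are described in the documentation of each connector.

```python
import re
from typing import Any, Dict, List, Set


def is_captured(table: str, include: List[str], exclude: List[str]) -> bool:
    """Return True if a fully-qualified table name passes the regex filters."""
    if include and not any(re.fullmatch(p, table) for p in include):
        return False
    return not any(re.fullmatch(p, table) for p in exclude)


def mask_columns(row: Dict[str, Any], masked: Set[str], length: int = 10) -> Dict[str, Any]:
    """Replace the values of the masked columns with a fixed-length run of asterisks."""
    return {
        column: ("*" * length if column in masked and value is not None else value)
        for column, value in row.items()
    }


# Hypothetical filter patterns and sample row.
include_patterns = [r"inventory\..*"]
exclude_patterns = [r".*\.audit_log"]

print(is_captured("inventory.customers", include_patterns, exclude_patterns))
print(is_captured("inventory.audit_log", include_patterns, exclude_patterns))
print(mask_columns({"id": 1, "ssn": "123-45-6789"}, {"ssn"}))
```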

1.2. Debezium Architecture

You deploy Debezium by means of Apache Kafka Connect. Kafka Connect is a framework and runtime for implementing and operating:

- Source connectors such as Debezium that send records into Kafka

- Sink connectors that propagate records from Kafka topics to other systems

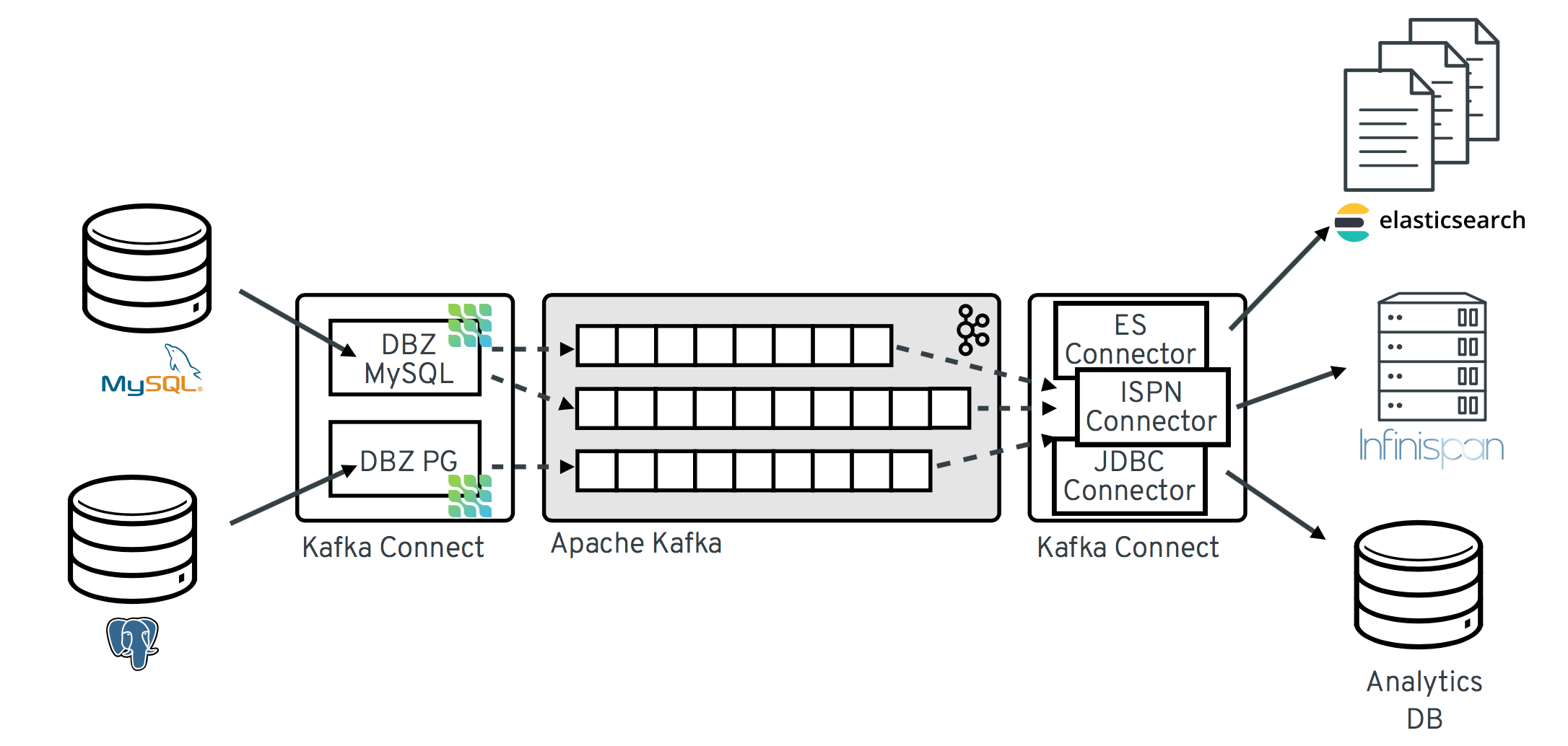

The following image shows the architecture of a change data capture pipeline based on Debezium:

As shown in the image, the Debezium connectors for MySQL and PostgreSQL are deployed to capture changes to these two types of databases. Each Debezium connector establishes a connection to its source database:

- The MySQL connector uses a client library for accessing the binlog.

- The PostgreSQL connector reads from a logical replication stream.

Kafka Connect operates as a separate service alongside the Kafka broker.

By default, changes from one database table are written to a Kafka topic whose name corresponds to the table name. If needed, you can adjust the destination topic name by configuring Debezium’s topic routing transformation. For example, you can:

- Route records to a topic whose name is different from the table’s name

- Stream change event records for multiple tables into a single topic
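The second case, streaming several tables into one topic, can be sketched as follows. The dictionary holds Kafka Connect properties for Debezium’s topic routing transformation (`io.debezium.transforms.ByLogicalTableRouter`); the `Reroute` alias, the regular expression, the replacement, and the topic names are illustrative assumptions. The `route_topic` function is a hypothetical helper that only imitates the renaming in Python so that the effect of the two properties is visible. It is not part of Debezium.

```python
import re
from typing import Dict

# Kafka Connect properties for the routing SMT. The alias "Reroute" and the
# regex/replacement values are assumptions chosen for this example.
routing_config: Dict[str, str] = {
    "transforms": "Reroute",
    "transforms.Reroute.type": "io.debezium.transforms.ByLogicalTableRouter",
    "transforms.Reroute.topic.regex": "(.*)customers_shard(.*)",
    "transforms.Reroute.topic.replacement": "$1customers_all_shards",
}


def route_topic(topic: str, regex: str, replacement: str) -> str:
    """Imitate the rename: rewrite topics that fully match, pass others through."""
    match = re.fullmatch(regex, topic)
    if match is None:
        return topic
    # The configuration uses Java-style group references ($1); convert them
    # to Python-style references (\g<1>) before expanding the template.
    template = re.sub(r"\$(\d+)", lambda m: "\\g<" + m.group(1) + ">", replacement)
    return match.expand(template)


regex = routing_config["transforms.Reroute.topic.regex"]
replacement = routing_config["transforms.Reroute.topic.replacement"]

for topic in (
    "dbserver1.inventory.customers_shard1",
    "dbserver1.inventory.customers_shard2",
    "dbserver1.inventory.orders",
):
    print(topic, "->", route_topic(topic, regex, replacement))
```

In this sketch both shard topics map to `dbserver1.inventory.customers_all_shards`, while the `orders` topic keeps its default name.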

After change event records are in Apache Kafka, different connectors in the Kafka Connect ecosystem can stream the records to other systems and databases such as Elasticsearch, data warehouses and analytics systems, or caches such as Infinispan. Depending on the chosen sink connector, you might need to configure Debezium’s new record state extraction transformation. This Kafka Connect single message transformation (SMT) propagates only the `after` structure from Debezium’s change event to the sink connector, in place of the verbose change event record that is propagated by default.
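The difference between the default change event and the extracted record can be sketched in Python. The dictionary shows Kafka Connect properties that enable the transformation (`io.debezium.transforms.ExtractNewRecordState`); the `unwrap` alias and the sample event are assumptions made for this example. The `extract_new_record_state` function is a hypothetical, much simplified stand-in for the SMT: it covers only create and update events and ignores the handling of deletes and the other options of the real transformation.

```python
from typing import Any, Dict, Optional

# Kafka Connect properties that enable the SMT. The alias "unwrap" is an
# arbitrary name chosen for this example.
unwrap_config: Dict[str, str] = {
    "transforms": "unwrap",
    "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
}


def extract_new_record_state(event: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Simplified stand-in for the SMT: keep only the `after` structure."""
    return event["after"]


# Hypothetical update event in the verbose default format.
change_event = {
    "before": {"id": 1001, "email": "old@example.com"},
    "after": {"id": 1001, "email": "new@example.com"},
    "source": {"connector": "mysql", "db": "inventory", "table": "customers"},
    "op": "u",
    "ts_ms": 1589362330904,
}

flat_record = extract_new_record_state(change_event)
print(flat_record)
```

A sink connector that expects one flat row per message would receive only the `flat_record` value instead of the full envelope.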