
Chapter 14. Geo-replication

Geo-replication allows multiple, geographically distributed Quay deployments to work as a single registry from the perspective of a client or user. It significantly improves push and pull performance in a globally distributed Quay setup. Image data is asynchronously replicated in the background, with transparent failover and redirection for clients.

Deploying Red Hat Quay with geo-replication on OpenShift is not supported by the Operator.

14.1. Geo-replication features

- When geo-replication is configured, container image pushes will be written to the preferred storage engine for that Red Hat Quay instance (typically the nearest storage backend within the region).

- After the initial push, image data will be replicated in the background to other storage engines.

- The list of replication locations is configurable, and the locations can use different storage backends.

- An image pull will always use the closest available storage engine, to maximize pull performance.

- If replication hasn’t been completed yet, the pull will use the source storage backend instead.
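The pull behavior described in the last two items can be sketched as a small shell function. This is only an illustration of the selection rule, not Quay's actual implementation; the function name `choose_pull_location` and the location IDs used with it are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the pull-source rule described above.
# Arguments:
#   $1  preferred (closest) location ID for this Quay instance
#   $2  location ID that received the original push
#   $3  space-separated list of location IDs that already hold the blob
choose_pull_location() {
  local preferred="$1" origin="$2" replicated="$3"
  local loc
  for loc in $replicated; do
    if [ "$loc" = "$preferred" ]; then
      # The blob has already been replicated to the closest storage engine.
      echo "$preferred"
      return 0
    fi
  done
  # Replication to the preferred location has not finished yet,
  # so fall back to the storage engine that received the push.
  echo "$origin"
}
```

For example, `choose_pull_location europestorage usstorage "usstorage"` prints `usstorage`, because the blob has not yet reached the European storage engine.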

14.2. Geo-replication requirements and constraints

- A single database, and therefore all metadata and Quay configuration, is shared across all regions.

- A single Redis cache is shared across the entire Quay setup and needs to be accessible by all Quay pods.

- The exact same configuration should be used across all regions, with the exception of the storage backend, which can be configured explicitly using the `QUAY_DISTRIBUTED_STORAGE_PREFERENCE` environment variable.

- Geo-replication requires object storage in each region. It does not work with local storage or NFS.

- Each region must be able to access every storage engine in each region (requires a network path).

- Alternatively, the storage proxy option can be used.

- The entire storage backend (all blobs) is replicated. This is in contrast to repository mirroring, which can be limited to an organization or repository or image.

- All Quay instances must share the same entrypoint, typically via load balancer.

- All Quay instances must have the same set of superusers, as they are defined inside the common configuration file.

If the above requirements cannot be met, you should instead use two or more distinct Quay deployments and take advantage of repository mirroring functionality.

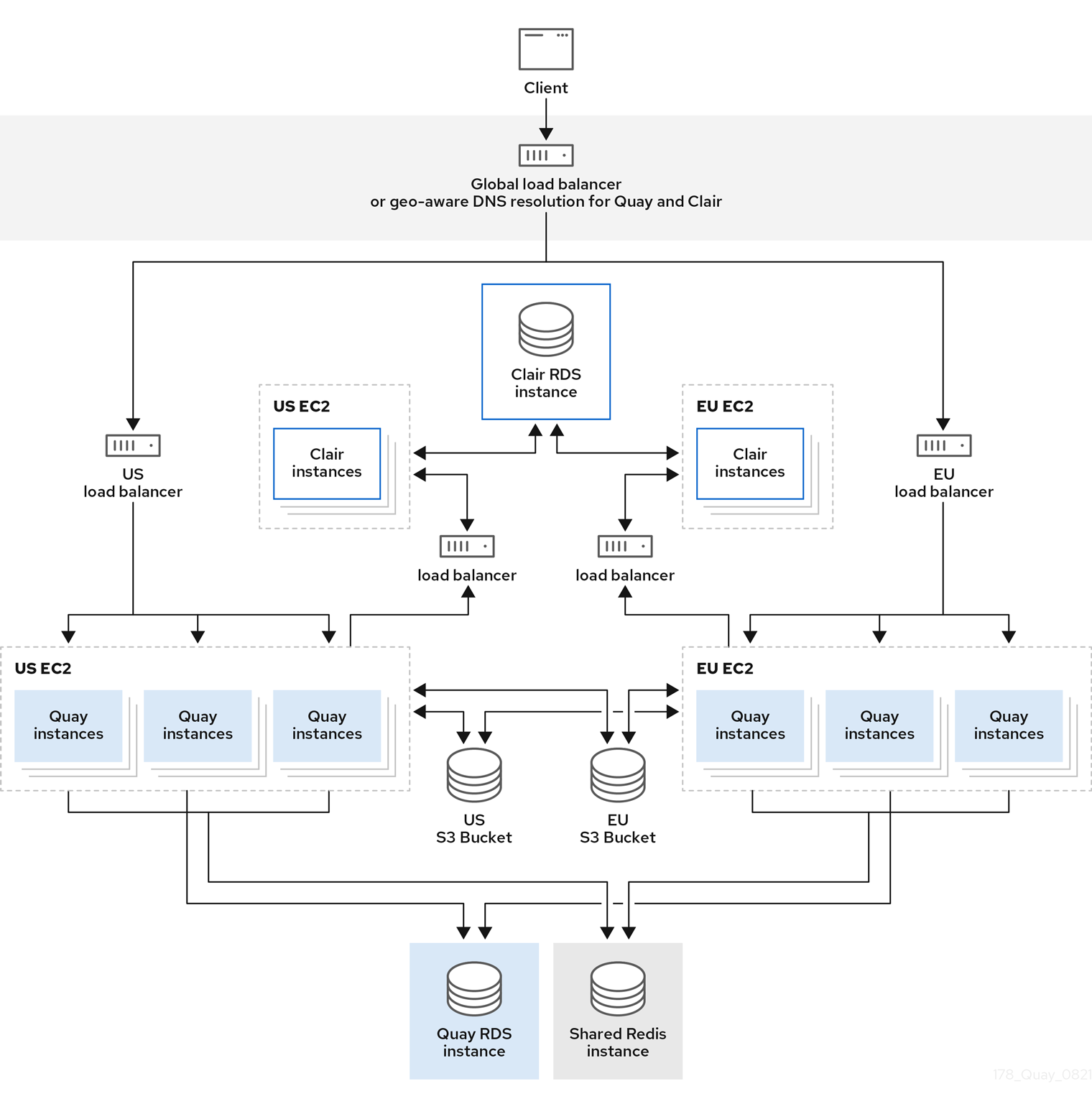

14.3. Geo-replication architecture

In the example shown above, Quay is running in two separate regions, with a common database and a common Redis instance. Localized image storage is provided in each region and image pulls are served from the closest available storage engine. Container image pushes are written to the preferred storage engine for the Quay instance, and will then be replicated, in the background, to the other storage engines.

14.4. Enable storage replication

- Scroll down to the section entitled **Registry Storage**.

- Click **Enable Storage Replication**.

- Add each of the storage engines to which data will be replicated. All storage engines to be used must be listed.

- If complete replication of all images to all storage engines is required, under each storage engine configuration click **Replicate to storage engine by default**. This will ensure that all images are replicated to that storage engine. To instead enable per-namespace replication, please contact support.

- When you are done, click **Save Configuration Changes**. Configuration changes will take effect the next time Red Hat Quay restarts.

- After adding storage and enabling "Replicate to storage engine by default" for geo-replication, you need to sync existing image data across all storage. To do this, `oc exec` (or `docker exec` / `kubectl exec`) into the container and run:

      # scl enable python27 bash
      # python -m util.backfillreplication

  This is a one-time operation to sync content after adding new storage.
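The settings made in the configuration tool are stored in `config.yaml`. The following is a minimal sketch of what the geo-replication portion of that file might look like, assuming two locations named `usstorage` and `europestorage` backed by `RadosGWStorage`. The location IDs, hostnames, bucket names, and keys are placeholders, and the field names reflect the Quay configuration schema as commonly documented; compare them with the file your configuration tool actually generates before relying on them.

```shell
# Illustrative only: write a sample geo-replication fragment to a scratch file
# so it can be compared with the config.yaml generated by the config tool.
# All location IDs, hostnames, bucket names, and keys below are placeholders.
fragment="$(mktemp)"
cat > "$fragment" <<'EOF'
FEATURE_STORAGE_REPLICATION: true
DISTRIBUTED_STORAGE_CONFIG:
  usstorage:
    - RadosGWStorage
    - hostname: storage.us.example.com
      port: 443
      is_secure: true
      bucket_name: quay-us
      access_key: CHANGE_ME
      secret_key: CHANGE_ME
      storage_path: /datastorage/registry
  europestorage:
    - RadosGWStorage
    - hostname: storage.eu.example.com
      port: 443
      is_secure: true
      bucket_name: quay-eu
      access_key: CHANGE_ME
      secret_key: CHANGE_ME
      storage_path: /datastorage/registry
# Assumed to correspond to "Replicate to storage engine by default"
DISTRIBUTED_STORAGE_DEFAULT_LOCATIONS:
  - usstorage
  - europestorage
DISTRIBUTED_STORAGE_PREFERENCE:
  - usstorage
  - europestorage
EOF
cat "$fragment"
```

Each key under `DISTRIBUTED_STORAGE_CONFIG` is a Location ID, which is the value later passed to `QUAY_DISTRIBUTED_STORAGE_PREFERENCE`.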

14.4.1. Run Red Hat Quay with storage preferences

- Copy the `config.yaml` to all machines running Red Hat Quay.

- For each machine in each region, add a `QUAY_DISTRIBUTED_STORAGE_PREFERENCE` environment variable with the preferred storage engine for the region in which the machine is running.

  For example, for a machine running in Europe with the config directory on the host available from `$QUAY/config`:

      $ sudo podman run -d --rm -p 80:8080 -p 443:8443 \
         --name=quay \
         -v $QUAY/config:/conf/stack:Z \
         -e QUAY_DISTRIBUTED_STORAGE_PREFERENCE=europestorage \
         registry.redhat.io/quay/quay-rhel8:v3.6.8

  Note: The value of the environment variable specified must match the name of a Location ID as defined in the config panel.

- Restart all Red Hat Quay containers.
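Because the preference value must match a Location ID, a quick check before starting the container can catch typos. The helper below is a hypothetical sketch, not part of Quay. It assumes that Location IDs appear as indented keys directly under `DISTRIBUTED_STORAGE_CONFIG` in `config.yaml`, and that IDs contain no regular-expression metacharacters.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the given Location ID appears as an
# indented key under DISTRIBUTED_STORAGE_CONFIG in the given config.yaml.
# This is a plain text match, not a full YAML parser.
has_location_id() {
  local config_file="$1" location_id="$2"
  awk -v id="$location_id" '
    # Entering the storage config block.
    /^DISTRIBUTED_STORAGE_CONFIG:/ { in_block = 1; next }
    # Any other top-level key ends the block.
    /^[^[:space:]#]/               { in_block = 0 }
    # An indented "<id>:" line inside the block is a Location ID.
    in_block && $0 ~ ("^[[:space:]]+" id ":[[:space:]]*$") { found = 1 }
    END { exit (found ? 0 : 1) }
  ' "$config_file"
}
```

It could be used as a guard, for example `has_location_id "$QUAY/config/config.yaml" europestorage || echo "unknown Location ID"`, before running the `podman run` command shown above.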