Chapter 7. Planning your OVS-DPDK deployment

To optimize your Open vSwitch with Data Plane Development Kit (OVS-DPDK) deployment for NFV, you should understand how OVS-DPDK uses the Compute node hardware such as CPU, NUMA nodes, memory, NICs, and the considerations for determining the individual OVS-DPDK parameters based on your Compute node.

For a high-level introduction to CPUs and NUMA topology, see: NFV performance considerations in the NFV Product Guide.

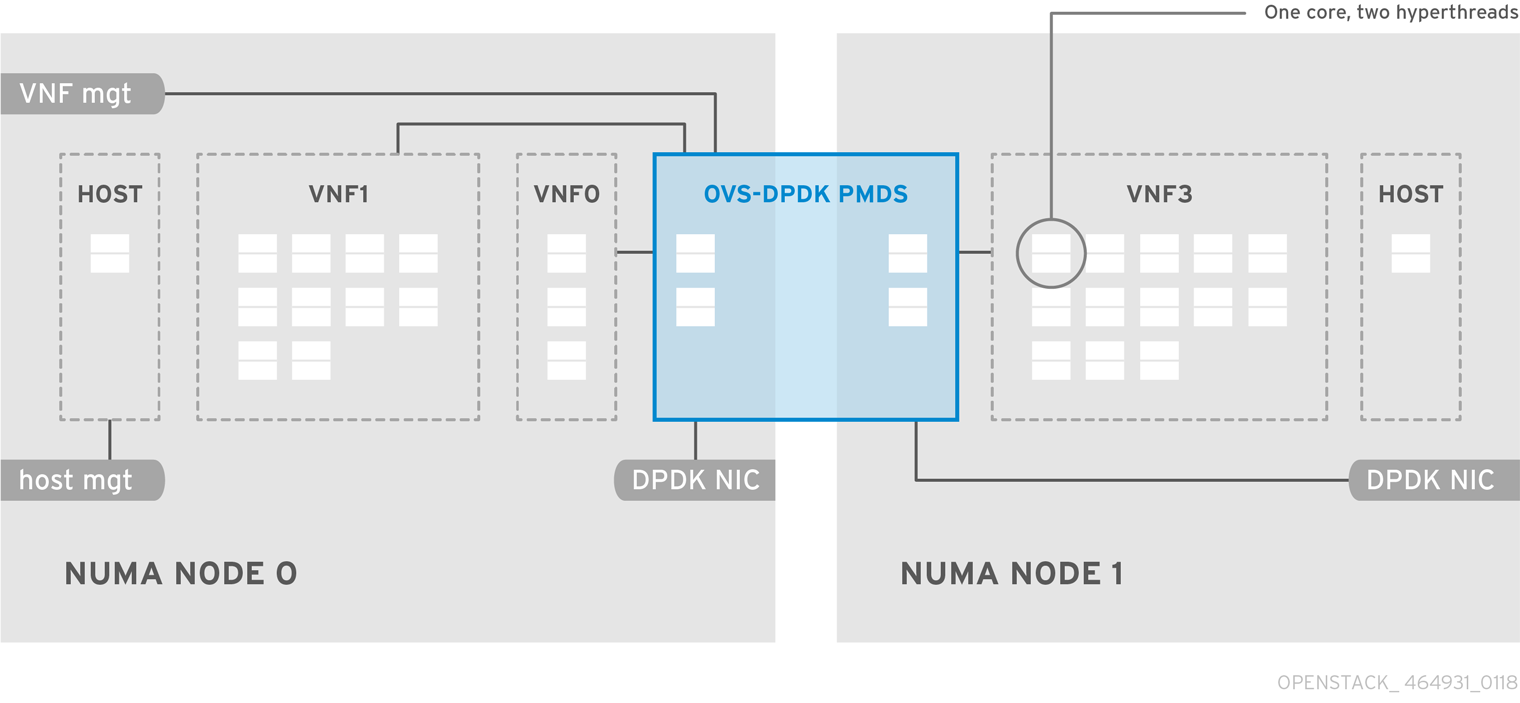

7.1. OVS-DPDK with CPU partitioning and NUMA topology

OVS-DPDK partitions the hardware resources for host, guests, and OVS-DPDK. The OVS-DPDK Poll Mode Drivers (PMDs) run DPDK active loops, which require dedicated cores. This means a list of CPUs and huge pages are dedicated to OVS-DPDK.

A sample partitioning includes 16 cores per NUMA node on dual-socket Compute nodes. The traffic requires additional NICs because the NICs cannot be shared between the host and OVS-DPDK.

DPDK PMD threads must be reserved on both NUMA nodes even if a NUMA node does not have an associated DPDK NIC.

OVS-DPDK performance also requires a reservation of a block of memory local to the NUMA node. Use NICs associated with the same NUMA node that you use for memory and CPU pinning. Ensure that both interfaces in a bond are from NICs on the same NUMA node.
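The NUMA locality rule for bonded NICs can be checked before you fill in any parameters. The following is a minimal sketch, assuming a Linux host where each PCI NIC exposes its NUMA node under `/sys/class/net/<interface>/device/numa_node`; the interface names `ens1f0` and `ens1f1` are hypothetical placeholders, not values from this guide.

```python
from pathlib import Path


def nic_numa_node(iface: str, sysfs_root: str = "/sys/class/net") -> int:
    """Return the NUMA node the kernel reports for a PCI NIC (-1 if unknown)."""
    return int(Path(sysfs_root, iface, "device", "numa_node").read_text().strip())


def bond_is_numa_local(numa_by_iface: dict[str, int]) -> bool:
    """True when every bond member reports the same, known NUMA node."""
    nodes = set(numa_by_iface.values())
    return len(nodes) == 1 and -1 not in nodes


if __name__ == "__main__":
    members = ["ens1f0", "ens1f1"]  # hypothetical bond members
    try:
        print(bond_is_numa_local({name: nic_numa_node(name) for name in members}))
    except OSError as err:
        print(f"could not read sysfs: {err}")
```

A result of `False` suggests that the bond spans NUMA nodes, or that the kernel could not report a node for one of the members.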

7.2. Overview of workflows and derived parameters

This feature is available in this release as a Technology Preview, and therefore is not fully supported by Red Hat. It should only be used for testing, and should not be deployed in a production environment. For more information about Technology Preview features, see Scope of Coverage Details.

You can use the OpenStack Workflow (mistral) service to derive parameters based on the capabilities of your available bare-metal nodes. With Red Hat OpenStack Platform workflows, you define a set of tasks and actions to perform in a yaml file. You can use a pre-defined workbook, derive_params.yaml, in the tripleo-common/workbooks/ directory. This workbook provides workflows to derive each supported parameter from the results retrieved from Bare Metal introspection. The derive_params.yaml workflows use the formulas from tripleo-common/workbooks/derive_params_formulas.yaml to calculate the derived parameters.

You can modify the formulas in derive_params_formulas.yaml to suit your environment.

The derive_params.yaml workbook assumes all nodes for a given composable role have the same hardware specifications. The workflow considers the flavor-profile association and nova placement scheduler to match nodes associated with a role and uses the introspection data from the first node that matches the role.

For more information on Red Hat OpenStack Platform workflows, see: Troubleshooting Workflows and Executions.

You can use the -p or --plan-environment-file option to add a custom plan_environment.yaml file to the openstack overcloud deploy command. The custom plan_environment.yaml file provides a list of workbooks and any input values to pass into the workbook. The triggered workflows merge the derived parameters back into the custom plan_environment.yaml, where they are available for the overcloud deployment. You can use these derived parameter results to prepare your overcloud images.

For more information on how to use the --plan-environment-file option in your deployment, see Plan Environment Metadata.

7.3. Derived OVS-DPDK parameters

The workflows in derive_params.yaml derive the DPDK parameters associated with the matching role that uses the ComputeNeutronOvsDpdk service.

The following is the list of parameters the workflows can automatically derive for OVS-DPDK:

- IsolCpusList

- KernelArgs

- NovaReservedHostMemory

- NovaVcpuPinSet

- OvsDpdkCoreList

- OvsDpdkSocketMemory

- OvsPmdCoreList

The OvsDpdkMemoryChannels parameter cannot be derived from the introspection memory bank data because the format of memory slot names is not consistent across different hardware environments.

In most cases, the default number for OvsDpdkMemoryChannels is four. Use your hardware manual to determine the number of memory channels per socket and use this value to override the default value.

For configuration details, see Section 8.1, “Deriving DPDK parameters with workflows”.

7.4. Overview of manually calculated OVS-DPDK parameters

This section describes how Open vSwitch (OVS) with Data Plane Development Kit (OVS-DPDK) uses parameters within the director network_environment.yaml heat templates to configure the CPU and memory for optimum performance. Use this information to evaluate the hardware support on your Compute nodes and how best to partition that hardware to optimize your OVS-DPDK deployment.

If you want to generate these values automatically, you can use the derive_params.yaml workflow. For more information, see Overview of workflows and derived parameters.

Always pair CPU sibling threads, also called logical CPUs, together for the physical core when allocating CPU cores.

To determine the CPU and NUMA nodes on your Compute nodes, see Discovering your NUMA node topology. Use this information to map CPU and other parameters to support the host, guest instance, and OVS-DPDK process needs.
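Sibling threads can also be read directly from the host. The following is a minimal sketch, assuming a Linux host that exposes `thread_siblings_list` under `/sys/devices/system/cpu/cpu<N>/topology/`; the function names are illustrative and are not part of director or any OpenStack tool.

```python
from pathlib import Path


def parse_cpu_list(text: str) -> list[int]:
    """Expand a kernel CPU list such as "0-3,8" into [0, 1, 2, 3, 8]."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def sibling_groups(siblings_by_cpu: dict[int, str]) -> list[tuple[int, ...]]:
    """Collapse per-CPU sibling lists into one tuple per physical core."""
    groups = {tuple(parse_cpu_list(text)) for text in siblings_by_cpu.values()}
    return sorted(groups)


def read_siblings(sysfs_root: str = "/sys/devices/system/cpu") -> dict[int, str]:
    """Read thread_siblings_list for every CPU directory that provides one."""
    result = {}
    for path in Path(sysfs_root).glob("cpu[0-9]*/topology/thread_siblings_list"):
        cpu = int(path.parts[-3][3:])  # directory name is "cpu<N>"
        result[cpu] = path.read_text()
    return result
```

Calling `sibling_groups(read_siblings())` on a Compute node gives one tuple per physical core, which is the unit to allocate when you pair sibling threads.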

7.4.1. CPU parameters

OVS-DPDK uses the following CPU-partitioning parameters:

- OvsPmdCoreList

Provides the CPU cores that are used for the DPDK poll mode drivers (PMD). Choose CPU cores that are associated with the local NUMA nodes of the DPDK interfaces.

OvsPmdCoreList is used for the pmd-cpu-mask value in Open vSwitch. Use the OvsPmdCoreList parameter to set the following configurations:

- Pair the sibling threads together.

- Exclude all cores from the OvsDpdkCoreList.

- Avoid allocating the logical CPUs, or both thread siblings, of the first physical core on both NUMA nodes, as these should be used for the OvsDpdkCoreList parameter.

- Performance depends on the number of physical cores allocated for this PMD core list. On the NUMA node which is associated with a DPDK NIC, allocate the required cores.

For NUMA nodes with a DPDK NIC:

- Determine the number of physical cores required based on the performance requirement and include all the sibling threads, or logical CPUs for each physical core.

For NUMA nodes without a DPDK NIC:

- Allocate the sibling threads, or logical CPUs, of one physical core, excluding the first physical core of the NUMA node.

DPDK PMD threads must be reserved on both NUMA nodes, even if a NUMA node does not have an associated DPDK NIC.

- NovaVcpuPinSet

Sets cores for CPU pinning. The Compute node uses these cores for guest instances.

NovaVcpuPinSet is used as the vcpu_pin_set value in the nova.conf file. Use the NovaVcpuPinSet parameter to set the following configurations:

- Exclude all cores from the OvsPmdCoreList and the OvsDpdkCoreList.

- Include all remaining cores.

- Pair the sibling threads together.

- NovaComputeCpuSharedSet

Sets the cores to be used for emulator threads. This defines the value of the nova.conf parameter cpu_shared_set. The recommended value for this parameter matches the value set for OvsDpdkCoreList.

- IsolCpusList

A set of CPU cores isolated from the host processes. This parameter is used as the isolated_cores value in the cpu-partitioning-variable.conf file for the tuned-profiles-cpu-partitioning component. Use the IsolCpusList parameter to set the following configurations:

- Match the list of cores in OvsPmdCoreList and NovaVcpuPinSet.

- Pair the sibling threads together.

- OvsDpdkCoreList

Provides CPU cores for non-datapath OVS-DPDK processes, such as handler and revalidator threads. This parameter has no impact on overall datapath performance on multi-NUMA node hardware. This parameter is used for the dpdk-lcore-mask value in OVS, and these cores are shared with the host. Use the OvsDpdkCoreList parameter to set the following configurations:

- Allocate the first physical core and sibling thread from each NUMA node, even if the NUMA node has no associated DPDK NIC.

- These cores must be mutually exclusive from the list of cores in OvsPmdCoreList and NovaVcpuPinSet.
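The rules for the CPU parameters lend themselves to a quick consistency check. The following is a minimal sketch, not a director feature: the function names are hypothetical, and the check covers only the overlap and matching rules listed above. It also shows how a core list corresponds to a hexadecimal bitmask of the kind Open vSwitch accepts for pmd-cpu-mask; how director performs that conversion is not described in this chapter.

```python
def parse_cores(core_list: str) -> set[int]:
    """Parse a comma-separated core list such as "2,3,10,11"."""
    return {int(item) for item in core_list.split(",") if item.strip()}


def cpu_mask(core_list: str) -> str:
    """Return the hexadecimal bitmask with one bit set per listed core."""
    mask = 0
    for core in parse_cores(core_list):
        mask |= 1 << core
    return hex(mask)


def check_partition(pmd: str, dpdk: str, vcpu_pin: str, isol: str) -> list[str]:
    """Return rule violations; an empty list means none were found."""
    pmd_set, dpdk_set = parse_cores(pmd), parse_cores(dpdk)
    vcpu_set, isol_set = parse_cores(vcpu_pin), parse_cores(isol)
    problems = []
    if pmd_set & dpdk_set:
        problems.append("OvsPmdCoreList overlaps OvsDpdkCoreList")
    if vcpu_set & (pmd_set | dpdk_set):
        problems.append("NovaVcpuPinSet overlaps OvsPmdCoreList or OvsDpdkCoreList")
    if isol_set != (pmd_set | vcpu_set):
        problems.append("IsolCpusList does not match OvsPmdCoreList plus NovaVcpuPinSet")
    return problems
```

For example, `cpu_mask("2,3,10,11")` sets bits 2, 3, 10, and 11. The check does not verify sibling pairing, which needs the topology of the node.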

7.4.2. Memory parameters

OVS-DPDK uses the following memory parameters:

- OvsDpdkMemoryChannels

Maps memory channels in the CPU per NUMA node. The OvsDpdkMemoryChannels parameter is used by Open vSwitch (OVS) as the other_config:dpdk-extra="-n <value>" value. Use the following steps to calculate necessary values for OvsDpdkMemoryChannels:

- Use dmidecode -t memory or your hardware manual to determine the number of memory channels available.

- Use ls /sys/devices/system/node/node* -d to determine the number of NUMA nodes.

- Divide the number of memory channels available by the number of NUMA nodes.

- NovaReservedHostMemory

Reserves memory in MB for tasks on the host. This value is used by the Compute node as the reserved_host_memory_mb value in nova.conf. The static recommended value is 4096 MB.

- OvsDpdkSocketMemory

Specifies the amount of memory in MB to pre-allocate from the hugepage pool, per NUMA node. This value is used by OVS as the other_config:dpdk-socket-mem value. Use the following steps to calculate necessary values for OvsDpdkSocketMemory:

- Provide as a comma-separated list. Calculate the OvsDpdkSocketMemory value from the MTU value of each NIC on the NUMA node.

- For a NUMA node without a DPDK NIC, use the static recommendation of 1024 MB (1GB).

The following equation approximates the value for OvsDpdkSocketMemory:

MEMORY_REQD_PER_MTU = (ROUNDUP_PER_MTU + 800) * (4096 * 64) Bytes

- 800 is the overhead value.

- 4096 * 64 is the number of packets in the mempool.

- Add the MEMORY_REQD_PER_MTU for each of the MTU values set on the NUMA node and add another 512 MB as buffer. Round the value up to a multiple of 1024.

Sample Calculation - MTU 2000 and MTU 9000

DPDK NICs dpdk0 and dpdk1 are on the same NUMA node 0 and configured with MTUs 9000 and 2000 respectively. The sample calculation to derive the memory required is as follows:

Round off the MTU values to the nearest 1024 bytes.

The MTU value of 9000 becomes 9216 bytes.

The MTU value of 2000 becomes 2048 bytes.

Calculate the required memory for each MTU value based on these rounded byte values.

Memory required for 9000 MTU = (9216 + 800) * (4096*64) = 2625634304

Memory required for 2000 MTU = (2048 + 800) * (4096*64) = 746586112

Calculate the combined total memory required, in bytes.

2625634304 + 746586112 + 536870912 = 3909091328 bytes.

This calculation represents (Memory required for MTU of 9000) + (Memory required for MTU of 2000) + (512 MB buffer).

Convert the total memory required into MB.

3909091328 / (1024*1024) = 3728 MB.

Round this value up to the nearest multiple of 1024.

3728 MB rounds up to 4096 MB.

Use this value to set OvsDpdkSocketMemory.

OvsDpdkSocketMemory: "4096,1024"

Sample Calculation - MTU 2000

DPDK NICs dpdk0 and dpdk1 are on the same NUMA node 0 and are both configured with an MTU of 2000. Because the two NICs share one MTU value, the memory for that MTU is counted once. The sample calculation to derive the memory required is as follows:

Round off the MTU values to the nearest 1024 bytes.

The MTU value of 2000 becomes 2048 bytes.

Calculate the required memory for each MTU value based on these rounded byte values.

Memory required for 2000 MTU = (2048 + 800) * (4096*64) = 746586112

Calculate the combined total memory required, in bytes.

746586112 + 536870912 = 1283457024 bytes.

This calculation represents (Memory required for MTU of 2000) + (512 MB buffer).

Convert the total memory required into MB.

1283457024 / (1024*1024) = 1224 MB.

Round this value up to the nearest multiple of 1024.

1224 MB rounds up to 2048 MB.

Use this value to set OvsDpdkSocketMemory.

OvsDpdkSocketMemory: "2048,1024"
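The two sample calculations can be reproduced with a short script. The following is a minimal sketch under two stated assumptions: it rounds each MTU up to the next multiple of 1024, following the name ROUNDUP_PER_MTU (the sample text says "nearest"; both readings agree for MTUs of 2000 and 9000 but can differ for other values), and it counts each distinct MTU value on a NUMA node once, as the second sample does. The function names are illustrative only.

```python
import math

OVERHEAD_BYTES = 800          # overhead value from the equation
MEMPOOL_PACKETS = 4096 * 64   # number of packets in the mempool
BUFFER_MB = 512               # buffer added for a node with DPDK NICs
NO_DPDK_NIC_MB = 1024         # static value for a node without a DPDK NIC


def round_up(value: int, multiple: int) -> int:
    """Round value up to the next multiple (integer arithmetic only)."""
    return (value + multiple - 1) // multiple * multiple


def socket_memory_mb(mtus: list[int]) -> int:
    """Memory in MB for one NUMA node, given the MTUs of its DPDK NICs."""
    if not mtus:
        return NO_DPDK_NIC_MB
    # Assumption: each distinct MTU value is counted once per NUMA node.
    total_bytes = sum(
        (round_up(mtu, 1024) + OVERHEAD_BYTES) * MEMPOOL_PACKETS
        for mtu in set(mtus)
    )
    total_mb = math.ceil(total_bytes / (1024 * 1024)) + BUFFER_MB
    return round_up(total_mb, 1024)


def socket_memory_param(mtus_per_node: list[list[int]]) -> str:
    """Comma-separated value, one entry per NUMA node in order."""
    return ",".join(str(socket_memory_mb(mtus)) for mtus in mtus_per_node)
```

For the first sample, `socket_memory_param([[9000, 2000], []])` describes NUMA node 0 with both NICs and NUMA node 1 without a DPDK NIC.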

7.4.3. Networking parameters

- NeutronDpdkDriverType

Sets the driver type used by DPDK. Use the default of vfio-pci.

- NeutronDatapathType

Datapath type for OVS bridges. DPDK uses the default value of netdev.

- NeutronVhostuserSocketDir

Sets the vhost-user socket directory for OVS. Use /var/lib/vhost_sockets for vhost client mode.

7.4.4. Other parameters

- NovaSchedulerDefaultFilters

Provides an ordered list of filters that the Compute node uses to find a matching Compute node for a requested guest instance.

- VhostuserSocketGroup

Sets the vhost-user socket directory group. The default value is qemu. VhostuserSocketGroup should be set to hugetlbfs so that the ovs-vswitchd and qemu processes can access the shared hugepages and unix socket used to configure the virtio-net device. This value is role specific and should be applied to any role leveraging OVS-DPDK.

- KernelArgs

Provides multiple kernel arguments to /etc/default/grub for the Compute node at boot time. Add the following parameters based on your configuration:

- hugepagesz: Sets the size of the huge pages on a CPU. This value can vary depending on the CPU hardware. Set to 1G for OVS-DPDK deployments (default_hugepagesz=1GB hugepagesz=1G). Use the following command to check for the pdpe1gb CPU flag, to ensure your CPU supports 1G.

lshw -class processor | grep pdpe1gb

- hugepages count: Sets the number of hugepages available. This value depends on the amount of host memory available. Use most of your available memory, excluding NovaReservedHostMemory. You must also configure the huge pages count value within the Red Hat OpenStack Platform flavor associated with your Compute nodes.

- iommu: For Intel CPUs, add "intel_iommu=on iommu=pt".

- isolcpus: Sets the CPU cores to be tuned. This value matches IsolCpusList.
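The pieces of KernelArgs can be assembled programmatically once you know the huge page count and the isolated cores. The following is a minimal sketch, assuming the standard Linux kernel parameters `hugepages=` and `isolcpus=` for the count and the isolated cores; the helper names and the sample values (32 pages, cores 2-7 and 10-15) are hypothetical. The flag check reads `/proc/cpuinfo`-style text instead of the lshw output shown above.

```python
def supports_1g_hugepages(cpuinfo_text: str) -> bool:
    """True if any 'flags' line in /proc/cpuinfo-style text lists pdpe1gb."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and "pdpe1gb" in line.split():
            return True
    return False


def kernel_args(hugepage_count: int, isol_cpus: str, intel: bool = True) -> str:
    """Assemble a KernelArgs-style string from the parameters described above."""
    args = ["default_hugepagesz=1GB", "hugepagesz=1G", f"hugepages={hugepage_count}"]
    if intel:
        # The guide lists these IOMMU settings for Intel CPUs only.
        args += ["intel_iommu=on", "iommu=pt"]
    args.append(f"isolcpus={isol_cpus}")
    return " ".join(args)


if __name__ == "__main__":
    # Hypothetical values: 32 huge pages, isolated cores 2-7 and 10-15.
    print(kernel_args(32, "2-7,10-15"))
```

Treat the output as a starting point: the right huge page count depends on the host memory that remains after NovaReservedHostMemory.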

7.4.5. Instance extra specifications

Before deploying instances in an NFV environment, create a flavor that utilizes CPU pinning, huge pages, and emulator thread pinning.

- hw:cpu_policy

Set the value of this parameter to dedicated, so that a guest uses pinned CPUs. Instances created from a flavor with this parameter set have an effective overcommit ratio of 1:1. The default is shared.

- hw:mem_page_size

Set the value of this parameter to a valid string of a specific value with standard suffix (for example, 4KB, 8MB, or 1GB). Use 1GB to match the hugepagesz boot parameter. The number of huge pages available for the virtual machines is the boot parameter minus the OvsDpdkSocketMemory. The following values are valid:

- small (default) - The smallest page size is used.

- large - Only use large page sizes (2MB or 1GB on x86 architectures).

- any - The compute driver could attempt large pages, but default to small if none are available.

- hw:emulator_threads_policy

Set the value of this parameter to share so that emulator threads are locked to CPUs that you’ve identified in the heat parameter, NovaComputeCpuSharedSet. If an emulator thread is running on a vCPU being used for the poll mode driver (PMD) or real-time processing, you can experience packet loss or missed deadlines.
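The three extra specifications can be applied to a flavor in one command. The following is a minimal sketch that only builds the command string for `openstack flavor set --property`; it does not run it. The helper name and the flavor name `nfv-dpdk` are hypothetical.

```python
import shlex

# Extra specs taken from the values recommended in this section.
NFV_EXTRA_SPECS = {
    "hw:cpu_policy": "dedicated",
    "hw:mem_page_size": "1GB",
    "hw:emulator_threads_policy": "share",
}


def flavor_set_command(flavor: str, extra_specs: dict[str, str]) -> str:
    """Build an 'openstack flavor set' command that applies the extra specs."""
    parts = ["openstack", "flavor", "set"]
    for key, value in extra_specs.items():
        parts += ["--property", f"{key}={value}"]
    parts.append(flavor)
    return shlex.join(parts)


if __name__ == "__main__":
    print(flavor_set_command("nfv-dpdk", NFV_EXTRA_SPECS))  # hypothetical flavor name
```

Review the printed command before you run it against your environment.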

7.5. Two NUMA node example OVS-DPDK deployment

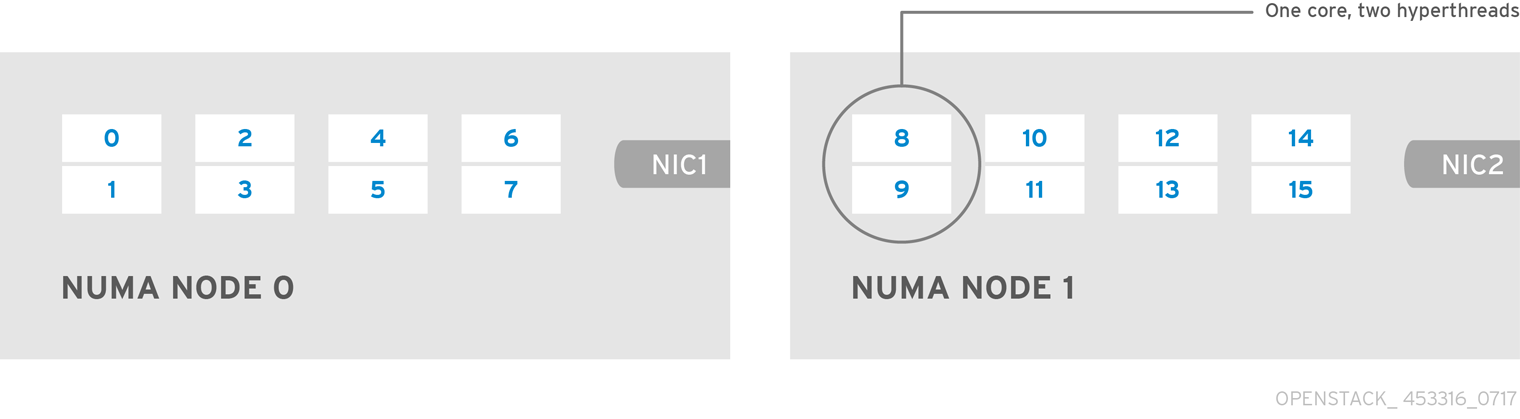

This example Compute node includes the following two NUMA nodes:

- NUMA 0 has cores 0-7. The sibling thread pairs are (0,1), (2,3), (4,5), and (6,7).

- NUMA 1 has cores 8-15. The sibling thread pairs are (8,9), (10,11), (12,13), and (14,15).

- Each NUMA node connects to a physical NIC (NIC1 on NUMA 0 and NIC2 on NUMA 1).

Reserve the first physical core, that is, both sibling threads, on each NUMA node (0,1 and 8,9) for non-datapath DPDK processes (OvsDpdkCoreList).

This example also assumes a 1500 MTU configuration, so the OvsDpdkSocketMemory is the same for all use cases:

OvsDpdkSocketMemory: "1024,1024"

NIC 1 for DPDK, with one physical core for PMD

In this use case, you allocate one physical core on NUMA 0 for PMD. You must also allocate one physical core on NUMA 1, even though there is no DPDK enabled on the NIC for that NUMA node. The remaining cores not reserved for OvsDpdkCoreList are allocated for guest instances. The resulting parameter settings are:

OvsPmdCoreList: "2,3,10,11"

NovaVcpuPinSet: "4,5,6,7,12,13,14,15"

NIC 1 for DPDK, with two physical cores for PMD

In this use case, you allocate two physical cores on NUMA 0 for PMD. You must also allocate one physical core on NUMA 1, even though there is no DPDK enabled on the NIC for that NUMA node. The remaining cores not reserved for OvsDpdkCoreList are allocated for guest instances. The resulting parameter settings are:

OvsPmdCoreList: "2,3,4,5,10,11"

NovaVcpuPinSet: "6,7,12,13,14,15"

NIC 2 for DPDK, with one physical core for PMD

In this use case, you allocate one physical core on NUMA 1 for PMD. You must also allocate one physical core on NUMA 0, even though there is no DPDK enabled on the NIC for that NUMA node. The remaining cores (not reserved for OvsDpdkCoreList) are allocated for guest instances. The resulting parameter settings are:

OvsPmdCoreList: "2,3,10,11"

NovaVcpuPinSet: "4,5,6,7,12,13,14,15"

NIC 2 for DPDK, with two physical cores for PMD

In this use case, you allocate two physical cores on NUMA 1 for PMD. You must also allocate one physical core on NUMA 0, even though there is no DPDK enabled on the NIC for that NUMA node. The remaining cores (not reserved for OvsDpdkCoreList) are allocated for guest instances. The resulting parameter settings are:

OvsPmdCoreList: "2,3,10,11,12,13"

NovaVcpuPinSet: "4,5,6,7,14,15"

NIC 1 and NIC2 for DPDK, with two physical cores for PMD

In this use case, you allocate two physical cores on each NUMA node for PMD. The remaining cores (not reserved for OvsDpdkCoreList) are allocated for guest instances. The resulting parameter settings are:

OvsPmdCoreList: "2,3,4,5,10,11,12,13"

NovaVcpuPinSet: "6,7,14,15"
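The five use cases above follow one pattern: the first physical core of each NUMA node goes to OvsDpdkCoreList, the next cores go to PMD, and the rest go to guest instances. The following is a minimal sketch of that pattern. The function name is hypothetical, and choosing the lowest-numbered free cores for PMD is an assumption that happens to match the examples, not a requirement stated in this guide.

```python
def partition(numa_pairs, pmd_cores_per_node):
    """Split sibling pairs into the three core lists used in this example.

    numa_pairs: one list of sibling-thread tuples per NUMA node.
    pmd_cores_per_node: physical cores to give to PMD on each node
    (at least 1 per node, because PMD threads are reserved on every node).
    """
    dpdk, pmd, vcpu = [], [], []
    for pairs, pmd_count in zip(numa_pairs, pmd_cores_per_node):
        dpdk.extend(pairs[0])                   # first physical core of the node
        for pair in pairs[1:1 + pmd_count]:     # next cores go to PMD
            pmd.extend(pair)
        for pair in pairs[1 + pmd_count:]:      # remaining cores go to guests
            vcpu.extend(pair)

    def fmt(cores):
        return ",".join(str(core) for core in sorted(cores))

    return {
        "OvsDpdkCoreList": fmt(dpdk),
        "OvsPmdCoreList": fmt(pmd),
        "NovaVcpuPinSet": fmt(vcpu),
    }


# Topology of the example Compute node in this section.
EXAMPLE_NODE = [
    [(0, 1), (2, 3), (4, 5), (6, 7)],
    [(8, 9), (10, 11), (12, 13), (14, 15)],
]
```

For instance, `partition(EXAMPLE_NODE, [2, 1])` corresponds to NIC 1 for DPDK with two physical cores for PMD.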

7.6. Topology of an NFV OVS-DPDK deployment

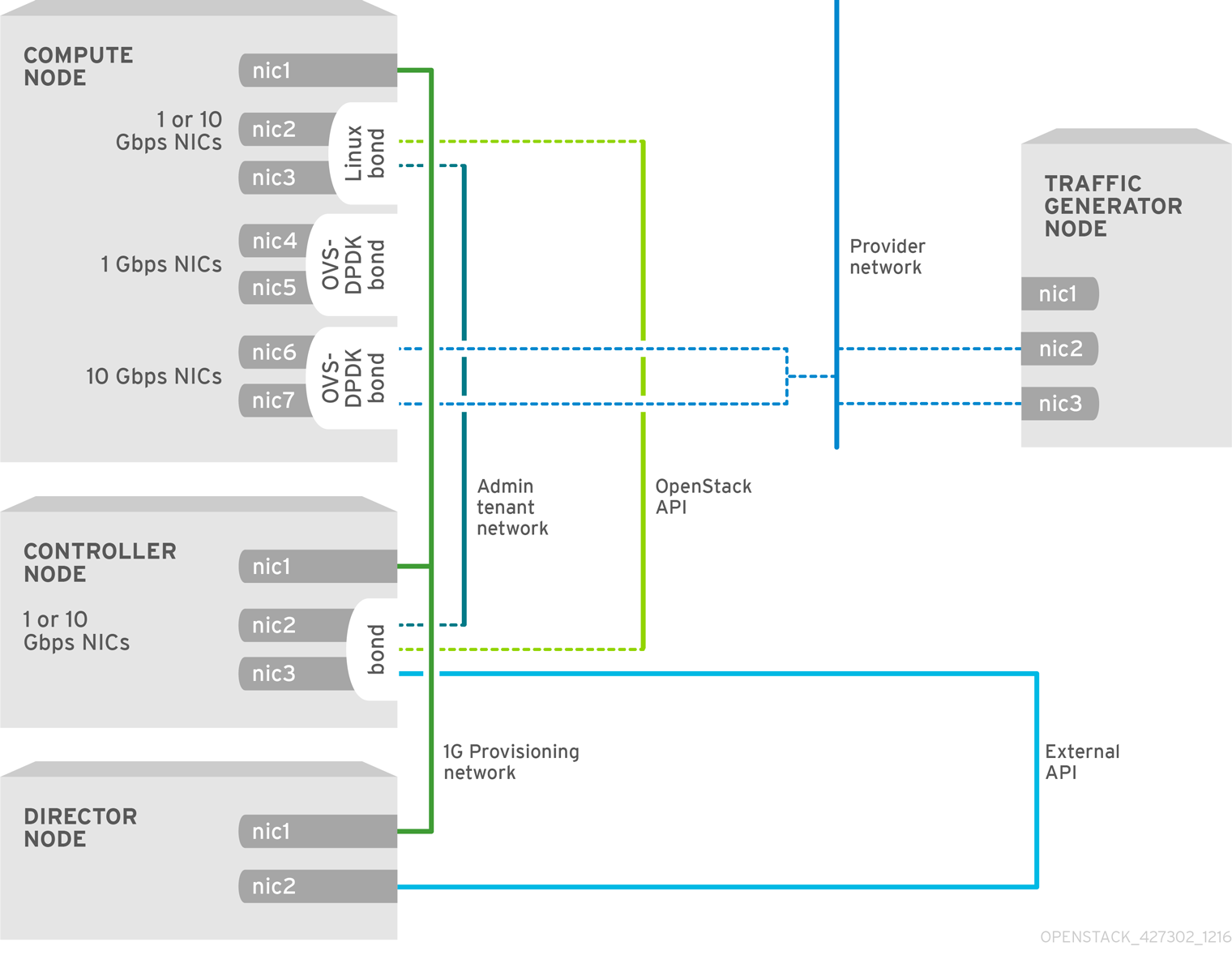

This example deployment shows an Open vSwitch with Data Plane Development Kit (OVS-DPDK) configuration and consists of two virtual network functions (VNFs) with two interfaces each: the management interface represented by mgt, and the data plane interface. In the OVS-DPDK deployment, the VNFs run with inbuilt DPDK that supports the physical interface. OVS-DPDK manages the bonding at the vSwitch level. In an OVS-DPDK deployment, it is recommended that you do not mix kernel and OVS-DPDK NICs as this can lead to performance degradation. To separate the management (mgt) network connected to the Base provider network for the virtual machine, ensure you have additional NICs. The Compute node consists of two regular NICs for the Red Hat OpenStack Platform (RHOSP) API management that can be reused by the Ceph API but cannot be shared with any RHOSP tenant.

NFV OVS-DPDK topology

The following image shows the topology for OVS-DPDK for the NFV use case. It consists of Compute and Controller nodes with 1 or 10 Gbps NICs, and the director node.