此内容没有您所选择的语言版本。

Chapter 1. Overview

Red Hat Ceph Storage is a distributed data object store designed to provide excellent performance, reliability and scalability. Distributed object stores are the future of storage, because they accommodate unstructured data, and because clients can use modern object interfaces and legacy interfaces simultaneously. For example:

- APIs in many languages (C/C++, Java, Python)

- RESTful interfaces (S3/Swift)

- Block device interface

- Filesystem interface

The power of Red Hat Ceph Storage can transform your organization’s IT infrastructure and your ability to manage vast amounts of data, especially for cloud computing platforms like Red Hat OpenStack Platform. Red Hat Ceph Storage delivers extraordinary scalability–thousands of clients accessing petabytes to exabytes of data and beyond.

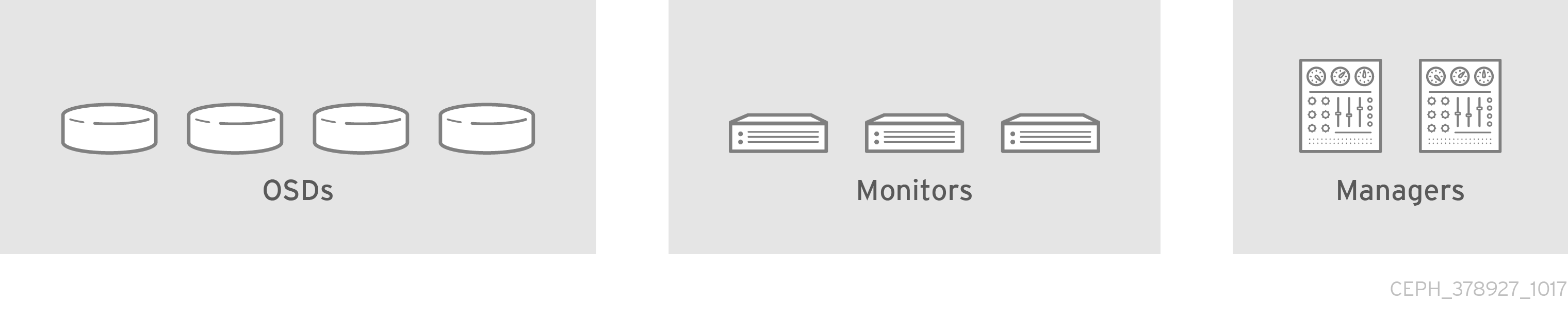

At the heart of every Ceph deployment is the 'Ceph Storage Cluster.' It consists of three types of daemons:

- Ceph OSD Daemon: Ceph OSDs store data on behalf of Ceph clients. Additionally, Ceph OSDs utilize the CPU, memory and networking of Ceph nodes to perform data replication, erasure coding, rebalancing, recovery, monitoring and reporting functions.

- Ceph Monitor: A Ceph monitor maintains a master copy of the Ceph Storage cluster map with the current state of the storage cluster. Monitors require high consistency, and use Paxos to ensure agreement about the state of the Ceph Storage cluster.

- Ceph Manager: New in RHCS 3, a Ceph Manager maintains detailed information about placement groups, process metadata and host metadata in lieu of the Ceph Monitor—significantly improving performance at scale. The Ceph Manager handles execution of many of the read-only Ceph CLI queries, such as placement group statistics. The Ceph Manager also provides the RESTful monitoring APIs.

Ceph client interfaces read data from and write data to the Ceph storage cluster. Clients need the following data to communicate with the Ceph storage cluster:

-

The Ceph configuration file, or the cluster name (usually

ceph) and the monitor address - The pool name

- The user name and the path to the secret key.

Ceph clients maintain object IDs and the pool name(s) where they store the objects. However, they do not need to maintain an object-to-OSD index or communicate with a centralized object index to look up object locations. To store and retrieve data, Ceph clients access a Ceph monitor and retrieve the latest copy of the storage cluster map. Then, Ceph clients provide an object name and pool name to librados, which computes an object’s placement group and the primary OSD for storing and retrieving data using the CRUSH (Controlled Replication Under Scalable Hashing) algorithm. The Ceph client connects to the primary OSD where it may perform read and write operations. There is no intermediary server, broker or bus between the client and the OSD.

When an OSD stores data, it receives data from a Ceph client—whether the client is a Ceph Block Device, a Ceph Object Gateway, a Ceph Filesystem or another interface—and it stores the data as an object.

An object ID is unique across the entire cluster, not just an OSD’s storage media.

Ceph OSDs store all data as objects in a flat namespace. There are no hierarchies of directories. An object has a cluster-wide unique identifier, binary data, and metadata consisting of a set of name/value pairs.

Ceph clients define the semantics for the client’s data format. For example, the Ceph block device maps a block device image to a series of objects stored across the cluster.

Objects consisting of a unique ID, data, and name/value paired metadata can represent both structured and unstructured data, as well as legacy and leading edge data storage interfaces.