
Chapter 14. Benchmarking And Tweaking

14.1. Find The Best Solver Configuration

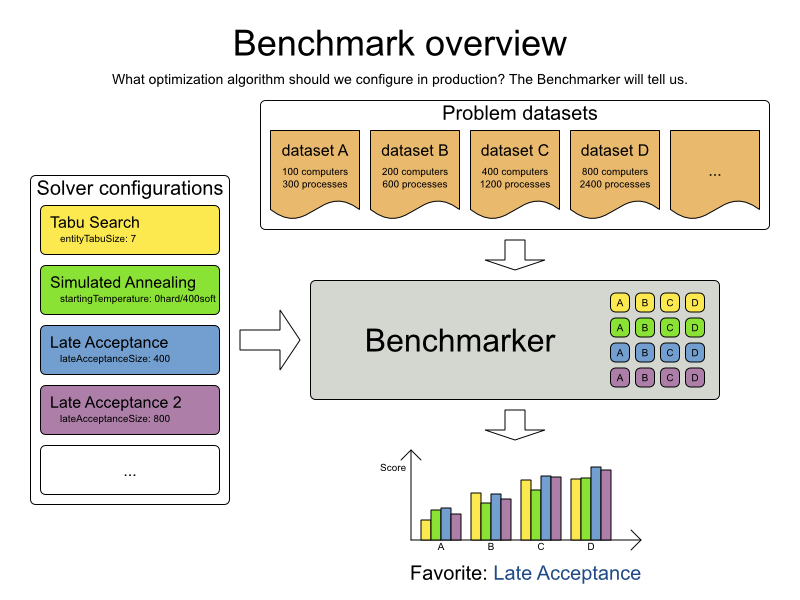

Planner supports several optimization algorithms, so you’re probably wondering which one is best. Although some optimization algorithms generally perform better than others, the answer depends on your problem domain. Most solver phases have parameters that can be tweaked. Those parameters can influence the results considerably, even though most solver phases work well out of the box.

Luckily, Planner includes a benchmarker, which allows you to play out different solver phases with different settings against each other in development, so you can use the best configuration for your planning problem in production.

14.2. Benchmark Configuration

14.2.1. Add Dependency On optaplanner-benchmark

The benchmarker is in a separate artifact called optaplanner-benchmark.

If you use Maven, add a dependency in your pom.xml file:

<dependency>
  <groupId>org.optaplanner</groupId>
  <artifactId>optaplanner-benchmark</artifactId>
</dependency>

This is similar for Gradle, Ivy and Buildr. The version must be exactly the same as the optaplanner-core version used (which is automatically the case if you import optaplanner-bom).

If you use Ant, you’ve probably already copied the required JARs from the downloaded ZIP’s binaries directory.

14.2.2. Build And Run A PlannerBenchmark

Build a PlannerBenchmark instance with a PlannerBenchmarkFactory. Configure it with a benchmark configuration XML file, provided as a classpath resource:

PlannerBenchmarkFactory plannerBenchmarkFactory = PlannerBenchmarkFactory.createFromXmlResource(
        "org/optaplanner/examples/nqueens/benchmark/nqueensBenchmarkConfig.xml");
PlannerBenchmark plannerBenchmark = plannerBenchmarkFactory.buildPlannerBenchmark();
plannerBenchmark.benchmark();

A benchmark configuration file looks like this:

This PlannerBenchmark will try 3 configurations (Tabu Search, Simulated Annealing and Late Acceptance) on 2 data sets (32queens and 64queens), so it will run 6 solvers.

Every <solverBenchmark> element contains a solver configuration and one or more <inputSolutionFile> elements. It will run the solver configuration on each of those unsolved solution files. The element name is optional, because it is generated if absent. The inputSolutionFile is read by a SolutionFileIO (relative to the working directory).

Use a forward slash (/) as the file separator (for example in the element <inputSolutionFile>). That will work on any platform (including Windows).

Do not use backslash (\) as the file separator: that breaks portability because it does not work on Linux and Mac.
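The portability claim can be checked with the JDK alone. The following is a minimal sketch, not part of Planner: the class name PortablePathSketch is made up for illustration, and the path is the example dataset path used in this chapter.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

class PortablePathSketch {

    // Parses a path written with forward slashes, as recommended for <inputSolutionFile>.
    static Path parse(String forwardSlashPath) {
        return Paths.get(forwardSlashPath);
    }

    public static void main(String[] args) {
        Path input = parse("data/nqueens/unsolved/32queens.xml");
        // The JDK accepts "/" as a separator on Linux, macOS and Windows,
        // so the same 4 name elements are found on every platform.
        System.out.println(input.getNameCount() + " elements, file name " + input.getFileName());
    }
}
```

A path written with backslashes would only be split into directories on Windows. On Linux and macOS the whole string would be treated as a single file name.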

The benchmark report will be written in the directory specified by the <benchmarkDirectory> element (relative to the working directory).

It’s recommended that the benchmarkDirectory is a directory that is ignored by source control and not cleaned by your build system. This way the generated files do not bloat your source control and they aren’t lost when doing a clean build. Usually that directory is called local.

If an Exception or Error occurs in a single benchmark, the entire Benchmarker will not fail-fast (unlike everything else in Planner). Instead, the Benchmarker will continue to run all other benchmarks, write the benchmark report and then fail (if there is at least 1 failing single benchmark). The failing benchmarks will be clearly marked as such in the benchmark report.
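The run-everything-then-fail behavior described above can be pictured with a small, library-free sketch. It is not the Benchmarker's implementation: the class FailLateRunnerSketch, the method runAll and the benchmark names are hypothetical and only illustrate the control flow.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class FailLateRunnerSketch {

    // Runs every task, even after an earlier one failed, and returns the names of the failed tasks.
    static List<String> runAll(Map<String, Runnable> singleBenchmarks) {
        List<String> failedNames = new ArrayList<>();
        for (Map.Entry<String, Runnable> entry : singleBenchmarks.entrySet()) {
            try {
                entry.getValue().run();
            } catch (Throwable t) { // Exception or Error: remember it and continue
                failedNames.add(entry.getKey());
            }
        }
        return failedNames;
    }

    public static void main(String[] args) {
        Map<String, Runnable> singleBenchmarks = new LinkedHashMap<>();
        singleBenchmarks.put("first", () -> { });
        singleBenchmarks.put("broken", () -> { throw new IllegalStateException("boom"); });
        singleBenchmarks.put("last", () -> { });

        List<String> failed = runAll(singleBenchmarks);
        // A real runner would write its report here and only then signal the failure.
        System.out.println("Failed single benchmarks: " + failed);
    }
}
```

The point of the design is that one broken configuration does not discard hours of results from the other configurations.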

14.2.2.1. Inherited solver benchmark

To lower verbosity, the common parts of multiple <solverBenchmark> elements are extracted to the <inheritedSolverBenchmark> element. Every property can still be overwritten per <solverBenchmark> element. Note that inherited solver phases such as <constructionHeuristic> or <localSearch> are not overwritten but instead are added to the tail of the solver phases list.

14.2.3.1. SolutionFileIO Interface

The benchmarker needs to be able to read the input files to load a Solution. Also, it might need to write the best Solution of each benchmark to an output file. For that it uses a class that implements the SolutionFileIO interface:

The SolutionFileIO interface is in the optaplanner-persistence-common JAR (which is a dependency of the optaplanner-benchmark JAR).

By default, a benchmarker uses an XStreamSolutionFileIO instance to read and write solutions.

You must tell the benchmarker about your Solution class, which is annotated with XStream annotations:

<problemBenchmarks>
  <xStreamAnnotatedClass>org.optaplanner.examples.nqueens.domain.NQueens</xStreamAnnotatedClass>
  <inputSolutionFile>data/nqueens/unsolved/32queens.xml</inputSolutionFile>
  ...
</problemBenchmarks>

Those input files need to have been written with an XStreamSolutionFileIO instance, not just any XStream instance, because the XStreamSolutionFileIO uses a customized XStream instance.

XStream (and XML in general) is a very verbose format. Reading or writing very large datasets in this format can cause an OutOfMemoryError and performance degradation.

14.2.3.3. Custom SolutionFileIO

Alternatively, write your own SolutionFileIO implementation and configure it with the solutionFileIOClass element:

<problemBenchmarks>
  <solutionFileIOClass>org.optaplanner.examples.machinereassignment.persistence.MachineReassignmentFileIO</solutionFileIOClass>
  <inputSolutionFile>data/machinereassignment/import/model_a1_1.txt</inputSolutionFile>
  ...
</problemBenchmarks>

It’s recommended that output files can be read as input files, which also implies that getInputFileExtension() and getOutputFileExtension() return the same value.

A SolutionFileIO implementation must be thread-safe.

The benchmark configuration currently expects an <inputSolutionFile> element for each dataset. There are 2 ways to deal with this if your dataset is in a database or another type of repository:

- Extract the datasets from the database and serialize them to a local file (for example as XML with XStreamSolutionFileIO). Then use those files as <inputSolutionFile> elements.

- For each dataset, create a text file that holds the unique ID of the dataset. Write a custom SolutionFileIO that reads that identifier, connects to the database and extracts the problem identified by that ID. Configure those text files as <inputSolutionFile> elements.

Local files are always faster and don’t require a network connection.

14.2.4. Warming Up The HotSpot Compiler

Without a warm up, the results of the first (or first few) benchmarks are not reliable, because they will have lost CPU time on HotSpot JIT compilation (and possibly DRL compilation too).

To avoid that distortion, the benchmarker can run some of the benchmarks for a specified amount of time, before running the real benchmarks. Generally, a warm up of 30 seconds suffices:

<plannerBenchmark>
  ...
  <warmUpSecondsSpentLimit>30</warmUpSecondsSpentLimit>
  ...
</plannerBenchmark>

The warm up time budget does not include the time it takes to load the datasets. With large datasets, this can cause the warm up to run considerably longer than specified in the configuration.

14.2.5. Benchmark Blueprint: A Predefined Configuration

To quickly configure and run a benchmark for typical solver configs, use a solverBenchmarkBluePrint instead of solverBenchmarks:

The following SolverBenchmarkBluePrintTypes are supported:

- EVERY_CONSTRUCTION_HEURISTIC_TYPE: Run every Construction Heuristic type (First Fit, First Fit Decreasing, Cheapest Insertion, …).

- EVERY_LOCAL_SEARCH_TYPE: Run every Local Search type (Tabu Search, Late Acceptance, …) with the default Construction Heuristic.

- EVERY_CONSTRUCTION_HEURISTIC_TYPE_WITH_EVERY_LOCAL_SEARCH_TYPE: Run every Construction Heuristic type with every Local Search type.

14.2.6. Write The Output Solution Of Benchmark Runs

The best solution of each benchmark run can be written in the benchmarkDirectory. By default, this is disabled, because the files are rarely used and considered bloat. Also, on large datasets, writing the best solution of each single benchmark can take quite some time and memory (causing an OutOfMemoryError), especially in a verbose format like XStream XML.

To write those solutions in the benchmarkDirectory, enable writeOutputSolutionEnabled:

<problemBenchmarks>
  ...
  <writeOutputSolutionEnabled>true</writeOutputSolutionEnabled>
  ...
</problemBenchmarks>

14.2.7. Benchmark Logging

Benchmark logging is configured like the Solver logging.

To separate the log messages of each single benchmark run into a separate file, use the MDC with key singleBenchmark.name in a sifting appender. For example with Logback in logback.xml:

14.3. Benchmark Report

14.3.1. HTML Report

After running a benchmark, an HTML report will be written in the benchmarkDirectory with the index.html filename. Open it in your browser. It has a nice overview of your benchmark including:

- Summary statistics: graphs and tables

- Problem statistics per inputSolutionFile: graphs and CSV

- Each solver configuration (ranked): Handy to copy and paste

- Benchmark information: settings, hardware, …

Graphs are generated by the excellent JFreeChart library.

The HTML report will use your default locale to format numbers. If you share the benchmark report with people from another country, consider overwriting the locale accordingly:

14.3.2. Ranking The Solvers

The benchmark report automatically ranks the solvers. The Solver with rank 0 is called the favorite Solver: it performs best overall, but it might not be the best on every problem. It’s recommended to use that favorite Solver in production.

However, there are different ways of ranking the solvers. Configure it like this:

The following solverRankingTypes are supported:

- TOTAL_SCORE (default): Maximize the overall score, so minimize the overall cost if all solutions would be executed.

- WORST_SCORE: Minimize the worst case scenario.

- TOTAL_RANKING: Maximize the overall ranking. Use this if your datasets differ greatly in size or difficulty, producing a difference in Score magnitude.

Solvers with at least one failed single benchmark do not get a ranking. Solvers with not fully initialized solutions are ranked worse.
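To see why the ranking type matters, the following sketch ranks three made-up solvers on three made-up datasets using plain int scores (higher is better). It is a simplified illustration under stated assumptions, not Planner's ranking code: the class SolverRankingSketch is hypothetical, and TOTAL_RANKING is approximated here by counting how often a solver beats another solver on the same dataset.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.IntStream;

class SolverRankingSketch {

    // Counts the (dataset, other solver) pairs in which this solver scored strictly better.
    static int winCount(String solver, Map<String, int[]> scoresPerSolver) {
        int[] own = scoresPerSolver.get(solver);
        int wins = 0;
        for (Map.Entry<String, int[]> other : scoresPerSolver.entrySet()) {
            if (other.getKey().equals(solver)) {
                continue;
            }
            for (int i = 0; i < own.length; i++) {
                if (own[i] > other.getValue()[i]) {
                    wins++;
                }
            }
        }
        return wins;
    }

    // Returns the solver with the highest weight for the given ranking type.
    static String favorite(Map<String, int[]> scoresPerSolver, String rankingType) {
        String favorite = null;
        int bestWeight = Integer.MIN_VALUE;
        for (String solver : scoresPerSolver.keySet()) {
            int[] scores = scoresPerSolver.get(solver);
            int weight;
            switch (rankingType) {
                case "TOTAL_SCORE": weight = IntStream.of(scores).sum(); break;
                case "WORST_SCORE": weight = IntStream.of(scores).min().getAsInt(); break;
                case "TOTAL_RANKING": weight = winCount(solver, scoresPerSolver); break;
                default: throw new IllegalArgumentException("Unknown ranking type: " + rankingType);
            }
            if (favorite == null || weight > bestWeight) {
                favorite = solver;
                bestWeight = weight;
            }
        }
        return favorite;
    }

    public static void main(String[] args) {
        // Made-up scores for a small, a medium and a large dataset.
        Map<String, int[]> scores = new LinkedHashMap<>();
        scores.put("A", new int[] {-1, -2, -100});
        scores.put("B", new int[] {-5, -6, -50});
        scores.put("C", new int[] {-30, -30, -40});
        for (String type : new String[] {"TOTAL_SCORE", "WORST_SCORE", "TOTAL_RANKING"}) {
            System.out.println(type + " favorite: " + favorite(scores, type));
        }
    }
}
```

With this data each ranking type picks a different favorite: B has the best total, C has the best worst case, and A wins most head-to-head comparisons because the large dataset dominates the totals.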

You can also use a custom ranking, by implementing a Comparator:

<benchmarkReport>
  <solverRankingComparatorClass>...TotalScoreSolverRankingComparator</solverRankingComparatorClass>
</benchmarkReport>

Or by implementing a SolverRankingWeightFactory:

<benchmarkReport>
  <solverRankingWeightFactoryClass>...TotalRankSolverRankingWeightFactory</solverRankingWeightFactoryClass>
</benchmarkReport>

14.4. Summary Statistics

14.4.1. Best Score Summary (Graph And Table)

Shows the best score per inputSolutionFile for each solver configuration.

Useful for visualizing the best solver configuration.

Figure 14.1. Best Score Summary Statistic

14.4.2. Best Score Scalability Summary (Graph)

Shows the best score per problem scale for each solver configuration.

Useful for visualizing the scalability of each solver configuration.

The problem scale will report 0 if any @ValueRangeProvider method signature returns ValueRange (instead of CountableValueRange or Collection). See ValueRangeFactory for the difference.

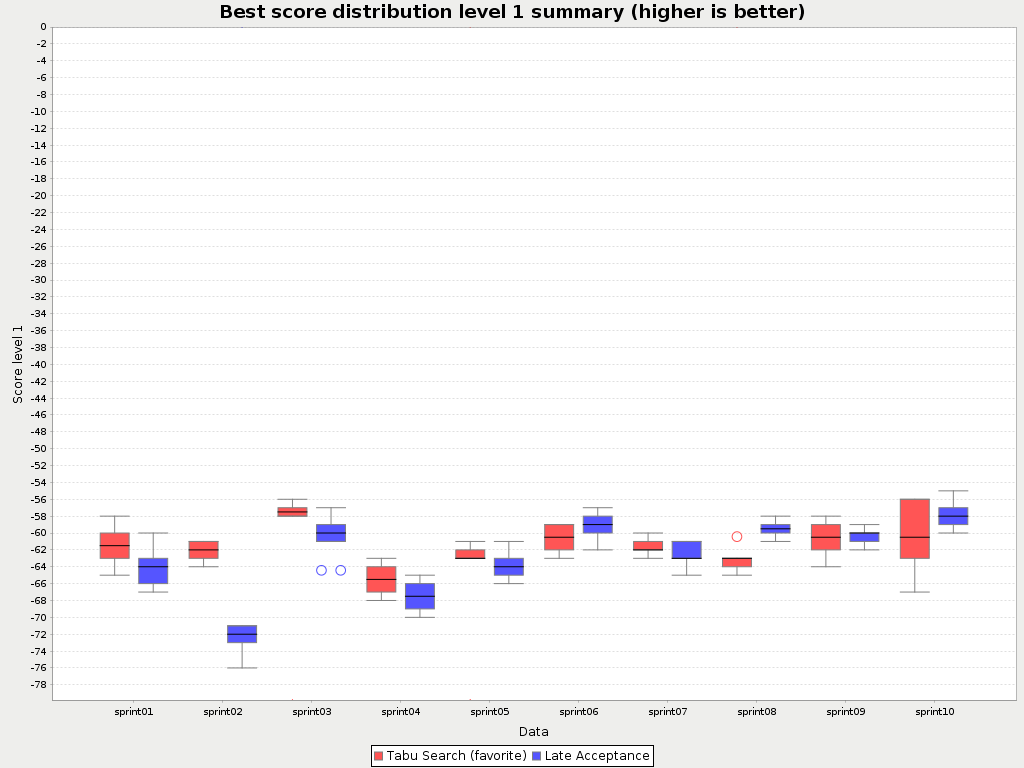

14.4.3. Best Score Distribution Summary (Graph)

Shows the best score distribution per inputSolutionFile for each solver configuration.

Useful for visualizing the reliability of each solver configuration.

Figure 14.2. Best Score Distribution Summary Statistic

Enable statistical benchmarking to use this summary.

Shows the winning score difference per inputSolutionFile for each solver configuration. The winning score difference is the score difference with the score of the winning solver configuration for that particular inputSolutionFile.

Useful for zooming in on the results of the best score summary.
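As a rough illustration of that definition, the sketch below computes the winning score difference for one inputSolutionFile with plain int scores. The class WinningScoreDifferenceSketch and the scores are made up, and the sign convention (the winner gets 0, every other solver a negative value) is an assumption of this sketch.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

class WinningScoreDifferenceSketch {

    // For one inputSolutionFile: each solver's score minus the best score among all solvers.
    static Map<String, Integer> winningScoreDifference(Map<String, Integer> scorePerSolver) {
        int winningScore = Collections.max(scorePerSolver.values());
        Map<String, Integer> difference = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> entry : scorePerSolver.entrySet()) {
            difference.put(entry.getKey(), entry.getValue() - winningScore);
        }
        return difference;
    }

    public static void main(String[] args) {
        Map<String, Integer> scores = new LinkedHashMap<>();
        scores.put("solverA", -10);
        scores.put("solverB", -4);
        scores.put("solverC", -7);
        System.out.println(winningScoreDifference(scores));
    }
}
```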

Shows the return on investment (ROI) per inputSolutionFile for each solver configuration if you’d upgrade from the worst solver configuration for that particular inputSolutionFile.

Useful for visualizing the return on investment (ROI) to decision makers.

Shows the score calculation speed: the average calculation count per second per problem scale for each solver configuration.

Useful for comparing different score calculators and/or score rule implementations (presuming that the solver configurations do not differ otherwise). Also useful to measure the scalability cost of an extra constraint.

14.4.7. Time Spent Summary (Graph And Table)

Shows the time spent per inputSolutionFile for each solver configuration. This is pointless if it’s benchmarking against a fixed time limit.

Useful for visualizing the performance of construction heuristics (presuming that no other solver phases are configured).

14.4.8. Time Spent Scalability Summary (Graph)

Shows the time spent per problem scale for each solver configuration. This is pointless if it’s benchmarking against a fixed time limit.

Useful for extrapolating the scalability of construction heuristics (presuming that no other solver phases are configured).

14.4.9. Best Score Per Time Spent Summary (Graph)

Shows the best score per time spent for each solver configuration. This is pointless if it’s benchmarking against a fixed time limit.

Useful for visualizing trade-off between the best score versus the time spent for construction heuristics (presuming that no other solver phases are configured).

14.5. Statistic Per Dataset (Graph And CSV)

14.5.1. Enable A Problem Statistic

The benchmarker supports outputting problem statistics as graphs and CSV (comma separated values) files to the benchmarkDirectory. To configure one, add a problemStatisticType line:

Multiple problemStatisticType elements are allowed.

These statistics per dataset can slow down the solver noticeably, which affects the benchmark results. That’s why they are optional and not enabled by default.

The non-optional summary statistics cannot slow down the solver noticeably.

The following types are supported:

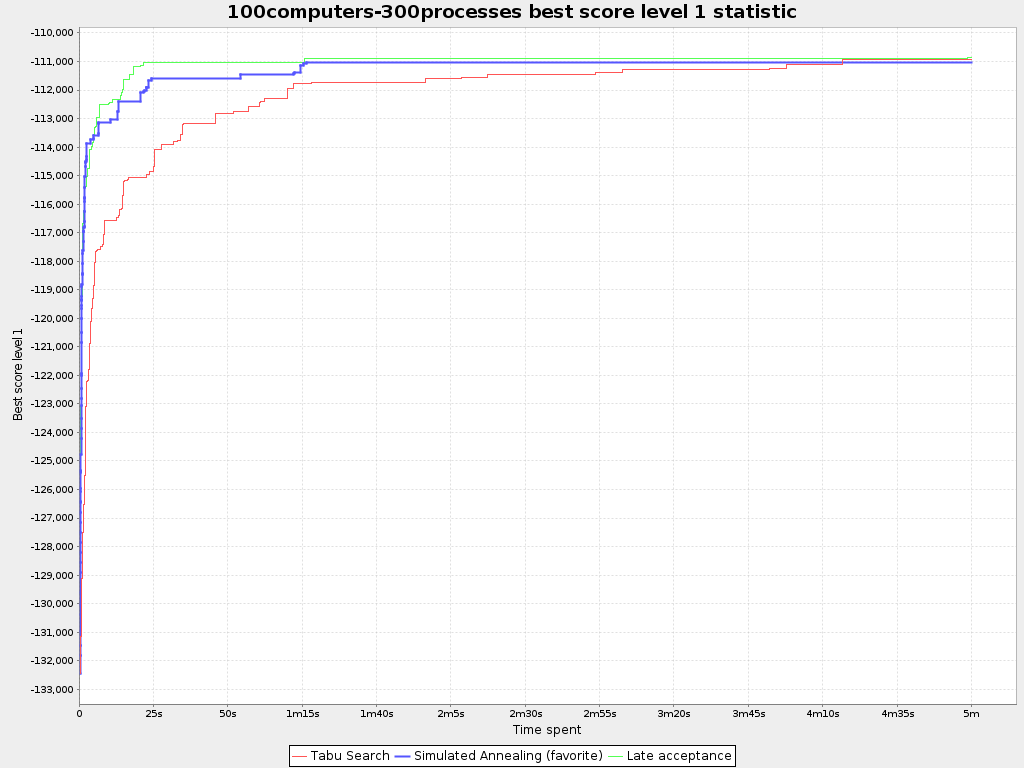

14.5.2. Best Score Over Time Statistic (Graph And CSV)

To see how the best score evolves over time, add:

<problemBenchmarks>
  ...
  <problemStatisticType>BEST_SCORE</problemStatisticType>
</problemBenchmarks>

Figure 14.3. Best Score Over Time Statistic

A time gradient based algorithm (such as Simulated Annealing) will have a different statistic if it’s run with a different time limit configuration. That’s because this Simulated Annealing implementation automatically determines its velocity based on the amount of time that can be spent. On the other hand, for Tabu Search and Late Acceptance, what you see is what you’d get.

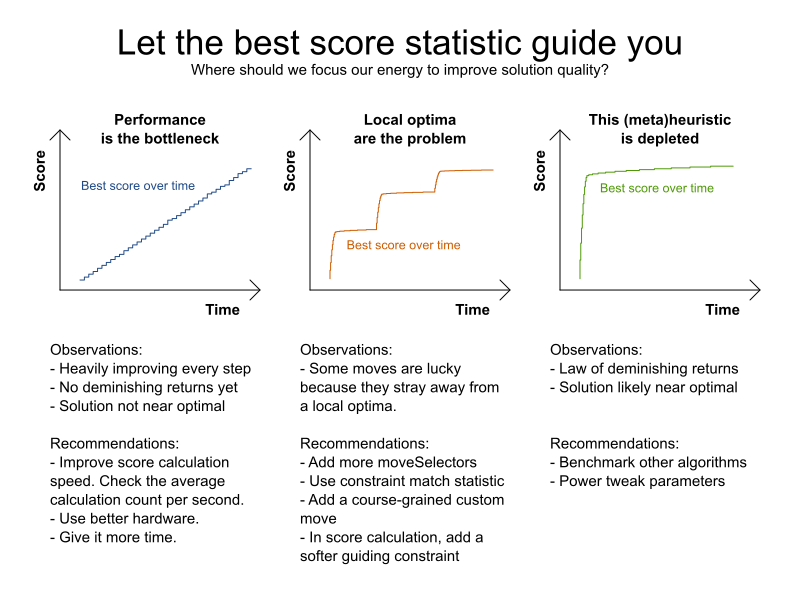

The best score over time statistic is very useful to detect abnormalities, such as a potential score trap which gets the solver temporarily stuck in a local optimum.

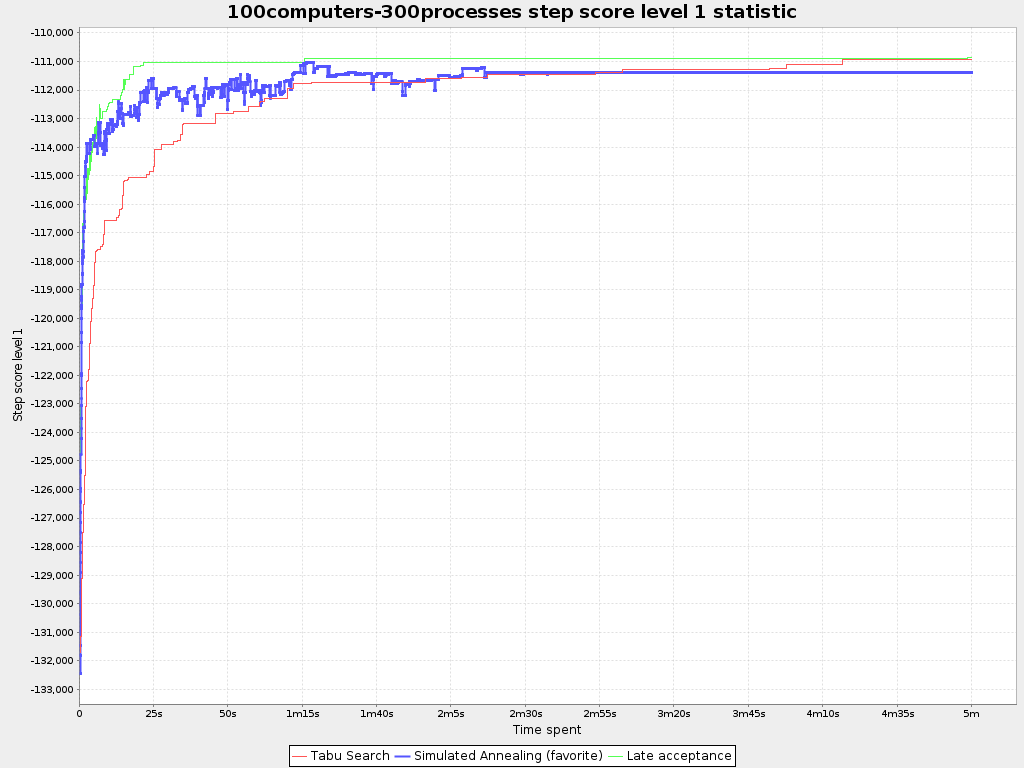

14.5.3. Step Score Over Time Statistic (Graph And CSV)

To see how the step score evolves over time, add:

<problemBenchmarks>
  ...
  <problemStatisticType>STEP_SCORE</problemStatisticType>
</problemBenchmarks>

Figure 14.4. Step Score Over Time Statistic

Compare the step score statistic with the best score statistic (especially on parts for which the best score flatlines). If it hits a local optimum, the solver should take deteriorating steps to escape it. But it shouldn’t deteriorate too much either.

The step score statistic has been seen to slow down the solver noticeably due to GC stress, especially for fast stepping algorithms (such as Simulated Annealing and Late Acceptance).

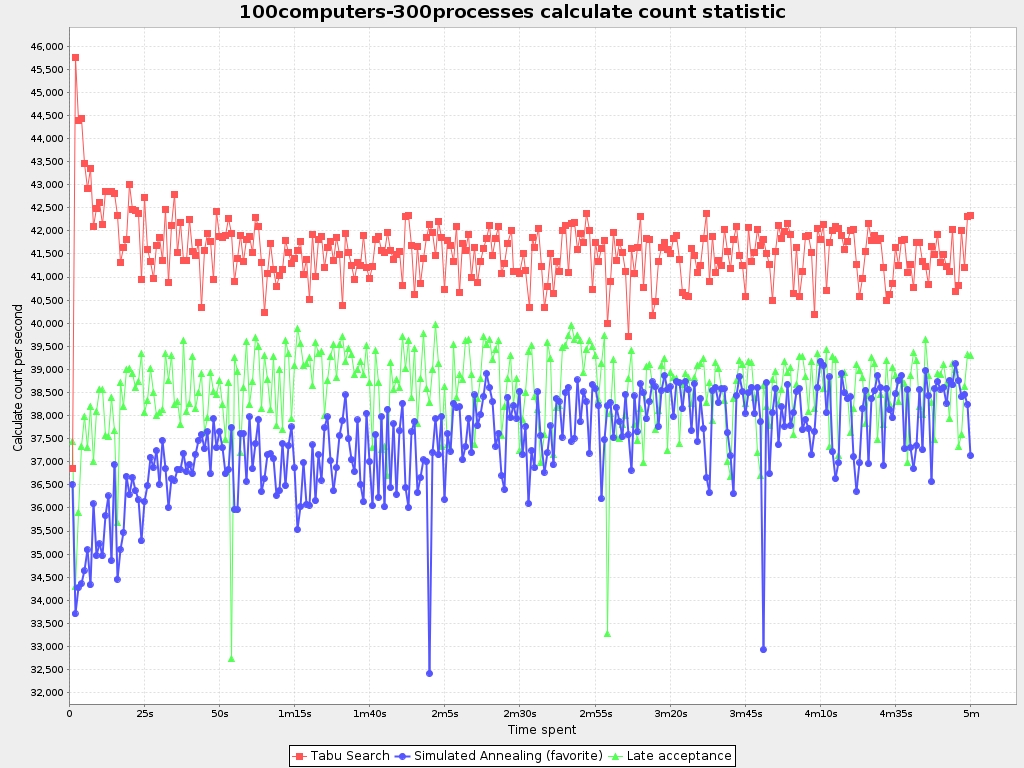

To see how fast the scores are calculated, add:

<problemBenchmarks>
  ...
  <problemStatisticType>CALCULATE_COUNT_PER_SECOND</problemStatisticType>
</problemBenchmarks>

Figure 14.5. Calculate Count Per Second Statistic

The initial high calculate count is typical during solution initialization: it’s far easier to calculate the score of a solution if only a handful of planning entities have been initialized than when all the planning entities are initialized.

After those few seconds of initialization, the calculate count is relatively stable, apart from an occasional stop-the-world garbage collector disruption.

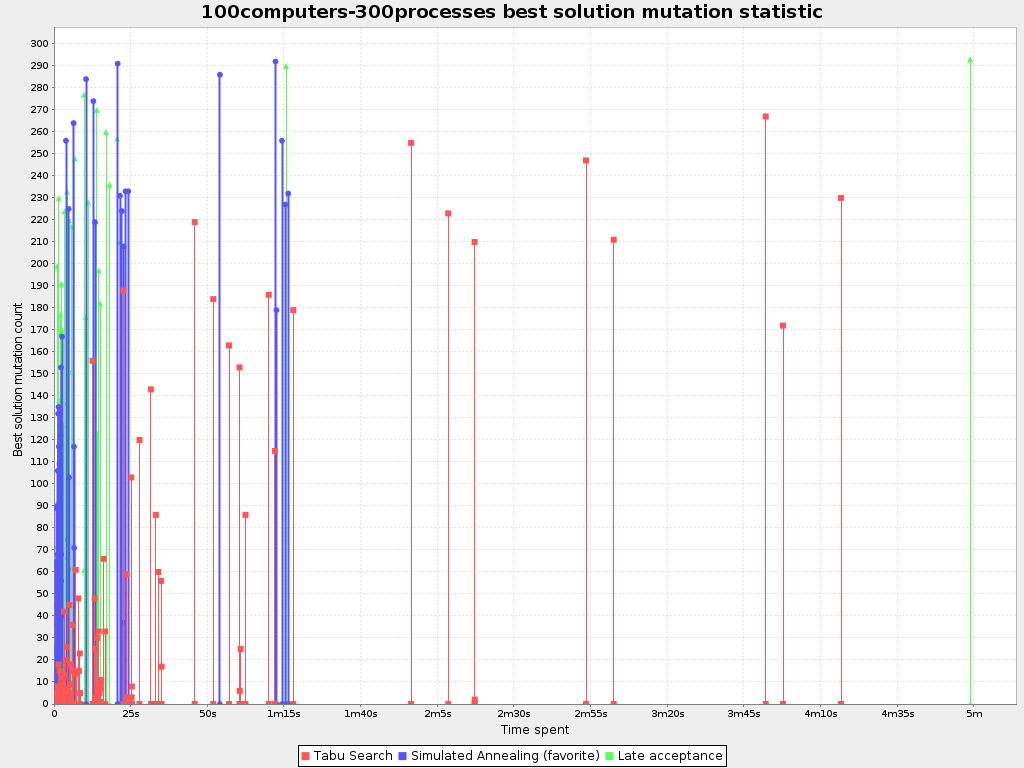

To see how much each new best solution differs from the previous best solution, by counting the number of planning variables which have a different value (not including the variables that have changed multiple times but still end up with the same value), add:

<problemBenchmarks>
  ...
  <problemStatisticType>BEST_SOLUTION_MUTATION</problemStatisticType>
</problemBenchmarks>

Figure 14.6. Best Solution Mutation Over Time Statistic

Use Tabu Search—an algorithm that behaves like a human—to get an estimation on how difficult it would be for a human to improve the previous best solution to that new best solution.
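The mutation count itself is simple to picture. The following sketch assumes, purely for illustration, that a solution is an array holding one planning variable value per entity (for example the row of each queen). The class BestSolutionMutationSketch is hypothetical and is not how Planner stores solutions.

```java
class BestSolutionMutationSketch {

    // Counts the planning variables whose value differs between two best solutions.
    // Only the two end states are compared, so a variable that changed several times
    // in between but ended up with its old value is not counted.
    static int mutationCount(int[] previousBest, int[] newBest) {
        if (previousBest.length != newBest.length) {
            throw new IllegalArgumentException("Both solutions must have the same number of variables.");
        }
        int count = 0;
        for (int i = 0; i < previousBest.length; i++) {
            if (previousBest[i] != newBest[i]) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        int[] previousBest = {0, 2, 1, 3};
        int[] newBest = {0, 3, 1, 2};
        System.out.println("Mutation count: " + mutationCount(previousBest, newBest));
    }
}
```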

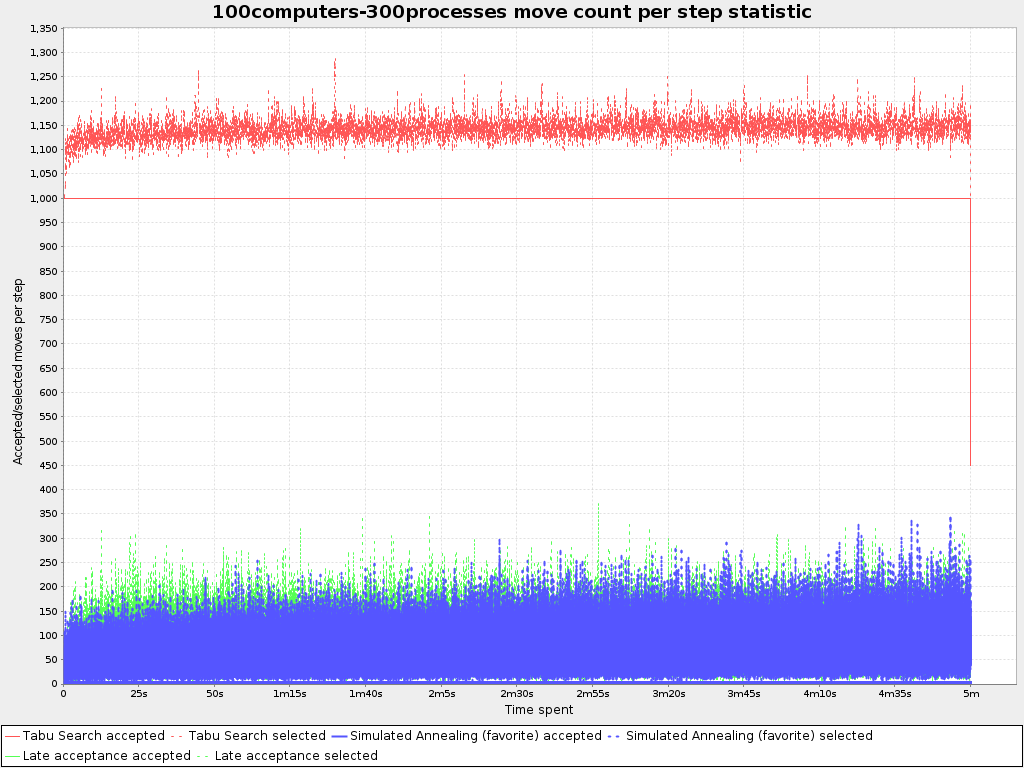

14.5.6. Move Count Per Step Statistic (Graph And CSV)

To see how the selected and accepted move count per step evolves over time, add:

<problemBenchmarks>
  ...
  <problemStatisticType>MOVE_COUNT_PER_STEP</problemStatisticType>
</problemBenchmarks>

Figure 14.7. Move Count Per Step Statistic

This statistic has been seen to slow down the solver noticeably due to GC stress, especially for fast stepping algorithms (such as Simulated Annealing and Late Acceptance).

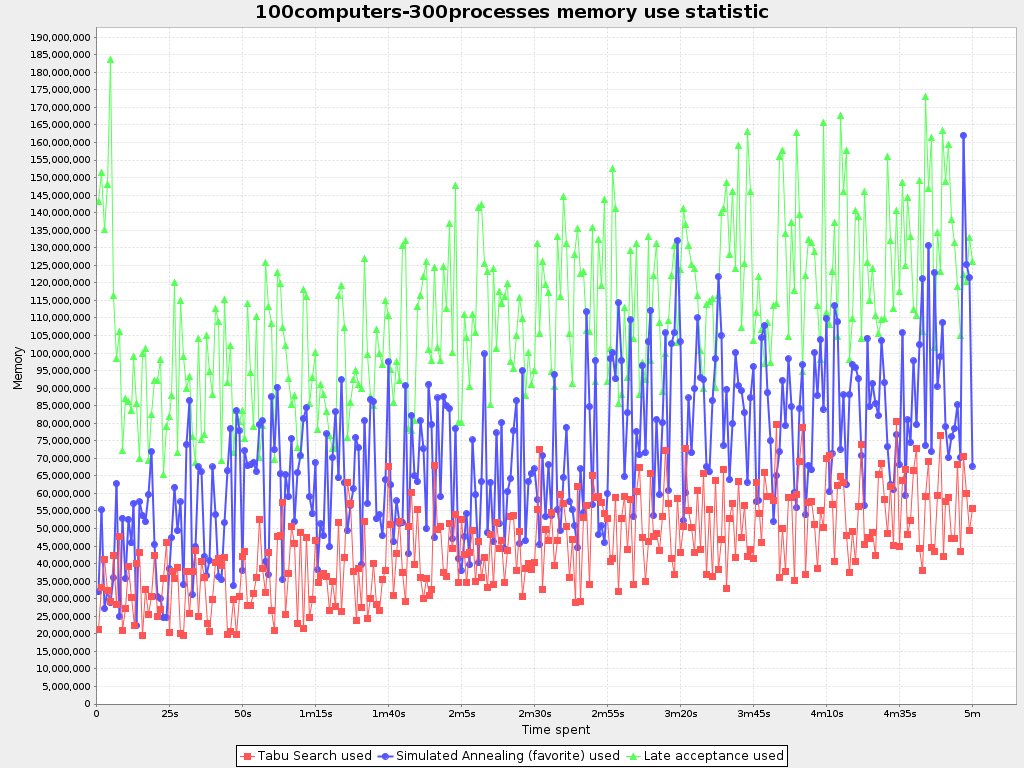

14.5.7. Memory Use Statistic (Graph And CSV)

To see how much memory is used, add:

<problemBenchmarks>
  ...
  <problemStatisticType>MEMORY_USE</problemStatisticType>
</problemBenchmarks>

Figure 14.8. Memory Use Statistic

The memory use statistic has been seen to affect the solver noticeably.

14.6. Statistic Per Single Benchmark (Graph And CSV)

14.6.1. Enable A Single Statistic

A single statistic is a statistic for 1 dataset for 1 solver configuration. Unlike a problem statistic, it does not aggregate over solver configurations.

The benchmarker supports outputting single statistics as graphs and CSV (comma separated values) files to the benchmarkDirectory. To configure one, add a singleStatisticType line:

Multiple singleStatisticType elements are allowed.

These statistics per single benchmark can slow down the solver noticeably, which affects the benchmark results. That’s why they are optional and not enabled by default.

The following types are supported:

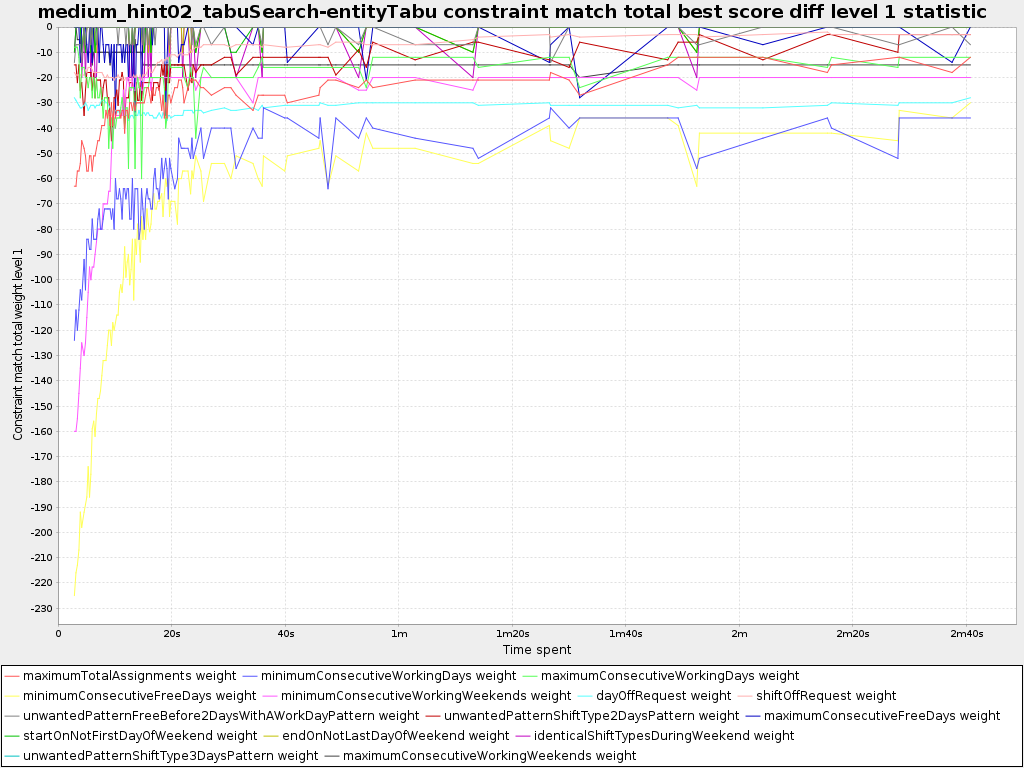

To see which constraints are matched in the best score (and how much) over time, add:

<problemBenchmarks>
  ...
  <singleStatisticType>CONSTRAINT_MATCH_TOTAL_BEST_SCORE</singleStatisticType>
</problemBenchmarks>

Figure 14.9. Constraint Match Total Best Score Diff Over Time Statistic

Requires the score calculation to support constraint matches. Drools score calculation supports constraint matches automatically, but incremental Java score calculation requires more work.

The constraint match total statistic has been seen to affect the solver noticeably.

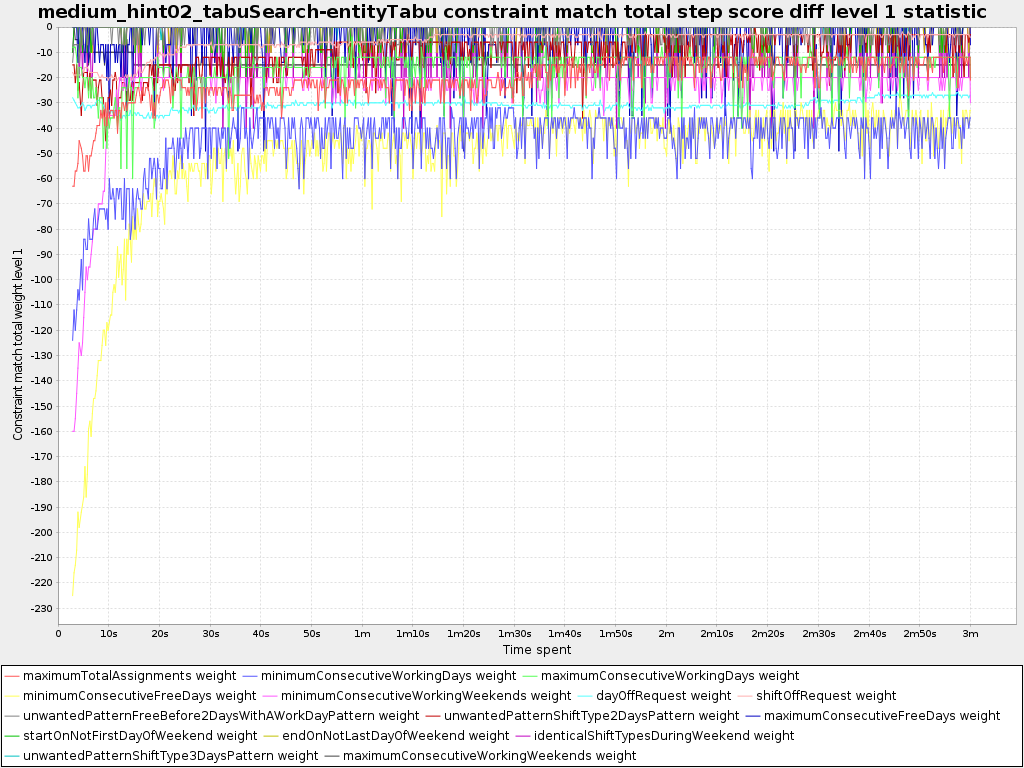

To see which constraints are matched in the step score (and how much) over time, add:

<problemBenchmarks>
  ...
  <singleStatisticType>CONSTRAINT_MATCH_TOTAL_STEP_SCORE</singleStatisticType>
</problemBenchmarks>

Figure 14.10. Constraint Match Total Step Score Diff Over Time Statistic

Requires the score calculation to support constraint matches. Drools score calculation supports constraint matches automatically, but incremental Java score calculation requires more work.

The constraint match total statistic has been seen to affect the solver noticeably.

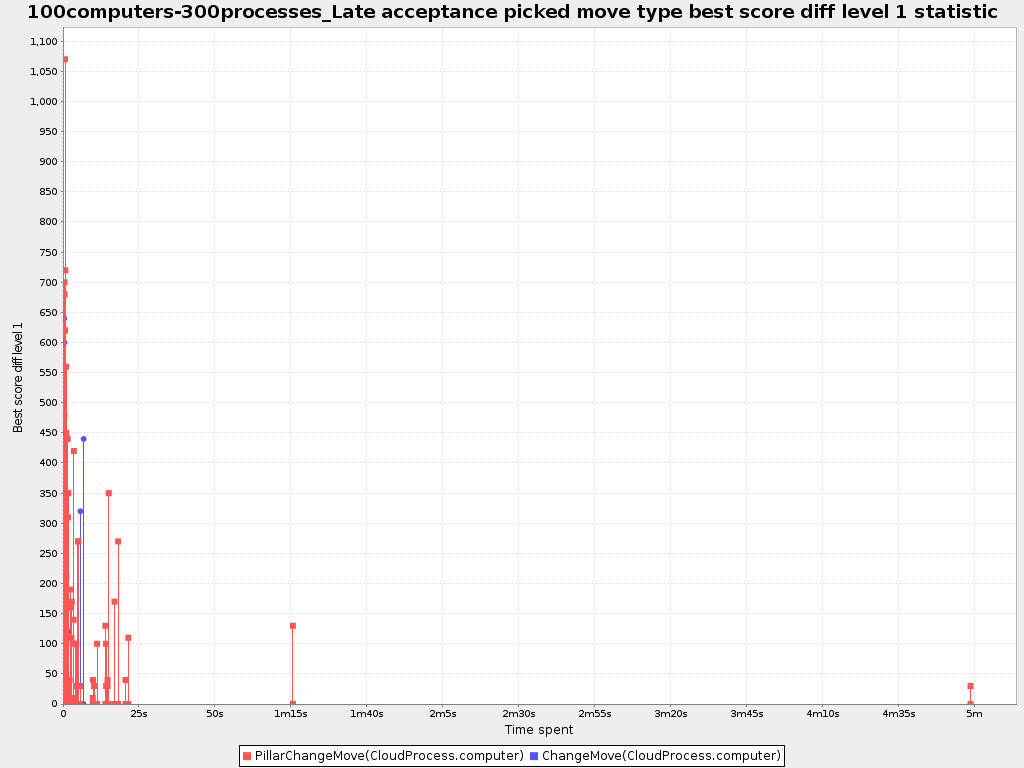

To see which move types improve the best score (and how much) over time, add:

<problemBenchmarks>
  ...
  <singleStatisticType>PICKED_MOVE_TYPE_BEST_SCORE_DIFF</singleStatisticType>
</problemBenchmarks>

Figure 14.11. Picked Move Type Best Score Diff Over Time Statistic

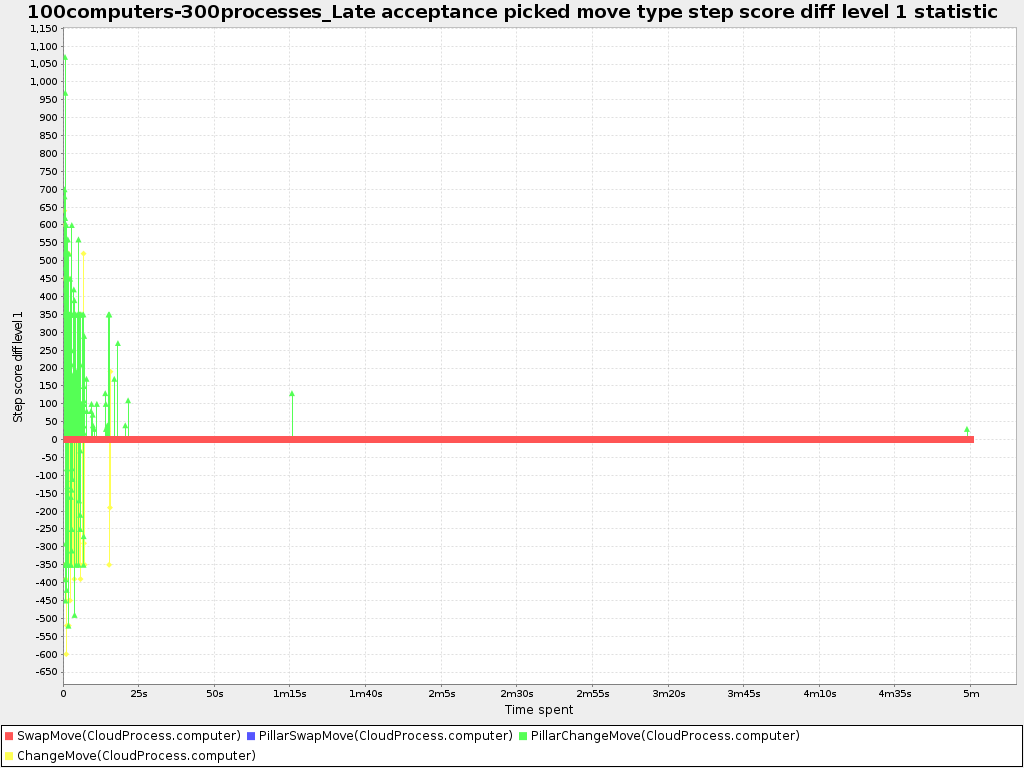

To see how much each winning step affects the step score over time, add:

<problemBenchmarks>
  ...
  <singleStatisticType>PICKED_MOVE_TYPE_STEP_SCORE_DIFF</singleStatisticType>
</problemBenchmarks>

Figure 14.12. Picked Move Type Step Score Diff Over Time Statistic

14.7. Advanced Benchmarking

14.7.1. Benchmarking Performance Tricks

14.7.1.1. Parallel Benchmarking On Multiple Threads

If you have multiple processors available on your computer, you can run multiple benchmarks in parallel on multiple threads to get your benchmark results faster:

<plannerBenchmark>
  ...
  <parallelBenchmarkCount>AUTO</parallelBenchmarkCount>
  ...
</plannerBenchmark>

Running too many benchmarks in parallel will affect the results of benchmarks negatively. Leave some processors unused for garbage collection and other processes.

We tweak parallelBenchmarkCount AUTO to maximize the reliability and efficiency of the benchmark results.

The following parallelBenchmarkCounts are supported:

- 1 (default): Run all benchmarks sequentially.

- AUTO: Let Planner decide how many benchmarks to run in parallel. This formula is based on experience. It’s recommended to prefer this over the other parallel enabling options.

- Static number: The number of benchmarks to run in parallel.

  <parallelBenchmarkCount>2</parallelBenchmarkCount>

- JavaScript formula: Formula for the number of benchmarks to run in parallel. It can use the variable availableProcessorCount. For example:

  <parallelBenchmarkCount>(availableProcessorCount / 2) + 1</parallelBenchmarkCount>


The parallelBenchmarkCount is always limited to the number of available processors. If it’s higher, it will be automatically decreased.
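The arithmetic behind the example formula and the processor limit can be sketched in a few lines of Java. This is not Planner's resolution code: the class ParallelBenchmarkCountSketch is hypothetical, and evaluating the formula with integer division is an assumption (how the real JavaScript result is rounded for odd processor counts is not stated here).

```java
class ParallelBenchmarkCountSketch {

    // The example formula (availableProcessorCount / 2) + 1, using integer division (an assumption).
    static int exampleFormula(int availableProcessorCount) {
        return (availableProcessorCount / 2) + 1;
    }

    // Caps a requested count at the number of available processors.
    static int limit(int requestedCount, int availableProcessorCount) {
        return Math.min(requestedCount, availableProcessorCount);
    }

    public static void main(String[] args) {
        int available = Runtime.getRuntime().availableProcessors();
        int requested = exampleFormula(available);
        System.out.println("Available processors: " + available
                + ", resolved parallel benchmark count: " + limit(requested, available));
    }
}
```

On a machine with 8 available processors the example formula yields 5, which leaves 3 processors for garbage collection and other processes. A static value of 16 on the same machine would be decreased to 8.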

If you have a computer with slow or unreliable cooling, increasing the parallelBenchmarkCount above 1 (even on AUTO) may overheat your CPU.

The sensors command can help you detect if this is the case. It is available in the package lm_sensors or lm-sensors in most Linux distributions. There are several freeware tools available for Windows too.

In the future, we will also support multi-JVM benchmarking. This feature is independent of multi-threaded solving or multi-JVM solving.

14.7.2. Statistical Benchmarking

To minimize the influence of your environment and the Random Number Generator on the benchmark results, configure the number of times each single benchmark run is repeated. The results of those runs are statistically aggregated. Each individual result is also visible in the report, as well as plotted in the best score distribution summary.

Add a <subSingleCount> element to an <inheritedSolverBenchmark> element or to a <solverBenchmark> element:

The subSingleCount defaults to 1 (so no statistical benchmarking).

If subSingleCount is higher than 1, the benchmarker will automatically use a different Random seed for every sub single run, without losing reproducibility (for each sub single index) in EnvironmentMode REPRODUCIBLE and lower.
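The idea of repeating a run with different but fixed seeds and then aggregating can be sketched without Planner. Everything in this example is an assumption made for illustration: the class SubSingleAggregationSketch, the toy runOnce method that stands in for a solver run, the use of the sub single index as seed, and the choice of the population standard deviation.

```java
import java.util.Random;

class SubSingleAggregationSketch {

    // Toy stand-in for one sub single run: the score depends only on the seed.
    static double runOnce(long seed) {
        return -100.0 + new Random(seed).nextInt(21); // a value from -100 to -80
    }

    // Runs subSingleCount repetitions, each with its own fixed seed (here simply the index).
    static double[] runAll(int subSingleCount) {
        double[] scores = new double[subSingleCount];
        for (int i = 0; i < subSingleCount; i++) {
            scores[i] = runOnce(i);
        }
        return scores;
    }

    static double mean(double[] values) {
        double sum = 0.0;
        for (double value : values) {
            sum += value;
        }
        return sum / values.length;
    }

    // Population standard deviation of the values.
    static double standardDeviation(double[] values) {
        double average = mean(values);
        double squaredSum = 0.0;
        for (double value : values) {
            squaredSum += (value - average) * (value - average);
        }
        return Math.sqrt(squaredSum / values.length);
    }

    public static void main(String[] args) {
        double[] scores = runAll(5);
        System.out.println("Average score: " + mean(scores)
                + ", standard deviation: " + standardDeviation(scores));
    }
}
```

Because every sub single index always gets the same seed, running the sketch twice prints the same numbers, which mirrors the reproducibility property described above.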

Matrix benchmarking is benchmarking a combination of value sets. For example: benchmark 4 entityTabuSize values (5, 7, 11 and 13) combined with 3 acceptedCountLimit values (500, 1000 and 2000), resulting in 12 solver configurations.
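The size of such a matrix is easy to underestimate. The sketch below enumerates the combinations from the example with two nested loops. The class MatrixBenchmarkSketch and the generated names are hypothetical and unrelated to how Planner names solver benchmarks.

```java
import java.util.ArrayList;
import java.util.List;

class MatrixBenchmarkSketch {

    // Builds one descriptive name per combination of the two value sets.
    static List<String> combinations(int[] entityTabuSizes, int[] acceptedCountLimits) {
        List<String> names = new ArrayList<>();
        for (int entityTabuSize : entityTabuSizes) {
            for (int acceptedCountLimit : acceptedCountLimits) {
                names.add("entityTabuSize " + entityTabuSize
                        + ", acceptedCountLimit " + acceptedCountLimit);
            }
        }
        return names;
    }

    public static void main(String[] args) {
        List<String> names = combinations(new int[] {5, 7, 11, 13}, new int[] {500, 1000, 2000});
        names.forEach(System.out::println);
        System.out.println(names.size() + " solver configurations");
    }
}
```

Adding a third value set with 3 values would already triple the number of solver configurations to 36, which is why writing each one out by hand quickly becomes impractical.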

To reduce the verbosity of such a benchmark configuration, you can use a FreeMarker template for the benchmark configuration instead:

And build it with the class PlannerBenchmarkFactory:

PlannerBenchmarkFactory plannerBenchmarkFactory = PlannerBenchmarkFactory.createFromFreemarkerXmlResource(
        "org/optaplanner/examples/cloudbalancing/benchmark/cloudBalancingBenchmarkConfigTemplate.xml.ftl");
PlannerBenchmark plannerBenchmark = plannerBenchmarkFactory.buildPlannerBenchmark();

14.7.4. Benchmark Report Aggregation

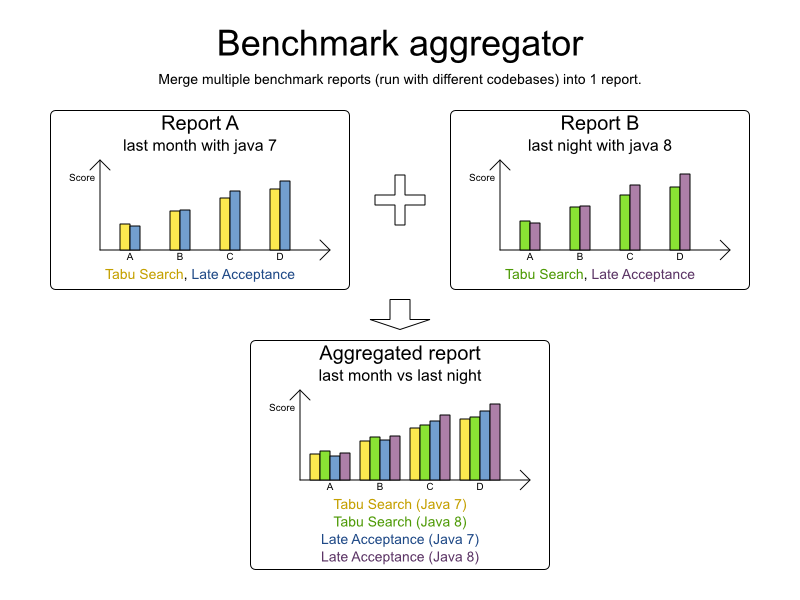

The BenchmarkAggregator takes 1 or more existing benchmarks and merges them into a new benchmark report, without actually running the benchmarks again.

This is useful to:

- Report on the impact of code changes: Run the same benchmark configuration before and after the code changes, then aggregate a report.

- Report on the impact of dependency upgrades: Run the same benchmark configuration before and after upgrading the dependency, then aggregate a report.

- Condense a report that is too verbose: Select only the interesting solver benchmarks from the existing report. This is especially useful on template reports to make the graphs readable.

- Partially rerun a benchmark: Rerun part of an existing report (for example only the failed or invalid solvers), then recreate the original intended report with the new values.

To use it, provide a PlannerBenchmarkFactory to the BenchmarkAggregatorFrame to display the GUI:

public static void main(String[] args) {
    PlannerBenchmarkFactory plannerBenchmarkFactory = PlannerBenchmarkFactory.createFromXmlResource(
            "org/optaplanner/examples/nqueens/benchmark/nqueensBenchmarkConfig.xml");
    BenchmarkAggregatorFrame.createAndDisplay(plannerBenchmarkFactory);
}

Although it uses a benchmark configuration as input, it ignores all elements of that configuration, except for the elements <benchmarkDirectory> and <benchmarkReport>.

In the GUI, select the interesting benchmarks and click the button to generate the report.

All the input reports which are being merged should have been generated with the same Planner version (excluding hotfix differences) as the BenchmarkAggregator. Using reports from different Planner major or minor versions is not guaranteed to succeed or to deliver correct information, because the benchmark report data structure often changes.