2.2. Performance, Scalability, and Economy

You can deploy GFS in a variety of configurations to suit your needs for performance, scalability, and economy. For superior performance and scalability, you can deploy GFS in a cluster that is connected directly to a SAN. For a more economical deployment, you can deploy GFS in a cluster that is connected to a LAN with servers that use GNBD (Global Network Block Device).

The following sections provide examples of how GFS can be deployed to suit your needs for performance, scalability, and economy:

Note

The deployment examples in this chapter reflect basic configurations; your needs might require a combination of configurations shown in the examples.

2.2.1. Superior Performance and Scalability

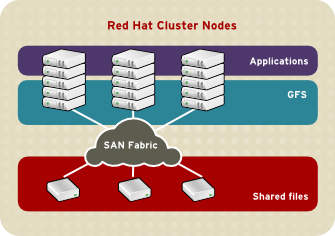

You can obtain the highest shared-file performance when applications access storage directly. The GFS SAN configuration in Figure 2.1, “GFS with a SAN”, provides superior file performance for shared files and file systems. Linux applications run directly on GFS nodes. Without file protocols or storage servers to slow data access, performance is similar to that of individual Linux servers with directly connected storage, yet each GFS application node has equal access to all data files. GFS supports up to 125 GFS nodes.

Figure 2.1. GFS with a SAN

2.2.2. Economy and Performance

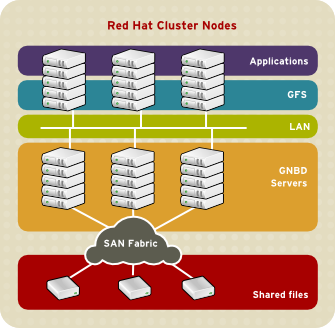

Multiple Linux client applications on a LAN can share the same SAN-based data, as shown in Figure 2.2, “GFS and GNBD with a SAN”. GNBD servers present SAN block storage to network clients as block storage devices. From the perspective of a client application, storage is accessed as if it were directly attached to the server on which the application is running, although the stored data actually resides on the SAN. Storage devices and data can be shared equally by network client applications. GFS handles file locking and sharing functions for each network client.

Note

Clients implementing ext2 and ext3 file systems can be configured to access their own dedicated slice of SAN storage.

Figure 2.2. GFS and GNBD with a SAN

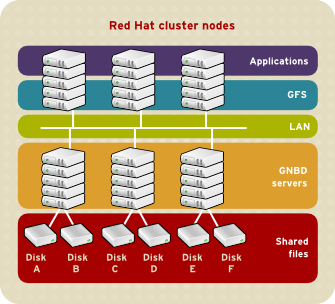

Figure 2.3, “GFS and GNBD with Directly Connected Storage”, shows how Linux client applications can take advantage of an existing Ethernet topology to gain shared access to all block storage devices. Client data files and file systems can be shared with GFS on each client. Application failover can be fully automated with Red Hat Cluster Suite.

Figure 2.3. GFS and GNBD with Directly Connected Storage
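
The three deployment examples above differ in only two respects: whether the GFS nodes attach to the storage directly or reach it through GNBD servers, and whether the storage resides on a SAN. The following Python sketch summarizes that distinction as a small lookup. It is an illustration only, not part of GFS or any Red Hat tool; the names `Deployment`, `DEPLOYMENTS`, and `pick_deployment` are hypothetical, and real deployments might combine the configurations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    """One of the basic configurations shown in Figures 2.1 to 2.3."""
    figure: str
    name: str
    uses_gnbd: bool        # storage reaches GFS nodes through GNBD servers
    storage_on_san: bool   # block storage resides on a SAN


# Hypothetical summary table built from the figure titles in this section.
DEPLOYMENTS = (
    Deployment("2.1", "GFS with a SAN",
               uses_gnbd=False, storage_on_san=True),
    Deployment("2.2", "GFS and GNBD with a SAN",
               uses_gnbd=True, storage_on_san=True),
    Deployment("2.3", "GFS and GNBD with Directly Connected Storage",
               uses_gnbd=True, storage_on_san=False),
)


def pick_deployment(nodes_attach_to_san: bool, have_san: bool) -> Deployment:
    """Map two yes/no questions to the matching basic configuration.

    This is an illustrative assumption about how to choose, not an
    official sizing or selection rule.
    """
    if nodes_attach_to_san:
        if not have_san:
            raise ValueError("nodes cannot attach to a SAN that does not exist")
        return DEPLOYMENTS[0]
    # Nodes sit on the LAN, so GNBD servers export the block devices.
    return DEPLOYMENTS[1] if have_san else DEPLOYMENTS[2]
```

For example, `pick_deployment(nodes_attach_to_san=False, have_san=True)` returns the entry for Figure 2.2, in which GNBD servers export SAN storage to clients on the LAN.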