
Chapter 13. Crimson (Technology Preview)

The Crimson project is an effort to build a replacement for the ceph-osd daemon that is suited to the new reality of low-latency, high-throughput persistent memory and NVMe technologies.

The Crimson feature is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs), might not be functionally complete, and Red Hat does not recommend using them for production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. See the support scope for Red Hat Technology Preview features for more details.

13.1. Crimson overview

Crimson is the code name for crimson-osd, the next-generation ceph-osd for multi-core scalability. It improves performance with fast network and storage devices by employing state-of-the-art technologies that include DPDK and SPDK. BlueStore continues to support HDDs and SSDs. Crimson aims to be backward compatible with the classic ceph-osd daemon.

Built on the Seastar C++ framework, Crimson is a new implementation of the core Ceph object storage daemon (OSD) component and replaces ceph-osd. crimson-osd minimizes latency and CPU usage. It uses high-performance asynchronous I/O and a new threading architecture that is designed to minimize context switches and inter-thread communication for an operation.

For Red Hat Ceph Storage 7, you can test RADOS Block Device (RBD) workloads on replicated pools with Crimson only. Do not use Crimson for production data.

Crimson goals

Crimson OSD is a replacement for the OSD daemon with the following goals:

Minimize CPU overhead

- Minimize CPU cycles per I/O operation.

- Minimize cross-core communication.

- Minimize copies.

- Bypass the kernel and avoid context switches.

Enable emerging storage technologies

- Zoned namespaces

- Persistent memory

- Fast NVMe

Seastar features

- Single reactor thread per CPU

- Asynchronous IO

- Scheduling done in user space

- Includes direct support for DPDK, a high-performance library for user space networking.

Benefits

- SeaStore has an independent metadata collection.

- Transactional.

- Flat object namespace.

- Object names might be large (>1k).

- Each object contains a key->value mapping (string->bytes) and a data payload.

- Supports copy-on-write (COW) object clones.

- Supports ordered listing of both OMAP and object namespaces.
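The SeaStore properties in this list can be illustrated with a toy model. The following Python sketch is a hypothetical in-memory store. The `ToyFlatStore` class and its methods are invented for illustration and are not SeaStore's API. It does the following:

- Keeps a flat, sorted object namespace.
- Gives each object an OMAP of string keys to bytes, plus a data payload.
- Supports ordered listing from any start key.
- Clones objects by sharing the immutable payload until one side is rewritten.

It does not model transactions or on-disk layout.

```python
import bisect
from typing import Dict, List, Optional, Tuple


class ToyFlatStore:
    """Hypothetical in-memory model of some SeaStore requirements; not SeaStore code."""

    def __init__(self) -> None:
        self._names: List[str] = []  # flat object namespace, kept sorted for ordered listing
        self._objects: Dict[str, Tuple[Dict[str, bytes], bytes]] = {}  # name -> (omap, data)

    def _add_name(self, name: str) -> None:
        # Insert a new name at its sorted position; existing names are not duplicated.
        if name not in self._objects:
            bisect.insort(self._names, name)

    def write(self, name: str, data: bytes, omap: Optional[Dict[str, bytes]] = None) -> None:
        self._add_name(name)
        self._objects[name] = (dict(omap or {}), data)

    def read(self, name: str) -> bytes:
        return self._objects[name][1]

    def clone(self, src: str, dst: str) -> None:
        omap, data = self._objects[src]
        self._add_name(dst)
        # Copy-on-write for the payload: both objects reference the same immutable
        # bytes, and a later write to either one replaces only its own reference.
        self._objects[dst] = (dict(omap), data)

    def list_objects(self, start: str = "", limit: int = 100) -> List[str]:
        # Ordered listing: resume from any key with one binary search.
        i = bisect.bisect_left(self._names, start)
        return self._names[i:i + limit]

    def list_omap(self, name: str, start: str = "") -> List[str]:
        # Ordered listing of one object's OMAP keys.
        return sorted(k for k in self._objects[name][0] if k >= start)
```

The object names in a usage such as `store.write("rbd_data.1", b"abc", {"a": b"1"})` are hypothetical. Because the namespace is a single sorted list, a paginated listing only needs the last name it returned as the next `start`.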

13.2. Difference between Crimson and Classic Ceph OSD architecture

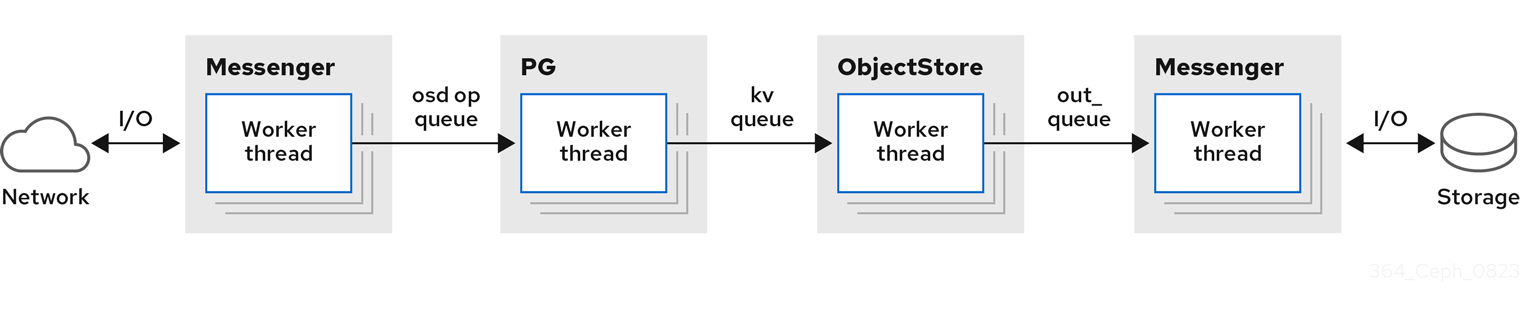

In the classic ceph-osd architecture, a messenger thread reads a client message from the wire and places the message in the OP queue. The osd-op thread pool then picks up the message, creates a transaction, and queues it to BlueStore, the current default ObjectStore implementation. BlueStore's kv_queue then picks up this transaction and anything else in the queue, synchronously waits for RocksDB to commit the transaction, and then places the completion callback in the finisher queue. The finisher thread then picks up the completion callback and queues a reply for the messenger thread to send.

Each of these actions requires inter-thread coordination over the contents of a queue. For PG state, more than one thread might need to access the internal metadata of any PG, which leads to lock contention.

Lock contention and the associated processor usage grow rapidly with the number of tasks and cores, and every locking point might become the scaling bottleneck under certain scenarios. Moreover, these locks and queues incur latency costs even when uncontended. Because of this latency, thread pools and task queues degrade performance: the bookkeeping effort to delegate tasks between worker threads, together with the locks, can force context switches.
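To make the cost of these hand-offs concrete, the following Python sketch models the classic write path described above as a chain of threads connected by queues. It is a toy, not Ceph code. The queue names mirror the text, and the function `classic_osd_pipeline` is hypothetical. Every hand-off between stages is a queue put and get. Each of those operations takes a lock inside `queue.Queue`, and the request crosses four of them before the reply is ready to send.

```python
import queue
import threading
from typing import Tuple


def classic_osd_pipeline(request: str) -> Tuple[str, int]:
    """Toy model of the thread hand-offs in the classic ceph-osd write path."""
    op_queue, kv_queue, finisher_queue, send_queue = (queue.Queue() for _ in range(4))
    handoffs = 0
    counter_lock = threading.Lock()  # protects the shared hand-off counter

    def hop(q: queue.Queue, item: str) -> None:
        nonlocal handoffs
        with counter_lock:
            handoffs += 1
        q.put(item)  # cross-thread hand-off through a lock-protected queue

    def osd_op_worker() -> None:
        msg = op_queue.get()
        hop(kv_queue, f"txn({msg})")  # build a transaction and queue it to BlueStore

    def kv_stage() -> None:
        txn = kv_queue.get()  # stands in for kv_queue processing and the RocksDB commit wait
        hop(finisher_queue, f"committed({txn})")

    def finisher() -> None:
        done = finisher_queue.get()
        hop(send_queue, f"reply({done})")  # hand the reply back to the messenger

    threads = [threading.Thread(target=f) for f in (osd_op_worker, kv_stage, finisher)]
    for t in threads:
        t.start()
    hop(op_queue, request)  # the messenger thread has read the message off the wire
    reply = send_queue.get(timeout=5)
    for t in threads:
        t.join()
    return reply, handoffs
```

Calling `classic_osd_pipeline("write obj1")` returns the reply string together with a hand-off count of 4. In Crimson, the same stages would ideally run inside a single reactor thread, so no such cross-thread hand-offs would be needed.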

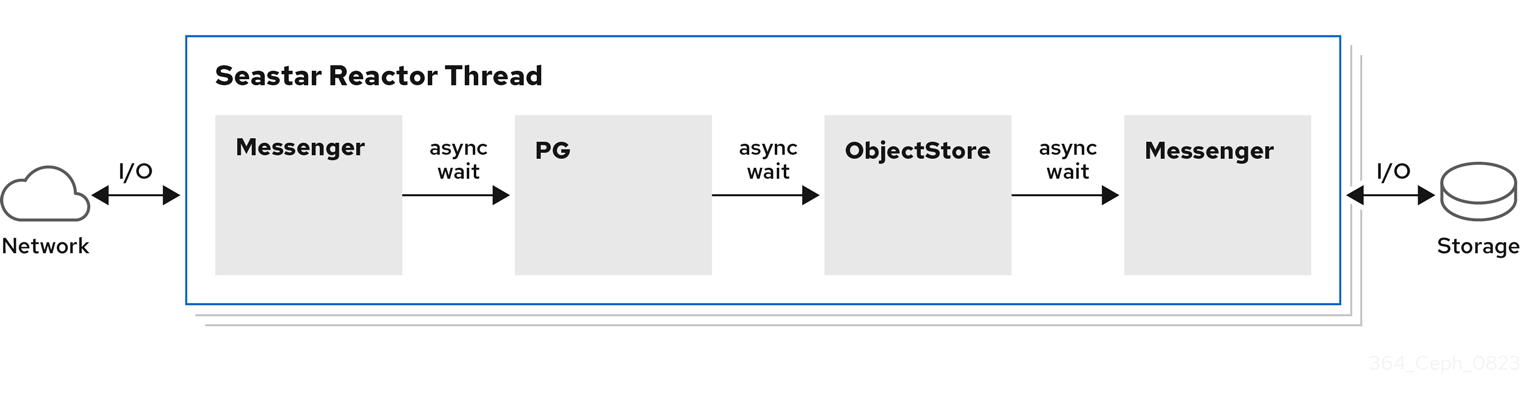

Unlike the ceph-osd architecture, Crimson allows a single I/O operation to complete on a single core without context switches and without blocking, if the underlying storage operations do not require it. However, some operations still need to be able to wait for asynchronous processes to complete. This waiting might be nondeterministic, depending on the state of the system, such as recovery, or on the underlying device.

Crimson uses Seastar, a highly asynchronous C++ framework that generally pre-allocates one thread pinned to each core. Work is divided among those cores so that state can be partitioned between cores and locking can be avoided. With Seastar, I/O operations are partitioned among a group of threads based on the target object. Rather than splitting the stages of an I/O operation among different groups of threads, Crimson runs all the pipeline stages within a single thread. If an operation needs to block, the core's Seastar reactor switches to another concurrent operation and makes progress on it.

Ideally, locks and context switches are no longer needed, because each running nonblocking task owns the CPU until it completes or cooperatively yields. No other thread can preempt the task in the meantime. If no communication with other shards is needed in the data path, performance ideally scales linearly with the number of cores until the I/O device reaches its limit. This design fits the Ceph OSD well because, at the OSD level, all I/Os are already sharded by PG.
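The following Python sketch illustrates the sharding model described above under two stated assumptions:

- Each `Shard` thread runs its own asyncio event loop as a stand-in for a Seastar reactor.
- A PG is statically mapped to a shard with `pg_id % n_shards`.

That mapping and all class names are hypothetical, not Crimson's actual placement logic. Because only the owning shard ever touches a PG's state, the counters need no lock, and no other operation on that shard runs between `await` points. Python's global interpreter lock means this shows the ownership structure, not real parallel speedup.

```python
import asyncio
import threading
from collections import defaultdict


class Shard(threading.Thread):
    """One reactor-like thread that exclusively owns the state of its PGs."""

    def __init__(self, shard_id: int) -> None:
        super().__init__(daemon=True)
        self.shard_id = shard_id
        self.loop = asyncio.new_event_loop()
        self.pg_bytes = defaultdict(int)  # mutated only on this shard's thread, so no lock

    def run(self) -> None:
        asyncio.set_event_loop(self.loop)
        self.loop.run_forever()

    async def write(self, pg_id: int, nbytes: int) -> int:
        await asyncio.sleep(0)  # cooperative yield point, for example waiting on the device
        # No other coroutine on this shard runs until the next await.
        self.pg_bytes[pg_id] += nbytes
        return self.pg_bytes[pg_id]


class ShardedOSD:
    def __init__(self, n_shards: int) -> None:
        self.shards = [Shard(i) for i in range(n_shards)]
        for shard in self.shards:
            shard.start()

    def shard_for(self, pg_id: int) -> Shard:
        return self.shards[pg_id % len(self.shards)]  # hypothetical static PG-to-shard mapping

    def submit(self, pg_id: int, nbytes: int):
        shard = self.shard_for(pg_id)
        # The whole operation is handed to the owning shard once and completes there.
        return asyncio.run_coroutine_threadsafe(shard.write(pg_id, nbytes), shard.loop)

    def stop(self) -> None:
        for shard in self.shards:
            shard.loop.call_soon_threadsafe(shard.loop.stop)
            shard.join()
            shard.loop.close()
```

With four shards, writes to PG 0 and PG 4 both land on shard 0 and are applied one after another on that shard's loop. Writes to PG 1 and PG 5 proceed independently on shard 1.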

Unlike ceph-osd, crimson-osd does not daemonize itself, even if the daemonize option is enabled. There is no need to daemonize crimson-osd, because supported Linux distributions use systemd, which can daemonize the application. If you use sysvinit, use start-stop-daemon to daemonize crimson-osd.

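ObjectStore backends

crimson-osd offers both native and alienized object store backends. The native object store backends perform I/O with the Seastar reactor. The alienized backends are served by a separate pool of threads, whose size is set by the crimson_alien_op_num_threads parameter.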

The following three ObjectStore backends are supported for Crimson:

- AlienStore - Provides compatibility with an earlier version of object store, that is, BlueStore.

- CyanStore - A dummy backend for tests, which is implemented in volatile memory. This object store is modeled after the memstore in the classic OSD.

- SeaStore - The new object store designed specifically for Crimson OSD.

The paths toward multiple shard support differ depending on the specific goal of each backend.

The following are two other ObjectStore backends, from the classic OSD:

- MemStore - Uses memory as the backend object store.

- BlueStore - The object store used by the classic ceph-osd.

13.3. Crimson metrics

Crimson has three ways to report statistics and metrics:

- PG stats reported to manager.

- Prometheus text protocol.

- The asock command.

PG stats reported to manager

Crimson collects per-PG, per-pool, and per-OSD stats in an MPGStats message, which is sent to the Ceph Managers.

Prometheus text protocol

Configure the listening port and address by using the --prometheus-port command-line option.
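The Prometheus text protocol is line based. Each sample line has the form `name{label="value",...} value`, and comment lines start with `#`. The following Python sketch parses such text, for example a scrape of the endpoint that listens on the port set with --prometheus-port. It is a simplified parser, not a full implementation of the exposition format: it does not unescape label values or handle `}` inside them. The metric names in the usage example are hypothetical.

```python
import re
from typing import Dict, List, Tuple

# One sample line: metric name, optional {labels}, then the value (a trailing timestamp is ignored).
_SAMPLE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{([^}]*)\})?\s+(\S+)')
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_prometheus_text(text: str) -> List[Tuple[str, Dict[str, str], float]]:
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank lines and HELP/TYPE comments
            continue
        m = _SAMPLE.match(line)
        if m is None:
            continue  # not a sample line this simplified parser understands
        name, raw_labels, value = m.groups()
        labels = dict(_LABEL.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))  # float() also accepts NaN and +Inf
    return samples
```

For example, parsing `reactor_utilization{shard="0"} 12.5` yields `("reactor_utilization", {"shard": "0"}, 12.5)`.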

The asock command

An admin socket command is offered to dump metrics.

Syntax

ceph tell OSD_ID dump_metrics

ceph tell OSD_ID dump_metrics reactor_utilization

Example

[ceph: root@host01 /]# ceph tell osd.0 dump_metrics

[ceph: root@host01 /]# ceph tell osd.0 dump_metrics reactor_utilization

Here, reactor_utilization is an optional string to filter the dumped metrics by prefix.

13.4. Crimson configuration options

Run the crimson-osd --help-seastar command for Seastar-specific command-line options. The following are the options that you can use to configure Crimson:

- --crimson: Start crimson-osd instead of ceph-osd.

- --nodaemon: Do not daemonize the service.

- --redirect-output: Redirect the stdout and stderr to out/$type.$num.stdout.

- --osd-args: Pass extra command-line options to crimson-osd or ceph-osd. This option is useful for passing Seastar options to crimson-osd. For example, supply --osd-args "--memory 2G" to set the amount of memory to use.

- --cyanstore: Use CyanStore as the object store backend.

- --bluestore: Use the alienized BlueStore as the object store backend. This is the default backend.

- --memstore: Use the alienized MemStore as the object store backend.

- --seastore: Use SeaStore as the backend object store.

- --seastore-devs: Specify the block device used by SeaStore.

- --seastore-secondary-devs: Optional. SeaStore supports multiple devices. Enable this feature by passing the block device to this option.

- --seastore-secondary-devs-type: Optional. Specify the type of secondary devices. When the secondary device is slower than the main device passed to --seastore-devs, the cold data in the faster device is evicted to the slower devices over time. Valid types include HDD, SSD (default), ZNS, and RANDOM_BLOCK_SSD. Note that secondary devices should not be faster than the main device.

13.5. Configuring Crimson

Configure crimson-osd by installing a new storage cluster. Install the new cluster by using the bootstrap option. You cannot upgrade this cluster, because Crimson is in the experimental phase. WARNING: Do not use production data, as doing so might result in data loss.

Prerequisites

- An IP address for the first Ceph Monitor container, which is also the IP address for the first node in the storage cluster.

- Login access to registry.redhat.io.

- A minimum of 10 GB of free space for /var/lib/containers/.

- Root-level access to all nodes.

Procedure

While bootstrapping, use the --image flag to use the Crimson build.

Example

[root@host01 ~]# cephadm --image quay.ceph.io/ceph-ci/ceph:b682861f8690608d831f58603303388dd7915aa7-crimson bootstrap --mon-ip 10.1.240.54 --allow-fqdn-hostname --initial-dashboard-password Ceph_Crims

Log in to the cephadm shell:

Example

[root@host01 ~]# cephadm shell

Enable Crimson globally as an experimental feature.

Example

[ceph: root@host01 /]# ceph config set global 'enable_experimental_unrecoverable_data_corrupting_features' crimson

This step enables crimson. Crimson is highly experimental, and malfunctions, including crashes and data loss, are to be expected.

Enable the OSD Map flag.

Example

[ceph: root@host01 /]# ceph osd set-allow-crimson --yes-i-really-mean-it

The monitor allows crimson-osd to boot only after this flag is set with --yes-i-really-mean-it.

Enable the Crimson parameter for the monitor so that the default pools are created as Crimson pools.

Example

[ceph: root@host01 /]# ceph config set mon osd_pool_default_crimson true

crimson-osd does not initiate placement groups (PGs) for non-Crimson pools.
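If you repeat this setup often, the three post-bootstrap commands can be scripted. The following Python sketch is intended to run inside the cephadm shell. It runs exactly the commands shown in the steps above, in the same order, and stops at the first failure. The function name `enable_crimson` is hypothetical. The `run` parameter can be replaced with a recording function for a dry run.

```python
import shlex
import subprocess
from typing import Callable, List

# The three post-bootstrap commands from the procedure above, in order.
CRIMSON_ENABLE_STEPS = [
    "ceph config set global 'enable_experimental_unrecoverable_data_corrupting_features' crimson",
    "ceph osd set-allow-crimson --yes-i-really-mean-it",
    "ceph config set mon osd_pool_default_crimson true",
]


def enable_crimson(run: Callable[..., object] = subprocess.run) -> List[List[str]]:
    """Run each step in order; with subprocess.run, check=True raises on the first failure."""
    executed = []
    for step in CRIMSON_ENABLE_STEPS:
        argv = shlex.split(step)  # removes the shell quoting around the option name
        run(argv, check=True)
        executed.append(argv)
    return executed
```

Inside the cephadm shell, call `enable_crimson()` with no arguments. To see the commands without changing the cluster, pass a function that only records its arguments instead of `subprocess.run`.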

13.6. Crimson configuration parameters

The following are the parameters that you can use to configure Crimson.

crimson_osd_obc_lru_size

- Description: Number of object contexts (obcs) to cache.
- Type: uint
- Default: 10

crimson_osd_scheduler_concurrency

- Description: The maximum number of concurrent I/O operations; 0 means unlimited.
- Type: uint
- Default: 0

crimson_alien_op_num_threads

- Description: The number of threads for serving the alienized ObjectStore.
- Type: uint
- Default: 6

crimson_seastar_smp

- Description: Number of Seastar reactor threads to use for the OSD.
- Type: uint
- Default: 1

crimson_alien_thread_cpu_cores

- Description: CPU cores on which AlienStore threads run, in cpuset(7) format.
- Type: String

seastore_segment_size

- Description: Segment size to use for the Segment Manager.
- Type: Size
- Default: 64_M

seastore_device_size

- Description: Total size to use for the SegmentManager block file, if created.
- Type: Size
- Default: 50_G

seastore_block_create

- Description: Create the SegmentManager file if it does not exist.
- Type: Boolean
- Default: true

seastore_journal_batch_capacity

- Description: The maximum number of records in a journal batch.
- Type: uint
- Default: 16

seastore_journal_batch_flush_size

- Description: The size threshold that forces a journal batch to flush.
- Type: Size
- Default: 16_M

seastore_journal_iodepth_limit

- Description: The I/O depth limit for submitting journal records.
- Type: uint
- Default: 5

seastore_journal_batch_preferred_fullness

- Description: The record fullness threshold to flush a journal batch.
- Type: Float
- Default: 0.95

seastore_default_max_object_size

- Description: The default logical address space reservation for SeaStore objects' data.
- Type: uint
- Default: 16777216

seastore_default_object_metadata_reservation

- Description: The default logical address space reservation for SeaStore objects' metadata.
- Type: uint
- Default: 16777216

seastore_cache_lru_size

- Description: Size in bytes of extents to keep in cache.
- Type: Size
- Default: 64_M

seastore_cbjournal_size

- Description: Total size to use for the CircularBoundedJournal, if created. Valid only if seastore_main_device_type is RANDOM_BLOCK_SSD.
- Type: Size
- Default: 5_G

seastore_obj_data_write_amplification

- Description: Split an extent if the ratio of the total extent size to the write size exceeds this value.
- Type: Float
- Default: 1.25

seastore_max_concurrent_transactions

- Description: The maximum number of concurrent transactions that SeaStore allows.
- Type: uint
- Default: 8

seastore_main_device_type

- Description: The main device type that SeaStore uses (SSD or RANDOM_BLOCK_SSD).
- Type: String
- Default: SSD

seastore_multiple_tiers_stop_evict_ratio

- Description: Stop evicting cold data to the cold tier when the used ratio of the main tier falls below this value.
- Type: Float
- Default: 0.5

seastore_multiple_tiers_default_evict_ratio

- Description: Begin evicting cold data to the cold tier when the used ratio of the main tier reaches this value.
- Type: Float
- Default: 0.6

seastore_multiple_tiers_fast_evict_ratio

- Description: Begin fast eviction when the used ratio of the main tier reaches this value.
- Type: Float
- Default: 0.7
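The three seastore_multiple_tiers_*_evict_ratio parameters act as thresholds on the used ratio of the main tier. The following Python sketch shows one reading of their descriptions, using the default values:

- Eviction starts at 0.6.
- It switches to fast eviction at 0.7.
- It stops only when usage falls below 0.5.

The hysteresis between 0.5 and 0.6 is an assumption drawn from the wording of the descriptions: an eviction that is already running continues in that range. The `EvictionPolicy` class is hypothetical, not SeaStore code.

```python
from dataclasses import dataclass


@dataclass
class EvictionPolicy:
    """Hypothetical model of the multiple-tier eviction thresholds."""

    stop_ratio: float = 0.5     # seastore_multiple_tiers_stop_evict_ratio
    default_ratio: float = 0.6  # seastore_multiple_tiers_default_evict_ratio
    fast_ratio: float = 0.7     # seastore_multiple_tiers_fast_evict_ratio
    evicting: bool = False

    def update(self, used_ratio: float) -> str:
        """Return the eviction mode for the current used ratio of the main tier."""
        if used_ratio >= self.fast_ratio:
            self.evicting = True
            return "fast"
        if used_ratio >= self.default_ratio:
            self.evicting = True
            return "default"
        if used_ratio < self.stop_ratio:
            self.evicting = False
        # Assumed hysteresis: between the stop and default ratios, keep the current state.
        return "default" if self.evicting else "idle"
```

Feeding the used ratios 0.4, 0.62, 0.55, 0.72, 0.49, and 0.55 to `update` in turn yields the modes idle, default, default, fast, idle, and idle. The gap between the start and stop thresholds keeps eviction from toggling on and off around a single value.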

13.7. Profiling Crimson

Profiling Crimson is a methodology for performance testing Crimson. Two types of profiling are supported:

- Flexible I/O (FIO) - The crimson-store-nbd tool exposes the configurable FuturizedStore internals as an NBD server for use with FIO.

- Ceph Benchmarking Tool (CBT) - A testing harness in Python to test the performance of a Ceph cluster.

Procedure

Install libnbd and compile FIO:

Example

[root@host01 ~]# dnf install libnbd
[root@host01 ~]# git clone git://git.kernel.dk/fio.git
[root@host01 ~]# cd fio
[root@host01 ~]# ./configure --enable-libnbd
[root@host01 ~]# make

Build crimson-store-nbd:

Example

[root@host01 ~]# cd build
[root@host01 ~]# ninja crimson-store-nbd

Run the crimson-store-nbd server with a block device. Specify the path to the raw device, such as /dev/nvme1n1:

Example

Create an FIO job named nbd.fio:

Example

Test the Crimson object store with the compiled FIO binary:

Example

[root@host01 ~]# ./fio nbd.fio

Ceph Benchmarking Tool (CBT)

Run the same test against two branches: the main (master) branch and a topic branch of your choice. Then compare the test results. Each test case has a set of rules that checks for performance regressions when two sets of test results are compared. If a possible regression is found, the rule and the corresponding test results are highlighted.
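The comparison step can be pictured as a set of per-metric rules applied to two result sets. The following Python sketch is hypothetical and does not reproduce CBT's compare.py. Each rule names a metric, states whether higher values are better, and allows a relative tolerance. Any metric that gets worse by more than its tolerance is reported. The metric names and the 5% tolerance in the usage example are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Rule:
    metric: str
    higher_is_better: bool
    tolerance: float  # allowed relative change before flagging, for example 0.05 for 5%


def find_regressions(baseline: Dict[str, float], topic: Dict[str, float],
                     rules: List[Rule]) -> List[Tuple[str, float, float]]:
    """Return (metric, baseline value, topic value) for every rule that is violated."""
    flagged = []
    for rule in rules:
        base, new = baseline[rule.metric], topic[rule.metric]
        if base == 0:
            continue  # a zero baseline has no meaningful relative change
        change = (new - base) / base
        worse = -change if rule.higher_is_better else change  # positive means worse
        if worse > rule.tolerance:
            flagged.append((rule.metric, base, new))
    return flagged
```

For example, with a 5% tolerance on both metrics, comparing a baseline of `{"iops": 1000, "lat_ms": 2.0}` with topic results of `{"iops": 900, "lat_ms": 2.05}` flags only `iops`, which dropped by 10%.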

Procedure

From the main branch and the topic branch, run make crimson-osd:

Example

Compare the test results:

Example

[root@host01 ~]# ~/dev/cbt/compare.py -b /tmp/baseline -a /tmp/yap -v