Chapter 2. Upgrading the Red Hat Quay Operator Overview

The Red Hat Quay Operator follows a synchronized versioning scheme, which means that each version of the Operator is tied to the version of Red Hat Quay and the components that it manages. There is no field on the QuayRegistry custom resource which sets the version of Red Hat Quay to deploy; the Operator can only deploy a single version of all components. This scheme was chosen to ensure that all components work well together and to reduce the complexity of the Operator needing to know how to manage the lifecycles of many different versions of Red Hat Quay on Kubernetes.

2.1. Operator Lifecycle Manager

The Red Hat Quay Operator should be installed and upgraded using the Operator Lifecycle Manager (OLM). When creating a Subscription with the default approvalStrategy: Automatic, OLM will automatically upgrade the Red Hat Quay Operator whenever a new version becomes available.

When the Red Hat Quay Operator is installed by Operator Lifecycle Manager, it can be configured to support automatic or manual upgrades. This option is shown on the OperatorHub page for the Red Hat Quay Operator during installation. It is also recorded in the approvalStrategy field of the Red Hat Quay Operator Subscription object. Choosing Automatic means that your Red Hat Quay Operator is automatically upgraded whenever a new Operator version is released. If this is not desirable, select the Manual approval strategy.

2.2. Upgrading the Red Hat Quay Operator

The standard approach for upgrading installed Operators on OpenShift Container Platform is documented at Upgrading installed Operators.

In general, Red Hat Quay supports upgrades from a prior (N-1) minor version only. For example, upgrading directly from Red Hat Quay 3.0.5 to the latest version of 3.5 is not supported. Instead, users would have to upgrade as follows:

- 3.0.5 → 3.1.3

- 3.1.3 → 3.2.2

- 3.2.2 → 3.3.4

- 3.3.4 → 3.4.z

- 3.4.z → 3.5.z

This is required to ensure that any necessary database migrations are done correctly and in the right order during the upgrade.
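The one-minor-version-at-a-time rule described above can be sketched in a few lines of Python. This is only an illustration of the ordering, not part of Red Hat Quay or the Operator: the function name minor_upgrade_path is hypothetical, and it works on "major.minor" strings only, so choosing the specific z-stream release at each step is left to the reader.

```python
def minor_upgrade_path(current: str, target: str) -> list[str]:
    """Return every minor version to pass through, one N-1 step at a time.

    Both arguments are "major.minor" strings such as "3.0" or "3.5".
    """
    cur_major, cur_minor = (int(part) for part in current.split("."))
    tgt_major, tgt_minor = (int(part) for part in target.split("."))
    if cur_major != tgt_major:
        raise ValueError("this sketch only covers upgrades within one major version")
    if tgt_minor < cur_minor:
        raise ValueError("downgrades are not covered")
    # Visit each minor version in order so migrations run in sequence.
    return [f"{cur_major}.{minor}" for minor in range(cur_minor, tgt_minor + 1)]


print(" -> ".join(minor_upgrade_path("3.0", "3.5")))
# prints: 3.0 -> 3.1 -> 3.2 -> 3.3 -> 3.4 -> 3.5
```

Each adjacent pair in the returned list corresponds to one upgrade that must complete before the next one starts.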

In some cases, Red Hat Quay supports direct, single-step upgrades from prior (N-2, N-3) minor versions. This simplifies the upgrade procedure for customers on older releases. The following upgrade paths are supported for Red Hat Quay 3.13:

- 3.11.z → 3.13.z

- 3.12.z → 3.13.z
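As a rough pre-flight check, the supported paths listed above can be encoded as a lookup table. The sketch below assumes only the two paths named in this section; the table and function names are hypothetical, and the table would need to be maintained by hand for other target versions.

```python
# Supported single-step source minors per target minor, copied from the list above.
SUPPORTED_DIRECT_UPGRADES = {"3.13": {"3.11", "3.12"}}


def can_upgrade_directly(current: str, target: str) -> bool:
    """Return True if the current version may be upgraded to the target in one step."""
    # Reduce "3.11.4" to "3.11" so that any z-stream release matches.
    current_minor = ".".join(current.split(".")[:2])
    target_minor = ".".join(target.split(".")[:2])
    return current_minor in SUPPORTED_DIRECT_UPGRADES.get(target_minor, set())
```

For example, can_upgrade_directly("3.11.4", "3.13.0") returns True, while a 3.10.z source returns False and would first need an intermediate upgrade.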

For users on standalone deployments of Red Hat Quay wanting to upgrade to 3.13, see the Standalone upgrade guide.

2.2.1. Upgrading Red Hat Quay to version 3.13

To update Red Hat Quay from one minor version to the next, for example, 3.12.z → 3.13, use the following procedure.

Procedure

- In the OpenShift Container Platform Web Console, navigate to Operators → Installed Operators.

- Click on the Red Hat Quay Operator.

- Navigate to the Subscription tab.

- Under Subscription details click Update channel.

- Select stable-3.13 and click Save.

- Check the progress of the new installation under Upgrade status. Wait until the upgrade status changes to 1 installed before proceeding.

- In your OpenShift Container Platform cluster, navigate to Workloads → Pods. Existing pods should be terminated, or in the process of being terminated.

- Wait for the following pods, which are responsible for upgrading the database and alembic migration of existing data, to spin up: clair-postgres-upgrade, quay-postgres-upgrade, and quay-app-upgrade.

- After the clair-postgres-upgrade, quay-postgres-upgrade, and quay-app-upgrade pods are marked as Completed, the remaining pods for your Red Hat Quay deployment spin up. This takes approximately ten minutes.

- Verify that the quay-database pod uses the postgresql-13 image, and that the clair-postgres pods now use the postgresql-15 image.

- After the quay-app pod is marked as Running, you can reach your Red Hat Quay registry.

2.2.2. Upgrading to the next minor release version

For z stream upgrades, for example, from 3.12.1 to a newer 3.12.z release, the procedure depends on the approvalStrategy as outlined above. If the approval strategy is set to Automatic, the Red Hat Quay Operator upgrades automatically to the newest z stream. This results in automatic, rolling Red Hat Quay updates to newer z streams with little to no downtime. Otherwise, the update must be manually approved before installation can begin.

With Red Hat Quay 3.13, the volumeSize parameter has been implemented for use with the clairpostgres component of the QuayRegistry custom resource definition (CRD). This replaces the volumeSize parameter that was previously used for the clair component of the same CRD.

If your Red Hat Quay 3.12 QuayRegistry custom resource definition (CRD) implemented a volume override for the clair component, you must ensure that the volumeSize field is included under the clairpostgres component of the QuayRegistry CRD.

Failure to move volumeSize from the clair component to the clairpostgres component will result in a failed upgrade to version 3.13.

For example:

    spec:
      components:
      - kind: clair
        managed: true
      - kind: clairpostgres
        managed: true
        overrides:
          volumeSize: <volume_size>
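If the QuayRegistry manifest is kept in version control, the move described above can be scripted before the upgrade. The following Python sketch operates on the spec section of a manifest that has already been loaded into a dictionary. The function name is hypothetical, and creating a missing clairpostgres entry with managed set to true is an assumption of this sketch, not documented Operator behavior.

```python
import copy


def move_clair_volume_size(spec: dict) -> dict:
    """Return a copy of a QuayRegistry spec with volumeSize moved to clairpostgres."""
    new_spec = copy.deepcopy(spec)
    components = {c["kind"]: c for c in new_spec.get("components", [])}

    clair = components.get("clair")
    if clair is None or "volumeSize" not in clair.get("overrides", {}):
        return new_spec  # nothing to move

    size = clair["overrides"].pop("volumeSize")
    if not clair["overrides"]:
        del clair["overrides"]  # drop the now-empty overrides block

    postgres = components.get("clairpostgres")
    if postgres is None:
        # Assumption: add a managed clairpostgres entry if none is listed.
        postgres = {"kind": "clairpostgres", "managed": True}
        new_spec["components"].append(postgres)
    postgres.setdefault("overrides", {})["volumeSize"] = size
    return new_spec
```

The input dictionary is left unchanged, so the result can be compared against the original before it is applied to the cluster.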

2.2.3. Changing the update channel for the Red Hat Quay Operator

The subscription of an installed Operator specifies an update channel, which is used to track and receive updates for the Operator. To upgrade the Red Hat Quay Operator to start tracking and receiving updates from a newer channel, change the update channel in the Subscription tab for the installed Red Hat Quay Operator. For subscriptions with an Automatic approval strategy, the upgrade begins automatically and can be monitored on the page that lists the Installed Operators.

2.2.4. Manually approving a pending Operator upgrade

If an installed Operator has the approval strategy in its subscription set to Manual, when new updates are released in its current update channel, the update must be manually approved before installation can begin. If the Red Hat Quay Operator has a pending upgrade, this status will be displayed in the list of Installed Operators. In the Subscription tab for the Red Hat Quay Operator, you can preview the install plan and review the resources that are listed as available for upgrade. If satisfied, click Approve and return to the page that lists Installed Operators to monitor the progress of the upgrade.

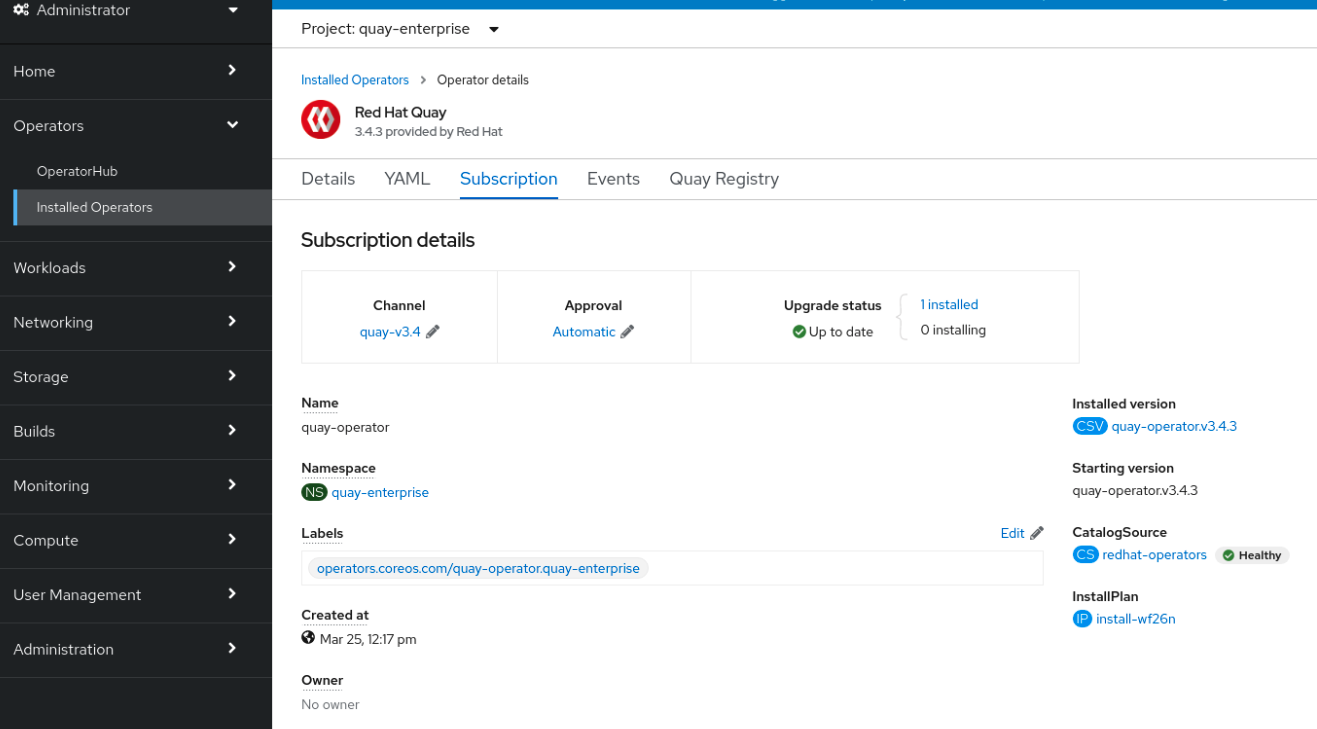

The following image shows the Subscription tab in the UI, including the update Channel, the Approval strategy, the Upgrade status and the InstallPlan:

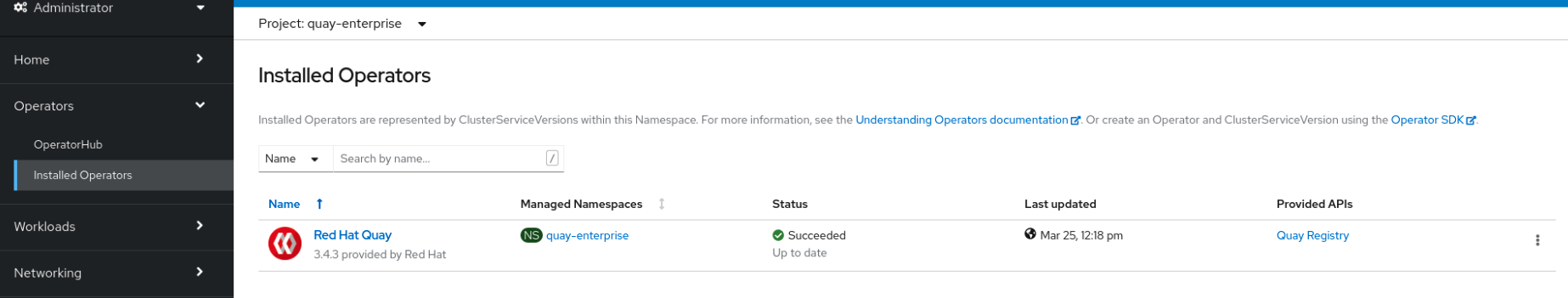

The list of Installed Operators provides a high-level summary of the current Quay installation:

2.3. Upgrading a QuayRegistry resource

When the Red Hat Quay Operator starts, it immediately looks for any QuayRegistries it can find in the namespace(s) it is configured to watch. When it finds one, the following logic is used:

- If status.currentVersion is unset, reconcile as normal.

- If status.currentVersion equals the Operator version, reconcile as normal.

- If status.currentVersion does not equal the Operator version, check if it can be upgraded. If it can, perform upgrade tasks and set the status.currentVersion to the Operator’s version once complete. If it cannot be upgraded, return an error and leave the QuayRegistry and its deployed Kubernetes objects alone.
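The decision logic above can be summarized as a small function. This is a reading aid written in Python, not the Operator's actual implementation: the function name, the returned strings, and the can_upgrade callback are all hypothetical, because the rule the Operator uses to decide whether an upgrade is possible is not described in this section.

```python
from typing import Callable, Optional


def reconcile_action(
    current_version: Optional[str],
    operator_version: str,
    can_upgrade: Callable[[str, str], bool],
) -> str:
    """Return "reconcile", "upgrade", or "error" for one QuayRegistry."""
    # Unset or matching status.currentVersion: reconcile as normal.
    if current_version is None or current_version == operator_version:
        return "reconcile"
    # Versions differ: upgrade only if the path is supported.
    if can_upgrade(current_version, operator_version):
        return "upgrade"
    # Unsupported path: report an error and leave deployed objects alone.
    return "error"
```

After a successful "upgrade" result, the Operator would set status.currentVersion to its own version, so the next pass takes the "reconcile" branch.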

2.4. Upgrading a QuayEcosystem

Upgrades are supported from previous versions of the Operator which used the QuayEcosystem API for a limited set of configurations. To ensure that migrations do not happen unexpectedly, a special label needs to be applied to the QuayEcosystem for it to be migrated. A new QuayRegistry will be created for the Operator to manage, but the old QuayEcosystem will remain until manually deleted to ensure that you can roll back and still access Quay in case anything goes wrong. To migrate an existing QuayEcosystem to a new QuayRegistry, use the following procedure.

Procedure

- Add "quay-operator/migrate": "true" to the metadata.labels of the QuayEcosystem.

    $ oc edit quayecosystem <quayecosystemname>

    metadata:
      labels:
        quay-operator/migrate: "true"

- Wait for a QuayRegistry to be created with the same metadata.name as your QuayEcosystem. The QuayEcosystem will be marked with the label "quay-operator/migration-complete": "true".

- After the status.registryEndpoint of the new QuayRegistry is set, access Red Hat Quay and confirm that all data and settings were migrated successfully.

- If everything works correctly, you can delete the QuayEcosystem and Kubernetes garbage collection will clean up all old resources.
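The two labels used in this procedure can also be handled in a script that works on a QuayEcosystem manifest loaded as a dictionary. The Python sketch below uses hypothetical helper names and does not talk to the cluster. It only shows which label requests the migration and which label signals that it has finished.

```python
MIGRATE_LABEL = "quay-operator/migrate"
COMPLETE_LABEL = "quay-operator/migration-complete"


def request_migration(quay_ecosystem: dict) -> dict:
    """Add the migrate label to a QuayEcosystem manifest, modifying it in place."""
    labels = quay_ecosystem.setdefault("metadata", {}).setdefault("labels", {})
    labels[MIGRATE_LABEL] = "true"  # label values are strings, not booleans
    return quay_ecosystem


def migration_complete(quay_ecosystem: dict) -> bool:
    """Return True once the Operator has marked the QuayEcosystem as migrated."""
    labels = quay_ecosystem.get("metadata", {}).get("labels") or {}
    return labels.get(COMPLETE_LABEL) == "true"
```

Only delete the QuayEcosystem after migration_complete returns True and the registry has been checked manually, as described in the last two steps.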

2.4.1. Reverting QuayEcosystem Upgrade

If something goes wrong during the automatic upgrade from QuayEcosystem to QuayRegistry, follow these steps to revert to using the QuayEcosystem:

Procedure

- Delete the QuayRegistry using either the UI or kubectl:

    $ kubectl delete -n <namespace> quayregistry <quayecosystem-name>

- If external access was provided using a Route, change the Route to point back to the original Service using the UI or kubectl.

If your QuayEcosystem was managing the PostgreSQL database, the upgrade process will migrate your data to a new PostgreSQL database managed by the upgraded Operator. Your old database will not be changed or removed but Red Hat Quay will no longer use it once the migration is complete. If there are issues during the data migration, the upgrade process will exit and it is recommended that you continue with your database as an unmanaged component.

2.4.2. Supported QuayEcosystem Configurations for Upgrades

The Red Hat Quay Operator reports errors in its logs and in status.conditions if migrating a QuayEcosystem component fails or is unsupported. All unmanaged components should migrate successfully because no Kubernetes resources need to be adopted and all the necessary values are already provided in Red Hat Quay’s config.yaml file.

Database

Ephemeral database not supported (volumeSize field must be set).

Redis

Nothing special needed.

External Access

Only passthrough Route access is supported for automatic migration. Manual migration is required for other methods.

- LoadBalancer without custom hostname: After the QuayEcosystem is marked with label "quay-operator/migration-complete": "true", delete the metadata.ownerReferences field from existing Service before deleting the QuayEcosystem to prevent Kubernetes from garbage collecting the Service and removing the load balancer. A new Service will be created with metadata.name format <QuayEcosystem-name>-quay-app. Edit the spec.selector of the existing Service to match the spec.selector of the new Service so traffic to the old load balancer endpoint will now be directed to the new pods. You are now responsible for the old Service; the Quay Operator will not manage it.

- LoadBalancer/NodePort/Ingress with custom hostname: A new Service of type LoadBalancer will be created with metadata.name format <QuayEcosystem-name>-quay-app. Change your DNS settings to point to the status.loadBalancer endpoint provided by the new Service.
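For the LoadBalancer without custom hostname case, the two manual edits to the existing Service can be expressed as one transformation. The Python sketch below assumes both Service manifests have been exported and loaded as dictionaries. The function name is hypothetical, and the result still has to be applied to the cluster by hand.

```python
import copy


def detach_and_retarget(old_service: dict, new_service: dict) -> dict:
    """Return a copy of the old Service that survives deletion of the QuayEcosystem."""
    updated = copy.deepcopy(old_service)
    # Without ownerReferences, Kubernetes garbage collection leaves the Service alone.
    updated.get("metadata", {}).pop("ownerReferences", None)
    # Point the old load balancer endpoint at the pods selected by the new Service.
    updated.setdefault("spec", {})["selector"] = copy.deepcopy(
        new_service["spec"]["selector"]
    )
    return updated
```

Apply the updated Service before deleting the QuayEcosystem. Otherwise the owner reference is still in place when garbage collection runs.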

Clair

Nothing special needed.

Object Storage

QuayEcosystem did not have a managed object storage component, so object storage will always be marked as unmanaged. Local storage is not supported.

Repository Mirroring

Nothing special needed.