This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.ネットワーク

クラスターネットワークの設定および管理

概要

第1章 ネットワークについて

クラスター管理者は、クラスターで実行されるアプリケーションを外部トラフィックに公開し、ネットワーク接続のセキュリティーを保護するための複数のオプションがあります。

- ノードポートやロードバランサーなどのサービスタイプ

-

IngressやRouteなどの API リソース

デフォルトで、Kubernetes は各 Pod に、Pod 内で実行しているアプリケーションの内部 IP アドレスを割り当てます。Pod とそのコンテナーはネットワークネットワーク接続が可能ですが、クラスター外のクライアントにはネットワークアクセスがありません。アプリケーションを外部トラフィックに公開する場合、各 Pod に IP アドレスを割り当てると、ポートの割り当て、ネットワーク、名前の指定、サービス検出、負荷分散、アプリケーション設定、移行などの点で、Pod を物理ホストや仮想マシンのように扱うことができます。

一部のクラウドプラットフォームでは、169.254.169.254 IP アドレスでリッスンするメタデータ API があります。これは、IPv4 169.254.0.0/16 CIDR ブロックのリンクローカル IP アドレスです。

この CIDR ブロックは Pod ネットワークから到達できません。これらの IP アドレスへのアクセスを必要とする Pod には、Pod 仕様の spec.hostNetwork フィールドを true に設定して、ホストのネットワークアクセスが付与される必要があります。

Pod ホストのネットワークアクセスを許可する場合、Pod に基礎となるネットワークインフラストラクチャーへの特権アクセスを付与します。

1.1. OpenShift Container Platform DNS

フロントエンドサービスやバックエンドサービスなど、複数のサービスを実行して複数の Pod で使用している場合、フロントエンド Pod がバックエンドサービスと通信できるように、ユーザー名、サービス IP などの環境変数を作成します。サービスが削除され、再作成される場合には、新規の IP アドレスがそのサービスに割り当てられるので、フロントエンド Pod がサービス IP の環境変数の更新された値を取得するには、これを再作成する必要があります。さらに、バックエンドサービスは、フロントエンド Pod を作成する前に作成し、サービス IP が正しく生成され、フロントエンド Pod に環境変数として提供できるようにする必要があります。

そのため、OpenShift Container Platform には DNS が組み込まれており、これにより、サービスは、サービス IP/ポートと共にサービス DNS によって到達可能になります。

1.2. OpenShift Container Platform Ingress Operator

OpenShift Container Platform クラスターを作成すると、クラスターで実行している Pod およびサービスにはそれぞれ独自の IP アドレスが割り当てられます。IP アドレスは、近くで実行されている他の Pod やサービスからアクセスできますが、外部クライアントの外部からはアクセスできません。Ingress Operator は IngressController API を実装し、OpenShift Container Platform クラスターサービスへの外部アクセスを可能にするコンポーネントです。

Ingress Operator を使用すると、ルーティングを処理する 1 つ以上の HAProxy ベースの Ingress コントローラー をデプロイおよび管理することにより、外部クライアントがサービスにアクセスできるようになります。OpenShift Container Platform Route および Kubernetes Ingress リソースを指定して、トラフィックをルーティングするために Ingress Operator を使用します。endpointPublishingStrategy タイプおよび内部負荷分散を定義する機能などの Ingress コントローラー内の設定は、Ingress コントローラーエンドポイントを公開する方法を提供します。

1.2.1. ルートと Ingress の比較

OpenShift Container Platform の Kubernetes Ingress リソースは、クラスター内で Pod として実行される共有ルーターサービスと共に Ingress コントローラーを実装します。Ingress トラフィックを管理する最も一般的な方法は Ingress コントローラーを使用することです。他の通常の Pod と同様にこの Pod をスケーリングし、複製できます。このルーターサービスは、オープンソースのロードバランサーソリューションである HAProxy をベースとしています。

OpenShift Container Platform ルートは、クラスターのサービスに Ingress トラフィックを提供します。ルートは、Blue-Green デプロイメント向けに TLS 再暗号化、TLS パススルー、分割トラフィックなどの標準の Kubernetes Ingress コントローラーでサポートされない可能性のある高度な機能を提供します。

Ingress トラフィックは、ルートを介してクラスターのサービスにアクセスします。ルートおよび Ingress は、Ingress トラフィックを処理する主要なリソースです。Ingress は、外部要求を受け入れ、ルートに基づいてそれらを委譲するなどのルートと同様の機能を提供します。ただし、Ingress では、特定タイプの接続 (HTTP/2、HTTPS およびサーバー名 ID(SNI)、ならび証明書を使用した TLS のみを許可できます。OpenShift Container Platform では、ルートは、Ingress リソースで指定される各種の条件を満たすために生成されます。

第2章 ホストへのアクセス

OpenShift Container Platform インスタンスにアクセスして、セキュアなシェル (SSH) アクセスでコントロールプレーンノード (別名マスターノード) にアクセスするために bastion ホストを作成する方法を学びます。

2.1. インストーラーでプロビジョニングされるインフラストラクチャークラスターでの Amazon Web Services のホストへのアクセス

OpenShift Container Platform インストーラーは、OpenShift Container Platform クラスターにプロビジョニングされる Amazon Elastic Compute Cloud (Amazon EC2) インスタンスのパブリック IP アドレスを作成しません。OpenShift Container Platform ホストに対して SSH を実行できるようにするには、以下の手順を実行する必要があります。

手順

-

openshift-installコマンドで作成される仮想プライベートクラウド (VPC) に対する SSH アクセスを可能にするセキュリティーグループを作成します。 - インストーラーが作成したパブリックサブネットのいずれかに Amazon EC2 インスタンスを作成します。

パブリック IP アドレスを、作成した Amazon EC2 インスタンスに関連付けます。

OpenShift Container Platform のインストールとは異なり、作成した Amazon EC2 インスタンスを SSH キーペアに関連付ける必要があります。これにはインターネットを OpenShift Container Platform クラスターの VPC にブリッジ接続するための SSH bastion としてのみの単純な機能しかないため、このインスタンスにどのオペレーティングシステムを選択しても問題ありません。どの Amazon Machine Image (AMI) を使用するかについては、注意が必要です。たとえば、Red Hat Enterprise Linux CoreOS (RHCOS) では、インストーラーと同様に、Ignition でキーを指定することができます。

Amazon EC2 インスタンスをプロビジョニングし、これに対して SSH を実行した後に、OpenShift Container Platform インストールに関連付けた SSH キーを追加する必要があります。このキーは bastion インスタンスのキーとは異なる場合がありますが、異なるキーにしなければならない訳ではありません。

注記直接の SSH アクセスは、障害復旧を目的とする場合にのみ推奨されます。Kubernetes API が応答する場合、特権付き Pod を代わりに実行します。

-

oc get nodesを実行し、出力を検査し、マスターであるノードのいずれかを選択します。ホスト名はip-10-0-1-163.ec2.internalに類似したものになります。 Amazon EC2 に手動でデプロイした bastion SSH ホストから、そのコントロールプレーンホスト (別名マスターホスト) に対して SSH を実行します。インストール時に指定したものと同じ SSH キーを使用するようにします。

ssh -i <ssh-key-path> core@<master-hostname>

$ ssh -i <ssh-key-path> core@<master-hostname>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

第3章 ネットワーキング Operator の概要

OpenShift Container Platform は、複数のタイプのネットワーキング Operator をサポートします。これらのネットワーク Operator を使用して、クラスターネットワークを管理できます。

3.1. Cluster Network Operator

Cluster Network Operator (CNO) は、OpenShift Container Platform クラスター内のクラスターネットワークコンポーネントをデプロイおよび管理します。これには、インストール中にクラスター用に選択された Container Network Interface (CNI) のデフォルトネットワークプロバイダープラグインのデプロイメントが含まれます。詳細は、OpenShift Container Platform における Cluster Network Operator を参照してください。

3.2. DNS Operator

DNS Operator は、CoreDNS をデプロイして管理し、Pod に名前解決サービスを提供します。これにより、OpenShift Container Platform で DNS ベースの Kubernetes サービス検出が可能になります。詳細は、OpenShift Container Platform の DNS Operator を参照してください。

3.3. Ingress Operator

OpenShift Container Platform クラスターを作成すると、クラスターで実行している Pod およびサービスにはそれぞれの IP アドレスが割り当てられます。IP アドレスは、近くで実行されている他の Pod やサービスからアクセスできますが、外部クライアントの外部からはアクセスできません。Ingress Operator は IngressController API を実装し、OpenShift Container Platform クラスターサービスへの外部アクセスを可能にします。詳細は、OpenShift Container Platform の Ingress Operator を参照してください。

第4章 OpenShift Container Platform における Cluster Network Operator

Cluster Network Operator (CNO) は、インストール時にクラスター用に選択される Container Network Interface (CNI) デフォルトネットワークプロバイダープラグインを含む、OpenShift Container Platform クラスターの各種のクラスターネットワークコンポーネントをデプロイし、これらを管理します。

4.1. Cluster Network Operator

Cluster Network Operator は、operator.openshift.io API グループから network API を実装します。Operator は、デーモンセットを使用して OpenShift SDN デフォルト Container Network Interface (CNI) ネットワークプロバイダープラグイン、またはクラスターのインストール時に選択したデフォルトネットワークプロバイダープラグインをデプロイします。

手順

Cluster Network Operator は、インストール時に Kubernetes Deployment としてデプロイされます。

以下のコマンドを実行して Deployment のステータスを表示します。

oc get -n openshift-network-operator deployment/network-operator

$ oc get -n openshift-network-operator deployment/network-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

NAME READY UP-TO-DATE AVAILABLE AGE network-operator 1/1 1 1 56m

NAME READY UP-TO-DATE AVAILABLE AGE network-operator 1/1 1 1 56mCopy to Clipboard Copied! Toggle word wrap Toggle overflow 以下のコマンドを実行して、Cluster Network Operator の状態を表示します。

oc get clusteroperator/network

$ oc get clusteroperator/networkCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE network 4.5.4 True False False 50m

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE network 4.5.4 True False False 50mCopy to Clipboard Copied! Toggle word wrap Toggle overflow 以下のフィールドは、Operator のステータス (

AVAILABLE、PROGRESSING、およびDEGRADED) についての情報を提供します。AVAILABLEフィールドは、Cluster Network Operator が Available ステータス条件を報告する場合にTrueになります。

4.2. クラスターネットワーク設定の表示

すべての新規 OpenShift Container Platform インストールには、cluster という名前の network.config オブジェクトがあります。

手順

oc describeコマンドを使用して、クラスターネットワーク設定を表示します。oc describe network.config/cluster

$ oc describe network.config/clusterCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

4.3. Cluster Network Operator のステータス表示

oc describe コマンドを使用して、Cluster Network Operator のステータスを検査し、その詳細を表示することができます。

手順

以下のコマンドを実行して、Cluster Network Operator のステータスを表示します。

oc describe clusteroperators/network

$ oc describe clusteroperators/networkCopy to Clipboard Copied! Toggle word wrap Toggle overflow

4.4. Cluster Network Operator ログの表示

oc logs コマンドを使用して、Cluster Network Operator ログを表示できます。

手順

以下のコマンドを実行して、Cluster Network Operator のログを表示します。

oc logs --namespace=openshift-network-operator deployment/network-operator

$ oc logs --namespace=openshift-network-operator deployment/network-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow

4.5. Cluster Network Operator (CNO) の設定

クラスターネットワークの設定は、Cluster Network Operator (CNO) 設定の一部として指定され、cluster という名前のカスタムリソース (CR) オブジェクトに保存されます。CR は operator.openshift.io API グループの Network API のフィールドを指定します。

CNO 設定は、Network.config.openshift.io API グループの Network API からクラスターのインストール時に以下のフィールドを継承し、これらのフィールドは変更できません。

clusterNetwork- Pod IP アドレスの割り当てに使用する IP アドレスプール。

serviceNetwork- サービスの IP アドレスプール。

defaultNetwork.type- OpenShift SDN または OVN-Kubernetes などのクラスターネットワークプロバイダー。

クラスターのインストール後に、直前のセクションで一覧表示されているフィールドを変更することはできません。

defaultNetwork オブジェクトのフィールドを cluster という名前の CNO オブジェクトに設定することにより、クラスターのクラスターネットワークプロバイダー設定を指定できます。

4.5.1. Cluster Network Operator 設定オブジェクト

Cluster Network Operator (CNO) のフィールドは以下の表で説明されています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

CNO オブジェクトの名前。この名前は常に |

|

|

| Pod ID アドレスの割り当て、サブネット接頭辞の長さのクラスター内の個別ノードへの割り当てに使用される IP アドレスのブロックを指定する一覧です。以下に例を示します。

この値は読み取り専用であり、クラスターのインストール時に |

|

|

| サービスの IP アドレスのブロック。OpenShift SDN および OVN-Kubernetes Container Network Interface (CNI) ネットワークプロバイダーは、サービスネットワークの単一 IP アドレスブロックのみをサポートします。以下に例を示します。 spec: serviceNetwork: - 172.30.0.0/14

この値は読み取り専用であり、クラスターのインストール時に |

|

|

| クラスターネットワークの Container Network Interface (CNI) ネットワークプロバイダーを設定します。 |

|

|

| このオブジェクトのフィールドは、kube-proxy 設定を指定します。OVN-Kubernetes クラスターネットワークプロバイダーを使用している場合、kube-proxy 設定は機能しません。 |

defaultNetwork オブジェクト設定

defaultNetwork オブジェクトの値は、以下の表で定義されます。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

注記 OpenShift Container Platform はデフォルトで、OpenShift SDN Container Network Interface (CNI) クラスターネットワークプロバイダーを使用します。 |

|

|

| このオブジェクトは OpenShift SDN クラスターネットワークプロバイダーにのみ有効です。 |

|

|

| このオブジェクトは OVN-Kubernetes クラスターネットワークプロバイダーにのみ有効です。 |

OpenShift SDN CNI クラスターネットワークプロバイダーの設定

以下の表は、OpenShift SDN Container Network Interface (CNI) クラスターネットワークプロバイダーの設定フィールドについて説明しています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

| OpenShiftSDN のネットワーク分離モード。 |

|

|

| VXLAN オーバーレイネットワークの最大転送単位 (MTU)。通常、この値は自動的に設定されます。 |

|

|

|

すべての VXLAN パケットに使用するポート。デフォルト値は |

クラスターのインストール時にのみクラスターネットワークプロバイダーの設定を変更することができます。

OpenShift SDN 設定の例

OVN-Kubernetes CNI クラスターネットワークプロバイダーの設定

以下の表は OVN-Kubernetes CNI クラスターネットワークプロバイダーの設定フィールドについて説明しています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

| Geneve (Generic Network Virtualization Encapsulation) オーバーレイネットワークの MTU (maximum transmission unit)。通常、この値は自動的に設定されます。 |

|

|

| Geneve オーバーレイネットワークの UDP ポート。 |

クラスターのインストール時にのみクラスターネットワークプロバイダーの設定を変更することができます。

OVN-Kubernetes 設定の例

defaultNetwork:

type: OVNKubernetes

ovnKubernetesConfig:

mtu: 1400

genevePort: 6081

defaultNetwork:

type: OVNKubernetes

ovnKubernetesConfig:

mtu: 1400

genevePort: 6081kubeProxyConfig オブジェクト設定

kubeProxyConfig オブジェクトの値は以下の表で定義されます。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

注記

OpenShift Container Platform 4.3 以降で強化されたパフォーマンスの向上により、 |

|

|

|

kubeProxyConfig:

proxyArguments:

iptables-min-sync-period:

- 0s

|

4.5.2. Cluster Network Operator の設定例

以下の例では、詳細な CNO 設定が指定されています。

Cluster Network Operator オブジェクトのサンプル

第5章 OpenShift Container Platform の DNS Operator

DNS Operator は、Pod に対して名前解決サービスを提供するために CoreDNS をデプロイし、これを管理し、OpenShift 内での DNS ベースの Kubernetes サービス検出を可能にします。

5.1. DNS Operator

DNS Operator は、operator.openshift.io API グループから dns API を実装します。この Operator は、デーモンセットを使用して CoreDNS をデプロイし、デーモンセットのサービスを作成し、 kubelet を Pod に対して名前解決に CoreDNS サービス IP を使用するように指示するように設定します。

手順

DNS Operator は、インストール時に Deployment オブジェクトを使用してデプロイされます。

oc getコマンドを使用してデプロイメントのステータスを表示します。oc get -n openshift-dns-operator deployment/dns-operator

$ oc get -n openshift-dns-operator deployment/dns-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

NAME READY UP-TO-DATE AVAILABLE AGE dns-operator 1/1 1 1 23h

NAME READY UP-TO-DATE AVAILABLE AGE dns-operator 1/1 1 1 23hCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc getコマンドを使用して DNS Operator の状態を表示します。oc get clusteroperator/dns

$ oc get clusteroperator/dnsCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE dns 4.1.0-0.11 True False False 92m

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE dns 4.1.0-0.11 True False False 92mCopy to Clipboard Copied! Toggle word wrap Toggle overflow AVAILABLE、PROGRESSINGおよびDEGRADEDは、Operator のステータスについての情報を提供します。AVAILABLEは、CoreDNS デーモンセットからの 1 つ以上の Pod がAvailableステータス条件を報告する場合はTrueになります。

5.2. デフォルト DNS の表示

すべての新規 OpenShift Container Platform インストールには、default という名前の dns.operator があります。

手順

oc describeコマンドを使用してデフォルトのdnsを表示します。oc describe dns.operator/default

$ oc describe dns.operator/defaultCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

Copy to Clipboard Copied! Toggle word wrap Toggle overflow クラスターのサービス CIDR を見つけるには、

oc getコマンドを使用します。oc get networks.config/cluster -o jsonpath='{$.status.serviceNetwork}'$ oc get networks.config/cluster -o jsonpath='{$.status.serviceNetwork}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

出力例

[172.30.0.0/16]

[172.30.0.0/16]5.3. DNS 転送の使用

DNS 転送を使用すると、指定のゾーンにどのネームサーバーを使用するかを指定することで、ゾーンごとに /etc/resolv.conf で特定される転送設定をオーバーライドできます。転送されるゾーンが OpenShift Container Platform によって管理される Ingress ドメインである場合、アップストリームネームサーバーがドメインについて認証される必要があります。

手順

defaultという名前の DNS Operator オブジェクトを変更します。oc edit dns.operator/default

$ oc edit dns.operator/defaultCopy to Clipboard Copied! Toggle word wrap Toggle overflow これにより、

Serverに基づく追加のサーバー設定ブロックを使用してdns-defaultという名前の ConfigMap を作成し、更新できます。クエリーに一致するゾーンを持つサーバーがない場合、名前解決は/etc/resolv.confで指定されたネームサーバーにフォールバックします。DNS の例

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 注記serversが定義されていないか、または無効な場合、ConfigMap にはデフォルトサーバーのみが含まれます。ConfigMap を表示します。

oc get configmap/dns-default -n openshift-dns -o yaml

$ oc get configmap/dns-default -n openshift-dns -o yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 以前のサンプル DNS に基づく DNS ConfigMap の例

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

forwardPluginへの変更により、CoreDNS デーモンセットのローリング更新がトリガーされます。

5.4. DNS Operator のステータス

oc describe コマンドを使用して、DNS Operator のステータスを検査し、その詳細を表示することができます。

手順

DNS Operator のステータスを表示します。

oc describe clusteroperators/dns

$ oc describe clusteroperators/dns5.5. DNS Operator ログ

oc logs コマンドを使用して、DNS Operator ログを表示できます。

手順

DNS Operator のログを表示します。

oc logs -n openshift-dns-operator deployment/dns-operator -c dns-operator

$ oc logs -n openshift-dns-operator deployment/dns-operator -c dns-operator第6章 OpenShift Container Platform の Ingress Operator

6.1. OpenShift Container Platform Ingress Operator

OpenShift Container Platform クラスターを作成すると、クラスターで実行している Pod およびサービスにはそれぞれ独自の IP アドレスが割り当てられます。IP アドレスは、近くで実行されている他の Pod やサービスからアクセスできますが、外部クライアントの外部からはアクセスできません。Ingress Operator は IngressController API を実装し、OpenShift Container Platform クラスターサービスへの外部アクセスを可能にするコンポーネントです。

Ingress Operator を使用すると、ルーティングを処理する 1 つ以上の HAProxy ベースの Ingress コントローラー をデプロイおよび管理することにより、外部クライアントがサービスにアクセスできるようになります。OpenShift Container Platform Route および Kubernetes Ingress リソースを指定して、トラフィックをルーティングするために Ingress Operator を使用します。endpointPublishingStrategy タイプおよび内部負荷分散を定義する機能などの Ingress コントローラー内の設定は、Ingress コントローラーエンドポイントを公開する方法を提供します。

6.2. Ingress 設定アセット

インストールプログラムでは、config.openshift.io API グループの Ingress リソースでアセットを生成します (cluster-ingress-02-config.yml)。

Ingress リソースの YAML 定義

インストールプログラムは、このアセットを manifests/ ディレクトリーの cluster-ingress-02-config.yml ファイルに保存します。この Ingress リソースは、Ingress のクラスター全体の設定を定義します。この Ingress 設定は、以下のように使用されます。

- Ingress Operator は、クラスター Ingress 設定のドメインを、デフォルト Ingress コントローラーのドメインとして使用します。

-

OpenShift API Server Operator は、クラスター Ingress 設定からのドメインを使用します。このドメインは、明示的なホストを指定しない

Routeリソースのデフォルトホストを生成する際にも使用されます。

6.3. イメージコントローラー設定パラメーター

ingresscontrollers.operator.openshift.io リソースは以下の設定パラメーターを提供します。

| パラメーター | 説明 |

|---|---|

|

|

空の場合、デフォルト値は |

|

|

|

|

|

設定されていない場合、デフォルト値は

|

|

|

シークレットには以下のキーおよびデータが含まれる必要があります: *

設定されていない場合、ワイルドカード証明書は自動的に生成され、使用されます。証明書は Ingress コントーラーの 使用中の証明書 (生成されるか、ユーザー指定の場合かを問わない) は OpenShift Container Platform のビルトイン OAuth サーバーに自動的に統合されます。 |

|

|

|

|

|

|

|

|

設定されていない場合は、デフォルト値が使用されます。 注記

|

|

|

これが設定されていない場合、デフォルト値は

Ingress コントローラーの最小の TLS バージョンは 重要

HAProxy Ingress コントローラーイメージは TLS

また、Ingress Operator は TLS

OpenShift Container Platform ルーターは、TLS_AES_128_CCM_SHA256、TLS_CHACHA20_POLY1305_SHA256、TLS_AES_256_GCM_SHA384、および TLS_AES_128_GCM_SHA256 を使用する TLS 注記

設定されたセキュリティープロファイルの暗号および最小 TLS バージョンが |

|

|

|

|

|

|

|

|

デフォルトでは、ポリシーは

|

すべてのパラメーターはオプションです。

6.3.1. Ingress コントローラーの TLS セキュリティープロファイル

TLS セキュリティープロファイルは、サーバーに接続する際に接続クライアントが使用できる暗号を規制する方法をサーバーに提供します。

6.3.1.1. TLS セキュリティープロファイルについて

TLS (Transport Layer Security) セキュリティープロファイルを使用して、さまざまな OpenShift Container Platform コンポーネントに必要な TLS 暗号を定義できます。OpenShift Container Platform の TLS セキュリティープロファイルは、Mozilla が推奨する設定 に基づいています。

コンポーネントごとに、以下の TLS セキュリティープロファイルのいずれかを指定できます。

| プロファイル | 説明 |

|---|---|

|

| このプロファイルは、レガシークライアントまたはライブラリーでの使用を目的としています。このプロファイルは、Old 後方互換性 の推奨設定に基づいています。

注記 Ingress コントローラーの場合、TLS の最小バージョンは 1.0 から 1.1 に変換されます。 |

|

| このプロファイルは、大多数のクライアントに推奨される設定です。これは、Ingress コントローラーおよびコントロールプレーンのデフォルトの TLS セキュリティープロファイルです。このプロファイルは、Intermediate 互換性 の推奨設定に基づいています。

|

|

| このプロファイルは、後方互換性を必要としない Modern のクライアントでの使用を目的としています。このプロファイルは、Modern 互換性 の推奨設定に基づいています。

注記

OpenShift Container Platform 4.6、4.7、および 4.8 では、 重要

|

|

| このプロファイルを使用すると、使用する TLS バージョンと暗号を定義できます。 警告

無効な設定により問題が発生する可能性があるため、 注記

OpenShift Container Platform ルーターは、Red Hat 分散の OpenSSL デフォルトセットの TLS |

事前定義されたプロファイルタイプのいずれかを使用する場合、有効なプロファイル設定はリリース間で変更される可能性があります。たとえば、リリース X.Y.Z にデプロイされた Intermediate プロファイルを使用する仕様がある場合、リリース X.Y.Z+1 へのアップグレードにより、新規のプロファイル設定が適用され、ロールアウトが生じる可能性があります。

6.3.1.2. Ingress コントローラーの TLS セキュリティープロファイルの設定

Ingress コントローラーの TLS セキュリティープロファイルを設定するには、IngressController カスタムリソース (CR) を編集して、事前定義済みまたはカスタムの TLS セキュリティープロファイルを指定します。TLS セキュリティープロファイルが設定されていない場合、デフォルト値は API サーバーに設定された TLS セキュリティープロファイルに基づいています。

Old TLS のセキュリティープロファイルを設定するサンプル IngressController CR

TLS セキュリティープロファイルは、Ingress コントローラーの TLS 接続の最小 TLS バージョンと TLS 暗号を定義します。

設定された TLS セキュリティープロファイルの暗号と最小 TLS バージョンは、Status.Tls Profile 配下の IngressController カスタムリソース (CR) と Spec.Tls Security Profile 配下の設定された TLS セキュリティープロファイルで確認できます。Custom TLS セキュリティープロファイルの場合、特定の暗号と最小 TLS バージョンは両方のパラメーターの下に一覧表示されます。

HAProxy Ingress コントローラーイメージは TLS 1.3 をサポートしません。Modern プロファイルには TLS 1.3 が必要であることから、これはサポートされません。Ingress Operator は Modern プロファイルを Intermediate に変換します。また、Ingress Operator は TLS 1.0 の Old または Custom プロファイルを 1.1 に変換し、TLS 1.3 の Custom プロファイルを 1.2 に変換します。

前提条件

-

cluster-adminロールを持つユーザーとしてクラスターにアクセスできる。

手順

openshift-ingress-operatorプロジェクトのIngressControllerCR を編集して、TLS セキュリティープロファイルを設定します。oc edit IngressController default -n openshift-ingress-operator

$ oc edit IngressController default -n openshift-ingress-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow spec.tlsSecurityProfileフィールドを追加します。CustomプロファイルのサンプルIngressControllerCRCopy to Clipboard Copied! Toggle word wrap Toggle overflow - 変更を適用するためにファイルを保存します。

検証

IngressControllerCR にプロファイルが設定されていることを確認します。oc describe IngressController default -n openshift-ingress-operator

$ oc describe IngressController default -n openshift-ingress-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

6.3.2. Ingress コントローラーエンドポイントの公開ストラテジー

NodePortService エンドポイントの公開ストラテジー

NodePortService エンドポイントの公開ストラテジーは、Kubernetes NodePort サービスを使用して Ingress コントローラーを公開します。

この設定では、Ingress コントローラーのデプロイメントはコンテナーのネットワークを使用します。NodePortService はデプロイメントを公開するために作成されます。特定のノードポートは OpenShift Container Platform によって動的に割り当てられますが、静的ポートの割り当てをサポートするために、管理される NodePortService のノードポートフィールドへの変更が保持されます。

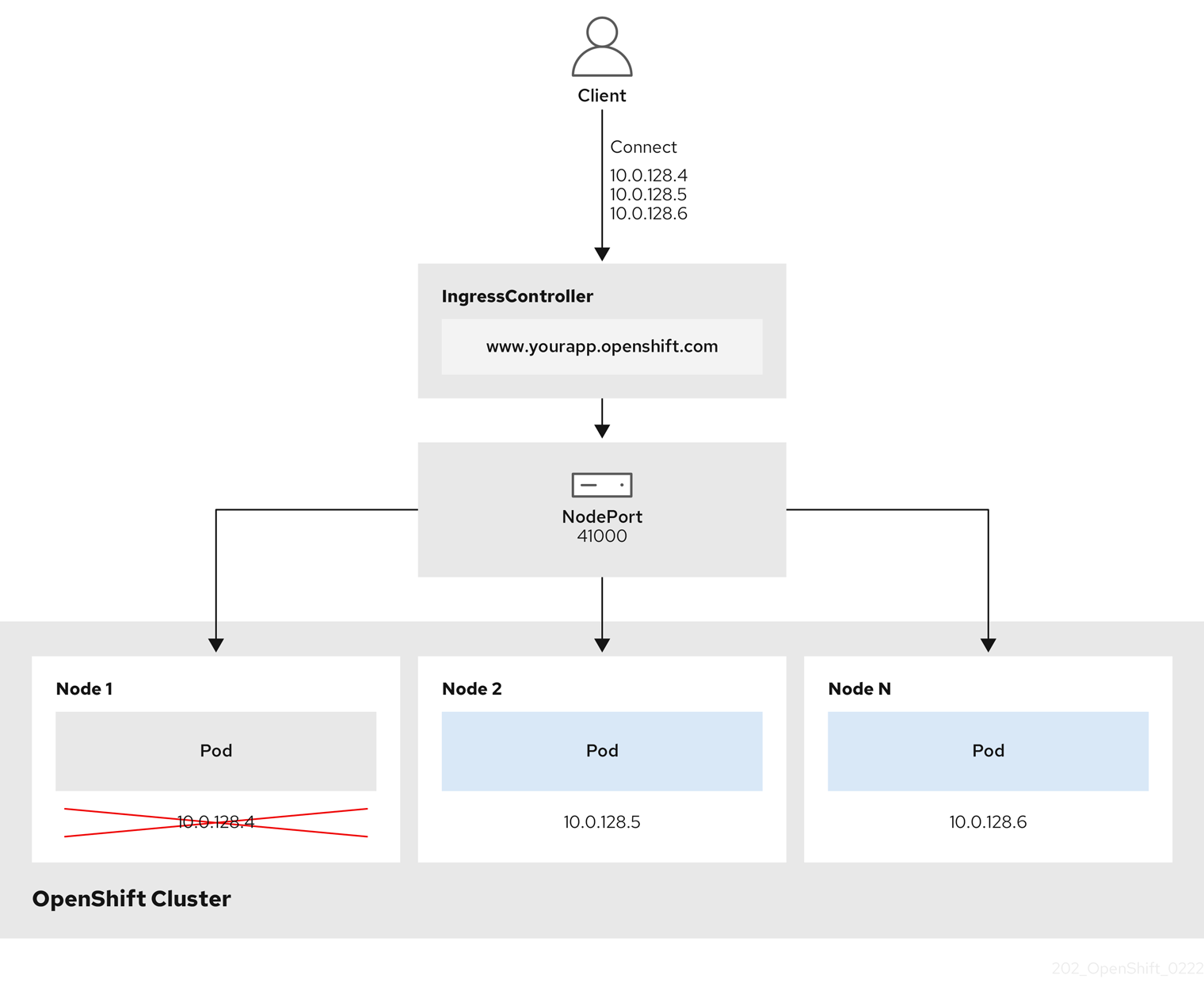

図6.1 NodePortService の図

前述の図では、OpenShift Container Platform Ingress NodePort エンドポイントの公開戦略に関する以下のような概念を示しています。

- クラスターで利用可能なノードにはすべて、外部からアクセス可能な独自の IP アドレスが割り当てられています。クラスター内で動作するサービスは、全ノードに固有の NodePort にバインドされます。

-

たとえば、クライアントが図中の IP アドレス

10.0.128.4に接続してダウンしているノードに接続した場合に、ノードポートは、サービスを実行中で利用可能なノードにクライアントを直接接続します。このシナリオでは、ロードバランシングは必要ありません。イメージが示すように、10.0.128.4アドレスがダウンしており、代わりに別の IP アドレスを使用する必要があります。

Ingress Operator は、サービスの .spec.ports[].nodePort フィールドへの更新を無視します。

デフォルトで、ポートは自動的に割り当てられ、各種の統合用のポート割り当てにアクセスできます。ただし、既存のインフラストラクチャーと統合するために静的ポートの割り当てが必要になることがありますが、これは動的ポートに対応して簡単に再設定できない場合があります。静的ノードポートとの統合を実行するには、管理対象のサービスリソースを直接更新できます。

詳細は、NodePort についての Kubernetes サービスについてのドキュメント を参照してください。

HostNetwork エンドポイントの公開ストラテジー

HostNetwork エンドポイントの公開ストラテジーは、Ingress コントローラーがデプロイされるノードポートで Ingress コントローラーを公開します。

HostNetwork エンドポイント公開ストラテジーを持つ Ingress コントローラーには、ノードごとに単一の Pod レプリカのみを設定できます。n のレプリカを使用する場合、それらのレプリカをスケジュールできる n 以上のノードを使用する必要があります。各 Pod はスケジュールされるノードホストでポート 80 および 443 を要求するので、同じノードで別の Pod がそれらのポートを使用している場合、レプリカをノードにスケジュールすることはできません。

6.4. デフォルト Ingress コントローラーの表示

Ingress Operator は、OpenShift Container Platform の中核となる機能であり、追加の設定なしに有効にできます。

すべての新規 OpenShift Container Platform インストールには、ingresscontroller の名前付きのデフォルトがあります。これは、追加の Ingress コントローラーで補足できます。デフォルトの ingresscontroller が削除される場合、Ingress Operator は 1 分以内にこれを自動的に再作成します。

手順

デフォルト Ingress コントローラーを表示します。

oc describe --namespace=openshift-ingress-operator ingresscontroller/default

$ oc describe --namespace=openshift-ingress-operator ingresscontroller/defaultCopy to Clipboard Copied! Toggle word wrap Toggle overflow

6.5. Ingress Operator ステータスの表示

Ingress Operator のステータスを表示し、検査することができます。

手順

Ingress Operator ステータスを表示します。

oc describe clusteroperators/ingress

$ oc describe clusteroperators/ingressCopy to Clipboard Copied! Toggle word wrap Toggle overflow

6.6. Ingress コントローラーログの表示

Ingress コントローラーログを表示できます。

手順

Ingress コントローラーログを表示します。

oc logs --namespace=openshift-ingress-operator deployments/ingress-operator

$ oc logs --namespace=openshift-ingress-operator deployments/ingress-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow

6.7. Ingress コントローラーステータスの表示

特定の Ingress コントローラーのステータスを表示できます。

手順

Ingress コントローラーのステータスを表示します。

oc describe --namespace=openshift-ingress-operator ingresscontroller/<name>

$ oc describe --namespace=openshift-ingress-operator ingresscontroller/<name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

6.8. Ingress コントローラーの設定

6.8.1. カスタムデフォルト証明書の設定

管理者として、 Secret リソースを作成し、IngressController カスタムリソース (CR) を編集して Ingress コントローラーがカスタム証明書を使用するように設定できます。

前提条件

- PEM エンコードされたファイルに証明書/キーのペアがなければなりません。ここで、証明書は信頼される認証局またはカスタム PKI で設定されたプライベートの信頼される認証局で署名されます。

証明書が以下の要件を満たしている必要があります。

- 証明書が Ingress ドメインに対して有効化されている必要があります。

-

証明書は拡張を使用して、

subjectAltName拡張を使用して、*.apps.ocp4.example.comなどのワイルドカードドメインを指定します。

IngressControllerCR がなければなりません。デフォルトの CR を使用できます。oc --namespace openshift-ingress-operator get ingresscontrollers

$ oc --namespace openshift-ingress-operator get ingresscontrollersCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

NAME AGE default 10m

NAME AGE default 10mCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Intermediate 証明書がある場合、それらはカスタムデフォルト証明書が含まれるシークレットの tls.crt ファイルに組み込まれる必要があります。証明書を指定する際の順序は重要になります。サーバー証明書の後に Intermediate 証明書を一覧表示します。

手順

以下では、カスタム証明書とキーのペアが、現在の作業ディレクトリーの tls.crt および tls.key ファイルにあることを前提とします。tls.crt および tls.key を実際のパス名に置き換えます。さらに、 Secret リソースを作成し、これを IngressController CR で参照する際に、custom-certs-default を別の名前に置き換えます。

このアクションにより、Ingress コントローラーはデプロイメントストラテジーを使用して再デプロイされます。

tls.crtおよびtls.keyファイルを使用して、カスタム証明書を含む Secret リソースをopenshift-ingressnamespace に作成します。oc --namespace openshift-ingress create secret tls custom-certs-default --cert=tls.crt --key=tls.key

$ oc --namespace openshift-ingress create secret tls custom-certs-default --cert=tls.crt --key=tls.keyCopy to Clipboard Copied! Toggle word wrap Toggle overflow IngressController CR を、新規証明書シークレットを参照するように更新します。

oc patch --type=merge --namespace openshift-ingress-operator ingresscontrollers/default \ --patch '{"spec":{"defaultCertificate":{"name":"custom-certs-default"}}}'$ oc patch --type=merge --namespace openshift-ingress-operator ingresscontrollers/default \ --patch '{"spec":{"defaultCertificate":{"name":"custom-certs-default"}}}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow 更新が正常に行われていることを確認します。

echo Q |\ openssl s_client -connect console-openshift-console.apps.<domain>:443 -showcerts 2>/dev/null |\ openssl x509 -noout -subject -issuer -enddate

$ echo Q |\ openssl s_client -connect console-openshift-console.apps.<domain>:443 -showcerts 2>/dev/null |\ openssl x509 -noout -subject -issuer -enddateCopy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<domain>- クラスターのベースドメイン名を指定します。

出力例

subject=C = US, ST = NC, L = Raleigh, O = RH, OU = OCP4, CN = *.apps.example.com issuer=C = US, ST = NC, L = Raleigh, O = RH, OU = OCP4, CN = example.com notAfter=May 10 08:32:45 2022 GM

subject=C = US, ST = NC, L = Raleigh, O = RH, OU = OCP4, CN = *.apps.example.com issuer=C = US, ST = NC, L = Raleigh, O = RH, OU = OCP4, CN = example.com notAfter=May 10 08:32:45 2022 GMCopy to Clipboard Copied! Toggle word wrap Toggle overflow 証明書シークレットの名前は、CR を更新するために使用された値に一致する必要があります。

IngressController CR が変更された後に、Ingress Operator はカスタム証明書を使用できるように Ingress コントローラーのデプロイメントを更新します。

6.8.2. カスタムデフォルト証明書の削除

管理者は、使用する Ingress Controller を設定したカスタム証明書を削除できます。

前提条件

-

cluster-adminロールを持つユーザーとしてクラスターにアクセスできる。 -

OpenShift CLI (

oc) がインストールされている。 - Ingress Controller のカスタムデフォルト証明書を設定している。

手順

カスタム証明書を削除し、OpenShift Container Platform に同梱されている証明書を復元するには、以下のコマンドを入力します。

oc patch -n openshift-ingress-operator ingresscontrollers/default \ --type json -p $'- op: remove\n path: /spec/defaultCertificate'

$ oc patch -n openshift-ingress-operator ingresscontrollers/default \ --type json -p $'- op: remove\n path: /spec/defaultCertificate'Copy to Clipboard Copied! Toggle word wrap Toggle overflow クラスターが新しい証明書設定を調整している間、遅延が発生する可能性があります。

検証

元のクラスター証明書が復元されたことを確認するには、次のコマンドを入力します。

echo Q | \ openssl s_client -connect console-openshift-console.apps.<domain>:443 -showcerts 2>/dev/null | \ openssl x509 -noout -subject -issuer -enddate

$ echo Q | \ openssl s_client -connect console-openshift-console.apps.<domain>:443 -showcerts 2>/dev/null | \ openssl x509 -noout -subject -issuer -enddateCopy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<domain>- クラスターのベースドメイン名を指定します。

出力例

subject=CN = *.apps.<domain> issuer=CN = ingress-operator@1620633373 notAfter=May 10 10:44:36 2023 GMT

subject=CN = *.apps.<domain> issuer=CN = ingress-operator@1620633373 notAfter=May 10 10:44:36 2023 GMTCopy to Clipboard Copied! Toggle word wrap Toggle overflow

6.8.3. Ingress コントローラーのスケーリング

Ingress コントローラーは、スループットを増大させるための要件を含む、ルーティングのパフォーマンスや可用性に関する各種要件に対応するために手動でスケーリングできます。oc コマンドは、IngressController リソースのスケーリングに使用されます。以下の手順では、デフォルトの IngressController をスケールアップする例を示します。

手順

デフォルト

IngressControllerの現在の利用可能なレプリカ数を表示します。oc get -n openshift-ingress-operator ingresscontrollers/default -o jsonpath='{$.status.availableReplicas}'$ oc get -n openshift-ingress-operator ingresscontrollers/default -o jsonpath='{$.status.availableReplicas}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

2

2Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc patchコマンドを使用して、デフォルトのIngressControllerを必要なレプリカ数にスケーリングします。以下の例では、デフォルトのIngressControllerを 3 つのレプリカにスケーリングしています。oc patch -n openshift-ingress-operator ingresscontroller/default --patch '{"spec":{"replicas": 3}}' --type=merge$ oc patch -n openshift-ingress-operator ingresscontroller/default --patch '{"spec":{"replicas": 3}}' --type=mergeCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

ingresscontroller.operator.openshift.io/default patched

ingresscontroller.operator.openshift.io/default patchedCopy to Clipboard Copied! Toggle word wrap Toggle overflow デフォルトの

IngressControllerが指定したレプリカ数にスケーリングされていることを確認します。oc get -n openshift-ingress-operator ingresscontrollers/default -o jsonpath='{$.status.availableReplicas}'$ oc get -n openshift-ingress-operator ingresscontrollers/default -o jsonpath='{$.status.availableReplicas}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

3

3Copy to Clipboard Copied! Toggle word wrap Toggle overflow

スケーリングは、必要な数のレプリカを作成するのに時間がかかるため、すぐに実行できるアクションではありません。

6.8.4. Ingress アクセスロギングの設定

アクセスログを有効にするように Ingress コントローラーを設定できます。大量のトラフィックを受信しないクラスターがある場合、サイドカーにログインできます。クラスターのトラフィックが多い場合、ロギングスタックの容量を超えないようにしたり、OpenShift Container Platform 外のロギングインフラストラクチャーと統合したりするために、ログをカスタム syslog エンドポイントに転送することができます。アクセスログの形式を指定することもできます。

コンテナーロギングは、既存の Syslog ロギングインフラストラクチャーがない場合や、Ingress コントローラーで問題を診断する際に短期間使用する場合に、低トラフィックのクラスターのアクセスログを有効にするのに役立ちます。

アクセスログがクラスターロギングスタックの容量を超える可能性があるトラフィックの多いクラスターや、ロギングソリューションが既存の Syslog ロギングインフラストラクチャーと統合する必要のある環境では、syslog が必要です。Syslog のユースケースは重複する可能性があります。

前提条件

-

cluster-admin権限を持つユーザーとしてログインしている。

手順

サイドカーへの Ingress アクセスロギングを設定します。

Ingress アクセスロギングを設定するには、

spec.logging.access.destinationを使用して宛先を指定する必要があります。サイドカーコンテナーへのロギングを指定するには、Containerspec.logging.access.destination.typeを指定する必要があります。以下の例は、コンテナーContainerの宛先に対してログ記録する Ingress コントローラー定義です。Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- サイドカーへの Ingress アクセスロギングの設定では、

NodePortServiceは必要ありません。Ingress ロギングは、すべてのendpointPublishingStrategyと互換性があります。

Ingress コントローラーをサイドカーに対してログを記録するように設定すると、Operator は Ingress コントローラー Pod 内に

logsという名前のコンテナーを作成します。oc -n openshift-ingress logs deployment.apps/router-default -c logs

$ oc -n openshift-ingress logs deployment.apps/router-default -c logsCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

2020-05-11T19:11:50.135710+00:00 router-default-57dfc6cd95-bpmk6 router-default-57dfc6cd95-bpmk6 haproxy[108]: 174.19.21.82:39654 [11/May/2020:19:11:50.133] public be_http:hello-openshift:hello-openshift/pod:hello-openshift:hello-openshift:10.128.2.12:8080 0/0/1/0/1 200 142 - - --NI 1/1/0/0/0 0/0 "GET / HTTP/1.1"

2020-05-11T19:11:50.135710+00:00 router-default-57dfc6cd95-bpmk6 router-default-57dfc6cd95-bpmk6 haproxy[108]: 174.19.21.82:39654 [11/May/2020:19:11:50.133] public be_http:hello-openshift:hello-openshift/pod:hello-openshift:hello-openshift:10.128.2.12:8080 0/0/1/0/1 200 142 - - --NI 1/1/0/0/0 0/0 "GET / HTTP/1.1"Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Syslog エンドポイントへの Ingress アクセスロギングを設定します。

Ingress アクセスロギングを設定するには、

spec.logging.access.destinationを使用して宛先を指定する必要があります。Syslog エンドポイント宛先へのロギングを指定するには、spec.logging.access.destination.typeにSyslogを指定する必要があります。宛先タイプがSyslogの場合、spec.logging.access.destination.syslog.endpointを使用して宛先エンドポイントも指定する必要があります。また、spec.logging.access.destination.syslog.facilityを使用してファシリティーを指定できます。以下の例は、Syslog宛先に対してログを記録する Ingress コントローラーの定義です。Copy to Clipboard Copied! Toggle word wrap Toggle overflow 注記syslog宛先ポートは UDP である必要があります。

特定のログ形式で Ingress アクセスロギングを設定します。

spec.logging.access.httpLogFormatを指定して、ログ形式をカスタマイズできます。以下の例は、IP アドレスが 1.2.3.4 およびポート 10514 のsyslogエンドポイントに対してログを記録する Ingress コントローラーの定義です。Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Ingress アクセスロギングを無効にします。

Ingress アクセスロギングを無効にするには、

spec.loggingまたはspec.logging.accessを空のままにします。Copy to Clipboard Copied! Toggle word wrap Toggle overflow

6.8.5. Ingress コントローラーのシャード化

トラフィックがクラスターに送信される主要なメカニズムとして、Ingress コントローラーまたはルーターへの要求が大きくなる可能性があります。クラスター管理者は、以下を実行するためにルートをシャード化できます。

- Ingress コントローラーまたはルーターを複数のルートに分散し、変更に対する応答を加速します。

- 特定のルートを他のルートとは異なる信頼性の保証を持つように割り当てます。

- 特定の Ingress コントローラーに異なるポリシーを定義することを許可します。

- 特定のルートのみが追加機能を使用することを許可します。

- たとえば、異なるアドレスで異なるルートを公開し、内部ユーザーおよび外部ユーザーが異なるルートを認識できるようにします。

Ingress コントローラーは、ルートラベルまたは namespace ラベルのいずれかをシャード化の方法として使用できます。

6.8.5.1. ルートラベルを使用した Ingress コントローラーのシャード化の設定

ルートラベルを使用した Ingress コントローラーのシャード化とは、Ingress コントローラーがルートセレクターによって選択される任意 namespace の任意のルートを提供することを意味します。

Ingress コントローラーのシャード化は、一連の Ingress コントローラー間で着信トラフィックの負荷を分散し、トラフィックを特定の Ingress コントローラーに分離する際に役立ちます。たとえば、Company A のトラフィックをある Ingress コントローラーに指定し、Company B を別の Ingress コントローラーに指定できます。

手順

router-internal.yamlファイルを編集します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow Ingress コントローラーの

router-internal.yamlファイルを適用します。oc apply -f router-internal.yaml

# oc apply -f router-internal.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Ingress コントローラーは、

type: shardedというラベルのある namespace のルートを選択します。

6.8.5.2. namespace ラベルを使用した Ingress コントローラーのシャード化の設定

namespace ラベルを使用した Ingress コントローラーのシャード化とは、Ingress コントローラーが namespace セレクターによって選択される任意の namespace の任意のルートを提供することを意味します。

Ingress コントローラーのシャード化は、一連の Ingress コントローラー間で着信トラフィックの負荷を分散し、トラフィックを特定の Ingress コントローラーに分離する際に役立ちます。たとえば、Company A のトラフィックをある Ingress コントローラーに指定し、Company B を別の Ingress コントローラーに指定できます。

Keepalived Ingress VIP をデプロイする場合は、endpoint Publishing Strategy パラメーターに Host Network の値が割り当てられた、デフォルト以外の Ingress Controller をデプロイしないでください。デプロイしてしまうと、問題が発生する可能性があります。endpoint Publishing Strategy に Host Network ではなく、Node Port という値を使用してください。

手順

router-internal.yamlファイルを編集します。cat router-internal.yaml

# cat router-internal.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Ingress コントローラーの

router-internal.yamlファイルを適用します。oc apply -f router-internal.yaml

# oc apply -f router-internal.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Ingress コントローラーは、

type: shardedというラベルのある namespace セレクターによって選択される namespace のルートを選択します。

6.8.6. 内部ロードバランサーを使用するように Ingress コントローラーを設定する

クラウドプラットフォームで Ingress コントローラーを作成する場合、Ingress コントローラーはデフォルトでパブリッククラウドロードバランサーによって公開されます。管理者は、内部クラウドロードバランサーを使用する Ingress コントローラーを作成できます。

クラウドプロバイダーが Microsoft Azure の場合、ノードを参照するパブリックロードバランサーが少なくとも 1 つ必要です。これがない場合、すべてのノードがインターネットへの egress 接続を失います。

IngressController オブジェクトの スコープ を変更する必要がある場合、IngressController オブジェクトを削除してから、これを再作成する必要があります。カスタムリソース (CR) の作成後に .spec.endpointPublishingStrategy.loadBalancer.scope パラメーターを変更することはできません。

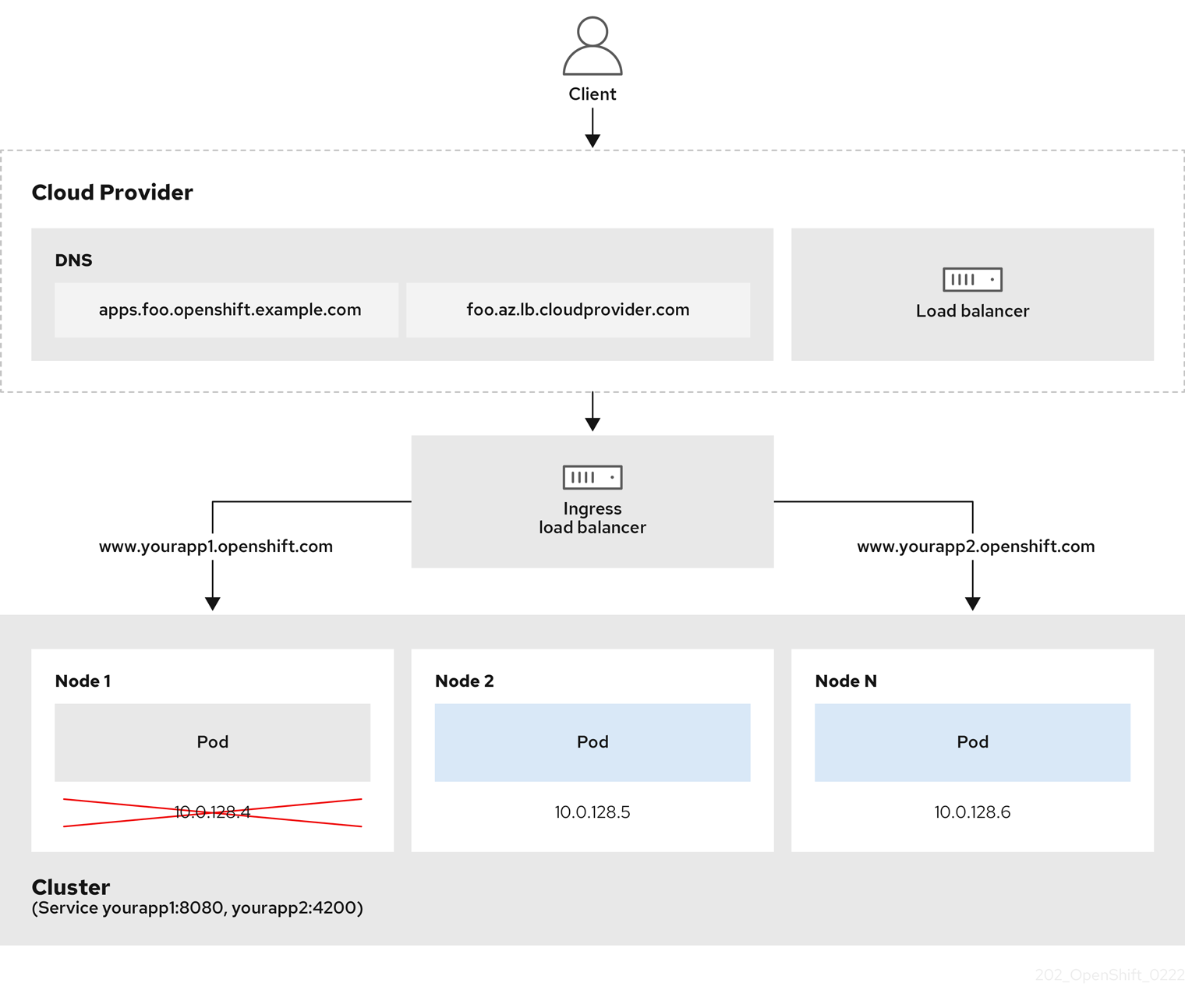

図6.2 ロードバランサーの図

前述の図では、OpenShift Container Platform Ingress LoadBalancerService エンドポイントの公開戦略に関する以下のような概念を示しています。

- 負荷は、外部からクラウドプロバイダーのロードバランサーを使用するか、内部から OpenShift Ingress Controller Load Balancer を使用して、分散できます。

- ロードバランサーのシングル IP アドレスと、図にあるクラスターのように、8080 や 4200 といった馴染みのあるポートを使用することができます。

- 外部のロードバランサーからのトラフィックは、ダウンしたノードのインスタンスで記載されているように、Pod の方向に進められ、ロードバランサーが管理します。実装の詳細については、Kubernetes サービスドキュメント を参照してください。

前提条件

-

OpenShift CLI (

oc) をインストールしている。 -

cluster-admin権限を持つユーザーとしてログインしている。

手順

以下の例のように、

<name>-ingress-controller.yamlという名前のファイルにIngressControllerカスタムリソース (CR) を作成します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow 以下のコマンドを実行して、直前の手順で定義された Ingress コントローラーを作成します。

oc create -f <name>-ingress-controller.yaml

$ oc create -f <name>-ingress-controller.yaml1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

<name>をIngressControllerオブジェクトの名前に置き換えます。

オプション: 以下のコマンドを実行して Ingress コントローラーが作成されていることを確認します。

oc --all-namespaces=true get ingresscontrollers

$ oc --all-namespaces=true get ingresscontrollersCopy to Clipboard Copied! Toggle word wrap Toggle overflow

6.8.7. クラスターを内部に配置するようにのデフォルト Ingress コントローラーを設定する

削除や再作成を実行して、クラスターを内部に配置するように default Ingress コントローラーを設定できます。

クラウドプロバイダーが Microsoft Azure の場合、ノードを参照するパブリックロードバランサーが少なくとも 1 つ必要です。これがない場合、すべてのノードがインターネットへの egress 接続を失います。

IngressController オブジェクトの スコープ を変更する必要がある場合、IngressController オブジェクトを削除してから、これを再作成する必要があります。カスタムリソース (CR) の作成後に .spec.endpointPublishingStrategy.loadBalancer.scope パラメーターを変更することはできません。

前提条件

-

OpenShift CLI (

oc) をインストールしている。 -

cluster-admin権限を持つユーザーとしてログインしている。

手順

削除や再作成を実行して、クラスターを内部に配置するように

defaultIngress コントローラーを設定します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow

6.8.8. ルートの受付ポリシーの設定

管理者およびアプリケーション開発者は、同じドメイン名を持つ複数の namespace でアプリケーションを実行できます。これは、複数のチームが同じホスト名で公開されるマイクロサービスを開発する組織を対象としています。

複数の namespace での要求の許可は、namespace 間の信頼のあるクラスターに対してのみ有効にする必要があります。有効にしないと、悪意のあるユーザーがホスト名を乗っ取る可能性があります。このため、デフォルトの受付ポリシーは複数の namespace 間でのホスト名の要求を許可しません。

前提条件

- クラスター管理者の権限。

手順

以下のコマンドを使用して、

ingresscontrollerリソース変数の.spec.routeAdmissionフィールドを編集します。oc -n openshift-ingress-operator patch ingresscontroller/default --patch '{"spec":{"routeAdmission":{"namespaceOwnership":"InterNamespaceAllowed"}}}' --type=merge$ oc -n openshift-ingress-operator patch ingresscontroller/default --patch '{"spec":{"routeAdmission":{"namespaceOwnership":"InterNamespaceAllowed"}}}' --type=mergeCopy to Clipboard Copied! Toggle word wrap Toggle overflow イメージコントローラー設定例

spec: routeAdmission: namespaceOwnership: InterNamespaceAllowed ...spec: routeAdmission: namespaceOwnership: InterNamespaceAllowed ...Copy to Clipboard Copied! Toggle word wrap Toggle overflow

6.8.9. ワイルドカードルートの使用

HAProxy Ingress コントローラーにはワイルドカードルートのサポートがあります。Ingress Operator は wildcardPolicy を使用して、Ingress コントローラーの ROUTER_ALLOW_WILDCARD_ROUTES 環境変数を設定します。

Ingress コントローラーのデフォルトの動作では、ワイルドカードポリシーの None (既存の IngressController リソースとの後方互換性がある) を持つルートを許可します。

手順

ワイルドカードポリシーを設定します。

以下のコマンドを使用して

IngressControllerリソースを編集します。oc edit IngressController

$ oc edit IngressControllerCopy to Clipboard Copied! Toggle word wrap Toggle overflow specの下で、wildcardPolicyフィールドをWildcardsDisallowedまたはWildcardsAllowedに設定します。spec: routeAdmission: wildcardPolicy: WildcardsDisallowed # or WildcardsAllowedspec: routeAdmission: wildcardPolicy: WildcardsDisallowed # or WildcardsAllowedCopy to Clipboard Copied! Toggle word wrap Toggle overflow

6.8.10. X-Forwarded ヘッダーの使用

Forwarded および X-Forwarded-For を含む HTTP ヘッダーの処理方法についてのポリシーを指定するように HAProxy Ingress コントローラーを設定します。Ingress Operator は HTTPHeaders フィールドを使用して、Ingress コントローラーの ROUTER_SET_FORWARDED_HEADERS 環境変数を設定します。

手順

Ingress コントローラー用に

HTTPHeadersフィールドを設定します。以下のコマンドを使用して

IngressControllerリソースを編集します。oc edit IngressController

$ oc edit IngressControllerCopy to Clipboard Copied! Toggle word wrap Toggle overflow specの下で、HTTPHeadersポリシーフィールドをAppend、Replace、IfNone、またはNeverに設定します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow

使用例

クラスター管理者として、以下を実行できます。

Ingress コントローラーに転送する前に、

X-Forwarded-Forヘッダーを各リクエストに挿入する外部プロキシーを設定します。ヘッダーを変更せずに渡すように Ingress コントローラーを設定するには、

neverポリシーを指定します。これにより、Ingress コントローラーはヘッダーを設定しなくなり、アプリケーションは外部プロキシーが提供するヘッダーのみを受信します。外部プロキシーが外部クラスター要求を設定する

X-Forwarded-Forヘッダーを変更せずに渡すように Ingress コントローラーを設定します。外部プロキシーを通過しない内部クラスター要求に

X-Forwarded-Forヘッダーを設定するように Ingress コントローラーを設定するには、if-noneポリシーを指定します。外部プロキシー経由で HTTP 要求にヘッダーがすでに設定されている場合、Ingress コントローラーはこれを保持します。要求がプロキシーを通過していないためにヘッダーがない場合、Ingress コントローラーはヘッダーを追加します。

アプリケーション開発者として、以下を実行できます。

X-Forwarded-Forヘッダーを挿入するアプリケーション固有の外部プロキシーを設定します。他の Route のポリシーに影響を与えずに、アプリケーションの Route 用にヘッダーを変更せずに渡すように Ingress コントローラーを設定するには、アプリケーションの Route に アノテーション

haproxy.router.openshift.io/set-forwarded-headers: if-noneまたはhaproxy.router.openshift.io/set-forwarded-headers: neverを追加します。注記Ingress コントローラーのグローバルに設定された値とは別に、

haproxy.router.openshift.io/set-forwarded-headersアノテーションをルートごとに設定できます。

6.8.11. HTTP/2 Ingress 接続の有効化

HAProxy で透過的なエンドツーエンド HTTP/2 接続を有効にすることができます。これにより、アプリケーションの所有者は、単一接続、ヘッダー圧縮、バイナリーストリームなど、HTTP/2 プロトコル機能を使用できます。

個別の Ingress コントローラーまたはクラスター全体について、HTTP/2 接続を有効にすることができます。

クライアントから HAProxy への接続について HTTP/2 の使用を有効にするために、ルートはカスタム証明書を指定する必要があります。デフォルトの証明書を使用するルートは HTTP/2 を使用することができません。この制限は、クライアントが同じ証明書を使用する複数の異なるルートに接続を再使用するなどの、接続の結合 (coalescing) の問題を回避するために必要です。

HAProxy からアプリケーション Pod への接続は、re-encrypt ルートのみに HTTP/2 を使用でき、edge termination ルートまたは非セキュアなルートには使用しません。この制限は、HAProxy が TLS 拡張である Application-Level Protocol Negotiation (ALPN) を使用してバックエンドで HTTP/2 の使用をネゴシエートするためにあります。そのため、エンドツーエンドの HTTP/2 はパススルーおよび re-encrypt 使用できますが、非セキュアなルートまたは edge termination ルートでは使用できません。

再暗号化ルートで WebSocket を使用し、Ingress Controller で HTTP/2 を有効にするには、HTTP/2 を介した WebSocket のサポートが必要です。HTTP/2 上の WebSockets は HAProxy 2.4 の機能であり、現時点では OpenShift Container Platform ではサポートされていません。

パススルー以外のルートの場合、Ingress コントローラーはクライアントからの接続とは独立してアプリケーションへの接続をネゴシエートします。つまり、クライアントが Ingress コントローラーに接続して HTTP/1.1 をネゴシエートし、Ingress コントローラーは次にアプリケーションに接続して HTTP/2 をネゴシエートし、アプリケーションへの HTTP/2 接続を使用してクライアント HTTP/1.1 接続からの要求の転送を実行できます。Ingress コントローラーは WebSocket を HTTP/2 に転送できず、その HTTP/2 接続を WebSocket に対してアップグレードできないため、クライアントが後に HTTP/1.1 から WebSocket プロトコルに接続をアップグレードしようとすると問題が発生します。そのため、WebSocket 接続を受け入れることが意図されたアプリケーションがある場合、これは HTTP/2 プロトコルのネゴシエートを許可できないようにする必要があります。そうしないと、クライアントは WebSocket プロトコルへのアップグレードに失敗します。

手順

単一 Ingress コントローラーで HTTP/2 を有効にします。

Ingress コントローラーで HTTP/2 を有効にするには、

oc annotateコマンドを入力します。oc -n openshift-ingress-operator annotate ingresscontrollers/<ingresscontroller_name> ingress.operator.openshift.io/default-enable-http2=true

$ oc -n openshift-ingress-operator annotate ingresscontrollers/<ingresscontroller_name> ingress.operator.openshift.io/default-enable-http2=trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow <ingresscontroller_name>をアノテーションを付ける Ingress コントローラーの名前に置き換えます。

クラスター全体で HTTP/2 を有効にします。

クラスター全体で HTTP/2 を有効にするには、

oc annotateコマンドを入力します。oc annotate ingresses.config/cluster ingress.operator.openshift.io/default-enable-http2=true

$ oc annotate ingresses.config/cluster ingress.operator.openshift.io/default-enable-http2=trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow

第7章 ノードポートサービス範囲の設定

クラスター管理者は、利用可能なノードのポート範囲を拡張できます。クラスターで多数のノードポートが使用される場合、利用可能なポートの数を増やす必要がある場合があります。

デフォルトのポート範囲は 30000-32767 です。最初にデフォルト範囲を超えて拡張した場合でも、ポート範囲を縮小することはできません。

7.1. 前提条件

-

クラスターインフラストラクチャーは、拡張された範囲内で指定するポートへのアクセスを許可する必要があります。たとえば、ノードのポート範囲を

30000-32900に拡張する場合、ファイアウォールまたはパケットフィルターリングの設定によりこれに含まれるポート範囲32768-32900を許可する必要があります。

7.2. ノードのポート範囲の拡張

クラスターのノードポート範囲を拡張できます。

前提条件

-

OpenShift CLI (

oc) をインストールしている。 -

cluster-admin権限を持つユーザーとしてクラスターにログインすること。

手順

ノードのポート範囲を拡張するには、以下のコマンドを入力します。

<port>を、新規の範囲内で最大のポート番号に置き換えます。oc patch network.config.openshift.io cluster --type=merge -p \ '{ "spec": { "serviceNodePortRange": "30000-<port>" } }'$ oc patch network.config.openshift.io cluster --type=merge -p \ '{ "spec": { "serviceNodePortRange": "30000-<port>" } }'Copy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

network.config.openshift.io/cluster patched

network.config.openshift.io/cluster patchedCopy to Clipboard Copied! Toggle word wrap Toggle overflow 設定がアクティブであることを確認するには、以下のコマンドを入力します。更新が適用されるまでに数分の時間がかかることがあります。

oc get configmaps -n openshift-kube-apiserver config \ -o jsonpath="{.data['config\.yaml']}" | \ grep -Eo '"service-node-port-range":["[[:digit:]]+-[[:digit:]]+"]'$ oc get configmaps -n openshift-kube-apiserver config \ -o jsonpath="{.data['config\.yaml']}" | \ grep -Eo '"service-node-port-range":["[[:digit:]]+-[[:digit:]]+"]'Copy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

"service-node-port-range":["30000-33000"]

"service-node-port-range":["30000-33000"]Copy to Clipboard Copied! Toggle word wrap Toggle overflow

第8章 ベアメタルクラスターでの SCTP (Stream Control Transmission Protocol) の使用

クラスター管理者は、クラスターで SCTP (Stream Control Transmission Protocol) を使用できます。

8.1. OpenShift Container Platform での SCTP (Stream Control Transmission Protocol) のサポート

クラスター管理者は、クラスターのホストで SCTP を有効にできます。Red Hat Enterprise Linux CoreOS (RHCOS) で、SCTP モジュールはデフォルトで無効にされています。

SCTP は、IP ネットワークの上部で実行される信頼できるメッセージベースのプロトコルです。

これを有効にすると、SCTP を Pod、サービス、およびネットワークポリシーでプロトコルとして使用できます。Service オブジェクトは、type パラメーターを ClusterIP または NodePort のいずれかの値に設定して定義する必要があります。

8.1.1. SCTP プロトコルを使用した設定例

protocol パラメーターを Pod またはサービスリソース定義の SCTP 値に設定して、Pod またはサービスを SCTP を使用するように設定できます。

以下の例では、Pod は SCTP を使用するように設定されています。

以下の例では、サービスは SCTP を使用するように設定されています。

以下の例では、NetworkPolicy オブジェクトは、特定のラベルの付いた Pod からポート 80 の SCTP ネットワークトラフィックに適用するように設定されます。

8.2. SCTP (Stream Control Transmission Protocol) の有効化

クラスター管理者は、クラスターのワーカーノードでブラックリストに指定した SCTP カーネルモジュールを読み込み、有効にできます。

前提条件

-

OpenShift CLI (

oc) をインストールしている。 -

cluster-adminロールを持つユーザーとしてクラスターにアクセスできる。

手順

以下の YAML 定義が含まれる

load-sctp-module.yamlという名前のファイルを作成します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow MachineConfigオブジェクトを作成するには、以下のコマンドを入力します。oc create -f load-sctp-module.yaml

$ oc create -f load-sctp-module.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow オプション: MachineConfig Operator が設定変更を適用している間にノードのステータスを確認するには、以下のコマンドを入力します。ノードのステータスが

Readyに移行すると、設定の更新が適用されます。oc get nodes

$ oc get nodesCopy to Clipboard Copied! Toggle word wrap Toggle overflow

8.3. SCTP (Stream Control Transmission Protocol) が有効になっていることの確認

SCTP がクラスターで機能することを確認するには、SCTP トラフィックをリッスンするアプリケーションで Pod を作成し、これをサービスに関連付け、公開されたサービスに接続します。

前提条件

-

クラスターからインターネットにアクセスし、

ncパッケージをインストールすること。 -

OpenShift CLI (

oc) をインストールします。 -

cluster-adminロールを持つユーザーとしてクラスターにアクセスできる。

手順

SCTP リスナーを起動する Pod を作成します。

以下の YAML で Pod を定義する

sctp-server.yamlという名前のファイルを作成します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow 以下のコマンドを入力して Pod を作成します。

oc create -f sctp-server.yaml

$ oc create -f sctp-server.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

SCTP リスナー Pod のサービスを作成します。

以下の YAML でサービスを定義する

sctp-service.yamlという名前のファイルを作成します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow サービスを作成するには、以下のコマンドを入力します。

oc create -f sctp-service.yaml

$ oc create -f sctp-service.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

SCTP クライアントの Pod を作成します。

以下の YAML で

sctp-client.yamlという名前のファイルを作成します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow Podオブジェクトを作成するには、以下のコマンドを入力します。oc apply -f sctp-client.yaml

$ oc apply -f sctp-client.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

サーバーで SCTP リスナーを実行します。

サーバー Pod に接続するには、以下のコマンドを入力します。

oc rsh sctpserver

$ oc rsh sctpserverCopy to Clipboard Copied! Toggle word wrap Toggle overflow SCTP リスナーを起動するには、以下のコマンドを入力します。

nc -l 30102 --sctp

$ nc -l 30102 --sctpCopy to Clipboard Copied! Toggle word wrap Toggle overflow

サーバーの SCTP リスナーに接続します。

- ターミナルプログラムで新規のターミナルウィンドウまたはタブを開きます。

sctpserviceサービスの IP アドレスを取得します。以下のコマンドを入力します。oc get services sctpservice -o go-template='{{.spec.clusterIP}}{{"\n"}}'$ oc get services sctpservice -o go-template='{{.spec.clusterIP}}{{"\n"}}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow クライアント Pod に接続するには、以下のコマンドを入力します。

oc rsh sctpclient

$ oc rsh sctpclientCopy to Clipboard Copied! Toggle word wrap Toggle overflow SCTP クライアントを起動するには、以下のコマンドを入力します。

<cluster_IP>をsctpserviceサービスのクラスター IP アドレスに置き換えます。nc <cluster_IP> 30102 --sctp

# nc <cluster_IP> 30102 --sctpCopy to Clipboard Copied! Toggle word wrap Toggle overflow

第9章 PTP ハードウェアの設定

Precision Time Protocol (PTP) ハードウェアはテクノロジープレビュー機能です。テクノロジープレビュー機能は Red Hat の実稼働環境でのサービスレベルアグリーメント (SLA) ではサポートされていないため、Red Hat では実稼働環境での使用を推奨していません。Red Hat は実稼働環境でこれらを使用することを推奨していません。テクノロジープレビューの機能は、最新の製品機能をいち早く提供して、開発段階で機能のテストを行いフィードバックを提供していただくことを目的としています。

Red Hat のテクノロジープレビュー機能のサポート範囲に関する詳細は、テクノロジープレビュー機能のサポート範囲 を参照してください。

9.1. PTP ハードウェアについて

OpenShift Container Platform には、ノード上で Precision Time Protocol (PTP) ハードウェアを使用する機能が含まれます。linuxptp サービスは、PTP 対応ハードウェアを搭載したクラスターで設定できます。

PTP Operator は、ベアメタルインフラストラクチャーでのみプロビジョニングされるクラスターの PTP 対応デバイスと連携します。

PTP Operator をデプロイし、OpenShift Container Platform コンソールを使用して PTP をインストールできます。PTP Operator は、linuxptp サービスを作成し、管理します。Operator は以下の機能を提供します。

- クラスター内の PTP 対応デバイスの検出。

- linuxptp サービスの設定の管理。

9.2. PTP ネットワークデバイスの自動検出

PTP Operator は NodePtpDevice.ptp.openshift.io カスタムリソース定義 (CRD) を OpenShift Container Platform に追加します。PTP Operator はクラスターで、各ノードの PTP 対応ネットワークデバイスを検索します。Operator は、互換性のある PTP デバイスを提供する各ノードの NodePtpDevice カスタムリソース (CR) オブジェクトを作成し、更新します。

1 つの CR がノードごとに作成され、ノードと同じ名前を共有します。.status.devices 一覧は、ノード上の PTP デバイスについての情報を提供します。

以下は、PTP Operator によって作成される NodePtpDevice CR の例です。

9.3. PTP Operator のインストール

クラスター管理者は、OpenShift Container Platform CLI または Web コンソールを使用して PTP Operator をインストールできます。

9.3.1. CLI: PTP Operator のインストール

クラスター管理者は、CLI を使用して Operator をインストールできます。

前提条件

- PTP に対応するハードウェアを持つノードでベアメタルハードウェアにインストールされたクラスター。

-

OpenShift CLI (

oc) をインストールしている。 -

cluster-admin権限を持つユーザーとしてログインしている。

手順

PTP Operator の namespace を作成するには、以下のコマンドを入力します。

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Operator の Operator グループを作成するには、以下のコマンドを入力します。

Copy to Clipboard Copied! Toggle word wrap Toggle overflow PTP Operator にサブスクライブします。

以下のコマンドを実行して、OpenShift Container Platform のメジャーおよびマイナーバージョンを環境変数として設定します。これは次の手順で

channelの値として使用されます。OC_VERSION=$(oc version -o yaml | grep openshiftVersion | \ grep -o '[0-9]*[.][0-9]*' | head -1)$ OC_VERSION=$(oc version -o yaml | grep openshiftVersion | \ grep -o '[0-9]*[.][0-9]*' | head -1)Copy to Clipboard Copied! Toggle word wrap Toggle overflow PTP Operator のサブスクリプションを作成するには、以下のコマンドを入力します。

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Operator がインストールされていることを確認するには、以下のコマンドを入力します。

oc get csv -n openshift-ptp \ -o custom-columns=Name:.metadata.name,Phase:.status.phase

$ oc get csv -n openshift-ptp \ -o custom-columns=Name:.metadata.name,Phase:.status.phaseCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

Name Phase ptp-operator.4.4.0-202006160135 Succeeded

Name Phase ptp-operator.4.4.0-202006160135 SucceededCopy to Clipboard Copied! Toggle word wrap Toggle overflow

9.3.2. Web コンソール: PTP Operator のインストール

クラスター管理者は、Web コンソールを使用して Operator をインストールできます。

先のセクションで説明されているように namespace および Operator グループを作成する必要があります。

手順

OpenShift Container Platform Web コンソールを使用して PTP Operator をインストールします。

- OpenShift Container Platform Web コンソールで、Operators → OperatorHub をクリックします。

- 利用可能な Operator の一覧から PTP Operator を選択してから Install をクリックします。

- Install Operator ページの A specific namespace on the cluster の下で openshift-ptp を選択します。次に、Install をクリックします。

オプション: PTP Operator が正常にインストールされていることを確認します。

- Operators → Installed Operators ページに切り替えます。

PTP Operator が Status が InstallSucceeded の状態で openshift-ptp プロジェクトに一覧表示されていることを確認します。

注記インストール時に、 Operator は Failed ステータスを表示する可能性があります。インストールが後に InstallSucceeded メッセージを出して正常に実行される場合は、Failed メッセージを無視できます。

Operator がインストール済みとして表示されない場合に、さらにトラブルシューティングを実行します。

- Operators → Installed Operators ページに移動し、Operator Subscriptions および Install Plans タブで Status にエラーがあるかどうかを検査します。

-

Workloads → Pods ページに移動し、

openshift-ptpプロジェクトで Pod のログを確認します。

9.4. Linuxptp サービスの設定

PTP Operator は PtpConfig.ptp.openshift.io カスタムリソース定義 (CRD) を OpenShift Container Platform に追加します。PtpConfig カスタムリソース (CR) オブジェクトを作成して、Linuxptp サービス (ptp4l、phc2sys) を設定できます。

前提条件

-

OpenShift CLI (

oc) をインストールしている。 -

cluster-admin権限を持つユーザーとしてログインしている。 - PTP Operator がインストールされていること。

手順

以下の

PtpConfigCR を作成してから、YAML を<name>-ptp-config.yamlファイルに保存します。<name>をこの設定の名前に置き換えます。Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

PtpConfigCR の名前を指定します。- 2

- PTP Operator がインストールされている namespace を指定します。

- 3

- 1 つ以上の

profileオブジェクトの配列を指定します。 - 4

- プロファイルオブジェクトを一意に識別するために使用されるプロファイルオブジェクトの名前を指定します。

- 5

ptp4lサービスで使用するネットワークインターフェイス名を指定します (例:ens787f1)。- 6

ptp4lサービスのシステム設定オプション (例:-s -2) を指定します。これには、インターフェイス名-i <interface>およびサービス設定ファイル-f /etc/ptp4l.confを含めないでください。これらは自動的に追加されます。- 7

phc2sysサービスのシステム設定オプション (例:-a -r) を指定します。- 8

profileがノードに適用される方法を定義する 1 つ以上のrecommendオブジェクトの配列を指定します。- 9

profileセクションに定義されるprofileオブジェクト名を指定します。- 10

0から99までの整数値でpriorityを指定します。数値が大きいほど優先度が低くなるため、99の優先度は10よりも低くなります。ノードがmatchフィールドで定義されるルールに基づいて複数のプロファイルに一致する場合、優先順位の高い プロファイルがそのノードに適用されます。- 11

matchルールを、nodeLabelまたはnodeNameで指定します。- 12

nodeLabelを、ノードオブジェクトのnode.Labelsのkeyで指定します。- 13

nodeNameをノードオブジェクトのnode.Nameで指定します。

以下のコマンドを実行して CR を作成します。

oc create -f <filename>

$ oc create -f <filename>1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

<filename>を、先の手順で作成したファイルの名前に置き換えます。

オプション:

PtpConfigプロファイルが、nodeLabelまたはnodeNameに一致するノードに適用されることを確認します。oc get pods -n openshift-ptp -o wide

$ oc get pods -n openshift-ptp -o wideCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

第10章 ネットワークポリシー

10.1. ネットワークポリシーについて

クラスター管理者は、トラフィックをクラスター内の Pod に制限するネットワークポリシーを定義できます。

10.1.1. ネットワークポリシーについて

Kubernetes ネットワークポリシーをサポートする Kubernetes Container Network Interface (CNI) プラグインを使用するクラスターでは、ネットワークの分離は NetworkPolicy オブジェクトによって完全に制御されます。OpenShift Container Platform 4.6 では、OpenShift SDN はデフォルトのネットワーク分離モードでのネットワークポリシーの使用をサポートしています。

OpenShift SDN クラスターネットワークプロバイダーを使用する場合、ネットワークポリシーについて、以下の制限が適用されます。

-

egressフィールドで指定される egress ネットワークポリシーはサポートされていません。 -

IPBlock はネットワークポリシーでサポートされますが、

except句はサポートしません。except句を含む IPBlock セクションのあるポリシーを作成する場合、SDN Pod は警告をログに記録し、そのポリシーの IPBlock セクション全体は無視されます。

ネットワークポリシーは、ホストのネットワーク namespace には適用されません。ホストネットワークが有効にされている Pod はネットワークポリシールールによる影響を受けません。

デフォルトで、プロジェクトのすべての Pod は他の Pod およびネットワークのエンドポイントからアクセスできます。プロジェクトで 1 つ以上の Pod を分離するには、そのプロジェクトで NetworkPolicy オブジェクトを作成し、許可する着信接続を指定します。プロジェクト管理者は独自のプロジェクト内で NetworkPolicy オブジェクトの作成および削除を実行できます。

Pod が 1 つ以上の NetworkPolicy オブジェクトのセレクターで一致する場合、Pod はそれらの 1 つ以上の NetworkPolicy オブジェクトで許可される接続のみを受け入れます。NetworkPolicy オブジェクトによって選択されていない Pod は完全にアクセス可能です。

以下のサンプル NetworkPolicy オブジェクトは、複数の異なるシナリオをサポートすることを示しています。

すべてのトラフィックを拒否します。

プロジェクトに deny by default (デフォルトで拒否) を実行させるには、すべての Pod に一致するが、トラフィックを一切許可しない

NetworkPolicyオブジェクトを追加します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow OpenShift Container Platform Ingress コントローラーからの接続のみを許可します。

プロジェクトで OpenShift Container Platform Ingress コントローラーからの接続のみを許可するには、以下の

NetworkPolicyオブジェクトを追加します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow プロジェクト内の Pod からの接続のみを受け入れます。

Pod が同じプロジェクト内の他の Pod からの接続を受け入れるが、他のプロジェクトの Pod からの接続を拒否するように設定するには、以下の

NetworkPolicyオブジェクトを追加します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow Pod ラベルに基づいて HTTP および HTTPS トラフィックのみを許可します。

特定のラベル (以下の例の

role=frontend) の付いた Pod への HTTP および HTTPS アクセスのみを有効にするには、以下と同様のNetworkPolicyオブジェクトを追加します。Copy to Clipboard Copied! Toggle word wrap Toggle overflow namespace および Pod セレクターの両方を使用して接続を受け入れます。

namespace と Pod セレクターを組み合わせてネットワークトラフィックのマッチングをするには、以下と同様の

NetworkPolicyオブジェクトを使用できます。Copy to Clipboard Copied! Toggle word wrap Toggle overflow

NetworkPolicy オブジェクトは加算されるものです。 つまり、複数の NetworkPolicy オブジェクトを組み合わせて複雑なネットワーク要件を満すことができます。

たとえば、先の例で定義された NetworkPolicy オブジェクトの場合、同じプロジェト内に allow-same-namespace と allow-http-and-https ポリシーの両方を定義することができます。これにより、ラベル role=frontend の付いた Pod は各ポリシーで許可されるすべての接続を受け入れます。つまり、同じ namespace の Pod からのすべてのポート、およびすべての namespace の Pod からのポート 80 および 443 での接続を受け入れます。

10.1.2. ネットワークポリシーの最適化

ネットワークポリシーを使用して、namespace 内でラベルで相互に区別される Pod を分離します。

ネットワークポリシールールを効果的に使用するためのガイドラインは、OpenShift SDN クラスターネットワークプロバイダーのみに適用されます。

NetworkPolicy オブジェクトを単一 namespace 内の多数の個別 Pod に適用することは効率的ではありません。Pod ラベルは IP レベルには存在しないため、ネットワークポリシーは、podSelector で選択されるすべての Pod 間のすべてのリンクについての別個の Open vSwitch (OVS) フロールールを生成します。

たとえば、仕様の podSelector および NetworkPolicy オブジェクト内の ingress podSelector のそれぞれが 200 Pod に一致する場合、40,000 (200*200) OVS フロールールが生成されます。これにより、ノードの速度が低下する可能性があります。

ネットワークポリシーを設計する場合は、以下のガイドラインを参照してください。

namespace を使用して分離する必要のある Pod のグループを組み込み、OVS フロールールの数を減らします。

namespace 全体を選択する

NetworkPolicyオブジェクトは、namespaceSelectorsまたは空のpodSelectorsを使用して、namespace の VXLAN 仮想ネットワーク ID に一致する単一の OVS フロールールのみを生成します。- 分離する必要のない Pod は元の namespace に維持し、分離する必要のある Pod は 1 つ以上の異なる namespace に移します。

- 追加のターゲット設定された namespace 間のネットワークポリシーを作成し、分離された Pod から許可する必要のある特定のトラフィックを可能にします。

10.1.3. 次のステップ

- ネットワークポリシーの作成

- オプション: デフォルトネットワークポリシーの定義

10.2. ネットワークポリシーの作成

admin ロールを持つユーザーは、namespace のネットワークポリシーを作成できます。

10.2.1. ネットワークポリシーの作成

クラスターの namespace に許可される Ingress または egress ネットワークトラフィックを記述する詳細なルールを定義するには、ネットワークポリシーを作成できます。

cluster-admin ロールを持つユーザーでログインしている場合、クラスター内の namespace でネットワークポリシーを作成できます。

前提条件

-

クラスターは、

NetworkPolicyオブジェクトをサポートするクラスターネットワークプロバイダーを使用する (例: OVN-Kubernetes ネットワークプロバイダー、またはmode: NetworkPolicyが設定された OpenShift SDN ネットワークプロバイダー)。このモードは OpenShiftSDN のデフォルトです。 -

OpenShift CLI (

oc) がインストールされている。 -

admin権限を持つユーザーとしてクラスターにログインしている。 - ネットワークポリシーが適用される namespace で作業している。

手順

ポリシールールを作成します。

<policy_name>.yamlファイルを作成します。touch <policy_name>.yaml

$ touch <policy_name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<policy_name>- ネットワークポリシーファイル名を指定します。

作成したばかりのファイルで、以下の例のようなネットワークポリシーを定義します。

すべての namespace のすべての Pod から ingress を拒否します。

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 同じ namespace のすべての Pod から ingress を許可します。

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

ネットワークポリシーオブジェクトを作成するには、以下のコマンドを入力します。

oc apply -f <policy_name>.yaml -n <namespace>

$ oc apply -f <policy_name>.yaml -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<policy_name>- ネットワークポリシーファイル名を指定します。

<namespace>- オプション: オブジェクトが現在の namespace 以外の namespace に定義されている場合は namespace を指定します。

出力例

networkpolicy "default-deny" created

networkpolicy "default-deny" createdCopy to Clipboard Copied! Toggle word wrap Toggle overflow

10.2.2. サンプル NetworkPolicy オブジェクト

以下は、サンプル NetworkPolicy オブジェクトにアノテーションを付けます。

10.3. ネットワークポリシーの表示

admin ロールを持つユーザーは、namespace のネットワークポリシーを表示できます。

10.3.1. ネットワークポリシーの表示

namespace のネットワークポリシーを検査できます。

cluster-admin ロールを持つユーザーでログインしている場合、クラスター内のネットワークポリシーを表示できます。

前提条件

-

OpenShift CLI (

oc) がインストールされている。 -

admin権限を持つユーザーとしてクラスターにログインしている。 - ネットワークポリシーが存在する namespace で作業している。

手順

namespace のネットワークポリシーを一覧表示します。

namespace で定義された

NetworkPolicyオブジェクトを表示するには、以下のコマンドを実行します。oc get networkpolicy

$ oc get networkpolicyCopy to Clipboard Copied! Toggle word wrap Toggle overflow オプション: 特定のネットワークポリシーを検査するには、以下のコマンドを入力します。

oc describe networkpolicy <policy_name> -n <namespace>

$ oc describe networkpolicy <policy_name> -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<policy_name>- 検査するネットワークポリシーの名前を指定します。

<namespace>- オプション: オブジェクトが現在の namespace 以外の namespace に定義されている場合は namespace を指定します。

以下に例を示します。

oc describe networkpolicy allow-same-namespace

$ oc describe networkpolicy allow-same-namespaceCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc describeコマンドの出力Copy to Clipboard Copied! Toggle word wrap Toggle overflow

10.3.2. サンプル NetworkPolicy オブジェクト

以下は、サンプル NetworkPolicy オブジェクトにアノテーションを付けます。

10.4. ネットワークポリシーの編集

admin ロールを持つユーザーは、namespace の既存のネットワークポリシーを編集できます。

10.4.1. ネットワークポリシーの編集

namespace のネットワークポリシーを編集できます。

cluster-admin ロールを持つユーザーでログインしている場合、クラスター内の namespace でネットワークポリシーを編集できます。

前提条件

-

クラスターは、

NetworkPolicyオブジェクトをサポートするクラスターネットワークプロバイダーを使用する (例: OVN-Kubernetes ネットワークプロバイダー、またはmode: NetworkPolicyが設定された OpenShift SDN ネットワークプロバイダー)。このモードは OpenShiftSDN のデフォルトです。 -

OpenShift CLI (

oc) がインストールされている。 -

admin権限を持つユーザーとしてクラスターにログインしている。 - ネットワークポリシーが存在する namespace で作業している。

手順

オプション: namespace のネットワークポリシーオブジェクトを一覧表示するには、以下のコマンドを入力します。

oc get networkpolicy -n <namespace>

$ oc get networkpolicy -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<namespace>- オプション: オブジェクトが現在の namespace 以外の namespace に定義されている場合は namespace を指定します。

NetworkPolicyオブジェクトを編集します。ネットワークポリシーの定義をファイルに保存した場合は、ファイルを編集して必要な変更を加えてから、以下のコマンドを入力します。

oc apply -n <namespace> -f <policy_file>.yaml

$ oc apply -n <namespace> -f <policy_file>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<namespace>- オプション: オブジェクトが現在の namespace 以外の namespace に定義されている場合は namespace を指定します。

<policy_file>- ネットワークポリシーを含むファイルの名前を指定します。

NetworkPolicyオブジェクトを直接更新する必要がある場合、以下のコマンドを入力できます。oc edit networkpolicy <policy_name> -n <namespace>

$ oc edit networkpolicy <policy_name> -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<policy_name>- ネットワークポリシーの名前を指定します。

<namespace>- オプション: オブジェクトが現在の namespace 以外の namespace に定義されている場合は namespace を指定します。

NetworkPolicyオブジェクトが更新されていることを確認します。oc describe networkpolicy <policy_name> -n <namespace>

$ oc describe networkpolicy <policy_name> -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<policy_name>- ネットワークポリシーの名前を指定します。

<namespace>- オプション: オブジェクトが現在の namespace 以外の namespace に定義されている場合は namespace を指定します。

10.4.2. サンプル NetworkPolicy オブジェクト

以下は、サンプル NetworkPolicy オブジェクトにアノテーションを付けます。

10.5. ネットワークポリシーの削除

admin ロールを持つユーザーは、namespace からネットワークポリシーを削除できます。

10.5.1. ネットワークポリシーの削除

namespace のネットワークポリシーを削除できます。

cluster-admin ロールを持つユーザーでログインしている場合、クラスター内のネットワークポリシーを削除できます。

前提条件

-

クラスターは、

NetworkPolicyオブジェクトをサポートするクラスターネットワークプロバイダーを使用する (例: OVN-Kubernetes ネットワークプロバイダー、またはmode: NetworkPolicyが設定された OpenShift SDN ネットワークプロバイダー)。このモードは OpenShiftSDN のデフォルトです。 -

OpenShift CLI (

oc) がインストールされている。 -

admin権限を持つユーザーとしてクラスターにログインしている。 - ネットワークポリシーが存在する namespace で作業している。

手順

NetworkPolicyオブジェクトを削除するには、以下のコマンドを入力します。oc delete networkpolicy <policy_name> -n <namespace>

$ oc delete networkpolicy <policy_name> -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。

<policy_name>- ネットワークポリシーの名前を指定します。

<namespace>- オプション: オブジェクトが現在の namespace 以外の namespace に定義されている場合は namespace を指定します。

出力例

networkpolicy.networking.k8s.io/allow-same-namespace deleted

networkpolicy.networking.k8s.io/allow-same-namespace deletedCopy to Clipboard Copied! Toggle word wrap Toggle overflow

10.6. プロジェクトのデフォルトネットワークポリシーの定義

クラスター管理者は、新規プロジェクトの作成時にネットワークポリシーを自動的に含めるように新規プロジェクトテンプレートを変更できます。新規プロジェクトのカスタマイズされたテンプレートがまだない場合には、まずテンプレートを作成する必要があります。

10.6.1. 新規プロジェクトのテンプレートの変更

クラスター管理者は、デフォルトのプロジェクトテンプレートを変更し、新規プロジェクトをカスタム要件に基づいて作成することができます。

独自のカスタムプロジェクトテンプレートを作成するには、以下を実行します。

手順

-

cluster-admin権限を持つユーザーとしてログインしている。 デフォルトのプロジェクトテンプレートを生成します。

oc adm create-bootstrap-project-template -o yaml > template.yaml

$ oc adm create-bootstrap-project-template -o yaml > template.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

オブジェクトを追加するか、または既存オブジェクトを変更することにより、テキストエディターで生成される

template.yamlファイルを変更します。 プロジェクトテンプレートは、

openshift-confignamespace に作成される必要があります。変更したテンプレートを読み込みます。oc create -f template.yaml -n openshift-config

$ oc create -f template.yaml -n openshift-configCopy to Clipboard Copied! Toggle word wrap Toggle overflow Web コンソールまたは CLI を使用し、プロジェクト設定リソースを編集します。

Web コンソールの使用

- Administration → Cluster Settings ページに移動します。

- Global Configuration をクリックし、すべての設定リソースを表示します。

- Project のエントリーを見つけ、Edit YAML をクリックします。

CLI の使用

project.config.openshift.io/clusterリソースを編集します。oc edit project.config.openshift.io/cluster

$ oc edit project.config.openshift.io/clusterCopy to Clipboard Copied! Toggle word wrap Toggle overflow

specセクションを、projectRequestTemplateおよびnameパラメーターを組み込むように更新し、アップロードされたプロジェクトテンプレートの名前を設定します。デフォルト名はproject-requestです。カスタムプロジェクトテンプレートを含むプロジェクト設定リソース

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 変更を保存した後、変更が正常に適用されたことを確認するために、新しいプロジェクトを作成します。

10.6.2. 新規プロジェクトへのネットワークポリシーの追加

クラスター管理者は、ネットワークポリシーを新規プロジェクトのデフォルトテンプレートに追加できます。OpenShift Container Platform は、プロジェクトのテンプレートに指定されたすべての NetworkPolicy オブジェクトを自動的に作成します。

前提条件

-

クラスターは、

NetworkPolicyオブジェクトをサポートするデフォルトの CNI ネットワークプロバイダーを使用する (例:mode: NetworkPolicyが設定された OpenShift SDN ネットワークプロバイダー)。このモードは OpenShiftSDN のデフォルトです。 -

OpenShift CLI (

oc) がインストールされている。 -

cluster-admin権限を持つユーザーとしてクラスターにログインすること。 - 新規プロジェクトのカスタムデフォルトプロジェクトテンプレートを作成していること。

手順

以下のコマンドを実行して、新規プロジェクトのデフォルトテンプレートを編集します。

oc edit template <project_template> -n openshift-config

$ oc edit template <project_template> -n openshift-configCopy to Clipboard Copied! Toggle word wrap Toggle overflow <project_template>を、クラスターに設定したデフォルトテンプレートの名前に置き換えます。デフォルトのテンプレート名はproject-requestです。テンプレートでは、各

NetworkPolicyオブジェクトを要素としてobjectsパラメーターに追加します。objectsパラメーターは、1 つ以上のオブジェクトのコレクションを受け入れます。以下の例では、

objectsパラメーターのコレクションにいくつかのNetworkPolicyオブジェクトが含まれます。重要OVN-Kubernetes ネットワークプロバイダープラグインの場合、Ingress コントローラーが

HostNetworkエンドポイント公開ストラテジーを使用するように設定されている場合、Ingress トラフィックが許可され、他のすべてのトラフィックが拒否されるようにネットワークポリシーを適用するための方法はサポートされていません。Copy to Clipboard Copied! Toggle word wrap Toggle overflow オプション: 以下のコマンドを実行して、新規プロジェクトを作成し、ネットワークポリシーオブジェクトが正常に作成されることを確認します。

新規プロジェクトを作成します。

oc new-project <project>

$ oc new-project <project>1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

<project>を、作成しているプロジェクトの名前に置き換えます。

新規プロジェクトテンプレートのネットワークポリシーオブジェクトが新規プロジェクトに存在することを確認します。

oc get networkpolicy NAME POD-SELECTOR AGE allow-from-openshift-ingress <none> 7s allow-from-same-namespace <none> 7s

$ oc get networkpolicy NAME POD-SELECTOR AGE allow-from-openshift-ingress <none> 7s allow-from-same-namespace <none> 7sCopy to Clipboard Copied! Toggle word wrap Toggle overflow

10.7. ネットワークポリシーを使用したマルチテナント分離の設定

クラスター管理者は、マルチテナントネットワークの分離を実行するようにネットワークポリシーを設定できます。

OpenShift SDN クラスターネットワークプロバイダーを使用している場合、本セクションで説明されているようにネットワークポリシーを設定すると、マルチテナントモードと同様のネットワーク分離が行われますが、ネットワークポリシーモードが設定されます。

10.7.1. ネットワークポリシーを使用したマルチテナント分離の設定

他のプロジェクト namespace の Pod およびサービスから分離できるようにプロジェクトを設定できます。

前提条件

-

クラスターは、

NetworkPolicyオブジェクトをサポートするクラスターネットワークプロバイダーを使用する (例: OVN-Kubernetes ネットワークプロバイダー、またはmode: NetworkPolicyが設定された OpenShift SDN ネットワークプロバイダー)。このモードは OpenShiftSDN のデフォルトです。 -

OpenShift CLI (

oc) がインストールされている。 -

admin権限を持つユーザーとしてクラスターにログインしている。

手順

以下の

NetworkPolicyオブジェクトを作成します。allow-from-openshift-ingressという名前のポリシー:Copy to Clipboard Copied! Toggle word wrap Toggle overflow allow-from-openshift-monitoringという名前のポリシー。Copy to Clipboard Copied! Toggle word wrap Toggle overflow allow-same-namespaceという名前のポリシー:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

オプション: 以下のコマンドを実行し、ネットワークポリシーオブジェクトが現在のプロジェクトに存在することを確認します。

oc describe networkpolicy

$ oc describe networkpolicyCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

10.7.2. 次のステップ

第11章 複数ネットワーク

11.1. 複数ネットワークについて

Kubernetes では、コンテナーネットワークは Container Network Interface (CNI) を実装するネットワークプラグインに委任されます。

OpenShift Container Platform は、Multus CNI プラグインを使用して CNI プラグインのチェーンを許可します。クラスターのインストール時に、デフォルト の Pod ネットワークを設定します。デフォルトのネットワークは、クラスターのすべての通常のネットワークトラフィックを処理します。利用可能な CNI プラグインに基づいて 追加のネットワーク を定義し、1 つまたは複数のネットワークを Pod に割り当てることができます。必要に応じて、クラスターの複数のネットワークを追加で定義することができます。これは、スイッチまたはルーティングなどのネットワーク機能を提供する Pod を設定する場合に柔軟性を実現します。

11.1.1. 追加ネットワークの使用シナリオ

データプレーンとコントロールプレーンの分離など、ネットワークの分離が必要な状況で追加のネットワークを使用することができます。トラフィックの分離は、以下のようなパフォーマンスおよびセキュリティー関連の理由で必要になります。

- パフォーマンス

- 各プレーンのトラフィック量を管理するために、2 つの異なるプレーンにトラフィックを送信できます。

- セキュリティー

- 機密トラフィックは、セキュリティー上の考慮に基づいて管理されているネットワークに送信でき、テナントまたはカスタマー間で共有できないプライベートを分離することができます。

クラスターのすべての Pod はクラスター全体のデフォルトネットワークを依然として使用し、クラスター全体での接続性を維持します。すべての Pod には、クラスター全体の Pod ネットワークに割り当てられる eth0 インターフェイスがあります。Pod のインターフェイスは、oc exec -it <pod_name> -- ip a コマンドを使用して表示できます。Multus CNI を使用するネットワークを追加する場合、それらの名前は net1、net2、…、 netN になります。

追加のネットワークを Pod に割り当てるには、インターフェイスの割り当て方法を定義する設定を作成する必要があります。それぞれのインターフェイスは、NetworkAttachmentDefinition カスタムリソース (CR) を使用して指定します。これらの CR のそれぞれにある CNI 設定は、インターフェイスの作成方法を定義します。

11.1.2. OpenShift Container Platform の追加ネットワーク

OpenShift Container Platform は、クラスターに追加のネットワークを作成するために使用する以下の CNI プラグインを提供します。

- bridge: ブリッジベースの追加ネットワークを設定する ことで、同じホストにある Pod が相互に、かつホストと通信できます。

- host-device: ホストデバイスの追加ネットワークを設定する ことで、Pod がホストシステム上の物理イーサネットネットワークデバイスにアクセスすることができます。

- ipvlan: ipvlan ベースの追加ネットワークを設定する ことで、macvlan ベースの追加ネットワークと同様に、ホスト上の Pod が他のホストやそれらのホストの Pod と通信できます。macvlan ベースの追加のネットワークとは異なり、各 Pod は親の物理ネットワークインターフェイスと同じ MAC アドレスを共有します。

- macvlan: macvlan ベースの追加ネットワークを作成 することで、ホスト上の Pod が物理ネットワークインターフェイスを使用して他のホストやそれらのホストの Pod と通信できます。macvlan ベースの追加ネットワークに割り当てられる各 Pod には固有の MAC アドレスが割り当てられます。

- SR-IOV: SR-IOV ベースの追加ネットワークを設定する ことで、Pod を ホストシステム上の SR-IOV 対応ハードウェアの Virtual Function (VF) インターフェイスに割り当てることができます。

11.2. 追加のネットワークの設定

クラスター管理者は、クラスターの追加のネットワークを設定できます。以下のネットワークタイプに対応しています。

11.2.1. 追加のネットワークを管理するためのアプローチ

追加したネットワークのライフサイクルを管理するには、2 つのアプローチがあります。各アプローチは同時に使用できず、追加のネットワークを管理する場合に 1 つのアプローチしか使用できません。いずれの方法でも、追加のネットワークは、お客様が設定した Container Network Interface (CNI) プラグインで管理します。

追加ネットワークの場合には、IP アドレスは、追加ネットワークの一部として設定する IPAM(IP Address Management)CNI プラグインでプロビジョニングされます。IPAM プラグインは、DHCP や静的割り当てなど、さまざまな IP アドレス割り当ての方法をサポートしています。

-

Cluster Network Operator (CNO) の設定を変更する: CNO は自動的に

Network Attachment Definitionオブジェクトを作成し、管理します。CNO は、オブジェクトのライフサイクル管理に加えて、DHCP で割り当てられた IP アドレスを使用する追加のネットワークで確実に DHCP が利用できるようにします。 -

YAML マニフェストを適用する:

Network Attachment Definitionオブジェクトを作成することで、追加のネットワークを直接管理できます。この方法では、CNI プラグインを連鎖させることができます。

11.2.2. ネットワーク追加割り当ての設定

追加のネットワークは、k8s.cni.cncf.ioAPI グループの Network Attachment DefinitionAPI で設定されます。API の設定については、以下の表で説明されています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

| 追加のネットワークの名前です。 |

|

|

| オブジェクトが関連付けられる namespace。 |

|

|

| JSON 形式の CNI プラグイン設定です。 |

11.2.2.1. Cluster Network Operator による追加ネットワークの設定

追加のネットワーク割り当ての設定は、Cluster Network Operator (CNO) の設定の一部として指定します。

以下の YAML は、CNO で追加のネットワークを管理するための設定パラメーターを記述しています。

Cluster Network Operator (CNO) の設定

11.2.2.2. YAML マニフェストからの追加ネットワークの設定

追加ネットワークの設定は、以下の例のように YAML 設定ファイルから指定します。

11.2.3. 追加のネットワークタイプの設定

次のセクションでは、追加のネットワークの具体的な設定フィールドについて説明します。

11.2.3.1. ブリッジネットワークの追加設定

以下のオブジェクトは、ブリッジ CNI プラグインの設定パラメーターについて説明しています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

CNI 仕様のバージョン。値 |

|

|

|

CNO 設定に以前に指定した |

|

|

| |

|

|

|

使用する仮想ブリッジの名前を指定します。ブリッジインターフェイスがホストに存在しない場合は、これが作成されます。デフォルト値は |

|

|

| IPAM CNI プラグインの設定オブジェクト。プラグインは、割り当て定義についての IP アドレスの割り当てを管理します。 |

|

|

|

仮想ネットワークから外すトラフィックについて IP マスカレードを有効にするには、 |

|

|

|

IP アドレスをブリッジに割り当てるには |

|

|

|

ブリッジを仮想ネットワークのデフォルトゲートウェイとして設定するには、 |

|

|

|

仮想ブリッジの事前に割り当てられた IP アドレスの割り当てを許可するには、 |

|

|

|

仮想ブリッジが受信時に使用した仮想ポートでイーサネットフレームを送信できるようにするには、 |

|

|

|

ブリッジで無作為検出モード (Promiscuous Mode) を有効にするには、 |

|

|

| 仮想 LAN (VLAN) タグを整数値として指定します。デフォルトで、VLAN タグは割り当てません。 |

|

|

| 最大転送単位 (MTU) を指定された値に設定します。デフォルト値はカーネルによって自動的に設定されます。 |

11.2.3.1.1. ブリッジ設定の例

以下の例では、bridge-net という名前の追加のネットワークを設定します。

11.2.3.2. ホストデバイスの追加ネットワークの設定

device、hwaddr、 kernelpath、または pciBusID のいずれかのパラメーターを設定してネットワークデバイスを指定します。

以下のオブジェクトは、ホストデバイス CNI プラグインの設定パラメーターについて説明しています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

CNI 仕様のバージョン。値 |

|

|

|

CNO 設定に以前に指定した |

|

|

|

設定する CNI プラグインの名前: |

|

|

|

オプション: |

|

|

| オプション: デバイスハードウェアの MAC アドレス。 |

|

|

|

オプション: |

|

|

|

オプション: |

|

|

| IPAM CNI プラグインの設定オブジェクト。プラグインは、割り当て定義についての IP アドレスの割り当てを管理します。 |

11.2.3.2.1. ホストデバイス設定例

以下の例では、hostdev-net という名前の追加のネットワークを設定します。

11.2.3.3. IPVLAN 追加ネットワークの設定

以下のオブジェクトは、IPVLAN CNI プラグインの設定パラメーターについて説明しています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

CNI 仕様のバージョン。値 |

|

|

|

CNO 設定に以前に指定した |

|

|

|

設定する CNI プラグインの名前: |

|

|

|

仮想ネットワークの操作モード。この値は、 |

|

|

|

ネットワーク割り当てに関連付けるイーサネットインターフェイス。 |

|

|

| 最大転送単位 (MTU) を指定された値に設定します。デフォルト値はカーネルによって自動的に設定されます。 |

|

|

| IPAM CNI プラグインの設定オブジェクト。プラグインは、割り当て定義についての IP アドレスの割り当てを管理します。

|

11.2.3.3.1. IPVLAN 設定例

以下の例では、ipvlan-net という名前の追加のネットワークを設定します。

11.2.3.4. MACVLAN 追加ネットワークの設定

以下のオブジェクトは、macvlan CNI プラグインの設定パラメーターについて説明しています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

CNI 仕様のバージョン。値 |

|

|

|

CNO 設定に以前に指定した |

|

|

|

設定する CNI プラグインの名前: |

|

|

|

仮想ネットワークのトラフィックの可視性を設定します。 |

|

|

| 仮想インターフェイスに関連付けるイーサネット、ボンディング、または VLAN インターフェイス。値が指定されない場合、ホストシステムのプライマリーイーサネットインターフェイスが使用されます。 |

|

|

| 最大転送単位 (MTU) を指定された値。デフォルト値はカーネルによって自動的に設定されます。 |

|

|

| IPAM CNI プラグインの設定オブジェクト。プラグインは、割り当て定義についての IP アドレスの割り当てを管理します。 |

11.2.3.4.1. macvlan 設定の例

以下の例では、macvlan-net という名前の追加のネットワークを設定します。

11.2.4. 追加ネットワークの IP アドレス割り当ての設定

IPAM (IP アドレス管理) Container Network Interface (CNI) プラグインは、他の CNI プラグインの IP アドレスを提供します。

以下の IP アドレスの割り当てタイプを使用できます。

- 静的割り当て。

- DHCP サーバーを使用した動的割り当て。指定する DHCP サーバーは、追加のネットワークから到達可能である必要があります。

- Whereabouts IPAM CNI プラグインを使用した動的割り当て。

11.2.4.1. 静的 IP アドレス割り当ての設定

以下の表は、静的 IP アドレスの割り当ての設定について説明しています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

IPAM のアドレスタイプ。値 |

|

|

| 仮想インターフェイスに割り当てる IP アドレスを指定するオブジェクトの配列。IPv4 と IPv6 の IP アドレスの両方がサポートされます。 |

|

|

| Pod 内で設定するルートを指定するオブジェクトの配列です。 |

|

|

| オプション: DNS の設定を指定するオブジェクトの配列です。 |

addressesの配列には、以下のフィールドのあるオブジェクトが必要です。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

指定する IP アドレスおよびネットワーク接頭辞。たとえば、 |

|

|

| egress ネットワークトラフィックをルーティングするデフォルトのゲートウェイ。 |

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

CIDR 形式の IP アドレス範囲 ( |

|

|

| ネットワークトラフィックがルーティングされるゲートウェイ。 |

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

| DNS クエリーの送信先となる 1 つ以上の IP アドレスの配列。 |

|

|

|

ホスト名に追加するデフォルトのドメイン。たとえば、ドメインが |

|

|

|

DNS ルックアップのクエリー時に非修飾ホスト名に追加されるドメイン名の配列 (例: |

静的 IP アドレス割り当ての設定例

11.2.4.2. 動的 IP アドレス (DHCP) 割り当ての設定

以下の JSON は、DHCP を使用した動的 IP アドレスの割り当ての設定について説明しています。

Pod は、作成時に元の DHCP リースを取得します。リースは、クラスターで実行している最小限の DHCP サーバーデプロイメントで定期的に更新する必要があります。

DHCP サーバーのデプロイメントをトリガーするには、以下の例にあるように Cluster Network Operator 設定を編集して shim ネットワーク割り当てを作成する必要があります。

shim ネットワーク割り当ての定義例

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

IPAM のアドレスタイプ。値 |

動的 IP アドレス (DHCP) 割り当ての設定例

{

"ipam": {

"type": "dhcp"

}

}

{

"ipam": {

"type": "dhcp"

}

}11.2.4.3. Whereabouts を使用した動的 IP アドレス割り当ての設定

Whereabouts CNI プラグインにより、DHCP サーバーを使用せずに IP アドレスを追加のネットワークに動的に割り当てることができます。

以下の表は、Whereabouts を使用した動的 IP アドレス割り当ての設定について説明しています。

| フィールド | タイプ | 説明 |

|---|---|---|

|

|

|

IPAM のアドレスタイプ。値 |

|

|

| IP アドレスと範囲を CIDR 表記。IP アドレスは、この範囲内のアドレスから割り当てられます。 |

|

|

| オプション: CIDR 表記の IP アドレスと範囲 (0 個以上) の一覧。除外されたアドレス範囲内の IP アドレスは割り当てられません。 |

Whereabouts を使用する動的 IP アドレス割り当ての設定例

11.2.5. Cluster Network Operator による追加ネットワーク割り当ての作成

Cluster Network Operator (CNO) は追加ネットワークの定義を管理します。作成する追加ネットワークを指定する場合、CNO は NetworkAttachmentDefinition オブジェクトを自動的に作成します。

Cluster Network Operator が管理する NetworkAttachmentDefinition オブジェクトは編集しないでください。これを実行すると、追加ネットワークのネットワークトラフィックが中断する可能性があります。

前提条件

-

OpenShift CLI (

oc) をインストールしている。 -

cluster-admin権限を持つユーザーとしてログインしている。

手順

CNO 設定を編集するには、以下のコマンドを入力します。

oc edit networks.operator.openshift.io cluster

$ oc edit networks.operator.openshift.io clusterCopy to Clipboard Copied! Toggle word wrap Toggle overflow 以下のサンプル CR のように、作成される追加ネットワークの設定を追加して、作成している CR を変更します。

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 変更を保存し、テキストエディターを終了して、変更をコミットします。

検証

以下のコマンドを実行して、CNO が NetworkAttachmentDefinition オブジェクトを作成していることを確認します。CNO がオブジェクトを作成するまでに遅延が生じる可能性があります。

oc get network-attachment-definitions -n <namespace>

$ oc get network-attachment-definitions -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow ここでは、以下のようになります。