This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.노드

OpenShift Container Platform에서 노드 구성 및 관리

초록

1장. 노드 개요

1.1. 노드 정보

노드는 Kubernetes 클러스터의 가상 또는 베어 메탈 시스템입니다. 작업자 노드는 포드로 그룹화된 애플리케이션 컨테이너를 호스팅합니다. 컨트롤 플레인 노드는 Kubernetes 클러스터를 제어하는 데 필요한 서비스를 실행합니다. OpenShift Container Platform에서 컨트롤 플레인 노드에는 OpenShift Container Platform 클러스터를 관리하기 위한 Kubernetes 서비스 이상의 기능이 포함되어 있습니다.

클러스터에 안정적이고 정상 노드를 보유하는 것은 호스트된 애플리케이션의 원활한 작동에 필수적입니다. OpenShift Container Platform에서는 노드를 나타내는 Node 오브젝트를 통해 노드에 액세스, 관리 및 모니터링할 수 있습니다. OpenShift CLI(oc) 또는 웹 콘솔을 사용하여 노드에서 다음 작업을 수행할 수 있습니다.

작업 읽기

읽기 작업을 통해 관리자 또는 개발자가 OpenShift Container Platform 클러스터의 노드에 대한 정보를 가져올 수 있습니다.

- 클러스터의 모든 노드를 나열합니다.

- 메모리 및 CPU 사용량, 상태, 상태, 수명 등의 노드에 대한 정보를 가져옵니다.

- 노드에서 실행 중인 포드를 나열합니다.

관리 운영

관리자는 여러 작업을 통해 OpenShift Container Platform 클러스터에서 노드를 쉽게 관리할 수 있습니다.

-

노드 레이블 추가 또는 업데이트. 레이블은

Node오브젝트에 적용되는 키-값 쌍입니다. 레이블을 사용하여 Pod 예약을 제어할 수 있습니다. -

CRD(사용자 정의 리소스 정의) 또는

kubeletConfig오브젝트를 사용하여 노드 구성을 변경합니다. -

포드 예약을 허용하거나 허용하지 않도록 노드를 구성합니다. 정상 작업자 노드가

Ready상태인 경우 컨트롤 플레인 노드는 기본적으로 Pod 배치를 허용합니다. 작업자 노드를 예약할 수 없도록 구성하여 이 기본 동작을 변경할 수 있습니다. -

system-reserved설정을 사용하여 노드에 리소스를 할당합니다. OpenShift Container Platform에서 노드의 최적system-reservedCPU 및 메모리 리소스를 자동으로 확인하거나 노드에 가장 적합한 리소스를 수동으로 확인하고 설정할 수 있습니다. - 노드의 프로세서 코어 수, 하드 제한 또는 둘 다에 따라 노드에서 실행할 수 있는 Pod 수를 구성합니다.

- Pod 유사성 방지를 사용하여 노드를 정상적으로 재부팅합니다.

- 시스템 집합을 사용하여 클러스터를 축소하여 클러스터에서 노드를 삭제합니다. 베어 메탈 클러스터에서 노드를 삭제하려면 먼저 노드의 모든 Pod를 드레이닝한 다음 노드를 수동으로 삭제해야 합니다.

기능 개선 작업

OpenShift Container Platform을 사용하면 노드에 액세스하고 관리하는 것 이상의 작업을 수행할 수 있습니다. 관리자는 노드에서 다음 작업을 수행하여 클러스터가 보다 효율적이고 애플리케이션 친화적인 방식으로 개발자에게 더 나은 환경을 제공할 수 있습니다.

- Node Tuning Operator를 사용하여 특정 수준의 커널 튜닝이 필요한 고성능 애플리케이션을 위한 노드 수준 튜닝을 관리합니다.

- 데몬 세트를 사용하여 노드에서 백그라운드 작업을 자동으로 실행합니다. 데몬 세트를 생성하고 사용하여 공유 스토리지를 생성하거나, 모든 노드에서 로깅 Pod를 실행하거나, 모든 노드에 모니터링 에이전트를 배포할 수 있습니다.

- 가비지 컬렉션을 사용하는 노드 리소스 사용 가능. 종료된 컨테이너와 실행 중인 Pod에서 참조하지 않는 이미지를 제거하여 노드가 효율적으로 실행되도록 할 수 있습니다.

- 노드 집합에 커널 매개 변수 추가.

- 네트워크 에지(원격 작업자 노드)에 작업자 노드가 있도록 OpenShift Container Platform 클러스터를 구성합니다. OpenShift Container Platform 클러스터에 원격 작업자 노드가 있는 문제와 원격 작업자 노드에서 Pod를 관리하는 데 권장되는 접근 방법에 대한 자세한 내용은 네트워크 에지에서 원격 작업자 노드 사용을 참조하십시오.

1.2. Pod 정보

포드는 노드에 함께 배포되는 하나 이상의 컨테이너입니다. 클러스터 관리자는 Pod를 정의하고 스케줄링할 준비가 된 정상 노드에서 실행되도록 할당할 수 있습니다. 포드는 컨테이너가 실행되는 동안 실행됩니다. 포드가 정의되어 있고 실행되면 변경할 수 없습니다. Pod로 작업할 때 수행할 수 있는 작업은 다음과 같습니다.

작업 읽기

관리자는 다음 작업을 통해 프로젝트의 Pod에 대한 정보를 가져올 수 있습니다.

- 복제본 수 및 재시작, 현재 상태 및 수명과 같은 정보를 포함하여 프로젝트와 관련된 포드를 나열합니다.

- CPU, 메모리, 스토리지 사용량과 같은 포드 사용량 통계를 확인합니다.

관리 운영

다음 작업 목록은 관리자가 OpenShift Container Platform 클러스터에서 포드를 관리하는 방법에 대한 개요를 제공합니다.

OpenShift Container Platform에서 사용할 수 있는 고급 예약 기능을 사용하여 Pod 예약을 제어합니다.

- Pod 유사성, 노드 유사성 및 유사성 방지 와 같은 노드 간 바인딩 규칙입니다.

- 노드 레이블 및 선택기.

- 테인트 및 톨러레이션.

- Pod 토폴로지 분배 제약 조건.

- 사용자 지정 스케줄러.

- 스케줄러 에서 더 적절한 노드로 Pod를 다시 예약하도록 특정 전략에 따라 Pod를 제거하도록 Descheduler를 구성합니다.

- Pod 컨트롤러를 사용하여 재시작 후 Pod가 작동하는 방식을 구성하고 재시작 정책을 구성합니다.

- Pod에서 송신 및 수신 트래픽을 모두 제한합니다.

- 포드 템플릿이 있는 모든 오브젝트에 볼륨을 추가하고 제거합니다. 볼륨은 포드의 모든 컨테이너에서 사용할 수 있는 마운트된 파일 시스템입니다. 컨테이너 스토리지는 임시 스토리지입니다. 볼륨을 사용하여 컨테이너 데이터를 유지할 수 있습니다.

기능 개선 작업

OpenShift Container Platform에서 사용할 수 있는 다양한 툴과 기능을 통해 Pod를 보다 쉽고 효율적으로 작업할 수 있습니다. 다음 작업에는 이러한 툴과 기능을 사용하여 포드를 더 효율적으로 관리할 수 있습니다.

| 작업 | 사용자 | 자세한 정보 |

|---|---|---|

| 수평 포드 자동 스케일러 생성 및 사용. | 개발자 | 수평 Pod 자동 스케일러를 사용하여 실행할 최소 및 최대 Pod 수와 Pod에서 목표로 하는 CPU 사용률 또는 메모리 사용률을 지정할 수 있습니다. 수평 Pod 자동 스케일러를 사용하여 Pod를 자동으로 스케일링할 수 있습니다. |

| 관리자 및 개발자 | 관리자는 워크로드의 리소스 및 리소스 요구 사항을 모니터링하여 클러스터 리소스를 더 잘 사용하려면 수직 Pod 자동 스케일러를 사용합니다. 개발자는 수직 Pod 자동 스케일러를 사용하여 각 Pod에 충분한 리소스가 있는 노드에 Pod를 예약하여 수요가 많은 기간에 Pod를 유지하도록 합니다. | |

| 장치 플러그인을 사용하여 외부 리소스에 대한 액세스를 제공합니다. | 관리자 | 장치 플러그인은 노드(kubelet 외부)에서 실행되는 gRPC 서비스로, 특정 하드웨어 리소스를 관리합니다. 장치 플러그인을 배포하여 클러스터 전체에서 하드웨어 장치를 소비하는 일관되고 이식 가능한 솔루션을 제공할 수 있습니다. |

|

| 관리자 |

일부 애플리케이션에는 암호 및 사용자 이름과 같은 중요한 정보가 필요합니다. |

1.3. 컨테이너 정보

컨테이너는 OpenShift Container Platform 애플리케이션의 기본 단위로, 종속 항목, 라이브러리 및 바이너리와 함께 패키지된 애플리케이션 코드를 구성합니다. 컨테이너에서는 물리 서버, 가상 머신(VM) 및 프라이빗 또는 퍼블릭 클라우드와 같은 환경 및 여러 배치 대상 사이에 일관성을 제공합니다.

Linux 컨테이너 기술은 실행 중인 프로세스를 격리하고 지정된 리소스에만 액세스할 수 있는 경량 메커니즘입니다. 관리자는 다음과 같은 Linux 컨테이너에서 다양한 작업을 수행할 수 있습니다.

OpenShift Container Platform은 Init 컨테이너라는 특수 컨테이너를 제공합니다. Init 컨테이너는 애플리케이션 컨테이너보다 먼저 실행되며 애플리케이션 이미지에 없는 유틸리티 또는 설정 스크립트를 포함할 수 있습니다. Init 컨테이너를 사용하여 나머지 Pod를 배포하기 전에 작업을 수행할 수 있습니다.

노드, Pod 및 컨테이너에서 특정 작업을 수행하는 것 외에도 전체 OpenShift Container Platform 클러스터에서 작업하여 클러스터의 효율성과 애플리케이션 Pod를 고가용성으로 유지할 수 있습니다.

2장. 노드 작업

2.1. Pod 사용

Pod는 하나의 호스트에 함께 배포되는 하나 이상의 컨테이너이자 정의, 배포, 관리할 수 있는 최소 컴퓨팅 단위입니다.

2.1.1. Pod 이해

Pod는 컨테이너에 대한 머신 인스턴스(실제 또는 가상)와 대략적으로 동일합니다. 각 Pod에는 자체 내부 IP 주소가 할당되므로 해당 Pod가 전체 포트 공간을 소유하고 Pod 내의 컨테이너는 로컬 스토리지와 네트워킹을 공유할 수 있습니다.

Pod에는 라이프사이클이 정의되어 있으며 노드에서 실행되도록 할당된 다음 컨테이너가 종료되거나 기타 이유로 제거될 때까지 실행됩니다. Pod는 정책 및 종료 코드에 따라 종료 후 제거되거나 컨테이너 로그에 대한 액세스를 활성화하기 위해 유지될 수 있습니다.

OpenShift Container Platform에서는 대체로 Pod를 변경할 수 없는 것으로 취급합니다. 실행 중에는 Pod 정의를 변경할 수 없습니다. OpenShift Container Platform은 기존 Pod를 종료한 후 수정된 구성이나 기본 이미지 또는 둘 다 사용하여 Pod를 다시 생성하는 방식으로 변경 사항을 구현합니다. Pod를 다시 생성하면 확장 가능한 것으로 취급되고 상태가 유지되지 않습니다. 따라서 일반적으로 Pod는 사용자가 직접 관리하는 대신 상위 수준의 컨트롤러에서 관리해야 합니다.

OpenShift Container Platform 노드 호스트당 최대 Pod 수는 클러스터 제한을 참조하십시오.

복제 컨트롤러에서 관리하지 않는 베어 Pod는 노드 중단 시 다시 예약되지 않습니다.

2.1.2. Pod 구성의 예

OpenShift Container Platform에서는 하나의 호스트에 함께 배포되는 하나 이상의 컨테이너이자 정의, 배포, 관리할 수 있는 최소 컴퓨팅 단위인 Pod의 Kubernetes 개념을 활용합니다.

다음은 Rails 애플리케이션의 포드 정의의 예입니다. 이 예제에서는 Pod의 다양한 기능을 보여줍니다. 대부분 다른 주제에서 설명하므로 여기에서는 간단히 언급합니다.

Pod 오브젝트 정의(YAML)

- 1

- Pod는 단일 작업에서 Pod 그룹을 선택하고 관리하는 데 사용할 수 있는 라벨을 하나 이상 사용하여 "태그를 지정"할 수 있습니다. 라벨은

metadata해시의 키/값 형식으로 저장됩니다. - 2

- Pod는 가능한 값

Always,OnFailure,Never를 사용하여 정책을 재시작합니다. 기본값은Always입니다. - 3

- OpenShift Container Platform은 컨테이너에 대한 보안 컨텍스트를 정의합니다. 보안 컨텍스트는 권한 있는 컨테이너로 실행하거나 선택한 사용자로 실행할 수 있는지의 여부 등을 지정합니다. 기본 컨텍스트는 매우 제한적이지만 필요에 따라 관리자가 수정할 수 있습니다.

- 4

containers는 하나 이상의 컨테이너 정의로 이루어진 배열을 지정합니다.- 5

- 이 컨테이너는 컨테이너 내에서 외부 스토리지 볼륨이 마운트되는 위치를 지정합니다. 이 경우 레지스트리에서 OpenShift Container Platform API에 대해 요청하는 데 필요한 자격 증명 액세스 권한을 저장하는 볼륨이 있습니다.

- 6

- Pod에 제공할 볼륨을 지정합니다. 볼륨이 지정된 경로에 마운트합니다. 컨테이너 루트,

/또는 호스트와 컨테이너에서 동일한 경로에 마운트하지 마십시오. 컨테이너가 호스트/dev/pts파일과 같이 충분한 권한이 있는 경우 호스트 시스템이 손상될 수 있습니다./host를 사용하여 호스트를 마운트하는 것이 안전합니다. - 7

- Pod의 각 컨테이너는 자체 컨테이너 이미지에서 인스턴스화됩니다.

- 8

- OpenShift Container Platform API에 대해 요청하는 Pod는 요청 시 Pod에서 인증해야 하는 서비스 계정 사용자를 지정하는

serviceAccount필드가 있는 일반적인 패턴입니다. 따라서 사용자 정의 인프라 구성 요소에 대한 액세스 권한을 세부적으로 제어할 수 있습니다. - 9

- Pod는 사용할 컨테이너에서 사용할 수 있는 스토리지 볼륨을 정의합니다. 이 경우 기본 서비스 계정 토큰이 포함된

시크릿볼륨에 대한 임시 볼륨을 제공합니다.파일이 많은 영구 볼륨을 Pod에 연결하면 해당 Pod가 실패하거나 시작하는 데 시간이 오래 걸릴 수 있습니다. 자세한 내용은 OpenShift에서 파일 수가 많은 영구 볼륨을 사용하는 경우 Pod를 시작하지 못하거나 과도한 시간을 차지하여 "Ready" 상태를 얻을 수 없는 이유는 무엇입니까?

이 Pod 정의에는 Pod가 생성되고 해당 라이프사이클이 시작된 후 OpenShift Container Platform에 의해 자동으로 채워지는 특성은 포함되지 않습니다. Kubernetes Pod 설명서에는 Pod의 기능 및 용도에 대한 세부 정보가 있습니다.

2.2. Pod 보기

관리자는 클러스터의 Pod를 보고 해당 Pod 및 클러스터의 상태를 전체적으로 확인할 수 있습니다.

2.2.1. Pod 정보

OpenShift Container Platform에서는 하나의 호스트에 함께 배포되는 하나 이상의 컨테이너이자 정의, 배포, 관리할 수 있는 최소 컴퓨팅 단위인 Pod의 Kubernetes 개념을 활용합니다. Pod는 컨테이너에 대한 머신 인스턴스(실제 또는 가상)와 대략적으로 동일합니다.

특정 프로젝트와 연결된 Pod 목록을 확인하거나 Pod 관련 사용량 통계를 볼 수 있습니다.

2.2.2. 프로젝트의 Pod 보기

Pod의 복제본 수, 현재 상태, 재시작 횟수, 수명 등을 포함하여 현재 프로젝트와 관련된 Pod 목록을 확인할 수 있습니다.

프로세스

프로젝트의 Pod를 보려면 다음을 수행합니다.

프로젝트로 변경합니다.

oc project <project-name>

$ oc project <project-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow 다음 명령을 실행합니다.

oc get pods

$ oc get podsCopy to Clipboard Copied! Toggle word wrap Toggle overflow 예를 들면 다음과 같습니다.

oc get pods -n openshift-console

$ oc get pods -n openshift-consoleCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

NAME READY STATUS RESTARTS AGE console-698d866b78-bnshf 1/1 Running 2 165m console-698d866b78-m87pm 1/1 Running 2 165m

NAME READY STATUS RESTARTS AGE console-698d866b78-bnshf 1/1 Running 2 165m console-698d866b78-m87pm 1/1 Running 2 165mCopy to Clipboard Copied! Toggle word wrap Toggle overflow Pod IP 주소와 Pod가 있는 노드를 보려면

-o wide플래그를 추가합니다.oc get pods -o wide

$ oc get pods -o wideCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE console-698d866b78-bnshf 1/1 Running 2 166m 10.128.0.24 ip-10-0-152-71.ec2.internal <none> console-698d866b78-m87pm 1/1 Running 2 166m 10.129.0.23 ip-10-0-173-237.ec2.internal <none>

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE console-698d866b78-bnshf 1/1 Running 2 166m 10.128.0.24 ip-10-0-152-71.ec2.internal <none> console-698d866b78-m87pm 1/1 Running 2 166m 10.129.0.23 ip-10-0-173-237.ec2.internal <none>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.2.3. Pod 사용량 통계 보기

컨테이너의 런타임 환경을 제공하는 Pod에 대한 사용량 통계를 표시할 수 있습니다. 이러한 사용량 통계에는 CPU, 메모리, 스토리지 사용량이 포함됩니다.

사전 요구 사항

-

사용량 통계를 보려면

cluster-reader권한이 있어야 합니다. - 사용량 통계를 보려면 메트릭이 설치되어 있어야 합니다.

프로세스

사용량 통계를 보려면 다음을 수행합니다.

다음 명령을 실행합니다.

oc adm top pods

$ oc adm top podsCopy to Clipboard Copied! Toggle word wrap Toggle overflow 예를 들면 다음과 같습니다.

oc adm top pods -n openshift-console

$ oc adm top pods -n openshift-consoleCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

NAME CPU(cores) MEMORY(bytes) console-7f58c69899-q8c8k 0m 22Mi console-7f58c69899-xhbgg 0m 25Mi downloads-594fcccf94-bcxk8 3m 18Mi downloads-594fcccf94-kv4p6 2m 15Mi

NAME CPU(cores) MEMORY(bytes) console-7f58c69899-q8c8k 0m 22Mi console-7f58c69899-xhbgg 0m 25Mi downloads-594fcccf94-bcxk8 3m 18Mi downloads-594fcccf94-kv4p6 2m 15MiCopy to Clipboard Copied! Toggle word wrap Toggle overflow 다음 명령을 실행하여 라벨이 있는 Pod의 사용량 통계를 확인합니다.

oc adm top pod --selector=''

$ oc adm top pod --selector=''Copy to Clipboard Copied! Toggle word wrap Toggle overflow 필터링할 선택기(라벨 쿼리)를 선택해야 합니다.

=,==,!=가 지원됩니다.

2.2.4. 리소스 로그 보기

OpenShift CLI(oc) 및 웹 콘솔에서 다양한 리소스의 로그를 볼 수 있습니다. 로그는 로그의 말미 또는 끝에서 읽습니다.

사전 요구 사항

- OpenShift CLI(oc)에 액세스합니다.

프로세스(UI)

OpenShift Container Platform 콘솔에서 워크로드 → Pod로 이동하거나 조사하려는 리소스를 통해 Pod로 이동합니다.

참고빌드와 같은 일부 리소스에는 직접 쿼리할 Pod가 없습니다. 이러한 인스턴스에서 리소스의 세부 정보 페이지에서 로그 링크를 찾을 수 있습니다.

- 드롭다운 메뉴에서 프로젝트를 선택합니다.

- 조사할 Pod 이름을 클릭합니다.

- 로그를 클릭합니다.

프로세스(CLI)

특정 Pod의 로그를 확인합니다.

oc logs -f <pod_name> -c <container_name>

$ oc logs -f <pod_name> -c <container_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow 다음과 같습니다.

-f- 선택 사항: 출력이 로그에 기록되는 내용을 따르도록 지정합니다.

<pod_name>- pod 이름을 지정합니다.

<container_name>- 선택 사항: 컨테이너의 이름을 지정합니다. Pod에 여러 컨테이너가 있는 경우 컨테이너 이름을 지정해야 합니다.

예를 들면 다음과 같습니다.

oc logs ruby-58cd97df55-mww7r

$ oc logs ruby-58cd97df55-mww7rCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc logs -f ruby-57f7f4855b-znl92 -c ruby

$ oc logs -f ruby-57f7f4855b-znl92 -c rubyCopy to Clipboard Copied! Toggle word wrap Toggle overflow 로그 파일의 내용이 출력됩니다.

특정 리소스의 로그를 확인합니다.

oc logs <object_type>/<resource_name>

$ oc logs <object_type>/<resource_name>1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 리소스 유형 및 이름을 지정합니다.

예를 들면 다음과 같습니다.

oc logs deployment/ruby

$ oc logs deployment/rubyCopy to Clipboard Copied! Toggle word wrap Toggle overflow 로그 파일의 내용이 출력됩니다.

2.3. Pod에 대한 OpenShift Container Platform 클러스터 구성

관리자는 Pod에 효율적인 클러스터를 생성하고 유지 관리할 수 있습니다.

클러스터를 효율적으로 유지하면 Pod가 종료될 때 수행하는 작업과 같은 툴을 사용하여 개발자에게 더 나은 환경을 제공할 수 있습니다. 즉 필요한 수의 Pod가 항상 실행되고 있는지 확인하여 한 번만 실행되도록 설계된 Pod를 재시작하는 경우 Pod에 사용할 수 있는 대역폭을 제한하고, 중단 중 Pod를 계속 실행하는 방법을 제공합니다.

2.3.1. 재시작 후 Pod 작동 방식 구성

Pod 재시작 정책에 따라 해당 Pod의 컨테이너가 종료될 때 OpenShift Container Platform에서 응답하는 방법이 결정됩니다. 정책은 해당 Pod의 모든 컨테이너에 적용됩니다.

가능한 값은 다음과 같습니다.

-

Always- Pod가 재시작될 때까지 급격한 백오프 지연(10초, 20초, 40초)을 사용하여 Pod에서 성공적으로 종료된 컨테이너를 계속 재시작합니다. 기본값은Always입니다. -

OnFailure- 급격한 백오프 지연(10초, 20초, 40초)을 5분으로 제한하여 Pod에서 실패한 컨테이너를 재시작합니다. -

Never- Pod에서 종료되거나 실패한 컨테이너를 재시작하지 않습니다. Pod가 즉시 실패하고 종료됩니다.

Pod가 특정 노드에 바인딩된 후에는 다른 노드에 바인딩되지 않습니다. 따라서 노드 장애 시 Pod가 작동하려면 컨트롤러가 필요합니다.

| 상태 | 컨트롤러 유형 | 재시작 정책 |

|---|---|---|

| 종료할 것으로 예상되는 Pod(예: 일괄 계산) | Job |

|

| 종료되지 않을 것으로 예상되는 Pod(예: 웹 서버) | 복제 컨트롤러 |

|

| 머신당 하나씩 실행해야 하는 Pod | 데몬 세트 | Any |

Pod의 컨테이너가 실패하고 재시작 정책이 OnFailure로 설정된 경우 Pod가 노드에 남아 있고 컨테이너가 재시작됩니다. 컨테이너를 재시작하지 않으려면 재시작 정책 Never를 사용하십시오.

전체 Pod가 실패하면 OpenShift Container Platform에서 새 Pod를 시작합니다. 개발자는 애플리케이션이 새 Pod에서 재시작될 수 있는 가능성을 고려해야 합니다. 특히 애플리케이션에서는 이전 실행으로 발생한 임시 파일, 잠금, 불완전한 출력 등을 처리해야 합니다.

Kubernetes 아키텍처에서는 클라우드 공급자의 끝점이 안정적인 것으로 예상합니다. 클라우드 공급자가 중단되면 kubelet에서 OpenShift Container Platform이 재시작되지 않습니다.

기본 클라우드 공급자 끝점이 안정적이지 않은 경우 클라우드 공급자 통합을 사용하여 클러스터를 설치하지 마십시오. 클라우드가 아닌 환경에서처럼 클러스터를 설치합니다. 설치된 클러스터에서 클라우드 공급자 통합을 설정하거나 해제하는 것은 권장되지 않습니다.

OpenShift Container Platform에서 실패한 컨테이너에 재시작 정책을 사용하는 방법에 대한 자세한 내용은 Kubernetes 설명서의 예제 상태를 참조하십시오.

2.3.2. Pod에서 사용할 수 있는 대역폭 제한

Pod에 서비스 품질 트래픽 조절 기능을 적용하고 사용 가능한 대역폭을 효과적으로 제한할 수 있습니다. Pod에서 송신하는 트래픽은 구성된 속도를 초과하는 패킷을 간단히 삭제하는 정책에 따라 처리합니다. Pod에 수신되는 트래픽은 데이터를 효과적으로 처리하기 위해 대기 중인 패킷을 구성하여 처리합니다. 특정 Pod에 대한 제한 사항은 다른 Pod의 대역폭에 영향을 미치지 않습니다.

프로세스

Pod의 대역폭을 제한하려면 다음을 수행합니다.

오브젝트 정의 JSON 파일을 작성하고

kubernetes.io/ingress-bandwidth및kubernetes.io/egress-bandwidth주석을 사용하여 데이터 트래픽 속도를 지정합니다. 예를 들어 Pod 송신 및 수신 대역폭을 둘 다 10M/s로 제한하려면 다음을 수행합니다.제한된

Pod오브젝트 정의Copy to Clipboard Copied! Toggle word wrap Toggle overflow 오브젝트 정의를 사용하여 Pod를 생성합니다.

oc create -f <file_or_dir_path>

$ oc create -f <file_or_dir_path>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.3.3. Pod 중단 예산을 사용하여 실행 중인 pod 수를 지정하는 방법

Pod 중단 예산은 Kubernetes API의 일부이며 다른 오브젝트 유형과 같은 oc 명령으로 관리할 수 있습니다. 유지 관리를 위해 노드를 드레이닝하는 것과 같이 작업 중에 pod 에 대한 보안 제약 조건을 지정할 수 있습니다.

PodDisruptionBudget은 동시에 작동해야 하는 최소 복제본 수 또는 백분율을 지정하는 API 오브젝트입니다. 프로젝트에서 이러한 설정은 노드 유지 관리 (예: 클러스터 축소 또는 클러스터 업그레이드) 중에 유용할 수 있으며 (노드 장애 시가 아니라) 자발적으로 제거된 경우에만 적용됩니다.

PodDisruptionBudget 오브젝트의 구성은 다음과 같은 주요 부분으로 구성되어 있습니다.

- 일련의 pod에 대한 라벨 쿼리 기능인 라벨 선택기입니다.

동시에 사용할 수 있어야 하는 최소 pod 수를 지정하는 가용성 수준입니다.

-

minAvailable은 중단 중에도 항상 사용할 수 있어야하는 pod 수입니다. -

maxUnavailable은 중단 중에 사용할 수없는 pod 수입니다.

-

maxUnavailable 0 % 또는 0이나 minAvailable의 100 % 혹은 복제본 수와 동일한 값은 허용되지만 이로 인해 노드가 드레인되지 않도록 차단할 수 있습니다.

다음을 사용하여 모든 프로젝트에서 pod 중단 예산을 확인할 수 있습니다.

oc get poddisruptionbudget --all-namespaces

$ oc get poddisruptionbudget --all-namespaces출력 예

NAMESPACE NAME MIN-AVAILABLE SELECTOR another-project another-pdb 4 bar=foo test-project my-pdb 2 foo=bar

NAMESPACE NAME MIN-AVAILABLE SELECTOR

another-project another-pdb 4 bar=foo

test-project my-pdb 2 foo=bar

PodDisruptionBudget은 시스템에서 최소 minAvailable pod가 실행중인 경우 정상으로 간주됩니다. 이 제한을 초과하는 모든 pod는 제거할 수 있습니다.

Pod 우선 순위 및 선점 설정에 따라 우선 순위가 낮은 pod는 pod 중단 예산 요구 사항을 무시하고 제거될 수 있습니다.

2.3.3.1. Pod 중단 예산을 사용하여 실행해야 할 pod 수 지정

PodDisruptionBudget 오브젝트를 사용하여 동시에 가동되어야 하는 최소 복제본 수 또는 백분율을 지정할 수 있습니다.

프로세스

pod 중단 예산을 구성하려면 다음을 수행합니다.

다음과 같은 오브젝트 정의를 사용하여 YAML 파일을 만듭니다.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 또는 다음을 수행합니다.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 다음 명령을 실행하여 오브젝트를 프로젝트에 추가합니다.

oc create -f </path/to/file> -n <project_name>

$ oc create -f </path/to/file> -n <project_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.3.4. 중요 Pod를 사용하여 Pod 제거 방지

완전히 작동하는 클러스터에 중요하지만 마스터가 아닌 일반 클러스터 노드에서 실행되는 다양한 핵심 구성 요소가 있습니다. 중요한 추가 기능이 제거되면 클러스터가 제대로 작동하지 않을 수 있습니다.

중요로 표시된 Pod는 제거할 수 없습니다.

프로세스

Pod를 중요로 설정하려면 다음을 수행합니다.

Pod사양을 생성하거나system-cluster-critical우선순위 클래스를 포함하도록 기존 Pod를 편집합니다.spec: template: metadata: name: critical-pod priorityClassName: system-cluster-criticalspec: template: metadata: name: critical-pod priorityClassName: system-cluster-critical1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 노드에서 제거해서는 안 되는 Pod의 기본 우선순위 클래스입니다.

또는 클러스터에 중요한 Pod에 대해

system-node-critical을 지정할 수 있지만 필요한 경우 제거할 수도 있습니다.Pod를 생성합니다.

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.4. 수평 Pod 자동 스케일러를 사용하여 Pod 자동 스케일링

개발자는 HPA(수평 Pod 자동 스케일러)를 사용하여 해당 복제 컨트롤러 또는 배포 구성에 속하는 Pod에서 수집한 메트릭을 기반으로 OpenShift Container Platform에서 복제 컨트롤러 또는 배포 구성의 규모를 자동으로 늘리거나 줄이는 방법을 지정할 수 있습니다.

2.4.1. 수평 Pod 자동 스케일러 이해

수평 Pod 자동 스케일러를 생성하여 실행하려는 최소 및 최대 Pod 수와 Pod에서 목표로 하는 CPU 사용률 또는 메모리 사용률을 지정할 수 있습니다.

메모리 사용률에 대한 자동 확장은 기술 프리뷰 기능 전용입니다.

수평 Pod 자동 스케일러를 생성하면 OpenShift Container Platform에서 Pod의 CPU 및/또는 메모리 리소스 메트릭을 쿼리합니다. 이러한 메트릭을 사용할 수 있는 경우 수평 Pod 자동 스케일러에서 현재 메트릭 사용률과 원하는 메트릭 사용률의 비율을 계산하고 그에 따라 확장 또는 축소합니다. 쿼리 및 스케일링은 정기적으로 수행되지만 메트릭을 사용할 수 있을 때까지 1~2분이 걸릴 수 있습니다.

복제 컨트롤러의 경우 이러한 스케일링은 복제 컨트롤러의 복제본과 직접적으로 일치합니다. 배포 구성의 경우 스케일링은 배포 구성의 복제본 수와 직접적으로 일치합니다. 자동 스케일링은 Complete 단계에서 최신 배포에만 적용됩니다.

OpenShift Container Platform은 리소스를 자동으로 차지하여 시작하는 동안과 같이 리소스가 급증하는 동안 불필요한 자동 스케일링을 방지합니다. unready 상태의 Pod는 확장 시 CPU 사용량이 0이고, 축소 시에는 자동 스케일러에서 Pod를 무시합니다. 알려진 메트릭이 없는 Pod는 확장 시 CPU 사용량이 0%이고, 축소 시에는 100%입니다. 이를 통해 HPA를 결정하는 동안 안정성이 향상됩니다. 이 기능을 사용하려면 준비 상태 점검을 구성하여 새 Pod를 사용할 준비가 되었는지 확인해야 합니다.

수평 Pod 자동 스케일러를 사용하려면 클러스터 관리자가 클러스터 메트릭을 올바르게 구성해야 합니다.

2.4.1.1. 지원되는 메트릭

수평 Pod 자동 스케일러에서는 다음 메트릭을 지원합니다.

| 메트릭 | 설명 | API 버전 |

|---|---|---|

| CPU 사용 | 사용되는 CPU 코어의 수입니다. Pod에서 요청하는 CPU의 백분율을 계산하는 데 사용할 수 있습니다. |

|

| 메모리 사용률 | 사용되는 메모리의 양입니다. Pod에서 요청하는 메모리의 백분율을 계산하는 데 사용할 수 있습니다. |

|

메모리 기반 자동 스케일링의 경우 메모리 사용량이 복제본 수에 비례하여 증가 및 감소해야 합니다. 평균적으로 다음과 같습니다.

- 복제본 수가 증가하면 Pod당 메모리(작업 집합) 사용량이 전반적으로 감소해야 합니다.

- 복제본 수가 감소하면 Pod별 메모리 사용량이 전반적으로 증가해야 합니다.

메모리 기반 자동 스케일링을 사용하기 전에 OpenShift Container Platform 웹 콘솔을 사용하여 애플리케이션의 메모리 동작을 확인하고 애플리케이션이 해당 요구 사항을 충족하는지 확인하십시오.

다음 예제에서는 image-registry DeploymentConfig 오브젝트에 대한 자동 스케일링을 보여줍니다. 초기 배포에는 Pod 3개가 필요합니다. HPA 오브젝트에서 해당 최솟값을 5개로 늘렸으며 Pod의 CPU 사용량이 75%에 도달하면 7개까지 늘립니다.

oc autoscale dc/image-registry --min=5 --max=7 --cpu-percent=75

$ oc autoscale dc/image-registry --min=5 --max=7 --cpu-percent=75출력 예

horizontalpodautoscaler.autoscaling/image-registry autoscaled

horizontalpodautoscaler.autoscaling/image-registry autoscaledminReplicas 가 3으로 설정된 image-registry DeploymentConfig 오브젝트의 샘플 HPA

배포의 새 상태를 확인합니다.

oc get dc image-registry

$ oc get dc image-registryCopy to Clipboard Copied! Toggle word wrap Toggle overflow 이제 배포에 Pod 5개가 있습니다.

출력 예

NAME REVISION DESIRED CURRENT TRIGGERED BY image-registry 1 5 5 config

NAME REVISION DESIRED CURRENT TRIGGERED BY image-registry 1 5 5 configCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.4.1.2. 스케일링 정책

autoscaling/v2beta2 API를 사용하면 수평 Pod 자동 스케일러에 스케일링 정책을 추가할 수 있습니다. 스케일링 정책은 OpenShift Container Platform HPA(수평 Pod 자동 스케일러)에서 Pod를 스케일링하는 방법을 제어합니다. 스케일링 정책을 사용하면 지정된 기간에 스케일링할 특정 수 또는 특정 백분율을 설정하여 HPA에서 Pod를 확장 또는 축소하는 비율을 제한할 수 있습니다. 또한 메트릭이 계속 변동하는 경우 이전에 계산한 원하는 상태를 사용하여 스케일링을 제어하는 안정화 기간을 정의할 수 있습니다. 동일한 스케일링 방향(확장 또는 축소)에 대해 여러 정책을 생성하여 변경 정도에 따라 사용할 정책을 결정할 수 있습니다. 반복 시간을 지정하여 스케일링을 제한할 수도 있습니다. HPA는 반복 중 Pod를 스케일링한 다음 필요에 따라 추가 반복에서 스케일링을 수행합니다.

스케일링 정책이 포함된 HPA 오브젝트 샘플

- 1

- 스케일링 정책의 방향, 즉

scaleDown또는scaleUp을 지정합니다. 이 예제에서는 축소 정책을 생성합니다. - 2

- 스케일링 정책을 정의합니다.

- 3

- 정책이 각 반복에서 특정 Pod 수 또는 Pod 백분율로 스케일링하는지의 여부를 결정합니다. 기본값은

pods입니다. - 4

- 각 반복 중에 Pod 수 또는 Pod 백분율 중 하나의 스케일링 정도를 결정합니다. Pod 수에 따라 축소할 기본값은 없습니다.

- 5

- 스케일링 반복의 길이를 결정합니다. 기본값은

15초입니다. - 6

- 백분율로 된 축소 기본값은 100%입니다.

- 7

- 여러 정책이 정의된 경우 먼저 사용할 정책을 결정합니다. 가장 많은 변경을 허용하는 정책을 사용하려면

Max를 지정하고, 최소 변경을 허용하는 정책을 사용하려면Min을 지정합니다. HPA에서 해당 정책 방향으로 스케일링하지 않도록 하려면Disabled를 지정합니다. 기본값은Max입니다. - 8

- HPA에서 원하는 상태를 검토해야 하는 기간을 결정합니다. 기본값은

0입니다. - 9

- 이 예제에서는 확장 정책을 생성합니다.

- 10

- Pod 수에 따라 확장되는 수입니다. Pod 수 확장 기본값은 4%입니다.

- 11

- Pod 백분율에 따라 확장되는 수입니다. 백분율로 된 확장 기본값은 100%입니다.

축소 정책의 예

이 예제에서 Pod 수가 40개를 초과하면 selectPolicy에서 요구하는 대로 해당 정책으로 인해 상당한 변경이 발생하므로 축소에 백분율 기반 정책이 사용됩니다.

Pod 복제본이 80개 있는 경우 HPA는 첫 번째 반복에서 Pod를 8로 줄이며 이는 유형에 기반하여 80개의 Pod 중 10%입니다. percent 및 값: 10 매개 변수), 1분 동안 (periodSeconds: 60). 다음 반복에서는 Pod가 72개입니다. HPA는 나머지 Pod의 10%를 계산한 7.2개를 8개로 올림하여 Pod 8개를 축소합니다. 이후 반복할 때마다 나머지 Pod 수에 따라 스케일링할 Pod 수가 다시 계산됩니다. Pod 수가 40개 미만으로 줄어들면 Pod 기반 숫자가 백분율 기반 숫자보다 크기 때문에 Pod 기반 정책이 적용됩니다. HPA는 한 번에 Pod 4개를 줄입니다(type: pods 및 값: 4), 30 초 동안 (periodSeconds : 30), 20개의 복제본이 남아 있을 때까지 (minReplicas).

selectPolicy: disabled 매개변수는 HPA가 Pod를 확장하지 못하게 합니다. 필요한 경우 복제본 세트 또는 배포 세트의 복제본 수를 조정하여 수동으로 확장할 수 있습니다.

설정되어 있는 경우 oc edit 명령을 사용하여 스케일링 정책을 확인할 수 있습니다.

oc edit hpa hpa-resource-metrics-memory

$ oc edit hpa hpa-resource-metrics-memory출력 예

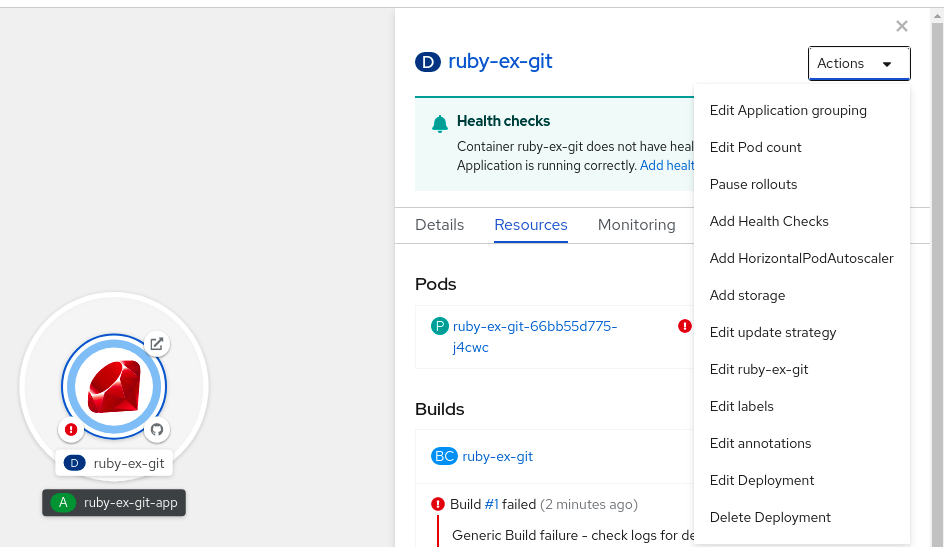

2.4.2. 웹 콘솔을 사용하여 수평 Pod 자동 스케일러 생성

웹 콘솔에서 배포에서 실행하려는 최소 및 최대 Pod 수를 지정하는 HPA(수평 Pod 자동 스케일러)를 생성할 수 있습니다. Pod에서 대상으로 하는 CPU 또는 메모리 사용량도 정의할 수 있습니다.

HPA는 Operator 지원 서비스, Knative 서비스 또는 Helm 차트의 일부인 배포에 추가할 수 없습니다.

절차

웹 콘솔에서 HPA를 생성하려면 다음을 수행합니다.

- 토폴로지 보기에서 노드를 클릭하여 측면 창을 표시합니다.

작업 드롭다운 목록에서 HorizontalPodAutoscaler 추가 를 선택하여 HorizontalPodAutoscaler 추가 양식을 엽니다.

그림 2.1. Add HorizontalPodAutoscaler

HorizontalPodAutoscaler 추가 양식에서 이름, 최소 및 최대 Pod 제한, CPU 및 메모리 사용량을 정의하고 저장을 클릭합니다.

참고CPU 및 메모리 사용량에 대한 값이 없는 경우 경고가 표시됩니다.

웹 콘솔에서 HPA를 편집하려면 다음을 수행합니다.

- 토폴로지 보기에서 노드를 클릭하여 측면 창을 표시합니다.

- 작업 드롭다운 목록에서 HorizontalPodAutoscaler 편집을 선택하여 Horizontal Pod Autoscaler 편집 양식을 엽니다.

- Horizontal Pod Autoscaler 편집 양식에서 최소 및 최대 Pod 제한과 CPU 및 메모리 사용량을 편집한 다음 저장을 클릭합니다.

웹 콘솔에서 수평 Pod 자동 스케일러를 생성하거나 편집하는 동안 양식 보기에서 YAML 보기로 전환할 수 있습니다.

웹 콘솔에서 HPA를 제거하려면 다음을 수행합니다.

- 토폴로지 보기에서 노드를 클릭하여 측면 창을 표시합니다.

- 작업 드롭다운 목록에서 HorizontalPodAutoscaler 제거를 선택합니다.

- 확인 팝업 창에서 제거를 클릭하여 HPA를 제거합니다.

2.4.3. CLI를 사용하여 CPU 사용률에 대한 수평 Pod 자동 스케일러 생성

기존 Deployment,DeploymentConfig,ReplicaSet,ReplicationController 또는 StatefulSet 오브젝트에 HPA(수평 Pod 자동 스케일러)를 생성하여 지정하는 CPU 사용량을 유지하도록 해당 오브젝트와 연결된 Pod를 자동으로 스케일링할 수 있습니다.

HPA는 최소 및 최대 개수 사이에서 복제본 수를 늘리거나 줄여 전체 Pod에서 지정된 CPU 사용률을 유지합니다.

CPU 사용률을 자동 스케일링할 때는 oc autoscale 명령을 사용하여 언제든지 실행하려는 최소 및 최대 Pod 수와 Pod에서 목표로 하는 평균 CPU 사용률을 지정할 수 있습니다. 최솟값을 지정하지 않으면 Pod에 OpenShift Container Platform 서버의 기본값이 지정됩니다. 특정 CPU 값을 자동 스케일링하려면 대상 CPU 및 Pod 제한을 사용하여 HorizontalPodAutoscaler 오브젝트를 생성합니다.

사전 요구 사항

수평 Pod 자동 스케일러를 사용하려면 클러스터 관리자가 클러스터 메트릭을 올바르게 구성해야 합니다. oc describe PodMetrics <pod-name> 명령을 사용하여 메트릭이 구성되어 있는지 확인할 수 있습니다. 메트릭이 구성된 경우 출력이 다음과 유사하게 표시되고 Usage에 Cpu 및 Memory가 표시됩니다.

oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internal

$ oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internal출력 예

프로세스

CPU 사용률에 대한 수평 Pod 자동 스케일러를 생성하려면 다음을 수행합니다.

다음 중 하나를 수행합니다.

CPU 사용률 백분율에 따라 스케일링하려면 기존 오브젝트에 대한

HorizontalPodAutoscaler오브젝트를 생성합니다.oc autoscale <object_type>/<name> \ --min <number> \ --max <number> \ --cpu-percent=<percent>

$ oc autoscale <object_type>/<name> \1 --min <number> \2 --max <number> \3 --cpu-percent=<percent>4 Copy to Clipboard Copied! Toggle word wrap Toggle overflow 예를 들어 다음 명령은

image-registryDeploymentConfig오브젝트에 대한 자동 스케일링을 보여줍니다. 초기 배포에는 Pod 3개가 필요합니다. HPA 오브젝트에서 해당 최솟값을 5개로 늘렸으며 Pod의 CPU 사용량이 75%에 도달하면 7개까지 늘립니다.oc autoscale dc/image-registry --min=5 --max=7 --cpu-percent=75

$ oc autoscale dc/image-registry --min=5 --max=7 --cpu-percent=75Copy to Clipboard Copied! Toggle word wrap Toggle overflow 특정 CPU 값을 스케일링하려면 기존 오브젝트에 대해 다음과 유사한 YAML 파일을 생성합니다.

다음과 유사한 YAML 파일을 생성합니다.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

autoscaling/v2beta2API를 사용합니다.- 2

- 이 수평 Pod 자동 스케일러 오브젝트의 이름을 지정합니다.

- 3

- 스케일링할 오브젝트의 API 버전을 지정합니다.

-

ReplicationController의 경우v1을 사용합니다. -

deploymentConfig

의경우apps.openshift.io/v1을 사용합니다. -

Deployment,ReplicaSet,Statefulset오브젝트의 경우apps/v1을 사용합니다.

-

- 4

- 오브젝트 유형을 지정합니다. 오브젝트는

Deployment,DeploymentConfig/dc,ReplicaSet/rs,ReplicationController/rc또는StatefulSet이어야 합니다. - 5

- 스케일링할 오브젝트의 이름을 지정합니다. 오브젝트가 있어야 합니다.

- 6

- 축소 시 최소 복제본 수를 지정합니다.

- 7

- 확장 시 최대 복제본 수를 지정합니다.

- 8

- 메모리 사용률에

metrics매개변수를 사용합니다. - 9

- CPU 사용률에

cpu를 지정합니다. - 10

AverageValue로 설정합니다.- 11

- 대상 CPU 값을 사용하여

averageValue로 설정합니다.

수평 Pod 자동 스케일러를 생성합니다.

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow

수평 Pod 자동 스케일러가 생성되었는지 확인합니다.

oc get hpa cpu-autoscale

$ oc get hpa cpu-autoscaleCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE cpu-autoscale ReplicationController/example 173m/500m 1 10 1 20m

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE cpu-autoscale ReplicationController/example 173m/500m 1 10 1 20mCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.4.4. CLI를 사용하여 메모리 사용률에 대한 수평 Pod 자동 스케일러 오브젝트 생성

기존 DeploymentConfig 또는 ReplicationController 오브젝트에 HPA(수평 Pod 자동 스케일러)를 생성하면 지정하는 평균 메모리 사용률(직접적인 값 또는 요청 메모리의 백분율)을 유지하도록 해당 오브젝트에 연결된 Pod를 자동으로 스케일링할 수 있습니다.

HPA는 최소 및 최대 개수 사이에서 복제본 수를 늘리거나 줄여 전체 Pod에서 지정된 메모리 사용률을 유지합니다.

메모리 사용률의 경우 최소 및 최대 Pod 수와 Pod에서 목표로 해야 하는 평균 메모리 사용률을 지정할 수 있습니다. 최솟값을 지정하지 않으면 Pod에 OpenShift Container Platform 서버의 기본값이 지정됩니다.

메모리 사용률에 대한 자동 확장은 기술 프리뷰 기능 전용입니다. 기술 프리뷰 기능은 Red Hat 프로덕션 서비스 수준 계약(SLA)에서 지원하지 않으며, 기능상 완전하지 않을 수 있어 프로덕션에 사용하지 않는 것이 좋습니다. 이러한 기능을 사용하면 향후 제품 기능을 조기에 이용할 수 있어 개발 과정에서 고객이 기능을 테스트하고 피드백을 제공할 수 있습니다.

Red Hat 기술 프리뷰 기능 지원 범위에 대한 자세한 내용은 https://access.redhat.com/support/offerings/techpreview/를 참조하십시오.

사전 요구 사항

수평 Pod 자동 스케일러를 사용하려면 클러스터 관리자가 클러스터 메트릭을 올바르게 구성해야 합니다. oc describe PodMetrics <pod-name> 명령을 사용하여 메트릭이 구성되어 있는지 확인할 수 있습니다. 메트릭이 구성된 경우 출력이 다음과 유사하게 표시되고 Usage에 Cpu 및 Memory가 표시됩니다.

oc describe PodMetrics openshift-kube-scheduler-ip-10-0-129-223.compute.internal -n openshift-kube-scheduler

$ oc describe PodMetrics openshift-kube-scheduler-ip-10-0-129-223.compute.internal -n openshift-kube-scheduler출력 예

프로세스

메모리 사용률에 대한 수평 Pod 자동 스케일러를 생성하려면 다음을 수행합니다.

다음 중 하나에 대한 YAML 파일을 생성합니다.

특정 메모리 값을 스케일링하려면 기존

ReplicationController오브젝트 또는 복제 컨트롤러에 대해 다음과 유사한HorizontalPodAutoscaler오브젝트를 생성합니다.출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

autoscaling/v2beta2API를 사용합니다.- 2

- 이 수평 Pod 자동 스케일러 오브젝트의 이름을 지정합니다.

- 3

- 스케일링할 오브젝트의 API 버전을 지정합니다.

-

복제 컨트롤러의 경우

v1을 사용합니다. -

DeploymentConfig오브젝트의 경우apps.openshift.io/v1을 사용합니다.

-

복제 컨트롤러의 경우

- 4

ReplicationController또는DeploymentConfig중 스케일링할 오브젝트 유형을 지정합니다.- 5

- 스케일링할 오브젝트의 이름을 지정합니다. 오브젝트가 있어야 합니다.

- 6

- 축소 시 최소 복제본 수를 지정합니다.

- 7

- 확장 시 최대 복제본 수를 지정합니다.

- 8

- 메모리 사용률에

metrics매개변수를 사용합니다. - 9

- 메모리 사용률에 대한

메모리를 지정합니다. - 10

- 유형을

AverageValue로 설정합니다. - 11

averageValue및 특정 메모리 값을 지정합니다.- 12

- 선택 사항: 확장 또는 축소 속도를 제어하려면 스케일링 정책을 지정합니다.

백분율로 스케일링하려면 다음과 유사한

HorizontalPodAutoscaler오브젝트를 생성합니다.출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

autoscaling/v2beta2API를 사용합니다.- 2

- 이 수평 Pod 자동 스케일러 오브젝트의 이름을 지정합니다.

- 3

- 스케일링할 오브젝트의 API 버전을 지정합니다.

-

복제 컨트롤러의 경우

v1을 사용합니다. -

DeploymentConfig오브젝트의 경우apps.openshift.io/v1을 사용합니다.

-

복제 컨트롤러의 경우

- 4

ReplicationController또는DeploymentConfig중 스케일링할 오브젝트 유형을 지정합니다.- 5

- 스케일링할 오브젝트의 이름을 지정합니다. 오브젝트가 있어야 합니다.

- 6

- 축소 시 최소 복제본 수를 지정합니다.

- 7

- 확장 시 최대 복제본 수를 지정합니다.

- 8

- 메모리 사용률에

metrics매개변수를 사용합니다. - 9

- 메모리 사용률에 대한

메모리를 지정합니다. - 10

Utilization으로 설정합니다.- 11

averageUtilization및 전체 Pod에 대한 대상 평균 메모리 사용률(요청 메모리의 백분율로 표시)을 지정합니다. 대상 Pod에 메모리 요청이 구성되어 있어야 합니다.- 12

- 선택 사항: 확장 또는 축소 속도를 제어하려면 스케일링 정책을 지정합니다.

수평 Pod 자동 스케일러를 생성합니다.

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 예를 들면 다음과 같습니다.

oc create -f hpa.yaml

$ oc create -f hpa.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

horizontalpodautoscaler.autoscaling/hpa-resource-metrics-memory created

horizontalpodautoscaler.autoscaling/hpa-resource-metrics-memory createdCopy to Clipboard Copied! Toggle word wrap Toggle overflow 수평 Pod 자동 스케일러가 생성되었는지 확인합니다.

oc get hpa hpa-resource-metrics-memory

$ oc get hpa hpa-resource-metrics-memoryCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE hpa-resource-metrics-memory ReplicationController/example 2441216/500Mi 1 10 1 20m

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE hpa-resource-metrics-memory ReplicationController/example 2441216/500Mi 1 10 1 20mCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc describe hpa hpa-resource-metrics-memory

$ oc describe hpa hpa-resource-metrics-memoryCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.4.5. CLI를 사용하여 수평 Pod 자동 스케일러 상태 조건 이해

일련의 상태 조건을 사용하여 HPA(수평 Pod 자동 스케일러)에서 스케일링할 수 있는지 그리고 HPA가 현재 제한되어 있는지의 여부를 결정할 수 있습니다.

HPA 상태 조건은 Autoscaling API의 v2beta1 버전에서 사용할 수 있습니다.

HPA는 다음과 같은 상태 조건을 통해 응답합니다.

AbleToScale상태는 HPA에서 메트릭을 가져오고 업데이트할 수 있는지의 여부 및 백오프 관련 상태로 스케일링을 방지할 수 있는지의 여부를 나타냅니다.-

True조건은 스케일링이 허용되었음을 나타냅니다. -

False조건은 지정된 이유로 스케일링이 허용되지 않음을 나타냅니다.

-

ScalingActive조건은 HPA가 활성화되어 있고(예: 대상의 복제본 수가 0이 아님) 원하는 메트릭을 계산할 수 있는지의 여부를 나타냅니다.-

True조건은 메트릭이 제대로 작동함을 나타냅니다. -

False조건은 일반적으로 메트릭을 가져오는 데 문제가 있음을 나타냅니다.

-

ScalingLimited조건은 원하는 스케일링이 수평 Pod 자동 스케일러의 최댓값 또는 최솟값으로 제한되었음을 나타냅니다.-

True조건은 스케일링을 위해 최소 또는 최대 복제본 수를 늘리거나 줄여야 함을 나타냅니다. False조건은 요청된 스케일링이 허용됨을 나타냅니다.oc describe hpa cm-test

$ oc describe hpa cm-testCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 수평 Pod 자동 스케일러의 상태 메시지입니다.

-

다음은 스케일링할 수 없는 Pod의 예입니다.

출력 예

다음은 스케일링에 필요한 메트릭을 가져올 수 없는 Pod의 예입니다.

출력 예

Conditions: Type Status Reason Message ---- ------ ------ ------- AbleToScale True SucceededGetScale the HPA controller was able to get the target's current scale ScalingActive False FailedGetResourceMetric the HPA was unable to compute the replica count: failed to get cpu utilization: unable to get metrics for resource cpu: no metrics returned from resource metrics API

Conditions:

Type Status Reason Message

---- ------ ------ -------

AbleToScale True SucceededGetScale the HPA controller was able to get the target's current scale

ScalingActive False FailedGetResourceMetric the HPA was unable to compute the replica count: failed to get cpu utilization: unable to get metrics for resource cpu: no metrics returned from resource metrics API다음은 요청된 자동 스케일링이 필요한 최솟값보다 적은 Pod의 예입니다.

출력 예

2.4.5.1. CLI를 사용하여 수평 Pod 자동 스케일러 상태 조건 보기

HPA(수평 Pod 자동 스케일러)를 통해 Pod에 설정된 상태 조건을 볼 수 있습니다.

수평 Pod 자동 스케일러 상태 조건은 v2beta1 버전의 Autoscaling API에서 사용할 수 있습니다.

사전 요구 사항

수평 Pod 자동 스케일러를 사용하려면 클러스터 관리자가 클러스터 메트릭을 올바르게 구성해야 합니다. oc describe PodMetrics <pod-name> 명령을 사용하여 메트릭이 구성되어 있는지 확인할 수 있습니다. 메트릭이 구성된 경우 출력이 다음과 유사하게 표시되고 Usage에 Cpu 및 Memory가 표시됩니다.

oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internal

$ oc describe PodMetrics openshift-kube-scheduler-ip-10-0-135-131.ec2.internal출력 예

프로세스

Pod의 상태 조건을 보려면 Pod 이름과 함께 다음 명령을 사용합니다.

oc describe hpa <pod-name>

$ oc describe hpa <pod-name>예를 들면 다음과 같습니다.

oc describe hpa cm-test

$ oc describe hpa cm-test

상태가 출력의 Conditions 필드에 나타납니다.

출력 예

2.5. 수직 Pod 자동 스케일러를 사용하여 Pod 리소스 수준 자동 조정

OpenShift Container Platform VPA(Vertical Pod Autoscaler Operator)는 Pod의 컨테이너에 대한 과거 및 현재의 CPU 및 메모리 리소스를 자동으로 검토한 후 확인한 사용량 값에 따라 리소스 제한 및 요청을 업데이트할 수 있습니다. VPA는 개별 CR(사용자 정의 리소스)을 사용하여 Deployment, Deployment Config, StatefulSet, Job, DaemonSet, ReplicaSet 또는 ReplicationController와 같은 워크로드 오브젝트와 연결된 모든 Pod를 프로젝트에서 업데이트합니다.

VPA를 사용하면 Pod의 최적 CPU 및 메모리 사용량을 이해하고 Pod의 라이프사이클 내내 Pod 리소스를 자동으로 유지 관리할 수 있습니다.

수직 Pod 자동 스케일러는 기술 프리뷰 전용입니다. 기술 프리뷰 기능은 Red Hat 프로덕션 서비스 수준 계약(SLA)에서 지원되지 않으며 기능적으로 완전하지 않을 수 있습니다. 따라서 프로덕션 환경에서 사용하는 것은 권장하지 않습니다. 이러한 기능을 사용하면 향후 제품 기능을 조기에 이용할 수 있어 개발 과정에서 고객이 기능을 테스트하고 피드백을 제공할 수 있습니다.

Red Hat 기술 프리뷰 기능의 지원 범위에 대한 자세한 내용은 https://access.redhat.com/support/offerings/techpreview/를 참조하십시오.

2.5.1. Vertical Pod Autoscaler Operator 정보

VPA(Vertical Pod Autoscaler Operator)는 API 리소스 및 CR(사용자 정의 리소스)로 구현됩니다. CR에 따라 Vertical Pod Autoscaler Operator에서 데몬 세트, 복제 컨트롤러 등과 같은 특정 워크로드 오브젝트와 관련된 Pod에서 수행해야 하는 작업이 프로젝트에서 결정됩니다.

VPA는 해당 Pod의 컨테이너 및 현재 CPU 및 메모리 사용량을 자동으로 계산하고, 이 데이터를 사용하여 최적화된 리소스 제한 및 요청을 확인하여 이러한 Pod가 항상 효율적으로 작동하는지 확인할 수 있습니다. 예를 들어 VPA에서 사용 중인 리소스보다 더 많은 리소스를 요청하는 Pod의 리소스를 줄이고 리소스를 충분히 요청하지 않는 Pod의 리소스를 늘립니다.

VPA는 애플리케이션이 다운 타임 없이 요청을 계속 제공할 수 있도록 권장 사항과 일치하지 않는 모든 Pod를 한 번에 하나씩 자동으로 삭제합니다. 그런 다음 워크로드 오브젝트는 원래 리소스 제한 및 요청을 사용하여 Pod를 재배포합니다. VPA는 변경 승인 Webhook를 사용하여 Pod가 노드에 승인되기 전에 최적화된 리소스 제한 및 요청을 사용하여 Pod를 업데이트합니다. VPA에서 Pod를 삭제하지 않으려면 필요에 따라 VPA 리소스 제한 및 요청 및 수동으로 Pod를 업데이트할 수 있습니다.

예를 들어 CPU의 50%를 사용하면서 10%만 요청하는 Pod가 있는 경우, VPA는 요청하는 것보다 더 많은 CPU를 사용하고 있는 것으로 판단하고 Pod를 삭제합니다. 복제본 세트와 같은 워크로드 오브젝트는 Pod를 재시작하고 VPA는 권장 리소스로 새 Pod를 업데이트합니다.

개발자의 경우 VPA를 사용하면 각 Pod에 적절한 리소스를 제공하도록 노드에 Pod를 예약하여 수요가 많은 기간에도 Pod가 유지되도록 할 수 있습니다.

관리자는 VPA를 사용하여 Pod에서 필요 이상의 CPU 리소스를 예약하지 않도록 클러스터 리소스를 더 효율적으로 활용할 수 있습니다. VPA는 워크로드에서 실제로 사용 중인 리소스를 모니터링하고 다른 워크로드에서 용량을 사용할 수 있도록 리소스 요구 사항을 조정합니다. 또한 VPA는 초기 컨테이너 구성에 지정된 제한 및 요청 간 비율도 유지합니다.

VPA 실행을 중지하거나 클러스터에서 특정 VPA CR을 삭제하는 경우 VPA에서 이미 수정한 Pod에 대한 리소스 요청은 변경되지 않습니다. 새 Pod에서는 모두 VPA에서 설정한 이전의 권장 사항 대신 워크로드 오브젝트에 정의된 리소스를 가져옵니다.

2.5.2. Vertical Pod Autoscaler Operator 설치

OpenShift Container Platform 웹 콘솔을 사용하여 VPA(Vertical Pod Autoscaler Operator)를 설치할 수 있습니다.

프로세스

- OpenShift Container Platform 웹 콘솔에서 Operator → OperatorHub를 클릭합니다.

- 사용 가능한 Operator 목록에서 VerticalPodAutoscaler를 선택한 다음 설치를 클릭합니다.

-

Operator 설치 페이지에서 Operator 권장 네임스페이스 옵션이 선택되어 있는지 확인합니다. 그러면 필수

openshift-vertical-pod-autoscaler네임스페이스에 Operator가 설치됩니다. 해당 네임스페이스가 존재하지 않는 경우 자동으로 생성됩니다. - 설치를 클릭합니다.

VPA Operator 구성 요소를 나열하여 설치를 확인합니다.

- 워크로드 → Pod로 이동합니다.

-

드롭다운 메뉴에서

openshift-vertical-pod-autoscaler프로젝트를 선택하고 Pod 4개가 실행되고 있는지 확인합니다. - 워크로드 → 배포로 이동하여 배포 4개가 실행되고 있는지 확인합니다.

선택 사항: 다음 명령을 사용하여 OpenShift Container Platform CLI에서 설치를 확인합니다.

oc get all -n openshift-vertical-pod-autoscaler

$ oc get all -n openshift-vertical-pod-autoscalerCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력에는 Pod 4개와 및 배포 4개가 표시됩니다.

출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.5.3. Vertical Pod Autoscaler Operator 사용 정보

VPA(Vertical Pod Autoscaler Operator)를 사용하려면 클러스터에서 워크로드 오브젝트에 대한 VPA CR(사용자 정의 리소스)을 생성합니다. VPA는 해당 워크로드 오브젝트와 연결된 Pod에 가장 적합한 CPU 및 메모리 리소스를 확인하고 적용합니다. 배포, 상태 저장 세트, 작업, 데몬 세트, 복제본 세트 또는 복제 컨트롤러 워크로드 오브젝트에 VPA를 사용할 수 있습니다. VPA CR은 모니터링할 Pod와 동일한 프로젝트에 있어야 합니다.

VPA CR을 사용하여 워크로드 오브젝트를 연결하고 VPA가 작동하는 모드를 지정합니다.

-

Auto및Recreate모드는 Pod 수명 동안 VPA CPU 및 메모리 권장 사항을 자동으로 적용합니다. VPA는 권장 사항과 일치하지 않는 프로젝트의 모든 Pod를 삭제합니다. 워크로드 오브젝트에서 재배포하면 VPA는 새 Pod를 권장 사항으로 업데이트합니다. -

Initial모드는 Pod 생성 시에만 VPA 권장 사항을 자동으로 적용합니다. -

Off모드는 권장되는 리소스 제한 및 요청만 제공하며 권장 사항을 수동으로 적용할 수 있습니다.off모드에서는 Pod를 업데이트하지 않습니다.

CR을 사용하여 VPA 평가 및 업데이트에서 특정 컨테이너를 옵트아웃할 수도 있습니다.

예를 들어 Pod에 다음과 같은 제한 및 요청이 있습니다.

auto로 설정된 VPA를 생성하면 VPA에서 리소스 사용량을 확인하고 Pod를 삭제합니다. 재배포되면 Pod는 새 리소스 제한 및 요청을 사용합니다.

다음 명령을 사용하여 VPA 권장 사항을 볼 수 있습니다.

oc get vpa <vpa-name> --output yaml

$ oc get vpa <vpa-name> --output yaml몇 분 후 출력에는 CPU 및 메모리 요청에 대한 권장 사항이 표시되며 다음과 유사합니다.

출력 예

출력에는 권장 리소스(target), 최소 권장 리소스(lowerBound), 최고 권장 리소스(upperBound), 최신 리소스 권장 사항(uncappedTarget)이 표시됩니다.

VPA는 lowerBound 및 upperBound 값을 사용하여 Pod를 업데이트해야 하는지 확인합니다. Pod에 lowerBound 값보다 작거나 upperBound 값을 초과하는 리소스 요청이 있는 경우 VPA는 Pod를 종료하고 target 값을 사용하여 Pod를 다시 생성합니다.

2.5.3.1. VPA 권장 사항 자동 적용

VPA를 사용하여 Pod를 자동으로 업데이트하려면 updateMode를 Auto 또는 Recreate로 설정하여 특정 워크로드 오브젝트에 대한 VPA CR을 생성합니다.

워크로드 오브젝트에 대한 Pod가 생성되면 VPA에서 컨테이너를 지속적으로 모니터링하여 CPU 및 메모리 요구 사항을 분석합니다. VPA는 CPU 및 메모리에 대한 VPA 권장 사항을 충족하지 않는 모든 Pod를 삭제합니다. 재배포되면 Pod는 VPA 권장 사항에 따라 새 리소스 제한 및 요청을 사용하여 애플리케이션에 대해 설정된 모든 Pod 중단 예산을 준수합니다. 권장 사항은 참조를 위해 VPA CR의 status 필드에 추가되어 있습니다.

워크로드 오브젝트에서 Pod를 모니터링하고 업데이트하려면 최소 두 개의 복제본을 지정해야 합니다. 워크로드 오브젝트에서 하나의 복제본을 지정하면 VPA에서 애플리케이션 다운타임을 방지하기 위해 Pod를 삭제하지 않습니다. 권장 리소스를 사용하도록 Pod를 수동으로 삭제할 수 있습니다.

Auto 모드 VPA CR의 예

- 1 1

- 이 VPA CR에서 관리할 워크로드 오브젝트의 유형입니다.

- 2

- 이 VPA CR에서 관리할 워크로드 오브젝트의 이름입니다.

- 3

- 모드를

Auto또는Recreate로 설정합니다.-

Auto. VPA는 Pod 생성 시 리소스 요청을 할당하고 요청된 리소스가 새 권장 사항과 크게 다른 경우 기존 Pod를 종료하여 업데이트합니다. -

Recreate. VPA는 Pod 생성 시 리소스 요청을 할당하고 요청된 리소스가 새 권장 사항과 크게 다른 경우 기존 Pod를 종료하여 업데이트합니다. 이 모드는 리소스 요청이 변경될 때마다 Pod를 재시작해야 하는 경우에만 사용해야 합니다.

-

VPA에서 권장 리소스를 결정하고 권장 사항을 새 Pod에 적용하려면 프로젝트에 작동 중인 Pod가 있어야 합니다.

2.5.3.2. Pod 생성에 VPA 권장 사항 자동 적용

VPA를 사용하여 Pod를 처음 배포할 때만 권장 리소스를 적용하려면 updateMode를 Initial로 설정하여 특정 워크로드 오브젝트에 대한 VPA CR을 생성합니다.

그런 다음 VPA 권장 사항을 사용하려는 워크로드 오브젝트와 연결된 모든 Pod를 수동으로 삭제합니다. Initial 모드에서 VPA는 새 리소스 권장 사항을 확인할 때 Pod를 삭제하지 않고 Pod을 업데이트하지도 않습니다.

Initial 모드 VPA CR의 예

VPA에서 권장 리소스를 결정하고 권장 사항을 새 Pod에 적용하려면 프로젝트에 작동 중인 Pod가 있어야 합니다.

2.5.3.3. VPA 권장 사항 수동 적용

VPA를 권장 CPU 및 메모리 값을 확인하는 데에만 사용하려면 updateMode를 off로 설정하여 특정 워크로드 오브젝트에 대한 VPA CR을 생성합니다.

해당 워크로드 오브젝트에 대한 Pod가 생성되면 VPA는 컨테이너의 CPU 및 메모리 요구 사항을 분석하고 VPA CR의 status 필드에 해당 권장 사항을 기록합니다. VPA는 새 리소스 권장 사항을 확인할 때 Pod를 업데이트하지 않습니다.

Off 모드 VPA CR의 예

다음 명령을 사용하여 권장 사항을 볼 수 있습니다.

oc get vpa <vpa-name> --output yaml

$ oc get vpa <vpa-name> --output yaml권장 사항에 따라 워크로드 오브젝트를 편집하여 CPU 및 메모리 요청을 추가한 다음 권장 리소스를 사용하여 Pod를 삭제하고 재배포할 수 있습니다.

VPA에서 권장 리소스를 결정하려면 프로젝트에 작동 중인 Pod가 있어야 합니다.

2.5.3.4. VPA 권장 사항 적용에서 컨테이너 제외

워크로드 오브젝트에 컨테이너가 여러 개 있고 VPA에서 모든 컨테이너를 평가하고 해당 컨테이너에 대해 작동하지 않도록 하려면 특정 워크로드 오브젝트에 대한 VPA CR을 생성하고 resourcePolicy를 추가하여 특정 컨테이너를 옵트아웃합니다.

VPA에서 권장 리소스를 사용하여 Pod를 업데이트하면 resourcePolicy가 포함된 모든 컨테이너가 업데이트되지 않으며 VPA는 Pod의 해당 컨테이너에 대한 권장 사항을 제공하지 않습니다.

예를 들면 Pod에 다음과 같이 리소스 요청 및 제한이 동일한 두 개의 컨테이너가 있습니다.

backend 컨테이너를 옵트아웃으로 설정하여 VPA CR을 시작하면 VPA에서 Pod를 종료한 후 frontend 컨테이너에만 적용되는 권장 리소스를 사용하여 Pod를 다시 생성합니다.

2.5.4. Vertical Pod Autoscaler Operator 사용

VPA(Vertical Pod Autoscaler Operator) CR(사용자 정의 리소스)을 생성하여 VPA를 사용할 수 있습니다. CR은 VPA에서 해당 Pod에 수행할 작업을 분석하고 결정해야 하는 Pod를 나타냅니다.

프로세스

특정 워크로드 오브젝트에 대한 VPA CR을 생성하려면 다음을 수행합니다.

스케일링할 워크로드 오브젝트가 있는 프로젝트로 변경합니다.

VPA CR YAML 파일을 생성합니다.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 이 VPA에서 관리할 워크로드 오브젝트 유형을 지정합니다.

Deployment,StatefulSet,Job,DaemonSet,ReplicaSet또는ReplicationController. - 2

- 이 VPA에서 관리할 기존 워크로드 오브젝트의 이름을 지정합니다.

- 3

- 다음과 같이 VPA 모드를 지정합니다.

-

auto: 컨트롤러와 연결된 Pod에 권장 리소스를 자동으로 적용합니다. VPA는 기존 Pod를 종료하고 권장 리소스 제한 및 요청을 사용하여 새 Pod를 생성합니다. -

recreate: 워크로드 오브젝트와 연결된 Pod에 권장 리소스를 자동으로 적용합니다. VPA는 기존 Pod를 종료하고 권장 리소스 제한 및 요청을 사용하여 새 Pod를 생성합니다.recreate모드는 리소스 요청이 변경될 때마다 Pod를 재시작해야 하는 경우에만 사용해야 합니다. -

initial: 워크로드 오브젝트와 연결된 Pod가 생성될 때 권장 리소스를 자동으로 적용합니다. VPA는 새 리소스 권장 사항을 확인할 때 Pod를 업데이트하지 않습니다. -

off: 워크로드 오브젝트와 연결된 Pod의 리소스 권장 사항만 생성합니다. VPA는 새 리소스 권장 사항을 확인할 때 Pod를 업데이트하지 않고 해당 권장 사항을 새 Pod에 적용하지도 않습니다.

-

- 4

- 선택 사항입니다. 옵트아웃할 컨테이너를 지정하고 모드를

Off로 설정합니다.

VPA CR을 생성합니다.

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 잠시 후 VPA는 워크로드 오브젝트와 연결된 Pod에서 컨테이너의 리소스 사용량을 확인합니다.

다음 명령을 사용하여 VPA 권장 사항을 볼 수 있습니다.

oc get vpa <vpa-name> --output yaml

$ oc get vpa <vpa-name> --output yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력에는 CPU 및 메모리 요청에 대한 권장 사항이 표시되며 다음과 유사합니다.

출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.5.5. Vertical Pod Autoscaler Operator 설치 제거

OpenShift Container Platform 클러스터에서 VPA(Vertical Pod Autoscaler Operator)를 제거할 수 있습니다. 설치 제거해도 기존 VPA CR에 의해 이미 수정된 Pod의 리소스 요청은 변경되지 않습니다. 새 Pod에서는 모두 Vertical Pod Autoscaler Operator에서 설정한 권장 사항 대신 워크로드 오브젝트에 정의된 리소스를 가져옵니다.

oc delete vpa <vpa-name> 명령을 사용하여 특정 VPA를 제거할 수 있습니다. 리소스 요청에는 수직 Pod 자동 스케일러를 설치 제거할 때와 동일한 작업이 적용됩니다.

VPA Operator를 제거한 후 잠재적인 문제를 방지하려면 Operator와 연결된 다른 구성 요소를 제거하는 것이 좋습니다.

사전 요구 사항

- Vertical Pod Autoscaler Operator를 설치해야 합니다.

프로세스

- OpenShift Container Platform 웹 콘솔에서 Operator → 설치된 Operator를 클릭합니다.

- openshift-vertical-pod-autoscaler 프로젝트로 전환합니다.

- VerticalPodAutoscaler Operator를 찾아 옵션 메뉴를 클릭합니다. Operator 설치 제거를 선택합니다.

- 대화 상자에서 설치 제거를 클릭합니다.

- 선택 사항: Operator와 연결된 모든 피연산자를 제거하려면 대화 상자에서 이 연산자의 모든 피연산자 인스턴스 삭제를 선택합니다.

- 제거를 클릭합니다.

선택 사항: OpenShift CLI를 사용하여 VPA 구성 요소를 제거합니다.

VPA 변경 Webhook 구성을 삭제합니다.

oc delete mutatingwebhookconfigurations/vpa-webhook-config

$ oc delete mutatingwebhookconfigurations/vpa-webhook-configCopy to Clipboard Copied! Toggle word wrap Toggle overflow 모든 VPA 사용자 정의 리소스를 나열합니다.

oc get verticalpodautoscalercheckpoints.autoscaling.k8s.io,verticalpodautoscalercontrollers.autoscaling.openshift.io,verticalpodautoscalers.autoscaling.k8s.io -o wide --all-namespaces

$ oc get verticalpodautoscalercheckpoints.autoscaling.k8s.io,verticalpodautoscalercontrollers.autoscaling.openshift.io,verticalpodautoscalers.autoscaling.k8s.io -o wide --all-namespacesCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 나열된 VPA 사용자 정의 리소스를 삭제합니다. 예를 들면 다음과 같습니다.

oc delete verticalpodautoscalercheckpoint.autoscaling.k8s.io/vpa-recommender-httpd -n my-project

$ oc delete verticalpodautoscalercheckpoint.autoscaling.k8s.io/vpa-recommender-httpd -n my-projectCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc delete verticalpodautoscalercontroller.autoscaling.openshift.io/default -n openshift-vertical-pod-autoscaler

$ oc delete verticalpodautoscalercontroller.autoscaling.openshift.io/default -n openshift-vertical-pod-autoscalerCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc delete verticalpodautoscaler.autoscaling.k8s.io/vpa-recommender -n my-project

$ oc delete verticalpodautoscaler.autoscaling.k8s.io/vpa-recommender -n my-projectCopy to Clipboard Copied! Toggle word wrap Toggle overflow VPA CRD(사용자 정의 리소스 정의)를 나열합니다.

oc get crd

$ oc get crdCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 나열된 VPA CRD를 삭제합니다.

oc delete crd verticalpodautoscalercheckpoints.autoscaling.k8s.io verticalpodautoscalercontrollers.autoscaling.openshift.io verticalpodautoscalers.autoscaling.k8s.io

$ oc delete crd verticalpodautoscalercheckpoints.autoscaling.k8s.io verticalpodautoscalercontrollers.autoscaling.openshift.io verticalpodautoscalers.autoscaling.k8s.ioCopy to Clipboard Copied! Toggle word wrap Toggle overflow CRD를 삭제하면 관련 역할, 클러스터 역할, 역할 바인딩이 제거됩니다. 그러나 수동으로 삭제해야 하는 몇 가지 클러스터 역할이 있을 수 있습니다.

모든 VPA 클러스터 역할을 나열합니다.

oc get clusterrole | grep openshift-vertical-pod-autoscaler

$ oc get clusterrole | grep openshift-vertical-pod-autoscalerCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

openshift-vertical-pod-autoscaler-6896f-admin 2022-02-02T15:29:55Z openshift-vertical-pod-autoscaler-6896f-edit 2022-02-02T15:29:55Z openshift-vertical-pod-autoscaler-6896f-view 2022-02-02T15:29:55Z

openshift-vertical-pod-autoscaler-6896f-admin 2022-02-02T15:29:55Z openshift-vertical-pod-autoscaler-6896f-edit 2022-02-02T15:29:55Z openshift-vertical-pod-autoscaler-6896f-view 2022-02-02T15:29:55ZCopy to Clipboard Copied! Toggle word wrap Toggle overflow 나열된 VPA 클러스터 역할을 삭제합니다. 예를 들면 다음과 같습니다.

oc delete clusterrole openshift-vertical-pod-autoscaler-6896f-admin openshift-vertical-pod-autoscaler-6896f-edit openshift-vertical-pod-autoscaler-6896f-view

$ oc delete clusterrole openshift-vertical-pod-autoscaler-6896f-admin openshift-vertical-pod-autoscaler-6896f-edit openshift-vertical-pod-autoscaler-6896f-viewCopy to Clipboard Copied! Toggle word wrap Toggle overflow VPA Operator를 삭제합니다.

oc delete operator/vertical-pod-autoscaler.openshift-vertical-pod-autoscaler

$ oc delete operator/vertical-pod-autoscaler.openshift-vertical-pod-autoscalerCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.6. Pod에 민감한 데이터 제공

일부 애플리케이션에는 개발자에게 제공하길 원하지 않는 민감한 정보(암호 및 사용자 이름 등)가 필요합니다.

관리자는 Secret 오브젝트를 사용하여 이러한 정보를 명확한 텍스트로 공개하지 않고도 제공할 수 있습니다.

2.6.1. 보안 이해

Secret 오브젝트 유형에서는 암호, OpenShift Container Platform 클라이언트 구성 파일, 개인 소스 리포지토리 자격 증명 등과 같은 중요한 정보를 보유하는 메커니즘을 제공합니다. 보안은 Pod에서 중요한 콘텐츠를 분리합니다. 볼륨 플러그인을 사용하여 컨테이너에 보안을 마운트하거나 시스템에서 보안을 사용하여 Pod 대신 조치를 수행할 수 있습니다.

주요 속성은 다음과 같습니다.

- 보안 데이터는 정의와는 별도로 참조할 수 있습니다.

- 보안 데이터 볼륨은 임시 파일 저장 기능(tmpfs)에 의해 지원되며 노드에 저장되지 않습니다.

- 보안 데이터는 네임스페이스 내에서 공유할 수 있습니다.

YAML Secret 오브젝트 정의

먼저 보안을 생성한 후 해당 보안을 사용하는 Pod를 생성해야 합니다.

보안 생성 시 다음을 수행합니다.

- 보안 데이터를 사용하여 보안 오브젝트를 생성합니다.

- Pod 서비스 계정을 업데이트하여 보안에 대한 참조를 허용합니다.

-

보안을 환경 변수로 사용하거나

secret볼륨을 사용하여 파일로 사용하는 Pod를 생성합니다.

2.6.1.1. 보안 유형

type 필드의 값은 보안의 키 이름과 값의 구조를 나타냅니다. 유형을 사용하면 보안 오브젝트에 사용자 이름과 키를 적용할 수 있습니다. 검증을 수행하지 않으려면 기본값인 opaque 유형을 사용합니다.

보안 데이터에 특정 키 이름이 있는지 확인하기 위해 서버 측 최소 검증을 트리거하려면 다음 유형 중 하나를 지정합니다.

-

kubernetes.io/service-account-token. 서비스 계정 토큰을 사용합니다. -

kubernetes.io/basic-auth. 기본 인증에 사용합니다. -

kubernetes.io/ssh-auth. SSH 키 인증에 사용합니다. -

kubernetes.io/tls. TLS 인증 기관에 사용합니다.

유형을 지정합니다. opaque 검증을 수행하지 않으려면 시크릿에서 키 이름 또는 값에 대한 규칙을 준수하도록 요청하지 않습니다. opaque 보안에는 임의의 값을 포함할 수 있는 비정형 key:value 쌍을 사용할 수 있습니다.

example.com/my-secret-type과 같은 다른 임의의 유형을 지정할 수 있습니다. 이러한 유형은 서버 측에 적용되지 않지만 보안 생성자가 해당 유형의 키/값 요구 사항을 준수하도록 의도했음을 나타냅니다.

다양한 시크릿 유형의 예는 보안 사용의 코드 샘플을 참조하십시오.

2.6.1.2. 보안 데이터 키

보안키는 DNS 하위 도메인에 있어야 합니다.

2.6.2. 보안 생성 방법 이해

관리자는 개발자가 해당 보안을 사용하는 Pod를 생성하기 전에 보안을 생성해야 합니다.

보안 생성 시 다음을 수행합니다.

시크릿을 유지하려는 데이터가 포함된 보안 오브젝트를 생성합니다. 각 시크릿 유형에 필요한 특정 데이터는 다음 섹션에서 확인할 수 있습니다.

불투명 보안을 생성하는 YAML 오브젝트의 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 둘 다 아닌

data또는stringdata필드를 사용합니다.Pod의 서비스 계정을 업데이트하여 보안을 참조합니다.

보안을 사용하는 서비스 계정의 YAML

apiVersion: v1 kind: ServiceAccount ... secrets: - name: test-secret

apiVersion: v1 kind: ServiceAccount ... secrets: - name: test-secretCopy to Clipboard Copied! Toggle word wrap Toggle overflow 보안을 환경 변수로 사용하거나

secret볼륨을 사용하여 파일로 사용하는 Pod를 생성합니다.보안 데이터로 볼륨의 파일을 채우는 Pod의 YAML

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 보안 데이터로 환경 변수를 채우는 Pod의 YAML

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- secret 키를 사용하는 환경 변수를 지정합니다.

보안 데이터로 환경 변수를 채우는 빌드 구성의 YAML

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- secret 키를 사용하는 환경 변수를 지정합니다.

2.6.2.1. 보안 생성 제한 사항

보안을 사용하려면 Pod에서 보안을 참조해야 합니다. 보안은 다음 세 가지 방법으로 Pod에서 사용할 수 있습니다.

- 컨테이너에 환경 변수를 채우기 위해 사용.

- 하나 이상의 컨테이너에 마운트된 볼륨에서 파일로 사용.

- Pod에 대한 이미지를 가져올 때 kubelet으로 사용.

볼륨 유형 보안은 볼륨 메커니즘을 사용하여 데이터를 컨테이너에 파일로 작성합니다. 이미지 가져오기 보안은 서비스 계정을 사용하여 네임스페이스의 모든 Pod에 보안을 자동으로 삽입합니다.

템플릿에 보안 정의가 포함된 경우 템플릿에 제공된 보안을 사용할 수 있는 유일한 방법은 보안 볼륨 소스를 검증하고 지정된 오브젝트 참조가 Secret 오브젝트를 실제로 가리키는 것입니다. 따라서 보안을 생성한 후 해당 보안을 사용하는 Pod를 생성해야 합니다. 가장 효과적인 방법은 서비스 계정을 사용하여 자동으로 삽입되도록 하는 것입니다.

Secret API 오브젝트는 네임스페이스에 있습니다. 동일한 네임스페이스에 있는 Pod만 참조할 수 있습니다.

개별 보안은 1MB로 제한됩니다. 이는 대규모 보안이 생성되어 apiserver 및 kubelet 메모리가 소모되는 것을 막기 위한 것입니다. 그러나 작은 보안을 많이 생성해도 메모리가 소모될 수 있습니다.

2.6.2.2. 불투명 보안 생성

관리자는 임의의 값을 포함할 수 있는 비정형 key:value 쌍을 저장할 수 있는 불투명 보안을 생성할 수 있습니다.

프로세스

컨트롤 플레인 노드의 YAML 파일에

Secret오브젝트를 생성합니다.예를 들면 다음과 같습니다.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 불투명 보안을 지정합니다.

다음 명령을 사용하여

Secret오브젝트를 생성합니다.oc create -f <filename>.yaml

$ oc create -f <filename>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Pod에서 보안을 사용하려면 다음을 수행합니다.

- "보안 생성 방법" 섹션에 표시된 대로 Pod의 서비스 계정을 업데이트하여 보안을 참조합니다.

-

"secret을 보안 생성 방법" 섹션에 설명된 대로 보안을 환경 변수로 사용하거나

secret볼륨을 사용하여 파일로 사용하는 Pod를 생성합니다.

2.6.2.3. 서비스 계정 토큰 시크릿 생성

관리자는 API에 인증해야 하는 애플리케이션에 서비스 계정 토큰을 배포할 수 있는 서비스 계정 토큰 시크릿을 생성할 수 있습니다.

서비스 계정 토큰 시크릿을 사용하는 대신 TokenRequest API를 사용하여 바인딩된 서비스 계정 토큰을 얻는 것이 좋습니다. TokenRequest API에서 얻은 토큰은 바인딩된 수명을 가지며 다른 API 클라이언트에서 읽을 수 없기 때문에 시크릿에 저장된 토큰보다 더 안전합니다.

TokenRequest API를 사용할 수 없고 읽을 수 없는 API 오브젝트에서의 보안 노출이 허용 가능한 경우에만 서비스 계정 토큰 시크릿을 생성해야 합니다.

바인딩된 서비스 계정 토큰 생성에 대한 정보는 다음 추가 리소스 섹션을 참조하십시오.

절차

컨트롤 플레인 노드의 YAML 파일에

Secret오브젝트를 생성합니다.보안

오브젝트의 예:Copy to Clipboard Copied! Toggle word wrap Toggle overflow 다음 명령을 사용하여

Secret오브젝트를 생성합니다.oc create -f <filename>.yaml

$ oc create -f <filename>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Pod에서 보안을 사용하려면 다음을 수행합니다.

- "보안 생성 방법" 섹션에 표시된 대로 Pod의 서비스 계정을 업데이트하여 보안을 참조합니다.

-

"secret을 보안 생성 방법" 섹션에 설명된 대로 보안을 환경 변수로 사용하거나

secret볼륨을 사용하여 파일로 사용하는 Pod를 생성합니다.

2.6.2.4. 기본 인증 보안 생성

관리자는 기본 인증에 필요한 자격 증명을 저장할 수 있는 기본 인증 보안을 생성할 수 있습니다. 이 시크릿 유형을 사용하는 경우 Secret 오브젝트의 data 매개변수에 base64 형식으로 인코딩된 다음 키가 포함되어야 합니다.

-

Username: 인증을 위한 사용자 이름 -

password: 인증이 필요한 암호 또는 토큰

stringData 매개변수를 사용하여 일반 텍스트 콘텐츠를 사용할 수 있습니다.

절차

컨트롤 플레인 노드의 YAML 파일에

Secret오브젝트를 생성합니다.보안 오브젝트

의예Copy to Clipboard Copied! Toggle word wrap Toggle overflow 다음 명령을 사용하여

Secret오브젝트를 생성합니다.oc create -f <filename>.yaml

$ oc create -f <filename>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Pod에서 보안을 사용하려면 다음을 수행합니다.

- "보안 생성 방법" 섹션에 표시된 대로 Pod의 서비스 계정을 업데이트하여 보안을 참조합니다.

-

"secret을 보안 생성 방법" 섹션에 설명된 대로 보안을 환경 변수로 사용하거나

secret볼륨을 사용하여 파일로 사용하는 Pod를 생성합니다.

2.6.2.5. SSH 인증 보안 생성

관리자는 SSH 인증에 사용되는 데이터를 저장할 수 있는 SSH 인증 시크릿을 생성할 수 있습니다. 이 시크릿 유형을 사용하는 경우 Secret 오브젝트의 data 매개변수에 사용할 SSH 자격 증명이 포함되어야 합니다.

절차

컨트롤 플레인 노드의 YAML 파일에

Secret오브젝트를 생성합니다.보안

오브젝트의 예:Copy to Clipboard Copied! Toggle word wrap Toggle overflow 다음 명령을 사용하여

Secret오브젝트를 생성합니다.oc create -f <filename>.yaml

$ oc create -f <filename>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Pod에서 보안을 사용하려면 다음을 수행합니다.

- "보안 생성 방법" 섹션에 표시된 대로 Pod의 서비스 계정을 업데이트하여 보안을 참조합니다.

-

"secret을 보안 생성 방법" 섹션에 설명된 대로 보안을 환경 변수로 사용하거나

secret볼륨을 사용하여 파일로 사용하는 Pod를 생성합니다.

2.6.2.6. Docker 구성 보안 생성

관리자는 컨테이너 이미지 레지스트리에 액세스하기 위한 인증 정보를 저장할 수 있는 Docker 구성 시크릿을 생성할 수 있습니다.

-

kubernetes.io/dockercfg. 이 시크릿 유형을 사용하여 로컬 Docker 구성 파일을 저장합니다.secret오브젝트의data매개변수에 base64 형식으로 인코딩된.dockercfg파일의 콘텐츠가 포함되어야 합니다. -

kubernetes.io/dockerconfigjson. 이 시크릿 유형을 사용하여 로컬 Docker 구성 JSON 파일을 저장합니다.secret오브젝트의data매개변수에 base64 형식으로 인코딩된.docker/config.json파일의 내용이 포함되어야 합니다.

절차

컨트롤 플레인 노드의 YAML 파일에

Secret오브젝트를 생성합니다.Docker 구성 보안 오브젝트

의예Copy to Clipboard Copied! Toggle word wrap Toggle overflow Docker 구성 JSON

시크릿오브젝트의 예Copy to Clipboard Copied! Toggle word wrap Toggle overflow 다음 명령을 사용하여

Secret오브젝트를 생성합니다.oc create -f <filename>.yaml

$ oc create -f <filename>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Pod에서 보안을 사용하려면 다음을 수행합니다.

- "보안 생성 방법" 섹션에 표시된 대로 Pod의 서비스 계정을 업데이트하여 보안을 참조합니다.

-

"secret을 보안 생성 방법" 섹션에 설명된 대로 보안을 환경 변수로 사용하거나

secret볼륨을 사용하여 파일로 사용하는 Pod를 생성합니다.

2.6.3. 보안 업데이트 방법 이해

보안 값을 수정해도 이미 실행 중인 Pod에서 사용하는 값은 동적으로 변경되지 않습니다. 보안을 변경하려면 원래 Pod를 삭제하고 새 Pod를 생성해야 합니다(대개 동일한 PodSpec 사용).

보안 업데이트 작업에서는 새 컨테이너 이미지를 배포하는 것과 동일한 워크플로를 따릅니다. kubectl rolling-update 명령을 사용할 수 있습니다.

보안의 resourceVersion 값은 참조 시 지정되지 않습니다. 따라서 Pod가 시작되는 동시에 보안이 업데이트되는 경우 Pod에 사용되는 보안의 버전이 정의되지 않습니다.

현재는 Pod가 생성될 때 사용된 보안 오브젝트의 리소스 버전을 확인할 수 없습니다. 컨트롤러에서 이전 resourceVersion 을 사용하여 재시작할 수 있도록 Pod에서 이 정보를 보고하도록 계획되어 있습니다. 그동안 기존 보안 데이터를 업데이트하지 말고 고유한 이름으로 새 보안을 생성하십시오.

2.6.4. 보안이 포함된 서명된 인증서 사용 정보

서비스에 대한 통신을 보호하려면 프로젝트의 보안에 추가할 수 있는 서명된 제공 인증서/키 쌍을 생성하도록 OpenShift Container Platform을 구성하면 됩니다.

서비스 제공 인증서 보안은 즉시 사용 가능한 인증서가 필요한 복잡한 미들웨어 애플리케이션을 지원하기 위한 것입니다. 해당 설정은 관리자 툴에서 노드 및 마스터에 대해 생성하는 서버 인증서와 동일합니다.

서비스 Pod 사양은 서비스 제공 인증서 보안에 대해 구성됩니다.

- 1

- 인증서 이름을 지정합니다.

기타 Pod는 해당 Pod에 자동으로 마운트되는 /var/run/secrets/kubernetes.io/serviceaccount/service-ca.crt 파일의 CA 번들을 사용하여 내부 DNS 이름에만 서명되는 클러스터 생성 인증서를 신뢰할 수 있습니다.

이 기능의 서명 알고리즘은 x509.SHA256WithRSA입니다. 직접 교대하려면 생성된 보안을 삭제합니다. 새 인증서가 생성됩니다.

2.6.4.1. 보안과 함께 사용할 서명된 인증서 생성

Pod와 함께 서명된 제공 인증서/키 쌍을 사용하려면 서비스를 생성하거나 편집하여 service.beta.openshift.io/serving-cert-secret-name 주석을 추가한 다음 포드에 보안을 추가합니다.

절차

서비스 제공 인증서 보안을 생성하려면 다음을 수행합니다.

-

서비스에 대한

Pod사양을 편집합니다. 보안에 사용할 이름으로

service.beta.openshift.io/serving-cert-secret-name주석을 추가합니다.Copy to Clipboard Copied! Toggle word wrap Toggle overflow 인증서 및 키는 PEM 형식이며 각각

tls.crt및tls.key에 저장됩니다.서비스를 생성합니다.

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 보안이 생성되었는지 확인합니다.

모든 보안 목록을 확인합니다.

oc get secrets

$ oc get secretsCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

NAME TYPE DATA AGE my-cert kubernetes.io/tls 2 9m

NAME TYPE DATA AGE my-cert kubernetes.io/tls 2 9mCopy to Clipboard Copied! Toggle word wrap Toggle overflow 보안에 대한 세부 정보를 확인합니다.

oc describe secret my-cert

$ oc describe secret my-certCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

해당 보안을 사용하여

Pod사양을 편집합니다.Copy to Clipboard Copied! Toggle word wrap Toggle overflow 사용 가능한 경우 Pod가 실행됩니다. 인증서는 내부 서비스 DNS 이름인

<service.name>.<service.namespace>.svc에 적합합니다.인증서/키 쌍은 만료 시기가 다가오면 자동으로 교체됩니다. 보안의

service.beta.openshift.io/expiry주석에서 RFC3339 형식으로 된 만료 날짜를 확인합니다.참고대부분의 경우 서비스 DNS 이름

<service.name>.<service.namespace>.svc는 외부에서 라우팅할 수 없습니다.<service.name>.<service.namespace>.svc는 주로 클러스터 내 또는 서비스 내 통신과 경로 재암호화에 사용됩니다.

2.6.5. 보안 문제 해결

와 함께 서비스 인증서 생성이 실패하는 경우 (서비스의 service.beta.openshift.io/serving-cert-generation-error 주석에는 다음이 포함됩니다).

secret/ssl-key references serviceUID 62ad25ca-d703-11e6-9d6f-0e9c0057b608, which does not match 77b6dd80-d716-11e6-9d6f-0e9c0057b60

secret/ssl-key references serviceUID 62ad25ca-d703-11e6-9d6f-0e9c0057b608, which does not match 77b6dd80-d716-11e6-9d6f-0e9c0057b60

인증서를 생성한 서비스가 더 이상 존재하지 않거나 serviceUID가 다릅니다. 이전 보안을 제거하고 서비스 service.beta.openshift.io/serving-cert-generation- error,

service.beta.openshift.io/serving-cert-generation-error -num 주석을 지워 인증서를 강제로 다시 생성해야 합니다.

보안을 삭제합니다.

oc delete secret <secret_name>

$ oc delete secret <secret_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow 주석을 지웁니다.

oc annotate service <service_name> service.beta.openshift.io/serving-cert-generation-error-

$ oc annotate service <service_name> service.beta.openshift.io/serving-cert-generation-error-Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc annotate service <service_name> service.beta.openshift.io/serving-cert-generation-error-num-

$ oc annotate service <service_name> service.beta.openshift.io/serving-cert-generation-error-num-Copy to Clipboard Copied! Toggle word wrap Toggle overflow

주석을 제거하는 명령에는 제거할 주석 이름 뒤에 -가 있습니다.

2.7. 구성 맵 생성 및 사용

다음 섹션에서는 구성 맵과 이를 생성하고 사용하는 방법을 정의합니다.

2.7.1. 구성 맵 이해

많은 애플리케이션에서는 구성 파일, 명령줄 인수 및 환경 변수를 조합한 구성이 필요합니다. OpenShift Container Platform에서 컨테이너화된 애플리케이션을 이식하기 위해 이러한 구성 아티팩트는 이미지 콘텐츠와 분리됩니다.

ConfigMap 오브젝트는 컨테이너를 OpenShift Container Platform과 무관하게 유지하면서 구성 데이터를 사용하여 컨테이너를 삽입하는 메커니즘을 제공합니다. 구성 맵은 개별 속성 또는 전체 구성 파일 또는 JSON Blob과 같은 세분화된 정보를 저장하는 데 사용할 수 있습니다.

ConfigMap API 오브젝트에는 Pod에서 사용하거나 컨트롤러와 같은 시스템 구성 요소의 구성 데이터를 저장하는 데 사용할 수 있는 구성 데이터의 키-값 쌍이 있습니다. 예를 들면 다음과 같습니다.

ConfigMap 오브젝트 정의

이미지와 같은 바이너리 파일에서 구성 맵을 생성할 때 binaryData 필드를 사용할 수 있습니다.

다양한 방법으로 Pod에서 구성 데이터를 사용할 수 있습니다. 구성 맵을 다음과 같이 사용할 수 있습니다.

- 컨테이너에서 환경 변수 값 채우기

- 컨테이너에서 명령줄 인수 설정

- 볼륨에 구성 파일 채우기

사용자 및 시스템 구성 요소는 구성 데이터를 구성 맵에 저장할 수 있습니다.

구성 맵은 보안과 유사하지만 민감한 정보가 포함되지 않은 문자열 작업을 더 편리하게 지원하도록 설계되었습니다.

구성 맵 제한 사항

Pod에서 콘텐츠를 사용하기 전에 구성 맵을 생성해야 합니다.

컨트롤러는 누락된 구성 데이터를 허용하도록 작성할 수 있습니다. 상황에 따라 구성 맵을 사용하여 구성된 개별 구성 요소를 참조하십시오.

ConfigMap 오브젝트는 프로젝트에 있습니다.

동일한 프로젝트의 Pod에서만 참조할 수 있습니다.

Kubelet은 API 서버에서 가져오는 Pod에 대한 구성 맵만 지원합니다.

여기에는 CLI를 사용하거나 복제 컨트롤러에서 간접적으로 생성되는 모든 Pod가 포함됩니다. OpenShift Container Platform 노드의 --manifest-url 플래그, --config 플래그 또는 해당 REST API를 사용하여 생성한 Pod를 포함하지 않으며 이는 Pod를 생성하는 일반적인 방법이 아니기 때문입니다.

2.7.2. OpenShift Container Platform 웹 콘솔에서 구성 맵 생성

OpenShift Container Platform 웹 콘솔에서 구성 맵을 생성할 수 있습니다.

절차

클러스터 관리자로 구성 맵을 생성하려면 다음을 수행합니다.

-

관리자 관점에서

Workloads→Config Maps을 선택합니다. - 페이지 오른쪽 상단에서 구성 맵 생성을 선택합니다.

- 구성 맵의 콘텐츠를 입력합니다.

- 생성을 선택합니다.

-

관리자 관점에서

개발자로 구성 맵을 생성하려면 다음을 수행합니다.

-

개발자 관점에서

Config Maps을 선택합니다. - 페이지 오른쪽 상단에서 구성 맵 생성을 선택합니다.

- 구성 맵의 콘텐츠를 입력합니다.

- 생성을 선택합니다.

-

개발자 관점에서

2.7.3. CLI를 사용하여 구성 맵 생성

다음 명령을 사용하여 디렉토리, 특정 파일 또는 리터럴 값에서 구성 맵을 생성할 수 있습니다.

절차

구성 맵 생성:

oc create configmap <configmap_name> [options]

$ oc create configmap <configmap_name> [options]Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.7.3.1. 디렉토리에서 구성 맵 생성

디렉토리에서 구성 맵을 생성할 수 있습니다. 이 방법을 사용하면 디렉토리 내 여러 파일을 사용하여 구성 맵을 생성할 수 있습니다.

절차

다음 예제 절차에서는 디렉토리에서 구성 맵을 생성하는 방법을 간략하게 설명합니다.

구성 맵을 채우려는 데이터가 이미 포함된 일부 파일이 있는 디렉토리로 시작합니다.

ls example-files

$ ls example-filesCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

game.properties ui.properties

game.properties ui.propertiesCopy to Clipboard Copied! Toggle word wrap Toggle overflow cat example-files/game.properties

$ cat example-files/game.propertiesCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow cat example-files/ui.properties

$ cat example-files/ui.propertiesCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

color.good=purple color.bad=yellow allow.textmode=true how.nice.to.look=fairlyNice

color.good=purple color.bad=yellow allow.textmode=true how.nice.to.look=fairlyNiceCopy to Clipboard Copied! Toggle word wrap Toggle overflow 다음 명령을 입력하여 이 디렉토리에 각 파일의 내용을 보관하는 구성 맵을 생성합니다.

oc create configmap game-config \ --from-file=example-files/$ oc create configmap game-config \ --from-file=example-files/Copy to Clipboard Copied! Toggle word wrap Toggle overflow --from-file옵션이 디렉터리를 가리키는 경우 해당 디렉터리의 각 파일은 구성 맵에 키를 채우는 데 사용됩니다. 여기서 키 이름은 파일 이름이고 키의 값은 파일의 내용입니다.예를 들어 이전 명령은 다음 구성 맵을 생성합니다.

oc describe configmaps game-config

$ oc describe configmaps game-configCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 맵의 두 키가 명령에 지정된 디렉토리의 파일 이름에서 생성되는 것을 확인할 수 있습니다. 해당 키의 콘텐츠가 커질 수 있으므로

oc describe의 출력은 키와 크기의 이름만 표시합니다.키 값을 보려면

-o옵션을 사용하여 오브젝트에 대한oc get명령을 입력합니다.oc get configmaps game-config -o yaml

$ oc get configmaps game-config -o yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.7.3.2. 파일에서 구성 맵 생성

파일에서 구성 맵을 생성할 수 있습니다.

절차

다음 예제 절차에서는 파일에서 구성 맵을 생성하는 방법을 간략하게 설명합니다.

파일에서 구성 맵을 생성하는 경우 UTF8이 아닌 데이터를 손상시키지 않고 이 필드에 배치된 UTF8이 아닌 데이터가 포함된 파일을 포함할 수 있습니다. OpenShift Container Platform에서는 바이너리 파일을 감지하고 파일을 MIME로 투명하게 인코딩합니다. 서버에서 MIME 페이로드는 데이터 손상 없이 디코딩되어 저장됩니다.

--from-file 옵션을 CLI에 여러 번 전달할 수 있습니다. 다음 예제에서는 디렉토리 예제에서 생성되는 것과 동일한 결과를 보여줍니다.

특정 파일을 지정하여 구성 맵을 생성합니다.

oc create configmap game-config-2 \ --from-file=example-files/game.properties \ --from-file=example-files/ui.properties$ oc create configmap game-config-2 \ --from-file=example-files/game.properties \ --from-file=example-files/ui.propertiesCopy to Clipboard Copied! Toggle word wrap Toggle overflow 결과 확인:

oc get configmaps game-config-2 -o yaml

$ oc get configmaps game-config-2 -o yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

파일에서 가져온 콘텐츠의 구성 맵에 설정할 키를 지정할 수 있습니다. 이는 key=value 표현식을 --from-file 옵션에 전달하여 설정할 수 있습니다. 예를 들면 다음과 같습니다.

키-값 쌍을 지정하여 구성 맵을 생성합니다.

oc create configmap game-config-3 \ --from-file=game-special-key=example-files/game.properties$ oc create configmap game-config-3 \ --from-file=game-special-key=example-files/game.propertiesCopy to Clipboard Copied! Toggle word wrap Toggle overflow 결과 확인:

oc get configmaps game-config-3 -o yaml

$ oc get configmaps game-config-3 -o yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 이전 단계에서 설정한 키입니다.

2.7.3.3. 리터럴 값에서 구성 맵 생성

구성 맵에 리터럴 값을 제공할 수 있습니다.

절차

--from-literal 옵션은 명령줄에서 직접 리터럴 값을 제공할 수 있는 key=value 구문을 사용합니다.

리터럴 값을 지정하여 구성 맵을 생성합니다.

oc create configmap special-config \ --from-literal=special.how=very \ --from-literal=special.type=charm$ oc create configmap special-config \ --from-literal=special.how=very \ --from-literal=special.type=charmCopy to Clipboard Copied! Toggle word wrap Toggle overflow 결과 확인:

oc get configmaps special-config -o yaml

$ oc get configmaps special-config -o yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

2.7.4. 사용 사례: Pod에서 구성 맵 사용

다음 섹션에서는 Pod에서 ConfigMap 오브젝트를 사용할 때 몇 가지 사용 사례에 대해 설명합니다.

2.7.4.1. 구성 맵을 사용하여 컨테이너에서 환경 변수 채우기

구성 맵은 컨테이너의 개별 환경 변수를 채우거나 유효한 환경 변수 이름을 형성하는 모든 키에서 컨테이너에 있는 환경 변수를 채우는 데 사용할 수 있습니다.

예를 들어 다음 구성 맵을 고려하십시오.

두 개의 환경 변수가 있는 ConfigMap

하나의 환경 변수가 있는 ConfigMap

절차

configMapKeyRef섹션을 사용하여 Pod에서 이ConfigMap의 키를 사용할 수 있습니다.특정 환경 변수를 삽입하도록 구성된 샘플

Pod사양Copy to Clipboard Copied! Toggle word wrap Toggle overflow 이 Pod가 실행되면 Pod 로그에 다음 출력이 포함됩니다.

SPECIAL_LEVEL_KEY=very log_level=INFO

SPECIAL_LEVEL_KEY=very log_level=INFOCopy to Clipboard Copied! Toggle word wrap Toggle overflow

SPECIAL_TYPE_KEY=charm은 예제 출력에 나열되지 않습니다. optional: true가 설정되어 있기 때문입니다.

2.7.4.2. 구성 맵을 사용하여 컨테이너 명령에 대한 명령줄 인수 설정

구성 맵을 사용하여 컨테이너에서 명령 또는 인수 값을 설정할 수도 있습니다. 이는 Kubernetes 대체 구문 $(VAR_NAME)을 사용하여 수행됩니다. 다음 구성 맵을 고려하십시오.

절차

컨테이너의 명령에 값을 삽입하려면 환경 변수 사용 사례에서 ConfigMap을 사용하는 것처럼 환경 변수로 사용할 키를 사용해야 합니다. 그런 다음

$(VAR_NAME)구문을 사용하여 컨테이너의 명령에서 참조할 수 있습니다.특정 환경 변수를 삽입하도록 구성된 샘플

Pod사양Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 환경 변수로 사용할 키를 사용하여 컨테이너의 명령에 값을 삽입합니다.

이 Pod가 실행되면 test-container 컨테이너에서 실행되는 echo 명령의 출력은 다음과 같습니다.

very charm

very charmCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.7.4.3. 구성 맵을 사용하여 볼륨에 콘텐츠 삽입

구성 맵을 사용하여 볼륨에 콘텐츠를 삽입할 수 있습니다.

ConfigMap CR(사용자 정의 리소스)의 예

절차

구성 맵을 사용하여 볼륨에 콘텐츠를 삽입하는 몇 가지 다른 옵션이 있습니다.

구성 맵을 사용하여 콘텐츠를 볼륨에 삽입하는 가장 기본적인 방법은 키가 파일 이름이고 파일의 콘텐츠가 키의 값인 파일로 볼륨을 채우는 것입니다.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 키가 포함된 파일입니다.

이 Pod가 실행되면 cat 명령의 출력은 다음과 같습니다.

very

veryCopy to Clipboard Copied! Toggle word wrap Toggle overflow 구성 맵 키가 프로젝션되는 볼륨 내 경로를 제어할 수도 있습니다.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 구성 맵 키의 경로입니다.

이 Pod가 실행되면 cat 명령의 출력은 다음과 같습니다.

very

veryCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.8. 장치 플러그인을 사용하여 Pod가 있는 외부 리소스에 액세스

장치 플러그인을 사용하면 사용자 정의 코드를 작성하지 않고 OpenShift Container Platform Pod에서 특정 장치 유형(GPU, InfiniBand 또는 벤더별 초기화 및 설정이 필요한 기타 유사한 컴퓨팅 리소스)을 사용할 수 있습니다.

2.8.1. 장치 플러그인 이해

장치 플러그인은 클러스터 전체에서 하드웨어 장치를 소비할 수 있는 일관되고 이식 가능한 솔루션을 제공합니다. 장치 플러그인은 확장 메커니즘을 통해 이러한 장치를 지원하여 컨테이너에서 이러한 장치를 사용할 수있게 하고 장치의 상태 점검을 제공하며 안전하게 공유합니다.

OpenShift Container Platform은 장치 플러그인 API를 지원하지만 장치 플러그인 컨테이너는 개별 공급 업체에 의해 지원됩니다.

장치 플러그인은 특정 하드웨어 리소스를 관리하는 노드 (kubelet 외부)에서 실행되는 gRPC 서비스입니다. 모든 장치 플러그인은 다음 원격 프로 시저 호출 (RPC)을 지원해야합니다.

장치 플러그인의 예

간편한 장치 플러그인 참조 구현을 위해 장치 관리자 (Device Manager) 코드에 vendor/k8s.io/kubernetes/pkg/kubelet/cm/deviceplugin/device_plugin_stub.go 스텁 장치 플러그인을 사용할 수 있습니다.

2.8.1.1. 장치 플러그인을 배포하는 방법

- 장치 플러그인 배포에는 데몬 세트 접근 방식을 사용하는 것이 좋습니다.

- 시작할 때 장치 플러그인은 노드의 /var/lib/kubelet/device-plugin/에 UNIX 도메인 소켓을 만들어 장치 관리자의 RPC를 제공하려고 합니다.

- 장치 플러그인은 하드웨어 리소스, 호스트 파일 시스템에 대한 액세스 및 소켓 생성을 관리해야 하므로 권한이 부여된 보안 컨텍스트에서 실행해야합니다.

- 배포 단계에 대한 보다 구체적인 세부 사항은 각 장치 플러그인 구현에서 확인할 수 있습니다.

2.8.2. 장치 관리자 이해

장치 관리자(Device Manager)는 장치 플러그인이라는 플러그인을 사용하여 특수 노드 하드웨어 리소스를 알리기 위한 메커니즘을 제공합니다.

업스트림 코드 변경없이 특수 하드웨어를 공개할 수 있습니다.

OpenShift Container Platform은 장치 플러그인 API를 지원하지만 장치 플러그인 컨테이너는 개별 공급 업체에 의해 지원됩니다.

장치 관리자는 장치를 확장 리소스(Extended Resources)으로 공개합니다. 사용자 pod는 다른 확장 리소스 를 요청하는 데 사용되는 동일한 제한/요청 메커니즘을 사용하여 장치 관리자에 의해 공개된 장치를 사용할 수 있습니다.

시작시 장치 플러그인은 /var/lib/kubelet/device-plugins/kubelet.sock에서에서 Register를 호출하는 장치 관리자에 직접 등록하고 장치 관리자의 요구를 제공하기 위해 /var/lib/kubelet/device-plugins/<plugin>.sock에서 gRPC 서비스를 시작합니다.

장치 관리자는 새 등록 요청을 처리하는 동안 장치 플러그인 서비스에서 ListAndWatch 원격 프로 시저 호출 (RPC)을 시작합니다. 이에 대한 응답으로 장치 관리자는 플러그인에서 gRPC 스트림을 통해 장치 오브젝트 목록을 가져옵니다. 장치 관리자는 플러그인에서 새 업데이트의 유무에 대해 스트림을 모니터링합니다. 플러그인 측에서도 플러그인은 스트림을 열린 상태로 유지하고 장치 상태가 변경될 때마다 동일한 스트리밍 연결을 통해 새 장치 목록이 장치 관리자로 전송됩니다.

새로운 pod 승인 요청을 처리하는 동안 Kubelet은 장치 할당을 위해 요청된 Extended Resources를 장치 관리자에게 전달합니다. 장치 관리자는 데이터베이스에서 해당 플러그인이 존재하는지 확인합니다. 플러그인이 존재하고 로컬 캐시로 할당 가능한 디바이스가 있는 경우 Allocate RPC가 특정 디바이스 플러그인에서 호출됩니다.

또한 장치 플러그인은 드라이버 설치, 장치 초기화 및 장치 재설정과 같은 몇 가지 다른 장치 별 작업을 수행할 수 있습니다. 이러한 기능은 구현마다 다릅니다.

2.8.3. 장치 관리자 활성화

장치 관리자는 장치 플러그인을 구현하여 업스트림 코드 변경없이 특수 하드웨어를 사용할 수 있습니다.

장치 관리자(Device Manager)는 장치 플러그인이라는 플러그인을 사용하여 특수 노드 하드웨어 리소스를 알리기 위한 메커니즘을 제공합니다.

구성하려는 노드 유형의 정적

MachineConfigPoolCRD와 연관된 라벨을 가져옵니다. 다음 중 하나를 실행합니다.Machine config를 표시합니다:

oc describe machineconfig <name>

# oc describe machineconfig <name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow 예를 들면 다음과 같습니다.

oc describe machineconfig 00-worker

# oc describe machineconfig 00-workerCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

Name: 00-worker Namespace: Labels: machineconfiguration.openshift.io/role=worker

Name: 00-worker Namespace: Labels: machineconfiguration.openshift.io/role=worker1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 장치 관리자에 필요한 라벨입니다.

프로세스

구성 변경을 위한 사용자 정의 리소스 (CR)를 만듭니다.

장치 관리자 CR의 설정 예

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 장치 관리자를 만듭니다.

oc create -f devicemgr.yaml

$ oc create -f devicemgr.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

kubeletconfig.machineconfiguration.openshift.io/devicemgr created

kubeletconfig.machineconfiguration.openshift.io/devicemgr createdCopy to Clipboard Copied! Toggle word wrap Toggle overflow - 노드에서 /var/lib/kubelet/device-plugins/kubelet.sock이 작성되었는지 확인하여 장치 관리자가 실제로 사용 가능한지 확인합니다. 이는 장치 관리자의 gRPC 서버가 새 플러그인 등록을 수신하는 UNIX 도메인 소켓입니다. 이 소켓 파일은 장치 관리자가 활성화된 경우에만 Kubelet을 시작할 때 생성됩니다.

2.9. Pod 예약 결정에 Pod 우선순위 포함

클러스터에서 Pod 우선순위 및 선점을 활성화할 수 있습니다. Pod 우선순위는 다른 Pod와 관련된 Pod의 중요성을 나타내며 해당 우선순위를 기반으로 Pod를 대기열에 넣습니다. Pod 선점을 통해 클러스터에서 우선순위가 낮은 Pod를 제거하거나 선점할 수 있으므로 적절한 노드 Pod 우선순위에 사용 가능한 공간이 없는 경우 우선순위가 높은 Pod를 예약할 수 있습니다. Pod Pod 우선순위는 Pod의 스케줄링 순서에도 영향을 미치지 않습니다.

우선순위 및 선점 기능을 사용하려면 Pod의 상대적 가중치를 정의하는 우선순위 클래스를 생성합니다. 그런 다음 Pod 사양의 우선순위 클래스를 참조하여 예약에 해당 값을 적용합니다.

2.9.1. Pod 우선순위 이해

Pod 우선순위 및 선점 기능을 사용하면 스케줄러에서 보류 중인 Pod를 우선순위에 따라 정렬하고, 보류 중인 Pod는 예약 큐에서 우선순위가 더 낮은 다른 대기 중인 Pod보다 앞에 배치됩니다. 그 결과 예약 요구 사항이 충족되는 경우 우선순위가 높은 Pod가 우선순위가 낮은 Pod보다 더 빨리 예약될 수 있습니다. Pod를 예약할 수 없는 경우에는 스케줄러에서 우선순위가 낮은 다른 Pod를 계속 예약합니다.

2.9.1.1. Pod 우선순위 클래스

네임스페이스가 지정되지 않은 오브젝트로서 이름에서 우선순위 정수 값으로의 매핑을 정의하는 우선순위 클래스를 Pod에 할당할 수 있습니다. 값이 클수록 우선순위가 높습니다.

우선순위 클래스 오브젝트에는 1000000000(10억)보다 작거나 같은 32비트 정수 값을 사용할 수 있습니다. 선점하거나 제거해서는 안 되는 중요한 Pod의 경우 10억보다 큰 숫자를 예약합니다. 기본적으로 OpenShift Container Platform에는 중요한 시스템 Pod의 예약을 보장하기 위해 우선순위 클래스가 2개 예약되어 있습니다.

oc get priorityclasses

$ oc get priorityclasses출력 예

NAME VALUE GLOBAL-DEFAULT AGE cluster-logging 1000000 false 29s system-cluster-critical 2000000000 false 72m system-node-critical 2000001000 false 72m

NAME VALUE GLOBAL-DEFAULT AGE

cluster-logging 1000000 false 29s

system-cluster-critical 2000000000 false 72m

system-node-critical 2000001000 false 72msystem-node-critical - 이 우선순위 클래스의 값은 2000001000이며 노드에서 제거해서는 안 되는 모든 Pod에 사용합니다. 이 우선순위 클래스가 있는 Pod의 예로는

sdn-ovs,sdn등이 있습니다. 대다수의 중요한 구성 요소에는 기본적으로system-node-critical우선순위 클래스가 포함됩니다. 예를 들면 다음과 같습니다.- master-api

- master-controller

- master-etcd

- sdn

- sdn-ovs

- sync

system-cluster-critical - 이 우선순위 클래스의 값은 2000000000(10억)이며 클러스터에 중요한 Pod에 사용합니다. 이 우선순위 클래스가 있는 Pod는 특정 상황에서 노드에서 제거할 수 있습니다. 예를 들어

system-node-critical우선순위 클래스를 사용하여 구성한 Pod가 우선할 수 있습니다. 그러나 이 우선순위 클래스는 예약을 보장합니다. 이 우선순위 클래스를 사용할 수 있는 Pod의 예로는 fluentd, Descheduler와 같은 추가 기능 구성 요소 등이 있습니다. 대다수의 중요한 구성 요소에는 기본적으로system-cluster-critical우선순위 클래스가 포함됩니다. 예를 들면 다음과 같습니다.- fluentd

- metrics-server

- Descheduler

- cluster-logging - 이 우선순위는 Fluentd에서 Fluentd Pod가 다른 앱보다 먼저 노드에 예약되도록 하는 데 사용합니다.

2.9.1.2. Pod 우선순위 이름

우선순위 클래스가 한 개 이상 있으면 Pod 사양에서 우선순위 클래스 이름을 지정하는 Pod를 생성할 수 있습니다. 우선순위 승인 컨트롤러는 우선순위 클래스 이름 필드를 사용하여 정수 값으로 된 우선순위를 채웁니다. 이름이 지정된 우선순위 클래스가 없는 경우 Pod가 거부됩니다.

2.9.2. Pod 선점 이해

개발자가 Pod를 생성하면 Pod가 큐로 들어갑니다. 개발자가 Pod에 Pod 우선순위 또는 선점을 구성한 경우 스케줄러는 큐에서 Pod를 선택하고 해당 Pod를 노드에 예약하려고 합니다. 스케줄러가 노드에서 Pod의 지정된 요구 사항을 모두 충족하는 적절한 공간을 찾을 수 없는 경우 보류 중인 Pod에 대한 선점 논리가 트리거됩니다.

스케줄러가 노드에서 Pod를 하나 이상 선점하면 우선순위가 높은 Pod 사양의 nominatedNodeName 필드가 nodename 필드와 함께 노드의 이름으로 설정됩니다. 스케줄러는 nominatedNodeName 필드를 사용하여 Pod용으로 예약된 리소스를 계속 추적하고 클러스터의 선점에 대한 정보도 사용자에게 제공합니다.

스케줄러에서 우선순위가 낮은 Pod를 선점한 후에는 Pod의 정상 종료 기간을 따릅니다. 스케줄러에서 우선순위가 낮은 Pod가 종료되기를 기다리는 동안 다른 노드를 사용할 수 있게 되는 경우 스케줄러는 해당 노드에서 우선순위가 더 높은 Pod를 예약할 수 있습니다. 따라서 Pod 사양의 nominatedNodeName 필드 및 nodeName 필드가 다를 수 있습니다.

또한 스케줄러가 노드의 Pod를 선점하고 종료되기를 기다리고 있는 상태에서 보류 중인 Pod보다 우선순위가 높은 Pod를 예약해야 하는 경우, 스케줄러는 우선순위가 더 높은 Pod를 대신 예약할 수 있습니다. 이러한 경우 스케줄러는 보류 중인 Pod의 nominatedNodeName을 지워 해당 Pod를 다른 노드에 사용할 수 있도록 합니다.

선점을 수행해도 우선순위가 낮은 Pod가 노드에서 모두 제거되는 것은 아닙니다. 스케줄러는 우선순위가 낮은 Pod의 일부를 제거하여 보류 중인 Pod를 예약할 수 있습니다.

스케줄러는 노드에서 보류 중인 Pod를 예약할 수 있는 경우에만 해당 노드에서 Pod 선점을 고려합니다.

2.9.2.1. Pod 선점 및 기타 스케줄러 설정

Pod 우선순위 및 선점 기능을 활성화하는 경우 다른 스케줄러 설정을 고려하십시오.

- Pod 우선순위 및 Pod 중단 예산

- Pod 중단 예산은 동시에 작동해야 하는 최소 복제본 수 또는 백분율을 지정합니다. Pod 중단 예산을 지정하면 Pod를 최적의 노력 수준에서 선점할 때 OpenShift Container Platform에서 해당 예산을 준수합니다. 스케줄러는 Pod 중단 예산을 위반하지 않고 Pod를 선점하려고 합니다. 이러한 Pod를 찾을 수 없는 경우 Pod 중단 예산 요구 사항과 관계없이 우선순위가 낮은 Pod를 선점할 수 있습니다.

- Pod 우선순위 및 Pod 유사성

- Pod 유사성을 위해서는 동일한 라벨이 있는 다른 Pod와 같은 노드에서 새 Pod를 예약해야 합니다.

노드에서 우선순위가 낮은 하나 이상의 Pod와 보류 중인 Pod에 Pod 간 유사성이 있는 경우 스케줄러는 선호도 요구 사항을 위반하지 않고 우선순위가 낮은 Pod를 선점할 수 없습니다. 이 경우 스케줄러는 보류 중인 Pod를 예약할 다른 노드를 찾습니다. 그러나 스케줄러에서 적절한 노드를 찾을 수 있다는 보장이 없고 보류 중인 Pod가 예약되지 않을 수 있습니다.

이러한 상황을 방지하려면 우선순위가 같은 Pod를 사용하여 Pod 유사성을 신중하게 구성합니다.

2.9.2.2. 선점된 Pod의 정상 종료

Pod를 선점할 때 스케줄러는 Pod의 정상 종료 기간이 만료될 때까지 대기하여 Pod가 작동을 완료하고 종료할 수 있도록 합니다. 기간이 지난 후에도 Pod가 종료되지 않으면 스케줄러에서 Pod를 종료합니다. 이러한 정상 종료 기간으로 인해 스케줄러에서 Pod를 선점하는 시점과 노드에서 보류 중인 Pod를 예약할 수 있는 시간 사이에 시차가 발생합니다.

이 간격을 최소화하려면 우선순위가 낮은 Pod의 정상 종료 기간을 짧게 구성하십시오.

2.9.3. 우선순위 및 선점 구성

우선순위 클래스 오브젝트를 생성하고 Pod 사양에 priorityClassName을 사용하여 Pod를 우선순위에 연결하여 우선순위 및 선점을 적용합니다.

우선순위 클래스 오브젝트 샘플

- 1

- 우선순위 클래스 오브젝트의 이름입니다.

- 2

- 오브젝트의 우선순위 값입니다.

- 3

- 우선순위 클래스 이름이 지정되지 않은 Pod에 이 우선순위 클래스를 사용해야 하는지의 여부를 나타내는 선택적 필드입니다. 이 필드는 기본적으로

false입니다.globalDefault가true로 설정된 하나의 우선순위 클래스만 클러스터에 존재할 수 있습니다.globalDefault:true가 설정된 우선순위 클래스가 없는 경우 우선순위 클래스 이름이 없는 Pod의 우선순위는 0입니다.globalDefault:true를 사용하여 우선순위 클래스를 추가하면 우선순위 클래스를 추가한 후 생성된 Pod에만 영향을 미치고 기존 Pod의 우선순위는 변경되지 않습니다. - 4

- 개발자가 이 우선순위 클래스와 함께 사용해야 하는 Pod를 설명하는 임의의 텍스트 문자열(선택 사항)입니다.

프로세스

우선순위 및 선점을 사용하도록 클러스터를 구성하려면 다음을 수행합니다.

우선순위 클래스를 한 개 이상 생성합니다.

- 우선순위의 이름과 값을 지정합니다.

-

필요한 경우 우선순위 클래스 및 설명에

globalDefault필드를 지정합니다.

Pod사양을 생성하거나 다음과 같이 우선순위 클래스의 이름을 포함하도록 기존 Pod를 편집합니다.우선순위 클래스 이름이 있는 샘플

Pod사양Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 이 Pod에 사용할 우선순위 클래스를 지정합니다.

Pod를 생성합니다.

oc create -f <file-name>.yaml

$ oc create -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Pod 구성 또는 Pod 템플릿에 우선순위 이름을 직접 추가할 수 있습니다.

2.10. 노드 선택기를 사용하여 특정 노드에 Pod 배치

노드 선택기는 키-값 쌍으로 구성된 맵을 지정합니다. 규칙은 노드의 사용자 정의 라벨과 Pod에 지정된 선택기를 사용하여 정의합니다.

Pod를 노드에서 실행하려면 Pod에 노드의 라벨로 표시된 키-값 쌍이 있어야 합니다.

동일한 Pod 구성에서 노드 유사성 및 노드 선택기를 사용하는 경우 아래의 중요 고려 사항을 참조하십시오.

2.10.1. 노드 선택기를 사용하여 Pod 배치 제어

Pod의 노드 선택기와 노드의 라벨을 사용하여 Pod가 예약되는 위치를 제어할 수 있습니다. 노드 선택기를 사용하면 OpenShift Container Platform에서 일치하는 라벨이 포함된 노드에 Pod를 예약합니다.

노드, 머신 세트 또는 머신 구성에 라벨을 추가합니다. 머신 세트에 라벨을 추가하면 노드 또는 머신이 중단되는 경우 새 노드에 라벨이 지정됩니다. 노드 또는 머신이 중단된 경우 노드 또는 머신 구성에 추가된 라벨이 유지되지 않습니다.

기존 Pod에 노드 선택기를 추가하려면 ReplicaSet 오브젝트, DaemonSet 오브젝트, StatefulSet 오브젝트, Deployment 오브젝트 또는 DeploymentConfig 오브젝트와 같이 해당 Pod의 제어 오브젝트에 노드 선택기를 추가합니다. 이 제어 오브젝트 아래의 기존 Pod는 라벨이 일치하는 노드에서 재생성됩니다. 새 Pod를 생성하는 경우 Pod 사양에 노드 선택기를 직접 추가할 수 있습니다.

예약된 기존 Pod에 노드 선택기를 직접 추가할 수 없습니다.

사전 요구 사항

기존 Pod에 노드 선택기를 추가하려면 해당 Pod의 제어 오브젝트를 결정하십시오. 예를 들어 router-default-66d5cf9464-m2g75 Pod는 router-default-66d5cf9464 복제본 세트에서 제어합니다.

웹 콘솔에서 Pod YAML의 ownerReferences 아래에 제어 오브젝트가 나열됩니다.

절차

머신 세트를 사용하거나 노드를 직접 편집하여 노드에 라벨을 추가합니다.

노드를 생성할 때 머신 세트에서 관리하는 노드에 라벨을 추가하려면

MachineSet오브젝트를 사용합니다.다음 명령을 실행하여

MachineSet오브젝트에 라벨을 추가합니다.oc patch MachineSet <name> --type='json' -p='[{"op":"add","path":"/spec/template/spec/metadata/labels", "value":{"<key>"="<value>","<key>"="<value>"}}]' -n openshift-machine-api$ oc patch MachineSet <name> --type='json' -p='[{"op":"add","path":"/spec/template/spec/metadata/labels", "value":{"<key>"="<value>","<key>"="<value>"}}]' -n openshift-machine-apiCopy to Clipboard Copied! Toggle word wrap Toggle overflow 예를 들면 다음과 같습니다.

oc patch MachineSet abc612-msrtw-worker-us-east-1c --type='json' -p='[{"op":"add","path":"/spec/template/spec/metadata/labels", "value":{"type":"user-node","region":"east"}}]' -n openshift-machine-api$ oc patch MachineSet abc612-msrtw-worker-us-east-1c --type='json' -p='[{"op":"add","path":"/spec/template/spec/metadata/labels", "value":{"type":"user-node","region":"east"}}]' -n openshift-machine-apiCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc edit명령을 사용하여 라벨이MachineSet오브젝트에 추가되었는지 확인합니다.예를 들면 다음과 같습니다.

oc edit MachineSet abc612-msrtw-worker-us-east-1c -n openshift-machine-api

$ oc edit MachineSet abc612-msrtw-worker-us-east-1c -n openshift-machine-apiCopy to Clipboard Copied! Toggle word wrap Toggle overflow MachineSet오브젝트의 예Copy to Clipboard Copied! Toggle word wrap Toggle overflow

라벨을 노드에 직접 추가합니다.

노드의

Node오브젝트를 편집합니다.oc label nodes <name> <key>=<value>

$ oc label nodes <name> <key>=<value>Copy to Clipboard Copied! Toggle word wrap Toggle overflow 예를 들어 노드에 라벨을 지정하려면 다음을 수행합니다.

oc label nodes ip-10-0-142-25.ec2.internal type=user-node region=east

$ oc label nodes ip-10-0-142-25.ec2.internal type=user-node region=eastCopy to Clipboard Copied! Toggle word wrap Toggle overflow 라벨이 노드에 추가되었는지 확인합니다.

oc get nodes -l type=user-node,region=east

$ oc get nodes -l type=user-node,region=eastCopy to Clipboard Copied! Toggle word wrap Toggle overflow 출력 예

NAME STATUS ROLES AGE VERSION ip-10-0-142-25.ec2.internal Ready worker 17m v1.18.3+002a51f

NAME STATUS ROLES AGE VERSION ip-10-0-142-25.ec2.internal Ready worker 17m v1.18.3+002a51fCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Pod에 일치하는 노드 선택기를 추가합니다.

기존 및 향후 Pod에 노드 선택기를 추가하려면 Pod의 제어 오브젝트에 노드 선택기를 추가합니다.

라벨이 있는

ReplicaSet오브젝트의 예Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- 노드 선택기를 추가합니다.

특정 새 Pod에 노드 선택기를 추가하려면 선택기를

Pod오브젝트에 직접 추가합니다.노드 선택기가 있는

Pod오브젝트의 예Copy to Clipboard Copied! Toggle word wrap Toggle overflow 참고예약된 기존 Pod에 노드 선택기를 직접 추가할 수 없습니다.

3장. 노드에 대한 Pod 배치 제어(예약)

3.1. 스케줄러를 사용하여 Pod 배치 제어

Pod 예약은 클러스터 내 노드에 대한 새 Pod 배치를 결정하는 내부 프로세스입니다.

스케줄러 코드는 새 Pod가 생성될 때 해당 Pod를 감시하고 이를 호스팅하는 데 가장 적합한 노드를 확인할 수 있도록 깔끔하게 분리되어 있습니다. 그런 다음 마스터 API를 사용하여 Pod에 대한 바인딩(Pod와 노드의 바인딩)을 생성합니다.

- 기본 Pod 예약

- OpenShift Container Platform에는 대부분의 사용자 요구 사항을 충족하는 기본 스케줄러가 제공됩니다. 기본 스케줄러는 고유 툴과 사용자 정의 툴을 모두 사용하여 Pod에 가장 적합한 항목을 결정합니다.

- 고급 Pod 예약

새 Pod가 배치되는 위치를 추가로 제어해야 하는 상황에서는 OpenShift Container Platform 고급 예약 기능을 사용하여 Pod를 요청하거나 특정 노드에서 또는 특정 Pod와 함께 실행하도록 하는 기본 설정을 포함하도록 Pod를 구성할 수 있습니다.

- Pod 유사성 및 유사성 방지 규칙 사용

- Pod 유사성을 사용하여 Pod 배치 제어