Migration Toolkit for Containers

Migrating to OpenShift Container Platform 4

Abstract

Chapter 1. Migrating from OpenShift Container Platform 3

1.1. About migrating OpenShift Container Platform 3 to 4

OpenShift Container Platform 4 includes new technologies and functionality that result in a cluster that is self-managing, flexible, and automated. The way that OpenShift Container Platform 4 clusters are deployed and managed differs drastically from OpenShift Container Platform 3.

To successfully transition from OpenShift Container Platform 3 to OpenShift Container Platform 4, it is important that you review the following information:

- Planning your transition

  Learn about the differences between OpenShift Container Platform versions 3 and 4. Prior to transitioning, be sure that you have reviewed and prepared for storage, networking, logging, security, and monitoring considerations.

- Performing your migration

  Learn about and use Migration Toolkit for Containers (MTC) to migrate your application workloads.

1.2. Planning your migration

Before performing your migration to OpenShift Container Platform 4.5, it is important to take the time to properly plan for the transition. OpenShift Container Platform 4 introduces architectural changes and enhancements, so the procedures that you used to manage your OpenShift Container Platform 3 cluster might not apply for OpenShift Container Platform 4.

This planning document assumes that you are transitioning from OpenShift Container Platform 3.11 to OpenShift Container Platform 4.5.

This document provides high-level information on the most important differences between OpenShift Container Platform 3 and OpenShift Container Platform 4 and the most noteworthy migration considerations. For detailed information on configuring your OpenShift Container Platform 4 cluster, review the appropriate sections of the OpenShift Container Platform documentation. For detailed information on new features and other notable technical changes, review the OpenShift Container Platform 4.5 release notes.

It is not possible to upgrade your existing OpenShift Container Platform 3 cluster to OpenShift Container Platform 4. You must start with a new OpenShift Container Platform 4 installation. Tools are available to assist in migrating your control plane settings and application workloads.

1.2.1. Comparing OpenShift Container Platform 3 and OpenShift Container Platform 4

With OpenShift Container Platform 3, administrators individually deployed Red Hat Enterprise Linux (RHEL) hosts, and then installed OpenShift Container Platform on top of these hosts to form a cluster. Administrators were responsible for properly configuring these hosts and performing updates.

OpenShift Container Platform 4 represents a significant change in the way that OpenShift Container Platform clusters are deployed and managed. OpenShift Container Platform 4 includes new technologies and functionality, such as Operators, machine sets, and Red Hat Enterprise Linux CoreOS (RHCOS), which are core to the operation of the cluster. This technology shift enables clusters to self-manage some functions previously performed by administrators. This also ensures platform stability and consistency, and simplifies installation and scaling.

For more information, see OpenShift Container Platform architecture.

1.2.1.1. Architecture differences

Immutable infrastructure

OpenShift Container Platform 4 uses Red Hat Enterprise Linux CoreOS (RHCOS), which is designed to run containerized applications, and provides efficient installation, Operator-based management, and simplified upgrades. RHCOS is an immutable container host, rather than a customizable operating system like RHEL. RHCOS enables OpenShift Container Platform 4 to manage and automate the deployment of the underlying container host. RHCOS is a part of OpenShift Container Platform, which means that everything runs inside a container and is deployed using OpenShift Container Platform.

In OpenShift Container Platform 4, control plane nodes must run RHCOS, ensuring that full-stack automation is maintained for the control plane. This makes rolling out updates and upgrades a much easier process than in OpenShift Container Platform 3.

For more information, see Red Hat Enterprise Linux CoreOS (RHCOS).

Operators

Operators are a method of packaging, deploying, and managing a Kubernetes application. Operators ease the operational complexity of running another piece of software. They watch over your environment and use the current state to make decisions in real time. Advanced Operators are designed to upgrade and react to failures automatically.

For more information, see Understanding Operators.

1.2.1.2. Installation and update differences

Installation process

To install OpenShift Container Platform 3.11, you prepared your Red Hat Enterprise Linux (RHEL) hosts, set all of the configuration values your cluster needed, and then ran an Ansible playbook to install and set up your cluster.

In OpenShift Container Platform 4.5, you use the OpenShift installation program to create a minimum set of resources required for a cluster. Once the cluster is running, you use Operators to further configure your cluster and to install new services. After first boot, Red Hat Enterprise Linux CoreOS (RHCOS) systems are managed by the Machine Config Operator (MCO) that runs in the OpenShift Container Platform cluster.

For more information, see Installation process.

If you want to add Red Hat Enterprise Linux (RHEL) worker machines to your OpenShift Container Platform 4.5 cluster, you use an Ansible playbook to join the RHEL worker machines after the cluster is running. For more information, see Adding RHEL compute machines to an OpenShift Container Platform cluster.

Infrastructure options

In OpenShift Container Platform 3.11, you installed your cluster on infrastructure that you prepared and maintained. In addition to providing your own infrastructure, OpenShift Container Platform 4 offers an option to deploy a cluster on infrastructure that the OpenShift Container Platform installation program provisions and the cluster maintains.

For more information, see OpenShift Container Platform installation overview.

Upgrading your cluster

In OpenShift Container Platform 3.11, you upgraded your cluster by running Ansible playbooks. In OpenShift Container Platform 4.5, the cluster manages its own updates, including updates to Red Hat Enterprise Linux CoreOS (RHCOS) on cluster nodes. You can easily upgrade your cluster by using the web console or by using the oc adm upgrade command from the OpenShift CLI and the Operators will automatically upgrade themselves. If your OpenShift Container Platform 4.5 cluster has RHEL worker machines, then you will still need to run an Ansible playbook to upgrade those worker machines.

For more information, see Updating clusters.

1.2.2. Migration considerations

Review the changes and other considerations that might affect your transition from OpenShift Container Platform 3.11 to OpenShift Container Platform 4.

1.2.2.1. Storage considerations

Review the following storage changes to consider when transitioning from OpenShift Container Platform 3.11 to OpenShift Container Platform 4.5.

Local volume persistent storage

In OpenShift Container Platform 4.5, local storage is supported only through the Local Storage Operator. The local provisioner method from OpenShift Container Platform 3.11 is not supported.

For more information, see Persistent storage using local volumes.

FlexVolume persistent storage

The FlexVolume plug-in location changed from OpenShift Container Platform 3.11. The new location in OpenShift Container Platform 4.5 is /etc/kubernetes/kubelet-plugins/volume/exec. Attachable FlexVolume plug-ins are no longer supported.

For more information, see Persistent storage using FlexVolume.

Container Storage Interface (CSI) persistent storage

Persistent storage using the Container Storage Interface (CSI) was Technology Preview in OpenShift Container Platform 3.11. OpenShift Container Platform 4.5 fully supports CSI version 1.1.0 and ships with several CSI drivers. You can also install your own driver.

For more information, see Persistent storage using the Container Storage Interface (CSI).

Red Hat OpenShift Container Storage

Red Hat OpenShift Container Storage 3, which is available for use with OpenShift Container Platform 3.11, uses Red Hat Gluster Storage as the backing storage.

Red Hat OpenShift Container Storage 4, which is available for use with OpenShift Container Platform 4, uses Red Hat Ceph Storage as the backing storage.

For more information, see Persistent storage using Red Hat OpenShift Container Storage and the interoperability matrix article.

Unsupported persistent storage options

Support for the following persistent storage options from OpenShift Container Platform 3.11 has changed in OpenShift Container Platform 4.5:

- GlusterFS is no longer supported.

- CephFS as a standalone product is no longer supported.

- Ceph RBD as a standalone product is no longer supported.

If you used one of these in OpenShift Container Platform 3.11, you must choose a different persistent storage option for full support in OpenShift Container Platform 4.5.

For more information, see Understanding persistent storage.

1.2.2.2. Networking considerations

Review the following networking changes to consider when transitioning from OpenShift Container Platform 3.11 to OpenShift Container Platform 4.5.

Network isolation mode

The default network isolation mode for OpenShift Container Platform 3.11 was ovs-subnet, though users frequently switched to ovs-multitenant. The default network isolation mode for OpenShift Container Platform 4.5 is controlled by a network policy.

If your OpenShift Container Platform 3.11 cluster used the ovs-subnet or ovs-multitenant mode, it is recommended to switch to a network policy for your OpenShift Container Platform 4.5 cluster. Network policies are supported upstream, are more flexible, and they provide the functionality that ovs-multitenant does. If you want to maintain the ovs-multitenant behavior while using a network policy in OpenShift Container Platform 4.5, follow the steps to configure multitenant isolation using network policy.

For more information, see About network policy.
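As a rough illustration of the network policy approach, the sketch below writes a NetworkPolicy manifest that accepts ingress only from pods in the same project, which approximates one part of the ovs-multitenant behavior. The file name and the policy name allow-same-namespace are placeholders chosen for this example, and the commented oc command assumes a logged-in session on the target cluster. The full multitenant isolation procedure involves additional policies, so treat this as a starting point rather than a complete configuration.

```shell
# Write a NetworkPolicy that allows ingress only from pods in the same namespace.
# The file name and metadata.name are illustrative placeholders.
cat > allow-same-namespace.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace
spec:
  podSelector: {}
  ingress:
  - from:
    - podSelector: {}
EOF

# Apply it to a project on the 4.5 cluster (requires a logged-in oc session):
# oc apply -f allow-same-namespace.yaml -n <project>
```

The empty podSelector at the top level selects every pod in the project, and the empty podSelector under from admits traffic only from pods in that same project.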

Encrypting traffic between hosts

In OpenShift Container Platform 3.11, you could use IPsec to encrypt traffic between hosts. OpenShift Container Platform 4.5 does not support IPsec. It is recommended to use Red Hat OpenShift Service Mesh to enable mutual TLS between services.

For more information, see Understanding Red Hat OpenShift Service Mesh.

1.2.2.3. Logging considerations

Review the following logging changes to consider when transitioning from OpenShift Container Platform 3.11 to OpenShift Container Platform 4.5.

Deploying cluster logging

OpenShift Container Platform 4 provides a simple deployment mechanism for cluster logging, by using a Cluster Logging custom resource.

For more information, see Installing cluster logging.

Aggregated logging data

You cannot transition your aggregate logging data from OpenShift Container Platform 3.11 into your new OpenShift Container Platform 4 cluster.

For more information, see About cluster logging.

Unsupported logging configurations

Some logging configurations that were available in OpenShift Container Platform 3.11 are no longer supported in OpenShift Container Platform 4.5.

For more information on the explicitly unsupported logging cases, see Maintenance and support.

1.2.2.4. Security considerations

Review the following security changes to consider when transitioning from OpenShift Container Platform 3.11 to OpenShift Container Platform 4.5.

Unauthenticated access to discovery endpoints

In OpenShift Container Platform 3.11, an unauthenticated user could access the discovery endpoints (for example, /api/* and /apis/*). For security reasons, unauthenticated access to the discovery endpoints is no longer allowed in OpenShift Container Platform 4.5. If you do need to allow unauthenticated access, you can configure the RBAC settings as necessary; however, be sure to consider the security implications as this can expose internal cluster components to the external network.

Identity providers

Configuration for identity providers has changed for OpenShift Container Platform 4, including the following notable changes:

- The request header identity provider in OpenShift Container Platform 4.5 requires mutual TLS, whereas in OpenShift Container Platform 3.11 it did not.

- The configuration of the OpenID Connect identity provider was simplified in OpenShift Container Platform 4.5. It now obtains data, which previously had to be specified in OpenShift Container Platform 3.11, from the provider’s /.well-known/openid-configuration endpoint.

For more information, see Understanding identity provider configuration.
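To see what the OpenID Connect provider publishes at its discovery endpoint, you can build the endpoint URL from the issuer URL and fetch it. The sketch below is only an illustration: the issuer https://idp.example.com/ is a hypothetical value, and the curl call is left commented because it needs network access to your real provider.

```shell
# Hypothetical issuer URL; replace it with your identity provider's issuer.
ISSUER_URL="https://idp.example.com/"

# Strip a trailing slash if present, then append the standard discovery path.
DISCOVERY_URL="${ISSUER_URL%/}/.well-known/openid-configuration"
echo "${DISCOVERY_URL}"

# Inspect the metadata that the provider publishes (requires network access):
# curl -s "${DISCOVERY_URL}"
```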

1.2.2.5. Monitoring considerations

Review the following monitoring changes to consider when transitioning from OpenShift Container Platform 3.11 to OpenShift Container Platform 4.5.

Alert for monitoring infrastructure availability

The default alert that triggers to ensure the availability of the monitoring infrastructure was called DeadMansSwitch in OpenShift Container Platform 3.11. This was renamed to Watchdog in OpenShift Container Platform 4. If you had PagerDuty integration set up with this alert in OpenShift Container Platform 3.11, you must set up the PagerDuty integration for the Watchdog alert in OpenShift Container Platform 4.

For more information, see Applying custom Alertmanager configuration.
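A minimal sketch of an Alertmanager configuration that routes the Watchdog alert to a PagerDuty receiver might look like the following. The receiver names default and watchdog, the file name, and the service_key placeholder are assumptions made for this example. The commented command shows one way to load the file into the alertmanager-main secret in the openshift-monitoring project; verify it against the monitoring documentation for your cluster version before using it.

```shell
# Write an Alertmanager configuration with a dedicated route for the Watchdog alert.
# Receiver names and the PagerDuty key below are placeholders.
cat > alertmanager.yaml <<'EOF'
global:
  resolve_timeout: 5m
route:
  receiver: default
  routes:
  - match:
      alertname: Watchdog
    repeat_interval: 5m
    receiver: watchdog
receivers:
- name: default
- name: watchdog
  pagerduty_configs:
  - service_key: "<your_pagerduty_integration_key>"
EOF

# Replace the Alertmanager secret with the new configuration (requires cluster-admin):
# oc -n openshift-monitoring create secret generic alertmanager-main \
#   --from-file=alertmanager.yaml --dry-run -o=yaml | \
#   oc -n openshift-monitoring replace secret --filename=-
```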

1.3. Migration tools and prerequisites

You can migrate application workloads from OpenShift Container Platform 3.7, 3.9, 3.10, and 3.11 to OpenShift Container Platform 4.5 with the Migration Toolkit for Containers (MTC). MTC enables you to control the migration and to minimize application downtime.

The MTC web console and API, based on Kubernetes custom resources, enable you to migrate stateful application workloads at the granularity of a namespace.

MTC supports the file system and snapshot data copy methods for migrating data from the source cluster to the target cluster. You can select a method that is suited for your environment and is supported by your storage provider.

You can use migration hooks to run Ansible playbooks at certain points during the migration. The hooks are added when you create a migration plan.

The service catalog is deprecated in OpenShift Container Platform 4. You can migrate workload resources provisioned with the service catalog from OpenShift Container Platform 3 to 4 but you cannot perform service catalog actions such as provision, deprovision, or update on these workloads after migration.

The MTC web console displays a message if the service catalog resources cannot be migrated.

Before you begin your migration, be sure to review the information on planning your migration.

1.3.1. Migration Toolkit for Containers workflow

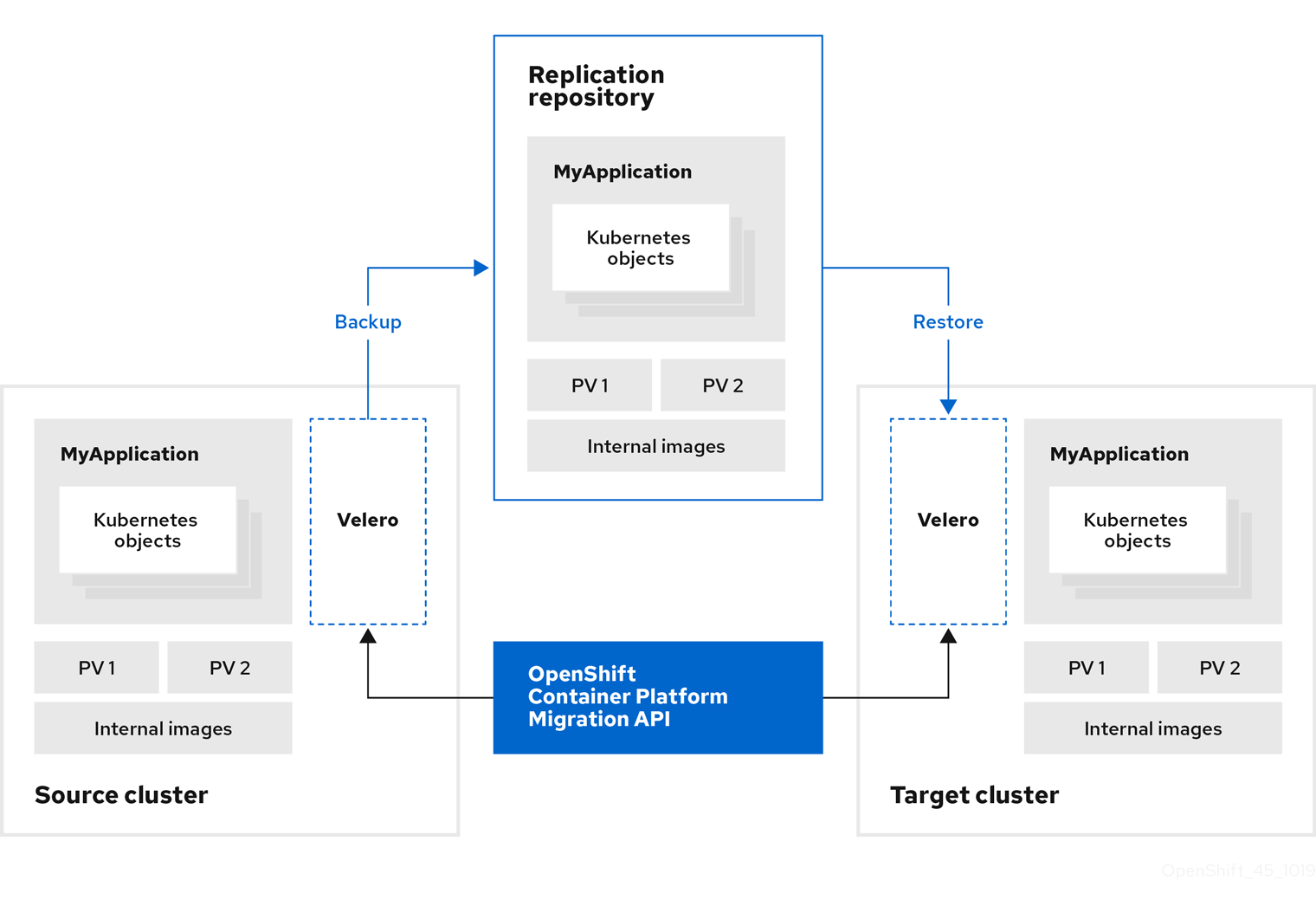

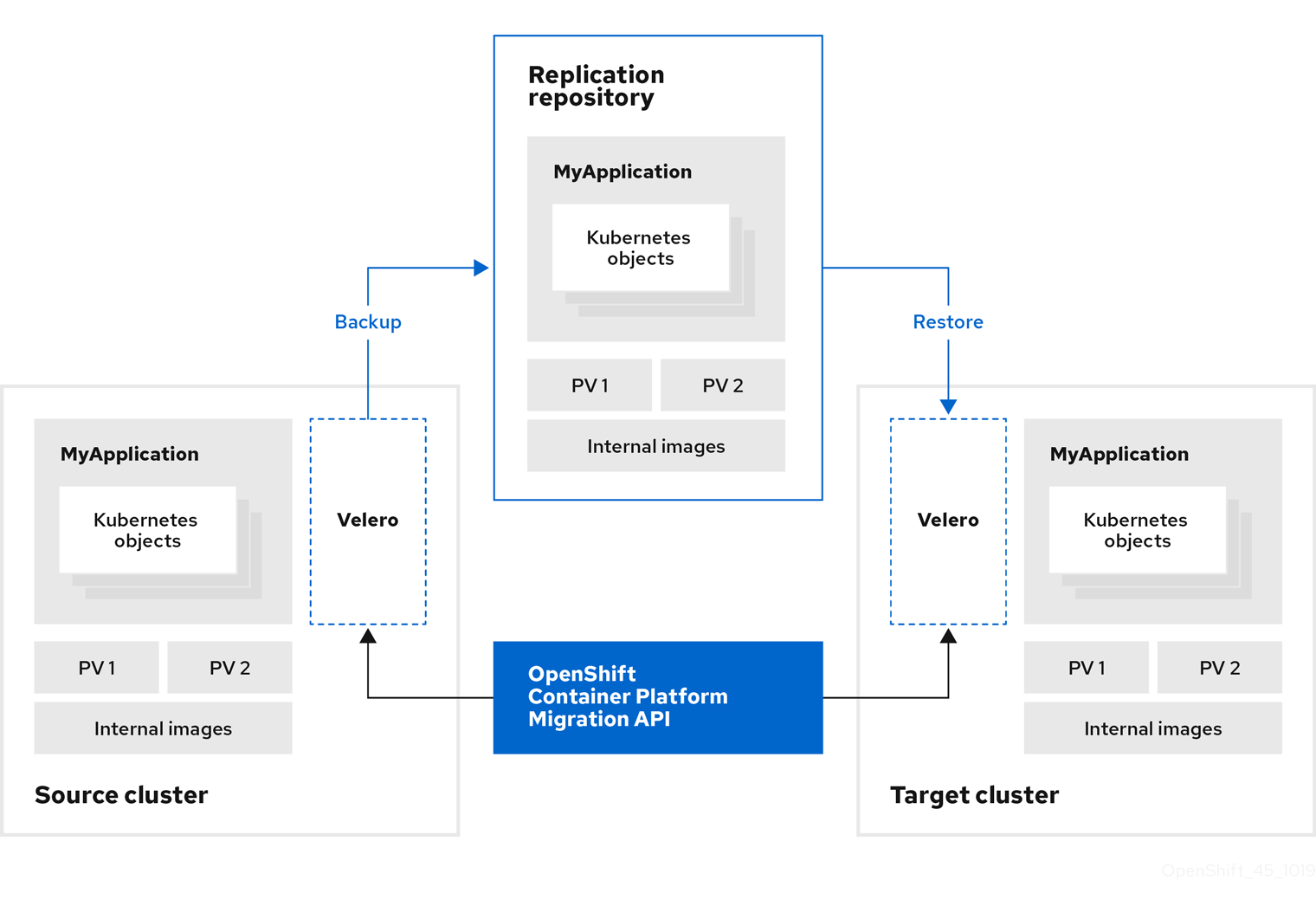

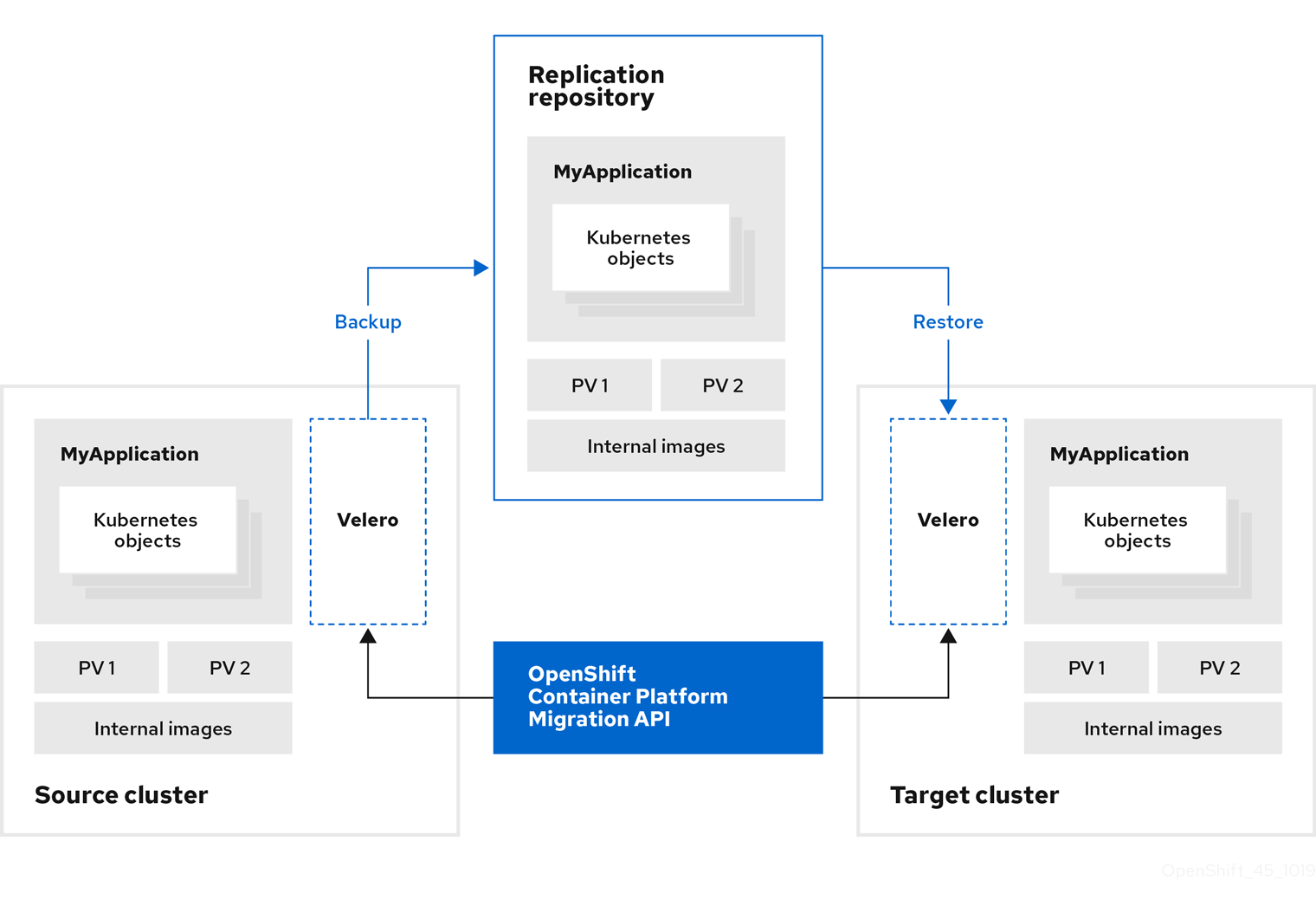

You use the Migration Toolkit for Containers (MTC) to migrate Kubernetes resources, persistent volume data, and internal container images from an OpenShift Container Platform source cluster to an OpenShift Container Platform 4.5 target cluster by using the MTC web console or the Kubernetes API.

The MTC migrates the following resources:

- A namespace specified in a migration plan.

- Namespace-scoped resources: When the MTC migrates a namespace, it migrates all the objects and resources associated with that namespace, such as services or pods. Additionally, if a resource that exists in the namespace but not at the cluster level depends on a resource that exists at the cluster level, the MTC migrates both resources.

  For example, a security context constraint (SCC) is a resource that exists at the cluster level and a service account (SA) is a resource that exists at the namespace level. If an SA exists in a namespace that the MTC migrates, the MTC automatically locates any SCCs that are linked to the SA and also migrates those SCCs. Similarly, the MTC migrates persistent volume claims that are linked to the persistent volumes of the namespace.

- Custom resources (CRs) and custom resource definitions (CRDs): The MTC automatically migrates any CRs that exist at the namespace level as well as the CRDs that are linked to those CRs.

Migrating an application with the MTC web console involves the following steps:

- Install the Migration Toolkit for Containers Operator on all clusters.

  You can install the Migration Toolkit for Containers Operator in a restricted environment with limited or no internet access. The source and target clusters must have network access to each other and to a mirror registry.

- Configure the replication repository, an intermediate object storage that MTC uses to migrate data.

  The source and target clusters must have network access to the replication repository during migration. In a restricted environment, you can use an internally hosted S3 storage repository. If you are using a proxy server, you must configure it to allow network traffic between the replication repository and the clusters.

- Add the source cluster to the MTC web console.

- Add the replication repository to the MTC web console.

- Create a migration plan, with one of the following data migration options:

  - Copy: MTC copies the data from the source cluster to the replication repository, and from the replication repository to the target cluster.

    Note: If you are using direct image migration or direct volume migration, the images or volumes are copied directly from the source cluster to the target cluster.

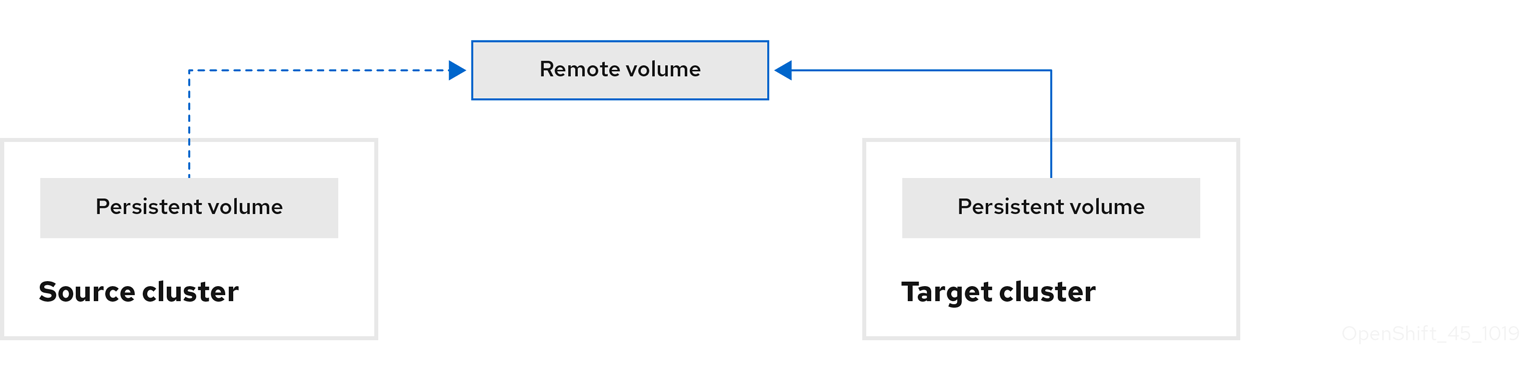

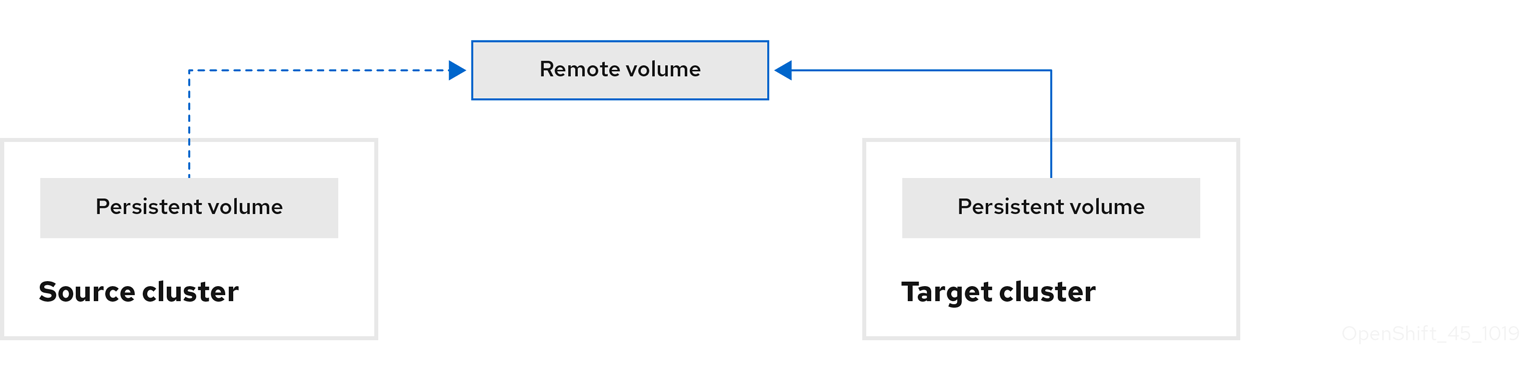

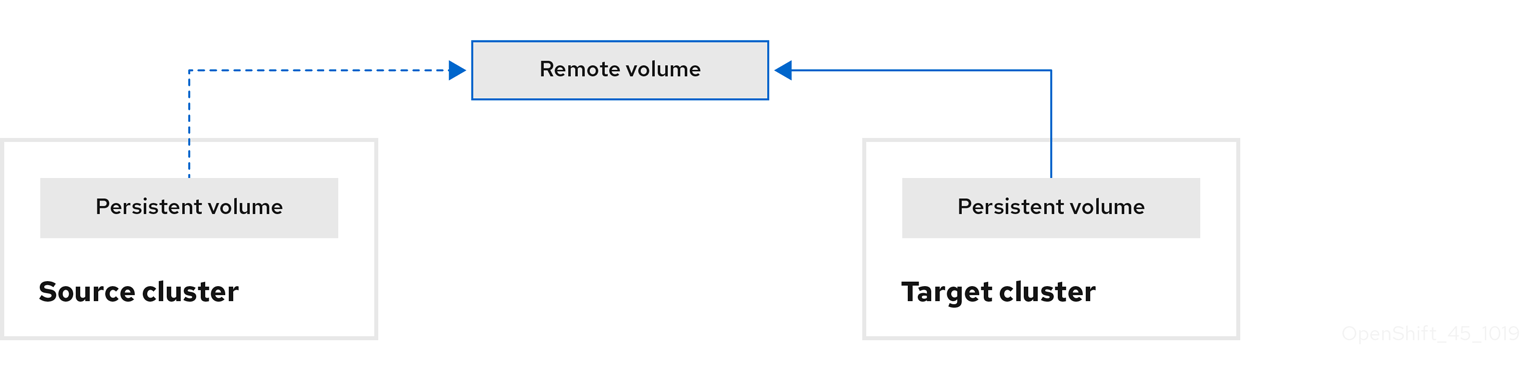

  - Move: MTC unmounts a remote volume, for example, NFS, from the source cluster, creates a PV resource on the target cluster pointing to the remote volume, and then mounts the remote volume on the target cluster. Applications running on the target cluster use the same remote volume that the source cluster was using. The remote volume must be accessible to the source and target clusters.

    Note: Although the replication repository does not appear in this diagram, it is required for migration.

- Run the migration plan, with one of the following options:

  - Stage (optional) copies data to the target cluster without stopping the application.

    Staging can be run multiple times so that most of the data is copied to the target before migration. This minimizes the duration of the migration and application downtime.

  - Migrate stops the application on the source cluster and recreates its resources on the target cluster. Optionally, you can migrate the workload without stopping the application.

1.3.2. Migration Toolkit for Containers custom resources

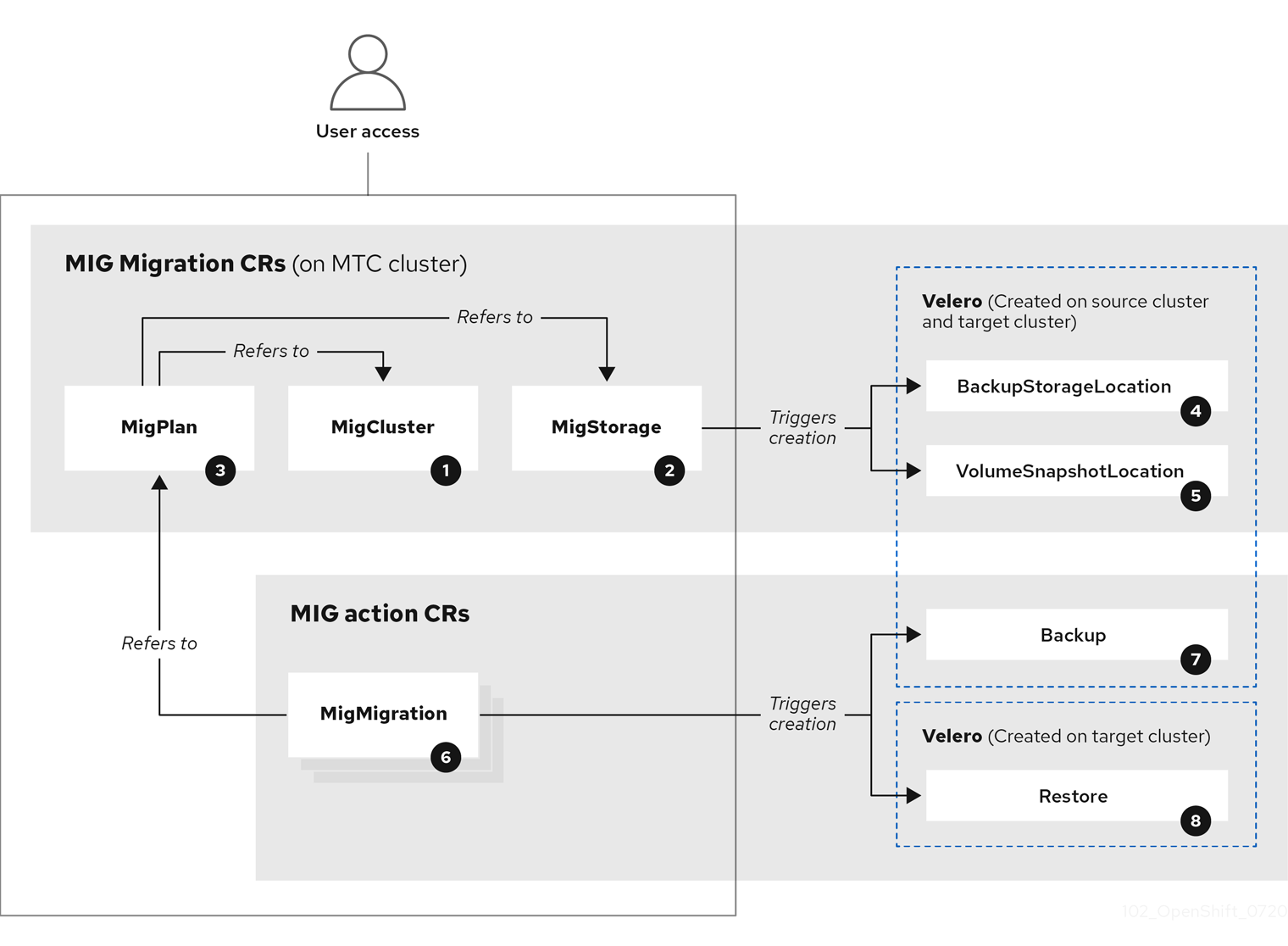

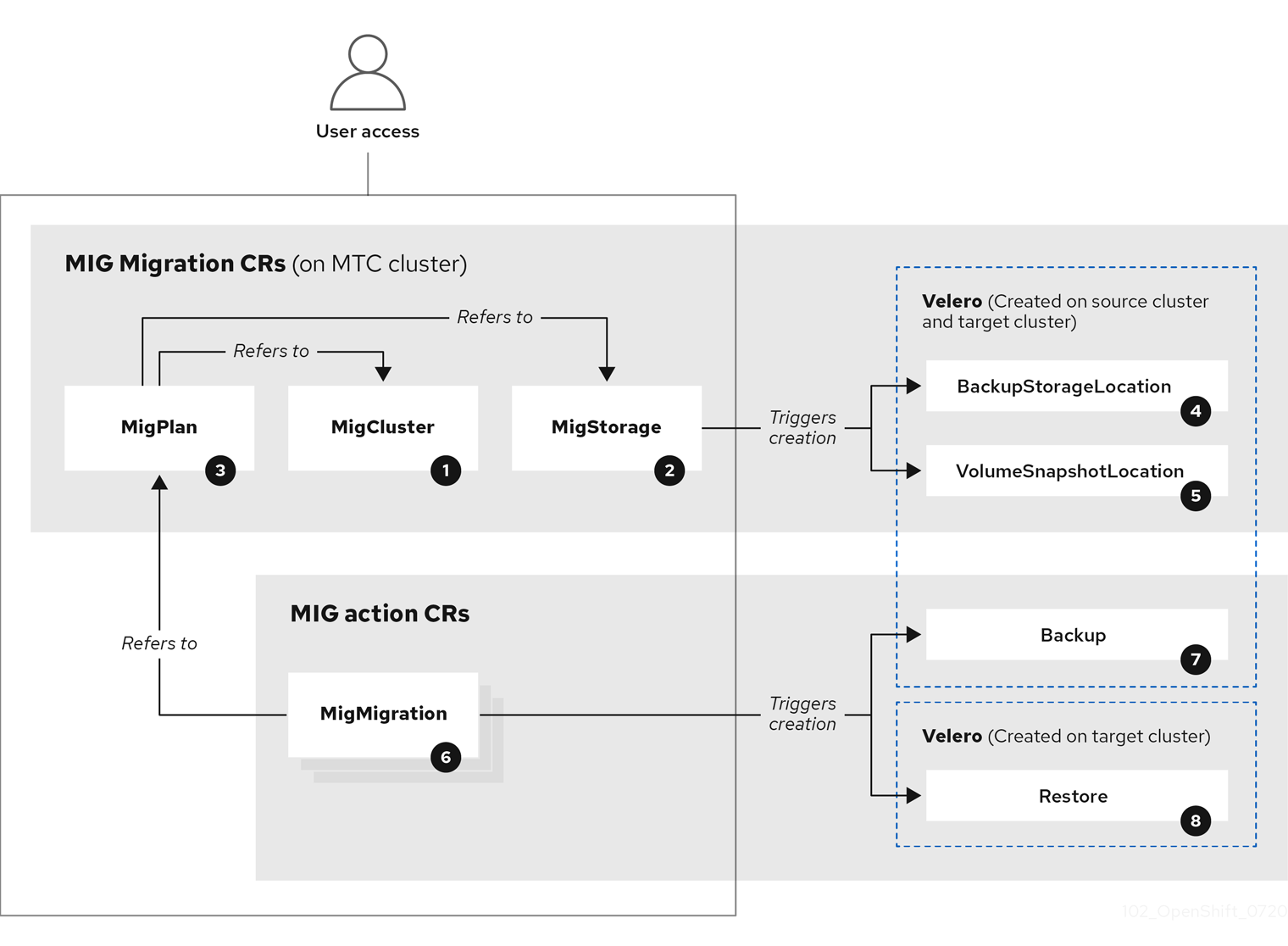

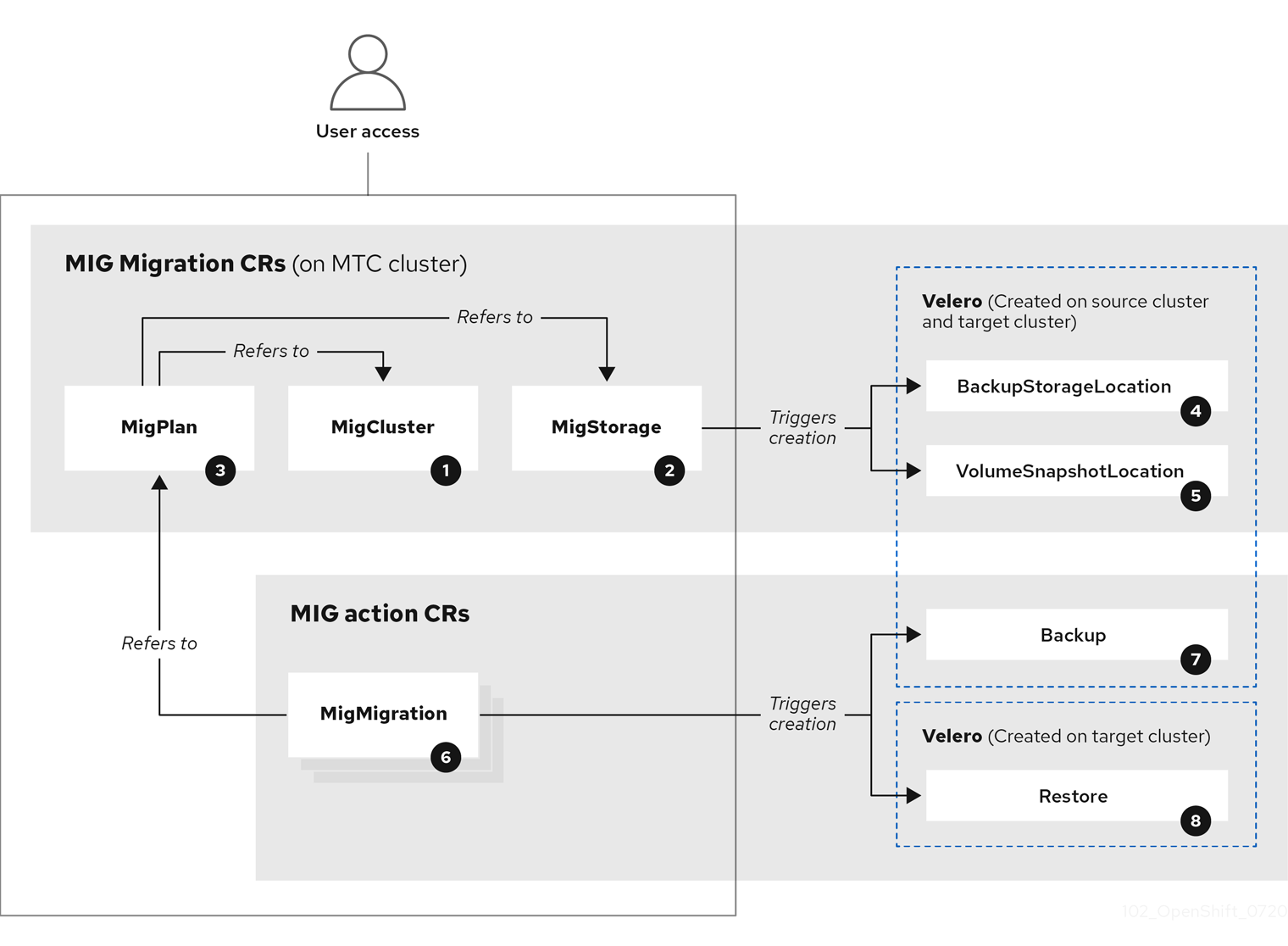

The Migration Toolkit for Containers (MTC) creates the following custom resources (CRs):

- MigCluster (configuration, MTC cluster): Cluster definition

- MigStorage (configuration, MTC cluster): Storage definition

- MigPlan (configuration, MTC cluster): Migration plan

  The MigPlan CR describes the source and target clusters, replication repository, and namespaces being migrated. It is associated with 0, 1, or many MigMigration CRs.

  Deleting a MigPlan CR deletes the associated MigMigration CRs.

- BackupStorageLocation (configuration, MTC cluster): Location of Velero backup objects

- VolumeSnapshotLocation (configuration, MTC cluster): Location of Velero volume snapshots

- MigMigration (action, MTC cluster): Migration, created every time you stage or migrate data. Each MigMigration CR is associated with a MigPlan CR.

- Backup (action, source cluster): When you run a migration plan, the MigMigration CR creates two Velero backup CRs on each source cluster:

  - Backup CR #1 for Kubernetes objects

  - Backup CR #2 for PV data

- Restore (action, target cluster): When you run a migration plan, the MigMigration CR creates two Velero restore CRs on the target cluster:

  - Restore CR #1 (using Backup CR #2) for PV data

  - Restore CR #2 (using Backup CR #1) for Kubernetes objects

1.3.3. About data copy methods

The Migration Toolkit for Containers (MTC) supports the file system and snapshot data copy methods for migrating data from the source cluster to the target cluster. You can select a method that is suited for your environment and is supported by your storage provider.

1.3.3.1. File system copy method

MTC copies data files from the source cluster to the replication repository, and from there to the target cluster.

| Benefits | Limitations |

|---|---|

|

|

1.3.3.2. Snapshot copy method

MTC copies a snapshot of the source cluster data to the replication repository of a cloud provider. The data is restored on the target cluster.

AWS, Google Cloud Provider, and Microsoft Azure support the snapshot copy method.

| Benefits | Limitations |

|---|---|

|

|

1.3.4. About migration hooks

You can use migration hooks to run custom code at certain points during a migration with the Migration Toolkit for Containers (MTC). You can add up to four migration hooks to a single migration plan, with each hook running at a different phase of the migration.

Migration hooks perform tasks such as customizing application quiescence, manually migrating unsupported data types, and updating applications after migration.

A migration hook runs on a source or a target cluster at one of the following migration steps:

- PreBackup: Before resources are backed up on the source cluster

- PostBackup: After resources are backed up on the source cluster

- PreRestore: Before resources are restored on the target cluster

- PostRestore: After resources are restored on the target cluster

You can create a hook by using an Ansible playbook or a custom hook container.

Ansible playbook

The Ansible playbook is mounted on a hook container as a config map. The hook container runs as a job, using the cluster, service account, and namespace specified in the MigPlan custom resource (CR). The job continues to run until it reaches the default limit of 6 retries or a successful completion. This continues even if the initial pod is evicted or killed.

The default Ansible runtime image is registry.redhat.io/rhmtc/openshift-migration-hook-runner-rhel7:1.4. This image is based on the Ansible Runner image and includes python-openshift for Ansible Kubernetes resources and an updated oc binary.

Optional: You can use a custom Ansible runtime image containing additional Ansible modules or tools instead of the default image.
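For orientation, a hook playbook can be very small. The sketch below writes a minimal playbook whose single debug task is a stand-in for real hook logic such as quiescing an application. The file name, task name, and message are hypothetical. The playbook is attached to a migration plan when you create the plan, which is not shown here.

```shell
# Write a minimal Ansible playbook to use as a migration hook.
# The debug task is a placeholder for real hook logic.
cat > hook-playbook.yml <<'EOF'
- hosts: localhost
  gather_facts: false
  tasks:
  - name: Announce that the hook ran
    debug:
      msg: "PreBackup hook reached"
EOF

# Optionally check the syntax locally if Ansible is installed:
# ansible-playbook --syntax-check hook-playbook.yml
```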

Custom hook container

You can create a custom hook container that includes Ansible playbooks or custom code.

1.4. Installing and upgrading the Migration Toolkit for Containers

You can install the Migration Toolkit for Containers (MTC) on an OpenShift Container Platform 4.5 target cluster and an OpenShift Container Platform 3 source cluster.

The Migration Controller pod runs on the target cluster by default. You can configure the Migration Controller pod to run on the source cluster or on a remote cluster.

1.4.1. Installing the Migration Toolkit for Containers in a connected environment

You can install the Migration Toolkit for Containers (MTC) in a connected environment.

You must install the same MTC version on all clusters.

1.4.1.1. Installing the Migration Toolkit for Containers on an OpenShift Container Platform 4.5 target cluster

You can install the Migration Toolkit for Containers (MTC) on an OpenShift Container Platform 4.5 target cluster.

Prerequisites

- You must be logged in as a user with cluster-admin privileges on all clusters.

Procedure

- In the OpenShift Container Platform web console, click Operators → OperatorHub.

- Use the Filter by keyword field to find the Migration Toolkit for Containers Operator.

- Select the Migration Toolkit for Containers Operator and click Install.

  Note: Do not change the subscription approval option to Automatic. The Migration Toolkit for Containers version must be the same on the source and the target clusters.

- Click Install.

  On the Installed Operators page, the Migration Toolkit for Containers Operator appears in the openshift-migration project with the status Succeeded.

- Click Migration Toolkit for Containers Operator.

- Under Provided APIs, locate the Migration Controller tile, and click Create Instance.

- Click Create.

- Click Workloads → Pods to verify that the MTC pods are running.

1.4.1.2. Installing the Migration Toolkit for Containers on an OpenShift Container Platform 3 source cluster

You can install the Migration Toolkit for Containers (MTC) manually on an OpenShift Container Platform 3 source cluster.

You must install the same MTC version on the OpenShift Container Platform 3 and 4 clusters.

To ensure that you have the latest version on the OpenShift Container Platform 3 cluster, download the operator.yml and controller-3.yml files when you are ready to create and run the migration plan.

Prerequisites

- You must be logged in as a user with cluster-admin privileges on all clusters.

- You must have access to registry.redhat.io.

- You must have podman installed.

- The source cluster must be OpenShift Container Platform 3.7, 3.9, 3.10, or 3.11.

- The source cluster must be configured to pull images from registry.redhat.io.

  To pull images, you must create an image stream secret and copy it to each node in your cluster.

Procedure

- Log in to registry.redhat.io with your Red Hat Customer Portal credentials:

  $ sudo podman login registry.redhat.io

- Download the operator.yml file:

  $ sudo podman cp $(sudo podman create registry.redhat.io/rhmtc/openshift-migration-rhel7-operator:v1.4):/operator.yml ./

- Download the controller-3.yml file:

  $ sudo podman cp $(sudo podman create registry.redhat.io/rhmtc/openshift-migration-rhel7-operator:v1.4):/controller-3.yml ./

- Log in to your OpenShift Container Platform 3 cluster.

- Verify that the cluster can authenticate with registry.redhat.io:

  $ oc run test --image registry.redhat.io/ubi8 --command sleep infinity

- Create the Migration Toolkit for Containers Operator object:

  $ oc create -f operator.yml

  You can ignore Error from server (AlreadyExists) messages in the output. They are caused by the Migration Toolkit for Containers Operator creating resources for earlier versions of OpenShift Container Platform 3 that are provided in later releases.

- Create the MigrationController object:

  $ oc create -f controller-3.yml

- Verify that the Velero and Restic pods are running:

  $ oc get pods -n openshift-migration

1.4.2. Installing the Migration Toolkit for Containers in a restricted environment

You can install the Migration Toolkit for Containers (MTC) in a restricted environment.

You must install the same MTC version on all clusters.

You can build a custom Operator catalog image for OpenShift Container Platform 4, push it to a local mirror image registry, and configure Operator Lifecycle Manager (OLM) to install the Migration Toolkit for Containers Operator from the local registry.

1.4.2.1. Building an Operator catalog image

Cluster administrators can build a custom Operator catalog image based on the Package Manifest Format to be used by Operator Lifecycle Manager (OLM). The catalog image can be pushed to a container image registry that supports Docker v2-2. For a cluster on a restricted network, this registry can be a registry that the cluster has network access to, such as a mirror registry created during a restricted network cluster installation.

The internal registry of the OpenShift Container Platform cluster cannot be used as the target registry because it does not support pushing without a tag, which is required during the mirroring process.

For this example, the procedure assumes use of a mirror registry that has access to both your network and the Internet.

Only the Linux version of the oc client can be used for this procedure, because the Windows and macOS versions do not provide the oc adm catalog build command.

Prerequisites

- Workstation with unrestricted network access

- oc version 4.3.5+ Linux client

- podman version 1.4.4+

- Access to mirror registry that supports Docker v2-2

- If you are working with private registries, set the REG_CREDS environment variable to the file path of your registry credentials for use in later steps. For example, for the podman CLI:

  $ REG_CREDS=${XDG_RUNTIME_DIR}/containers/auth.json

- If you are working with private namespaces that your quay.io account has access to, you must set a Quay authentication token. Set the AUTH_TOKEN environment variable for use with the --auth-token flag by making a request against the login API using your quay.io credentials.

Procedure

On the workstation with unrestricted network access, authenticate with the target mirror registry:

$ podman login <registry_host_name>

Also authenticate with registry.redhat.io so that the base image can be pulled during the build:

$ podman login registry.redhat.io

Build a catalog image based on the redhat-operators catalog from Quay.io, tagging and pushing it to your mirror registry. The build command takes the following options:

- 1: Organization (namespace) to pull from an App Registry instance.

- 2: Set --from to the ose-operator-registry base image using the tag that matches the target OpenShift Container Platform cluster major and minor version.

- 3: Set --filter-by-os to the operating system and architecture to use for the base image, which must match the target OpenShift Container Platform cluster. Valid values are linux/amd64, linux/ppc64le, and linux/s390x.

- 4: Name your catalog image and include a tag, for example, v1.

- 5: Optional: If required, specify the location of your registry credentials file.

- 6: Optional: If you do not want to configure trust for the target registry, add the --insecure flag.

- 7: Optional: If other application registry catalogs are used that are not public, specify a Quay authentication token.
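The build command itself is not reproduced in this section. As a rough sketch only, the options described in the list above might be combined as follows. The --appregistry-org and --to flag names, the v4.5 tag, the mirror.example.com host, and the olm/redhat-operators image path are assumptions inferred from the option descriptions and the example output; confirm them with oc adm catalog build --help before use. The script only prints the assembled command so that it can be reviewed first.

```shell
# Dry run: assemble an `oc adm catalog build` invocation and print it.
# All values are placeholders; flag names are assumptions to verify locally.
MIRROR_REGISTRY="mirror.example.com:5000"   # hypothetical mirror registry
OCP_TAG="v4.5"                              # assumed tag matching the target cluster

set -- oc adm catalog build \
  --appregistry-org redhat-operators \
  --from="registry.redhat.io/openshift4/ose-operator-registry:${OCP_TAG}" \
  --filter-by-os="linux/amd64" \
  --to="${MIRROR_REGISTRY}/olm/redhat-operators:v1"

# Optional: pass registry credentials only if REG_CREDS is set.
if [ -n "${REG_CREDS:-}" ]; then
  set -- "$@" -a "${REG_CREDS}"
fi

# Optional: pass a Quay token only if AUTH_TOKEN is set.
if [ -n "${AUTH_TOKEN:-}" ]; then
  set -- "$@" --auth-token "${AUTH_TOKEN}"
fi

build_cmd_text="$*"
echo "${build_cmd_text}"
```

To run the command instead of printing it, replace the final echo with "$@".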

Example output

INFO[0013] loading Bundles dir=/var/folders/st/9cskxqs53ll3wdn434vw4cd80000gn/T/300666084/manifests-829192605
...
Pushed sha256:f73d42950021f9240389f99ddc5b0c7f1b533c054ba344654ff1edaf6bf827e3 to example_registry:5000/olm/redhat-operators:v1

Sometimes invalid manifests are accidentally introduced into catalogs provided by Red Hat; when this happens, you might see some errors:

Example output with errors

...
INFO[0014] directory dir=/var/folders/st/9cskxqs53ll3wdn434vw4cd80000gn/T/300666084/manifests-829192605 file=4.2 load=package
W1114 19:42:37.876180 34665 builder.go:141] error building database: error loading package into db: fuse-camel-k-operator.v7.5.0 specifies replacement that couldn't be found
Uploading ... 244.9kB/s

These errors are usually non-fatal. If the Operator package mentioned does not contain an Operator that you plan to install, or a dependency of one, you can ignore them.
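To decide whether such errors can be ignored, it can help to list which bundles the build complained about. The following is a minimal sketch that assumes the build output was captured as text and that the warning lines follow the wording shown in the example output; the build_log variable is a stand-in for a real log.

```shell
# Sample build log, copied from the example output with errors.
build_log=$(cat <<'EOF'
INFO[0014] directory dir=/var/folders/st/9cskxqs53ll3wdn434vw4cd80000gn/T/300666084/manifests-829192605 file=4.2 load=package
W1114 19:42:37.876180 34665 builder.go:141] error building database: error loading package into db: fuse-camel-k-operator.v7.5.0 specifies replacement that couldn't be found
Uploading ... 244.9kB/s
EOF
)

# Extract the bundle name that follows "error loading package into db:".
failed_bundles=$(printf '%s\n' "$build_log" \
  | sed -n 's/.*error loading package into db: \([^ ]*\) .*/\1/p' \
  | sort -u)

echo "$failed_bundles"
```

If none of the listed bundles belongs to an Operator you plan to install, or to one of its dependencies, the warnings can be disregarded as described above.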

1.4.2.2. Configuring OperatorHub for restricted networks

Cluster administrators can configure OLM and OperatorHub to use local content in a restricted network environment using a custom Operator catalog image. For this example, the procedure uses a custom redhat-operators catalog image previously built and pushed to a supported registry.

Prerequisites

- Workstation with unrestricted network access

- A custom Operator catalog image pushed to a supported registry

- oc version 4.3.5+

- podman version 1.4.4+

- Access to mirror registry that supports Docker v2-2

If you are working with private registries, set the REG_CREDS environment variable to the file path of your registry credentials for use in later steps. For example, for the podman CLI:

$ REG_CREDS=${XDG_RUNTIME_DIR}/containers/auth.json

Procedure

The oc adm catalog mirror command extracts the contents of your custom Operator catalog image to generate the manifests required for mirroring. You can choose to either:

- Allow the default behavior of the command to automatically mirror all of the image content to your mirror registry after generating manifests, or

- Add the --manifests-only flag to only generate the manifests required for mirroring, but do not actually mirror the image content to a registry yet. This can be useful for reviewing what will be mirrored, and it allows you to make any changes to the mapping list if you only require a subset of the content. You can then use that file with the oc image mirror command to mirror the modified list of images in a later step.

On your workstation with unrestricted network access, run the oc adm catalog mirror command. The command takes the following options:

- 1: Specify your Operator catalog image.

- 2: Optional: If required, specify the location of your registry credentials file.

- 3: Optional: If you do not want to configure trust for the target registry, add the --insecure flag.

- 4: The --filter-by-os flag, set to .* as described in the warning below, is currently required due to a known issue with multiple architecture support.

- 5: Optional: Only generate the manifests required for mirroring and do not actually mirror the image content to a registry.

Warning: If the --filter-by-os flag remains unset or set to any value other than .*, the command filters out different architectures, which changes the digest of the manifest list, also known as a multi-arch image. The incorrect digest causes deployments of those images and Operators on disconnected clusters to fail. For more information, see BZ#1890951.

Example output

using database path mapping: /:/tmp/190214037
wrote database to /tmp/190214037
using database at: /tmp/190214037/bundles.db
...

The path in the last line, /tmp/190214037/bundles.db, is the temporary database generated by the command.

After you run the command, it creates an <image_name>-manifests/ directory in the current directory that contains the following files:

- The imageContentSourcePolicy.yaml file defines an ImageContentSourcePolicy object that can configure nodes to translate between the image references stored in Operator manifests and the mirrored registry.

- The mapping.txt file contains all of the source images and where to map them in the target registry. This file is compatible with the oc image mirror command and can be used to further customize the mirroring configuration.

If you used the --manifests-only flag in the previous step and want to mirror only a subset of the content:

Modify the list of images in your mapping.txt file to your specifications. If you are unsure of the exact names and versions of the subset of images you want to mirror, use the following steps to find them:

Run the sqlite3 tool against the temporary database that was generated by the oc adm catalog mirror command to retrieve a list of images matching a general search query. The output helps inform how you will later edit your mapping.txt file.

For example, to retrieve a list of images that are similar to the string clusterlogging.4.3:

$ echo "select * from related_image \
    where operatorbundle_name like 'clusterlogging.4.3%';" \
    | sqlite3 -line /tmp/190214037/bundles.db

Refer to the previous output of the oc adm catalog mirror command to find the path of the database file.

Use the results from the previous step to edit the mapping.txt file to only include the subset of images you want to mirror.

For example, you can use the image values from the query output to find that the following matching lines exist in your mapping.txt file:

Matching image mappings in mapping.txt

registry.redhat.io/openshift4/ose-logging-kibana5@sha256:aa4a8b2a00836d0e28aa6497ad90a3c116f135f382d8211e3c55f34fb36dfe61=<registry_host_name>:<port>/openshift4-ose-logging-kibana5:a767c8f0
registry.redhat.io/openshift4/ose-oauth-proxy@sha256:6b4db07f6e6c962fc96473d86c44532c93b146bbefe311d0c348117bf759c506=<registry_host_name>:<port>/openshift4-ose-oauth-proxy:3754ea2b

In this example, if you only want to mirror these images, you would then remove all other entries in the mapping.txt file and leave only the above two lines.
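Instead of deleting entries by hand, the subset can be written to a separate file. The following is a minimal sketch that works on a scratch copy; the mirror.example.com host, the shortened digests, and the ose-some-other-image entry are hypothetical stand-ins for a real mapping.txt.

```shell
# Work in a scratch directory so the real mapping.txt is untouched.
workdir=$(mktemp -d)

# Stand-in for the generated mapping.txt (hypothetical host, shortened digests).
cat > "$workdir/mapping.txt" <<'EOF'
registry.redhat.io/openshift4/ose-logging-kibana5@sha256:aa4a=mirror.example.com:5000/openshift4-ose-logging-kibana5:a767c8f0
registry.redhat.io/openshift4/ose-oauth-proxy@sha256:6b4d=mirror.example.com:5000/openshift4-ose-oauth-proxy:3754ea2b
registry.redhat.io/openshift4/ose-some-other-image@sha256:1234=mirror.example.com:5000/openshift4-ose-some-other-image:deadbeef
EOF

# Keep only the source images to mirror; one -e pattern per image.
grep -F \
  -e 'registry.redhat.io/openshift4/ose-logging-kibana5@' \
  -e 'registry.redhat.io/openshift4/ose-oauth-proxy@' \
  "$workdir/mapping.txt" > "$workdir/mapping-subset.txt"

kept=$(wc -l < "$workdir/mapping-subset.txt" | tr -d ' ')
echo "kept $kept of 3 mappings"
```

The resulting mapping-subset.txt has the same line format as mapping.txt, so it could be passed to oc image mirror with the -f flag in place of the edited original.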

Still on your workstation with unrestricted network access, use your modified mapping.txt file to mirror the images to your registry using the oc image mirror command:

$ oc image mirror \
    [-a ${REG_CREDS}] \
    --filter-by-os='.*' \
    -f ./redhat-operators-manifests/mapping.txt

Warning: If the --filter-by-os flag remains unset or set to any value other than .*, the command filters out different architectures, which changes the digest of the manifest list, also known as a multi-arch image. The incorrect digest causes deployments of those images and Operators on disconnected clusters to fail.

Apply the ImageContentSourcePolicy object:

$ oc apply -f ./redhat-operators-manifests/imageContentSourcePolicy.yaml

Create a CatalogSource object that references your catalog image.

Modify the CatalogSource manifest to your specifications, specifying your custom Operator catalog image, and save it as a catalogsource.yaml file.

Use the file to create the CatalogSource object:

$ oc create -f catalogsource.yaml
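The CatalogSource manifest is not reproduced in this section. As a hedged sketch, a catalogsource.yaml file might be generated as follows. The name and display name are taken from the example output later in this procedure; the apiVersion and field names reflect the Operator Lifecycle Manager CatalogSource API as commonly documented, and the image reference is a hypothetical placeholder, so verify all of them against your cluster.

```shell
# Hypothetical catalog image reference; replace with the image you pushed.
CATALOG_IMAGE="mirror.example.com:5000/olm/redhat-operators:v1"

# Write the manifest to a scratch directory for review.
workdir=$(mktemp -d)

cat > "$workdir/catalogsource.yaml" <<EOF
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: my-operator-catalog
  namespace: openshift-marketplace
spec:
  sourceType: grpc
  image: ${CATALOG_IMAGE}
  displayName: My Operator Catalog
EOF

cat "$workdir/catalogsource.yaml"
```

After reviewing the file, it could be copied to the current directory and passed to oc create -f as shown above.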

Verify the following resources are created successfully.

Check the pods:

$ oc get pods -n openshift-marketplace

Example output

NAME                                   READY   STATUS    RESTARTS   AGE
my-operator-catalog-6njx6              1/1     Running   0          28s
marketplace-operator-d9f549946-96sgr   1/1     Running   0          26h

Check the catalog source:

$ oc get catalogsource -n openshift-marketplace

Example output

NAME                  DISPLAY               TYPE   PUBLISHER   AGE
my-operator-catalog   My Operator Catalog   grpc               5s

Check the package manifest:

$ oc get packagemanifest -n openshift-marketplace

Example output

NAME   CATALOG               AGE
etcd   My Operator Catalog   34s

You can now install the Operators from the OperatorHub page on your restricted network OpenShift Container Platform cluster web console.

You can install the Migration Toolkit for Containers (MTC) on an OpenShift Container Platform 4.5 target cluster.

Prerequisites

- You must be logged in as a user with cluster-admin privileges on all clusters.

- You must create a custom Operator catalog and push it to a mirror registry.

- You must configure Operator Lifecycle Manager to install the Migration Toolkit for Containers Operator from the mirror registry.

Procedure

- In the OpenShift Container Platform web console, click Operators → OperatorHub.

- Use the Filter by keyword field to find the Migration Toolkit for Containers Operator.

Select the Migration Toolkit for Containers Operator and click Install.

Note: Do not change the subscription approval option to Automatic. The Migration Toolkit for Containers version must be the same on the source and the target clusters.

Click Install.

On the Installed Operators page, the Migration Toolkit for Containers Operator appears in the openshift-migration project with the status Succeeded.

- Click Migration Toolkit for Containers Operator.

- Under Provided APIs, locate the Migration Controller tile, and click Create Instance.

- Click Create.

- Click Workloads → Pods to verify that the MTC pods are running.

1.4.2.4. Installing the Migration Toolkit for Containers on an OpenShift Container Platform 3 source cluster in a restricted environment

You can create a manifest file based on the Migration Toolkit for Containers (MTC) Operator image and edit the manifest to point to your local image registry. Then, you can use the local image to create the Migration Toolkit for Containers Operator on an OpenShift Container Platform 3 source cluster.

You must install the same MTC version on the OpenShift Container Platform 3 and 4 clusters.

To ensure that you have the latest version on the OpenShift Container Platform 3 cluster, download the operator.yml and controller-3.yml files when you are ready to create and run the migration plan.

Prerequisites

- You must be logged in as a user with cluster-admin privileges on all clusters.

- You must have access to registry.redhat.io.

- You must have podman installed.

- The source cluster must be OpenShift Container Platform 3.7, 3.9, 3.10, or 3.11.

- You must have a Linux workstation with unrestricted network access.

- You must have access to a mirror registry that supports Docker v2-2.

Procedure

On the workstation with unrestricted network access, log in to registry.redhat.io with your Red Hat Customer Portal credentials:

$ sudo podman login registry.redhat.io

Download the operator.yml file:

$ sudo podman cp $(sudo podman create \
    registry.redhat.io/rhmtc/openshift-migration-rhel7-operator:v1.4):/operator.yml ./

Download the controller-3.yml file:

$ sudo podman cp $(sudo podman create \
    registry.redhat.io/rhmtc/openshift-migration-rhel7-operator:v1.4):/controller-3.yml ./

Obtain the Operator image value from the mapping.txt file that was created when you ran the oc adm catalog mirror command on the OpenShift Container Platform 4 cluster:

$ grep openshift-migration-rhel7-operator ./mapping.txt | grep rhmtc

The output shows the mapping between the registry.redhat.io image and your mirror registry image.

Example output

registry.redhat.io/rhmtc/openshift-migration-rhel7-operator@sha256:468a6126f73b1ee12085ca53a312d1f96ef5a2ca03442bcb63724af5e2614e8a=<registry.apps.example.com>/rhmtc/openshift-migration-rhel7-operator

Update the image and REGISTRY values in the Operator configuration file.

Log in to your OpenShift Container Platform 3 cluster.
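The edited configuration is not reproduced in this section. As a sketch of how the two values might be derived from the mapping line, the following splits the line at the = sign using shell parameter expansion. It assumes that the image value is the mirror image reference pinned to the source digest and that REGISTRY is the mirror registry host; both are assumptions to check against your operator.yml file. The registry.apps.example.com host is the placeholder from the example output.

```shell
# Sample mapping line in the form <source_image>=<mirror_image>
# (registry.apps.example.com stands in for your mirror registry host).
mapping_line='registry.redhat.io/rhmtc/openshift-migration-rhel7-operator@sha256:468a6126f73b1ee12085ca53a312d1f96ef5a2ca03442bcb63724af5e2614e8a=registry.apps.example.com/rhmtc/openshift-migration-rhel7-operator'

source_image=${mapping_line%%=*}     # text before the first "="
mirror_image=${mapping_line#*=}      # text after the first "="
digest=${source_image##*@}           # sha256:... part of the source reference
mirror_registry=${mirror_image%%/*}  # host (and port, if any) of the mirror

echo "REGISTRY value: $mirror_registry"
echo "image value:    $mirror_image@$digest"
```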

Create the Migration Toolkit for Containers Operator object:

$ oc create -f operator.yml

You can ignore Error from server (AlreadyExists) messages in the output. They are caused by the Migration Toolkit for Containers Operator creating resources for earlier versions of OpenShift Container Platform 3 that are provided in later releases.

Create the MigrationController object:

$ oc create -f controller-3.yml

Verify that the Velero and Restic pods are running:

$ oc get pods -n openshift-migration

1.4.3. Upgrading the Migration Toolkit for Containers

You can upgrade the Migration Toolkit for Containers (MTC) by using the OpenShift Container Platform web console.

You must ensure that the same MTC version is installed on all clusters.

If you are upgrading MTC version 1.3, you must perform an additional procedure to update the MigPlan custom resource (CR).

1.4.3.1. Upgrading the Migration Toolkit for Containers on an OpenShift Container Platform 4 cluster

You can upgrade the Migration Toolkit for Containers (MTC) on an OpenShift Container Platform 4 cluster by using the OpenShift Container Platform web console.

Prerequisites

- You must be logged in as a user with cluster-admin privileges.

Procedure

In the OpenShift Container Platform console, navigate to Operators → Installed Operators.

Operators that have a pending upgrade display an Upgrade available status.

- Click Migration Toolkit for Containers Operator.

- Click the Subscription tab. Any upgrades requiring approval are displayed next to Upgrade Status. For example, it might display 1 requires approval.

- Click 1 requires approval, then click Preview Install Plan.

- Review the resources that are listed as available for upgrade and click Approve.

- Navigate back to the Operators → Installed Operators page to monitor the progress of the upgrade. When complete, the status changes to Succeeded and Up to date.

- Click Workloads → Pods to verify that the MTC pods are running.

1.4.3.2. Upgrading the Migration Toolkit for Containers on an OpenShift Container Platform 3 cluster

You can upgrade Migration Toolkit for Containers (MTC) on an OpenShift Container Platform 3 cluster with podman.

Prerequisites

- You must be logged in as a user with cluster-admin privileges.

- You must have access to registry.redhat.io.

- You must have podman installed.

Procedure

Log in to registry.redhat.io with your Red Hat Customer Portal credentials:

$ sudo podman login registry.redhat.io

Download the latest operator.yml file:

$ sudo podman cp $(sudo podman create \
    registry.redhat.io/rhmtc/openshift-migration-rhel7-operator:v1.4):/operator.yml ./

You can specify a z-stream release in the image tag, if necessary.

Replace the Migration Toolkit for Containers Operator:

$ oc replace --force -f operator.yml

Apply the changes:

- For MTC 1.1.2 and earlier versions, delete the Restic pods:

$ oc delete pod <restic_pod>

- For MTC 1.2 and later versions:

Scale the migration-operator deployment to 0 to stop the deployment:

$ oc scale -n openshift-migration --replicas=0 deployment/migration-operator

Scale the migration-operator deployment to 1 to start the deployment and apply the changes:

$ oc scale -n openshift-migration --replicas=1 deployment/migration-operator

Verify that the migration-operator was upgraded:

$ oc -o yaml -n openshift-migration get deployment/migration-operator | grep image: | awk -F ":" '{ print $NF }'

Download the latest controller-3.yml file:

$ sudo podman cp $(sudo podman create \
    registry.redhat.io/rhmtc/openshift-migration-rhel7-operator:v1.4):/controller-3.yml ./

Create the migration-controller object:

$ oc create -f controller-3.yml

If your OpenShift Container Platform version is 3.10 or earlier, set the security context constraint of the migration-controller service account to anyuid to enable direct image migration and direct volume migration:

$ oc adm policy add-scc-to-user anyuid -z migration-controller -n openshift-migration

Verify that the MTC pods are running:

$ oc get pods -n openshift-migration

If you have previously added the OpenShift Container Platform 3 cluster to the MTC web console, you must update the service account token in the web console because the upgrade process deletes and restores the openshift-migration namespace:

Obtain the service account token:

$ oc sa get-token migration-controller -n openshift-migration

- In the MTC web console, click Clusters.

- Click the Options menu next to the cluster and select Edit.

- Enter the new service account token in the Service account token field.

- Click Update cluster and then click Close.
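The version check earlier in this procedure pipes the deployment YAML through grep and awk. The following sketch shows what that filter does to a single line; the sample line is illustrative and is not output captured from a cluster.

```shell
# Sample line as it might appear in the deployment YAML (illustrative).
sample='        image: registry.redhat.io/rhmtc/openshift-migration-rhel7-operator:v1.4'

# Same filter as the verification step: keep the text after the last colon.
tag=$(printf '%s\n' "$sample" | grep image: | awk -F ":" '{ print $NF }')

echo "$tag"
```

Because awk prints the last colon-separated field, an image that is referenced by digest yields the digest value rather than a version tag.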

1.4.3.3. Upgrading MTC 1.3 to 1.4

If you are upgrading Migration Toolkit for Containers (MTC) version 1.3.x to 1.4, you must update the MigPlan custom resource (CR) manifest on the cluster on which the MigrationController pod is running.

Because the indirectImageMigration and indirectVolumeMigration parameters do not exist in MTC 1.3, their default value in version 1.4 is false, which means that direct image migration and direct volume migration are enabled. Because the direct migration requirements are not fulfilled, the migration plan cannot reach a Ready state unless these parameter values are changed to true.

Prerequisites

- You must have MTC 1.3 installed.

- You must be logged in as a user with cluster-admin privileges.

Procedure

- Log in to the cluster on which the MigrationController pod is running.

Get the MigPlan CR manifest:

$ oc get migplan <migplan> -o yaml -n openshift-migration

Update the following parameter values and save the file as migplan.yaml:

...
spec:
  indirectImageMigration: true
  indirectVolumeMigration: true

Replace the MigPlan CR manifest to apply the changes:

$ oc replace -f migplan.yaml -n openshift-migration

Get the updated MigPlan CR manifest to verify the changes:

$ oc get migplan <migplan> -o yaml -n openshift-migration
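Before replacing the manifest, it can be useful to confirm that both parameters in the saved file are set to true. The following is a minimal sketch that checks a stand-in migplan.yaml file in a scratch directory; a real manifest contains many more fields.

```shell
workdir=$(mktemp -d)

# Stand-in for the edited manifest; only the relevant fields are shown.
cat > "$workdir/migplan.yaml" <<'EOF'
spec:
  indirectImageMigration: true
  indirectVolumeMigration: true
EOF

# Report each parameter and remember whether any is missing or not true.
missing=0
for param in indirectImageMigration indirectVolumeMigration; do
  if grep -Eq "^[[:space:]]*${param}:[[:space:]]*true[[:space:]]*$" "$workdir/migplan.yaml"; then
    echo "${param} is true"
  else
    echo "${param} is not set to true" >&2
    missing=1
  fi
done
```

The same loop could be pointed at the output of the final oc get migplan command to verify the applied values.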

1.5. Configuring object storage for a replication repository

You must configure object storage to use as a replication repository. The Migration Toolkit for Containers (MTC) copies data from the source cluster to the replication repository, and then from the replication repository to the target cluster.

MTC supports the file system and snapshot data copy methods for migrating data from the source cluster to the target cluster. You can select a method that is suited for your environment and is supported by your storage provider.

The following storage providers are supported:

- Multi-Cloud Object Gateway (MCG)

- Amazon Web Services (AWS) S3

- Google Cloud Provider (GCP)

- Microsoft Azure

- Generic S3 object storage, for example, Minio or Ceph S3

In a restricted environment, you can create an internally hosted replication repository.

Prerequisites

- All clusters must have uninterrupted network access to the replication repository.

- If you use a proxy server with an internally hosted replication repository, you must ensure that the proxy allows access to the replication repository.

1.5.1. Configuring a Multi-Cloud Object Gateway storage bucket as a replication repository

You can install the OpenShift Container Storage Operator and configure a Multi-Cloud Object Gateway (MCG) storage bucket as a replication repository for the Migration Toolkit for Containers (MTC).

1.5.1.1. Installing the OpenShift Container Storage Operator

You can install the OpenShift Container Storage Operator from OperatorHub.

Procedure

- In the OpenShift Container Platform web console, click Operators → OperatorHub.

- Use Filter by keyword (in this case, OCS) to find the OpenShift Container Storage Operator.

- Select the OpenShift Container Storage Operator and click Install.

- Select an Update Channel, Installation Mode, and Approval Strategy.

Click Install.

On the Installed Operators page, the OpenShift Container Storage Operator appears in the openshift-storage project with the status Succeeded.

1.5.1.2. Creating the Multi-Cloud Object Gateway storage bucket

You can create the Multi-Cloud Object Gateway (MCG) storage bucket’s custom resources (CRs).

Procedure

Log in to the OpenShift Container Platform cluster:

$ oc login

Create the NooBaa CR configuration file, noobaa.yml.

Create the NooBaa object:

$ oc create -f noobaa.yml

Create the BackingStore CR configuration file, bs.yml.

Create the BackingStore object:

$ oc create -f bs.yml

Create the BucketClass CR configuration file, bc.yml.

Create the BucketClass object:

$ oc create -f bc.yml

Create the ObjectBucketClaim CR configuration file, obc.yml. Record the bucket name for adding the replication repository to the MTC web console.

Create the ObjectBucketClaim object:

$ oc create -f obc.yml

Watch the resource creation process to verify that the ObjectBucketClaim status is Bound:

$ watch -n 30 'oc get -n openshift-storage objectbucketclaim migstorage -o yaml'

This process can take five to ten minutes.

Obtain and record the following values, which are required when you add the replication repository to the MTC web console:

S3 endpoint:

$ oc get route -n openshift-storage s3

S3 provider access key:

$ oc get secret -n openshift-storage migstorage -o go-template='{{ .data.AWS_ACCESS_KEY_ID }}' | base64 --decode

S3 provider secret access key:

$ oc get secret -n openshift-storage migstorage -o go-template='{{ .data.AWS_SECRET_ACCESS_KEY }}' | base64 --decode
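The two key commands end with base64 --decode because values under .data in a secret are stored base64-encoded. The following sketch illustrates that round trip with a made-up value; it does not contact a cluster, and EXAMPLEACCESSKEY is not a real key.

```shell
# Illustrative value only, not a real key.
plain='EXAMPLEACCESSKEY'

# Secrets hold the value base64-encoded, as in .data.AWS_ACCESS_KEY_ID.
encoded=$(printf '%s' "$plain" | base64)

# The commands above reverse that encoding with base64 --decode.
decoded=$(printf '%s' "$encoded" | base64 --decode)

echo "$encoded -> $decoded"
```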

1.5.2. Configuring an AWS S3 storage bucket as a replication repository

You can configure an AWS S3 storage bucket as a replication repository for the Migration Toolkit for Containers (MTC).

Prerequisites

- The AWS S3 storage bucket must be accessible to the source and target clusters.

- You must have the AWS CLI installed.

If you are using the snapshot copy method:

- You must have access to EC2 Elastic Block Storage (EBS).

- The source and target clusters must be in the same region.

- The source and target clusters must have the same storage class.

- The storage class must be compatible with snapshots.

Procedure

Create an AWS S3 bucket:

$ aws s3api create-bucket \
    --bucket <bucket_name> \
    --region <bucket_region>

Create the IAM user velero:

$ aws iam create-user --user-name velero

Create an EC2 EBS snapshot policy.

Create an AWS S3 access policy for one or for all S3 buckets. For all S3 buckets, the Resource value uses * in place of the bucket name:

Example output

"Resource": [
    "arn:aws:s3:::*"

Attach the EC2 EBS policy to velero:

$ aws iam put-user-policy \
    --user-name velero \
    --policy-name velero-ebs \
    --policy-document file://velero-ec2-snapshot-policy.json

Attach the AWS S3 policy to velero:

$ aws iam put-user-policy \
    --user-name velero \
    --policy-name velero-s3 \
    --policy-document file://velero-s3-policy.json

Create an access key for velero.
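The difference between a policy for one bucket and a policy for all buckets comes down to the Resource ARN. The following sketch prints both forms; the s3_resource_arn helper and the my-migration-bucket name are hypothetical and are not part of the AWS CLI.

```shell
# Print the bucket-level ARN for one bucket, or the wildcard ARN when no name is given.
s3_resource_arn() {
  bucket=${1:-*}
  printf 'arn:aws:s3:::%s\n' "$bucket"
}

one_bucket=$(s3_resource_arn my-migration-bucket)   # hypothetical bucket name
all_buckets=$(s3_resource_arn)

echo "$one_bucket"
echo "$all_buckets"
```

Statements that act on objects rather than on the bucket itself typically append /* to the single-bucket ARN.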

1.5.3. Configuring a Google Cloud Provider storage bucket as a replication repository

You can configure a Google Cloud Provider (GCP) storage bucket as a replication repository for the Migration Toolkit for Containers (MTC).

Prerequisites

- The GCP storage bucket must be accessible to the source and target clusters.

- You must have gsutil installed.

If you are using the snapshot copy method:

- The source and target clusters must be in the same region.

- The source and target clusters must have the same storage class.

- The storage class must be compatible with snapshots.

Procedure

Log in to gsutil:

$ gsutil init

Example output

Welcome! This command will take you through the configuration of gcloud.

Your current configuration has been set to: [default]

To continue, you must login. Would you like to login (Y/n)?

Set the BUCKET variable to your bucket name:

$ BUCKET=<bucket_name>

Create a storage bucket:

gsutil mb gs://$BUCKET/

$ gsutil mb gs://$BUCKET/Copy to Clipboard Copied! Toggle word wrap Toggle overflow Set the

PROJECT_IDvariable to your active project:PROJECT_ID=`gcloud config get-value project`

$ PROJECT_ID=`gcloud config get-value project`Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a

veleroIAM service account:gcloud iam service-accounts create velero \ --display-name "Velero Storage"$ gcloud iam service-accounts create velero \ --display-name "Velero Storage"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the

SERVICE_ACCOUNT_EMAILvariable:SERVICE_ACCOUNT_EMAIL=`gcloud iam service-accounts list \ --filter="displayName:Velero Storage" \ --format 'value(email)'`

$ SERVICE_ACCOUNT_EMAIL=`gcloud iam service-accounts list \ --filter="displayName:Velero Storage" \ --format 'value(email)'`Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the

ROLE_PERMISSIONSvariable:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the

velero.servercustom role:gcloud iam roles create velero.server \ --project $PROJECT_ID \ --title "Velero Server" \ --permissions "$(IFS=","; echo "${ROLE_PERMISSIONS[*]}")"$ gcloud iam roles create velero.server \ --project $PROJECT_ID \ --title "Velero Server" \ --permissions "$(IFS=","; echo "${ROLE_PERMISSIONS[*]}")"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Add IAM policy binding to the project:

gcloud projects add-iam-policy-binding $PROJECT_ID \ --member serviceAccount:$SERVICE_ACCOUNT_EMAIL \ --role projects/$PROJECT_ID/roles/velero.server$ gcloud projects add-iam-policy-binding $PROJECT_ID \ --member serviceAccount:$SERVICE_ACCOUNT_EMAIL \ --role projects/$PROJECT_ID/roles/velero.serverCopy to Clipboard Copied! Toggle word wrap Toggle overflow Update the IAM service account:

gsutil iam ch serviceAccount:$SERVICE_ACCOUNT_EMAIL:objectAdmin gs://${BUCKET}$ gsutil iam ch serviceAccount:$SERVICE_ACCOUNT_EMAIL:objectAdmin gs://${BUCKET}Copy to Clipboard Copied! Toggle word wrap Toggle overflow Save the IAM service account keys to the

credentials-velerofile in the current directory:gcloud iam service-accounts keys create credentials-velero \ --iam-account $SERVICE_ACCOUNT_EMAIL

$ gcloud iam service-accounts keys create credentials-velero \ --iam-account $SERVICE_ACCOUNT_EMAILCopy to Clipboard Copied! Toggle word wrap Toggle overflow
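
The --permissions argument in the procedure above expects ROLE_PERMISSIONS to be a Bash array and joins its elements with commas. The following minimal sketch shows only that joining step. The permission names are illustrative assumptions, not the list that the procedure requires, which is not shown in this section.

```shell
#!/usr/bin/env bash
# Illustrative permission names only (assumption); use the list from your
# own role definition instead.
ROLE_PERMISSIONS=(
    storage.objects.create
    storage.objects.get
    storage.objects.list
)

# IFS is changed inside the command substitution, which runs in a subshell,
# so the caller's IFS is left untouched. "${ARRAY[*]}" joins the elements
# with the first character of IFS.
joined="$(IFS=","; echo "${ROLE_PERMISSIONS[*]}")"
echo "$joined"
```

Because the join relies on array syntax, the command must be run in Bash or another shell that supports arrays.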

1.5.4. Configuring a Microsoft Azure Blob storage container as a replication repository

You can configure a Microsoft Azure Blob storage container as a replication repository for the Migration Toolkit for Containers (MTC).

Prerequisites

- You must have an Azure storage account.

- You must have the Azure CLI installed.

- The Azure Blob storage container must be accessible to the source and target clusters.

If you are using the snapshot copy method:

- The source and target clusters must be in the same region.

- The source and target clusters must have the same storage class.

- The storage class must be compatible with snapshots.

Procedure

Set the AZURE_RESOURCE_GROUP variable:

$ AZURE_RESOURCE_GROUP=Velero_Backups

Create an Azure resource group:

$ az group create -n $AZURE_RESOURCE_GROUP --location <CentralUS>

- Replace <CentralUS> with your location.

Set the AZURE_STORAGE_ACCOUNT_ID variable:

$ AZURE_STORAGE_ACCOUNT_ID=velerobackups

Create an Azure storage account.

Set the BLOB_CONTAINER variable:

$ BLOB_CONTAINER=velero

Create an Azure Blob storage container:

$ az storage container create \
    -n $BLOB_CONTAINER \
    --public-access off \
    --account-name $AZURE_STORAGE_ACCOUNT_ID

Create a service principal and credentials for velero:

$ AZURE_SUBSCRIPTION_ID=`az account list --query '[?isDefault].id' -o tsv` \
  AZURE_TENANT_ID=`az account list --query '[?isDefault].tenantId' -o tsv` \
  AZURE_CLIENT_SECRET=`az ad sp create-for-rbac --name "velero" --role "Contributor" --query 'password' -o tsv` \
  AZURE_CLIENT_ID=`az ad sp list --display-name "velero" --query '[0].appId' -o tsv`

Save the service principal credentials in the credentials-velero file.
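
The command that writes the credentials-velero file is not shown in this section. One possible approach, sketched below, is a here-document that writes the variables set earlier in the procedure as KEY=value lines. The file layout, the placeholder values, and the temporary file path are assumptions for illustration; check the layout against the requirements of your MTC version.

```shell
#!/usr/bin/env bash
# Placeholder values (assumption) so that the sketch runs on its own.
# In the procedure, these variables are populated by the az commands.
AZURE_SUBSCRIPTION_ID="00000000-0000-0000-0000-000000000000"
AZURE_TENANT_ID="11111111-1111-1111-1111-111111111111"
AZURE_CLIENT_ID="22222222-2222-2222-2222-222222222222"
AZURE_CLIENT_SECRET="example-secret"
AZURE_RESOURCE_GROUP="Velero_Backups"

# Hypothetical target path; the procedure uses ./credentials-velero.
CREDENTIALS_FILE="$(mktemp)"

# Write one KEY=value line per variable.
cat > "$CREDENTIALS_FILE" <<EOF
AZURE_SUBSCRIPTION_ID=${AZURE_SUBSCRIPTION_ID}
AZURE_TENANT_ID=${AZURE_TENANT_ID}
AZURE_CLIENT_ID=${AZURE_CLIENT_ID}
AZURE_CLIENT_SECRET=${AZURE_CLIENT_SECRET}
AZURE_RESOURCE_GROUP=${AZURE_RESOURCE_GROUP}
EOF

# The file contains a secret, so restrict it to the current user.
chmod 600 "$CREDENTIALS_FILE"
echo "Wrote credentials to $CREDENTIALS_FILE"
```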

1.6. Migrating your applications

You can migrate your applications by using the Migration Toolkit for Containers (MTC) web console or from the command line.

1.6.1. Prerequisites

The Migration Toolkit for Containers (MTC) has the following prerequisites:

- You must be logged in as a user with cluster-admin privileges on all clusters.

- The MTC version must be the same on all clusters.

If your application uses internal images from the openshift namespace, you must ensure that the required versions of the images are present on the target cluster.

You can manually update an image stream tag in order to use a deprecated OpenShift Container Platform 3 image on an OpenShift Container Platform 4.5 cluster.

Clusters:

- The source cluster must be upgraded to the latest MTC z-stream release.

- The cluster on which the migration-controller pod is running must have unrestricted network access to the other clusters.

- The clusters must have unrestricted network access to each other.

- The clusters must have unrestricted network access to the replication repository.

- The clusters must be able to communicate using OpenShift routes on port 443.

- The clusters must have no critical conditions.

- The clusters must be in a ready state.

Volume migration:

- The persistent volumes (PVs) must be valid.

- The PVs must be bound to persistent volume claims.

- If you copy the PVs by using the move method, the clusters must have unrestricted network access to the remote volume.

If you copy the PVs by using the snapshot copy method, the following prerequisites apply:

- The cloud provider must support snapshots.

- The volumes must have the same cloud provider.

- The volumes must be located in the same geographic region.

- The volumes must have the same storage class.

- If you perform a direct volume migration in a proxy environment, you must configure an Stunnel TCP proxy.

- If you perform a direct image migration, you must expose the internal registry of the source cluster to external traffic.

1.6.1.1. Updating deprecated internal images with podman

If your application uses images from the openshift namespace, the required versions of the images must be present on the target cluster.

If the OpenShift Container Platform 3 image is deprecated in OpenShift Container Platform 4.5, you can manually update the image stream tag by using podman.

Prerequisites

- You must have podman installed.

- You must be logged in as a user with cluster-admin privileges.

Procedure

- Expose the internal registries on the source and target clusters.

- If you are using insecure registries, add your registry host values to the [registries.insecure] section of /etc/containers/registries.conf to ensure that podman does not encounter a TLS verification error.

Log in to the source cluster registry:

$ podman login -u $(oc whoami) -p $(oc whoami -t) --tls-verify=false <source_cluster>

Log in to the target cluster registry:

$ podman login -u $(oc whoami) -p $(oc whoami -t) --tls-verify=false <target_cluster>

Pull the deprecated image:

$ podman pull <source_cluster>/openshift/<image>

Tag the image for the target cluster registry:

$ podman tag <source_cluster>/openshift/<image> <target_cluster>/openshift/<image>

Push the image to the target cluster registry:

$ podman push <target_cluster>/openshift/<image>

Verify that the image has a valid image stream on the target cluster:

$ oc get imagestream -n openshift | grep <image>

Example output

<image> <target_cluster>/openshift/<image> <versions> more... 6 seconds ago
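
The pull, tag, and push steps above can be collected into a small script. The following is a minimal sketch, not part of MTC: the registry host names, the image name, and the DRY_RUN switch are hypothetical. By default it only prints the podman commands so that you can review them before running anything.

```shell
#!/usr/bin/env bash
# Hypothetical values (assumption); substitute your exposed registry routes
# and the deprecated image that your application needs.
SOURCE_REGISTRY="registry.source.example.com"
TARGET_REGISTRY="registry.target.example.com"
IMAGE="nodejs:8"

# Build the fully qualified image reference in the openshift namespace.
image_ref() {
    printf '%s/openshift/%s' "$1" "$2"
}

SRC_REF="$(image_ref "$SOURCE_REGISTRY" "$IMAGE")"
DST_REF="$(image_ref "$TARGET_REGISTRY" "$IMAGE")"

# With DRY_RUN=1 (the default in this sketch) the command is only printed.
# Set DRY_RUN=0 to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

run podman pull "$SRC_REF"
run podman tag "$SRC_REF" "$DST_REF"
run podman push "$DST_REF"
```

Running the script with DRY_RUN=0 assumes that you have already logged in to both registries as described in the procedure.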

1.6.1.2. Creating a CA certificate bundle file

If you use a self-signed certificate to secure a cluster or a replication repository for the Migration Toolkit for Containers (MTC), certificate verification might fail with the following error message: Certificate signed by unknown authority.

You can create a custom CA certificate bundle file and upload it in the MTC web console when you add a cluster or a replication repository.

Procedure

Download a CA certificate from a remote endpoint and save it as a CA bundle file:

$ echo -n | openssl s_client -connect <host_FQDN>:<port> \
    | sed -ne '/-BEGIN CERTIFICATE-/,/-END CERTIFICATE-/p' > <ca_bundle.cert>
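
The sed expression in the command above keeps only the lines between the BEGIN CERTIFICATE and END CERTIFICATE markers and discards the rest of the openssl s_client output. The following sketch applies the same filter to a made-up sample so that the effect is visible without a network connection. The sample text and its certificate body are placeholders, not real s_client output or a real certificate.

```shell
#!/usr/bin/env bash
# Made-up stand-in for s_client output (assumption); the certificate body
# is a placeholder string, not a valid certificate.
sample_output='CONNECTED(00000003)
depth=0 CN = example.com
---
Certificate chain
-----BEGIN CERTIFICATE-----
MIIBplaceholderplaceholderplaceholder
-----END CERTIFICATE-----
---
Server certificate
subject=CN = example.com'

# -n suppresses default printing; the address range with the p command
# prints only the lines from the BEGIN marker through the END marker.
bundle="$(printf '%s\n' "$sample_output" \
    | sed -ne '/-BEGIN CERTIFICATE-/,/-END CERTIFICATE-/p')"
printf '%s\n' "$bundle"
```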

1.6.1.3. Configuring a proxy for direct volume migration

If you are performing direct volume migration from a source cluster behind a proxy, you must configure an Stunnel proxy in the MigrationController custom resource (CR). Stunnel creates a transparent tunnel between the source and target clusters for the TCP connection without changing the certificates.

Direct volume migration supports only one proxy. The source cluster cannot access the route of the target cluster if the target cluster is also behind a proxy.

Prerequisites

- You must be logged in as a user with cluster-admin privileges on all clusters.

Procedure

- Log in to the cluster on which the MigrationController pod runs.

Get the MigrationController CR manifest:

$ oc get migrationcontroller <migration_controller> -n openshift-migration

Add the stunnel_tcp_proxy parameter, specifying the Stunnel proxy in the following format: http://<user_name>:<password>@<ip_address>:<port>.

- Save the manifest as migration-controller.yaml.

Apply the updated manifest:

$ oc replace -f migration-controller.yaml -n openshift-migration
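
The value of the stunnel_tcp_proxy parameter follows the pattern http://<user_name>:<password>@<ip_address>:<port>. As a minimal sketch, the value can be assembled from its parts before you paste it into the manifest. The variable names and sample values below are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical sample values (assumption); replace with your proxy details.
PROXY_USER="proxyuser"
PROXY_PASSWORD="changeme"
PROXY_HOST="192.0.2.10"
PROXY_PORT="3128"

# Assemble the value in the http://<user_name>:<password>@<ip_address>:<port> form.
STUNNEL_TCP_PROXY="$(printf 'http://%s:%s@%s:%s' \
    "$PROXY_USER" "$PROXY_PASSWORD" "$PROXY_HOST" "$PROXY_PORT")"
echo "$STUNNEL_TCP_PROXY"
```

If the user name or password contains characters such as @ or :, they generally need to be percent-encoded so that the URL parses as intended.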

1.6.1.4. Writing an Ansible playbook for a migration hook

You can write an Ansible playbook to use as a migration hook. The hook is added to a migration plan by using the MTC web console or by specifying values for the spec.hooks parameters in the MigPlan custom resource (CR) manifest.

The Ansible playbook is mounted onto a hook container as a config map. The hook container runs as a job, using the cluster, service account, and namespace specified in the MigPlan CR. The hook container uses a specified service account token so that the tasks do not require authentication before they run in the cluster.

1.6.1.4.1. Ansible modules

You can use the Ansible shell module to run oc commands.

Example shell module

- hosts: localhost
  gather_facts: false
  tasks:
  - name: get pod name
    shell: oc get po --all-namespaces
You can use kubernetes.core modules, such as k8s_info, to interact with Kubernetes resources.

Example k8s_facts module