This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

CLI tools

Learning how to use the command-line tools for OpenShift Container Platform

Abstract

Chapter 1. OpenShift Container Platform CLI tools overview

A user performs a range of operations while working on OpenShift Container Platform such as the following:

- Managing clusters

- Building, deploying, and managing applications

- Managing deployment processes

- Developing Operators

- Creating and maintaining Operator catalogs

OpenShift Container Platform offers a set of command-line interface (CLI) tools that simplify these tasks by enabling users to perform various administration and development operations from the terminal. These tools expose simple commands to manage the applications, as well as interact with each component of the system.

1.1. List of CLI tools

The following CLI tools are available in OpenShift Container Platform:

- OpenShift CLI (oc): This is the most commonly used CLI tool by OpenShift Container Platform users. It helps both cluster administrators and developers to perform end-to-end operations across OpenShift Container Platform using the terminal. Unlike the web console, it allows the user to work directly with the project source code using command scripts.

- Knative CLI (kn): The Knative (kn) CLI tool provides simple and intuitive terminal commands that can be used to interact with OpenShift Serverless components, such as Knative Serving and Eventing.

- Pipelines CLI (tkn): OpenShift Pipelines is a continuous integration and continuous delivery (CI/CD) solution in OpenShift Container Platform, which internally uses Tekton. The tkn CLI tool provides simple and intuitive commands to interact with OpenShift Pipelines using the terminal.

- opm CLI: The opm CLI tool helps Operator developers and cluster administrators to create and maintain the catalogs of Operators from the terminal.

- Operator SDK: The Operator SDK, a component of the Operator Framework, provides a CLI tool that Operator developers can use to build, test, and deploy an Operator from the terminal. It simplifies the process of building Kubernetes-native applications, which can require deep, application-specific operational knowledge.

Chapter 2. OpenShift CLI (oc)

2.1. Getting started with the OpenShift CLI

2.1.1. About the OpenShift CLI

With the OpenShift command-line interface (CLI), the oc command, you can create applications and manage OpenShift Container Platform projects from a terminal. The OpenShift CLI is ideal in the following situations:

- Working directly with project source code

- Scripting OpenShift Container Platform operations

- Managing projects when bandwidth is restricted and the web console is unavailable

2.1.2. Installing the OpenShift CLI

You can install the OpenShift CLI (oc) either by downloading the binary or by using an RPM.

2.1.2.1. Installing the OpenShift CLI by downloading the binary

You can install the OpenShift CLI (oc) to interact with OpenShift Container Platform from a command-line interface. You can install oc on Linux, Windows, or macOS.

If you installed an earlier version of oc, you cannot use it to complete all of the commands in OpenShift Container Platform 4.9. Download and install the new version of oc.
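Before downloading, it can be useful to check whether an older oc binary is already on your PATH. The following is a minimal sketch of such a check; the helper name tool_status is hypothetical and is not part of oc.

```shell
#!/bin/sh
# tool_status is a hypothetical helper (not part of oc): it reports whether
# a command with the given name can be found on PATH.
tool_status() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found"
  else
    echo "missing"
  fi
}

# If oc is already installed, show which binary would run and its client version.
if [ "$(tool_status oc)" = "found" ]; then
  command -v oc
  oc version --client
else
  echo "oc is not on PATH yet"
fi
```

If the reported client version is older than the cluster version, replace the binary by following one of the installation procedures.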

Installing the OpenShift CLI on Linux

You can install the OpenShift CLI (oc) binary on Linux by using the following procedure.

Procedure

- Navigate to the OpenShift Container Platform downloads page on the Red Hat Customer Portal.

- Select the appropriate version in the Version drop-down menu.

- Click Download Now next to the OpenShift v4.9 Linux Client entry and save the file.

- Unpack the archive:

$ tar xvf <file>

- Place the oc binary in a directory that is on your PATH.

To check your PATH, execute the following command:

$ echo $PATH

After you install the OpenShift CLI, it is available using the oc command:

$ oc <command>

Installing the OpenShift CLI on Windows

You can install the OpenShift CLI (oc) binary on Windows by using the following procedure.

Procedure

- Navigate to the OpenShift Container Platform downloads page on the Red Hat Customer Portal.

- Select the appropriate version in the Version drop-down menu.

- Click Download Now next to the OpenShift v4.9 Windows Client entry and save the file.

- Unzip the archive with a ZIP program.

- Move the oc binary to a directory that is on your PATH.

To check your PATH, open the command prompt and execute the following command:

C:\> path

After you install the OpenShift CLI, it is available using the oc command:

C:\> oc <command>

Installing the OpenShift CLI on macOS

You can install the OpenShift CLI (oc) binary on macOS by using the following procedure.

Procedure

- Navigate to the OpenShift Container Platform downloads page on the Red Hat Customer Portal.

- Select the appropriate version in the Version drop-down menu.

- Click Download Now next to the OpenShift v4.9 MacOSX Client entry and save the file.

- Unpack and unzip the archive.

Move the

ocbinary to a directory on your PATH.To check your

PATH, open a terminal and execute the following command:echo $PATH

$ echo $PATHCopy to Clipboard Copied! Toggle word wrap Toggle overflow

After you install the OpenShift CLI, it is available using the oc command:

oc <command>

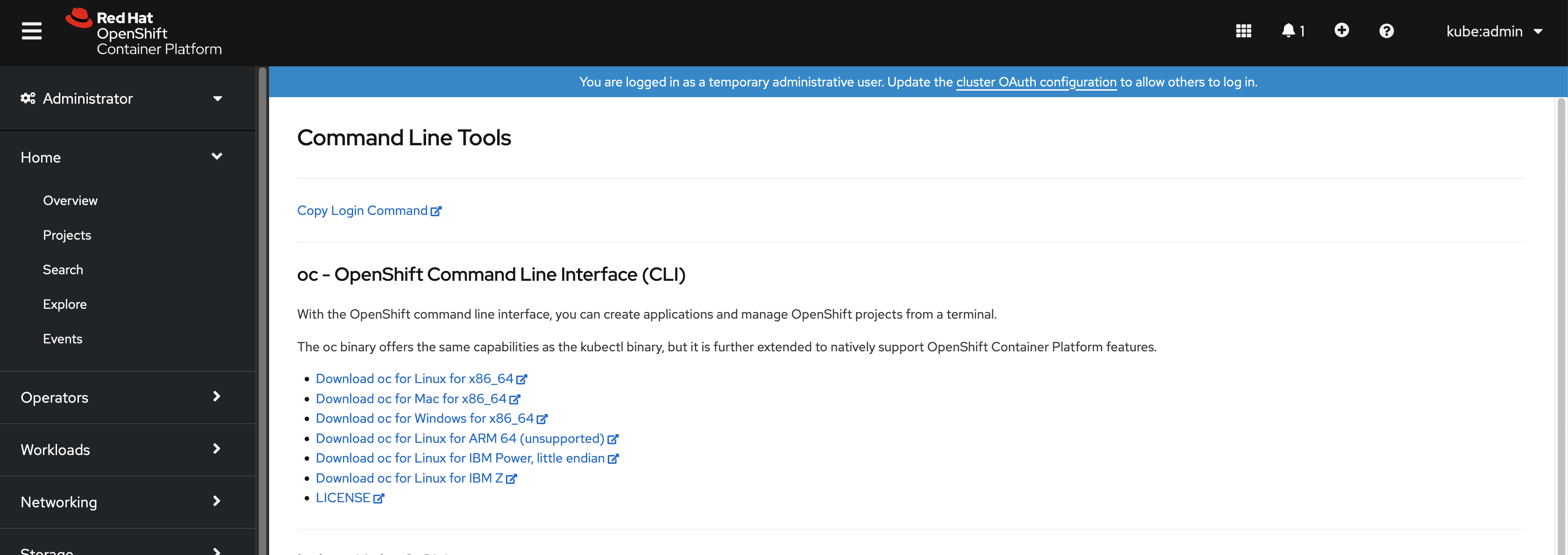

$ oc <command>2.1.2.2. Installing the OpenShift CLI by using the web console

You can install the OpenShift CLI (oc) to interact with OpenShift Container Platform from a web console. You can install oc on Linux, Windows, or macOS.

If you installed an earlier version of oc, you cannot use it to complete all of the commands in OpenShift Container Platform 4.9. Download and install the new version of oc.

2.1.2.2.1. Installing the OpenShift CLI on Linux using the web console

You can install the OpenShift CLI (oc) binary on Linux by using the following procedure.

Procedure

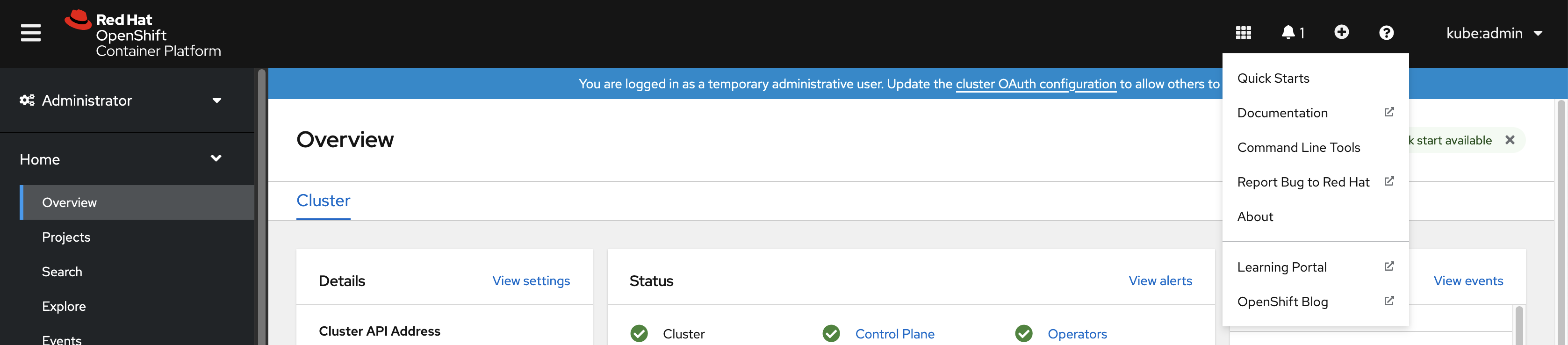

- From the web console, click ?.

- Click Command Line Tools.

- Select the appropriate oc binary for your Linux platform, and then click Download oc for Linux.

- Save the file.

- Unpack the archive:

$ tar xvf <file>

- Move the oc binary to a directory that is on your PATH.

To check your PATH, execute the following command:

$ echo $PATH

After you install the OpenShift CLI, it is available using the oc command:

$ oc <command>

2.1.2.2.2. Installing the OpenShift CLI on Windows using the web console

You can install the OpenShift CLI (oc) binary on Windows by using the following procedure.

Procedure

- From the web console, click ?.

- Click Command Line Tools.

- Select the oc binary for the Windows platform, and then click Download oc for Windows for x86_64.

- Save the file.

- Unzip the archive with a ZIP program.

- Move the oc binary to a directory that is on your PATH.

To check your PATH, open the command prompt and execute the following command:

C:\> path

After you install the OpenShift CLI, it is available using the oc command:

C:\> oc <command>

2.1.2.2.3. Installing the OpenShift CLI on macOS using the web console

You can install the OpenShift CLI (oc) binary on macOS by using the following procedure.

Procedure

- From the web console, click ?.

- Click Command Line Tools.

- Select the oc binary for the macOS platform, and then click Download oc for Mac for x86_64.

- Save the file.

- Unpack and unzip the archive.

- Move the oc binary to a directory on your PATH.

To check your PATH, open a terminal and execute the following command:

$ echo $PATH

After you install the OpenShift CLI, it is available using the oc command:

$ oc <command>

2.1.2.3. Installing the OpenShift CLI by using an RPM

For Red Hat Enterprise Linux (RHEL), you can install the OpenShift CLI (oc) as an RPM if you have an active OpenShift Container Platform subscription on your Red Hat account.

Prerequisites

- You must have root or sudo privileges.

Procedure

- Register with Red Hat Subscription Manager:

# subscription-manager register

- Pull the latest subscription data:

# subscription-manager refresh

- List the available subscriptions:

# subscription-manager list --available --matches '*OpenShift*'

- In the output for the previous command, find the pool ID for an OpenShift Container Platform subscription and attach the subscription to the registered system:

# subscription-manager attach --pool=<pool_id>

- Enable the repositories required by OpenShift Container Platform 4.9.

For Red Hat Enterprise Linux 8:

# subscription-manager repos --enable="rhocp-4.9-for-rhel-8-x86_64-rpms"

For Red Hat Enterprise Linux 7:

# subscription-manager repos --enable="rhel-7-server-ose-4.9-rpms"

- Install the openshift-clients package:

# yum install openshift-clients

After you install the CLI, it is available using the oc command:

$ oc <command>

2.1.2.4. Installing the OpenShift CLI by using Homebrew

For macOS, you can install the OpenShift CLI (oc) by using the Homebrew package manager.

Prerequisites

- You must have Homebrew (brew) installed.

Procedure

- Run the following command to install the openshift-cli package:

$ brew install openshift-cli

2.1.3. Logging in to the OpenShift CLI

You can log in to the OpenShift CLI (oc) to access and manage your cluster.

Prerequisites

- You must have access to an OpenShift Container Platform cluster.

- You must have installed the OpenShift CLI (oc).

To access a cluster that is accessible only over an HTTP proxy server, you can set the HTTP_PROXY, HTTPS_PROXY and NO_PROXY variables. These environment variables are respected by the oc CLI so that all communication with the cluster goes through the HTTP proxy.

Authentication headers are sent only when using HTTPS transport.
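As a minimal sketch, the proxy variables can be exported in the shell before running oc. The proxy address and the NO_PROXY entries below are placeholder assumptions; substitute the values for your own environment.

```shell
#!/bin/sh
# Hypothetical proxy address and exclusions; replace with your own values.
export HTTP_PROXY="http://proxy.example.com:3128"
export HTTPS_PROXY="http://proxy.example.com:3128"
export NO_PROXY="localhost,127.0.0.1,.cluster.local"

# Show the proxy settings that oc and other tools honoring these
# variables would see in this shell session.
env | grep -i '_proxy=' | sort
```

Exported variables apply only to the current shell session and its child processes. To keep them across sessions, add the export lines to your shell startup file.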

Procedure

- Enter the oc login command and pass in a user name:

$ oc login -u user1

- When prompted, enter the required information.

If you are logged in to the web console, you can generate an oc login command that includes your token and server information. You can use the command to log in to the OpenShift Container Platform CLI without the interactive prompts. To generate the command, select Copy login command from the username drop-down menu at the top right of the web console.
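The generated command typically passes the token and the server URL as options. The sketch below only prints a command of that shape so that it can be reviewed before use; login_command is a hypothetical helper, and the server URL and token are placeholders.

```shell
#!/bin/sh
# login_command is a hypothetical helper: it prints a non-interactive
# login command for a given server URL and token without running it.
login_command() {
  server="$1"
  token="$2"
  printf 'oc login --token=%s --server=%s\n' "$token" "$server"
}

# Placeholder values; a real token comes from "Copy login command".
login_command "https://openshift.example.com:6443" "<token>"
```

Treat the token like a password: avoid committing it to scripts or leaving it in shared shell history.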

You can now create a project or issue other commands for managing your cluster.

2.1.4. Using the OpenShift CLI

Review the following sections to learn how to complete common tasks using the CLI.

2.1.4.1. Creating a project

Use the oc new-project command to create a new project.

$ oc new-project my-project

Example output

Now using project "my-project" on server "https://openshift.example.com:6443".

2.1.4.2. Creating a new app

Use the oc new-app command to create a new application.

$ oc new-app https://github.com/sclorg/cakephp-ex

Example output

--> Found image 40de956 (9 days old) in imagestream "openshift/php" under tag "7.2" for "php"

...

Run 'oc status' to view your app.

2.1.4.3. Viewing pods

Use the oc get pods command to view the pods for the current project.

When you run oc inside a pod and do not specify a namespace, the namespace of the pod is used by default.

$ oc get pods -o wide

Example output

NAME                  READY   STATUS      RESTARTS   AGE     IP            NODE                           NOMINATED NODE
cakephp-ex-1-build    0/1     Completed   0          5m45s   10.131.0.10   ip-10-0-141-74.ec2.internal    <none>
cakephp-ex-1-deploy   0/1     Completed   0          3m44s   10.129.2.9    ip-10-0-147-65.ec2.internal    <none>
cakephp-ex-1-ktz97    1/1     Running     0          3m33s   10.128.2.11   ip-10-0-168-105.ec2.internal   <none>

2.1.4.4. Viewing pod logs

Use the oc logs command to view logs for a particular pod.

$ oc logs cakephp-ex-1-deploy

Example output

--> Scaling cakephp-ex-1 to 1

--> Success

2.1.4.5. Viewing the current project

Use the oc project command to view the current project.

$ oc project

Example output

Using project "my-project" on server "https://openshift.example.com:6443".

2.1.4.6. Viewing the status for the current project

Use the oc status command to view information about the current project, such as services, deployments, and build configs.

$ oc status

2.1.4.7. Listing supported API resources

Use the oc api-resources command to view the list of supported API resources on the server.

$ oc api-resources

Example output

NAME                SHORTNAMES   APIGROUP   NAMESPACED   KIND
bindings                                    true         Binding
componentstatuses   cs                      false        ComponentStatus
configmaps          cm                      true         ConfigMap
...

2.1.5. Getting help

You can get help with CLI commands and OpenShift Container Platform resources in the following ways.

- Use oc help to get a list and description of all available CLI commands:

Example: Get general help for the CLI

$ oc help

- Use the --help flag to get help about a specific CLI command:

Example: Get help for the oc create command

$ oc create --help

- Use the oc explain command to view the description and fields for a particular resource:

Example: View documentation for the Pod resource

$ oc explain pods

2.1.6. Logging out of the OpenShift CLI

You can log out of the OpenShift CLI to end your current session.

Use the oc logout command.

$ oc logout

Example output

Logged "user1" out on "https://openshift.example.com"

This deletes the saved authentication token from the server and removes it from your configuration file.

2.2. Configuring the OpenShift CLI

2.2.1. Enabling tab completion

You can enable tab completion for the Bash or Zsh shells.

2.2.1.1. Enabling tab completion for Bash

After you install the OpenShift CLI (oc), you can enable tab completion to automatically complete oc commands or suggest options when you press Tab. The following procedure enables tab completion for the Bash shell.

Prerequisites

- You must have the OpenShift CLI (oc) installed.

- You must have the package bash-completion installed.

Procedure

- Save the Bash completion code to a file:

$ oc completion bash > oc_bash_completion

- Copy the file to /etc/bash_completion.d/:

$ sudo cp oc_bash_completion /etc/bash_completion.d/

You can also save the file to a local directory and source it from your .bashrc file instead.

Tab completion is enabled when you open a new terminal.
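The local-directory alternative can be sketched as follows. The helper add_source_line and the file name .oc_bash_completion are hypothetical; the helper appends a source line to an rc file only if that line is not already present, so it is safe to run more than once.

```shell
#!/bin/sh
# add_source_line is a hypothetical helper: it appends a "source" line for
# the completion file to the given rc file unless it is already there.
add_source_line() {
  completion_file="$1"
  rc_file="$2"
  line="source $completion_file"
  touch "$rc_file"
  if ! grep -qxF "$line" "$rc_file"; then
    echo "$line" >> "$rc_file"
  fi
}

# Typical use (requires oc to be installed):
#   oc completion bash > "$HOME/.oc_bash_completion"
#   add_source_line "$HOME/.oc_bash_completion" "$HOME/.bashrc"
```

This approach does not need sudo, because nothing is written outside your home directory.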

2.2.1.2. Enabling tab completion for Zsh

After you install the OpenShift CLI (oc), you can enable tab completion to automatically complete oc commands or suggest options when you press Tab. The following procedure enables tab completion for the Zsh shell.

Prerequisites

- You must have the OpenShift CLI (oc) installed.

Procedure

- To add tab completion for oc to your .zshrc file, run the following command:

Tab completion is enabled when you open a new terminal.
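One way to do this is sketched below, under the assumption that the Zsh completion system (compinit) is already initialized in your .zshrc. The helper add_oc_zsh_completion is hypothetical; the snippet it appends loads the output of oc completion zsh only when oc is available.

```shell
#!/bin/sh
# add_oc_zsh_completion is a hypothetical helper: it appends a snippet to
# the given rc file that loads oc completion when oc is available.
add_oc_zsh_completion() {
  rc_file="$1"
  # The quoted 'EOF' keeps the snippet literal, so nothing is expanded now.
  cat >> "$rc_file" <<'EOF'
if [ $commands[oc] ]; then
  source <(oc completion zsh)
  compdef _oc oc
fi
EOF
}

# Typical use:
#   add_oc_zsh_completion "$HOME/.zshrc"
```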

2.3. Managing CLI profiles

A CLI configuration file allows you to configure different profiles, or contexts, for use with the CLI tools. A context consists of user authentication and OpenShift Container Platform server information associated with a nickname.

2.3.1. About switches between CLI profiles

Contexts allow you to easily switch between multiple users across multiple OpenShift Container Platform servers, or clusters, when using CLI operations. Nicknames make managing CLI configurations easier by providing short-hand references to contexts, user credentials, and cluster details. After logging in with the CLI for the first time, OpenShift Container Platform creates a ~/.kube/config file if one does not already exist. As more authentication and connection details are provided to the CLI, either automatically during an oc login operation or by manually configuring CLI profiles, the updated information is stored in the configuration file:

CLI config file

- (1) The clusters section defines connection details for OpenShift Container Platform clusters, including the address for their master server. In this example, one cluster is nicknamed openshift1.example.com:8443 and another is nicknamed openshift2.example.com:8443.

- (2) This contexts section defines two contexts: one nicknamed alice-project/openshift1.example.com:8443/alice, using the alice-project project, openshift1.example.com:8443 cluster, and alice user, and another nicknamed joe-project/openshift1.example.com:8443/alice, using the joe-project project, openshift1.example.com:8443 cluster and alice user.

- (3) The current-context parameter shows that the joe-project/openshift1.example.com:8443/alice context is currently in use, allowing the alice user to work in the joe-project project on the openshift1.example.com:8443 cluster.

- (4) The users section defines user credentials. In this example, the user nickname alice/openshift1.example.com:8443 uses an access token.
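As an illustration only, a file with the shape that these callouts describe might look like the following. The sketch writes it to a temporary file rather than to ~/.kube/config; the token is a placeholder and the layout is an assumption based on the standard kubeconfig format, not a copy of any real configuration.

```shell
#!/bin/sh
# Write an illustrative config to a temporary file, not to ~/.kube/config.
cfg="$(mktemp)"
cat > "$cfg" <<'EOF'
apiVersion: v1
kind: Config
clusters:                      # callout 1
- cluster:
    server: https://openshift1.example.com:8443
  name: openshift1.example.com:8443
- cluster:
    server: https://openshift2.example.com:8443
  name: openshift2.example.com:8443
contexts:                      # callout 2
- context:
    cluster: openshift1.example.com:8443
    namespace: alice-project
    user: alice/openshift1.example.com:8443
  name: alice-project/openshift1.example.com:8443/alice
- context:
    cluster: openshift1.example.com:8443
    namespace: joe-project
    user: alice/openshift1.example.com:8443
  name: joe-project/openshift1.example.com:8443/alice
current-context: joe-project/openshift1.example.com:8443/alice   # callout 3
preferences: {}
users:                         # callout 4
- name: alice/openshift1.example.com:8443
  user:
    token: <token>
EOF

# Print the line that records the active context.
grep '^current-context:' "$cfg"
```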

The CLI can support multiple configuration files which are loaded at runtime and merged together along with any override options specified from the command line. After you are logged in, you can use the oc status or oc project command to verify your current working environment:

Verify the current working environment

$ oc status

List the current project

$ oc project

Example output

Using project "joe-project" from context named "joe-project/openshift1.example.com:8443/alice" on server "https://openshift1.example.com:8443".

You can run the oc login command again and supply the required information during the interactive process, to log in using any other combination of user credentials and cluster details. A context is constructed based on the supplied information if one does not already exist. If you are already logged in and want to switch to another project the current user already has access to, use the oc project command and enter the name of the project:

$ oc project alice-project

Example output

Now using project "alice-project" on server "https://openshift1.example.com:8443".

At any time, you can use the oc config view command to view your current CLI configuration, as seen in the output. Additional CLI configuration commands are also available for more advanced usage.

If you have access to administrator credentials but are no longer logged in as the default system user system:admin, you can log back in as this user at any time as long as the credentials are still present in your CLI config file. The following command logs in and switches to the default project:

$ oc login -u system:admin -n default

2.3.2. Manual configuration of CLI profiles

This section covers more advanced usage of CLI configurations. In most situations, you can use the oc login and oc project commands to log in and switch between contexts and projects.

If you want to manually configure your CLI config files, you can use the oc config command instead of directly modifying the files. The oc config command includes a number of helpful sub-commands for this purpose:

| Subcommand | Usage |
|---|---|
| set-cluster | Sets a cluster entry in the CLI config file. If the referenced cluster nickname already exists, the specified information is merged in. oc config set-cluster <cluster_nickname> [--server=<master_ip_or_fqdn>] [--certificate-authority=<path/to/certificate/authority>] [--api-version=<apiversion>] [--insecure-skip-tls-verify=true] |
| set-context | Sets a context entry in the CLI config file. If the referenced context nickname already exists, the specified information is merged in. oc config set-context <context_nickname> [--cluster=<cluster_nickname>] [--user=<user_nickname>] [--namespace=<namespace>] |
| use-context | Sets the current context using the specified context nickname. oc config use-context <context_nickname> |
| set | Sets an individual value in the CLI config file. oc config set <property_name> <property_value> |
| unset | Unsets individual values in the CLI config file. oc config unset <property_name> |
| view | Displays the merged CLI configuration currently in use: oc config view. Displays the result of the specified CLI config file: oc config view --config=<specific_filename> |

Example usage

- Log in as a user that uses an access token. This token is used by the alice user:

$ oc login https://openshift1.example.com --token=ns7yVhuRNpDM9cgzfhhxQ7bM5s7N2ZVrkZepSRf4LC0

- View the cluster entry automatically created:

$ oc config view

- Update the current context to have users log in to the desired namespace:

$ oc config set-context `oc config current-context` --namespace=<project_name>

- Examine the current context, to confirm that the changes are implemented:

$ oc whoami -c

All subsequent CLI operations use the new context, unless otherwise specified by overriding CLI options or until the context is switched.

2.3.3. Load and merge rules

When you issue CLI operations, the following rules determine the loading and merging order for the CLI configuration:

- CLI config files are retrieved from your workstation, using the following hierarchy and merge rules:

  - If the --config option is set, then only that file is loaded. The flag is set once and no merging takes place.

  - If the $KUBECONFIG environment variable is set, then it is used. The variable can be a list of paths, and if so the paths are merged together. When a value is modified, it is modified in the file that defines the stanza. When a value is created, it is created in the first file that exists. If no files in the chain exist, then it creates the last file in the list.

  - Otherwise, the ~/.kube/config file is used and no merging takes place.

- The context to use is determined based on the first match in the following flow:

  - The value of the --context option.

  - The current-context value from the CLI config file.

  - An empty value is allowed at this stage.

- The user and cluster to use is determined. At this point, you may or may not have a context; they are built based on the first match in the following flow, which is run once for the user and once for the cluster:

  - The value of the --user for user name and --cluster option for cluster name.

  - If the --context option is present, then use the context's value.

  - An empty value is allowed at this stage.

- The actual cluster information to use is determined. At this point, you may or may not have cluster information. Each piece of the cluster information is built based on the first match in the following flow:

  - The values of any of the following command line options:

    - --server

    - --api-version

    - --certificate-authority

    - --insecure-skip-tls-verify

  - If cluster information and a value for the attribute is present, then use it.

  - If you do not have a server location, then there is an error.

- The actual user information to use is determined. Users are built using the same rules as clusters, except that you can only have one authentication technique per user; conflicting techniques cause the operation to fail. Command line options take precedence over config file values. Valid command line options are:

  - --auth-path

  - --client-certificate

  - --client-key

  - --token

- For any information that is still missing, default values are used and prompts are given for additional information.
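The file-selection part of these rules can be sketched as a small shell function. config_sources is a hypothetical helper that only mirrors the precedence described above (an explicit --config value, then $KUBECONFIG, then ~/.kube/config); it does not perform any merging and is not how oc itself is implemented. It assumes the colon-separated path list used on Linux and macOS.

```shell
#!/bin/sh
# config_sources is a hypothetical helper that mirrors the file-selection
# rules: an explicit --config value wins, then $KUBECONFIG (assumed to be a
# colon-separated list), then ~/.kube/config. It prints one path per line.
config_sources() {
  explicit="$1"   # value of --config, or empty if not given
  if [ -n "$explicit" ]; then
    echo "$explicit"
  elif [ -n "${KUBECONFIG:-}" ]; then
    echo "$KUBECONFIG" | tr ':' '\n'
  else
    echo "$HOME/.kube/config"
  fi
}

# Example: two files listed in KUBECONFIG are both candidates for merging.
# The subshell keeps the assignment from leaking into the current session.
( KUBECONFIG="/tmp/dev-config:/tmp/prod-config"; config_sources "" )
```

Passing a non-empty first argument models the --config case, where only that single file is loaded.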

2.4. Extending the OpenShift CLI with plugins

You can write and install plugins to build on the default oc commands, allowing you to perform new and more complex tasks with the OpenShift Container Platform CLI.

2.4.1. Writing CLI plugins

You can write a plugin for the OpenShift Container Platform CLI in any programming language or script that allows you to write command-line commands. Note that you cannot use a plugin to overwrite an existing oc command.

Procedure

This procedure creates a simple Bash plugin that prints a message to the terminal when the oc foo command is issued.

- Create a file called oc-foo.

When naming your plugin file, keep the following in mind:

  - The file must begin with oc- or kubectl- to be recognized as a plugin.

  - The file name determines the command that invokes the plugin. For example, a plugin with the file name oc-foo-bar can be invoked by a command of oc foo bar. You can also use underscores if you want the command to contain dashes. For example, a plugin with the file name oc-foo_bar can be invoked by a command of oc foo-bar.

- Add the following contents to the file.

After you install this plugin for the OpenShift Container Platform CLI, it can be invoked using the oc foo command.
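A minimal sketch of such a plugin is shown below. The script body, including the optional version argument and the message text, is an illustrative assumption rather than required content; the sketch writes the file to a temporary directory and runs it through bash so that nothing on your PATH is modified.

```shell
#!/bin/sh
# Create the plugin in a temporary directory so nothing on PATH is touched.
plugin_dir="$(mktemp -d)"
cat > "$plugin_dir/oc-foo" <<'EOF'
#!/bin/bash

# Optional argument handling: "oc foo version" prints a version string.
if [[ "$1" == "version" ]]; then
    echo "1.0.0"
    exit 0
fi

# Default behavior: print a message to the terminal.
echo "I am a plugin named oc-foo"
EOF

# A plugin is an ordinary script, so it can be run through bash for a
# quick check before it is made executable and installed.
bash "$plugin_dir/oc-foo"
bash "$plugin_dir/oc-foo" version
```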

2.4.2. Installing and using CLI plugins

After you write a custom plugin for the OpenShift Container Platform CLI, you must install it to use the functionality that it provides.

Prerequisites

- You must have the oc CLI tool installed.

- You must have a CLI plugin file that begins with oc- or kubectl-.

Procedure

- If necessary, update the plugin file to be executable.

$ chmod +x <plugin_file>

- Place the file anywhere in your PATH, such as /usr/local/bin/.

$ sudo mv <plugin_file> /usr/local/bin/.

- Run oc plugin list to make sure that the plugin is listed.

$ oc plugin list

Example output

The following compatible plugins are available:

/usr/local/bin/<plugin_file>

If your plugin is not listed here, verify that the file begins with oc- or kubectl-, is executable, and is on your PATH.

- Invoke the new command or option introduced by the plugin.

For example, if you built and installed the kubectl-ns plugin from the Sample plugin repository, you can use the following command to view the current namespace.

$ oc ns

Note that the command to invoke the plugin depends on the plugin file name. For example, a plugin with the file name of oc-foo-bar is invoked by the oc foo bar command.
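The naming rule can be sketched as a small function that maps a plugin file name to the command that invokes it. plugin_command is a hypothetical helper written for illustration; it is not part of oc.

```shell
#!/bin/sh
# plugin_command is a hypothetical helper: given a plugin file name, it
# prints the oc command that invokes it. Dashes separate words, and
# underscores become dashes in the resulting command.
plugin_command() {
  name="$1"
  case "$name" in
    oc-*)      rest="${name#oc-}" ;;
    kubectl-*) rest="${name#kubectl-}" ;;
    *)         echo "not a plugin name: $name" >&2; return 1 ;;
  esac
  # First turn dashes into spaces, then underscores into dashes.
  echo "oc $(echo "$rest" | sed -e 's/-/ /g' -e 's/_/-/g')"
}

plugin_command oc-foo-bar    # prints: oc foo bar
plugin_command oc-foo_bar    # prints: oc foo-bar
plugin_command kubectl-ns    # prints: oc ns
```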

2.5. OpenShift CLI developer command reference

This reference provides descriptions and example commands for OpenShift CLI (oc) developer commands. For administrator commands, see the OpenShift CLI administrator command reference.

Run oc help to list all commands or run oc <command> --help to get additional details for a specific command.

2.5.1. OpenShift CLI (oc) developer commands

2.5.1.1. oc annotate

Update the annotations on a resource

Example usage

2.5.1.2. oc api-resources

Print the supported API resources on the server

Example usage

2.5.1.3. oc api-versions

Print the supported API versions on the server, in the form of "group/version"

Example usage

# Print the supported API versions

oc api-versions

2.5.1.4. oc apply

Apply a configuration to a resource by file name or stdin

Example usage

2.5.1.5. oc apply edit-last-applied

Edit latest last-applied-configuration annotations of a resource/object

Example usage

# Edit the last-applied-configuration annotations by type/name in YAML

oc apply edit-last-applied deployment/nginx

# Edit the last-applied-configuration annotations by file in JSON

oc apply edit-last-applied -f deploy.yaml -o json

2.5.1.6. oc apply set-last-applied

Set the last-applied-configuration annotation on a live object to match the contents of a file

Example usage

2.5.1.7. oc apply view-last-applied

View the latest last-applied-configuration annotations of a resource/object

Example usage

# View the last-applied-configuration annotations by type/name in YAML

oc apply view-last-applied deployment/nginx

# View the last-applied-configuration annotations by file in JSON

oc apply view-last-applied -f deploy.yaml -o json

2.5.1.8. oc attach

Attach to a running container

Example usage

2.5.1.9. oc auth can-i

Check whether an action is allowed

Example usage
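One common use is to gate a script step on a permission check. The sketch below relies on the command exiting with a non-zero status when the action is not allowed; the `can_create_pods` helper, the `OC` override variable, and the namespace `demo` are hypothetical.

```shell
#!/bin/sh
# OC is an assumed override hook; it defaults to the real oc client.
OC="${OC:-oc}"

# can_create_pods NAMESPACE: hypothetical helper.
# Succeeds only if the current user may create pods in NAMESPACE.
can_create_pods() {
    "$OC" auth can-i create pods --namespace "$1" >/dev/null 2>&1
}

# Usage:
#   if can_create_pods demo; then echo "allowed"; else echo "denied"; fi
```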

2.5.1.10. oc auth reconcile

Reconciles rules for RBAC role, role binding, cluster role, and cluster role binding objects

Example usage

# Reconcile RBAC resources from a file

oc auth reconcile -f my-rbac-rules.yaml

2.5.1.11. oc autoscale

Autoscale a deployment config, deployment, replica set, stateful set, or replication controller

Example usage

# Auto scale a deployment "foo", with the number of pods between 2 and 10, no target CPU utilization specified so a default autoscaling policy will be used

oc autoscale deployment foo --min=2 --max=10

# Auto scale a replication controller "foo", with the number of pods between 1 and 5, target CPU utilization at 80%

oc autoscale rc foo --max=5 --cpu-percent=80

2.5.1.12. oc cancel-build

Cancel running, pending, or new builds

Example usage

2.5.1.13. oc cluster-info

Display cluster information

Example usage

# Print the address of the control plane and cluster services

oc cluster-info

2.5.1.14. oc cluster-info dump

Dump relevant information for debugging and diagnosis

Example usage

2.5.1.15. oc completion

Output shell completion code for the specified shell (bash or zsh)

Example usage

2.5.1.16. oc config current-context

Display the current-context

Example usage

# Display the current-context

oc config current-context

2.5.1.17. oc config delete-cluster

Delete the specified cluster from the kubeconfig

Example usage

# Delete the minikube cluster

oc config delete-cluster minikube

2.5.1.18. oc config delete-context

Delete the specified context from the kubeconfig

Example usage

# Delete the context for the minikube cluster

oc config delete-context minikube

2.5.1.19. oc config delete-user

Delete the specified user from the kubeconfig

Example usage

# Delete the minikube user

oc config delete-user minikube

2.5.1.20. oc config get-clusters

Display clusters defined in the kubeconfig

Example usage

# List the clusters that oc knows about

oc config get-clusters

2.5.1.21. oc config get-contexts

Describe one or many contexts

Example usage

# List all the contexts in your kubeconfig file

oc config get-contexts

# Describe one context in your kubeconfig file

oc config get-contexts my-context

2.5.1.22. oc config get-users

Display users defined in the kubeconfig

Example usage

# List the users that oc knows about

oc config get-users

2.5.1.23. oc config rename-context

Rename a context from the kubeconfig file

Example usage

# Rename the context 'old-name' to 'new-name' in your kubeconfig file

oc config rename-context old-name new-name

2.5.1.24. oc config set

Set an individual value in a kubeconfig file

Example usage

2.5.1.25. oc config set-cluster

Set a cluster entry in kubeconfig

Example usage

2.5.1.26. oc config set-context

Set a context entry in kubeconfig

Example usage

# Set the user field on the gce context entry without touching other values

oc config set-context gce --user=cluster-admin

2.5.1.27. oc config set-credentials

Set a user entry in kubeconfig

Example usage

2.5.1.28. oc config unset

Unset an individual value in a kubeconfig file

Example usage

# Unset the current-context

oc config unset current-context

# Unset namespace in foo context

oc config unset contexts.foo.namespace

2.5.1.29. oc config use-context

Set the current-context in a kubeconfig file

Example usage

# Use the context for the minikube cluster

oc config use-context minikube

2.5.1.30. oc config view

Display merged kubeconfig settings or a specified kubeconfig file

Example usage

2.5.1.31. oc cp

Copy files and directories to and from containers

Example usage

2.5.1.32. oc create

Create a resource from a file or from stdin

Example usage

2.5.1.33. oc create build

Create a new build

Example usage

# Create a new build

oc create build myapp

2.5.1.34. oc create clusterresourcequota

Create a cluster resource quota

Example usage

# Create a cluster resource quota limited to 10 pods

oc create clusterresourcequota limit-bob --project-annotation-selector=openshift.io/requester=user-bob --hard=pods=10

2.5.1.35. oc create clusterrole

Create a cluster role

Example usage

2.5.1.36. oc create clusterrolebinding

Create a cluster role binding for a particular cluster role

Example usage

# Create a cluster role binding for user1, user2, and group1 using the cluster-admin cluster role

oc create clusterrolebinding cluster-admin --clusterrole=cluster-admin --user=user1 --user=user2 --group=group1

2.5.1.37. oc create configmap

Create a config map from a local file, directory or literal value

Example usage

2.5.1.38. oc create cronjob

Create a cron job with the specified name

Example usage

# Create a cron job

oc create cronjob my-job --image=busybox --schedule="*/1 * * * *"

# Create a cron job with a command

oc create cronjob my-job --image=busybox --schedule="*/1 * * * *" -- date

2.5.1.39. oc create deployment

Create a deployment with the specified name

Example usage

2.5.1.40. oc create deploymentconfig

Create a deployment config with default options that uses a given image

Example usage

# Create an nginx deployment config named my-nginx

oc create deploymentconfig my-nginx --image=nginx

2.5.1.41. oc create identity

Manually create an identity (only needed if automatic creation is disabled)

Example usage

# Create an identity with identity provider "acme_ldap" and the identity provider username "adamjones"

oc create identity acme_ldap:adamjones

2.5.1.42. oc create imagestream

Create a new empty image stream

Example usage

# Create a new image stream

oc create imagestream mysql

2.5.1.43. oc create imagestreamtag

Create a new image stream tag

Example usage

# Create a new image stream tag based on an image in a remote registry

oc create imagestreamtag mysql:latest --from-image=myregistry.local/mysql/mysql:5.0

2.5.1.44. oc create ingress

Create an ingress with the specified name

Example usage

2.5.1.45. oc create job

Create a job with the specified name

Example usage

2.5.1.46. oc create namespace

Create a namespace with the specified name

Example usage

# Create a new namespace named my-namespace

oc create namespace my-namespace

2.5.1.47. oc create poddisruptionbudget

Create a pod disruption budget with the specified name

Example usage

2.5.1.48. oc create priorityclass

Create a priority class with the specified name

Example usage

2.5.1.49. oc create quota

Create a quota with the specified name

Example usage

# Create a new resource quota named my-quota

oc create quota my-quota --hard=cpu=1,memory=1G,pods=2,services=3,replicationcontrollers=2,resourcequotas=1,secrets=5,persistentvolumeclaims=10

# Create a new resource quota named best-effort

oc create quota best-effort --hard=pods=100 --scopes=BestEffort

2.5.1.50. oc create role

Create a role with a single rule

Example usage

2.5.1.51. oc create rolebinding

Create a role binding for a particular role or cluster role

Example usage

# Create a role binding for user1, user2, and group1 using the admin cluster role

oc create rolebinding admin --clusterrole=admin --user=user1 --user=user2 --group=group1

2.5.1.52. oc create route edge

Create a route that uses edge TLS termination

Example usage

2.5.1.53. oc create route passthrough

Create a route that uses passthrough TLS termination

Example usage

2.5.1.54. oc create route reencrypt

Create a route that uses reencrypt TLS termination

Example usage

2.5.1.55. oc create secret docker-registry

Create a secret for use with a Docker registry

Example usage

# If you don't already have a .dockercfg file, you can create a dockercfg secret directly by using:

oc create secret docker-registry my-secret --docker-server=DOCKER_REGISTRY_SERVER --docker-username=DOCKER_USER --docker-password=DOCKER_PASSWORD --docker-email=DOCKER_EMAIL

# Create a new secret named my-secret from ~/.docker/config.json

oc create secret docker-registry my-secret --from-file=.dockerconfigjson=path/to/.docker/config.json

2.5.1.56. oc create secret generic

Create a secret from a local file, directory, or literal value

Example usage

2.5.1.57. oc create secret tls

Create a TLS secret

Example usage

# Create a new TLS secret named tls-secret with the given key pair

oc create secret tls tls-secret --cert=path/to/tls.cert --key=path/to/tls.key

2.5.1.58. oc create service clusterip

Create a ClusterIP service

Example usage

# Create a new ClusterIP service named my-cs

oc create service clusterip my-cs --tcp=5678:8080

# Create a new ClusterIP service named my-cs (in headless mode)

oc create service clusterip my-cs --clusterip="None"

2.5.1.59. oc create service externalname

Create an ExternalName service

Example usage

# Create a new ExternalName service named my-ns

oc create service externalname my-ns --external-name bar.com

2.5.1.60. oc create service loadbalancer

Create a LoadBalancer service

Example usage

# Create a new LoadBalancer service named my-lbs

oc create service loadbalancer my-lbs --tcp=5678:8080

2.5.1.61. oc create service nodeport

Create a NodePort service

Example usage

# Create a new NodePort service named my-ns

oc create service nodeport my-ns --tcp=5678:8080

2.5.1.62. oc create serviceaccount

Create a service account with the specified name

Example usage

# Create a new service account named my-service-account

oc create serviceaccount my-service-account

2.5.1.63. oc create user

Manually create a user (only needed if automatic creation is disabled)

Example usage

# Create a user with the username "ajones" and the display name "Adam Jones"

oc create user ajones --full-name="Adam Jones"

2.5.1.64. oc create useridentitymapping

Manually map an identity to a user

Example usage

# Map the identity "acme_ldap:adamjones" to the user "ajones"

oc create useridentitymapping acme_ldap:adamjones ajones

2.5.1.65. oc debug

Launch a new instance of a pod for debugging

Example usage

2.5.1.66. oc delete

Delete resources by file names, stdin, resources and names, or by resources and label selector

Example usage
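Of the selection modes listed above, deletion by label selector is the easiest to get wrong, so a hedged sketch may help. The `delete_by_label` helper, the `OC` override variable, and the label `name=myLabel` are illustrative assumptions.

```shell
#!/bin/sh
# OC is an assumed override hook; it defaults to the real oc client.
OC="${OC:-oc}"

# delete_by_label SELECTOR: hypothetical helper.
# Deletes every pod and service in the current project that matches SELECTOR.
delete_by_label() {
    "$OC" delete pods,services -l "$1"
}

# Usage:
#   delete_by_label name=myLabel
```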

2.5.1.67. oc describe

Show details of a specific resource or group of resources

Example usage

2.5.1.68. oc diff

Diff the live version against a would-be applied version

Example usage

# Diff resources included in pod.json

oc diff -f pod.json

# Diff file read from stdin

cat service.yaml | oc diff -f -

2.5.1.69. oc edit

Edit a resource on the server

Example usage

2.5.1.70. oc ex dockergc

Perform garbage collection to free space in docker storage

Example usage

# Perform garbage collection with the default settings

oc ex dockergc

2.5.1.71. oc exec

Execute a command in a container

Example usage

2.5.1.72. oc explain

Get documentation for a resource

Example usage

# Get the documentation of the resource and its fields

oc explain pods

# Get the documentation of a specific field of a resource

oc explain pods.spec.containers

2.5.1.73. oc expose

Expose a replicated application as a service or route

Example usage

2.5.1.74. oc extract

Extract secrets or config maps to disk

Example usage

2.5.1.75. oc get

Display one or many resources

Example usage
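A minimal sketch of listing pods, optionally limited to one namespace, might look like the following. The `list_pods` helper, the `OC` override variable, and the namespace `demo` are assumptions made for illustration.

```shell
#!/bin/sh
# OC is an assumed override hook; it defaults to the real oc client.
OC="${OC:-oc}"

# list_pods [NAMESPACE]: hypothetical helper.
# Lists pods with extra columns such as the node name (-o wide).
list_pods() {
    if [ -n "${1:-}" ]; then
        "$OC" get pods -o wide --namespace "$1"
    else
        "$OC" get pods -o wide
    fi
}

# Usage:
#   list_pods          # pods in the current project
#   list_pods demo     # pods in the "demo" namespace
```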

2.5.1.76. oc idle

Idle scalable resources

Example usage

# Idle the scalable controllers associated with the services listed in to-idle.txt

$ oc idle --resource-names-file to-idle.txt

2.5.1.77. oc image append

Add layers to images and push them to a registry

Example usage

2.5.1.78. oc image extract

Copy files from an image to the file system

Example usage

2.5.1.79. oc image info

Display information about an image

Example usage

2.5.1.80. oc image mirror

Mirror images from one repository to another

Example usage

2.5.1.81. oc import-image

Import images from a container image registry

Example usage

2.5.1.82. oc kustomize

Build a kustomization target from a directory or URL.

Example usage

2.5.1.83. oc label

Update the labels on a resource

Example usage

2.5.1.84. oc login

Log in to a server

Example usage

2.5.1.85. oc logout

End the current server session

Example usage

# Log out

oc logout

2.5.1.86. oc logs

Print the logs for a container in a pod

Example usage

2.5.1.87. oc new-app

Create a new application

Example usage

2.5.1.88. oc new-build

Create a new build configuration

Example usage

2.5.1.89. oc new-project

Request a new project

Example usage

# Create a new project with minimal information

oc new-project web-team-dev

# Create a new project with a display name and description

oc new-project web-team-dev --display-name="Web Team Development" --description="Development project for the web team."

2.5.1.90. oc observe

Observe changes to resources and react to them (experimental)

Example usage

2.5.1.91. oc patch

Update fields of a resource

Example usage

2.5.1.92. oc policy add-role-to-user

Add a role to users or service accounts for the current project

Example usage

# Add the 'view' role to user1 for the current project

oc policy add-role-to-user view user1

# Add the 'edit' role to serviceaccount1 for the current project

oc policy add-role-to-user edit -z serviceaccount1

2.5.1.93. oc policy scc-review

Check which service account can create a pod

Example usage

2.5.1.94. oc policy scc-subject-review

Check whether a user or a service account can create a pod

Example usage

2.5.1.95. oc port-forward

Forward one or more local ports to a pod

Example usage

2.5.1.96. oc process

Process a template into a list of resources

Example usage

2.5.1.97. oc project

Switch to another project

Example usage

# Switch to the 'myapp' project

oc project myapp

# Display the project currently in use

oc project

2.5.1.98. oc projects

Display existing projects

Example usage

# List all projects

oc projects

2.5.1.99. oc proxy

Run a proxy to the Kubernetes API server

Example usage

2.5.1.100. oc registry info

Print information about the integrated registry

Example usage

# Display information about the integrated registry

oc registry info

2.5.1.101. oc registry login

Log in to the integrated registry

Example usage

2.5.1.102. oc replace

Replace a resource by file name or stdin

Example usage

2.5.1.103. oc rollback

Revert part of an application back to a previous deployment

Example usage

2.5.1.104. oc rollout cancel

Cancel the in-progress deployment

Example usage

# Cancel the in-progress deployment based on 'nginx'

oc rollout cancel dc/nginx

2.5.1.105. oc rollout history

View rollout history

Example usage

# View the rollout history of a deployment

oc rollout history dc/nginx

# View the details of deployment revision 3

oc rollout history dc/nginx --revision=3

2.5.1.106. oc rollout latest

Start a new rollout for a deployment config with the latest state from its triggers

Example usage

# Start a new rollout based on the latest images defined in the image change triggers

oc rollout latest dc/nginx

# Print the rolled out deployment config

oc rollout latest dc/nginx -o json

2.5.1.107. oc rollout pause

Mark the provided resource as paused

Example usage

# Mark the nginx deployment as paused. Any current state of

# the deployment will continue its function, new updates to the deployment will not

# have an effect as long as the deployment is paused

oc rollout pause dc/nginx

2.5.1.108. oc rollout restart

Restart a resource

Example usage

# Restart a deployment

oc rollout restart deployment/nginx

# Restart a daemon set

oc rollout restart daemonset/abc

2.5.1.109. oc rollout resume

Resume a paused resource

Example usage

# Resume an already paused deployment

oc rollout resume dc/nginx

2.5.1.110. oc rollout retry

Retry the latest failed rollout

Example usage

# Retry the latest failed deployment based on 'frontend'

# The deployer pod and any hook pods are deleted for the latest failed deployment

oc rollout retry dc/frontend

2.5.1.111. oc rollout status

Show the status of the rollout

Example usage

# Watch the status of the latest rollout

oc rollout status dc/nginx

2.5.1.112. oc rollout undo

Undo a previous rollout

Example usage

# Roll back to the previous deployment

oc rollout undo dc/nginx

# Roll back to deployment revision 3. The replication controller for that version must exist

oc rollout undo dc/nginx --to-revision=3

2.5.1.113. oc rsh

Start a shell session in a container

Example usage

2.5.1.114. oc rsync

Copy files between a local file system and a pod

Example usage

# Synchronize a local directory with a pod directory

oc rsync ./local/dir/ POD:/remote/dir

# Synchronize a pod directory with a local directory

oc rsync POD:/remote/dir/ ./local/dir

2.5.1.115. oc run

Run a particular image on the cluster

Example usage

2.5.1.116. oc scale

Set a new size for a deployment, replica set, or replication controller

Example usage
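As a rough sketch, scaling a resource to a fixed replica count can be wrapped as follows. The `scale_to` helper, the `OC` override variable, and the resource name `deployment/frontend` are hypothetical.

```shell
#!/bin/sh
# OC is an assumed override hook; it defaults to the real oc client.
OC="${OC:-oc}"

# scale_to RESOURCE COUNT: hypothetical helper.
# RESOURCE is given as type/name, for example deployment/frontend or rc/foo.
scale_to() {
    "$OC" scale --replicas="$2" "$1"
}

# Usage:
#   scale_to deployment/frontend 3
```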

2.5.1.117. oc secrets link

Link secrets to a service account

Example usage

2.5.1.118. oc secrets unlink

Detach secrets from a service account

Example usage

# Unlink a secret currently associated with a service account

oc secrets unlink serviceaccount-name secret-name another-secret-name ...

2.5.1.119. oc serviceaccounts create-kubeconfig

Generate a kubeconfig file for a service account

Example usage

# Create a kubeconfig file for service account 'default'

oc serviceaccounts create-kubeconfig 'default' > default.kubeconfig

2.5.1.120. oc serviceaccounts get-token

Get a token assigned to a service account

Example usage

# Get the service account token from service account 'default'

oc serviceaccounts get-token 'default'

2.5.1.121. oc serviceaccounts new-token

Generate a new token for a service account

Example usage

2.5.1.122. oc set build-hook

Update a build hook on a build config

Example usage

2.5.1.123. oc set build-secret

Update a build secret on a build config

Example usage

2.5.1.124. oc set data

Update the data within a config map or secret

Example usage

2.5.1.125. oc set deployment-hook

Update a deployment hook on a deployment config

Example usage

2.5.1.126. oc set env

Update environment variables on a pod template

Example usage

2.5.1.127. oc set image

Update the image of a pod template

Example usage

2.5.1.128. oc set image-lookup

Change how images are resolved when deploying applications

Example usage

2.5.1.129. oc set probe

Update a probe on a pod template

Example usage

2.5.1.130. oc set resources

Update resource requests/limits on objects with pod templates

Example usage

2.5.1.131. oc set route-backends

Update the backends for a route

Example usage

2.5.1.132. oc set selector

Set the selector on a resource

Example usage

# Set the labels and selector before creating a deployment/service pair.

oc create service clusterip my-svc --clusterip="None" -o yaml --dry-run | oc set selector --local -f - 'environment=qa' -o yaml | oc create -f -

oc create deployment my-dep -o yaml --dry-run | oc label --local -f - environment=qa -o yaml | oc create -f -

2.5.1.133. oc set serviceaccount

Update the service account of a resource

Example usage

# Set deployment nginx-deployment's service account to serviceaccount1

oc set serviceaccount deployment nginx-deployment serviceaccount1

# Print the result (in YAML format) of updated nginx deployment with service account from a local file, without hitting the API server

oc set sa -f nginx-deployment.yaml serviceaccount1 --local --dry-run -o yaml

2.5.1.134. oc set subject

Update the user, group, or service account in a role binding or cluster role binding

Example usage

2.5.1.135. oc set triggers

Update the triggers on one or more objects

Example usage

2.5.1.136. oc set volumes

Update volumes on a pod template

Example usage

2.5.1.137. oc start-build

Start a new build

Example usage

2.5.1.138. oc status

Show an overview of the current project

Example usage

2.5.1.139. oc tag

Tag existing images into image streams

Example usage

2.5.1.140. oc version

Print the client and server version information

Example usage

2.5.1.141. oc wait

Experimental: Wait for a specific condition on one or many resources

Example usage

2.5.1.142. oc whoami

Return information about the current session

Example usage

# Display the currently authenticated user

oc whoami

2.6. OpenShift CLI administrator command reference

This reference provides descriptions and example commands for OpenShift CLI (oc) administrator commands. You must have cluster-admin or equivalent permissions to use these commands.

For developer commands, see the OpenShift CLI developer command reference.

Run oc adm -h to list all administrator commands or run oc <command> --help to get additional details for a specific command.

2.6.1. OpenShift CLI (oc) administrator commands

2.6.1.1. oc adm build-chain

Output the inputs and dependencies of your builds

Example usage

2.6.1.2. oc adm catalog mirror

Mirror an operator-registry catalog

Example usage

2.6.1.3. oc adm certificate approve

Approve a certificate signing request

Example usage

# Approve CSR 'csr-sqgzp'

oc adm certificate approve csr-sqgzp

2.6.1.4. oc adm certificate deny

Deny a certificate signing request

Example usage

# Deny CSR 'csr-sqgzp'

oc adm certificate deny csr-sqgzp

2.6.1.5. oc adm completion

Output shell completion code for the specified shell (bash or zsh)

Example usage

2.6.1.6. oc adm config current-context

Display the current-context

Example usage

# Display the current-context

oc config current-context

2.6.1.7. oc adm config delete-cluster

Delete the specified cluster from the kubeconfig

Example usage

# Delete the minikube cluster

oc config delete-cluster minikube

2.6.1.8. oc adm config delete-context

Delete the specified context from the kubeconfig

Example usage

# Delete the context for the minikube cluster

oc config delete-context minikube

2.6.1.9. oc adm config delete-user

Delete the specified user from the kubeconfig

Example usage

# Delete the minikube user

oc config delete-user minikube

2.6.1.10. oc adm config get-clusters

Display clusters defined in the kubeconfig

Example usage

# List the clusters that oc knows about

oc config get-clusters

2.6.1.11. oc adm config get-contexts

Describe one or many contexts

Example usage

# List all the contexts in your kubeconfig file

oc config get-contexts

# Describe one context in your kubeconfig file

oc config get-contexts my-context

2.6.1.12. oc adm config get-users

Display users defined in the kubeconfig

Example usage

# List the users that oc knows about

oc config get-users

2.6.1.13. oc adm config rename-context

Rename a context from the kubeconfig file

Example usage

# Rename the context 'old-name' to 'new-name' in your kubeconfig file

oc config rename-context old-name new-name

2.6.1.14. oc adm config set

Set an individual value in a kubeconfig file

Example usage

2.6.1.15. oc adm config set-cluster

Set a cluster entry in kubeconfig

Example usage

2.6.1.16. oc adm config set-context

Set a context entry in kubeconfig

Example usage

# Set the user field on the gce context entry without touching other values

oc config set-context gce --user=cluster-admin2.6.1.17. oc adm config set-credentials

Set a user entry in kubeconfig

Example usage

2.6.1.18. oc adm config unset

Unset an individual value in a kubeconfig file

Example usage

# Unset the current-context
oc config unset current-context

# Unset namespace in foo context
oc config unset contexts.foo.namespace

2.6.1.19. oc adm config use-context

Set the current-context in a kubeconfig file

Example usage

# Use the context for the minikube cluster
oc config use-context minikube

2.6.1.20. oc adm config view

Display merged kubeconfig settings or a specified kubeconfig file

Example usage
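
The following sketch shows three common ways to inspect a kubeconfig. The `run` helper is a hypothetical wrapper that prints each command unless `DRY_RUN=0` is set; the `--minify` and `--raw` flags are the ones the underlying `config view` command is understood to accept.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command; run it only when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi; }

show_kubeconfig() {
  # Merged settings from all kubeconfig sources, with secrets redacted.
  run oc config view
  # Only the entries used by the current context.
  run oc config view --minify
  # Include raw certificate data and secrets; treat the output as sensitive.
  run oc config view --raw
}

show_kubeconfig
```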

2.6.1.21. oc adm cordon

Mark node as unschedulable

Example usage

# Mark node "foo" as unschedulable
oc adm cordon foo

2.6.1.22. oc adm create-bootstrap-project-template

Create a bootstrap project template

Example usage

# Output a bootstrap project template in YAML format to stdout
oc adm create-bootstrap-project-template -o yaml

2.6.1.23. oc adm create-error-template

Create an error page template

Example usage

# Output a template for the error page to stdout
oc adm create-error-template

2.6.1.24. oc adm create-login-template

Create a login template

Example usage

# Output a template for the login page to stdout
oc adm create-login-template

2.6.1.25. oc adm create-provider-selection-template

Create a provider selection template

Example usage

# Output a template for the provider selection page to stdout
oc adm create-provider-selection-template

2.6.1.26. oc adm drain

Drain node in preparation for maintenance

Example usage

# Drain node "foo", even if there are pods not managed by a replication controller, replica set, job, daemon set or stateful set on it
oc adm drain foo --force

# As above, but abort if there are pods not managed by a replication controller, replica set, job, daemon set or stateful set, and use a grace period of 15 minutes
oc adm drain foo --grace-period=900

2.6.1.27. oc adm groups add-users

Add users to a group

Example usage

# Add user1 and user2 to my-group
oc adm groups add-users my-group user1 user2

2.6.1.28. oc adm groups new

Create a new group

Example usage
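
A minimal sketch of creating a group, with or without initial members. The group and user names are placeholders, and `run` is a hypothetical wrapper that prints each command unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command; run it only when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi; }

create_group() {
  local group=$1
  shift
  # Create the group; any remaining arguments become its initial members.
  run oc adm groups new "$group" "$@"
}

# An empty group, then a group with two members (placeholder names).
create_group empty-group
create_group my-group user1 user2
```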

2.6.1.29. oc adm groups prune

Remove old OpenShift groups referencing missing records from an external provider

Example usage

2.6.1.30. oc adm groups remove-users

Remove users from a group

Example usage

# Remove user1 and user2 from my-group
oc adm groups remove-users my-group user1 user2

2.6.1.31. oc adm groups sync

Sync OpenShift groups with records from an external provider

Example usage

2.6.1.32. oc adm inspect

Collect debugging data for a given resource

Example usage

2.6.1.33. oc adm migrate template-instances

Update template instances to point to the latest group-version-kinds

Example usage

# Perform a dry-run of updating all objects
oc adm migrate template-instances

# To actually perform the update, the confirm flag must be appended
oc adm migrate template-instances --confirm

2.6.1.34. oc adm must-gather

Launch a new instance of a pod for gathering debug information

Example usage
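
A minimal sketch of gathering debug data into a chosen local directory. The directory name is a placeholder, and `run` is a hypothetical wrapper that prints the command unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command; run it only when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi; }

# Placeholder output directory (an assumption for illustration).
DEST_DIR=./must-gather-output

gather_debug_data() {
  # Gather with the default image and copy the results into DEST_DIR.
  run oc adm must-gather --dest-dir="$DEST_DIR"
}

gather_debug_data
```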

2.6.1.35. oc adm new-project

Create a new project

Example usage

# Create a new project using a node selector
oc adm new-project myproject --node-selector='type=user-node,region=east'

2.6.1.36. oc adm node-logs

Display and filter node logs

Example usage
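
The sketch below shows typical ways to narrow node logs by role, by node, and by path. The node name is a placeholder, and `run` is a hypothetical wrapper that prints each command unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command; run it only when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi; }

# Placeholder node name (an assumption for illustration).
NODE=node-1.example.com

show_node_logs() {
  # Kubelet unit logs from every node with the master role.
  run oc adm node-logs --role=master -u kubelet
  # Kubelet unit logs from a single named node.
  run oc adm node-logs "$NODE" -u kubelet
  # List the log files available on the master nodes.
  run oc adm node-logs --role=master --path=/
}

show_node_logs
```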

2.6.1.37. oc adm pod-network isolate-projects

Isolate project network

Example usage

# Provide isolation for project p1
oc adm pod-network isolate-projects <p1>

# Allow all projects with label name=top-secret to have their own isolated project network
oc adm pod-network isolate-projects --selector='name=top-secret'

2.6.1.38. oc adm pod-network join-projects

Join project network

Example usage

# Allow project p2 to use project p1 network
oc adm pod-network join-projects --to=<p1> <p2>

# Allow all projects with label name=top-secret to use project p1 network
oc adm pod-network join-projects --to=<p1> --selector='name=top-secret'

2.6.1.39. oc adm pod-network make-projects-global

Make project network global

Example usage

# Allow project p1 to access all pods in the cluster and vice versa
oc adm pod-network make-projects-global <p1>

# Allow all projects with label name=share to access all pods in the cluster and vice versa
oc adm pod-network make-projects-global --selector='name=share'

2.6.1.40. oc adm policy add-role-to-user

Add a role to users or service accounts for the current project

Example usage

# Add the 'view' role to user1 for the current project
oc policy add-role-to-user view user1

# Add the 'edit' role to serviceaccount1 for the current project
oc policy add-role-to-user edit -z serviceaccount1

2.6.1.41. oc adm policy add-scc-to-group

Add a security context constraint to groups

Example usage

# Add the 'restricted' security context constraint to group1 and group2
oc adm policy add-scc-to-group restricted group1 group2

2.6.1.42. oc adm policy add-scc-to-user

Add a security context constraint to users or a service account

Example usage

# Add the 'restricted' security context constraint to user1 and user2
oc adm policy add-scc-to-user restricted user1 user2

# Add the 'privileged' security context constraint to serviceaccount1 in the current namespace
oc adm policy add-scc-to-user privileged -z serviceaccount1

2.6.1.43. oc adm policy scc-review

Check which service account can create a pod

Example usage

2.6.1.44. oc adm policy scc-subject-review

Check whether a user or a service account can create a pod

Example usage

2.6.1.45. oc adm prune builds

Remove old completed and failed builds

Example usage
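
A minimal sketch of the usual two-step prune: a dry run first, then the same command with `--confirm`. The retention values are illustrative assumptions, and `run` is a hypothetical wrapper that prints each command unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command; run it only when DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi; }

prune_builds() {
  # Pass "--confirm" as the first argument to actually delete builds;
  # without it, the command only reports what it would remove.
  local confirm=()
  if [ "${1:-}" = "--confirm" ]; then
    confirm=(--confirm)
  fi
  # Also include builds whose build config no longer exists (--orphans).
  run oc adm prune builds --orphans --keep-complete=5 --keep-failed=1 "${confirm[@]}"
}

prune_builds            # dry run
prune_builds --confirm  # perform the prune
```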

2.6.1.46. oc adm prune deployments

Remove old completed and failed deployment configs

Example usage

# Dry run deleting all but the last complete deployment for every deployment config
oc adm prune deployments --keep-complete=1

# To actually perform the prune operation, the confirm flag must be appended
oc adm prune deployments --keep-complete=1 --confirm

2.6.1.47. oc adm prune groups

Remove old OpenShift groups referencing missing records from an external provider

Example usage

2.6.1.48. oc adm prune images

Remove unreferenced images

Example usage

2.6.1.49. oc adm release extract

Extract the contents of an update payload to disk

Example usage