Serverless

OpenShift Serverless installation, usage, and release notes

Chapter 1. Release notes

For additional information about the OpenShift Serverless life cycle and supported platforms, refer to the Platform Life Cycle Policy.

Release notes contain information about new and deprecated features, breaking changes, and known issues. The following release notes apply for the most recent OpenShift Serverless releases on OpenShift Container Platform.

For an overview of OpenShift Serverless functionality, see About OpenShift Serverless.

OpenShift Serverless is based on the open source Knative project.

For details about the latest Knative component releases, see the Knative blog.

1.1. About API versions

API versions are an important measure of the development status of certain features and custom resources in OpenShift Serverless. Creating resources on your cluster that do not use the correct API version can cause issues in your deployment.

The OpenShift Serverless Operator automatically upgrades older resources that use deprecated versions of APIs to use the latest version. For example, if you have created resources on your cluster that use older versions of the ApiServerSource API, such as v1beta1, the OpenShift Serverless Operator automatically updates these resources to use the v1 version of the API when this is available and the v1beta1 version is deprecated.

After they have been deprecated, older versions of APIs might be removed in any upcoming release. Using deprecated versions of APIs does not cause resources to fail. However, if you try to use a version of an API that has been removed, it will cause resources to fail. Ensure that your manifests are updated to use the latest version to avoid issues.
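As an illustration of keeping a manifest on the current API version, the following sketch shows an `ApiServerSource` that declares `sources.knative.dev/v1` rather than `v1beta1`. The resource name, namespace, service account, and sink are hypothetical placeholders and do not come from these release notes.

```yaml
# Hypothetical example: names, namespace, and sink are placeholders.
apiVersion: sources.knative.dev/v1   # current version; v1beta1 is the older one
kind: ApiServerSource
metadata:
  name: example-api-source
  namespace: default
spec:
  mode: Reference
  serviceAccountName: example-sa     # assumed to exist with suitable RBAC
  resources:
    - apiVersion: v1
      kind: Event
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
```

Checking the `apiVersion` line of each stored manifest before an upgrade is a quick way to spot resources that still reference a deprecated version.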

1.2. Generally Available and Technology Preview features

Features which are Generally Available (GA) are fully supported and are suitable for production use. Technology Preview (TP) features are experimental features and are not intended for production use. See the Technology Preview scope of support on the Red Hat Customer Portal for more information about TP features.

The following table provides information about which OpenShift Serverless features are GA and which are TP:

| Feature | 1.26 | 1.27 | 1.28 |
|---|---|---|---|
| | GA | GA | GA |
| Quarkus functions | GA | GA | GA |
| Node.js functions | TP | TP | GA |
| TypeScript functions | TP | TP | GA |
| Python functions | - | - | TP |
| Service Mesh mTLS | GA | GA | GA |
| | GA | GA | GA |
| HTTPS redirection | GA | GA | GA |
| Kafka broker | GA | GA | GA |
| Kafka sink | GA | GA | GA |
| Init containers support for Knative services | GA | GA | GA |
| PVC support for Knative services | GA | GA | GA |
| TLS for internal traffic | TP | TP | TP |
| Namespace-scoped brokers | - | TP | TP |
| Multi-container support | - | - | TP |

1.3. Deprecated and removed features

Some features that were Generally Available (GA) or a Technology Preview (TP) in previous releases have been deprecated or removed. Deprecated functionality is still included in OpenShift Serverless and continues to be supported; however, it will be removed in a future release of this product and is not recommended for new deployments.

For the most recent list of major functionality deprecated and removed within OpenShift Serverless, refer to the following table:

| Feature | 1.20 | 1.21 | 1.22 to 1.26 | 1.27 | 1.28 |
|---|---|---|---|---|---|
| | Deprecated | Deprecated | Removed | Removed | Removed |
| | Deprecated | Removed | Removed | Removed | Removed |
| Serving and Eventing `v1alpha1` API | - | - | - | Deprecated | Deprecated |
| `enable-secret-informer-filtering` annotation | - | - | - | - | Deprecated |

1.4. Release notes for Red Hat OpenShift Serverless 1.28

OpenShift Serverless 1.28 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.4.1. New features

- OpenShift Serverless now uses Knative Serving 1.7.

- OpenShift Serverless now uses Knative Eventing 1.7.

- OpenShift Serverless now uses Kourier 1.7.

- OpenShift Serverless now uses Knative (`kn`) CLI 1.7.

- OpenShift Serverless now uses Knative Kafka 1.7.

- The `kn func` CLI plug-in now uses `func` 1.9.1.

- Node.js and TypeScript runtimes for OpenShift Serverless Functions are now Generally Available (GA).

- Python runtime for OpenShift Serverless Functions is now available as a Technology Preview.

- Multi-container support for Knative Serving is now available as a Technology Preview. This feature allows you to use a single Knative service to deploy a multi-container pod.

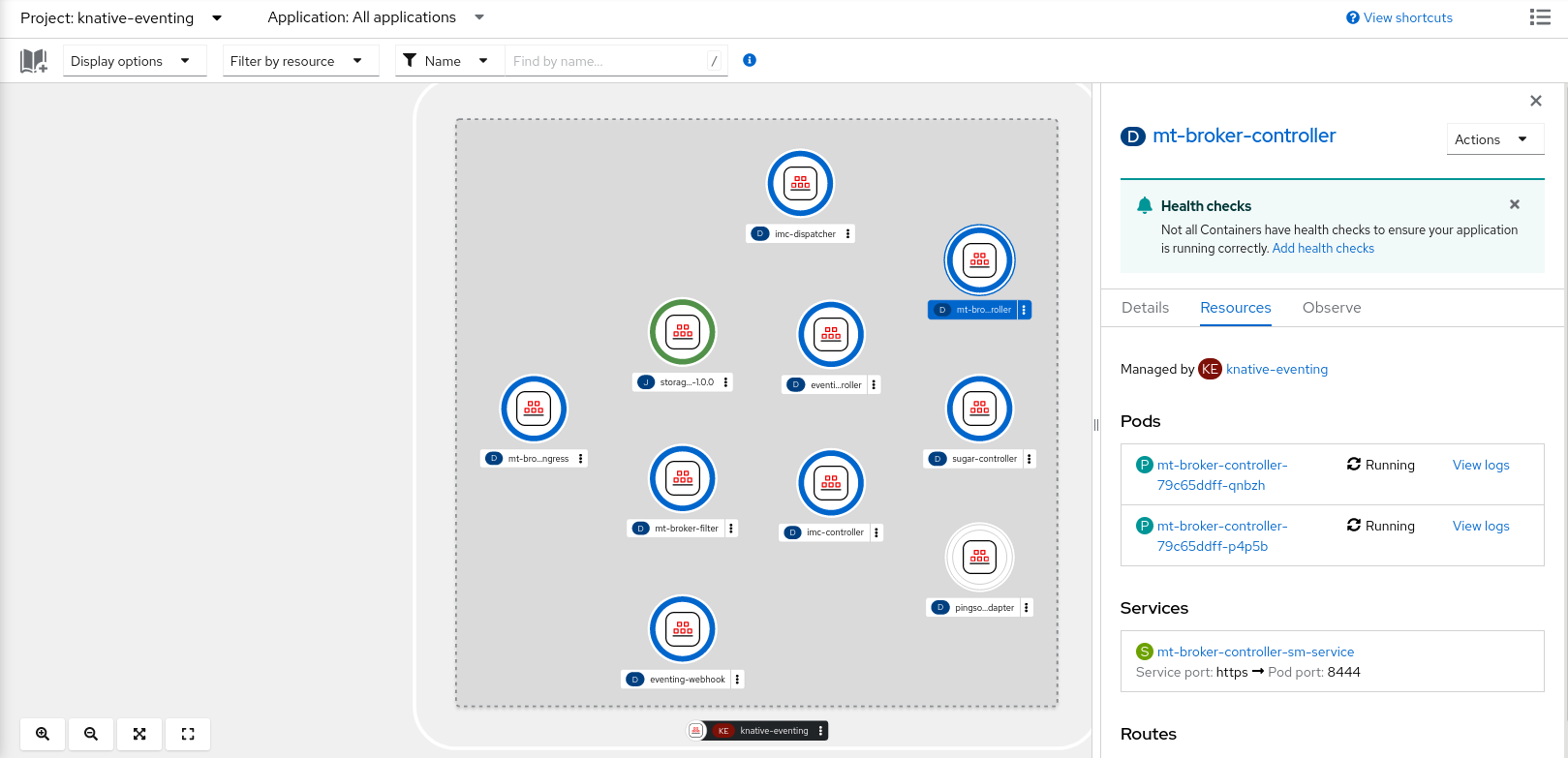

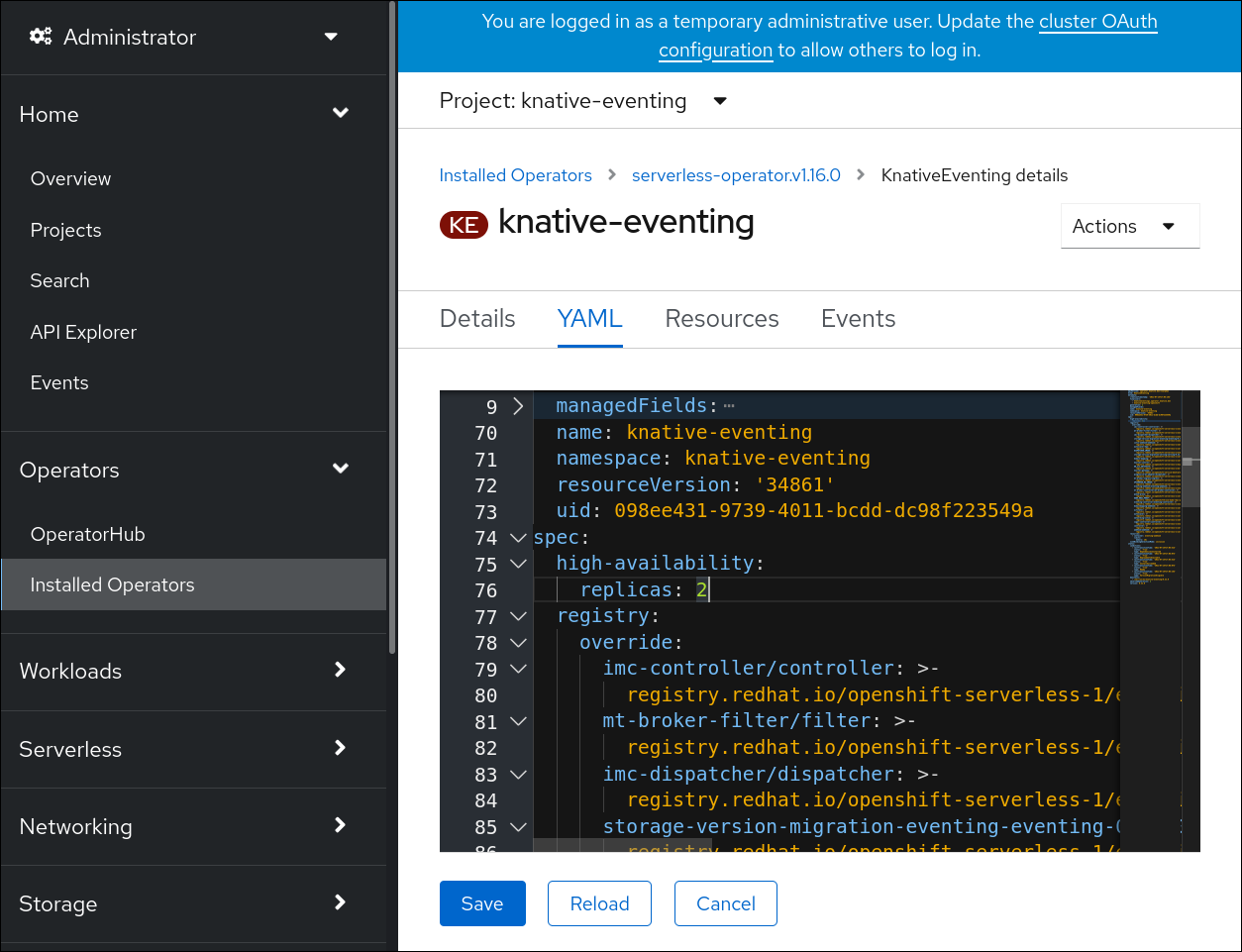

In OpenShift Serverless 1.29 or later, the following components of Knative Eventing will be scaled down from two pods to one:

- `imc-controller`
- `imc-dispatcher`
- `mt-broker-controller`
- `mt-broker-filter`
- `mt-broker-ingress`

- The `serverless.openshift.io/enable-secret-informer-filtering` annotation for the Serving CR is now deprecated. The annotation is valid only for Istio, and not for Kourier.

  With OpenShift Serverless 1.28, the OpenShift Serverless Operator allows injecting the environment variable `ENABLE_SECRET_INFORMER_FILTERING_BY_CERT_UID` for both `net-istio` and `net-kourier`.

  If you enable secret filtering, all of your secrets need to be labeled with `networking.internal.knative.dev/certificate-uid: "<id>"`. Otherwise, Knative Serving does not detect them, which leads to failures. You must label both new and existing secrets.

  In one of the following OpenShift Serverless releases, secret filtering will become enabled by default. To prevent failures, label your secrets in advance.
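The labeling requirement described above can be sketched as a secret manifest. Only the label key and the `"<id>"` placeholder come from these release notes; the secret name, namespace, type, and data keys are hypothetical and shown only to make the fragment complete.

```yaml
# Hypothetical example: only the label key is taken from the release notes.
apiVersion: v1
kind: Secret
metadata:
  name: example-tls-secret          # placeholder name
  namespace: example-namespace      # placeholder namespace
  labels:
    # Without this label, Knative Serving does not detect the secret
    # once secret filtering is enabled.
    networking.internal.knative.dev/certificate-uid: "<id>"
type: kubernetes.io/tls
data:
  tls.crt: <base64-encoded certificate>
  tls.key: <base64-encoded key>
```

Existing secrets need the same label added to their metadata, because filtering applies to secrets created before it was enabled as well.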

1.4.2. Known issues

Currently, runtimes for Python are not supported for OpenShift Serverless Functions on IBM Power, IBM zSystems, and IBM® LinuxONE.

Node.js, TypeScript, and Quarkus functions are supported on these architectures.

On the Windows platform, Python functions cannot be locally built, run, or deployed using the Source-to-Image builder due to the `app.sh` file permissions.

To work around this problem, use the Windows Subsystem for Linux.

1.5. Release notes for Red Hat OpenShift Serverless 1.27

OpenShift Serverless 1.27 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

OpenShift Serverless 1.26 is the earliest release that is fully supported on OpenShift Container Platform 4.12. OpenShift Serverless 1.25 and older versions do not deploy on OpenShift Container Platform 4.12.

For this reason, before upgrading OpenShift Container Platform to version 4.12, first upgrade OpenShift Serverless to version 1.26 or 1.27.

1.5.1. New features

- OpenShift Serverless now uses Knative Serving 1.6.

- OpenShift Serverless now uses Knative Eventing 1.6.

- OpenShift Serverless now uses Kourier 1.6.

- OpenShift Serverless now uses Knative (`kn`) CLI 1.6.

- OpenShift Serverless now uses Knative Kafka 1.6.

- The `kn func` CLI plug-in now uses `func` 1.8.1.

- Namespace-scoped brokers are now available as a Technology Preview. Such brokers can be used, for instance, to implement role-based access control (RBAC) policies.

- `KafkaSink` now uses the `CloudEvent` binary content mode by default. The binary content mode is more efficient than the structured mode because it carries the event attributes in protocol headers instead of encoding the whole `CloudEvent` in the message body. For example, for the HTTP protocol, it uses HTTP headers.

- You can now use the gRPC framework over the HTTP/2 protocol for external traffic using the OpenShift Route on OpenShift Container Platform 4.10 and later. This improves efficiency and speed of the communications between the client and server.

- API version `v1alpha1` of the Knative Operator Serving and Eventing CRDs is deprecated in 1.27. It will be removed in future versions. Red Hat strongly recommends using the `v1beta1` version instead. This does not affect existing installations, because CRDs are updated automatically when upgrading the Serverless Operator.

- The delivery timeout feature is now enabled by default. It allows you to specify the timeout for each sent HTTP request. The feature remains a Technology Preview.

1.5.2. Fixed issues

- Previously, Knative services sometimes did not get into the `Ready` state, reporting waiting for the load balancer to be ready. This issue has been fixed.

1.5.3. Known issues

- Integrating OpenShift Serverless with Red Hat OpenShift Service Mesh causes the `net-kourier` pod to run out of memory on startup when too many secrets are present on the cluster.

- Namespace-scoped brokers might leave `ClusterRoleBindings` in the user namespace even after deletion of namespace-scoped brokers.

  If this happens, delete the `ClusterRoleBinding` named `rbac-proxy-reviews-prom-rb-knative-kafka-broker-data-plane-{{.Namespace}}` in the user namespace.

- If you use `net-istio` for Ingress and enable mTLS via SMCP using `security.dataPlane.mtls: true`, Service Mesh deploys `DestinationRules` for the `*.local` host, which does not allow `DomainMapping` for OpenShift Serverless.

  To work around this issue, enable mTLS by deploying `PeerAuthentication` instead of using `security.dataPlane.mtls: true`.
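The `PeerAuthentication` workaround mentioned above could look like the following minimal sketch. It uses the Istio `security.istio.io/v1beta1` API; the resource name and the `<namespace>` placeholder are assumptions, and `STRICT` is one possible mode rather than a value prescribed by these release notes.

```yaml
# Hypothetical example: name, namespace, and mode are illustrative.
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: <namespace>   # the namespace whose workloads should require mTLS
spec:
  mtls:
    mode: STRICT           # require mutual TLS for workloads in this namespace
```

Scoping mTLS per namespace in this way avoids the mesh-wide `DestinationRules` that `security.dataPlane.mtls: true` generates.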

1.6. Release notes for Red Hat OpenShift Serverless 1.26

OpenShift Serverless 1.26 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.6.1. New features

- OpenShift Serverless Functions with Quarkus is now GA.

- OpenShift Serverless now uses Knative Serving 1.5.

- OpenShift Serverless now uses Knative Eventing 1.5.

- OpenShift Serverless now uses Kourier 1.5.

- OpenShift Serverless now uses Knative (`kn`) CLI 1.5.

- OpenShift Serverless now uses Knative Kafka 1.5.

- OpenShift Serverless now uses Knative Operator 1.3.

- The `kn func` CLI plugin now uses `func` 1.8.1.

- Persistent volume claims (PVCs) are now GA. PVCs provide permanent data storage for your Knative services.

- The new trigger filters feature is now available as a Developer Preview. It allows users to specify a set of filter expressions, where each expression evaluates to either true or false for each event.

  To enable new trigger filters, add the `new-trigger-filters: enabled` entry in the section of the `KnativeEventing` type in the operator config map.

- Knative Operator 1.3 adds the updated `v1beta1` version of the API for `operator.knative.dev`.

  To update from `v1alpha1` to `v1beta1` in your `KnativeServing` and `KnativeEventing` custom resource config maps, edit the `apiVersion` key:

  Example `KnativeServing` custom resource config map

  ```yaml
  apiVersion: operator.knative.dev/v1beta1
  kind: KnativeServing
  ...
  ```

  Example `KnativeEventing` custom resource config map

  ```yaml
  apiVersion: operator.knative.dev/v1beta1
  kind: KnativeEventing
  ...
  ```
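The `new-trigger-filters: enabled` entry described above might be placed as in the following sketch. The placement under `spec.config.features` is an assumption about how the Operator maps feature flags into the `KnativeEventing` custom resource, and the metadata values are the conventional defaults rather than values stated in these release notes.

```yaml
# Hypothetical example: the spec.config.features placement is an assumption.
apiVersion: operator.knative.dev/v1beta1
kind: KnativeEventing
metadata:
  name: knative-eventing
  namespace: knative-eventing
spec:
  config:
    features:
      new-trigger-filters: enabled   # entry named in the release notes
```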

1.6.2. Fixed issues

- Previously, Federal Information Processing Standards (FIPS) mode was disabled for Kafka broker, Kafka source, and Kafka sink. This has been fixed, and FIPS mode is now available.

1.6.3. Known issues

If you use `net-istio` for Ingress and enable mTLS via SMCP using `security.dataPlane.mtls: true`, Service Mesh deploys `DestinationRules` for the `*.local` host, which does not allow `DomainMapping` for OpenShift Serverless.

To work around this issue, enable mTLS by deploying `PeerAuthentication` instead of using `security.dataPlane.mtls: true`.

1.7. Release notes for Red Hat OpenShift Serverless 1.25.0

OpenShift Serverless 1.25.0 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.7.1. New features

- OpenShift Serverless now uses Knative Serving 1.4.

- OpenShift Serverless now uses Knative Eventing 1.4.

- OpenShift Serverless now uses Kourier 1.4.

- OpenShift Serverless now uses Knative (`kn`) CLI 1.4.

- OpenShift Serverless now uses Knative Kafka 1.4.

- The `kn func` CLI plugin now uses `func` 1.7.0.

- Integrated development environment (IDE) plugins for creating and deploying functions are now available for Visual Studio Code and IntelliJ.

- Knative Kafka broker is now GA. Knative Kafka broker is a highly performant implementation of the Knative broker API, directly targeting Apache Kafka.

  It is recommended to use the Knative Kafka broker instead of the MT-Channel-Broker.

- Knative Kafka sink is now GA. A `KafkaSink` takes a `CloudEvent` and sends it to an Apache Kafka topic. Events can be specified in either structured or binary content modes.

- Enabling TLS for internal traffic is now available as a Technology Preview.

1.7.2. Fixed issues

- Previously, Knative Serving had an issue where the readiness probe failed if the container was restarted after a liveness probe failure. This issue has been fixed.

1.7.3. Known issues

- The Federal Information Processing Standards (FIPS) mode is disabled for Kafka broker, Kafka source, and Kafka sink.

- The `SinkBinding` object does not support custom revision names for services.

- The Knative Serving Controller pod adds a new informer to watch secrets in the cluster. The informer includes the secrets in the cache, which increases memory consumption of the controller pod.

  If the pod runs out of memory, you can work around the issue by increasing the memory limit for the deployment.

- If you use `net-istio` for Ingress and enable mTLS via SMCP using `security.dataPlane.mtls: true`, Service Mesh deploys `DestinationRules` for the `*.local` host, which does not allow `DomainMapping` for OpenShift Serverless.

  To work around this issue, enable mTLS by deploying `PeerAuthentication` instead of using `security.dataPlane.mtls: true`.

1.8. Release notes for Red Hat OpenShift Serverless 1.24.0

OpenShift Serverless 1.24.0 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.8.1. New features

- OpenShift Serverless now uses Knative Serving 1.3.

- OpenShift Serverless now uses Knative Eventing 1.3.

- OpenShift Serverless now uses Kourier 1.3.

- OpenShift Serverless now uses Knative (`kn`) CLI 1.3.

- OpenShift Serverless now uses Knative Kafka 1.3.

- The `kn func` CLI plugin now uses `func` 0.24.

- Init containers support for Knative services is now generally available (GA).

- OpenShift Serverless logic is now available as a Developer Preview. It enables defining declarative workflow models for managing serverless applications.

- You can now use the cost management service with OpenShift Serverless.

1.8.2. Fixed issues

- Integrating OpenShift Serverless with Red Hat OpenShift Service Mesh causes the `net-istio-controller` pod to run out of memory on startup when too many secrets are present on the cluster.

  It is now possible to enable secret filtering, which causes `net-istio-controller` to consider only secrets with a `networking.internal.knative.dev/certificate-uid` label, thus reducing the amount of memory needed.

- The OpenShift Serverless Functions Technology Preview now uses Cloud Native Buildpacks by default to build container images.

1.8.3. Known issues

- The Federal Information Processing Standards (FIPS) mode is disabled for Kafka broker, Kafka source, and Kafka sink.

- In OpenShift Serverless 1.23, support for KafkaBindings and the `kafka-binding` webhook were removed. However, an existing `kafkabindings.webhook.kafka.sources.knative.dev MutatingWebhookConfiguration` might remain, pointing to the `kafka-source-webhook` service, which no longer exists.

  For certain specifications of KafkaBindings on the cluster, `kafkabindings.webhook.kafka.sources.knative.dev MutatingWebhookConfiguration` might be configured to pass any create and update events to various resources, such as Deployments, Knative Services, or Jobs, through the webhook, which would then fail.

  To work around this issue, manually delete `kafkabindings.webhook.kafka.sources.knative.dev MutatingWebhookConfiguration` from the cluster after upgrading to OpenShift Serverless 1.23:

  ```shell
  $ oc delete mutatingwebhookconfiguration kafkabindings.webhook.kafka.sources.knative.dev
  ```

- If you use `net-istio` for Ingress and enable mTLS via SMCP using `security.dataPlane.mtls: true`, Service Mesh deploys `DestinationRules` for the `*.local` host, which does not allow `DomainMapping` for OpenShift Serverless.

  To work around this issue, enable mTLS by deploying `PeerAuthentication` instead of using `security.dataPlane.mtls: true`.

1.9. Release notes for Red Hat OpenShift Serverless 1.23.0

OpenShift Serverless 1.23.0 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.9.1. New features

- OpenShift Serverless now uses Knative Serving 1.2.

- OpenShift Serverless now uses Knative Eventing 1.2.

- OpenShift Serverless now uses Kourier 1.2.

- OpenShift Serverless now uses Knative (`kn`) CLI 1.2.

- OpenShift Serverless now uses Knative Kafka 1.2.

- The `kn func` CLI plugin now uses `func` 0.24.

- It is now possible to use the `kafka.eventing.knative.dev/external.topic` annotation with the Kafka broker. This annotation makes it possible to use an existing externally managed topic instead of the broker creating its own internal topic.

- The `kafka-ch-controller` and `kafka-webhook` Kafka components no longer exist. These components have been replaced by the `kafka-webhook-eventing` component.

- The OpenShift Serverless Functions Technology Preview now uses Source-to-Image (S2I) by default to build container images.
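The `kafka.eventing.knative.dev/external.topic` annotation listed above can be sketched on a Kafka broker as follows. The broker name, namespace, topic placeholder, and the referenced `kafka-broker-config` config map are assumptions for illustration; only the annotation key comes from these release notes.

```yaml
# Hypothetical example: names and the config map reference are placeholders.
apiVersion: eventing.knative.dev/v1
kind: Broker
metadata:
  name: example-broker
  namespace: default
  annotations:
    eventing.knative.dev/broker.class: Kafka
    # Reuse an existing, externally managed topic instead of letting
    # the broker create its own internal topic.
    kafka.eventing.knative.dev/external.topic: <topic-name>
spec:
  config:
    apiVersion: v1
    kind: ConfigMap
    name: kafka-broker-config
    namespace: knative-eventing
```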

1.9.2. Known issues

- The Federal Information Processing Standards (FIPS) mode is disabled for Kafka broker, Kafka source, and Kafka sink.

- If you delete a namespace that includes a Kafka broker, the namespace finalizer may fail to be removed if the broker's `auth.secret.ref.name` secret is deleted before the broker.

- Running OpenShift Serverless with a large number of Knative services can cause Knative activator pods to run close to their default memory limits of 600MB. These pods might be restarted if memory consumption reaches this limit. Requests and limits for the activator deployment can be configured by modifying the `KnativeServing` custom resource.

- If you are using Cloud Native Buildpacks as the local build strategy for a function, `kn func` is unable to automatically start podman or use an SSH tunnel to a remote daemon. The workaround for these issues is to have a Docker or podman daemon already running on the local development computer before deploying a function.

- On-cluster function builds currently fail for Quarkus and Golang runtimes. They work correctly for Node, Typescript, Python, and Springboot runtimes.

- If you use `net-istio` for Ingress and enable mTLS via SMCP using `security.dataPlane.mtls: true`, Service Mesh deploys `DestinationRules` for the `*.local` host, which does not allow `DomainMapping` for OpenShift Serverless.

  To work around this issue, enable mTLS by deploying `PeerAuthentication` instead of using `security.dataPlane.mtls: true`.
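For the activator memory issue in the list above, raising the requests and limits through the `KnativeServing` custom resource might look like the following sketch. The `spec.deployments` override structure and all resource values are assumptions chosen for illustration, not settings given in these release notes; size them for your own workload.

```yaml
# Hypothetical example: the override structure and values are assumptions.
apiVersion: operator.knative.dev/v1alpha1   # 1.23-era version; 1.26 and later provide v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving
spec:
  deployments:
    - name: activator
      resources:
        - container: activator
          requests:
            cpu: 300m
            memory: 60Mi
          limits:
            cpu: 1000m
            memory: 1000Mi   # raised above the 600MB default mentioned above
```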

1.10. Release notes for Red Hat OpenShift Serverless 1.22.0

OpenShift Serverless 1.22.0 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.10.1. New features

- OpenShift Serverless now uses Knative Serving 1.1.

- OpenShift Serverless now uses Knative Eventing 1.1.

- OpenShift Serverless now uses Kourier 1.1.

- OpenShift Serverless now uses Knative (`kn`) CLI 1.1.

- OpenShift Serverless now uses Knative Kafka 1.1.

- The `kn func` CLI plugin now uses `func` 0.23.

- Init containers support for Knative services is now available as a Technology Preview.

- Persistent volume claim (PVC) support for Knative services is now available as a Technology Preview.

- The `knative-serving`, `knative-serving-ingress`, `knative-eventing` and `knative-kafka` system namespaces now have the `knative.openshift.io/part-of: "openshift-serverless"` label by default.

- The Knative Eventing - Kafka Broker/Trigger dashboard has been added, which allows visualizing Kafka broker and trigger metrics in the web console.

- The Knative Eventing - KafkaSink dashboard has been added, which allows visualizing KafkaSink metrics in the web console.

- The Knative Eventing - Broker/Trigger dashboard is now called Knative Eventing - Channel-based Broker/Trigger.

- The `knative.openshift.io/part-of: "openshift-serverless"` label has replaced the `knative.openshift.io/system-namespace` label.

- Naming style in Knative Serving YAML configuration files changed from camel case (`ExampleName`) to hyphen style (`example-name`). Beginning with this release, use the hyphen style notation when creating or editing Knative Serving YAML configuration files.

1.10.2. Known issues

- The Federal Information Processing Standards (FIPS) mode is disabled for Kafka broker, Kafka source, and Kafka sink.

1.11. Release notes for Red Hat OpenShift Serverless 1.21.0

OpenShift Serverless 1.21.0 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.11.1. New features

- OpenShift Serverless now uses Knative Serving 1.0.

- OpenShift Serverless now uses Knative Eventing 1.0.

- OpenShift Serverless now uses Kourier 1.0.

- OpenShift Serverless now uses Knative (`kn`) CLI 1.0.

- OpenShift Serverless now uses Knative Kafka 1.0.

- The `kn func` CLI plugin now uses `func` 0.21.

- The Kafka sink is now available as a Technology Preview.

- The Knative open source project has begun to deprecate camel-cased configuration keys in favor of using kebab-cased keys consistently. As a result, the `defaultExternalScheme` key, previously mentioned in the OpenShift Serverless 1.18.0 release notes, is now deprecated and replaced by the `default-external-scheme` key. Usage instructions for the key remain the same.

1.11.2. Fixed issues

- In OpenShift Serverless 1.20.0, there was an event delivery issue affecting the use of `kn event send` to send events to a service. This issue is now fixed.

- In OpenShift Serverless 1.20.0 (`func` 0.20), TypeScript functions created with the `http` template failed to deploy on the cluster. This issue is now fixed.

- In OpenShift Serverless 1.20.0 (`func` 0.20), deploying a function using the `gcr.io` registry failed with an error. This issue is now fixed.

- In OpenShift Serverless 1.20.0 (`func` 0.20), creating a Springboot function project directory with the `kn func create` command and then running the `kn func build` command failed with an error message. This issue is now fixed.

- In OpenShift Serverless 1.19.0 (`func` 0.19), some runtimes were unable to build a function by using podman. This issue is now fixed.

1.11.3. Known issues

Currently, the domain mapping controller cannot process the URI of a broker, because the URI contains a path, which is not currently supported.

This means that, if you want to use a `DomainMapping` custom resource (CR) to map a custom domain to a broker, you must configure the `DomainMapping` CR with the broker's ingress service, and append the exact path of the broker to the custom domain.

The URI for the broker is then `<domain-name>/<broker-namespace>/<broker-name>`.
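A `DomainMapping` CR that targets the broker's ingress service, as described above, could be sketched like this. The `broker-ingress` service name, the `knative-eventing` namespace, and the `v1alpha1` API version are assumptions about a default channel-based broker installation of that era; `<domain-name>` is the placeholder used in the text.

```yaml
# Hypothetical example: service name, namespace, and API version are assumptions.
apiVersion: serving.knative.dev/v1alpha1
kind: DomainMapping
metadata:
  name: <domain-name>           # the custom domain to map
  namespace: knative-eventing   # must match the namespace of the referenced service
spec:
  ref:
    name: broker-ingress        # the broker's ingress service
    kind: Service
    apiVersion: v1
```

Because the mapping points at the shared ingress service rather than at a single broker, the broker namespace and name still have to be appended as the path.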

1.12. Release notes for Red Hat OpenShift Serverless 1.20.0

OpenShift Serverless 1.20.0 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.12.1. New features

- OpenShift Serverless now uses Knative Serving 0.26.

- OpenShift Serverless now uses Knative Eventing 0.26.

- OpenShift Serverless now uses Kourier 0.26.

- OpenShift Serverless now uses Knative (`kn`) CLI 0.26.

- OpenShift Serverless now uses Knative Kafka 0.26.

- The `kn func` CLI plugin now uses `func` 0.20.

- The Kafka broker is now available as a Technology Preview.

  Important: The Kafka broker, which is currently in Technology Preview, is not supported on FIPS.

- The `kn event` plugin is now available as a Technology Preview.

- The `--min-scale` and `--max-scale` flags for the `kn service create` command have been deprecated. Use the `--scale-min` and `--scale-max` flags instead.

1.12.2. Known issues

- OpenShift Serverless deploys Knative services with a default address that uses HTTPS. When sending an event to a resource inside the cluster, the sender does not have the cluster certificate authority (CA) configured. This causes event delivery to fail, unless the cluster uses globally accepted certificates.

  For example, an event delivery to a publicly accessible address works:

  ```shell
  $ kn event send --to-url https://ce-api.foo.example.com/
  ```

  On the other hand, this delivery fails if the service uses a public address with an HTTPS certificate issued by a custom CA:

  ```shell
  $ kn event send --to Service:serving.knative.dev/v1:event-display
  ```

  Sending an event to other addressable objects, such as brokers or channels, is not affected by this issue and works as expected.

- The Kafka broker currently does not work on a cluster with Federal Information Processing Standards (FIPS) mode enabled.

- If you create a Springboot function project directory with the `kn func create` command, subsequent running of the `kn func build` command fails with this error message:

  ```
  [analyzer] no stack metadata found at path ''
  [analyzer] ERROR: failed to : set API for buildpack 'paketo-buildpacks/ca-certificates@3.0.2': buildpack API version '0.7' is incompatible with the lifecycle
  ```

  As a workaround, you can change the `builder` property to `gcr.io/paketo-buildpacks/builder:base` in the function configuration file `func.yaml`.

- Deploying a function using the `gcr.io` registry fails with this error message:

  ```
  Error: failed to get credentials: failed to verify credentials: status code: 404
  ```

  As a workaround, use a different registry than `gcr.io`, such as `quay.io` or `docker.io`.

- TypeScript functions created with the `http` template fail to deploy on the cluster.

  As a workaround, in the `func.yaml` file, replace the following section:

  ```yaml
  buildEnvs: []
  ```

  with this:

  ```yaml
  buildEnvs:
  - name: BP_NODE_RUN_SCRIPTS
    value: build
  ```

- In `func` version 0.20, some runtimes might be unable to build a function by using podman. You might see an error message similar to the following:

  ```
  ERROR: failed to image: error during connect: Get "http://%2Fvar%2Frun%2Fdocker.sock/v1.40/info": EOF
  ```

  The following workaround exists for this issue:

  Update the podman service by adding `--time=0` to the service `ExecStart` definition:

  Example service configuration

  ```
  ExecStart=/usr/bin/podman $LOGGING system service --time=0
  ```

  Restart the podman service by running the following commands:

  ```shell
  $ systemctl --user daemon-reload
  ```

  ```shell
  $ systemctl restart --user podman.socket
  ```

  Alternatively, you can expose the podman API by using TCP:

  ```shell
  $ podman system service --time=0 tcp:127.0.0.1:5534 &
  export DOCKER_HOST=tcp://127.0.0.1:5534
  ```

1.13. Release notes for Red Hat OpenShift Serverless 1.19.0

OpenShift Serverless 1.19.0 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.13.1. New features

- OpenShift Serverless now uses Knative Serving 0.25.

- OpenShift Serverless now uses Knative Eventing 0.25.

- OpenShift Serverless now uses Kourier 0.25.

- OpenShift Serverless now uses Knative (`kn`) CLI 0.25.

- OpenShift Serverless now uses Knative Kafka 0.25.

- The `kn func` CLI plugin now uses `func` 0.19.

- The `KafkaBinding` API is deprecated in OpenShift Serverless 1.19.0 and will be removed in a future release.

- HTTPS redirection is now supported and can be configured either globally for a cluster or for each Knative service.

1.13.2. Fixed issues

- In previous releases, the Kafka channel dispatcher waited only for the local commit to succeed before responding, which might have caused lost events in the case of an Apache Kafka node failure. The Kafka channel dispatcher now waits for all in-sync replicas to commit before responding.

1.13.3. Known issues

In `func` version 0.19, some runtimes might be unable to build a function by using podman. You might see an error message similar to the following:

```
ERROR: failed to image: error during connect: Get "http://%2Fvar%2Frun%2Fdocker.sock/v1.40/info": EOF
```

The following workaround exists for this issue:

Update the podman service by adding `--time=0` to the service `ExecStart` definition:

Example service configuration

```
ExecStart=/usr/bin/podman $LOGGING system service --time=0
```

Restart the podman service by running the following commands:

```shell
$ systemctl --user daemon-reload
```

```shell
$ systemctl restart --user podman.socket
```

Alternatively, you can expose the podman API by using TCP:

```shell
$ podman system service --time=0 tcp:127.0.0.1:5534 &
export DOCKER_HOST=tcp://127.0.0.1:5534
```

1.14. Release notes for Red Hat OpenShift Serverless 1.18.0

OpenShift Serverless 1.18.0 is now available. New features, changes, and known issues that pertain to OpenShift Serverless on OpenShift Container Platform are included in this topic.

1.14.1. New features

- OpenShift Serverless now uses Knative Serving 0.24.0.

- OpenShift Serverless now uses Knative Eventing 0.24.0.

- OpenShift Serverless now uses Kourier 0.24.0.

- OpenShift Serverless now uses Knative (kn) CLI 0.24.0.

- OpenShift Serverless now uses Knative Kafka 0.24.7.

- The kn func CLI plugin now uses func 0.18.0.

- In the upcoming OpenShift Serverless 1.19.0 release, the URL scheme of external routes will default to HTTPS for enhanced security.

  If you do not want this change to apply for your workloads, you can override the default setting before upgrading to 1.19.0, by adding the following YAML to your KnativeServing custom resource (CR):

  If you want the change to apply in 1.18.0 already, add the following YAML:

- In the upcoming OpenShift Serverless 1.19.0 release, the default service type by which the Kourier Gateway is exposed will be ClusterIP and not LoadBalancer.

  If you do not want this change to apply to your workloads, you can override the default setting before upgrading to 1.19.0, by adding the following YAML to your KnativeServing custom resource (CR):

- You can now use emptyDir volumes with OpenShift Serverless. See the OpenShift Serverless documentation about Knative Serving for details.

- Rust templates are now available when you create a function using kn func.
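The YAML snippets that the two upcoming-change items refer to are not reproduced in this copy of the release notes. As a hedged sketch only, the overrides might look like the fragments below. The defaultExternalScheme key under spec.config.network and the service-type key under spec.ingress.kourier are assumptions based on the upstream Knative Operator API, and the file names are illustrative; check both against the reference documentation for your release before relying on them.

```shell
# Assumed override that keeps HTTP as the external URL scheme after upgrading to 1.19.0.
# Setting the value to "https" instead would opt in to the new default on 1.18.0.
cat > keep-http-scheme.yaml <<'EOF'
spec:
  config:
    network:
      defaultExternalScheme: "http"
EOF

# Assumed override that keeps the Kourier Gateway exposed through a LoadBalancer service.
cat > keep-loadbalancer.yaml <<'EOF'
spec:
  ingress:
    kourier:
      service-type: LoadBalancer
EOF
```

Each fragment is meant to be merged into the spec of the existing KnativeServing CR rather than applied as a standalone manifest.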

1.14.2. Fixed issues

- The prior 1.4 version of Camel-K was not compatible with OpenShift Serverless 1.17.0. The issue in Camel-K has been fixed, and Camel-K version 1.4.1 can be used with OpenShift Serverless 1.17.0.

- Previously, if you created a new subscription for a Kafka channel, or a new Kafka source, the Kafka data plane might not become ready to dispatch messages until some time after the newly created subscription or sink reported a ready status.

  As a result, messages that were sent while the data plane was not yet ready might not have been delivered to the subscriber or sink.

  In OpenShift Serverless 1.18.0, the issue is fixed and the initial messages are no longer lost. For more information about the issue, see Knowledgebase Article #6343981.

1.14.3. Known issues

- Older versions of the Knative kn CLI might use older versions of the Knative Serving and Knative Eventing APIs. For example, version 0.23.2 of the kn CLI uses the v1alpha1 API version.

  On the other hand, newer releases of OpenShift Serverless might no longer support older API versions. For example, OpenShift Serverless 1.18.0 no longer supports version v1alpha1 of the kafkasources.sources.knative.dev API.

  Consequently, using an older version of the Knative kn CLI with a newer OpenShift Serverless release might produce an error because kn cannot find the outdated API. For example, version 0.23.2 of the kn CLI does not work with OpenShift Serverless 1.18.0.

  To avoid issues, use the latest kn CLI version available for your OpenShift Serverless release. For OpenShift Serverless 1.18.0, use Knative kn CLI 0.24.0.

Chapter 2. About Serverless

2.1. OpenShift Serverless overview

OpenShift Serverless provides Kubernetes native building blocks that enable developers to create and deploy serverless, event-driven applications on OpenShift Container Platform. OpenShift Serverless is based on the open source Knative project, which provides portability and consistency for hybrid and multi-cloud environments by enabling an enterprise-grade serverless platform.

Because OpenShift Serverless releases on a different cadence from OpenShift Container Platform, the OpenShift Serverless documentation does not maintain separate documentation sets for minor versions of the product. The current documentation set applies to all currently supported versions of OpenShift Serverless unless version-specific limitations are called out in a particular topic or for a particular feature.

For additional information about the OpenShift Serverless life cycle and supported platforms, refer to the Platform Life Cycle Policy.

2.2. Knative Serving

Knative Serving supports developers who want to create, deploy, and manage cloud-native applications. It provides a set of objects as Kubernetes custom resource definitions (CRDs) that define and control the behavior of serverless workloads on an OpenShift Container Platform cluster.

Developers use these CRDs to create custom resource (CR) instances that can be used as building blocks to address complex use cases. For example:

- Rapidly deploying serverless containers.

- Automatically scaling pods.

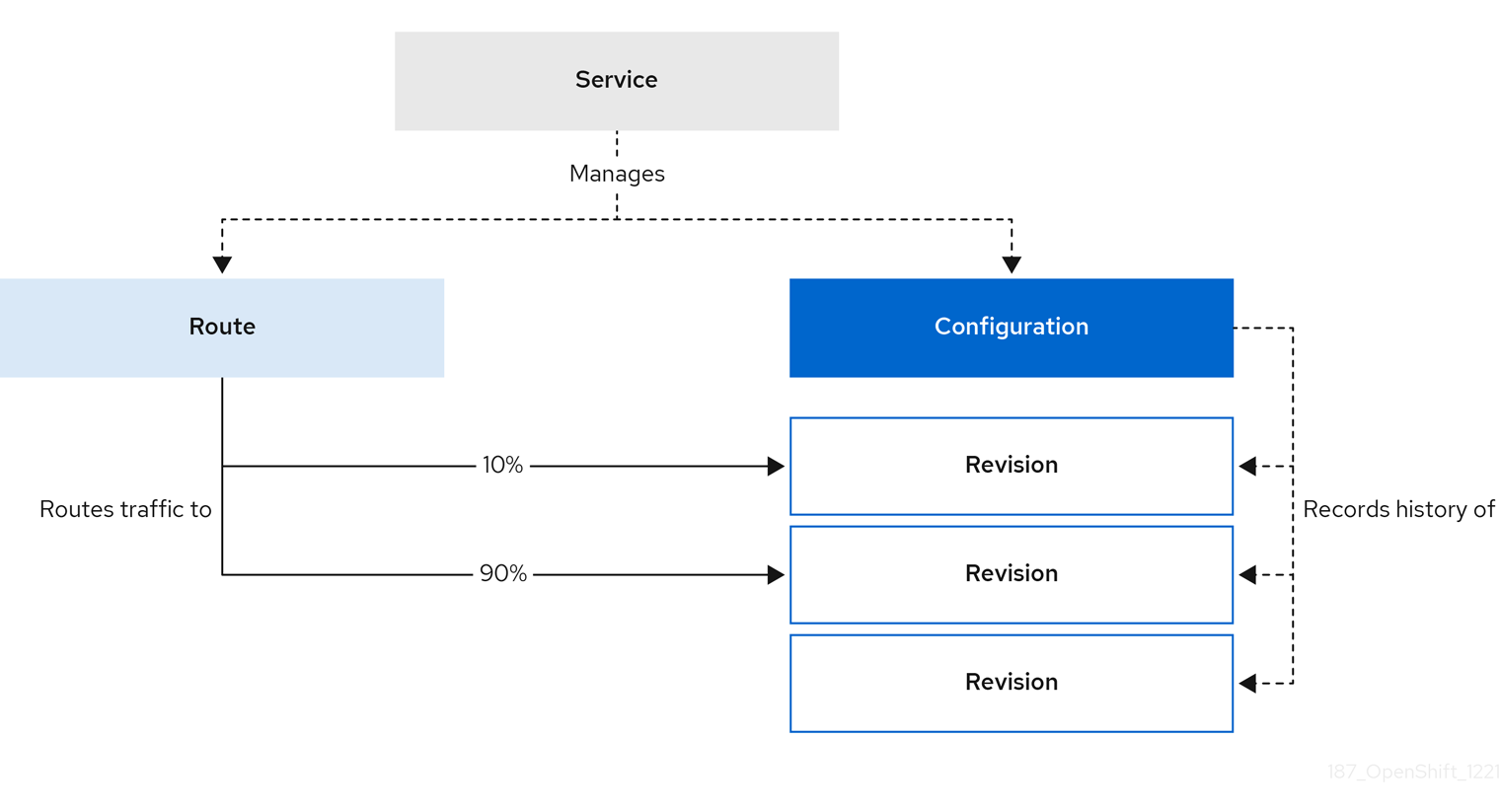

2.2.1. Knative Serving resources

- Service

- The service.serving.knative.dev CRD automatically manages the life cycle of your workload to ensure that the application is deployed and reachable through the network. It creates a route, a configuration, and a new revision for each change to a user created service, or custom resource. Most developer interactions in Knative are carried out by modifying services.

- Revision

- The revision.serving.knative.dev CRD is a point-in-time snapshot of the code and configuration for each modification made to the workload. Revisions are immutable objects and can be retained for as long as necessary.

- Route

- The route.serving.knative.dev CRD maps a network endpoint to one or more revisions. You can manage the traffic in several ways, including fractional traffic and named routes.

- Configuration

- The configuration.serving.knative.dev CRD maintains the desired state for your deployment. It provides a clean separation between code and configuration. Modifying a configuration creates a new revision.

2.3. Knative Eventing

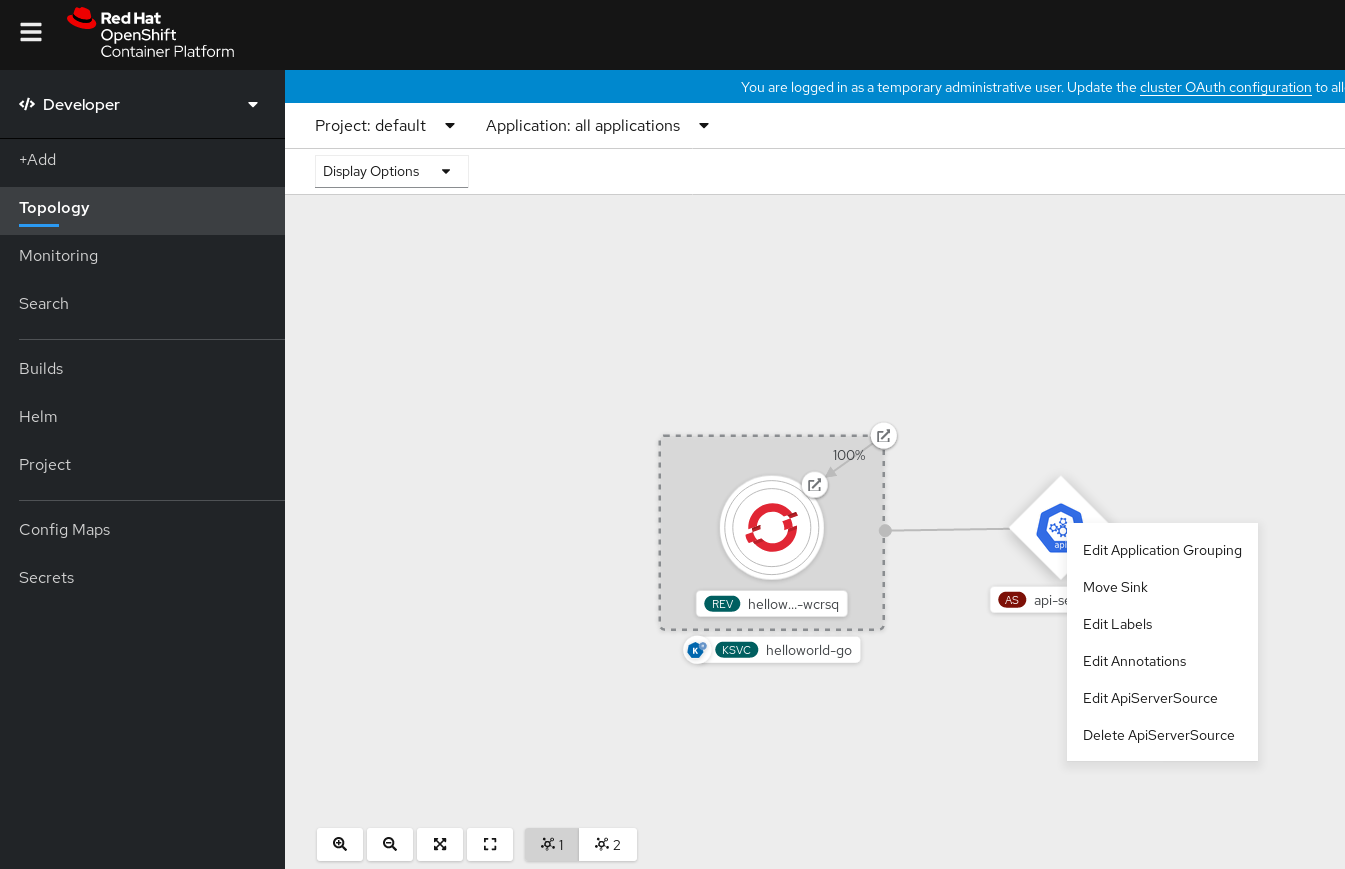

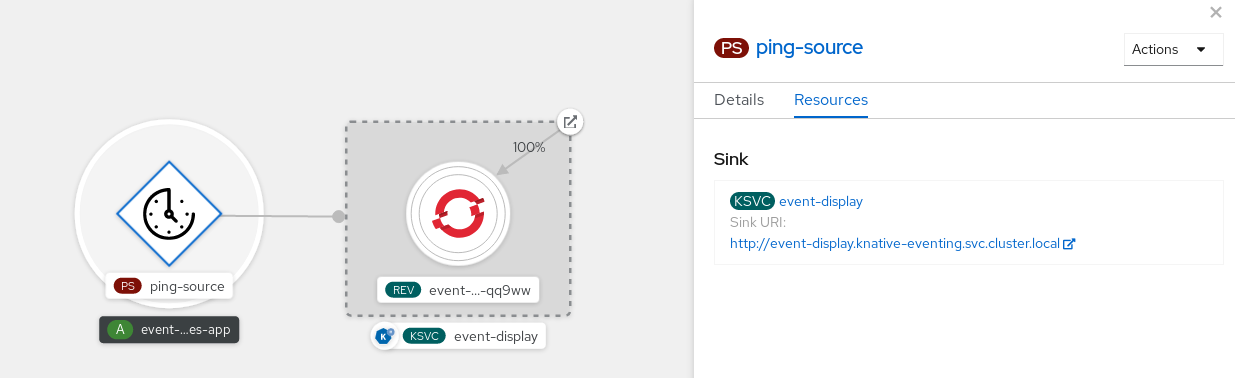

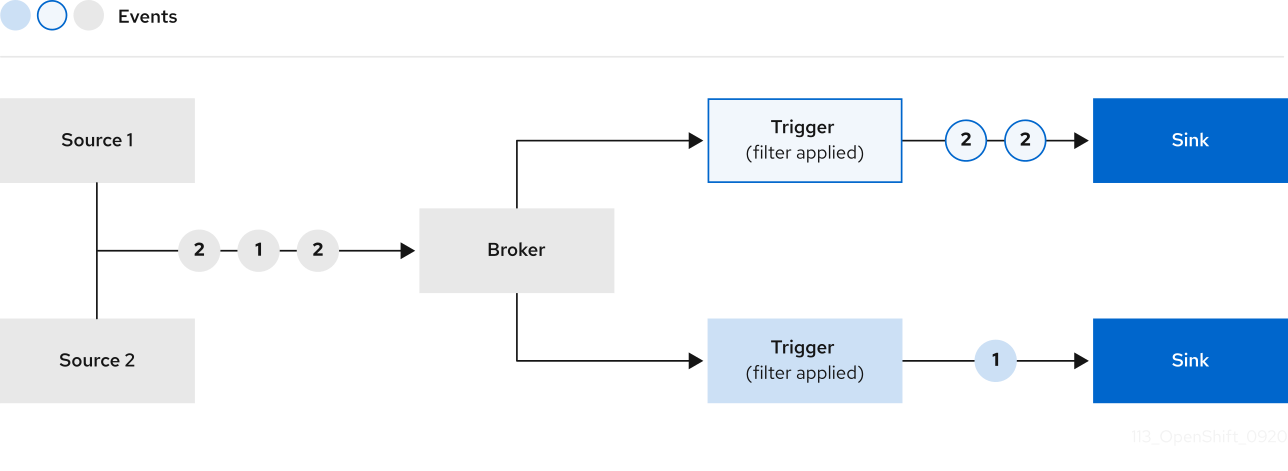

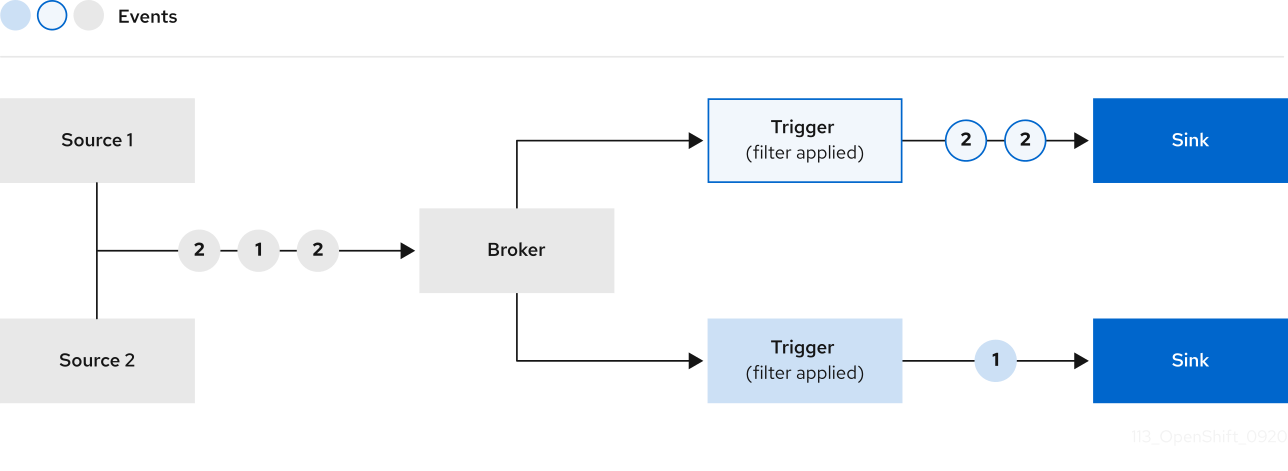

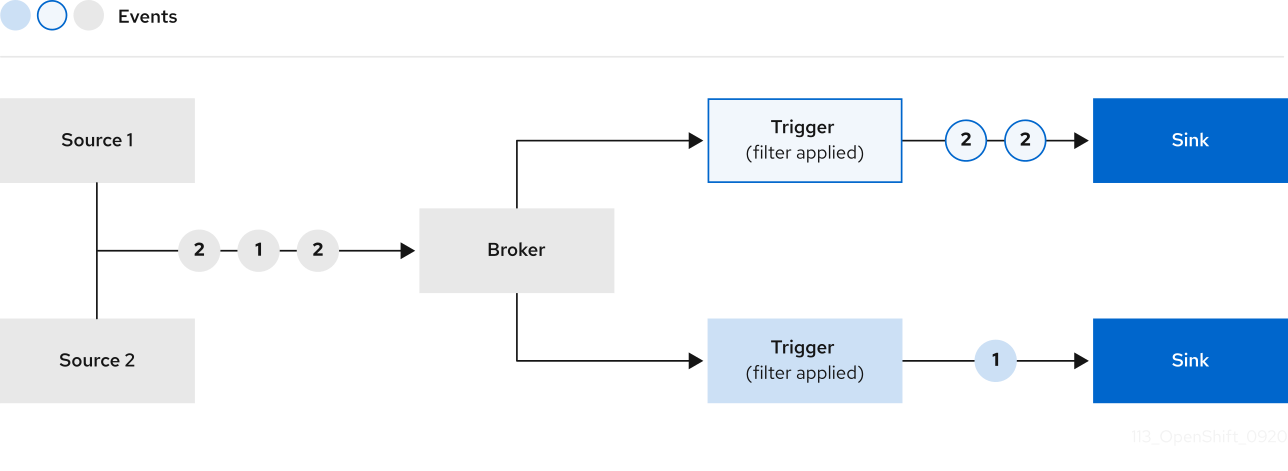

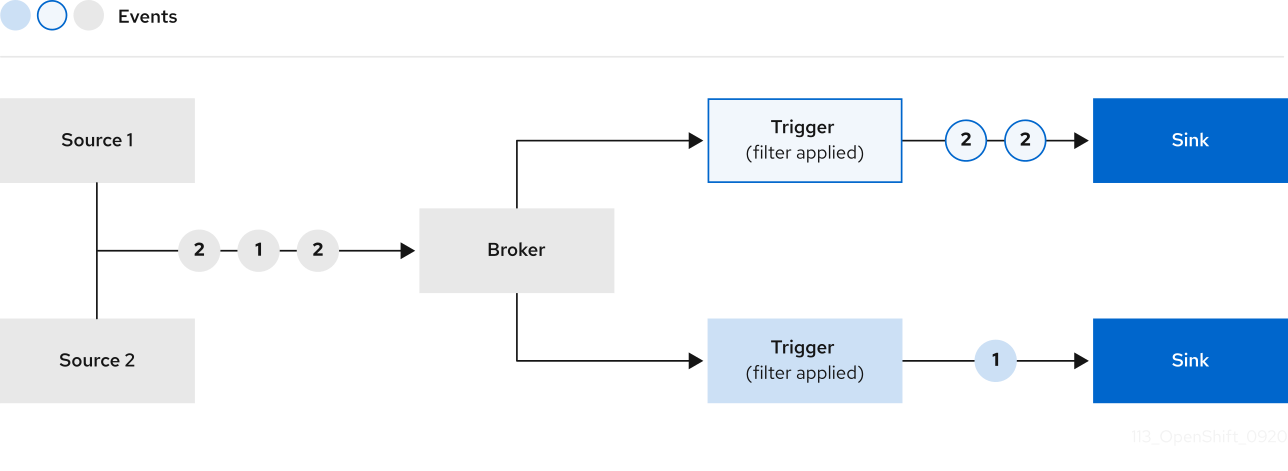

Knative Eventing on OpenShift Container Platform enables developers to use an event-driven architecture with serverless applications. An event-driven architecture is based on the concept of decoupled relationships between event producers and event consumers.

Event producers create events, and event sinks, or consumers, receive events. Knative Eventing uses standard HTTP POST requests to send and receive events between event producers and sinks. These events conform to the CloudEvents specifications, which enables creating, parsing, sending, and receiving events in any programming language.

Knative Eventing supports the following use cases:

- Publish an event without creating a consumer

- You can send events to a broker as an HTTP POST, and use binding to decouple the destination configuration from your application that produces events.

- Consume an event without creating a publisher

- You can use a trigger to consume events from a broker based on event attributes. The application receives events as an HTTP POST.
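As a rough illustration of the HTTP POST described above, the following sketch sends a CloudEvent in binary content mode, where the event attributes travel as Ce- prefixed HTTP headers. The sink URL, event type, and event source are hypothetical placeholders, and the send_event helper and its CURL override are conveniences invented for this example, not part of Knative.

```shell
# POST a JSON payload to an addressable sink as a CloudEvent in binary content mode.
# Usage: send_event <sink-url> <json-payload>
# CURL can be overridden (for example CURL=echo) to print the request instead of sending it.
send_event() {
  sink_url=$1
  payload=$2
  "${CURL:-curl}" -sS -X POST "$sink_url" \
    -H "Ce-Id: demo-$(date +%s)" \
    -H "Ce-Specversion: 1.0" \
    -H "Ce-Type: com.example.demo" \
    -H "Ce-Source: example/manual-test" \
    -H "Content-Type: application/json" \
    -d "$payload"
}

# Dry run against a placeholder URL; a real call would use the broker's address instead.
CURL=echo send_event "http://broker.example.invalid/default/default" '{"msg":"hello"}'
```

Dropping the CURL=echo prefix sends the request for real, which only works from a location that can reach the sink address.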

To enable delivery to multiple types of sinks, Knative Eventing defines the following generic interfaces that can be implemented by multiple Kubernetes resources:

- Addressable resources

- Able to receive and acknowledge an event delivered over HTTP to an address defined in the status.address.url field of the event. The Kubernetes Service resource also satisfies the addressable interface.

- Callable resources

- Able to receive an event delivered over HTTP and transform it, returning 0 or 1 new events in the HTTP response payload. These returned events may be further processed in the same way that events from an external event source are processed.

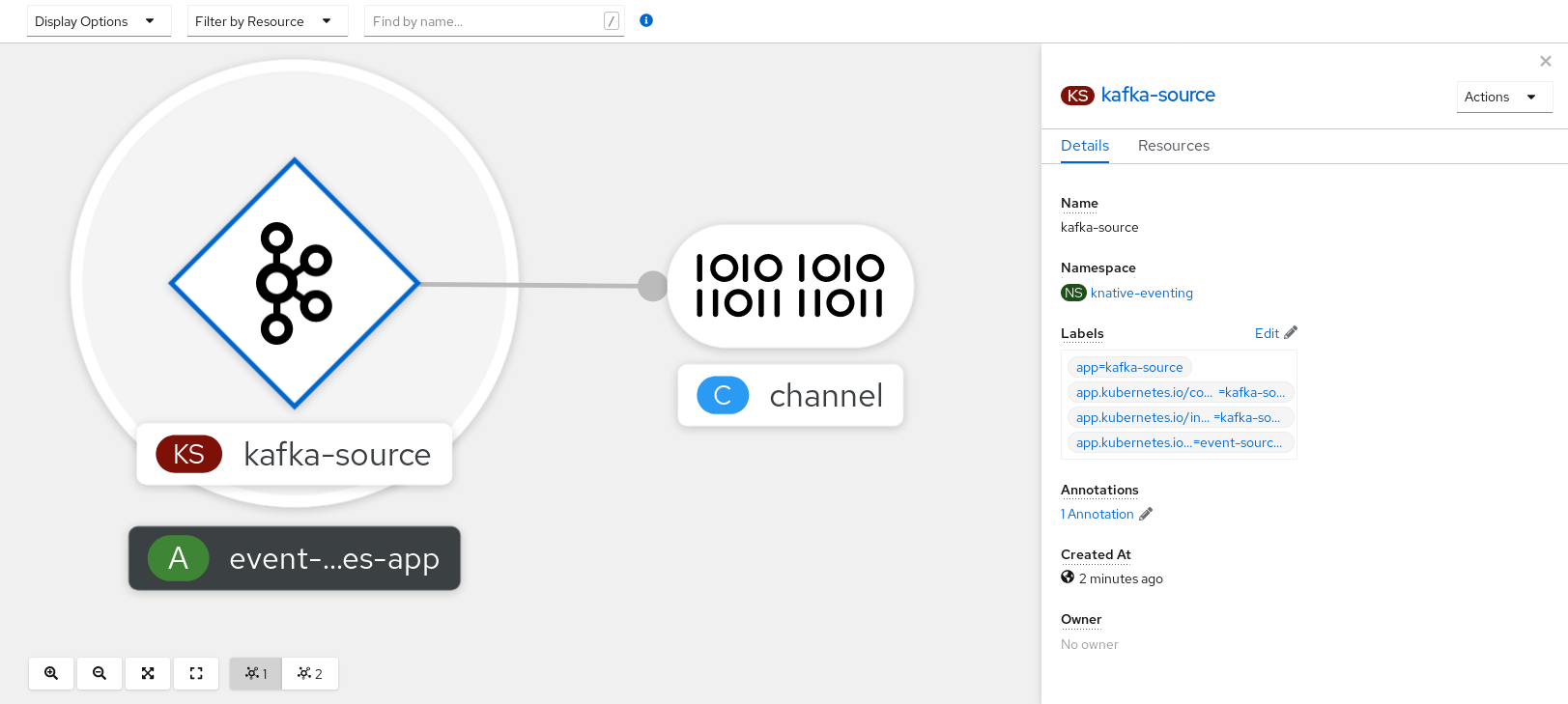

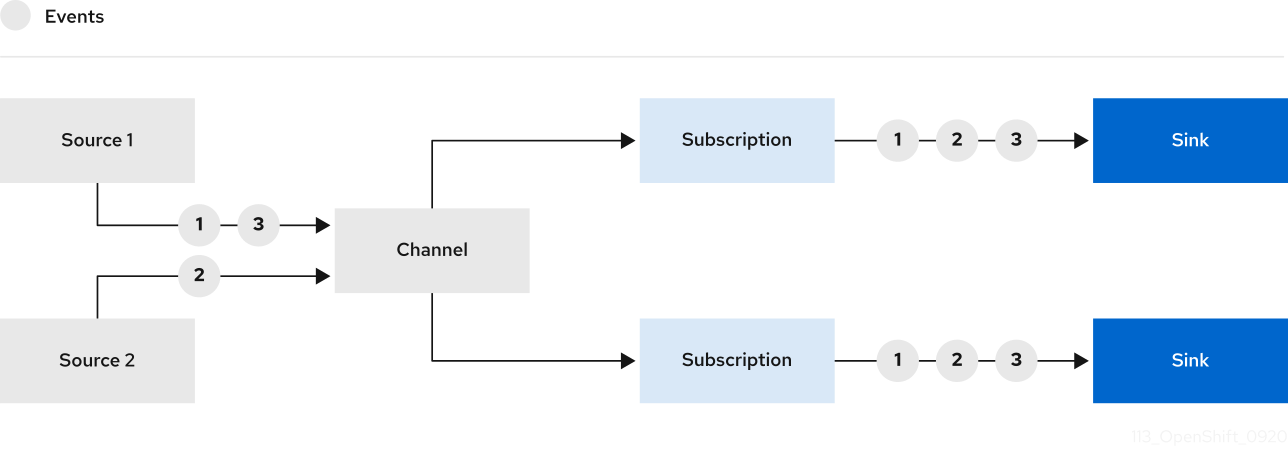

2.3.1. Using Knative Kafka

Knative Kafka provides integration options for you to use supported versions of the Apache Kafka message streaming platform with OpenShift Serverless. Kafka provides options for event source, channel, broker, and event sink capabilities.

Knative Kafka is not currently supported for IBM Z and IBM Power.

Knative Kafka provides additional options, such as:

- Kafka source

- Kafka channel

- Kafka broker

- Kafka sink

2.4. About OpenShift Serverless Functions

OpenShift Serverless Functions enables developers to create and deploy stateless, event-driven functions as a Knative service on OpenShift Container Platform. The kn func CLI is provided as a plugin for the Knative kn CLI. You can use the kn func CLI to create, build, and deploy the container image as a Knative service on the cluster.

2.4.1. Included runtimes

OpenShift Serverless Functions provides templates that can be used to create basic functions for the following runtimes:

2.4.2. Next steps

Chapter 3. Installing Serverless

3.1. Preparing to install OpenShift Serverless

Read the following information about supported configurations and prerequisites before you install OpenShift Serverless.

- OpenShift Serverless is supported for installation in a restricted network environment.

- OpenShift Serverless currently cannot be used in a multi-tenant configuration on a single cluster.

3.1.1. Supported configurations

The supported features, configurations, and integrations for current and past versions of OpenShift Serverless are listed on the Supported Configurations page.

3.1.2. Scalability and performance

OpenShift Serverless has been tested with a configuration of 3 main nodes and 3 worker nodes, each of which has 64 CPUs, 457 GB of memory, and 394 GB of storage.

The maximum number of Knative services that can be created using this configuration is 3,000. This corresponds to the OpenShift Container Platform Kubernetes services limit of 10,000, since 1 Knative service creates 3 Kubernetes services.
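The relationship between the two limits can be checked with a little arithmetic. The sketch below uses only the figures quoted above; the variable names are illustrative.

```shell
# Figures taken from the paragraph above.
k8s_service_limit=10000     # OpenShift Container Platform Kubernetes services limit
k8s_services_per_ksvc=3     # Kubernetes services created by one Knative service

# Integer division gives the theoretical ceiling on Knative services.
max_ksvc=$((k8s_service_limit / k8s_services_per_ksvc))
echo "Theoretical ceiling: ${max_ksvc} Knative services"

# Kubernetes services consumed at the tested maximum of 3,000 Knative services.
used_at_tested_max=$((3000 * k8s_services_per_ksvc))
echo "Kubernetes services used at 3000 Knative services: ${used_at_tested_max}"
```

The tested maximum of 3,000 therefore sits a little below the theoretical ceiling, which leaves part of the Kubernetes services budget for other workloads.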

The average scale from zero response time was approximately 3.4 seconds, with a maximum response time of 8 seconds, and a 99.9th percentile of 4.5 seconds for a simple Quarkus application. These times might vary depending on the application and the runtime of the application.

3.1.3. Defining cluster size requirements

To install and use OpenShift Serverless, the OpenShift Container Platform cluster must be sized correctly.

The following requirements relate only to the pool of worker machines of the OpenShift Container Platform cluster. Control plane nodes are not used for general scheduling and are omitted from the requirements.

The minimum requirement to use OpenShift Serverless is a cluster with 10 CPUs and 40GB memory. By default, each pod requests ~400m of CPU, so the minimum requirements are based on this value.

The total size requirements to run OpenShift Serverless are dependent on the components that are installed and the applications that are deployed, and might vary depending on your deployment.
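To see how the per-pod CPU request relates to the minimum cluster size, the following back-of-the-envelope calculation may help. It assumes every pod requests exactly 400m and ignores CPU consumed by anything else on the worker nodes, so treat the result as an upper bound rather than a sizing guarantee.

```shell
cluster_cpu_millicores=$((10 * 1000))   # the 10 CPU minimum, expressed in millicores
pod_request_millicores=400              # approximate default CPU request per pod

# Number of pods whose CPU requests fit into the minimum cluster size.
max_pods=$((cluster_cpu_millicores / pod_request_millicores))
echo "Pods that fit by CPU request alone: ${max_pods}"
```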

3.1.4. Scaling your cluster using compute machine sets

You can use the OpenShift Container Platform MachineSet API to manually scale your cluster up to the desired size. The minimum requirements usually mean that you must scale up one of the default compute machine sets by two additional machines. See Manually scaling a compute machine set.

3.1.4.1. Additional requirements for advanced use-cases

For more advanced use-cases such as logging or metering on OpenShift Container Platform, you must deploy more resources. Recommended requirements for such use-cases are 24 CPUs and 96GB of memory.

If you have high availability (HA) enabled on your cluster, each replica of the Knative Serving control plane requires between 0.5 and 1.5 cores and between 200 MB and 2 GB of memory. HA is enabled for some Knative Serving components by default. You can disable HA by following the documentation on "Configuring high availability replicas".

3.2. Installing the OpenShift Serverless Operator

Installing the OpenShift Serverless Operator enables you to install and use Knative Serving, Knative Eventing, and Knative Kafka on an OpenShift Container Platform cluster. The OpenShift Serverless Operator manages Knative custom resource definitions (CRDs) for your cluster and enables you to configure them without directly modifying individual config maps for each component.

3.2.1. Installing the OpenShift Serverless Operator from the web console

You can install the OpenShift Serverless Operator from the OperatorHub by using the OpenShift Container Platform web console. Installing this Operator enables you to install and use Knative components.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator access.

- You have logged in to the OpenShift Container Platform web console.

Procedure

- In the OpenShift Container Platform web console, navigate to the Operators → OperatorHub page.

- Scroll, or type the keyword Serverless into the Filter by keyword box to find the OpenShift Serverless Operator.

- Review the information about the Operator and click Install.

On the Install Operator page:

- The Installation Mode is All namespaces on the cluster (default). This mode installs the Operator in the default openshift-serverless namespace to watch and be made available to all namespaces in the cluster.

- The Installed Namespace is openshift-serverless.

- Select the stable channel as the Update Channel. The stable channel will enable installation of the latest stable release of the OpenShift Serverless Operator.

- Select Automatic or Manual approval strategy.

- Click Install to make the Operator available to the selected namespaces on this OpenShift Container Platform cluster.

From the Catalog → Operator Management page, you can monitor the OpenShift Serverless Operator subscription’s installation and upgrade progress.

- If you selected a Manual approval strategy, the subscription’s upgrade status will remain Upgrading until you review and approve its install plan. After approving on the Install Plan page, the subscription upgrade status moves to Up to date.

- If you selected an Automatic approval strategy, the upgrade status should resolve to Up to date without intervention.

Verification

After the Subscription’s upgrade status is Up to date, select Catalog → Installed Operators to verify that the OpenShift Serverless Operator eventually shows up and its Status ultimately resolves to InstallSucceeded in the relevant namespace.

If it does not:

- Switch to the Catalog → Operator Management page and inspect the Operator Subscriptions and Install Plans tabs for any failure or errors under Status.

- Check the logs in any pods in the openshift-serverless project on the Workloads → Pods page that are reporting issues to troubleshoot further.

If you want to use Red Hat OpenShift distributed tracing with OpenShift Serverless, you must install and configure Red Hat OpenShift distributed tracing before you install Knative Serving or Knative Eventing.

3.2.2. Installing the OpenShift Serverless Operator from the CLI

You can install the OpenShift Serverless Operator from the OperatorHub by using the CLI. Installing this Operator enables you to install and use Knative components.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator access.

- Your cluster has the Marketplace capability enabled or the Red Hat Operator catalog source configured manually.

- You have logged in to the OpenShift Container Platform cluster.

Procedure

Create a YAML file containing Namespace, OperatorGroup, and Subscription objects to subscribe a namespace to the OpenShift Serverless Operator. For example, create the file serverless-subscription.yaml with the following content:

Example subscription

- 1

- The channel name of the Operator. The stable channel enables installation of the most recent stable version of the OpenShift Serverless Operator.

- 2

- The name of the Operator to subscribe to. For the OpenShift Serverless Operator, this is always serverless-operator.

- 3

- The name of the CatalogSource that provides the Operator. Use redhat-operators for the default OperatorHub catalog sources.

- 4

- The namespace of the CatalogSource. Use openshift-marketplace for the default OperatorHub catalog sources.

Create the Subscription object:

$ oc apply -f serverless-subscription.yaml
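The example subscription file itself is not reproduced in this copy of the procedure. A minimal sketch that is consistent with the four callouts might look like the following. The openshift-serverless namespace comes from the web console procedure, while the OperatorGroup name serverless-operators and the empty OperatorGroup spec are assumptions made for illustration.

```shell
# Write the three objects described in the procedure to serverless-subscription.yaml.
cat > serverless-subscription.yaml <<'EOF'
---
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-serverless
---
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: serverless-operators          # assumed name, chosen for this example
  namespace: openshift-serverless
spec: {}
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: serverless-operator
  namespace: openshift-serverless
spec:
  channel: stable                         # (1) channel name of the Operator
  name: serverless-operator               # (2) name of the Operator to subscribe to
  source: redhat-operators                # (3) CatalogSource that provides the Operator
  sourceNamespace: openshift-marketplace  # (4) namespace of the CatalogSource
EOF
```

The resulting file can then be passed to oc apply as shown in the procedure.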

Verification

Check that the cluster service version (CSV) has reached the Succeeded phase:

Example command

$ oc get csv

Example output

NAME                          DISPLAY                        VERSION   REPLACES                      PHASE
serverless-operator.v1.25.0   Red Hat OpenShift Serverless   1.25.0    serverless-operator.v1.24.0   Succeeded

If you want to use Red Hat OpenShift distributed tracing with OpenShift Serverless, you must install and configure Red Hat OpenShift distributed tracing before you install Knative Serving or Knative Eventing.

3.2.3. Global configuration

The OpenShift Serverless Operator manages the global configuration of a Knative installation, including propagating values from the KnativeServing and KnativeEventing custom resources to system config maps. Any updates to config maps which are applied manually are overwritten by the Operator. However, modifying the Knative custom resources allows you to set values for these config maps.

Knative has multiple config maps that are named with the prefix config-. All Knative config maps are created in the same namespace as the custom resource that they apply to. For example, if the KnativeServing custom resource is created in the knative-serving namespace, all Knative Serving config maps are also created in this namespace.

The spec.config field in the Knative custom resources has one <name> entry for each config map, named config-<name>, with a value that is used for the config map data.
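To make the naming rule concrete, the following sketch writes a KnativeServing CR in which the defaults entry under spec.config would be propagated to the config-defaults config map. The revision-timeout-seconds key, its value, and the file name are illustrative choices for this example and are not taken from this document.

```shell
# Write an example KnativeServing CR; spec.config.<name> feeds the config-<name> config map.
cat > serving-config-example.yaml <<'EOF'
apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving
spec:
  config:
    defaults:                            # propagated to the config-defaults config map
      revision-timeout-seconds: "300"    # illustrative key and value
EOF
```

Because the Operator overwrites manual edits to the config maps, changing the value in the CR is the way to make such a setting persist.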

3.2.5. Next steps

- After the OpenShift Serverless Operator is installed, you can install Knative Serving or install Knative Eventing.

3.3. Installing the Knative CLI

The Knative (kn) CLI does not have its own login mechanism. To log in to the cluster, you must install the OpenShift CLI (oc) and use the oc login command. Installation options for the CLIs may vary depending on your operating system.

For more information on installing the OpenShift CLI (oc) for your operating system and logging in with oc, see the OpenShift CLI getting started documentation.

OpenShift Serverless cannot be installed using the Knative (kn) CLI. A cluster administrator must install the OpenShift Serverless Operator and set up the Knative components, as described in the Installing the OpenShift Serverless Operator documentation.

If you try to use an older version of the Knative (kn) CLI with a newer OpenShift Serverless release, the API is not found and an error occurs.

For example, if you use the 1.23.0 release of the Knative (kn) CLI, which uses version 1.2 of the Knative Serving and Knative Eventing APIs, with the 1.24.0 OpenShift Serverless release, which uses version 1.3 of these APIs, the CLI does not work because it continues to look for the outdated 1.2 API versions.

Ensure that you are using the latest Knative (kn) CLI version for your OpenShift Serverless release to avoid issues.

3.3.1. Installing the Knative CLI using the OpenShift Container Platform web console

Using the OpenShift Container Platform web console provides a streamlined and intuitive user interface to install the Knative (kn) CLI. After the OpenShift Serverless Operator is installed, you will see a link to download the Knative (kn) CLI for Linux (amd64, s390x, ppc64le), macOS, or Windows from the Command Line Tools page in the OpenShift Container Platform web console.

Prerequisites

- You have logged in to the OpenShift Container Platform web console.

- The OpenShift Serverless Operator and Knative Serving are installed on your OpenShift Container Platform cluster.

  Important

  If libc is not available, you might see the following error when you run CLI commands:

  $ kn: No such file or directory

- If you want to use the verification steps for this procedure, you must install the OpenShift (oc) CLI.

Procedure

- Download the Knative (kn) CLI from the Command Line Tools page. You can access the Command Line Tools page by clicking the icon in the top right corner of the web console and selecting Command Line Tools in the list.

- Unpack the archive:

  $ tar -xf <file>

- Move the kn binary to a directory on your PATH.

- To check your PATH, run:

  $ echo $PATH

Verification

Run the following commands to check that the correct Knative CLI resources and route have been created:

$ oc get ConsoleCLIDownload

Example output

NAME               DISPLAY NAME                                             AGE
kn                 kn - OpenShift Serverless Command Line Interface (CLI)   2022-09-20T08:41:18Z
oc-cli-downloads   oc - OpenShift Command Line Interface (CLI)              2022-09-20T08:00:20Z

$ oc get route -n openshift-serverless

Example output

NAME   HOST/PORT                                  PATH   SERVICES                      PORT       TERMINATION     WILDCARD
kn     kn-openshift-serverless.apps.example.com          knative-openshift-metrics-3   http-cli   edge/Redirect   None

3.3.2. Installing the Knative CLI for Linux by using an RPM package manager

For Red Hat Enterprise Linux (RHEL), you can install the Knative (kn) CLI as an RPM by using a package manager, such as yum or dnf. This allows the Knative CLI version to be automatically managed by the system. For example, using a command like dnf upgrade upgrades all packages, including kn, if a new version is available.

Prerequisites

- You have an active OpenShift Container Platform subscription on your Red Hat account.

Procedure

Register with Red Hat Subscription Manager:

# subscription-manager register

Pull the latest subscription data:

# subscription-manager refresh

Attach the subscription to the registered system:

# subscription-manager attach --pool=<pool_id>

Replace <pool_id> with the pool ID for an active OpenShift Container Platform subscription.

Enable the repositories required by the Knative (kn) CLI:

Linux (x86_64, amd64)

# subscription-manager repos --enable="openshift-serverless-1-for-rhel-8-x86_64-rpms"

Linux on IBM Z and LinuxONE (s390x)

# subscription-manager repos --enable="openshift-serverless-1-for-rhel-8-s390x-rpms"

Linux on IBM Power (ppc64le)

# subscription-manager repos --enable="openshift-serverless-1-for-rhel-8-ppc64le-rpms"

Install the Knative (kn) CLI as an RPM by using a package manager:

Example yum command

# yum install openshift-serverless-clients

3.3.3. Installing the Knative CLI for Linux

If you are using a Linux distribution that does not have RPM or another package manager installed, you can install the Knative (kn) CLI as a binary file. To do this, you must download and unpack a tar.gz archive and add the binary to a directory on your PATH.

Prerequisites

- If you are not using RHEL or Fedora, ensure that libc is installed in a directory on your library path.

  Important

  If libc is not available, you might see the following error when you run CLI commands:

  $ kn: No such file or directory

Procedure

- Download the relevant Knative (kn) CLI tar.gz archive:

  You can also download any version of kn by navigating to that version’s corresponding directory in the Serverless client download mirror.

- Unpack the archive:

  $ tar -xf <filename>

- Move the kn binary to a directory on your PATH.

- To check your PATH, run:

  $ echo $PATH
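Reading the output of echo $PATH by eye can be error-prone when it is long. As an optional convenience, the following sketch checks whether a given directory is one of the PATH entries. The dir_on_path helper and the $HOME/.local/bin location are hypothetical choices for this example; any directory on your PATH works for the kn binary.

```shell
# Return success if the given directory is one of the colon-separated entries in PATH.
dir_on_path() {
  case ":${PATH}:" in
    *":$1:"*) return 0 ;;
    *)        return 1 ;;
  esac
}

target_dir="$HOME/.local/bin"   # hypothetical install location for the kn binary
if dir_on_path "$target_dir"; then
  echo "$target_dir is on PATH"
else
  echo "$target_dir is not on PATH; add it or choose another directory"
fi
```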

3.3.4. Installing the Knative CLI for macOS

If you are using macOS, you can install the Knative (kn) CLI as a binary file. To do this, you must download and unpack a tar.gz archive and add the binary to a directory on your PATH.

Procedure

- Download the Knative (kn) CLI tar.gz archive.

  You can also download any version of kn by navigating to that version’s corresponding directory in the Serverless client download mirror.

- Unpack and extract the archive.

- Move the kn binary to a directory on your PATH.

- To check your PATH, open a terminal window and run:

  $ echo $PATH

3.3.5. Installing the Knative CLI for Windows

If you are using Windows, you can install the Knative (kn) CLI as a binary file. To do this, you must download and unpack a ZIP archive and add the binary to a directory on your PATH.

Procedure

- Download the Knative (kn) CLI ZIP archive.

  You can also download any version of kn by navigating to that version’s corresponding directory in the Serverless client download mirror.

- Extract the archive with a ZIP program.

- Move the kn binary to a directory on your PATH.

- To check your PATH, open the command prompt and run the command:

  C:\> path

3.4. Installing Knative Serving

Installing Knative Serving allows you to create Knative services and functions on your cluster. It also allows you to use additional functionality such as autoscaling and networking options for your applications.

After you install the OpenShift Serverless Operator, you can install Knative Serving by using the default settings, or configure more advanced settings in the KnativeServing custom resource (CR). For more information about configuration options for the KnativeServing CR, see Global configuration.

If you want to use Red Hat OpenShift distributed tracing with OpenShift Serverless, you must install and configure Red Hat OpenShift distributed tracing before you install Knative Serving.

3.4.1. Installing Knative Serving by using the web console

After you install the OpenShift Serverless Operator, install Knative Serving by using the OpenShift Container Platform web console. You can install Knative Serving by using the default settings or configure more advanced settings in the KnativeServing custom resource (CR).

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator access.

- You have logged in to the OpenShift Container Platform web console.

- You have installed the OpenShift Serverless Operator.

Procedure

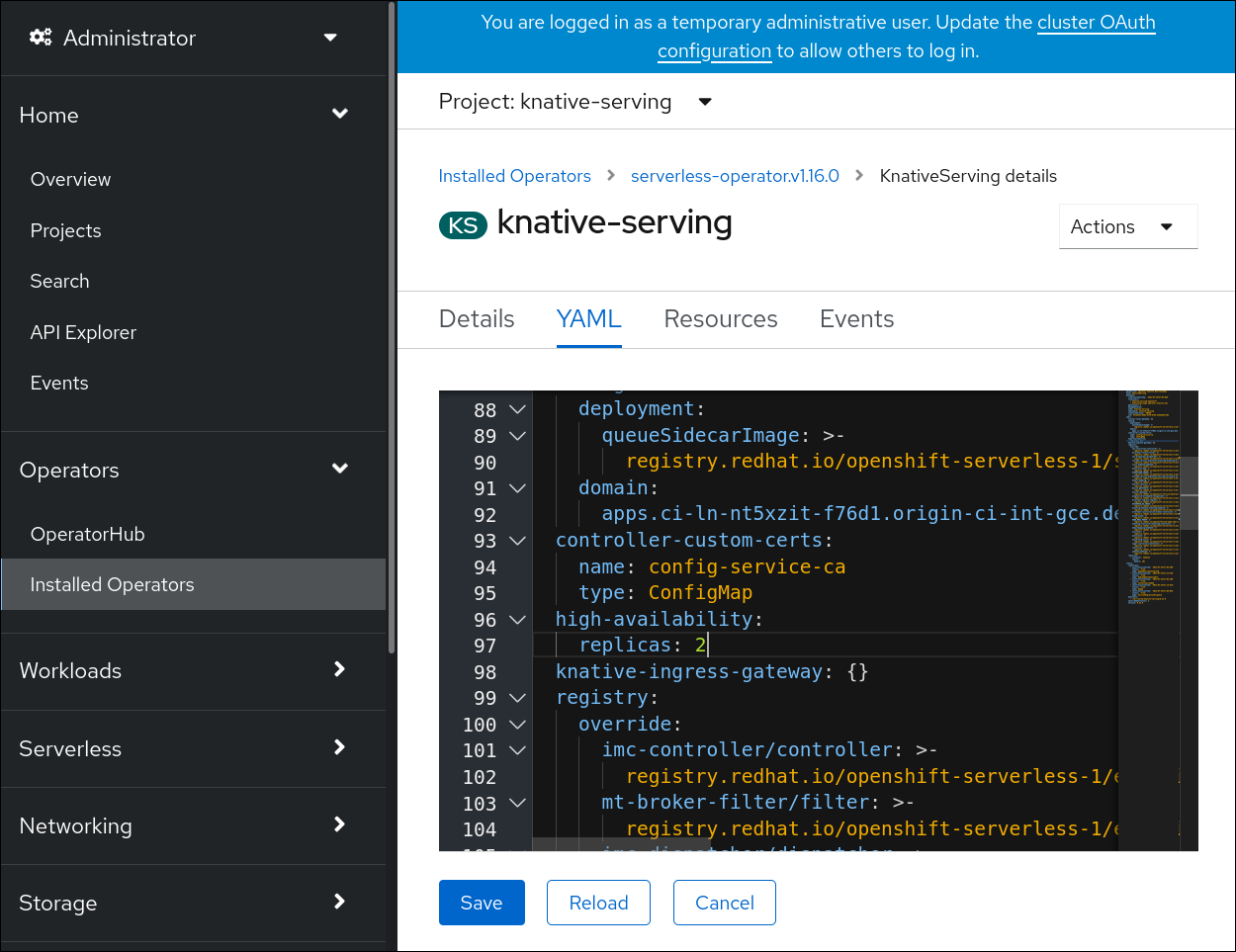

- In the Administrator perspective of the OpenShift Container Platform web console, navigate to Operators → Installed Operators.

- Check that the Project dropdown at the top of the page is set to Project: knative-serving.

- Click Knative Serving in the list of Provided APIs for the OpenShift Serverless Operator to go to the Knative Serving tab.

- Click Create Knative Serving.

- In the Create Knative Serving page, you can install Knative Serving using the default settings by clicking Create.

  You can also modify settings for the Knative Serving installation by editing the KnativeServing object using either the form provided, or by editing the YAML.

  - Using the form is recommended for simpler configurations that do not require full control of KnativeServing object creation.

  - Editing the YAML is recommended for more complex configurations that require full control of KnativeServing object creation. You can access the YAML by clicking the edit YAML link in the top right of the Create Knative Serving page.

  After you complete the form, or have finished modifying the YAML, click Create.

  Note

  For more information about configuration options for the KnativeServing custom resource definition, see the documentation on Advanced installation configuration options.

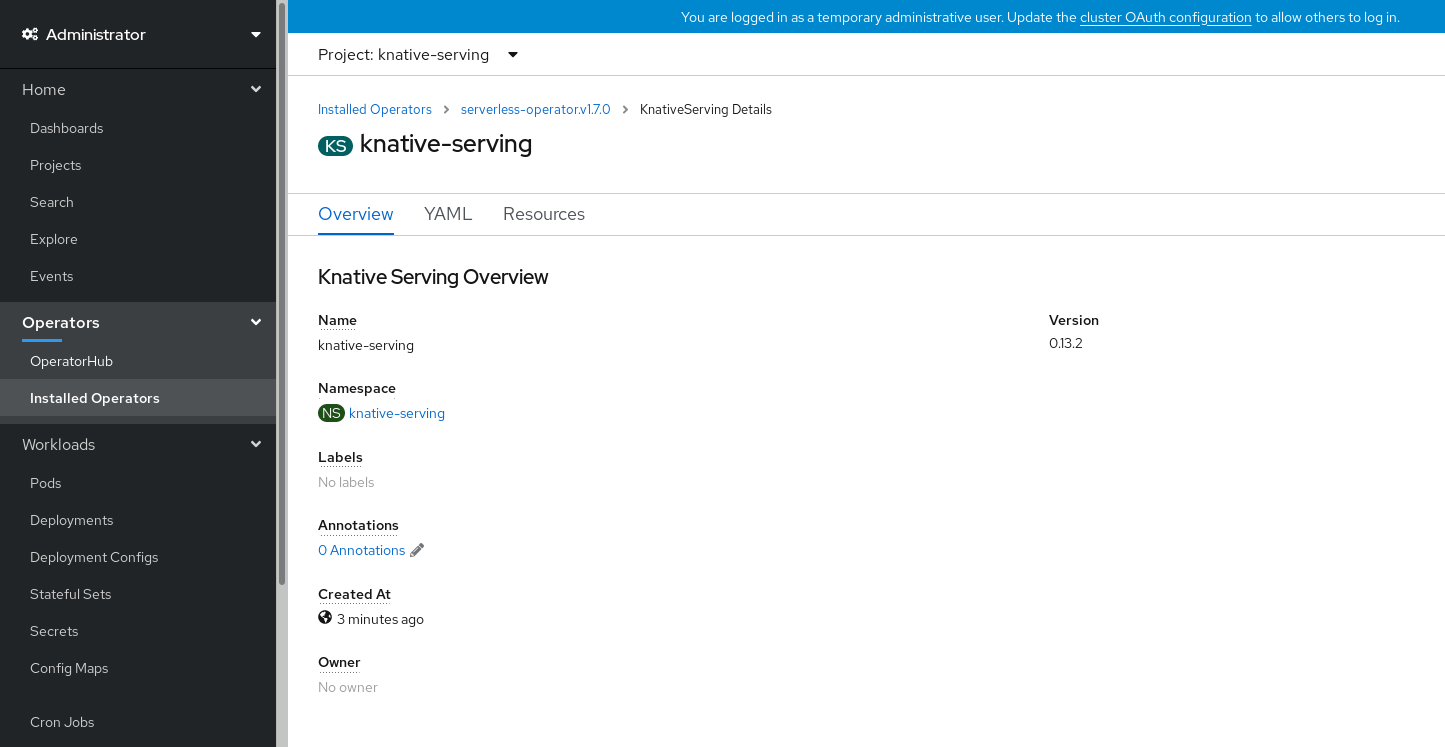

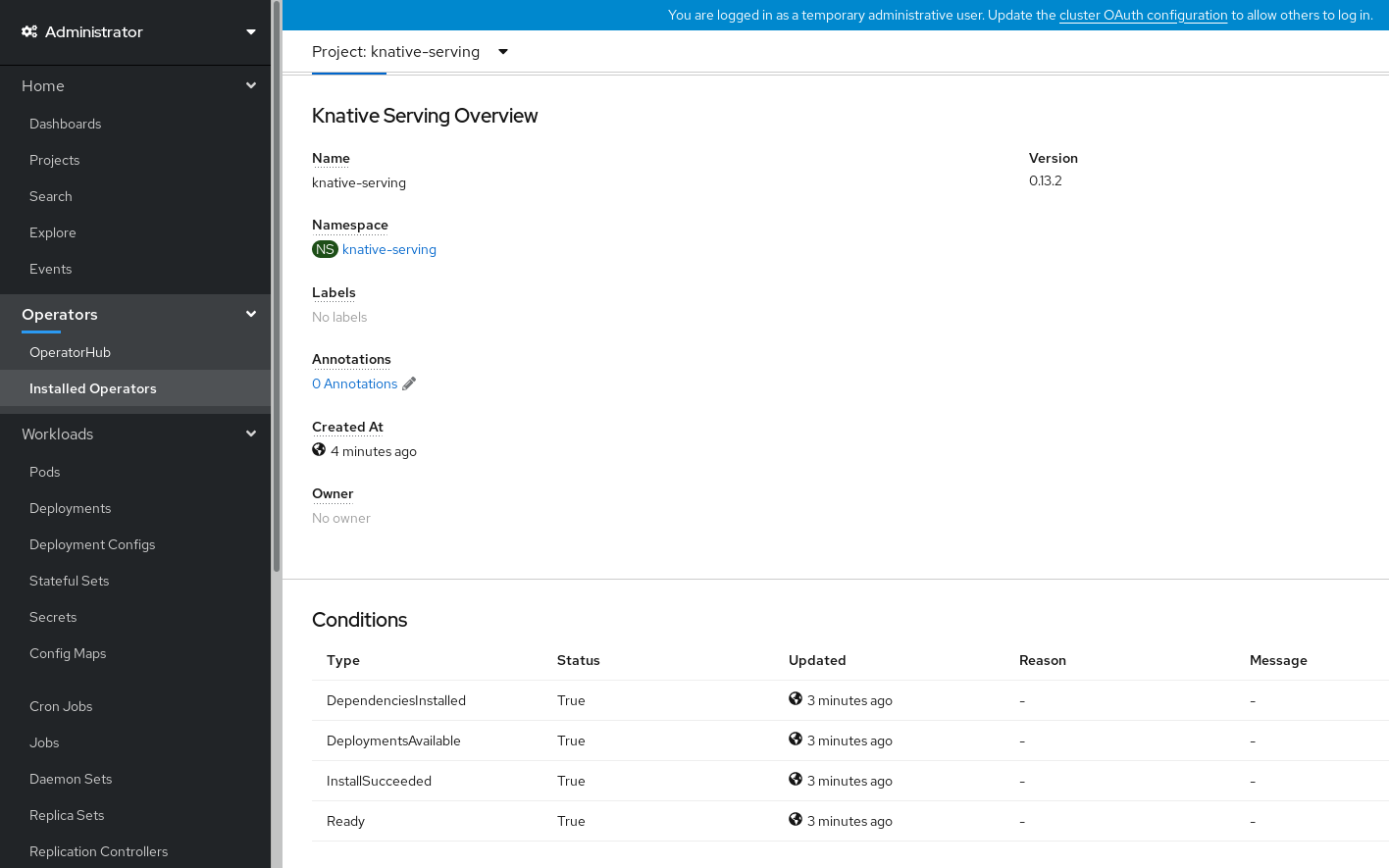

- After you have installed Knative Serving, the KnativeServing object is created, and you are automatically directed to the Knative Serving tab. You will see the knative-serving custom resource in the list of resources.

Verification

- Click the knative-serving custom resource in the Knative Serving tab. You will be automatically directed to the Knative Serving Overview page.

- Scroll down to look at the list of Conditions.

You should see a list of conditions with a status of True, as shown in the example image.

Note

It may take a few seconds for the Knative Serving resources to be created. You can check their status in the Resources tab.

- If the conditions have a status of Unknown or False, wait a few moments and then check again after you have confirmed that the resources have been created.

3.4.2. Installing Knative Serving by using YAML

After you install the OpenShift Serverless Operator, you can install Knative Serving by using the default settings, or configure more advanced settings in the KnativeServing custom resource (CR). You can use the following procedure to install Knative Serving by using YAML files and the oc CLI.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator access.

- You have installed the OpenShift Serverless Operator.

- Install the OpenShift CLI (oc).

Procedure

Create a file named serving.yaml and copy the following example YAML into it:

apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving

Apply the serving.yaml file:

$ oc apply -f serving.yaml

Verification

To verify the installation is complete, enter the following command:

$ oc get knativeserving.operator.knative.dev/knative-serving -n knative-serving --template='{{range .status.conditions}}{{printf "%s=%s\n" .type .status}}{{end}}'

Example output

DependenciesInstalled=True
DeploymentsAvailable=True
InstallSucceeded=True
Ready=True

Note

It may take a few seconds for the Knative Serving resources to be created.

If the conditions have a status of Unknown or False, wait a few moments and then check again after you have confirmed that the resources have been created.

Check that the Knative Serving resources have been created:

oc get pods -n knative-serving

$ oc get pods -n knative-servingCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Check that the necessary networking components have been installed to the automatically created

knative-serving-ingressnamespace:oc get pods -n knative-serving-ingress

$ oc get pods -n knative-serving-ingressCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME READY STATUS RESTARTS AGE net-kourier-controller-7d4b6c5d95-62mkf 1/1 Running 0 76s net-kourier-controller-7d4b6c5d95-qmgm2 1/1 Running 0 76s 3scale-kourier-gateway-6688b49568-987qz 1/1 Running 0 75s 3scale-kourier-gateway-6688b49568-b5tnp 1/1 Running 0 75s

NAME READY STATUS RESTARTS AGE net-kourier-controller-7d4b6c5d95-62mkf 1/1 Running 0 76s net-kourier-controller-7d4b6c5d95-qmgm2 1/1 Running 0 76s 3scale-kourier-gateway-6688b49568-987qz 1/1 Running 0 75s 3scale-kourier-gateway-6688b49568-b5tnp 1/1 Running 0 75sCopy to Clipboard Copied! Toggle word wrap Toggle overflow
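The condition check in the verification steps above can also be scripted. The following is a minimal sketch, not part of the official procedure: not_ready_conditions is a hypothetical helper name, and it assumes the input is the Type=Status lines produced by the --template expression shown earlier.

```shell
# Hypothetical helper (not part of oc): reads "Type=Status" lines on stdin
# and prints the type of every condition whose status is not True.
# Returns 0 only when at least one condition was read and all are True.
#
# Intended use (requires a logged-in oc session):
#   oc get knativeserving.operator.knative.dev/knative-serving -n knative-serving \
#     --template='{{range .status.conditions}}{{printf "%s=%s\n" .type .status}}{{end}}' \
#     | not_ready_conditions
not_ready_conditions() {
    awk -F= '
        NF >= 2 {
            seen++
            if ($2 != "True") { print $1; bad++ }
        }
        END {
            if (seen > 0 && bad == 0) exit 0
            exit 1
        }
    '
}
```

Because the helper returns a non-zero status while any condition is Unknown or False, it can be called in a retry loop until the installation settles.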

3.4.3. Next steps

- If you want to use Knative event-driven architecture, you can install Knative Eventing.

3.5. Installing Knative Eventing

To use event-driven architecture on your cluster, install Knative Eventing. You can create Knative components such as event sources, brokers, and channels and then use them to send events to applications or external systems.

After you install the OpenShift Serverless Operator, you can install Knative Eventing by using the default settings, or configure more advanced settings in the KnativeEventing custom resource (CR). For more information about configuration options for the KnativeEventing CR, see Global configuration.

If you want to use Red Hat OpenShift distributed tracing with OpenShift Serverless, you must install and configure Red Hat OpenShift distributed tracing before you install Knative Eventing.

3.5.1. Installing Knative Eventing by using the web console

After you install the OpenShift Serverless Operator, install Knative Eventing by using the OpenShift Container Platform web console. You can install Knative Eventing by using the default settings or configure more advanced settings in the KnativeEventing custom resource (CR).

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator access.

- You have logged in to the OpenShift Container Platform web console.

- You have installed the OpenShift Serverless Operator.

Procedure

- In the Administrator perspective of the OpenShift Container Platform web console, navigate to Operators → Installed Operators.

- Check that the Project dropdown at the top of the page is set to Project: knative-eventing.

- Click Knative Eventing in the list of Provided APIs for the OpenShift Serverless Operator to go to the Knative Eventing tab.

- Click Create Knative Eventing.

- In the Create Knative Eventing page, you can configure the KnativeEventing object by using either the default form provided or by editing the YAML.

  - Using the form is recommended for simpler configurations that do not require full control of KnativeEventing object creation.

    Optional. If you are configuring the KnativeEventing object using the form, make any changes that you want to implement for your Knative Eventing deployment.

  - Editing the YAML is recommended for more complex configurations that require full control of KnativeEventing object creation. You can access the YAML by clicking the edit YAML link in the top right of the Create Knative Eventing page.

    Optional. If you are configuring the KnativeEventing object by editing the YAML, make any changes to the YAML that you want to implement for your Knative Eventing deployment.

- Click Create.

-

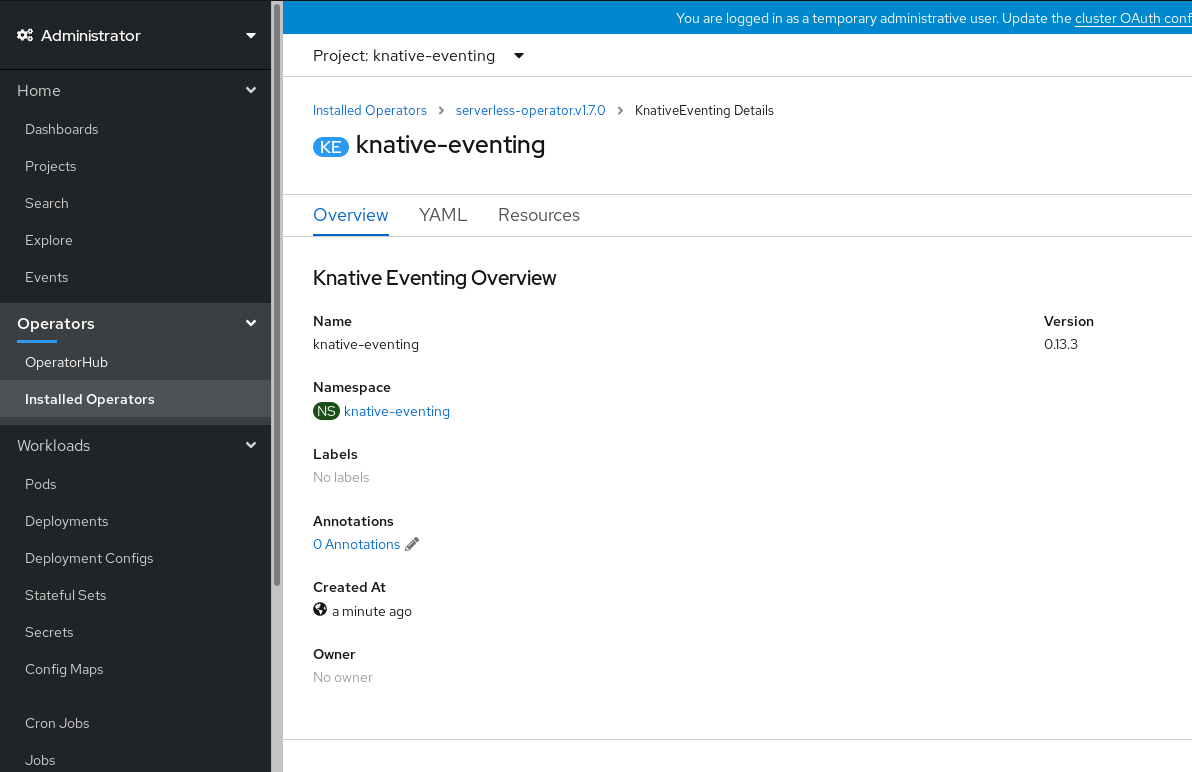

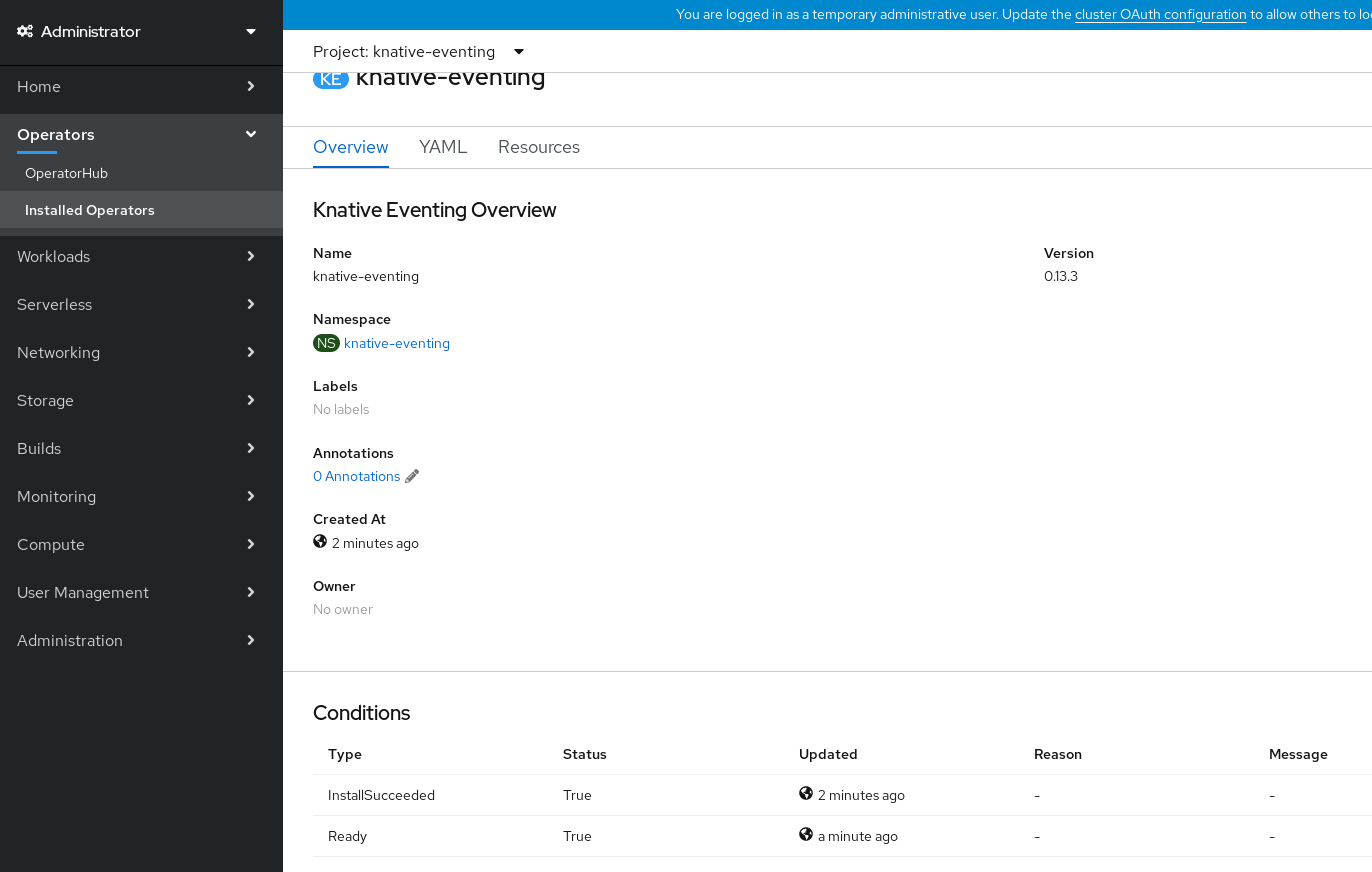

After you have installed Knative Eventing, the

KnativeEventingobject is created, and you are automatically directed to the Knative Eventing tab. You will see theknative-eventingcustom resource in the list of resources.

Verification

- Click the knative-eventing custom resource in the Knative Eventing tab. You are automatically directed to the Knative Eventing Overview page.

- Scroll down to look at the list of Conditions.

You should see a list of conditions with a status of True, as shown in the example image.

Note: It may take a few seconds for the Knative Eventing resources to be created. You can check their status in the Resources tab.

- If the conditions have a status of Unknown or False, wait a few moments and then check again after you have confirmed that the resources have been created.

3.5.2. Installing Knative Eventing by using YAML

After you install the OpenShift Serverless Operator, you can install Knative Eventing by using the default settings, or configure more advanced settings in the KnativeEventing custom resource (CR). You can use the following procedure to install Knative Eventing by using YAML files and the oc CLI.

Prerequisites

- You have access to an OpenShift Container Platform account with cluster administrator access.

- You have installed the OpenShift Serverless Operator.

- Install the OpenShift CLI (oc).

Procedure

- Create a file named eventing.yaml.

- Copy the following sample YAML into eventing.yaml:

```yaml
apiVersion: operator.knative.dev/v1beta1
kind: KnativeEventing
metadata:
  name: knative-eventing
  namespace: knative-eventing
```

- Optional. Make any changes to the YAML that you want to implement for your Knative Eventing deployment.

- Apply the eventing.yaml file by entering:

```
$ oc apply -f eventing.yaml
```

Verification

- Verify that the installation is complete by entering the following command and observing the output:

```
$ oc get knativeeventing.operator.knative.dev/knative-eventing \
  -n knative-eventing \
  --template='{{range .status.conditions}}{{printf "%s=%s\n" .type .status}}{{end}}'
```

Example output

```
InstallSucceeded=True
Ready=True
```

Note: It may take a few seconds for the Knative Eventing resources to be created.

- If the conditions have a status of Unknown or False, wait a few moments and then check again after you have confirmed that the resources have been created.

- Check that the Knative Eventing resources have been created by entering:

```
$ oc get pods -n knative-eventing
```

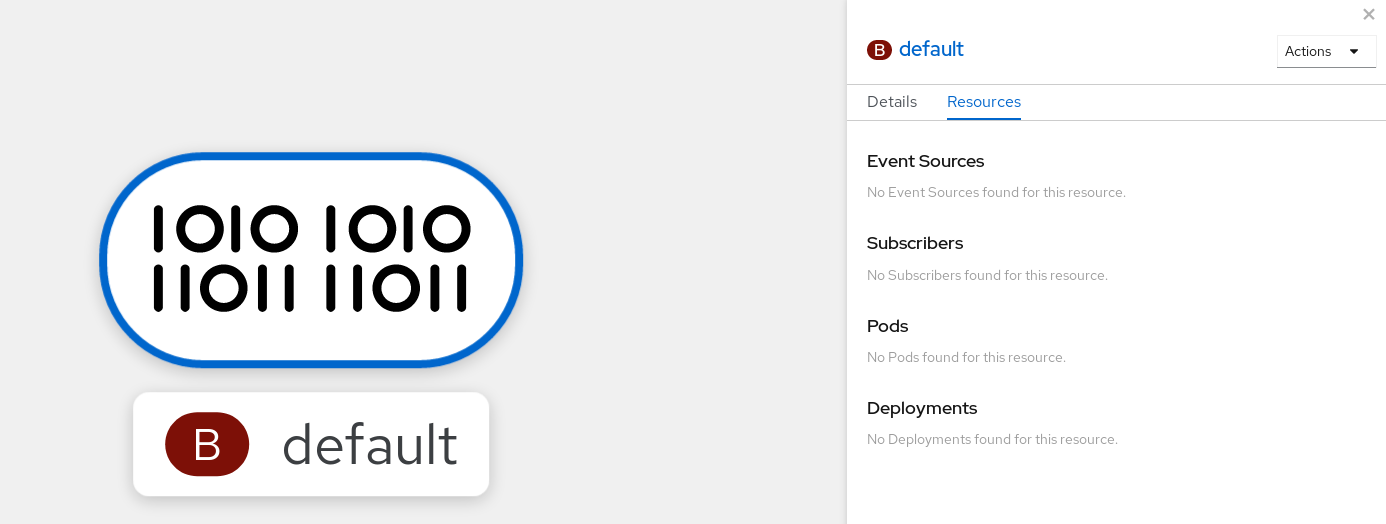

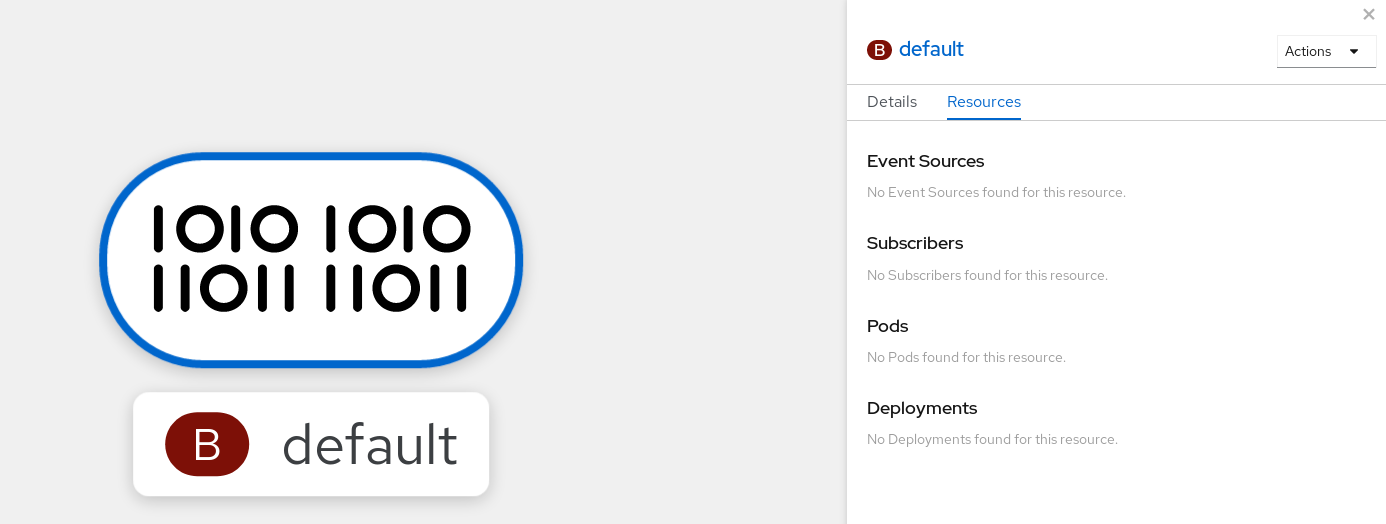

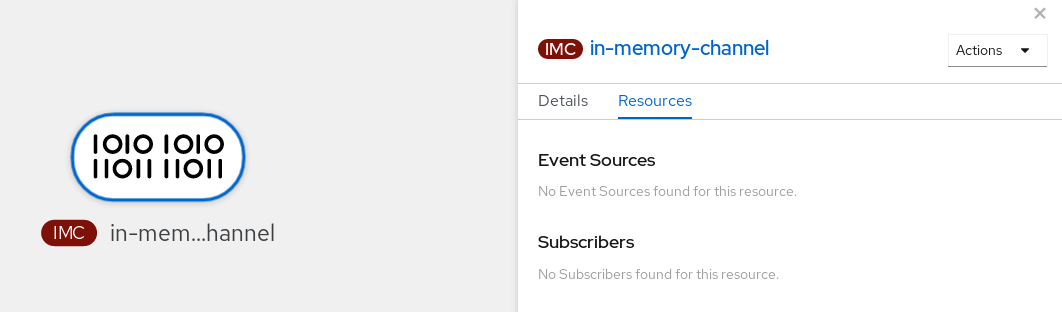

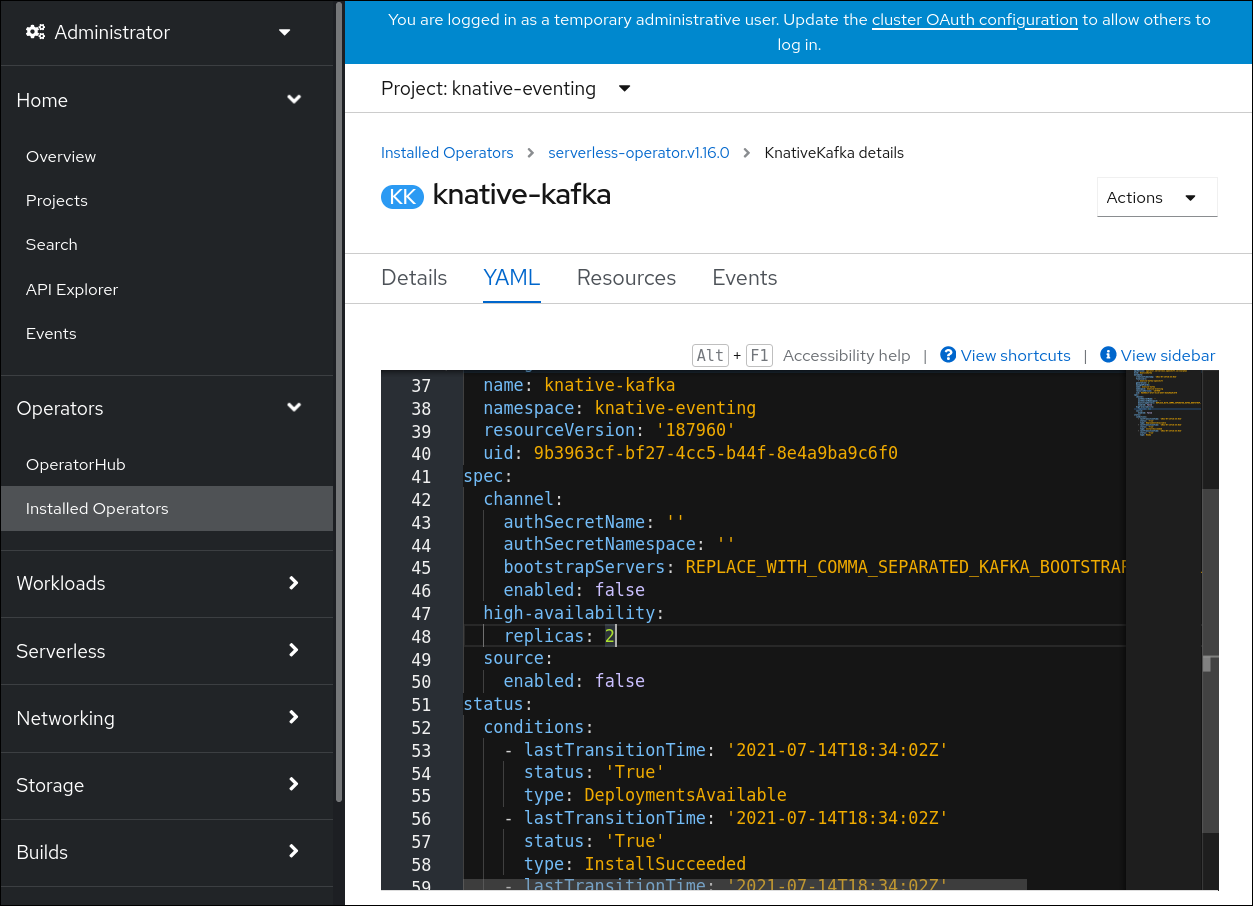
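Instead of re-running the condition query by hand, you can block until the resource reports Ready. This is a sketch under stated assumptions: wait_for_knative_ready is a hypothetical wrapper name, the 300s default timeout is an arbitrary choice, and the wrapper relies on the standard oc wait subcommand with its --for=condition and --timeout options.

```shell
# Hypothetical wrapper around "oc wait": blocks until the given operator
# resource reports the Ready condition, or until the timeout expires.
# Arguments: <resource> <namespace> [timeout]
wait_for_knative_ready() {
    resource=$1          # e.g. knativeeventing.operator.knative.dev/knative-eventing
    namespace=$2         # e.g. knative-eventing
    timeout=${3:-300s}   # assumed default; tune for your cluster
    oc wait "$resource" -n "$namespace" --for=condition=Ready --timeout="$timeout"
}
```

For the installation described above, the call would be wait_for_knative_ready knativeeventing.operator.knative.dev/knative-eventing knative-eventing. The same wrapper should work for the KnativeServing resource, because it also exposes a Ready condition.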

3.5.3. Installing Knative Kafka

Knative Kafka provides integration options for you to use supported versions of the Apache Kafka message streaming platform with OpenShift Serverless. Knative Kafka functionality is available in an OpenShift Serverless installation if you have installed the KnativeKafka custom resource.

Prerequisites

- You have installed the OpenShift Serverless Operator and Knative Eventing on your cluster.

- You have access to a Red Hat AMQ Streams cluster.

- Install the OpenShift CLI (oc) if you want to use the verification steps.

- You have cluster administrator permissions on OpenShift Container Platform.

- You are logged in to the OpenShift Container Platform web console.

Procedure

- In the Administrator perspective, navigate to Operators → Installed Operators.

- Check that the Project dropdown at the top of the page is set to Project: knative-eventing.

- In the list of Provided APIs for the OpenShift Serverless Operator, find the Knative Kafka box and click Create Instance.

- Configure the KnativeKafka object in the Create Knative Kafka page.

Important: To use the Kafka channel, source, broker, or sink on your cluster, you must toggle the enabled switch for the options you want to use to true. These switches are set to false by default. Additionally, to use the Kafka channel, broker, or sink, you must specify the bootstrap servers.

Example KnativeKafka custom resource settings:

1. Enables developers to use the KafkaChannel channel type in the cluster.
2. A comma-separated list of bootstrap servers from your AMQ Streams cluster, used by the Kafka channel.
3. Enables developers to use the KafkaSource event source type in the cluster.
4. Enables developers to use the Knative Kafka broker implementation in the cluster.
5. A comma-separated list of bootstrap servers from your Red Hat AMQ Streams cluster, used by the Kafka broker.
6. Defines the number of partitions of the Kafka topics, backed by the Broker objects. The default is 10.
7. Defines the replication factor of the Kafka topics, backed by the Broker objects. The default is 3.
8. Enables developers to use a Kafka sink in the cluster.

Note: The replicationFactor value must be less than or equal to the number of nodes of your Red Hat AMQ Streams cluster.

- Using the form is recommended for simpler configurations that do not require full control of KnativeKafka object creation.

- Editing the YAML is recommended for more complex configurations that require full control of KnativeKafka object creation. You can access the YAML by clicking the Edit YAML link in the top right of the Create Knative Kafka page.

- Click Create after you have completed any of the optional configurations for Kafka. You are automatically directed to the Knative Kafka tab where knative-kafka is in the list of resources.

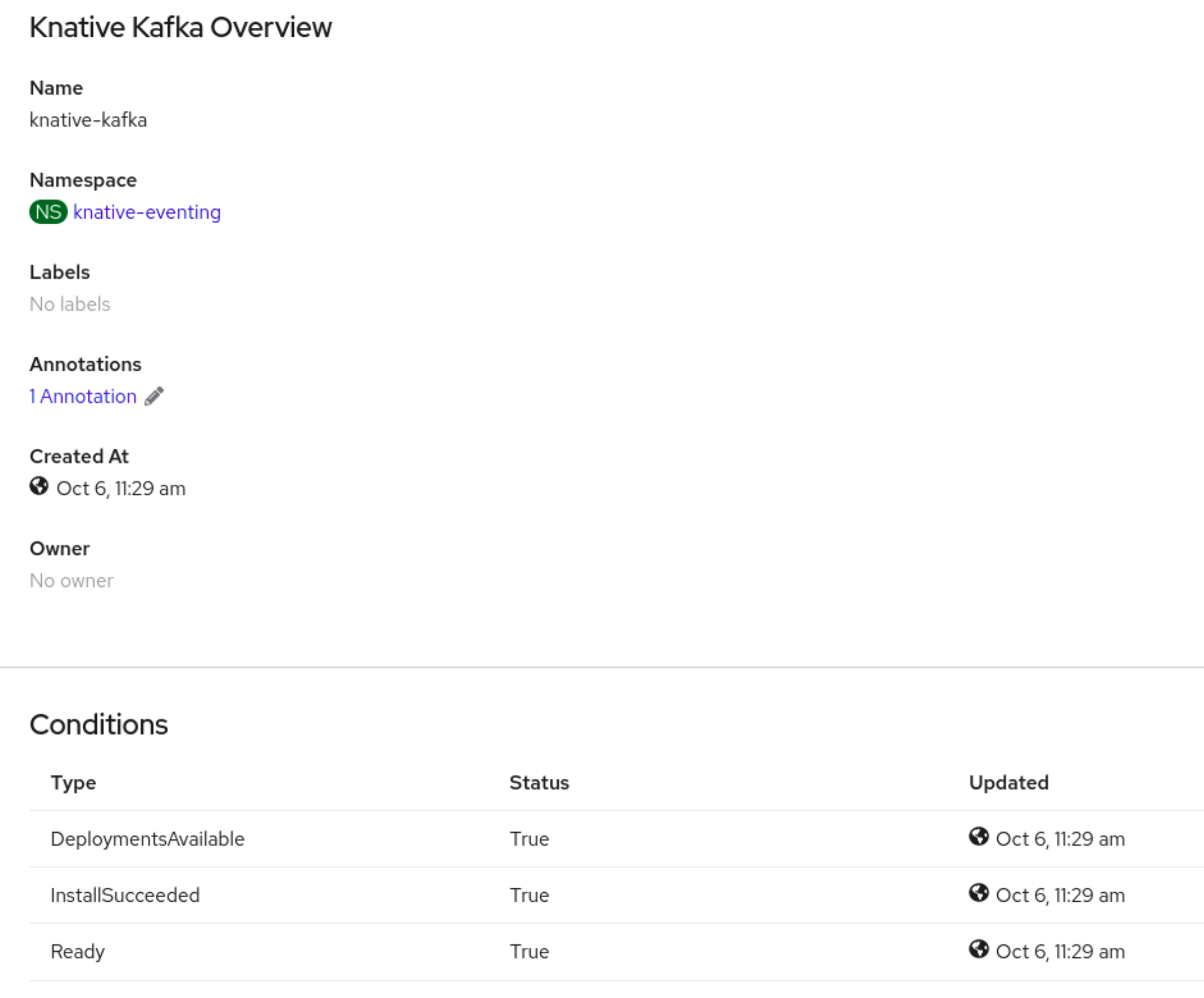
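If you choose the YAML route, a manifest along the following lines covers the eight numbered settings described above. Treat it as a sketch rather than the authoritative example: write_knative_kafka_manifest is a hypothetical helper, and the apiVersion and the spec field names other than replicationFactor are assumptions that you should check against the KnativeKafka CRD on your cluster. The helper also enforces the note about the replication factor before writing the file.

```shell
# Hypothetical helper: writes a KnativeKafka manifest to <output_file>.
# Arguments: <bootstrap_servers> <num_partitions> <replication_factor> <kafka_nodes> <output_file>
# The apiVersion and most field names below are assumptions; verify them on your cluster.
write_knative_kafka_manifest() {
    bootstrap=$1
    partitions=$2
    replication=$3
    kafka_nodes=$4
    out=$5
    # replicationFactor must not exceed the number of Kafka nodes.
    if [ "$replication" -gt "$kafka_nodes" ]; then
        echo "replicationFactor $replication exceeds $kafka_nodes Kafka nodes" >&2
        return 1
    fi
    cat > "$out" <<EOF
apiVersion: operator.serverless.openshift.io/v1alpha1
kind: KnativeKafka
metadata:
  name: knative-kafka
  namespace: knative-eventing
spec:
  channel:
    enabled: true                      # (1)
    bootstrapServers: $bootstrap       # (2)
  source:
    enabled: true                      # (3)
  broker:
    enabled: true                      # (4)
    defaultConfig:
      bootstrapServers: $bootstrap     # (5)
      numPartitions: $partitions       # (6)
      replicationFactor: $replication  # (7)
  sink:
    enabled: true                      # (8)
EOF
}
```

After reviewing the generated file, you can either paste it into the YAML editor of the Create Knative Kafka page or apply it with oc apply -f.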

Verification

- Click on the knative-kafka resource in the Knative Kafka tab. You are automatically directed to the Knative Kafka Overview page.

- View the list of Conditions for the resource and confirm that they have a status of True.

- If the conditions have a status of Unknown or False, wait a few moments and then refresh the page.

- Check that the Knative Kafka resources have been created:

```
$ oc get pods -n knative-eventing
```
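To avoid scanning the pod list by eye, you can filter it for pods that are not yet healthy. The following is a minimal sketch: pods_not_running is a hypothetical helper name, and it assumes the default column layout of oc get pods (NAME, READY, STATUS, ...) with the header row suppressed by the --no-headers option.

```shell
# Hypothetical helper: reads "oc get pods --no-headers" output on stdin and
# prints the name and status of every pod that is neither Running nor Completed.
# Returns non-zero if any such pod is found.
#
# Intended use (requires a logged-in oc session):
#   oc get pods -n knative-eventing --no-headers | pods_not_running
pods_not_running() {
    awk '
        NF >= 3 && $3 != "Running" && $3 != "Completed" {
            print $1, $3
            bad++
        }
        END { if (bad > 0) exit 1 }
    '
}
```

An empty result with a zero exit status suggests that every listed pod has started. It does not confirm that all expected Knative Kafka pods exist, so compare the list against the components you enabled.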

3.5.4. Next steps

- If you want to use Knative services, you can install Knative Serving.

3.6. Configuring Knative Kafka

Knative Kafka provides integration options for you to use supported versions of the Apache Kafka message streaming platform with OpenShift Serverless. Kafka provides options for event source, channel, broker, and event sink capabilities.

In addition to the Knative Eventing components that are provided as part of a core OpenShift Serverless installation, cluster administrators can install the KnativeKafka custom resource (CR).

Knative Kafka is not currently supported for IBM Z and IBM Power.

The KnativeKafka CR provides users with additional options, such as:

- Kafka source

- Kafka channel

- Kafka broker

- Kafka sink

3.7. Configuring OpenShift Serverless Functions

To streamline deployment of your application code, you can use OpenShift Serverless to deploy stateless, event-driven functions as a Knative service on OpenShift Container Platform. If you want to develop functions, you must first complete the setup steps.

3.7.1. Prerequisites

To enable the use of OpenShift Serverless Functions on your cluster, you must meet the following prerequisites:

- The OpenShift Serverless Operator and Knative Serving are installed on your cluster.

  Note: Functions are deployed as a Knative service. If you want to use event-driven architecture with your functions, you must also install Knative Eventing.

- You have the oc CLI installed.

- You have the Knative (kn) CLI installed. Installing the Knative CLI enables the use of kn func commands, which you can use to create and manage functions.

- You have installed Docker Container Engine or Podman version 3.4.7 or higher.

- You have access to an available image registry, such as the OpenShift Container Registry.
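The local tooling prerequisites above can be checked from a terminal. This sketch makes a few assumptions: version_at_least and check_functions_prereqs are hypothetical helper names, the 3.4.7 minimum is applied to Podman only, and podman --version is assumed to print the version number as its third word. It does not check cluster-side prerequisites or registry access.

```shell
# Hypothetical helper: succeeds when <actual> is at least <minimum>,
# comparing up to three dot-separated numeric fields.
version_at_least() {
    actual=${1%%[-+]*}   # drop suffixes such as "-dev" or "+build"
    minimum=$2
    IFS=. read -r a1 a2 a3 _ <<EOF
$actual
EOF
    IFS=. read -r m1 m2 m3 _ <<EOF
$minimum
EOF
    a1=${a1:-0}; a2=${a2:-0}; a3=${a3:-0}
    m1=${m1:-0}; m2=${m2:-0}; m3=${m3:-0}
    if [ "$a1" -ne "$m1" ]; then [ "$a1" -gt "$m1" ]; return; fi
    if [ "$a2" -ne "$m2" ]; then [ "$a2" -gt "$m2" ]; return; fi
    [ "$a3" -ge "$m3" ]
}

# Hypothetical helper: reports missing CLIs and an outdated Podman.
check_functions_prereqs() {
    missing=0
    for tool in oc kn; do
        command -v "$tool" >/dev/null 2>&1 || { echo "missing: $tool" >&2; missing=1; }
    done
    if command -v podman >/dev/null 2>&1; then
        # Assumes output of the form "podman version <x.y.z>".
        podman_version=$(podman --version | awk '{print $3}')
        version_at_least "$podman_version" 3.4.7 ||
            { echo "podman $podman_version is older than 3.4.7" >&2; missing=1; }
    elif ! command -v docker >/dev/null 2>&1; then
        echo "missing: podman or docker" >&2
        missing=1
    fi
    return "$missing"
}
```

Run check_functions_prereqs on the workstation where you plan to develop functions. A zero exit status means that oc, kn, and a container engine were found on the PATH.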