Chapter 2. Deployment configuration

This chapter describes how to configure different aspects of the supported deployments:

- Kafka clusters

- Kafka Connect clusters

- Kafka Connect clusters with Source2Image support

- Kafka Mirror Maker

- Kafka Bridge

- OAuth 2.0 token-based authentication

- OAuth 2.0 token-based authorization

2.1. Kafka cluster configuration

The full schema of the Kafka resource is described in Section B.2, “Kafka schema reference”. All labels applied to the desired Kafka resource are also applied to the OpenShift resources making up the Kafka cluster. This provides a convenient mechanism for labeling resources as required.

2.1.1. Sample Kafka YAML configuration

For help in understanding the configuration options available for your Kafka deployment, refer to the sample YAML file provided here.

The sample shows only some of the possible configuration options, but those that are particularly important include:

- Resource requests (CPU / Memory)

- JVM options for maximum and minimum memory allocation

- Listeners (and authentication)

- Authentication

- Storage

- Rack awareness

- Metrics
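As a minimal sketch only, a Kafka resource touching several of the options listed above might look like the following. The API version, resource sizes, JVM settings, and topology key are assumptions for illustration, not recommended values, and metrics configuration is left out.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    replicas: 3                        # number of broker nodes
    resources:
      requests:                        # resources reserved for the container
        memory: 8Gi
        cpu: "2"
      limits:                          # maximum resources the container can consume
        memory: 8Gi
        cpu: "4"
    jvmOptions:                        # minimum and maximum JVM memory allocation
      "-Xms": 4096m
      "-Xmx": 4096m
    listeners:
      - name: plain                    # unique listener name
        port: 9092                     # unique port, 9092 or higher
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
        authentication:
          type: tls                    # mutual TLS authentication
    authorization:
      type: simple
    config:                            # broker options not managed by AMQ Streams
      offsets.topic.replication.factor: 3
    storage:
      type: persistent-claim
      size: 100Gi
    rack:
      topologyKey: topology.kubernetes.io/zone   # must match a node label
  zookeeper:
    replicas: 3
    storage:
      type: persistent-claim
      size: 10Gi
  entityOperator:
    topicOperator: {}
    userOperator: {}
```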

- (1) Replicas specifies the number of broker nodes.

- (2) Kafka version, which can be changed by following the upgrade procedure.

- (3) Resource requests specify the resources to reserve for a given container.

- (4) Resource limits specify the maximum resources that can be consumed by a container.

- (5)

- (6) Listeners configure how clients connect to the Kafka cluster via bootstrap addresses. Listeners are configured as internal or external listeners for connection inside or outside the OpenShift cluster.

- (7) Name to identify the listener. Must be unique within the Kafka cluster.

- (8) Port number used by the listener inside Kafka. The port number has to be unique within a given Kafka cluster. Allowed port numbers are 9092 and higher with the exception of ports 9404 and 9999, which are already used for Prometheus and JMX. Depending on the listener type, the port number might not be the same as the port number that connects Kafka clients.

- (9) Listener type specified as internal, or for external listeners, as route, loadbalancer, nodeport or ingress.

- (10) Enables TLS encryption for each listener. Default is false. TLS encryption is not required for route listeners.

- (11) Defines whether the fully-qualified DNS names including the cluster service suffix (usually .cluster.local) are assigned.

- (12) Listener authentication mechanism specified as mutual TLS, SCRAM-SHA-512 or token-based OAuth 2.0.

- (13) External listener configuration specifies how the Kafka cluster is exposed outside OpenShift, such as through a route, loadbalancer or nodeport.

- (14) Optional configuration for a Kafka listener certificate managed by an external Certificate Authority. The brokerCertChainAndKey property specifies a Secret that holds a server certificate and a private key. Kafka listener certificates can also be configured for TLS listeners.

- (15) Authorization enables simple, OAuth 2.0 or OPA authorization on the Kafka broker. Simple authorization uses the AclAuthorizer Kafka plugin.

- (16) Config specifies the broker configuration. Standard Apache Kafka configuration may be provided, restricted to those properties not managed directly by AMQ Streams.

- (17)

- (18)

- (19) Storage size for persistent volumes may be increased and additional volumes may be added to JBOD storage.

- (20) Persistent storage has additional configuration options, such as a storage id and class for dynamic volume provisioning.

- (21) Rack awareness is configured to spread replicas across different racks. A topology key must match the label of a cluster node.

- (22)

- (23) Kafka rules for exporting metrics to a Grafana dashboard through the JMX Exporter. A set of rules provided with AMQ Streams may be copied to your Kafka resource configuration.

- (24) ZooKeeper-specific configuration, which contains properties similar to the Kafka configuration.

- (25) Entity Operator configuration, which specifies the configuration for the Topic Operator and User Operator.

- (26) Kafka Exporter configuration, which is used to expose data as Prometheus metrics.

- (27) Cruise Control configuration, which is used to rebalance the Kafka cluster.

2.1.2. Data storage considerations

An efficient data storage infrastructure is essential to the optimal performance of AMQ Streams.

Block storage is required. File storage, such as NFS, does not work with Kafka.

For your block storage, you can choose, for example:

- Cloud-based block storage solutions, such as Amazon Elastic Block Store (EBS)

- Local persistent volumes

- Storage Area Network (SAN) volumes accessed by a protocol such as Fibre Channel or iSCSI

AMQ Streams does not require OpenShift raw block volumes.

2.1.2.1. File systems

It is recommended that you configure your storage system to use the XFS file system. AMQ Streams is also compatible with the ext4 file system, but this might require additional configuration for best results.

2.1.2.2. Apache Kafka and ZooKeeper storage

Use separate disks for Apache Kafka and ZooKeeper.

Three types of data storage are supported:

- Ephemeral (Recommended for development only)

- Persistent

- JBOD (Just a Bunch of Disks, suitable for Kafka only)

For more information, see Kafka and ZooKeeper storage.

Solid-state drives (SSDs), though not essential, can improve the performance of Kafka in large clusters where data is sent to and received from multiple topics asynchronously. SSDs are particularly effective with ZooKeeper, which requires fast, low-latency data access.

You do not need to provision replicated storage because Kafka and ZooKeeper both have built-in data replication.

2.1.3. Kafka and ZooKeeper storage types

As stateful applications, Kafka and ZooKeeper need to store data on disk. AMQ Streams supports three storage types for this data:

- Ephemeral

- Persistent

- JBOD storage

JBOD storage is supported only for Kafka, not for ZooKeeper.

When configuring a Kafka resource, you can specify the type of storage used by the Kafka broker and its corresponding ZooKeeper node. You configure the storage type using the storage property in the following resources:

- Kafka.spec.kafka

- Kafka.spec.zookeeper

The storage type is configured in the type field.

The storage type cannot be changed after a Kafka cluster is deployed.

Additional resources

- For more information about ephemeral storage, see ephemeral storage schema reference.

- For more information about persistent storage, see persistent storage schema reference.

- For more information about JBOD storage, see JBOD schema reference.

- For more information about the schema for Kafka, see Kafka schema reference.

2.1.3.1. Ephemeral storage

Ephemeral storage uses emptyDir volumes to store data. To use ephemeral storage, set the type field to ephemeral.

emptyDir volumes are not persistent and the data stored in them is lost when the pod is restarted. After the new pod is started, it has to recover all data from other nodes of the cluster. Ephemeral storage is not suitable for use with single-node ZooKeeper clusters or for Kafka topics with a replication factor of 1, because it leads to data loss.

An example of Ephemeral storage
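A minimal sketch of a Kafka resource fragment that sets ephemeral storage for both Kafka and ZooKeeper might look like this. Fields outside the storage property are omitted.

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
    storage:
      type: ephemeral
  zookeeper:
    # ...
    storage:
      type: ephemeral
```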

2.1.3.1.1. Log directories

The ephemeral volume will be used by the Kafka brokers as log directories mounted into the following path:

/var/lib/kafka/data/kafka-logIDX

Where IDX is the Kafka broker pod index. For example, /var/lib/kafka/data/kafka-log0.

2.1.3.2. Persistent storage

Persistent storage uses Persistent Volume Claims to provision persistent volumes for storing data. Persistent Volume Claims can be used to provision volumes of many different types, depending on the Storage Class which will provision the volume. The storage types which can be used with persistent volume claims include many types of SAN storage as well as local persistent volumes.

To use persistent storage, the type has to be set to persistent-claim. Persistent storage supports additional configuration options:

- id (optional): Storage identification number. This option is mandatory for storage volumes defined in a JBOD storage declaration. Default is 0.

- size (required): Defines the size of the persistent volume claim, for example, "1000Gi".

- class (optional): The OpenShift Storage Class to use for dynamic volume provisioning.

- selector (optional): Allows selecting a specific persistent volume to use. It contains key:value pairs representing labels for selecting such a volume.

- deleteClaim (optional): Boolean value which specifies if the Persistent Volume Claim has to be deleted when the cluster is undeployed. Default is false.

Increasing the size of persistent volumes in an existing AMQ Streams cluster is only supported in OpenShift versions that support persistent volume resizing. The persistent volume to be resized must use a storage class that supports volume expansion. For other versions of OpenShift and storage classes which do not support volume expansion, you must decide the necessary storage size before deploying the cluster. Decreasing the size of existing persistent volumes is not possible.

Example fragment of persistent storage configuration with 1000Gi size

# ...
storage:
  type: persistent-claim
  size: 1000Gi
# ...

The following example demonstrates the use of a storage class.

Example fragment of persistent storage configuration with specific Storage Class
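As a sketch, a storage fragment that names a storage class through the class option could look like this. The class name my-storage-class is the one used elsewhere in this section, and the size is illustrative.

```yaml
# ...
storage:
  type: persistent-claim
  size: 1000Gi
  class: my-storage-class   # Storage Class used for dynamic provisioning
# ...
```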

Finally, a selector can be used to select a specific labeled persistent volume to provide needed features such as an SSD.

Example fragment of persistent storage configuration with selector
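A possible fragment using the selector option is sketched below. The label key and value (hdd-type: ssd) are hypothetical and must match labels that actually exist on the persistent volume you want to select.

```yaml
# ...
storage:
  type: persistent-claim
  size: 1000Gi
  selector:
    hdd-type: ssd      # hypothetical label on the target persistent volume
  deleteClaim: true    # delete the claim when the cluster is undeployed
# ...
```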

2.1.3.2.1. Storage class overrides

You can specify a different storage class for one or more Kafka brokers or ZooKeeper nodes, instead of using the default storage class. This is useful if, for example, storage classes are restricted to different availability zones or data centers. You can use the overrides field for this purpose.

In this example, the default storage class is named my-storage-class:

Example AMQ Streams cluster using storage class overrides
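The following sketch shows one way the overrides field might be laid out, assuming three brokers and three ZooKeeper nodes and an illustrative volume size. Each override entry pairs a node index (the broker key, which is assumed here to be used for ZooKeeper nodes as well) with a storage class.

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    replicas: 3
    storage:
      type: persistent-claim
      size: 100Gi
      class: my-storage-class            # default storage class
      overrides:
        - broker: 0
          class: my-storage-class-zone-1a
        - broker: 1
          class: my-storage-class-zone-1b
        - broker: 2
          class: my-storage-class-zone-1c
  zookeeper:
    replicas: 3
    storage:
      type: persistent-claim
      size: 100Gi
      class: my-storage-class
      overrides:
        - broker: 0                      # index of the ZooKeeper node
          class: my-storage-class-zone-1a
        - broker: 1
          class: my-storage-class-zone-1b
        - broker: 2
          class: my-storage-class-zone-1c
```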

As a result of the configured overrides property, the volumes use the following storage classes:

- The persistent volumes of ZooKeeper node 0 will use my-storage-class-zone-1a.

- The persistent volumes of ZooKeeper node 1 will use my-storage-class-zone-1b.

- The persistent volumes of ZooKeeper node 2 will use my-storage-class-zone-1c.

- The persistent volumes of Kafka broker 0 will use my-storage-class-zone-1a.

- The persistent volumes of Kafka broker 1 will use my-storage-class-zone-1b.

- The persistent volumes of Kafka broker 2 will use my-storage-class-zone-1c.

The overrides property is currently used only to override storage class configurations. Overriding other storage configuration fields is not currently supported.

2.1.3.2.2. Persistent Volume Claim naming

When persistent storage is used, it creates Persistent Volume Claims with the following names:

- data-CLUSTER-NAME-kafka-IDX: Persistent Volume Claim for the volume used for storing data for the Kafka broker pod IDX.

- data-CLUSTER-NAME-zookeeper-IDX: Persistent Volume Claim for the volume used for storing data for the ZooKeeper node pod IDX.

2.1.3.2.3. Log directories

The persistent volume will be used by the Kafka brokers as log directories mounted into the following path:

/var/lib/kafka/data/kafka-logIDX

Where IDX is the Kafka broker pod index. For example, /var/lib/kafka/data/kafka-log0.

2.1.3.3. Resizing persistent volumes

You can provision increased storage capacity by increasing the size of the persistent volumes used by an existing AMQ Streams cluster. Resizing persistent volumes is supported in clusters that use either a single persistent volume or multiple persistent volumes in a JBOD storage configuration.

You can increase but not decrease the size of persistent volumes. Decreasing the size of persistent volumes is not currently supported in OpenShift.

Prerequisites

- An OpenShift cluster with support for volume resizing.

- The Cluster Operator is running.

- A Kafka cluster using persistent volumes created using a storage class that supports volume expansion.

Procedure

- In a Kafka resource, increase the size of the persistent volume allocated to the Kafka cluster, the ZooKeeper cluster, or both.

  - To increase the volume size allocated to the Kafka cluster, edit the spec.kafka.storage property.

  - To increase the volume size allocated to the ZooKeeper cluster, edit the spec.zookeeper.storage property.

  For example, increase the volume size from 1000Gi to 2000Gi.

- Create or update the resource.

  Use oc apply:

  oc apply -f your-file

  OpenShift increases the capacity of the selected persistent volumes in response to a request from the Cluster Operator. When the resizing is complete, the Cluster Operator restarts all pods that use the resized persistent volumes. This happens automatically.
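The edit in the first step of the procedure can be sketched as follows for the Kafka cluster, assuming a single persistent volume and the my-storage-class storage class. Only the size value changes.

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
    storage:
      type: persistent-claim
      size: 2000Gi              # increased from 1000Gi
      class: my-storage-class   # must support volume expansion
    # ...
  zookeeper:
    # ...
```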

Additional resources

For more information about resizing persistent volumes in OpenShift, see Resizing Persistent Volumes using Kubernetes.

2.1.3.4. JBOD storage overview

You can configure AMQ Streams to use JBOD, a data storage configuration of multiple disks or volumes. JBOD is one approach to providing increased data storage for Kafka brokers. It can also improve performance.

A JBOD configuration is described by one or more volumes, each of which can be either ephemeral or persistent. The rules and constraints for JBOD volume declarations are the same as those for ephemeral and persistent storage. For example, you cannot decrease the size of a persistent storage volume after it has been provisioned.

2.1.3.4.1. JBOD configuration

To use JBOD with AMQ Streams, the storage type must be set to jbod. The volumes property allows you to describe the disks that make up your JBOD storage array or configuration. The following fragment shows an example JBOD configuration:
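A minimal sketch of such a fragment, assuming two persistent volumes of an illustrative size, might look like this:

```yaml
# ...
storage:
  type: jbod
  volumes:
    - id: 0                    # volume ID, mandatory within JBOD storage
      type: persistent-claim
      size: 100Gi
      deleteClaim: false
    - id: 1
      type: persistent-claim
      size: 100Gi
      deleteClaim: false
# ...
```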

The ids cannot be changed once the JBOD volumes are created.

Users can add or remove volumes from the JBOD configuration.

2.1.3.4.2. JBOD and Persistent Volume Claims

When persistent storage is used to declare JBOD volumes, the naming scheme of the resulting Persistent Volume Claims is as follows:

data-ID-CLUSTER-NAME-kafka-IDX

Where ID is the ID of the volume used for storing data for Kafka broker pod IDX.

2.1.3.4.3. Log directories

The JBOD volumes will be used by the Kafka brokers as log directories mounted into the following path:

/var/lib/kafka/data-ID/kafka-logIDX

Where ID is the ID of the volume used for storing data for Kafka broker pod IDX. For example, /var/lib/kafka/data-0/kafka-log0.

2.1.3.5. Adding volumes to JBOD storage

This procedure describes how to add volumes to a Kafka cluster configured to use JBOD storage. It cannot be applied to Kafka clusters configured to use any other storage type.

When adding a new volume under an id which was already used in the past and removed, you have to make sure that the previously used PersistentVolumeClaims have been deleted.

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

- A Kafka cluster with JBOD storage

Procedure

- Edit the spec.kafka.storage.volumes property in the Kafka resource. Add the new volumes to the volumes array. For example, add a new volume with id 2.

- Create or update the resource.

  This can be done using oc apply:

  oc apply -f KAFKA-CONFIG-FILE

- Create new topics or reassign existing partitions to the new disks.
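The first step of the procedure could be sketched as follows, assuming the cluster already has volumes 0 and 1 and that all volumes share an illustrative size:

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
    storage:
      type: jbod
      volumes:
        - id: 0
          type: persistent-claim
          size: 100Gi
          deleteClaim: false
        - id: 1
          type: persistent-claim
          size: 100Gi
          deleteClaim: false
        - id: 2                  # newly added volume
          type: persistent-claim
          size: 100Gi
          deleteClaim: false
    # ...
```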

Additional resources

For more information about reassigning topics, see Section 2.1.24.2, “Partition reassignment”.

2.1.3.6. Removing volumes from JBOD storage

This procedure describes how to remove volumes from a Kafka cluster configured to use JBOD storage. It cannot be applied to Kafka clusters configured to use any other storage type. The JBOD storage always has to contain at least one volume.

To avoid data loss, you have to move all partitions before removing the volumes.

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

- A Kafka cluster with JBOD storage with two or more volumes

Procedure

- Reassign all partitions from the disks that you are going to remove. Any data in partitions still assigned to the disks that are going to be removed might be lost.

- Edit the spec.kafka.storage.volumes property in the Kafka resource. Remove one or more volumes from the volumes array. For example, remove the volumes with ids 1 and 2.

- Create or update the resource.

  This can be done using oc apply:

  oc apply -f your-file
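As an illustration, if the cluster started with volumes 0, 1, and 2, the storage configuration after removing the volumes with ids 1 and 2 might be reduced to the following. The volume size is an assumption.

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
    storage:
      type: jbod
      volumes:
        - id: 0                  # the only remaining volume
          type: persistent-claim
          size: 100Gi
          deleteClaim: false
    # ...
```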

Additional resources

For more information about reassigning topics, see Section 2.1.24.2, “Partition reassignment”.

2.1.4. Kafka broker replicas

A Kafka cluster can run with many brokers. You can configure the number of brokers used for the Kafka cluster in Kafka.spec.kafka.replicas. The best number of brokers for your cluster has to be determined based on your specific use case.

2.1.4.1. Configuring the number of broker nodes

This procedure describes how to configure the number of Kafka broker nodes in a new cluster. It only applies to new clusters with no partitions. If your cluster already has topics defined, see Section 2.1.24, “Scaling clusters”.

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

- A Kafka cluster with no topics defined yet

Procedure

- Edit the replicas property in the Kafka resource.

- Create or update the resource.

  This can be done using oc apply:

  oc apply -f your-file
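A sketch of the replicas edit, assuming a three-broker cluster, might look like this:

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
    replicas: 3   # number of Kafka broker nodes
    # ...
  zookeeper:
    # ...
```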

Additional resources

If your cluster already has topics defined, see Section 2.1.24, “Scaling clusters”.

2.1.5. Kafka broker configuration

AMQ Streams allows you to customize the configuration of the Kafka brokers in your Kafka cluster. You can specify and configure most of the options listed in the "Broker Configs" section of the Apache Kafka documentation. You cannot configure options that are related to the following areas:

- Security (Encryption, Authentication, and Authorization)

- Listener configuration

- Broker ID configuration

- Configuration of log data directories

- Inter-broker communication

- ZooKeeper connectivity

These options are automatically configured by AMQ Streams.

For more information on broker configuration, see the KafkaClusterSpec schema.

Listener configuration

You configure listeners for connecting to Kafka brokers. For more information on configuring listeners, see Listener configuration.

Authorizing access to Kafka

You can configure your Kafka cluster to allow or decline actions executed by users. For more information on securing access to Kafka brokers, see Managing access to Kafka.

2.1.5.1. Configuring Kafka brokers

You can configure an existing Kafka broker, or create a new Kafka broker with a specified configuration.

Prerequisites

- An OpenShift cluster is available.

- The Cluster Operator is running.

Procedure

- Open the YAML configuration file that contains the Kafka resource specifying the cluster deployment.

- In the spec.kafka.config property in the Kafka resource, enter one or more Kafka configuration settings.

- Apply the new configuration to create or update the resource.

  Use oc apply:

  oc apply -f kafka.yaml

  where kafka.yaml is the YAML configuration file for the resource that you want to configure; for example, kafka-persistent.yaml.
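As a sketch, the config property might carry a handful of standard Apache Kafka broker options. The options and values below are illustrative choices, not recommendations.

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
    config:
      default.replication.factor: 3
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 1
    # ...
```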

2.1.6. Listener configuration

Listeners are used to connect to Kafka brokers.

AMQ Streams provides a generic GenericKafkaListener schema with properties to configure listeners through the Kafka resource.

The GenericKafkaListener provides a flexible approach to listener configuration.

You can specify properties to configure internal listeners for connecting within the OpenShift cluster, or external listeners for connecting outside the OpenShift cluster.

Generic listener configuration

Listeners are defined as an array in the Kafka resource, with one array item for each listener.

For more information on listener configuration, see the GenericKafkaListener schema reference.

Generic listener configuration replaces the previous approach to listener configuration using the KafkaListeners schema, which is deprecated. However, you can convert the old format into the new format with backwards compatibility.

The KafkaListeners schema uses sub-properties for plain, tls and external listeners, with fixed ports for each. Because of the limits inherent in the architecture of the schema, it is only possible to configure three listeners, with configuration options limited to the type of listener.

With the GenericKafkaListener schema, you can configure as many listeners as required, as long as their names and ports are unique.

You might want to configure multiple external listeners, for example, to handle access from networks that require different authentication mechanisms. Or you might need to join your OpenShift network to an outside network, in which case you can configure internal listeners (using the useServiceDnsDomain property) so that the OpenShift service DNS domain (typically .cluster.local) is not used.
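A minimal sketch of a listeners array with two internal listeners and one external listener is shown below. The listener names, ports, and the choice of a route for external access are assumptions for illustration.

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
    listeners:
      - name: plain            # unique listener name
        port: 9092             # unique port
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
        authentication:
          type: tls            # mutual TLS authentication
      - name: external
        port: 9094
        type: route            # exposed outside OpenShift through a route
        tls: true
    # ...
```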

Configuring listeners to secure access to Kafka brokers

You can configure listeners for secure connection using authentication. For more information on securing access to Kafka brokers, see Managing access to Kafka.

Configuring external listeners for client access outside OpenShift

You can configure external listeners for client access outside an OpenShift environment using a specified connection mechanism, such as a loadbalancer. For more information on the configuration options for connecting an external client, see Configuring external listeners.

Listener certificates

You can provide your own server certificates, called Kafka listener certificates, for TLS listeners or external listeners which have TLS encryption enabled. For more information, see Kafka listener certificates.

2.1.7. ZooKeeper replicas

ZooKeeper clusters or ensembles usually run with an odd number of nodes, typically three, five, or seven.

The majority of nodes must be available in order to maintain an effective quorum. If the ZooKeeper cluster loses its quorum, it will stop responding to clients and the Kafka brokers will stop working. Having a stable and highly available ZooKeeper cluster is crucial for AMQ Streams.

- Three-node cluster

- A three-node ZooKeeper cluster requires at least two nodes to be up and running in order to maintain the quorum. It can tolerate only one node being unavailable.

- Five-node cluster

- A five-node ZooKeeper cluster requires at least three nodes to be up and running in order to maintain the quorum. It can tolerate two nodes being unavailable.

- Seven-node cluster

- A seven-node ZooKeeper cluster requires at least four nodes to be up and running in order to maintain the quorum. It can tolerate three nodes being unavailable.

For development purposes, it is also possible to run ZooKeeper with a single node.

Having more nodes does not necessarily mean better performance, as the costs to maintain the quorum will rise with the number of nodes in the cluster. Depending on your availability requirements, you can decide the number of nodes to use.

2.1.7.1. Number of ZooKeeper nodes

The number of ZooKeeper nodes can be configured using the replicas property in Kafka.spec.zookeeper.

An example showing replicas configuration
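A sketch of the replicas property for ZooKeeper, assuming a three-node ensemble, might look like this:

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
  zookeeper:
    # ...
    replicas: 3   # number of ZooKeeper nodes
    # ...
```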

2.1.7.2. Changing the number of ZooKeeper replicas

Prerequisites

- An OpenShift cluster is available.

- The Cluster Operator is running.

Procedure

- Open the YAML configuration file that contains the Kafka resource specifying the cluster deployment.

- In the spec.zookeeper.replicas property in the Kafka resource, enter the number of replicated ZooKeeper servers.

- Apply the new configuration to create or update the resource.

  Use oc apply:

  oc apply -f kafka.yaml

  where kafka.yaml is the YAML configuration file for the resource that you want to configure; for example, kafka-persistent.yaml.

2.1.8. ZooKeeper configuration

AMQ Streams allows you to customize the configuration of Apache ZooKeeper nodes. You can specify and configure most of the options listed in the ZooKeeper documentation.

Options which cannot be configured are those related to the following areas:

- Security (Encryption, Authentication, and Authorization)

- Listener configuration

- Configuration of data directories

- ZooKeeper cluster composition

These options are automatically configured by AMQ Streams.

2.1.8.1. ZooKeeper configuration

ZooKeeper nodes are configured using the config property in Kafka.spec.zookeeper. This property contains the ZooKeeper configuration options as keys. The values can be described using one of the following JSON types:

- String

- Number

- Boolean

Users can specify and configure the options listed in ZooKeeper documentation with the exception of those options which are managed directly by AMQ Streams. Specifically, all configuration options with keys equal to or starting with one of the following strings are forbidden:

- server.

- dataDir

- dataLogDir

- clientPort

- authProvider

- quorum.auth

- requireClientAuthScheme

When one of the forbidden options is present in the config property, it is ignored and a warning message is printed to the Cluster Operator log file. All other options are passed to ZooKeeper.

The Cluster Operator does not validate keys or values in the provided config object. When invalid configuration is provided, the ZooKeeper cluster might not start or might become unstable. In such cases, the configuration in the Kafka.spec.zookeeper.config object should be fixed and the Cluster Operator will roll out the new configuration to all ZooKeeper nodes.

Selected options have default values:

- tickTime with default value 2000

- initLimit with default value 5

- syncLimit with default value 2

- autopurge.purgeInterval with default value 1

These options will be automatically configured when they are not present in the Kafka.spec.zookeeper.config property.

Use the three allowed ssl configuration options for client connection using a specific cipher suite for a TLS version. A cipher suite combines algorithms for secure connection and data transfer.

Example ZooKeeper configuration
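The following is a sketch of what such a configuration might look like. The autopurge values and the specific cipher suite string are illustrative assumptions. The comments mark the lines that the numbered callouts refer to.

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
  zookeeper:
    # ...
    config:
      autopurge.snapRetainCount: 3
      autopurge.purgeInterval: 1
      ssl.cipher.suites: "TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384"   # callout 1
      ssl.enabled.protocols: "TLSv1.2"                             # callout 2
      ssl.protocol: "TLSv1.2"                                      # callout 3
    # ...
```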

- (1) The cipher suite for TLS using a combination of ECDHE key exchange mechanism, RSA authentication algorithm, AES bulk encryption algorithm and SHA384 MAC algorithm.

- (2) The SSL protocol TLSv1.2 is enabled.

- (3) Specifies the TLSv1.2 protocol to generate the SSL context. Allowed values are TLSv1.1 and TLSv1.2.

2.1.8.2. Configuring ZooKeeper

Prerequisites

- An OpenShift cluster is available.

- The Cluster Operator is running.

Procedure

- Open the YAML configuration file that contains the Kafka resource specifying the cluster deployment.

- In the spec.zookeeper.config property in the Kafka resource, enter one or more ZooKeeper configuration settings.

- Apply the new configuration to create or update the resource.

  Use oc apply:

  oc apply -f kafka.yaml

  where kafka.yaml is the YAML configuration file for the resource that you want to configure; for example, kafka-persistent.yaml.

2.1.9. ZooKeeper connection

ZooKeeper services are secured with encryption and authentication and are not intended to be used by external applications that are not part of AMQ Streams.

However, if you want to use Kafka CLI tools that require a connection to ZooKeeper, you can use a terminal inside a ZooKeeper container and connect to localhost:12181 as the ZooKeeper address.

2.1.9.1. Connecting to ZooKeeper from a terminal

Most Kafka CLI tools can connect directly to Kafka, so under normal circumstances you should not need to connect to ZooKeeper. If you do need a ZooKeeper connection, follow this procedure: open a terminal inside a ZooKeeper container to use Kafka CLI tools that require it.

Prerequisites

- An OpenShift cluster is available.

- A Kafka cluster is running.

- The Cluster Operator is running.

Procedure

- Open the terminal using the OpenShift console or run the exec command from your CLI.

  For example:

  oc exec -it my-cluster-zookeeper-0 -- bin/kafka-topics.sh --list --zookeeper localhost:12181

  Be sure to use localhost:12181.

You can now run Kafka commands to ZooKeeper.

2.1.10. Entity Operator

The Entity Operator is responsible for managing Kafka-related entities in a running Kafka cluster.

The Entity Operator comprises the:

- Topic Operator to manage Kafka topics

- User Operator to manage Kafka users

Through Kafka resource configuration, the Cluster Operator can deploy the Entity Operator, including one or both operators, when deploying a Kafka cluster.

When deployed, the Entity Operator contains the operators according to the deployment configuration.

The operators are automatically configured to manage the topics and users of the Kafka cluster.

2.1.10.1. Entity Operator configuration properties

Use the entityOperator property in Kafka.spec to configure the Entity Operator.

The entityOperator property supports several sub-properties:

- tlsSidecar

- topicOperator

- userOperator

- template

The tlsSidecar property contains the configuration of the TLS sidecar container, which is used to communicate with ZooKeeper. For more information on configuring the TLS sidecar, see Section 2.1.19, “TLS sidecar”.

The template property contains the configuration of the Entity Operator pod, such as labels, annotations, affinity, and tolerations. For more information on configuring templates, see Section 2.6, “Customizing OpenShift resources”.

The topicOperator property contains the configuration of the Topic Operator. When this option is missing, the Entity Operator is deployed without the Topic Operator.

The userOperator property contains the configuration of the User Operator. When this option is missing, the Entity Operator is deployed without the User Operator.

For more information on the properties to configure the Entity Operator, see the EntityUserOperatorSpec schema reference.

Example of basic configuration enabling both operators
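A minimal sketch that enables both operators with empty objects might look like this:

```yaml
# Fragment of a Kafka resource; other fields omitted
spec:
  kafka:
    # ...
  zookeeper:
    # ...
  entityOperator:
    topicOperator: {}   # deploy the Topic Operator
    userOperator: {}    # deploy the User Operator
```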

If an empty object ({}) is used for the topicOperator and userOperator, all properties use their default values.

When both topicOperator and userOperator properties are missing, the Entity Operator is not deployed.

2.1.10.2. Topic Operator configuration properties

Topic Operator deployment can be configured using additional options inside the topicOperator object. The following properties are supported:

- watchedNamespace: The OpenShift namespace in which the Topic Operator watches for KafkaTopics. Default is the namespace where the Kafka cluster is deployed.

- reconciliationIntervalSeconds: The interval between periodic reconciliations in seconds. Default 90.

- zookeeperSessionTimeoutSeconds: The ZooKeeper session timeout in seconds. Default 20.

- topicMetadataMaxAttempts: The number of attempts at getting topic metadata from Kafka. The time between each attempt is defined as an exponential back-off. Consider increasing this value when topic creation could take more time due to the number of partitions or replicas. Default 6.

- image: The image property can be used to configure the container image which will be used. For more details about configuring custom container images, see Section 2.1.18, “Container images”.

- resources: The resources property configures the amount of resources allocated to the Topic Operator. For more details about resource request and limit configuration, see Section 2.1.11, “CPU and memory resources”.

- logging: The logging property configures the logging of the Topic Operator. For more details, see Section 2.1.10.4, “Operator loggers”.

Example of Topic Operator configuration
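A minimal sketch, assuming a hypothetical namespace my-topic-namespace and an illustrative reconciliation interval; the apiVersion and cluster name are also assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  # ...
  entityOperator:
    # ...
    topicOperator:
      watchedNamespace: my-topic-namespace   # hypothetical namespace
      reconciliationIntervalSeconds: 60      # illustrative; the default is 90
    # ...
```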

2.1.10.3. User Operator configuration properties

User Operator deployment can be configured using additional options inside the userOperator object. The following properties are supported:

- watchedNamespace: The OpenShift namespace in which the User Operator watches for KafkaUsers. Default is the namespace where the Kafka cluster is deployed.

- reconciliationIntervalSeconds: The interval between periodic reconciliations in seconds. Default 120.

- zookeeperSessionTimeoutSeconds: The ZooKeeper session timeout in seconds. Default 6.

- image: The image property can be used to configure the container image which will be used. For more details about configuring custom container images, see Section 2.1.18, “Container images”.

- resources: The resources property configures the amount of resources allocated to the User Operator. For more details about resource request and limit configuration, see Section 2.1.11, “CPU and memory resources”.

- logging: The logging property configures the logging of the User Operator. For more details, see Section 2.1.10.4, “Operator loggers”.

Example of User Operator configuration
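A minimal sketch, assuming a hypothetical namespace my-user-namespace and an illustrative reconciliation interval; the apiVersion and cluster name are also assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  # ...
  entityOperator:
    # ...
    userOperator:
      watchedNamespace: my-user-namespace    # hypothetical namespace
      reconciliationIntervalSeconds: 60      # illustrative; the default is 120
    # ...
```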

2.1.10.4. Operator loggers

The Topic Operator and User Operator have a configurable logger:

- rootLogger.level

The operators use the Apache log4j2 logger implementation.

Use the logging property in the Kafka resource to configure loggers and logger levels.

You can set the log levels by specifying the logger and level directly (inline) or use a custom (external) ConfigMap. If a ConfigMap is used, you set logging.name property to the name of the ConfigMap containing the external logging configuration. Inside the ConfigMap, the logging configuration is described using log4j2.properties.

Here we see examples of inline and external logging.

Inline logging

External logging
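Both styles can be sketched in a single resource. In this illustration the Topic Operator uses inline logging and the User Operator references an external ConfigMap; the ConfigMap name custom-operator-logging is hypothetical, and the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  # ...
  entityOperator:
    topicOperator:
      logging:
        type: inline                  # logger and level set directly
        loggers:
          rootLogger.level: INFO
    userOperator:
      logging:
        type: external                # configuration read from a ConfigMap
        name: custom-operator-logging # hypothetical ConfigMap containing log4j2.properties
```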

Additional resources

- Garbage collector (GC) logging can also be enabled (or disabled). For more information about GC logging, see Section 2.1.17.1, “JVM configuration”.

- For more information about log levels, see Apache logging services.

2.1.10.5. Configuring the Entity Operator

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Edit the entityOperator property in the Kafka resource.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file

2.1.11. CPU and memory resources

For every deployed container, AMQ Streams allows you to request specific resources and define the maximum consumption of those resources.

AMQ Streams supports two types of resources:

- CPU

- Memory

AMQ Streams uses the OpenShift syntax for specifying CPU and memory resources.

2.1.11.1. Resource limits and requests

Resource limits and requests are configured using the resources property in the following resources:

- Kafka.spec.kafka

- Kafka.spec.zookeeper

- Kafka.spec.entityOperator.topicOperator

- Kafka.spec.entityOperator.userOperator

- Kafka.spec.entityOperator.tlsSidecar

- Kafka.spec.kafkaExporter

- KafkaConnect.spec

- KafkaConnectS2I.spec

- KafkaBridge.spec

Additional resources

- For more information about managing computing resources on OpenShift, see Managing Compute Resources for Containers.

2.1.11.1.1. Resource requests

Requests specify the resources to reserve for a given container. Reserving the resources ensures that they are always available.

If the resource request is for more than the available free resources in the OpenShift cluster, the pod is not scheduled.

Resource requests are specified in the requests property. AMQ Streams currently supports the following resource requests:

- cpu

- memory

A request may be configured for one or more supported resources.

Example resource request configuration with all resources
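A minimal sketch of a request for both supported resources on the Kafka brokers. The values are illustrative only, and the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    resources:
      requests:
        cpu: 2                        # illustrative: reserve 2 CPU cores
        memory: 8Gi                   # illustrative: reserve 8 gibibytes
  # ...
```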

2.1.11.1.2. Resource limits

Limits specify the maximum resources that can be consumed by a given container. The limit is not reserved and might not always be available. A container can use the resources up to the limit only when they are available. Resource limits should always be higher than the resource requests.

Resource limits are specified in the limits property. AMQ Streams currently supports the following resource limits:

- cpu

- memory

A limit may be configured for one or more supported resources.

Example resource limits configuration
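A minimal sketch of limits for both supported resources on the Kafka brokers. The values are illustrative only, and the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    resources:
      limits:
        cpu: 4                        # illustrative: at most 4 CPU cores
        memory: 16Gi                  # illustrative: at most 16 gibibytes
  # ...
```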

2.1.11.1.3. Supported CPU formats

CPU requests and limits are supported in the following formats:

- Number of CPU cores as an integer (5 CPU cores) or decimal (2.5 CPU cores).

- Number of millicpus / millicores (100m), where 1000 millicores is the same as 1 CPU core.

Example CPU units
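A minimal sketch that mixes both CPU formats. The values are illustrative; 500m is half of one CPU core:

```yaml
# Fragment of a component specification, for example Kafka.spec.kafka
resources:
  requests:
    cpu: 500m    # millicores: 0.5 CPU core
  limits:
    cpu: 2.5     # decimal number of CPU cores
```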

The computing power of 1 CPU core may differ depending on the platform where OpenShift is deployed.

Additional resources

- For more information on CPU specification, see the Meaning of CPU.

2.1.11.1.4. Supported memory formats

Memory requests and limits are specified in megabytes, gigabytes, mebibytes, and gibibytes.

- To specify memory in megabytes, use the M suffix. For example, 1000M.

- To specify memory in gigabytes, use the G suffix. For example, 1G.

- To specify memory in mebibytes, use the Mi suffix. For example, 1000Mi.

- To specify memory in gibibytes, use the Gi suffix. For example, 1Gi.

An example of using different memory units
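A minimal sketch that uses a decimal unit for the request and a binary unit for the limit. The values are illustrative. Note that the two families differ in size: 1000M is 1,000,000,000 bytes, whereas 1000Mi is 1,048,576,000 bytes.

```yaml
# Fragment of a component specification, for example Kafka.spec.kafka
resources:
  requests:
    memory: 512M   # megabytes (decimal): 512,000,000 bytes
  limits:
    memory: 2Gi    # gibibytes (binary): 2,147,483,648 bytes
```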

Additional resources

- For more details about memory specification and additional supported units, see Meaning of memory.

2.1.11.2. Configuring resource requests and limits

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Edit the resources property in the resource specifying the cluster deployment.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file

Additional resources

- For more information about the schema, see ResourceRequirements API reference.

2.1.12. Kafka loggers

Kafka has its own configurable loggers:

- log4j.logger.org.I0Itec.zkclient.ZkClient

- log4j.logger.org.apache.zookeeper

- log4j.logger.kafka

- log4j.logger.org.apache.kafka

- log4j.logger.kafka.request.logger

- log4j.logger.kafka.network.Processor

- log4j.logger.kafka.server.KafkaApis

- log4j.logger.kafka.network.RequestChannel$

- log4j.logger.kafka.controller

- log4j.logger.kafka.log.LogCleaner

- log4j.logger.state.change.logger

- log4j.logger.kafka.authorizer.logger

ZooKeeper also has a configurable logger:

- zookeeper.root.logger

Kafka and ZooKeeper use the Apache log4j logger implementation.

Operators use the Apache log4j2 logger implementation, so the logging configuration is described inside the ConfigMap using log4j2.properties. For more information, see Section 2.1.10.4, “Operator loggers”.

Use the logging property to configure loggers and logger levels.

You can set the log levels by specifying the logger and level directly (inline) or use a custom (external) ConfigMap. If a ConfigMap is used, you set logging.name property to the name of the ConfigMap containing the external logging configuration. Inside the ConfigMap, the logging configuration is described using log4j.properties.

Here we see examples of inline and external logging.

Inline logging

External logging
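Both styles can be sketched in a single resource. In this illustration Kafka uses inline logging with a logger name taken from the list above, and ZooKeeper references an external ConfigMap; the ConfigMap name custom-zookeeper-logging is hypothetical, and the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    logging:
      type: inline                    # logger and level set directly
      loggers:
        log4j.logger.org.apache.kafka: "INFO"
  zookeeper:
    # ...
    logging:
      type: external                  # configuration read from a ConfigMap
      name: custom-zookeeper-logging  # hypothetical ConfigMap containing log4j.properties
```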

Changes to both external and inline logging levels will be applied to Kafka brokers without a restart.

Additional resources

- Garbage collector (GC) logging can also be enabled (or disabled). For more information on garbage collection, see Section 2.1.17.1, “JVM configuration”.

- For more information about log levels, see Apache logging services.

2.1.13. Kafka rack awareness

The rack awareness feature in AMQ Streams helps to spread the Kafka broker pods and Kafka topic replicas across different racks. Enabling rack awareness helps to improve availability of Kafka brokers and the topics they are hosting.

"Rack" might represent an availability zone, data center, or an actual rack in your data center.

2.1.13.1. Configuring rack awareness in Kafka brokers

Kafka rack awareness can be configured in the rack property of Kafka.spec.kafka. The rack object has one mandatory field named topologyKey. This key needs to match one of the labels assigned to the OpenShift cluster nodes. The label is used by OpenShift when scheduling the Kafka broker pods to nodes. If the OpenShift cluster is running on a cloud provider platform, that label should represent the availability zone where the node is running. Usually, the nodes are labeled with the topology.kubernetes.io/zone label (or failure-domain.beta.kubernetes.io/zone on older OpenShift versions), which can be used as the topologyKey value. Setting the topologyKey spreads the broker pods across zones and also sets the broker.rack configuration parameter in each Kafka broker. For more information about OpenShift node labels, see Well-Known Labels, Annotations and Taints.
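A minimal sketch of the rack property, assuming the nodes carry the topology.kubernetes.io/zone label; the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    rack:
      topologyKey: topology.kubernetes.io/zone  # must match a label on the cluster nodes
    # ...
```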

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Consult your OpenShift administrator regarding the node label that represents the zone / rack into which the node is deployed.

- Edit the rack property in the Kafka resource using the label as the topology key.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file

Additional resources

- For information about Configuring init container image for Kafka rack awareness, see Section 2.1.18, “Container images”.

2.1.14. Healthchecks

Healthchecks are periodic tests which verify the health of an application. When a Healthcheck probe fails, OpenShift assumes that the application is not healthy and attempts to fix it.

OpenShift supports two types of Healthcheck probes:

- Liveness probes

- Readiness probes

For more details about the probes, see Configure Liveness and Readiness Probes. Both types of probes are used in AMQ Streams components.

Users can configure selected options for liveness and readiness probes.

2.1.14.1. Healthcheck configurations

Liveness and readiness probes can be configured using the livenessProbe and readinessProbe properties in the following resources:

- Kafka.spec.kafka

- Kafka.spec.zookeeper

- Kafka.spec.entityOperator.tlsSidecar

- Kafka.spec.entityOperator.topicOperator

- Kafka.spec.entityOperator.userOperator

- Kafka.spec.kafkaExporter

- KafkaConnect.spec

- KafkaConnectS2I.spec

- KafkaMirrorMaker.spec

- KafkaBridge.spec

Both livenessProbe and readinessProbe support the following options:

- initialDelaySeconds

- timeoutSeconds

- periodSeconds

- successThreshold

- failureThreshold

For more information about the livenessProbe and readinessProbe options, see Section B.45, “Probe schema reference”.

An example of liveness and readiness probe configuration
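A minimal sketch of probe settings on the Kafka brokers, using two of the options listed above. The values are illustrative, and the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    readinessProbe:
      initialDelaySeconds: 15         # illustrative: wait before the first check
      timeoutSeconds: 5               # illustrative: time allowed for each check
    livenessProbe:
      initialDelaySeconds: 15
      timeoutSeconds: 5
  # ...
```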

2.1.14.2. Configuring healthchecks

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Edit the livenessProbe or readinessProbe property in the Kafka resource.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file

2.1.15. Prometheus metrics

AMQ Streams supports Prometheus metrics using Prometheus JMX exporter to convert the JMX metrics supported by Apache Kafka and ZooKeeper to Prometheus metrics. When metrics are enabled, they are exposed on port 9404.

For more information about setting up and deploying Prometheus and Grafana, see Introducing Metrics to Kafka in the Deploying and Upgrading AMQ Streams on OpenShift guide.

2.1.15.1. Metrics configuration

Prometheus metrics are enabled by configuring the metrics property in the following resources:

- Kafka.spec.kafka

- Kafka.spec.zookeeper

- KafkaConnect.spec

- KafkaConnectS2I.spec

When the metrics property is not defined in the resource, the Prometheus metrics will be disabled. To enable Prometheus metrics export without any further configuration, you can set it to an empty object ({}).

Example of enabling metrics without any further configuration

The metrics property might contain additional configuration for the Prometheus JMX exporter.

Example of enabling metrics with additional Prometheus JMX Exporter configuration
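Both cases can be sketched in a single resource. In this illustration ZooKeeper enables metrics with an empty object, while Kafka passes additional Prometheus JMX Exporter settings; the rule shown is only an illustrative pattern, and the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    metrics:
      lowercaseOutputName: true
      rules:
        # Illustrative rule: expose per-second broker counters as totals
        - pattern: "kafka.server<type=(.+), name=(.+)PerSec\\w*><>Count"
          name: "kafka_server_$1_$2_total"
  zookeeper:
    # ...
    metrics: {}                       # enable metrics without further configuration
```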

2.1.15.2. Configuring Prometheus metrics

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Edit the metrics property in the Kafka resource.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file

2.1.16. JMX Options

AMQ Streams supports obtaining JMX metrics from the Kafka brokers by opening a JMX port on 9999. You can obtain various metrics about each Kafka broker, for example, usage data such as the BytesPerSecond value or the request rate of the network of the broker. AMQ Streams supports opening a password and username protected JMX port or a non-protected JMX port.

2.1.16.1. Configuring JMX options

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

You can configure JMX options by using the jmxOptions property in the following resources:

- Kafka.spec.kafka

You can configure username and password protection for the JMX port that is opened on the Kafka brokers.

Securing the JMX Port

You can secure the JMX port to prevent unauthorized pods from accessing the port. Currently the JMX port can only be secured using a username and password. To enable security for the JMX port, set the type parameter in the authentication field to password.
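A minimal sketch of a password-protected JMX port; the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    jmxOptions:
      authentication:
        type: "password"              # protect the JMX port with a username and password
    # ...
```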

This allows you to deploy a pod internally into a cluster and obtain JMX metrics by using the headless service and specifying which broker you want to address. To get JMX metrics from broker 0, address the headless service with the broker 0 pod name prepended to the headless service name:

    "<cluster-name>-kafka-0-<cluster-name>-<headless-service-name>"

If the JMX port is secured, you can get the username and password by referencing them from the JMX secret in the deployment of your pod.

Using an open JMX port

To disable security for the JMX port, do not fill in the authentication field.

This opens the JMX port on the headless service, and you can follow a similar approach to the one described above to deploy a pod into the cluster. The only difference is that any pod is able to read from the JMX port.
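A minimal sketch of an open JMX port, in which the authentication field is left out; the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    jmxOptions: {}                    # no authentication field: any pod can read from the port
    # ...
```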

2.1.17. JVM Options

The following components of AMQ Streams run inside a Java Virtual Machine (JVM):

- Apache Kafka

- Apache ZooKeeper

- Apache Kafka Connect

- Apache Kafka MirrorMaker

- AMQ Streams Kafka Bridge

JVM configuration options optimize the performance for different platforms and architectures. AMQ Streams allows you to configure some of these options.

2.1.17.1. JVM configuration

Use the jvmOptions property to configure supported options for the JVM on which the component is running.

Supported JVM options help to optimize performance for different platforms and architectures.
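A minimal sketch of jvmOptions on the Kafka brokers, setting the minimum and maximum heap size and enabling GC logging. The values are illustrative, and the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    jvmOptions:
      "-Xms": "2g"                    # illustrative minimum heap size
      "-Xmx": "2g"                    # illustrative maximum heap size
      gcLoggingEnabled: true          # garbage collector logging
    # ...
```

Keep the heap size below the memory limit set for the container, because the JVM also uses memory outside the heap.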

2.1.17.2. Configuring JVM options

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Edit the jvmOptions property in the Kafka, KafkaConnect, KafkaConnectS2I, KafkaMirrorMaker, or KafkaBridge resource.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file

2.1.18. Container images

AMQ Streams allows you to configure the container images used for its components. Overriding container images is recommended only in special situations where you need to use a different container registry, for example, because your network does not allow access to the container repository used by AMQ Streams. In such a case, you should either copy the AMQ Streams images or build them from the source. If the configured image is not compatible with AMQ Streams images, it might not work properly.

2.1.18.1. Container image configurations

Use the image property to specify which container image to use.

Overriding container images is recommended only in special situations.
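A minimal sketch of an image override for the Kafka brokers. The image name my-org/my-image:latest is a placeholder for an image in your own registry, and the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    image: my-org/my-image:latest     # placeholder image reference
    # ...
```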

2.1.18.2. Configuring container images

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Edit the image property in the Kafka resource.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file

2.1.19. TLS sidecar

A sidecar is a container that runs in a pod but serves a supporting purpose. In AMQ Streams, the TLS sidecar uses TLS to encrypt and decrypt all communication between the various components and ZooKeeper.

The TLS sidecar is used in:

- Entity Operator

- Cruise Control

2.1.19.1. TLS sidecar configuration

The TLS sidecar can be configured using the tlsSidecar property in:

- Kafka.spec.kafka

- Kafka.spec.zookeeper

- Kafka.spec.entityOperator

The TLS sidecar supports the following additional options:

- image

- resources

- logLevel

- readinessProbe

- livenessProbe

The resources property can be used to specify the memory and CPU resources allocated for the TLS sidecar.

The image property can be used to configure the container image which will be used. For more details about configuring custom container images, see Section 2.1.18, “Container images”.

The logLevel property is used to specify the logging level. The following logging levels are supported:

- emerg

- alert

- crit

- err

- warning

- notice

- info

- debug

The default value is notice.

For more information about configuring the readinessProbe and livenessProbe properties for the healthchecks, see Section 2.1.14.1, “Healthcheck configurations”.

Example of TLS sidecar configuration
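A minimal sketch of the tlsSidecar property in the Entity Operator, using the resources and logLevel options described above. The values are illustrative, and the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  # ...
  entityOperator:
    # ...
    tlsSidecar:
      resources:
        requests:
          cpu: 200m                   # illustrative values
          memory: 64Mi
        limits:
          cpu: 500m
          memory: 128Mi
      logLevel: debug                 # one of the supported levels; the default is notice
```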

2.1.19.2. Configuring TLS sidecar

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Edit the tlsSidecar property in the Kafka resource.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file

2.1.20. Configuring pod scheduling

When two applications are scheduled to the same OpenShift node, both applications might use the same resources, such as disk I/O, which can degrade performance. The best ways to avoid such problems are to schedule Kafka pods so that they do not share nodes with other critical workloads, to use the right nodes, or to dedicate a set of nodes only to Kafka.

2.1.20.1. Scheduling pods based on other applications

2.1.20.1.1. Avoiding critical applications sharing a node

Pod anti-affinity can be used to ensure that critical applications are never scheduled on the same node. When running a Kafka cluster, it is recommended to use pod anti-affinity to ensure that the Kafka brokers do not share nodes with other workloads such as databases.

2.1.20.1.2. Affinity

Affinity can be configured using the affinity property in the following resources:

- Kafka.spec.kafka.template.pod

- Kafka.spec.zookeeper.template.pod

- Kafka.spec.entityOperator.template.pod

- KafkaConnect.spec.template.pod

- KafkaConnectS2I.spec.template.pod

- KafkaBridge.spec.template.pod

The affinity configuration can include different types of affinity:

- Pod affinity and anti-affinity

- Node affinity

The format of the affinity property follows the OpenShift specification. For more details, see the Kubernetes node and pod affinity documentation.

2.1.20.1.3. Configuring pod anti-affinity in Kafka components

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Edit the affinity property in the resource specifying the cluster deployment. Use labels to specify the pods which should not be scheduled on the same nodes. The topologyKey should be set to kubernetes.io/hostname to specify that the selected pods should not be scheduled on nodes with the same hostname.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file
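The affinity edit in this procedure can be sketched as follows. The label key application and the values postgresql and mongodb are hypothetical labels of the workloads to keep away from the brokers; the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    template:
      pod:
        affinity:
          podAntiAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                    - key: application        # hypothetical label key
                      operator: In
                      values:
                        - postgresql          # hypothetical workloads to avoid
                        - mongodb
                topologyKey: "kubernetes.io/hostname"
  # ...
```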

2.1.20.2. Scheduling pods to specific nodes

2.1.20.2.1. Node scheduling

An OpenShift cluster usually consists of many different types of worker nodes. Some are optimized for CPU-heavy workloads, some for memory, while others might be optimized for storage (fast local SSDs) or network. Using different nodes helps to optimize both costs and performance. To achieve the best possible performance, it is important to schedule AMQ Streams components on the right nodes.

OpenShift uses node affinity to schedule workloads onto specific nodes. Node affinity allows you to create a scheduling constraint for the node on which the pod will be scheduled. The constraint is specified as a label selector. You can specify the label using either the built-in node label like beta.kubernetes.io/instance-type or custom labels to select the right node.

2.1.20.2.2. Affinity

Affinity can be configured using the affinity property in the following resources:

- Kafka.spec.kafka.template.pod

- Kafka.spec.zookeeper.template.pod

- Kafka.spec.entityOperator.template.pod

- KafkaConnect.spec.template.pod

- KafkaConnectS2I.spec.template.pod

- KafkaBridge.spec.template.pod

The affinity configuration can include different types of affinity:

- Pod affinity and anti-affinity

- Node affinity

The format of the affinity property follows the OpenShift specification. For more details, see the Kubernetes node and pod affinity documentation.

2.1.20.2.3. Configuring node affinity in Kafka components

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Label the nodes where AMQ Streams components should be scheduled.

  This can be done using oc label:

    oc label node your-node node-type=fast-network

  Alternatively, some of the existing labels might be reused.

- Edit the affinity property in the resource specifying the cluster deployment.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file
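The affinity edit in this procedure can be sketched as follows, selecting nodes by the node-type=fast-network label applied in the first step; the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    template:
      pod:
        affinity:
          nodeAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              nodeSelectorTerms:
                - matchExpressions:
                    - key: node-type          # label applied with oc label
                      operator: In
                      values:
                        - fast-network
  # ...
```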

2.1.20.3. Using dedicated nodes

2.1.20.3.1. Dedicated nodes

Cluster administrators can mark selected OpenShift nodes as tainted. Nodes with taints are excluded from regular scheduling and normal pods will not be scheduled to run on them. Only services which can tolerate the taint set on the node can be scheduled on it. The only other services running on such nodes will be system services such as log collectors or software defined networks.

Taints can be used to create dedicated nodes. Running Kafka and its components on dedicated nodes can have many advantages. There will be no other applications running on the same nodes which could cause disturbance or consume the resources needed for Kafka. That can lead to improved performance and stability.

To schedule Kafka pods on the dedicated nodes, configure node affinity and tolerations.

2.1.20.3.2. Affinity

Affinity can be configured using the affinity property in the following resources:

- Kafka.spec.kafka.template.pod

- Kafka.spec.zookeeper.template.pod

- Kafka.spec.entityOperator.template.pod

- KafkaConnect.spec.template.pod

- KafkaConnectS2I.spec.template.pod

- KafkaBridge.spec.template.pod

The affinity configuration can include different types of affinity:

- Pod affinity and anti-affinity

- Node affinity

The format of the affinity property follows the OpenShift specification. For more details, see the Kubernetes node and pod affinity documentation.

2.1.20.3.3. Tolerations

Tolerations can be configured using the tolerations property in the following resources:

- Kafka.spec.kafka.template.pod

- Kafka.spec.zookeeper.template.pod

- Kafka.spec.entityOperator.template.pod

- KafkaConnect.spec.template.pod

- KafkaConnectS2I.spec.template.pod

- KafkaBridge.spec.template.pod

The format of the tolerations property follows the OpenShift specification. For more details, see the Kubernetes taints and tolerations documentation.

2.1.20.3.4. Setting up dedicated nodes and scheduling pods on them

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Select the nodes which should be used as dedicated.

- Make sure there are no workloads scheduled on these nodes.

- Set the taints on the selected nodes.

  This can be done using oc adm taint:

    oc adm taint node your-node dedicated=Kafka:NoSchedule

- Additionally, add a label to the selected nodes as well.

  This can be done using oc label:

    oc label node your-node dedicated=Kafka

- Edit the affinity and tolerations properties in the resource specifying the cluster deployment.

- Create or update the resource.

  This can be done using oc apply:

    oc apply -f your-file
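The affinity and tolerations edit in this procedure can be sketched as follows, matching the dedicated=Kafka taint and label set in the earlier steps; the apiVersion and cluster name are assumptions:

```yaml
apiVersion: kafka.strimzi.io/v1beta1  # assumed API version
kind: Kafka
metadata:
  name: my-cluster                    # placeholder cluster name
spec:
  kafka:
    # ...
    template:
      pod:
        tolerations:
          - key: "dedicated"          # tolerate the taint set with oc adm taint
            operator: "Equal"
            value: "Kafka"
            effect: "NoSchedule"
        affinity:
          nodeAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              nodeSelectorTerms:
                - matchExpressions:
                    - key: dedicated  # select the nodes labeled with oc label
                      operator: In
                      values:
                        - Kafka
  # ...
```

The toleration allows the pods onto the tainted nodes, and the node affinity ensures they are scheduled only there.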

2.1.21. Kafka Exporter

You can configure the Kafka resource to automatically deploy Kafka Exporter in your cluster.

Kafka Exporter extracts data for analysis as Prometheus metrics, primarily data relating to offsets, consumer groups, consumer lag and topics.

For information on setting up Kafka Exporter and why it is important to monitor consumer lag for performance, see Kafka Exporter in the Deploying and Upgrading AMQ Streams on OpenShift guide.

2.1.22. Performing a rolling update of a Kafka cluster

This procedure describes how to manually trigger a rolling update of an existing Kafka cluster by using an OpenShift annotation.

Prerequisites

See the Deploying and Upgrading AMQ Streams on OpenShift guide for instructions on running a:

Procedure

- Find the name of the StatefulSet that controls the Kafka pods you want to manually update.

  For example, if your Kafka cluster is named my-cluster, the corresponding StatefulSet is named my-cluster-kafka.

- Annotate the StatefulSet resource in OpenShift. For example, using oc annotate:

    oc annotate statefulset cluster-name-kafka strimzi.io/manual-rolling-update=true

- Wait for the next reconciliation to occur (every two minutes by default). A rolling update of all pods within the annotated StatefulSet is triggered, as long as the annotation was detected by the reconciliation process. When the rolling update of all the pods is complete, the annotation is removed from the StatefulSet.

2.1.23. Performing a rolling update of a ZooKeeper cluster

This procedure describes how to manually trigger a rolling update of an existing ZooKeeper cluster by using an OpenShift annotation.

Prerequisites

See the Deploying and Upgrading AMQ Streams on OpenShift guide for instructions on running a:

Procedure

- Find the name of the StatefulSet that controls the ZooKeeper pods you want to manually update.

  For example, if your Kafka cluster is named my-cluster, the corresponding StatefulSet is named my-cluster-zookeeper.

- Annotate the StatefulSet resource in OpenShift. For example, using oc annotate:

    oc annotate statefulset cluster-name-zookeeper strimzi.io/manual-rolling-update=true

- Wait for the next reconciliation to occur (every two minutes by default). A rolling update of all pods within the annotated StatefulSet is triggered, as long as the annotation was detected by the reconciliation process. When the rolling update of all the pods is complete, the annotation is removed from the StatefulSet.

2.1.24. Scaling clusters

2.1.24.1. Scaling Kafka clusters

2.1.24.1.1. Adding brokers to a cluster

The primary way of increasing throughput for a topic is to increase the number of partitions for that topic. That works because the extra partitions allow the load of the topic to be shared between the different brokers in the cluster. However, in situations where every broker is constrained by a particular resource (typically I/O) using more partitions will not result in increased throughput. Instead, you need to add brokers to the cluster.

When you add an extra broker to the cluster, Kafka does not assign any partitions to it automatically. You must decide which partitions to move from the existing brokers to the new broker.

Once the partitions have been redistributed between all the brokers, the resource utilization of each broker should be reduced.

2.1.24.1.2. Removing brokers from a cluster

Because AMQ Streams uses StatefulSets to manage broker pods, you cannot remove an arbitrary pod from the cluster. You can only remove one or more of the highest numbered pods. For example, in a cluster of 12 brokers the pods are named cluster-name-kafka-0 up to cluster-name-kafka-11. If you decide to scale down by one broker, the cluster-name-kafka-11 pod is removed.

Before you remove a broker from a cluster, ensure that it is not assigned to any partitions. You should also decide which of the remaining brokers will be responsible for each of the partitions on the broker being decommissioned. Once the broker has no assigned partitions, you can scale the cluster down safely.

2.1.24.2. Partition reassignment

The Topic Operator does not currently support reassigning replicas to different brokers, so it is necessary to connect directly to broker pods to reassign replicas to brokers.

Within a broker pod, the kafka-reassign-partitions.sh utility allows you to reassign partitions to different brokers.

It has three different modes:

- --generate: Takes a set of topics and brokers and generates a reassignment JSON file which will result in the partitions of those topics being assigned to those brokers. Because this operates on whole topics, it cannot be used when you just need to reassign some of the partitions of some topics.

- --execute: Takes a reassignment JSON file and applies it to the partitions and brokers in the cluster. Brokers that gain partitions as a result become followers of the partition leader. For a given partition, once the new broker has caught up and joined the ISR (in-sync replicas) the old broker will stop being a follower and will delete its replica.

- --verify: Using the same reassignment JSON file as the --execute step, --verify checks whether all of the partitions in the file have been moved to their intended brokers. If the reassignment is complete, --verify also removes any throttles that are in effect. Unless removed, throttles will continue to affect the cluster even after the reassignment has finished.

It is only possible to have one reassignment running in a cluster at any given time, and it is not possible to cancel a running reassignment. If you need to cancel a reassignment, wait for it to complete and then perform another reassignment to revert the effects of the first one. The kafka-reassign-partitions.sh tool prints the reassignment JSON for this reversion as part of its output. Break very large reassignments down into a number of smaller reassignments in case you need to stop an in-progress reassignment.

2.1.24.2.1. Reassignment JSON file

The reassignment JSON file has a specific structure:

    {
      "version": 1,
      "partitions": [
        <PartitionObjects>
      ]
    }

Where <PartitionObjects> is a comma-separated list of objects like:

    {
      "topic": <TopicName>,
      "partition": <Partition>,
      "replicas": [ <AssignedBrokerIds> ]
    }

Although Kafka also supports a "log_dirs" property, this should not be used in AMQ Streams.

The following is an example reassignment JSON file that assigns topic topic-a, partition 4 to brokers 2, 4 and 7, and topic topic-b partition 2 to brokers 1, 5 and 7:
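The example listing is missing from this text. A sketch of what it would contain, written as a shell here-document that creates reassignment.json in the current directory, is shown here. The top-level "version" and "partitions" keys are assumed from the standard layout used by kafka-reassign-partitions.sh; the topics, partitions and broker IDs come from the sentence above.

```shell
# Write the example reassignment file to the current directory.
# "version": 1 and the top-level "partitions" key are assumed from the
# Kafka tool's standard file layout, since the original listing is missing.
cat > reassignment.json <<'EOF'
{
  "version": 1,
  "partitions": [
    { "topic": "topic-a", "partition": 4, "replicas": [2, 4, 7] },
    { "topic": "topic-b", "partition": 2, "replicas": [1, 5, 7] }
  ]
}
EOF
```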

Partitions not included in the JSON are not changed.

2.1.24.2.2. Reassigning partitions between JBOD volumes

When using JBOD storage in your Kafka cluster, you can choose to reassign the partitions between specific volumes and their log directories (each volume has a single log directory). To reassign a partition to a specific volume, add the log_dirs option to <PartitionObjects> in the reassignment JSON file.

The log_dirs object should contain the same number of log directories as the number of replicas specified in the replicas object. The value should be either an absolute path to the log directory, or the any keyword.

For example:
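The example is missing from this text. The following sketch shows one possible partition object with log_dirs, written as a here-document. The file name reassignment-jbod.json and the absolute path are hypothetical; substitute the log directory of the volume on the target broker. The list has three entries to match the three replicas, and uses the any keyword where no specific volume is required.

```shell
# Hypothetical JBOD reassignment: pin the replica on broker 2 to a specific
# log directory and let brokers 4 and 7 choose any volume.
# The path below is an illustrative assumption, not a value from the source.
cat > reassignment-jbod.json <<'EOF'
{
  "version": 1,
  "partitions": [
    {
      "topic": "topic-a",
      "partition": 4,
      "replicas": [2, 4, 7],
      "log_dirs": [ "/var/lib/kafka/data-0/kafka-log2", "any", "any" ]
    }
  ]
}
EOF
```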

2.1.24.3. Generating reassignment JSON files

This procedure describes how to generate a reassignment JSON file that reassigns all the partitions for a given set of topics using the kafka-reassign-partitions.sh tool.

Prerequisites

- A running Cluster Operator

- A Kafka resource

- A set of topics to reassign the partitions of

Procedure

- Prepare a JSON file named topics.json that lists the topics to move. It must have the following structure:

      {
        "version": 1,
        "topics": [
          <TopicObjects>
        ]
      }

  where <TopicObjects> is a comma-separated list of objects like:

      { "topic": <TopicName> }

  For example, if you want to reassign all the partitions of topic-a and topic-b, you would need to prepare a topics.json file like this:

      {
        "version": 1,
        "topics": [
          { "topic": "topic-a" },
          { "topic": "topic-b" }
        ]
      }

- Copy the topics.json file to one of the broker pods:

      cat topics.json | oc exec -c kafka <BrokerPod> -i -- \
        /bin/bash -c \
        'cat > /tmp/topics.json'

- Use the kafka-reassign-partitions.sh command to generate the reassignment JSON.

      oc exec <BrokerPod> -c kafka -it -- \
        bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 \
        --topics-to-move-json-file /tmp/topics.json \
        --broker-list <BrokerList> \
        --generate

  For example, to move all the partitions of topic-a and topic-b to brokers 4 and 7:

      oc exec <BrokerPod> -c kafka -it -- \
        bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 \
        --topics-to-move-json-file /tmp/topics.json \
        --broker-list 4,7 \
        --generate
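When the list of topics is long, writing topics.json by hand is error-prone. A minimal sketch of generating it from topic names follows. The function make_topics_json is a hypothetical helper, and it assumes the standard "version" and "topics" top-level keys and topic names that need no JSON escaping.

```shell
# Hypothetical helper: print a topics-to-move JSON document for the topic
# names given as arguments. Names are assumed not to need JSON escaping.
make_topics_json() {
  printf '{\n  "version": 1,\n  "topics": [\n'
  first=1
  for topic in "$@"; do
    # Separate entries with a comma and newline, but not before the first.
    if [ "$first" -eq 1 ]; then first=0; else printf ',\n'; fi
    printf '    { "topic": "%s" }' "$topic"
  done
  printf '\n  ]\n}\n'
}

# Create topics.json for topic-a and topic-b in the current directory.
make_topics_json topic-a topic-b > topics.json
```

The resulting file can then be copied to a broker pod as described in the procedure.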

2.1.24.4. Creating reassignment JSON files manually

You can manually create the reassignment JSON file if you want to move specific partitions.

2.1.24.5. Reassignment throttles

Partition reassignment can be a slow process because it involves transferring large amounts of data between brokers. To avoid a detrimental impact on clients, you can throttle the reassignment process. This might cause the reassignment to take longer to complete.

- If the throttle is too low then the newly assigned brokers will not be able to keep up with records being published and the reassignment will never complete.

- If the throttle is too high then clients will be impacted.

For example, for producers, this could manifest as higher than normal latency waiting for acknowledgement. For consumers, this could manifest as a drop in throughput caused by higher latency between polls.
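To get a feel for the trade-off, you can estimate how long a throttled reassignment would take at best. The following sketch divides the amount of data to copy by the throttle rate. It is a rough lower bound under stated assumptions: it treats the throttle as the effective transfer rate for the data passing through one broker and ignores records published during the move. The function name and the 50 GB figure are made up for illustration.

```shell
# Rough lower bound on reassignment time: bytes to move / throttle rate.
# Ignores records arriving during the move, so real runs take longer.
estimate_reassignment_seconds() {
  bytes_to_move="$1"   # replica data to copy, in bytes
  throttle_bps="$2"    # --throttle value, in bytes per second
  echo $(( bytes_to_move / throttle_bps ))
}

# 50 GB (50 * 10^9 bytes, an illustrative figure) at 5000000 bytes per second:
estimate_reassignment_seconds 50000000000 5000000
# prints: 10000
```

Here 10000 seconds is a little under three hours. Doubling the throttle halves the estimate, at the cost of more load on clients.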

2.1.24.6. Scaling up a Kafka cluster

This procedure describes how to increase the number of brokers in a Kafka cluster.

Prerequisites

- An existing Kafka cluster.

- A reassignment JSON file named reassignment.json that describes how partitions should be reassigned to brokers in the enlarged cluster.

Procedure

- Add as many new brokers as you need by increasing the Kafka.spec.kafka.replicas configuration option.

- Verify that the new broker pods have started.

- Copy the reassignment.json file to the broker pod on which you will later execute the commands:

      cat reassignment.json | \
        oc exec broker-pod -c kafka -i -- /bin/bash -c \
        'cat > /tmp/reassignment.json'

  For example:

      cat reassignment.json | \
        oc exec my-cluster-kafka-0 -c kafka -i -- /bin/bash -c \
        'cat > /tmp/reassignment.json'

- Execute the partition reassignment using the kafka-reassign-partitions.sh command line tool from the same broker pod.

      oc exec broker-pod -c kafka -it -- \
        bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 \
        --reassignment-json-file /tmp/reassignment.json \
        --execute

  If you are going to throttle replication you can also pass the --throttle option with an inter-broker throttled rate in bytes per second. For example:

      oc exec my-cluster-kafka-0 -c kafka -it -- \
        bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 \
        --reassignment-json-file /tmp/reassignment.json \
        --throttle 5000000 \
        --execute

  This command will print out two reassignment JSON objects. The first records the current assignment for the partitions being moved. You should save this to a local file (not a file in the pod) in case you need to revert the reassignment later on. The second JSON object is the target reassignment you have passed in your reassignment JSON file.

  If you need to change the throttle during reassignment you can use the same command line with a different throttled rate. For example:

      oc exec my-cluster-kafka-0 -c kafka -it -- \
        bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 \
        --reassignment-json-file /tmp/reassignment.json \
        --throttle 10000000 \
        --execute

- Periodically verify whether the reassignment has completed using the kafka-reassign-partitions.sh command line tool from any of the broker pods. This is the same command as the previous step but with the --verify option instead of the --execute option.

      oc exec broker-pod -c kafka -it -- \
        bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 \
        --reassignment-json-file /tmp/reassignment.json \
        --verify

  For example:

      oc exec my-cluster-kafka-0 -c kafka -it -- \
        bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 \
        --reassignment-json-file /tmp/reassignment.json \
        --verify

- The reassignment has finished when the --verify command reports each of the partitions being moved as completed successfully. This final --verify will also have the effect of removing any reassignment throttles. You can now delete the revert file if you saved the JSON for reverting the assignment to their original brokers.
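The first step of this procedure, increasing Kafka.spec.kafka.replicas, can be done by editing the Kafka resource or by patching it. The following sketch builds a JSON merge patch for that field. The function replicas_patch and the resource name my-cluster are assumptions for illustration; the oc commands are shown as comments because they need a live cluster.

```shell
# Hypothetical helper: print a JSON merge patch that sets
# Kafka.spec.kafka.replicas to the requested broker count.
replicas_patch() {
  printf '{"spec":{"kafka":{"replicas":%d}}}\n' "$1"
}

replicas_patch 4
# prints: {"spec":{"kafka":{"replicas":4}}}

# Applying the patch and checking the pods might look like this
# (my-cluster is an assumed Kafka resource name):
#   oc patch kafka my-cluster --type merge -p "$(replicas_patch 4)"
#   oc get pods
```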

2.1.24.7. Scaling down a Kafka cluster

This procedure describes how to decrease the number of brokers in a Kafka cluster.

Prerequisites

- An existing Kafka cluster.

- A reassignment JSON file named reassignment.json describing how partitions should be reassigned to brokers in the cluster once the broker(s) in the highest numbered Pod(s) have been removed.

Procedure

Copy the

reassignment.jsonfile to the broker pod on which you will later execute the commands:cat reassignment.json | \ oc exec broker-pod -c kafka -i -- /bin/bash -c \ 'cat > /tmp/reassignment.json'

cat reassignment.json | \ oc exec broker-pod -c kafka -i -- /bin/bash -c \ 'cat > /tmp/reassignment.json'Copy to Clipboard Copied! Toggle word wrap Toggle overflow For example:

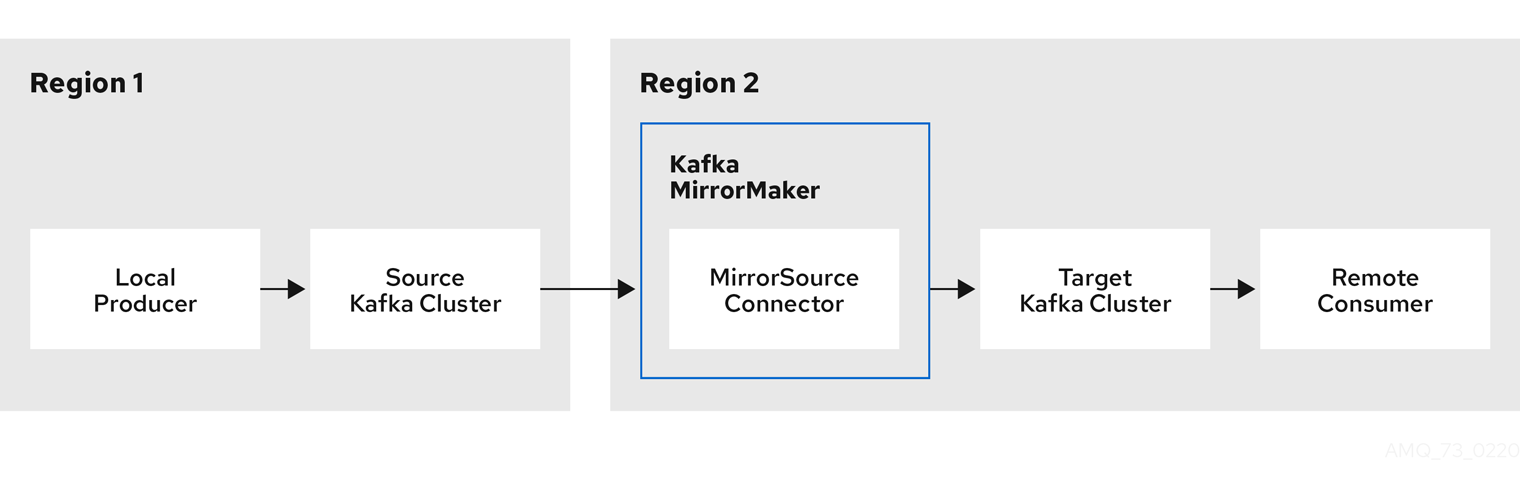

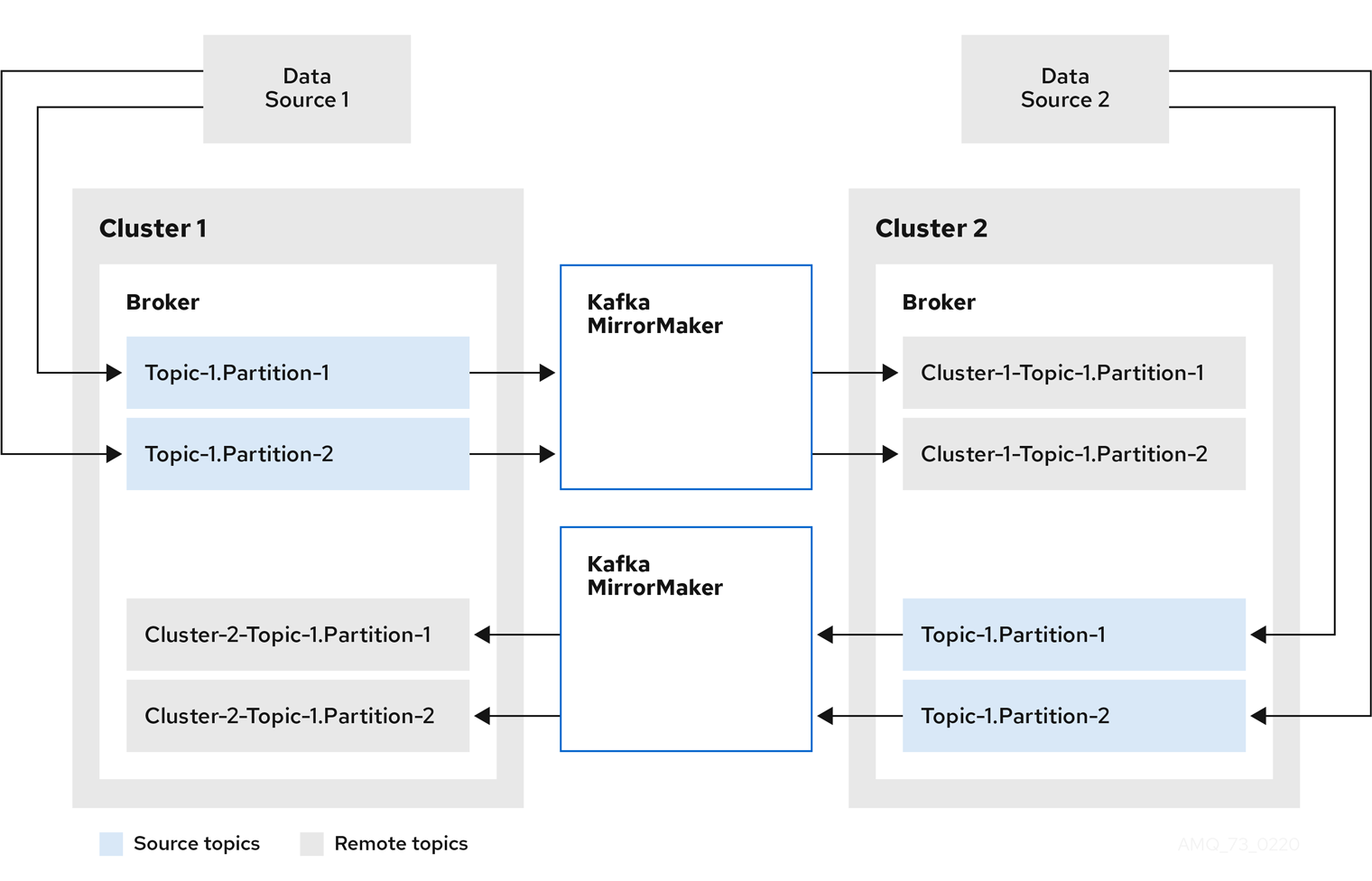

cat reassignment.json | \ oc exec my-cluster-kafka-0 -c kafka -i -- /bin/bash -c \ 'cat > /tmp/reassignment.json'