Chapter 2. Getting started with AMQ Streams

AMQ Streams works on all types of clusters, from public and private clouds to local deployments intended for development. This guide assumes that an OpenShift cluster is available and the oc command-line tool is installed and configured to connect to the running cluster.

AMQ Streams is based on Strimzi 0.14.x. This chapter describes the procedures to deploy AMQ Streams on OpenShift 3.11 and later.

To run the commands in this guide, your cluster user must have the rights to manage role-based access control (RBAC) and CRDs.

For more information about OpenShift and setting up an OpenShift cluster, see the OpenShift documentation.

2.1. Installing AMQ Streams and deploying components

To install AMQ Streams, download and extract the amq-streams-x.y.z-ocp-install-examples.zip file from the AMQ Streams download site.

The folder contains several YAML files to help you deploy the components of AMQ Streams to OpenShift, perform common operations, and configure your Kafka cluster. The YAML files are referenced throughout this documentation.

The remainder of this chapter provides an overview of each component and instructions for deploying the components to OpenShift using the YAML files provided.

Although container images for AMQ Streams are available in the Red Hat Container Catalog, we recommend that you use the YAML files provided instead.

2.2. Custom resources

Custom resource definitions (CRDs) extend the Kubernetes API, providing definitions to create and modify custom resources in an OpenShift cluster. Custom resources are created as instances of CRDs.

In AMQ Streams, CRDs introduce custom resources specific to AMQ Streams to an OpenShift cluster, such as Kafka, Kafka Connect, Kafka Mirror Maker, and users and topics custom resources. CRDs provide configuration instructions, defining the schemas used to instantiate and manage the AMQ Streams-specific resources. CRDs also allow AMQ Streams resources to benefit from native OpenShift features like CLI accessibility and configuration validation.

CRDs require a one-time installation in a cluster. Depending on the cluster setup, installation typically requires cluster admin privileges.

Access to manage custom resources is limited to AMQ Streams administrators.

CRDs and custom resources are defined as YAML files.

A CRD defines a new kind of resource, such as kind:Kafka, within an OpenShift cluster.

The Kubernetes API server allows custom resources to be created based on the kind and understands from the CRD how to validate and store the custom resource when it is added to the OpenShift cluster.

When CRDs are deleted, custom resources of that type are also deleted. Additionally, the resources created by the custom resource, such as pods and statefulsets, are also deleted.

2.2.1. AMQ Streams custom resource example

Each AMQ Streams-specific custom resource conforms to the schema defined by the CRD for the resource’s kind.

To understand the relationship between a CRD and a custom resource, let’s look at a sample of the CRD for a Kafka topic.

Kafka topic CRD

- (1) The metadata for the topic CRD, its name and a label to identify the CRD.
- (2) The specification for this CRD, including the group (domain) name, the plural name and the supported schema version, which are used in the URL to access the API of the topic. The other names are used to identify instance resources in the CLI. For example, oc get kafkatopic my-topic or oc get kafkatopics.
- (3) The shortname can be used in CLI commands. For example, oc get kt can be used as an abbreviation instead of oc get kafkatopic.
- (4) The information presented when using a get command on the custom resource.
- (5) The current status of the CRD as described in the schema reference for the resource.
- (6) openAPIV3Schema validation provides validation for the creation of topic custom resources. For example, a topic requires at least one partition and one replica.
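The numbered notes above can be read against a CRD along the following lines. This is an abridged sketch rather than the file shipped with AMQ Streams: it assumes the apiextensions.k8s.io/v1beta1 CRD format, and the printer column and schema details are illustrative assumptions. The comments (1) to (6) mark the parts the notes describe.

```yaml
# Abridged, illustrative sketch of a Kafka topic CRD (not the shipped file).
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:                         # (1) name and identifying label
  name: kafkatopics.kafka.strimzi.io
  labels:
    app: strimzi
spec:                             # (2) group, version and names
  group: kafka.strimzi.io
  version: v1beta1
  scope: Namespaced
  names:
    kind: KafkaTopic
    singular: kafkatopic
    plural: kafkatopics
    shortNames:
    - kt                          # (3) short name for CLI use
  additionalPrinterColumns:       # (4) columns shown by a get command
  - name: Partitions              # assumed column, for illustration only
    JSONPath: .spec.partitions
    type: integer
  subresources:
    status: {}                    # (5) status subresource
  validation:                     # (6) schema validation
    openAPIV3Schema:
      properties:
        spec:
          type: object
          properties:
            partitions:
              type: integer
              minimum: 1
            replicas:
              type: integer
              minimum: 1
```

The minimum: 1 constraints are what enforce the rule that a topic needs at least one partition and one replica.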

You can identify the CRD YAML files supplied with the AMQ Streams installation files, because the file names contain an index number followed by ‘Crd’.

Here is a corresponding example of a KafkaTopic custom resource.

Kafka topic custom resource

- (1) The kind and apiVersion identify the CRD of which the custom resource is an instance.
- (2) A label, applicable only to KafkaTopic and KafkaUser resources, that defines the name of the Kafka cluster (which is the same as the name of the Kafka resource) to which a topic or user belongs. The name is used by the Topic Operator and User Operator to identify the Kafka cluster when creating a topic or user.
- (3) The spec shows the number of partitions and replicas for the topic as well as the configuration parameters for the topic itself. In this example, the retention period for a message to remain in the topic and the segment file size for the log are specified.
- (4) Status conditions for the KafkaTopic resource. The type condition changed to Ready at the lastTransitionTime.
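A KafkaTopic resource matching the notes above might look like the following sketch. The topic name, cluster name, configuration values and timestamp are placeholder assumptions. The status section is written by the Topic Operator, not by the user, and is shown here only to illustrate note (4).

```yaml
# Illustrative KafkaTopic custom resource; names and values are placeholders.
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaTopic                      # (1) kind and apiVersion identify the CRD
metadata:
  name: my-topic
  labels:
    strimzi.io/cluster: my-cluster    # (2) name of the Kafka resource
spec:                                 # (3) partitions, replicas, topic config
  partitions: 1
  replicas: 1
  config:
    retention.ms: 7200000             # message retention period
    segment.bytes: 1073741824         # log segment file size
status:
  conditions:                         # (4) maintained by the Topic Operator
  - lastTransitionTime: "2019-08-20T11:37:00.706Z"
    status: "True"
    type: Ready
  observedGeneration: 1
```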

Custom resources can be applied to a cluster through the platform CLI. When the custom resource is created, it uses the same validation as the built-in resources of the Kubernetes API.

After a KafkaTopic custom resource is created, the Topic Operator is notified and corresponding Kafka topics are created in AMQ Streams.

2.2.2. AMQ Streams custom resource status

The status property of an AMQ Streams custom resource publishes information about the resource to users and tools that need it.

Several resources have a status property, as described in the following table.

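| AMQ Streams resource | Schema reference | Publishes status information on… |
|---|---|---|
| Kafka | KafkaStatus schema reference | The Kafka cluster. |
| KafkaConnect | KafkaConnectStatus schema reference | The Kafka Connect cluster, if deployed. |
| KafkaConnectS2I | KafkaConnectS2IStatus schema reference | The Kafka Connect cluster with Source-to-Image support, if deployed. |
| KafkaMirrorMaker | KafkaMirrorMakerStatus schema reference | The Kafka MirrorMaker tool, if deployed. |
| KafkaTopic | KafkaTopicStatus schema reference | Kafka topics in your Kafka cluster. |
| KafkaUser | KafkaUserStatus schema reference | Kafka users in your Kafka cluster. |
| KafkaBridge | KafkaBridgeStatus schema reference | The AMQ Streams Kafka Bridge, if deployed. |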

The status property of a resource provides information on the resource’s:

- Current state, in the status.conditions property
- Last observed generation, in the status.observedGeneration property

The status property also provides resource-specific information. For example:

- KafkaConnectStatus provides the REST API endpoint for Kafka Connect connectors.
- KafkaUserStatus provides the user name of the Kafka user and the Secret in which their credentials are stored.
- KafkaBridgeStatus provides the HTTP address at which external client applications can access the Bridge service.

A resource’s current state is useful for tracking progress related to the resource achieving its desired state, as defined by the spec property. The status conditions provide the time and reason the state of the resource changed and details of events preventing or delaying the operator from realizing the resource’s desired state.

The last observed generation is the generation of the resource that was last reconciled by the Cluster Operator. If the value of observedGeneration is different from the value of metadata.generation, the operator has not yet processed the latest update to the resource. If these values are the same, the status information reflects the most recent changes to the resource.

AMQ Streams creates and maintains the status of custom resources, periodically evaluating the current state of the custom resource and updating its status accordingly. When performing an update on a custom resource using oc edit, for example, its status is not editable. Moreover, changing the status would not affect the configuration of the Kafka cluster.

Here we see the status property specified for a Kafka custom resource.

Kafka custom resource with status

- (1) Status conditions describe criteria related to the status that cannot be deduced from the existing resource information, or are specific to the instance of a resource.
- (2) The Ready condition indicates whether the Cluster Operator currently considers the Kafka cluster able to handle traffic.
- (3) The observedGeneration indicates the generation of the Kafka custom resource that was last reconciled by the Cluster Operator.
- (4) The listeners describe the current Kafka bootstrap addresses by type.

  Important: The address in the custom resource status for external listeners with type nodeport is currently not supported.

The Kafka bootstrap addresses listed in the status do not signify that those endpoints or the Kafka cluster is in a ready state.
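As a rough illustration of the notes above, the status of a Kafka resource could resemble the following sketch. The host names, timestamp and generation number are invented placeholders, and the Cluster Operator, not the user, populates everything under status.

```yaml
# Illustrative only: status is written by the Cluster Operator.
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  # ... cluster configuration omitted
status:
  conditions:                     # (1) status conditions
  - lastTransitionTime: "2019-07-23T23:46:57+0000"
    status: "True"
    type: Ready                   # (2) cluster considered able to handle traffic
  observedGeneration: 4           # (3) last reconciled generation
  listeners:                      # (4) bootstrap addresses by listener type
  - type: plain
    addresses:
    - host: my-cluster-kafka-bootstrap.my-namespace.svc
      port: 9092
  - type: tls
    addresses:
    - host: my-cluster-kafka-bootstrap.my-namespace.svc
      port: 9093
```

If observedGeneration here differed from metadata.generation, the status would not yet reflect the latest change to the resource.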

Accessing status information

You can access status information for a resource from the command line. For more information, see Chapter 16, Checking the status of a custom resource.

2.3. Cluster Operator

The Cluster Operator is responsible for deploying and managing Apache Kafka clusters within an OpenShift cluster.

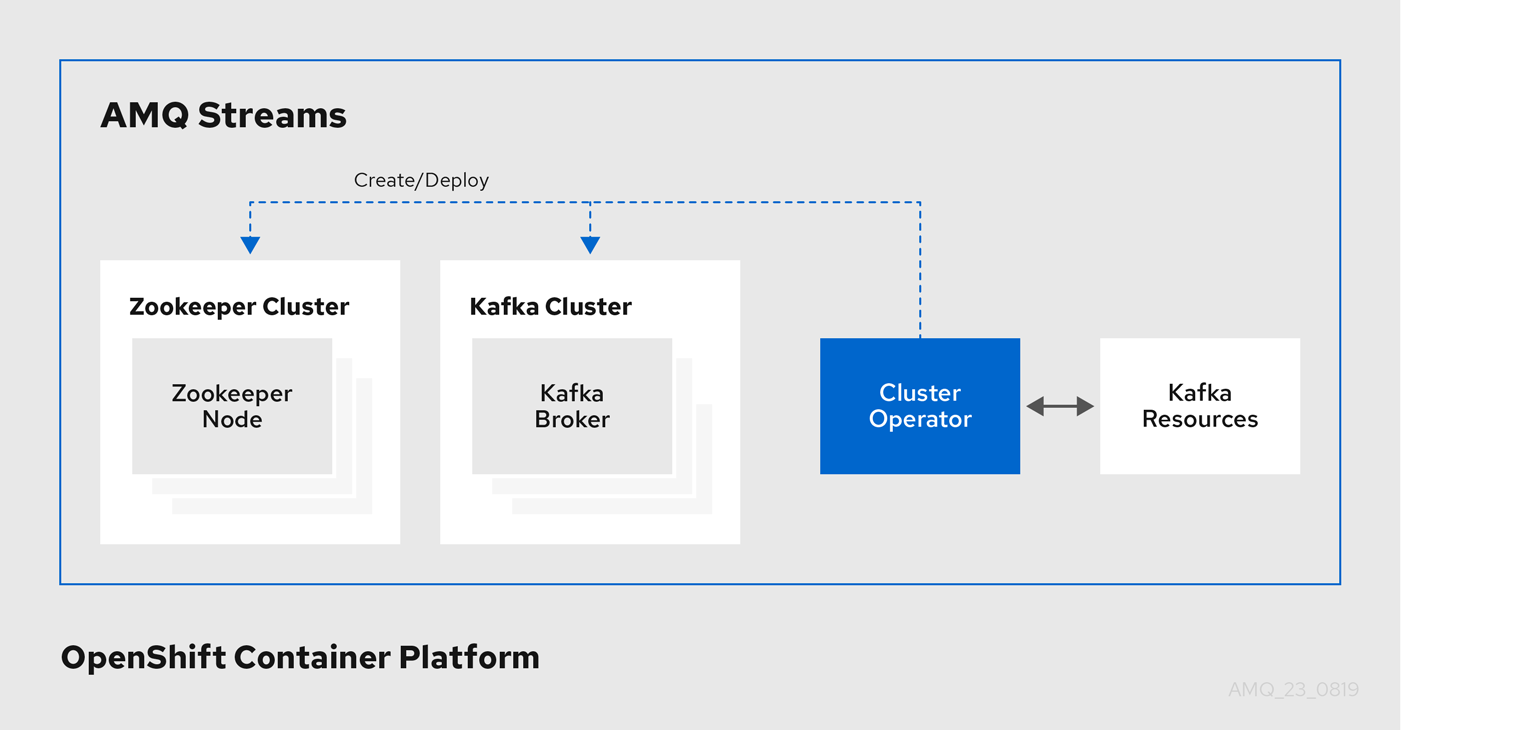

2.3.1. Overview of the Cluster Operator component

AMQ Streams uses the Cluster Operator to deploy and manage clusters for:

- Kafka (including Zookeeper, Entity Operator and Kafka Exporter)

- Kafka Connect

- Kafka Mirror Maker

- Kafka Bridge

Custom resources are used to deploy the clusters.

For example, to deploy a Kafka cluster:

- A Kafka resource with the cluster configuration is created within the OpenShift cluster.
- The Cluster Operator deploys a corresponding Kafka cluster, based on what is declared in the Kafka resource.

The Cluster Operator can also deploy (through Entity Operator configuration of the Kafka resource):

- A Topic Operator to provide operator-style topic management through KafkaTopic custom resources
- A User Operator to provide operator-style user management through KafkaUser custom resources

For more information on the configuration options supported by the Kafka resource, see Section 3.1, “Kafka cluster configuration”.

On OpenShift, a Kafka Connect deployment can incorporate a Source2Image feature, which provides a convenient way to include connectors.

Example architecture for the Cluster Operator

2.3.2. Watch options for a Cluster Operator deployment

When the Cluster Operator is running, it starts to watch for updates of Kafka resources.

Depending on the deployment, the Cluster Operator can watch Kafka resources from:

- A single namespace
- Multiple namespaces
- All namespaces

AMQ Streams provides example YAML files to make the deployment process easier.

The Cluster Operator watches the following resources:

- Kafka for the Kafka cluster.
- KafkaConnect for the Kafka Connect cluster.
- KafkaConnectS2I for the Kafka Connect cluster with Source2Image support.
- KafkaMirrorMaker for the Kafka Mirror Maker instance.
- KafkaBridge for the Kafka Bridge instance.

When one of these resources is created in the OpenShift cluster, the operator gets the cluster description from the resource and starts creating a new cluster for the resource by creating the necessary OpenShift resources, such as StatefulSets, Services and ConfigMaps.

Each time a Kafka resource is updated, the operator performs corresponding updates on the OpenShift resources that make up the cluster for the resource.

Resources are either patched, or deleted and then recreated, so that the cluster for the resource reflects the desired state. This operation might cause a rolling update that might lead to service disruption.

When a resource is deleted, the operator undeploys the cluster and deletes all related OpenShift resources.

2.3.3. Deploying the Cluster Operator to watch a single namespace

Prerequisites

- This procedure requires use of an OpenShift user account which is able to create CustomResourceDefinitions, ClusterRoles and ClusterRoleBindings. Use of Role-Based Access Control (RBAC) in the OpenShift cluster usually means that permission to create, edit, and delete these resources is limited to OpenShift cluster administrators, such as system:admin.
- Modify the installation files according to the namespace the Cluster Operator is going to be installed in.

  On Linux, use:

  sed -i 's/namespace: .*/namespace: my-namespace/' install/cluster-operator/*RoleBinding*.yaml

  On macOS, use:

  sed -i '' 's/namespace: .*/namespace: my-namespace/' install/cluster-operator/*RoleBinding*.yaml

Procedure

- Deploy the Cluster Operator:

  oc apply -f install/cluster-operator -n my-namespace

2.3.4. Deploying the Cluster Operator to watch multiple namespaces

Prerequisites

- This procedure requires use of an OpenShift user account which is able to create CustomResourceDefinitions, ClusterRoles and ClusterRoleBindings. Use of Role-Based Access Control (RBAC) in the OpenShift cluster usually means that permission to create, edit, and delete these resources is limited to OpenShift cluster administrators, such as system:admin.
- Edit the installation files according to the namespace the Cluster Operator is going to be installed in.

  On Linux, use:

  sed -i 's/namespace: .*/namespace: my-namespace/' install/cluster-operator/*RoleBinding*.yaml

  On macOS, use:

  sed -i '' 's/namespace: .*/namespace: my-namespace/' install/cluster-operator/*RoleBinding*.yaml

Procedure

- Edit the file install/cluster-operator/050-Deployment-strimzi-cluster-operator.yaml and, in the environment variable STRIMZI_NAMESPACE, list all the namespaces where the Cluster Operator should watch for resources. For example, set the value to watched-namespace-1,watched-namespace-2,watched-namespace-3.
- For all namespaces which should be watched by the Cluster Operator (watched-namespace-1, watched-namespace-2, watched-namespace-3 in the above example), install the RoleBindings. Replace watched-namespace with the namespace used in the previous step.

  This can be done using oc apply:

  oc apply -f install/cluster-operator/020-RoleBinding-strimzi-cluster-operator.yaml -n watched-namespace
  oc apply -f install/cluster-operator/031-RoleBinding-strimzi-cluster-operator-entity-operator-delegation.yaml -n watched-namespace
  oc apply -f install/cluster-operator/032-RoleBinding-strimzi-cluster-operator-topic-operator-delegation.yaml -n watched-namespace

- Deploy the Cluster Operator.

  This can be done using oc apply:

  oc apply -f install/cluster-operator -n my-namespace
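For the first step of this procedure, the edited part of 050-Deployment-strimzi-cluster-operator.yaml might look like the fragment below. It is a sketch showing only the relevant environment variable. The surrounding fields are abbreviated and the container name is an assumption, so compare it with the file in your installation before editing.

```yaml
# Fragment of the Cluster Operator Deployment; other fields omitted.
kind: Deployment
spec:
  template:
    spec:
      containers:
      - name: strimzi-cluster-operator   # assumed container name
        env:
        - name: STRIMZI_NAMESPACE
          # Comma-separated list of the namespaces to watch
          value: watched-namespace-1,watched-namespace-2,watched-namespace-3
```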

2.3.5. Deploying the Cluster Operator to watch all namespaces

You can configure the Cluster Operator to watch AMQ Streams resources across all namespaces in your OpenShift cluster. When running in this mode, the Cluster Operator automatically manages clusters in any new namespaces that are created.

Prerequisites

- This procedure requires use of an OpenShift user account which is able to create CustomResourceDefinitions, ClusterRoles and ClusterRoleBindings. Use of Role-Based Access Control (RBAC) in the OpenShift cluster usually means that permission to create, edit, and delete these resources is limited to OpenShift cluster administrators, such as system:admin.
- Your OpenShift cluster is running.

Procedure

- Configure the Cluster Operator to watch all namespaces:

  - Edit the 050-Deployment-strimzi-cluster-operator.yaml file.
  - Set the value of the STRIMZI_NAMESPACE environment variable to *.

- Create ClusterRoleBindings that grant cluster-wide access to all namespaces to the Cluster Operator.

  Use the oc create clusterrolebinding command:

  oc create clusterrolebinding strimzi-cluster-operator-namespaced --clusterrole=strimzi-cluster-operator-namespaced --serviceaccount my-namespace:strimzi-cluster-operator
  oc create clusterrolebinding strimzi-cluster-operator-entity-operator-delegation --clusterrole=strimzi-entity-operator --serviceaccount my-namespace:strimzi-cluster-operator
  oc create clusterrolebinding strimzi-cluster-operator-topic-operator-delegation --clusterrole=strimzi-topic-operator --serviceaccount my-namespace:strimzi-cluster-operator

  Replace my-namespace with the namespace in which you want to install the Cluster Operator.

- Deploy the Cluster Operator to your OpenShift cluster.

  Use the oc apply command:

  oc apply -f install/cluster-operator -n my-namespace
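For the first step of this procedure, the edited environment variable might look like the following fragment. This is a sketch with the surrounding Deployment fields abbreviated and the container name assumed. Note that the asterisk is quoted, because an unquoted * has a special meaning in YAML.

```yaml
# Fragment of the Cluster Operator Deployment; other fields omitted.
kind: Deployment
spec:
  template:
    spec:
      containers:
      - name: strimzi-cluster-operator   # assumed container name
        env:
        - name: STRIMZI_NAMESPACE
          value: "*"                      # watch all namespaces
```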

2.4. Kafka cluster

You can use AMQ Streams to deploy an ephemeral or persistent Kafka cluster to OpenShift. When installing Kafka, AMQ Streams also installs a Zookeeper cluster and adds the necessary configuration to connect Kafka with Zookeeper.

You can also use it to deploy Kafka Exporter.

- Ephemeral cluster

  In general, an ephemeral (that is, temporary) Kafka cluster is suitable for development and testing purposes, not for production. This deployment uses emptyDir volumes for storing broker information (for Zookeeper) and topics or partitions (for Kafka). Using an emptyDir volume means that its content is strictly related to the pod life cycle and is deleted when the pod goes down.

- Persistent cluster

  A persistent Kafka cluster uses PersistentVolumes to store Zookeeper and Kafka data. The PersistentVolume is acquired using a PersistentVolumeClaim to make it independent of the actual type of the PersistentVolume. For example, it can use Amazon EBS volumes in Amazon AWS deployments without any changes in the YAML files. The PersistentVolumeClaim can use a StorageClass to trigger automatic volume provisioning.
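In the Kafka resource, the difference between the two cluster types comes down to the storage configuration. The fragment below is a sketch of a persistent setup. The sizes are arbitrary placeholders, and the remaining Kafka and Zookeeper settings are omitted. For an ephemeral cluster, each storage section would instead contain only type: ephemeral.

```yaml
# Storage fragment of a Kafka resource; sizes are placeholder values.
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ... replicas, listeners and config omitted
    storage:
      type: persistent-claim   # backed by a PersistentVolumeClaim
      size: 100Gi
      deleteClaim: false       # keep the claim when the cluster is deleted
  zookeeper:
    # ... replicas omitted
    storage:
      type: persistent-claim
      size: 100Gi
      deleteClaim: false
```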

AMQ Streams includes two templates for deploying a Kafka cluster:

- kafka-ephemeral.yaml deploys an ephemeral cluster, named my-cluster by default.
- kafka-persistent.yaml deploys a persistent cluster, named my-cluster by default.

The cluster name is defined by the name of the resource and cannot be changed after the cluster has been deployed. To change the cluster name before you deploy the cluster, edit the Kafka.metadata.name property of the resource in the relevant YAML file.

    apiVersion: kafka.strimzi.io/v1beta1
    kind: Kafka
    metadata:
      name: my-cluster
    # ...

2.4.1. Deploying the Kafka cluster

You can deploy an ephemeral or persistent Kafka cluster to OpenShift on the command line.

Prerequisites

- The Cluster Operator is deployed.

Procedure

- If you plan to use the cluster for development or testing purposes, you can create and deploy an ephemeral cluster using oc apply.

  oc apply -f examples/kafka/kafka-ephemeral.yaml

- If you plan to use the cluster in production, create and deploy a persistent cluster using oc apply.

  oc apply -f examples/kafka/kafka-persistent.yaml

Additional resources

- For more information on deploying the Cluster Operator, see Section 2.3, “Cluster Operator”.

- For more information on the different configuration options supported by the Kafka resource, see Section 3.1, “Kafka cluster configuration”.

2.5. Kafka Connect

Kafka Connect is a tool for streaming data between Apache Kafka and external systems. It provides a framework for moving large amounts of data into and out of your Kafka cluster while maintaining scalability and reliability. Kafka Connect is typically used to integrate Kafka with external databases and storage and messaging systems.

You can use Kafka Connect to:

- Build connector plug-ins (as JAR files) for your Kafka cluster

- Run connectors

Kafka Connect includes the following built-in connectors for moving file-based data into and out of your Kafka cluster.

| File Connector | Description |
|---|---|
| FileStreamSourceConnector | Transfers data to your Kafka cluster from a file (the source). |
| FileStreamSinkConnector | Transfers data from your Kafka cluster to a file (the sink). |

In AMQ Streams, you can use the Cluster Operator to deploy a Kafka Connect cluster to your OpenShift cluster. OpenShift also supports Kafka Connect Source-to-Image (S2I) clusters.

A Kafka Connect cluster is implemented as a Deployment with a configurable number of workers. The Kafka Connect REST API is available on port 8083, as the <connect-cluster-name>-connect-api service.
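A minimal KafkaConnect resource could look like the sketch below. The resource name, Kafka version and bootstrap address are assumptions chosen for illustration, and the example file shipped with AMQ Streams may contain additional settings such as TLS. With this name, the REST API service described above would be my-connect-cluster-connect-api.

```yaml
# Illustrative KafkaConnect resource; names and addresses are placeholders.
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaConnect
metadata:
  name: my-connect-cluster
spec:
  version: 2.3.0                  # assumed Kafka version
  replicas: 1                     # number of Kafka Connect workers
  bootstrapServers: my-cluster-kafka-bootstrap:9092
```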

For more information on deploying a Kafka Connect S2I cluster on OpenShift, see Creating a container image using OpenShift builds and Source-to-Image.

2.5.1. Deploying Kafka Connect to your cluster

You can deploy a Kafka Connect cluster to your OpenShift cluster by using the Cluster Operator.

Prerequisites

- The Cluster Operator is deployed.

Procedure

- Use the oc apply command to create a KafkaConnect resource based on the kafka-connect.yaml file:

  oc apply -f examples/kafka-connect/kafka-connect.yaml

2.5.2. Extending Kafka Connect with connector plug-ins

The AMQ Streams container images for Kafka Connect include the two built-in file connectors: FileStreamSourceConnector and FileStreamSinkConnector. You can add your own connectors by:

- Creating a container image from the Kafka Connect base image (manually or using your continuous integration (CI) system, for example).

- Creating a container image using OpenShift builds and Source-to-Image (S2I), available only on OpenShift.

2.5.2.1. Creating a Docker image from the Kafka Connect base image

You can use the Kafka container image on Red Hat Container Catalog as a base image for creating your own custom image with additional connector plug-ins.

The following procedure explains how to create your custom image and add your connector plug-ins to the /opt/kafka/plugins directory. At startup, the AMQ Streams version of Kafka Connect loads any third-party connector plug-ins contained in the /opt/kafka/plugins directory.

Prerequisites

Procedure

- Create a new Dockerfile using registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0 as the base image:

  FROM registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0
  USER root:root
  COPY ./my-plugins/ /opt/kafka/plugins/
  USER jboss:jboss

- Build the container image.
- Push your custom image to your container registry.
- Point to the new container image.

  You can either:

  - Edit the KafkaConnect.spec.image property of the KafkaConnect custom resource.

    If set, this property overrides the STRIMZI_DEFAULT_KAFKA_CONNECT_IMAGE variable in the Cluster Operator.

  or

  - In the install/cluster-operator/050-Deployment-strimzi-cluster-operator.yaml file, edit the STRIMZI_DEFAULT_KAFKA_CONNECT_IMAGE variable to point to the new container image and reinstall the Cluster Operator.
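For the first of the two options in the last step, setting KafkaConnect.spec.image might look like the following sketch. The resource name and the image reference are hypothetical placeholders. Substitute the registry path of the image you pushed.

```yaml
# Illustrative fragment; the image reference is a hypothetical placeholder.
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaConnect
metadata:
  name: my-connect-cluster
spec:
  # ... other Kafka Connect settings omitted
  image: my-registry.example.com/my-org/my-connect-image:latest
```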

Additional resources

- For more information on the KafkaConnect.spec.image property, see Section 3.2.11, “Container images”.
- For more information on the STRIMZI_DEFAULT_KAFKA_CONNECT_IMAGE variable, see Section 4.1.7, “Cluster Operator Configuration”.

2.5.2.2. Creating a container image using OpenShift builds and Source-to-Image

You can use OpenShift builds and the Source-to-Image (S2I) framework to create new container images. An OpenShift build takes a builder image with S2I support, together with source code and binaries provided by the user, and uses them to build a new container image. Once built, container images are stored in OpenShift’s local container image repository and are available for use in deployments.

A Kafka Connect builder image with S2I support is provided on the Red Hat Container Catalog as part of the registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0 image. This S2I image takes your binaries (with plug-ins and connectors) and stores them in the /tmp/kafka-plugins/s2i directory. It creates a new Kafka Connect image from this directory, which can then be used with the Kafka Connect deployment. When started using the enhanced image, Kafka Connect loads any third-party plug-ins from the /tmp/kafka-plugins/s2i directory.

Procedure

- On the command line, use the oc apply command to create and deploy a Kafka Connect S2I cluster:

  oc apply -f examples/kafka-connect/kafka-connect-s2i.yaml

- Create a directory with Kafka Connect plug-ins, for example ./my-plugins/.
- Use the oc start-build command to start a new build of the image using the prepared directory:

  oc start-build my-connect-cluster-connect --from-dir ./my-plugins/

  Note: The name of the build is the same as the name of the deployed Kafka Connect cluster.

- Once the build has finished, the new image is used automatically by the Kafka Connect deployment.

2.6. Kafka Mirror Maker

The Cluster Operator deploys one or more Kafka Mirror Maker replicas to replicate data between Kafka clusters. This process is called mirroring to avoid confusion with the Kafka partitions replication concept. The Mirror Maker consumes messages from the source cluster and republishes those messages to the target cluster.

For information about example resources and the format for deploying Kafka Mirror Maker, see Kafka Mirror Maker configuration.
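To make the source and target roles concrete, a KafkaMirrorMaker resource might be shaped like the sketch below. The cluster addresses, group ID, Kafka version and whitelist pattern are illustrative assumptions, not values taken from the shipped example file.

```yaml
# Illustrative KafkaMirrorMaker resource; all names are placeholders.
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaMirrorMaker
metadata:
  name: my-mirror-maker
spec:
  version: 2.3.0                  # assumed Kafka version
  replicas: 1
  consumer:                       # reads from the source cluster
    bootstrapServers: my-source-cluster-kafka-bootstrap:9092
    groupId: my-source-group-id
  producer:                       # republishes to the target cluster
    bootstrapServers: my-target-cluster-kafka-bootstrap:9092
  whitelist: ".*"                 # regular expression selecting topics to mirror
```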

2.6.1. Deploying Kafka Mirror Maker

Prerequisites

- Before deploying Kafka Mirror Maker, the Cluster Operator must be deployed.

Procedure

- Create a Kafka Mirror Maker cluster from the command line:

  oc apply -f examples/kafka-mirror-maker/kafka-mirror-maker.yaml

Additional resources

- For more information about deploying the Cluster Operator, see Section 2.3, “Cluster Operator”.

2.7. Kafka Bridge

The Cluster Operator deploys one or more Kafka Bridge replicas to send data between Kafka clusters and clients via an HTTP API.

For information about example resources and the format for deploying Kafka Bridge, see Kafka Bridge configuration.
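As a rough sketch, a KafkaBridge resource could look like the following. The API version shown is an assumption based on the Strimzi release this product is built on, and the name, bootstrap address and port are placeholders, so check the kafka-bridge.yaml example file for the exact form.

```yaml
# Illustrative KafkaBridge resource; apiVersion and values are assumptions.
apiVersion: kafka.strimzi.io/v1alpha1
kind: KafkaBridge
metadata:
  name: my-bridge
spec:
  replicas: 1
  bootstrapServers: my-cluster-kafka-bootstrap:9092
  http:
    port: 8080                    # port on which the HTTP API listens
```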

2.7.1. Deploying Kafka Bridge to your OpenShift cluster

You can deploy a Kafka Bridge cluster to your OpenShift cluster by using the Cluster Operator.

Prerequisites

- The Cluster Operator is deployed.

Procedure

- Use the oc apply command to create a KafkaBridge resource based on the kafka-bridge.yaml file:

  oc apply -f examples/kafka-bridge/kafka-bridge.yaml

2.8. Deploying example clients

Prerequisites

- An existing Kafka cluster for the client to connect to.

Procedure

- Deploy the producer.

  Use oc run:

  oc run kafka-producer -ti --image=registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0 --rm=true --restart=Never -- bin/kafka-console-producer.sh --broker-list cluster-name-kafka-bootstrap:9092 --topic my-topic

- Type your message into the console where the producer is running.
- Press Enter to send the message.
- Deploy the consumer.

  Use oc run:

  oc run kafka-consumer -ti --image=registry.redhat.io/amq7/amq-streams-kafka-23:1.3.0 --rm=true --restart=Never -- bin/kafka-console-consumer.sh --bootstrap-server cluster-name-kafka-bootstrap:9092 --topic my-topic --from-beginning

- Confirm that you see the incoming messages in the consumer console.

2.9. Topic Operator

The Topic Operator is responsible for managing Kafka topics within a Kafka cluster running within an OpenShift cluster.

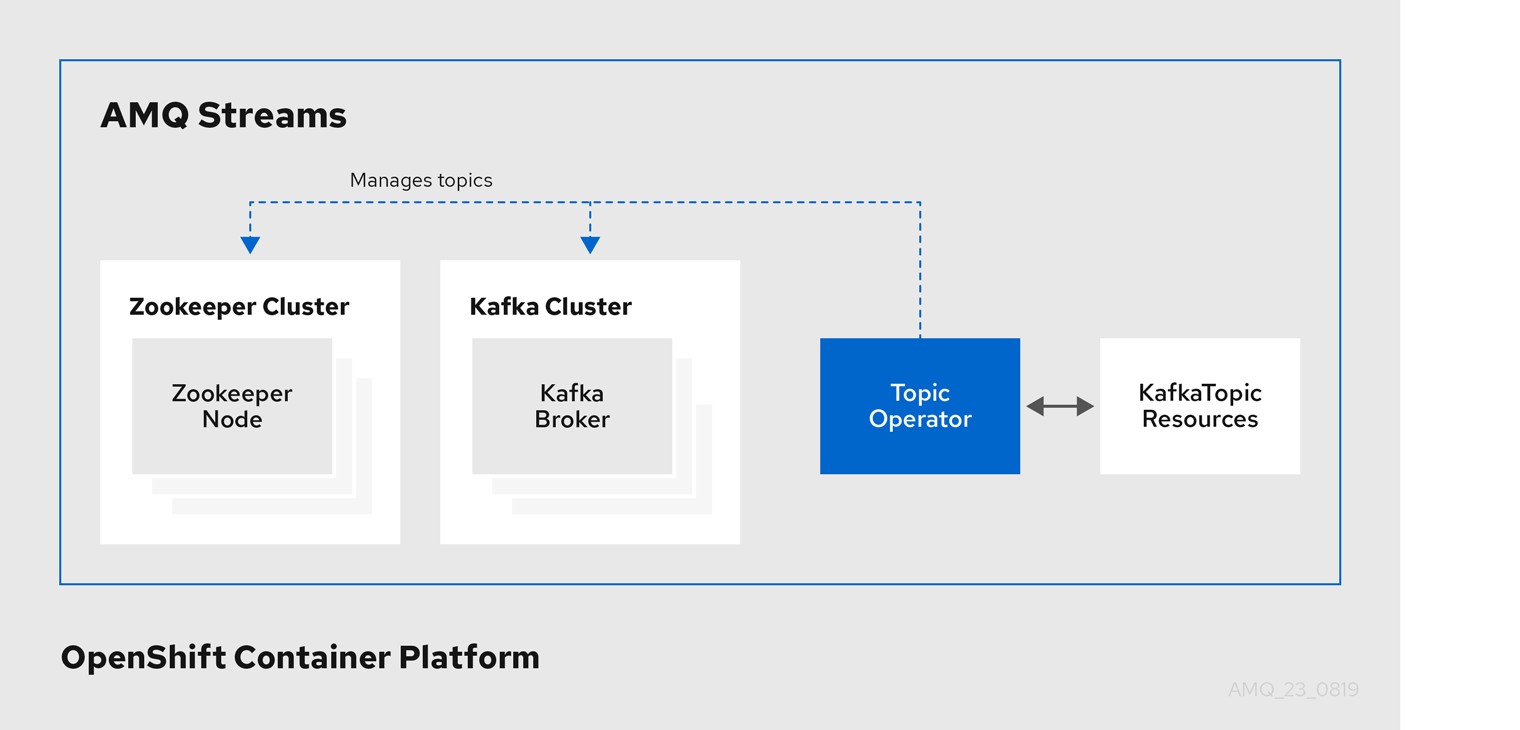

2.9.1. Overview of the Topic Operator component

The Topic Operator provides a way of managing topics in a Kafka cluster via OpenShift resources.

Example architecture for the Topic Operator

The role of the Topic Operator is to keep a set of KafkaTopic OpenShift resources describing Kafka topics in sync with corresponding Kafka topics.

Specifically, if a KafkaTopic is:

- Created, the operator will create the topic it describes

- Deleted, the operator will delete the topic it describes

- Changed, the operator will update the topic it describes

And also, in the other direction, if a topic is:

- Created within the Kafka cluster, the operator will create a KafkaTopic describing it
- Deleted from the Kafka cluster, the operator will delete the KafkaTopic describing it
- Changed in the Kafka cluster, the operator will update the KafkaTopic describing it

This allows you to declare a KafkaTopic as part of your application’s deployment and the Topic Operator will take care of creating the topic for you. Your application just needs to deal with producing or consuming from the necessary topics.

If the topic is reconfigured or reassigned to different Kafka nodes, the KafkaTopic will always be up to date.

For more details about creating, modifying and deleting topics, see Chapter 5, Using the Topic Operator.

2.9.2. Deploying the Topic Operator using the Cluster Operator

This procedure describes how to deploy the Topic Operator using the Cluster Operator. If you want to use the Topic Operator with a Kafka cluster that is not managed by AMQ Streams, you must deploy the Topic Operator as a standalone component. For more information, see Section 4.2.6, “Deploying the standalone Topic Operator”.

Prerequisites

- A running Cluster Operator

- A Kafka resource to be created or updated

Procedure

- Ensure that the Kafka.spec.entityOperator object exists in the Kafka resource. This configures the Entity Operator.

- Configure the Topic Operator using the fields described in Section C.52, “EntityTopicOperatorSpec schema reference”.

- Create or update the Kafka resource in OpenShift.

  Use oc apply:

      oc apply -f your-file
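For orientation, the relevant part of a Kafka resource might resemble the fragment below. This is a sketch, not a complete resource: the cluster name and namespace are hypothetical, the kafka and zookeeper sections are omitted, and the two topicOperator fields shown are only a small sample of what the EntityTopicOperatorSpec schema accepts.

```yaml
# Fragment of a Kafka resource enabling the Topic Operator (placeholders throughout)
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for Strimzi 0.14.x
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
spec:
  # kafka and zookeeper sections omitted for brevity; a real resource requires them
  entityOperator:
    topicOperator:
      watchedNamespace: my-topic-namespace   # hypothetical namespace watched for KafkaTopic resources
      reconciliationIntervalSeconds: 60      # example interval between periodic reconciliations
```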

Additional resources

- For more information about deploying the Cluster Operator, see Section 2.3, “Cluster Operator”.

- For more information about deploying the Entity Operator, see Section 3.1.11, “Entity Operator”.

- For more information about the Kafka.spec.entityOperator object used to configure the Topic Operator when deployed by the Cluster Operator, see Section C.51, “EntityOperatorSpec schema reference”.

2.10. User Operator

The User Operator is responsible for managing Kafka users within a Kafka cluster running within an OpenShift cluster.

2.10.1. Overview of the User Operator component

The User Operator manages Kafka users for a Kafka cluster by watching for KafkaUser resources that describe Kafka users and ensuring that they are configured properly in the Kafka cluster. For example:

- If a KafkaUser is created, the User Operator will create the user it describes

- If a KafkaUser is deleted, the User Operator will delete the user it describes

- If a KafkaUser is changed, the User Operator will update the user it describes

Unlike the Topic Operator, the User Operator does not sync any changes from the Kafka cluster back to the OpenShift resources. Kafka topics might be created by applications directly in Kafka, but users are not expected to be managed directly in the Kafka cluster in parallel with the User Operator, so this reverse synchronization should not be needed.

The User Operator allows you to declare a KafkaUser as part of your application’s deployment. When the user is created, the user credentials will be created in a Secret. Your application needs to use the user and its credentials for authentication and to produce or consume messages.

In addition to managing credentials for authentication, the User Operator also manages authorization rules by including a description of the user’s rights in the KafkaUser declaration.
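A KafkaUser declaration that combines authentication and authorization might look like the following sketch. The user, cluster, and topic names are hypothetical, the apiVersion is assumed to match Strimzi 0.14.x, and the single ACL rule is only an example of the kind of rights that can be described.

```yaml
# Minimal sketch of a KafkaUser resource (all names are placeholders)
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for Strimzi 0.14.x
kind: KafkaUser
metadata:
  name: my-user                        # hypothetical user name
  labels:
    strimzi.io/cluster: my-cluster     # hypothetical name of the Kafka cluster the user belongs to
spec:
  authentication:
    type: tls                          # credentials are generated and stored in a Secret
  authorization:
    type: simple
    acls:
      - resource:
          type: topic
          name: my-topic               # hypothetical topic the rule applies to
          patternType: literal
        operation: Read                # allow the user to consume from the topic
        host: "*"
```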

2.10.2. Deploying the User Operator using the Cluster Operator

Prerequisites

- A running Cluster Operator

- A Kafka resource to be created or updated

Procedure

- Edit the Kafka resource, ensuring it has a Kafka.spec.entityOperator.userOperator object that configures the User Operator how you want.

- Create or update the Kafka resource in OpenShift.

  This can be done using oc apply:

      oc apply -f your-file
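As a rough guide, the userOperator object sits next to any topicOperator object under Kafka.spec.entityOperator. The fragment below is a sketch with hypothetical names; the kafka and zookeeper sections are omitted, and the fields shown are examples rather than a required set.

```yaml
# Fragment of a Kafka resource enabling the User Operator (placeholders throughout)
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for Strimzi 0.14.x
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
spec:
  # kafka and zookeeper sections omitted for brevity; a real resource requires them
  entityOperator:
    userOperator:
      watchedNamespace: my-user-namespace    # hypothetical namespace watched for KafkaUser resources
      reconciliationIntervalSeconds: 60      # example interval between periodic reconciliations
```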

Additional resources

- For more information about deploying the Cluster Operator, see Section 2.3, “Cluster Operator”.

- For more information about the Kafka.spec.entityOperator object used to configure the User Operator when deployed by the Cluster Operator, see EntityOperatorSpec schema reference.

2.11. Strimzi Administrators

AMQ Streams includes several custom resources. By default, permission to create, edit, and delete these resources is limited to OpenShift cluster administrators. If you want to allow non-cluster administrators to manage AMQ Streams resources, you must assign them the Strimzi Administrator role.

2.11.1. Designating Strimzi Administrators

Prerequisites

- AMQ Streams CustomResourceDefinitions are installed.

Procedure

- Create the strimzi-admin cluster role in OpenShift.

  Use oc apply:

      oc apply -f install/strimzi-admin

- Assign the strimzi-admin ClusterRole to one or more existing users in the OpenShift cluster.

  Use oc create:

      oc create clusterrolebinding strimzi-admin --clusterrole=strimzi-admin --user=user1 --user=user2
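If you prefer to keep the binding in version control rather than create it imperatively, a declarative manifest along the following lines should be roughly equivalent to the oc create clusterrolebinding command. This is a sketch using the standard Kubernetes RBAC API; user1 and user2 are the same placeholder user names used in the command.

```yaml
# Declarative sketch of the strimzi-admin binding (user names are placeholders)
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: strimzi-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: strimzi-admin                  # the cluster role created in the previous step
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: user1
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: user2
```

A file like this can be applied with oc apply -f in the same way as the other resources in this chapter.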

2.12. Container images

Container images for AMQ Streams are available in the Red Hat Container Catalog. The installation YAML files provided by AMQ Streams will pull the images directly from the Red Hat Container Catalog.

If you do not have access to the Red Hat Container Catalog or want to use your own container repository:

- Pull all container images listed here

- Push them into your own registry

- Update the image names in the installation YAML files

Each Kafka version supported for the release has a separate image.

| Container image | Namespace/Repository | Description |
|---|---|---|
| Kafka | | AMQ Streams image for running Kafka, including: |
| Operator | | AMQ Streams image for running the operators: |
| Kafka Bridge | | AMQ Streams image for running the AMQ Streams Kafka Bridge |