Chapter 8. Background

Ceph monitors maintain a "master copy" of the cluster map, which means a Ceph client can determine the location of all Ceph monitors and Ceph OSDs just by connecting to one Ceph monitor and retrieving a current cluster map. Ceph clients must connect to a Ceph monitor before they can read from or write to Ceph OSDs. With a current copy of the cluster map and the CRUSH algorithm, a Ceph client can compute the location of any object. Because it can compute object locations, a Ceph client talks directly to Ceph OSDs, which is central to Ceph’s high scalability and performance.
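The idea that a client computes an object's location from the cluster map, rather than asking a central lookup service, can be illustrated with a small sketch. This is not CRUSH: it substitutes a simple highest-random-weight (rendezvous) hash as a stand-in, and the `cluster_map` layout, the `locate` function, and the replica count are all hypothetical names chosen for illustration.

```python
from __future__ import annotations

import hashlib


def _score(object_name: str, osd_id: int) -> int:
    """Deterministic pseudo-random score for an (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def locate(object_name: str, cluster_map: dict, replicas: int = 3) -> list[int]:
    """Pick OSDs for an object using only the map and a hash.

    Stand-in for CRUSH (assumption): rank every OSD marked "up" by its
    score for this object and keep the top `replicas` entries.
    """
    up_osds = [osd for osd, state in cluster_map["osds"].items() if state == "up"]
    ranked = sorted(up_osds, key=lambda osd: _score(object_name, osd), reverse=True)
    return ranked[:replicas]


# Hypothetical cluster map as a client might hold it after contacting a monitor.
cluster_map = {"epoch": 42, "osds": {0: "up", 1: "up", 2: "down", 3: "up", 4: "up"}}

placement = locate("my-object", cluster_map)
print(placement)
```

Any client holding the same map computes the same placement for the same object name, so no per-object lookup is needed once the map has been retrieved.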

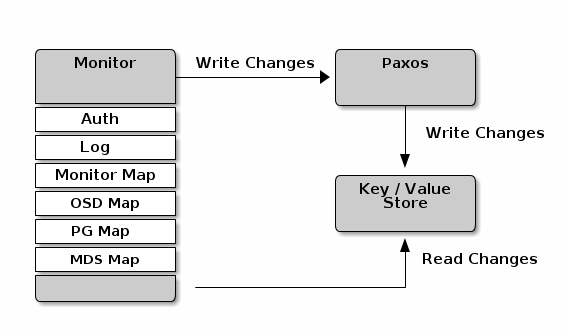

The primary role of the Ceph monitor is to maintain a master copy of the cluster map. Ceph monitors also provide authentication and logging services. Ceph monitors write all changes in the monitor services to a single Paxos instance, and Paxos writes the changes to a key/value store for strong consistency. Ceph monitors can query the most recent version of the cluster map during sync operations. Ceph monitors leverage the key/value store’s snapshots and iterators (using leveldb) to perform store-wide synchronization.

8.1. Cluster Maps

The cluster map is a composite of maps, including the monitor map, the OSD map, and the placement group map. The cluster map tracks a number of important things: which processes are in the Red Hat Ceph Storage cluster; which of those processes are up and running or down; whether the placement groups are active or inactive, and clean or in some other state; and other details that reflect the current state of the cluster, such as the total amount of storage space and the amount of storage used.

When there is a significant change in the state of the cluster (e.g., a Ceph OSD goes down or a placement group falls into a degraded state), the cluster map is updated to reflect the current state of the cluster. The Ceph monitor also maintains a history of the prior states of the cluster. The monitor map, OSD map, and placement group map each maintain a history of their map versions. We call each version an "epoch."

When operating your Red Hat Ceph Storage cluster, keeping track of these states is an important part of your system administration duties.

8.2. Monitor Quorum

A cluster will run fine with a single monitor; however, a single monitor is a single point of failure. To ensure high availability in a production Ceph Storage cluster, you should run Ceph with multiple monitors so that the failure of a single monitor does not bring down your entire cluster.

When a Ceph Storage cluster runs multiple Ceph monitors for high availability, Ceph monitors use Paxos to establish consensus about the master cluster map. Consensus requires that a majority of the monitors be running to form a quorum (e.g., 1 out of 1; 2 out of 3; 3 out of 5; 4 out of 6; etc.).
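The majority rule above reduces to simple integer arithmetic. The following is a minimal sketch; the function names `quorum_size`, `has_quorum`, and `tolerated_failures` are illustrative and are not part of any Ceph API.

```python
def quorum_size(monitor_count: int) -> int:
    """Smallest number of monitors that forms a strict majority."""
    if monitor_count < 1:
        raise ValueError("a cluster needs at least one monitor")
    return monitor_count // 2 + 1


def has_quorum(monitor_count: int, monitors_up: int) -> bool:
    """True when the running monitors are a majority of all monitors."""
    return monitors_up >= quorum_size(monitor_count)


def tolerated_failures(monitor_count: int) -> int:
    """How many monitors can fail while a majority remains."""
    return monitor_count - quorum_size(monitor_count)


for n in (1, 3, 5, 6):
    print(n, quorum_size(n), tolerated_failures(n))
# 1 1 0
# 3 2 1
# 5 3 2
# 6 4 2
```

Note that six monitors tolerate the same number of failures as five, which is why odd monitor counts are the usual choice.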

8.3. Consistency

When you add monitor settings to your Ceph configuration file, you need to be aware of some of the architectural aspects of Ceph monitors. Ceph imposes strict consistency requirements for a Ceph monitor when discovering another Ceph monitor within the cluster. Whereas Ceph clients and other Ceph daemons use the Ceph configuration file to discover monitors, monitors discover each other using the monitor map (monmap), not the Ceph configuration file.

A Ceph monitor always refers to the local copy of the monmap when discovering other Ceph monitors in the Red Hat Ceph Storage cluster. Using the monmap instead of the Ceph configuration file avoids errors that could break the cluster (e.g., typos in ceph.conf when specifying a monitor address or port). Since monitors use monmaps for discovery and they share monmaps with clients and other Ceph daemons, the monmap provides monitors with a strict guarantee that their consensus is valid.

Strict consistency also applies to updates to the monmap. As with any other updates on the Ceph monitor, changes to the monmap always run through a distributed consensus algorithm called Paxos. The Ceph monitors must agree on each update to the monmap, such as adding or removing a Ceph monitor, to ensure that each monitor in the quorum has the same version of the monmap. Updates to the monmap are incremental, so Ceph monitors have the latest agreed-upon version and a set of previous versions. Maintaining a history enables a Ceph monitor that has an older version of the monmap to catch up with the current state of the Red Hat Ceph Storage cluster.
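The way incremental updates and a version history let a stale monitor catch up can be sketched as follows. This is a simplified model, not Ceph's internal data structures: the `MonMap` and `Incremental` classes, their fields, and the example addresses are all hypothetical, and the consensus step itself is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class MonMap:
    epoch: int = 0
    monitors: dict = field(default_factory=dict)  # monitor ID -> address


@dataclass(frozen=True)
class Incremental:
    epoch: int      # epoch the map reaches after this change is applied
    op: str         # "add" or "remove"
    name: str       # monitor ID
    addr: str = ""  # only used by "add"


def apply(monmap: MonMap, inc: Incremental) -> None:
    """Apply one agreed-upon change; epochs must advance one at a time."""
    if inc.epoch != monmap.epoch + 1:
        raise ValueError(f"cannot apply epoch {inc.epoch} to map at epoch {monmap.epoch}")
    if inc.op == "add":
        monmap.monitors[inc.name] = inc.addr
    elif inc.op == "remove":
        monmap.monitors.pop(inc.name, None)
    else:
        raise ValueError(f"unknown operation: {inc.op}")
    monmap.epoch = inc.epoch


def catch_up(monmap: MonMap, history: list) -> MonMap:
    """Replay every change newer than the local epoch, in order."""
    for inc in sorted(history, key=lambda i: i.epoch):
        if inc.epoch > monmap.epoch:
            apply(monmap, inc)
    return monmap


# History of changes the quorum has agreed on (illustrative addresses).
history = [
    Incremental(1, "add", "a", "192.0.2.10:6789"),
    Incremental(2, "add", "b", "192.0.2.11:6789"),
    Incremental(3, "add", "c", "192.0.2.12:6789"),
    Incremental(4, "remove", "b"),
]

# A monitor that last saw epoch 2 replays epochs 3 and 4.
stale = MonMap(epoch=2, monitors={"a": "192.0.2.10:6789", "b": "192.0.2.11:6789"})
catch_up(stale, history)
print(stale.epoch, sorted(stale.monitors))
```

Because every change carries the epoch it produces, a lagging monitor only needs the changes after its own epoch rather than a full copy of the map.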

If Ceph monitors discovered each other through the Ceph configuration file instead of through the monmap, it would introduce additional risks because the Ceph configuration files aren’t updated and distributed automatically. Ceph monitors might inadvertently use an older Ceph configuration file, fail to recognize a Ceph monitor, fall out of a quorum, or develop a situation where Paxos isn’t able to determine the current state of the system accurately.

8.4. Bootstrapping Monitors

In most configuration and deployment cases, tools that deploy Ceph (e.g., ceph-deploy) can help bootstrap the Ceph monitors by generating a monitor map for you. A Ceph monitor requires a few explicit settings:

- Filesystem ID: The fsid is the unique identifier for your object store. Since you can run multiple clusters on the same hardware, you must specify the unique ID of the object store when bootstrapping a monitor. Deployment tools usually do this for you (e.g., ceph-deploy can call a tool like uuidgen), but you may specify the fsid manually too.

- Monitor ID: A monitor ID is a unique ID assigned to each monitor within the cluster. It is an alphanumeric value, and by convention the identifier usually follows an alphabetical increment (e.g., a, b, etc.). This can be set in a Ceph configuration file (e.g., [mon.a], [mon.b], etc.), by a deployment tool, or using the ceph command line.

- Keys: The monitor must have secret keys. A deployment tool such as ceph-deploy usually does this for you, but you may perform this step manually too.
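As an illustration of the first two settings, the sketch below generates a random UUID for the fsid (the same kind of value uuidgen produces) and renders a configuration fragment with one section per monitor ID. The helper name `render_mon_config`, the host names, and the addresses are hypothetical; the `host` and `mon addr` keys are assumed here to follow the legacy per-monitor configuration style, so check them against the documentation for your release. Generating the monitor's secret keys is not shown.

```python
import uuid


def render_mon_config(fsid: str, monitors: dict) -> str:
    """Render a ceph.conf-style fragment.

    `monitors` maps a monitor ID (e.g. "a") to a (host, address) pair.
    """
    lines = ["[global]", f"fsid = {fsid}", ""]
    for mon_id, (host, addr) in monitors.items():
        lines += [f"[mon.{mon_id}]", f"host = {host}", f"mon addr = {addr}", ""]
    return "\n".join(lines)


# A random (version 4) UUID serves as the cluster's unique identifier.
fsid = str(uuid.uuid4())

# Illustrative monitor IDs following the alphabetical convention.
monitors = {
    "a": ("mon-host-1", "192.0.2.10:6789"),
    "b": ("mon-host-2", "192.0.2.11:6789"),
    "c": ("mon-host-3", "192.0.2.12:6789"),
}

config_text = render_mon_config(fsid, monitors)
print(config_text)
```

Deployment tools normally produce these values for you; the sketch only shows how the fsid and monitor IDs relate to what ends up in the configuration file.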