Block Device Guide

Managing, creating, configuring, and using Red Hat Ceph Storage Block Devices

Abstract

Chapter 1. Introduction to Ceph block devices

A block is a fixed-length sequence of bytes, for example, a 512-byte block of data. Many blocks combined into a single file can be used as a storage device that you can read from and write to. Block-based storage interfaces are the most common way to store data on rotating media such as:

- Hard drives

- CD/DVD discs

- Floppy disks

- Traditional 9-track tapes

The ubiquity of block device interfaces makes a virtual block device an ideal candidate for interacting with a mass data storage system like Red Hat Ceph Storage.

Ceph block devices are thin-provisioned, resizable, and store data striped over multiple Object Storage Devices (OSDs) in a Ceph storage cluster. Ceph block devices are also known as Reliable Autonomic Distributed Object Store (RADOS) Block Devices (RBDs). Ceph block devices leverage RADOS capabilities such as:

- Snapshots

- Replication

- Data consistency

Ceph block devices interact with OSDs by using the librbd library.

Ceph block devices deliver high performance with infinite scalability to Kernel Virtual Machines (KVMs), such as Quick Emulator (QEMU), and cloud-based computing systems, like OpenStack, that rely on the libvirt and QEMU utilities to integrate with Ceph block devices. You can use the same storage cluster to operate the Ceph Object Gateway and Ceph block devices simultaneously.

To use Ceph block devices, you must have access to a running Ceph storage cluster. For details on installing a Red Hat Ceph Storage cluster, see the Red Hat Ceph Storage Installation Guide.

Chapter 2. Ceph block devices

As a storage administrator, being familiar with Ceph’s block device commands can help you effectively manage the Red Hat Ceph Storage cluster. You can create and manage block device pools and images, and enable or disable the various features of Ceph block devices.

Prerequisites

- A running Red Hat Ceph Storage cluster.

2.1. Displaying the command help

Display command and subcommand online help from the command-line interface.

The -h option still displays help for all available commands.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

Use the rbd help command to display help for a particular rbd command and its subcommand:

Syntax

rbd help COMMAND SUBCOMMAND

To display help for the snap list command:

Example

[root@rbd-client ~]# rbd help snap list

2.2. Creating a block device pool

Before using the block device client, ensure a pool for rbd exists, is enabled and initialized.

You MUST create a pool first before you can specify it as a source.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

To create an rbd pool, execute the following:

Syntax

ceph osd pool create POOL_NAME PG_NUM
ceph osd pool application enable POOL_NAME rbd
rbd pool init -p POOL_NAME

Example

[root@rbd-client ~]# ceph osd pool create pool1
[root@rbd-client ~]# ceph osd pool application enable pool1 rbd
[root@rbd-client ~]# rbd pool init -p pool1

Additional Resources

- See the Pools chapter in the Red Hat Ceph Storage Storage Strategies Guide for additional details.

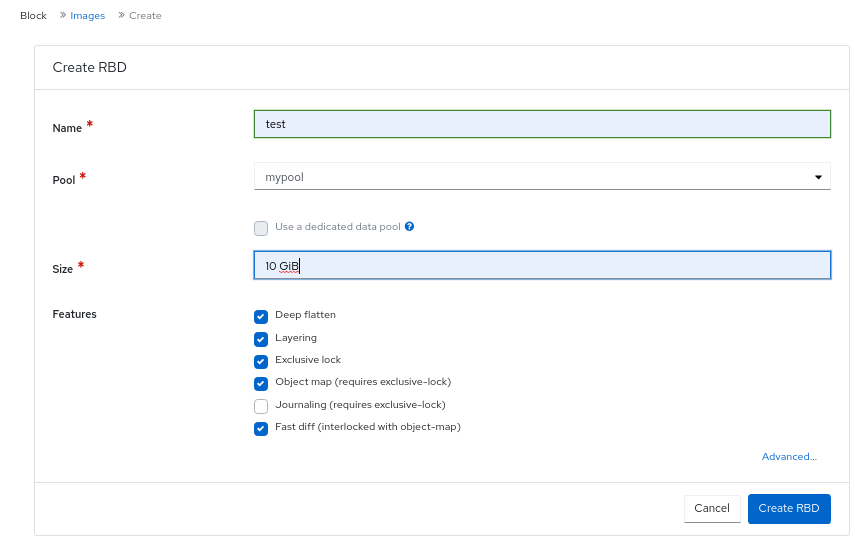

2.3. Creating a block device image

Before adding a block device to a node, create an image for it in the Ceph storage cluster.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

To create a block device image, execute the following command:

Syntax

rbd create IMAGE_NAME --size MEGABYTES --pool POOL_NAME

Example

[root@rbd-client ~]# rbd create image1 --size 1024 --pool pool1

This example creates a 1 GB image named image1 that stores information in a pool named pool1.

Note

Ensure the pool exists before creating an image.
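Because the --size value is given in megabytes, sizes planned in gigabytes need converting first. The following is a minimal sketch that only prints the resulting command rather than running it. It assumes binary units (1 GB = 1024 MB), consistent with the example above, and the helper names gb_to_mb and print_rbd_create are hypothetical, not part of Ceph.

```shell
# Convert a size in gigabytes to the megabyte value expected by --size.
# Assumes binary units (1 GB = 1024 MB), as in the 1024 -> 1 GB example.
gb_to_mb() {
    echo $(( $1 * 1024 ))
}

# Print (do not run) an rbd create command for an image of SIZE_GB gigabytes.
# print_rbd_create is a hypothetical helper name.
print_rbd_create() {
    image="$1"; pool="$2"; size_gb="$3"
    printf 'rbd create %s --size %s --pool %s\n' \
        "$image" "$(gb_to_mb "$size_gb")" "$pool"
}

print_rbd_create image1 pool1 1    # prints: rbd create image1 --size 1024 --pool pool1
print_rbd_create image2 pool1 10   # prints: rbd create image2 --size 10240 --pool pool1
```

Printing the command first makes it easy to review the computed size before running it on a client node.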

Additional Resources

- See the Creating a block device pool section in the Red Hat Ceph Storage Block Device Guide for additional details.

2.4. Listing the block device images

List the block device images.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

To list block devices in the rbd pool, execute the following command:

Note

rbd is the default pool name.

Example

[root@rbd-client ~]# rbd ls

To list block devices in a specific pool:

Syntax

rbd ls POOL_NAME

Example

[root@rbd-client ~]# rbd ls pool1

2.5. Retrieving the block device image information

Retrieve information on the block device image.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

To retrieve information from a particular image in the default rbd pool, run the following command:

Syntax

rbd --image IMAGE_NAME info

Example

[root@rbd-client ~]# rbd --image image1 info

To retrieve information from an image within a pool:

Syntax

rbd --image IMAGE_NAME -p POOL_NAME info

Example

[root@rbd-client ~]# rbd --image image1 -p pool1 info

2.6. Resizing a block device image

Ceph block device images are thin-provisioned. They do not actually use any physical storage until you begin saving data to them. However, they do have a maximum capacity that you set with the --size option.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

To increase or decrease the maximum size of a Ceph block device image for the default rbd pool:

Syntax

rbd resize --image IMAGE_NAME --size SIZE

Example

[root@rbd-client ~]# rbd resize --image image1 --size 1024

To increase or decrease the maximum size of a Ceph block device image for a specific pool:

Syntax

rbd resize --image POOL_NAME/IMAGE_NAME --size SIZE

Example

[root@rbd-client ~]# rbd resize --image pool1/image1 --size 1024
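Growing and shrinking an image are not symmetric in practice. In upstream rbd, reducing the size requires the additional --allow-shrink flag, because data beyond the new size is discarded. The sketch below only prints the command it would use. It assumes that flag is available in your release, and print_rbd_resize is a hypothetical helper name whose current-size argument you would supply after inspecting the image.

```shell
# Print (do not run) an rbd resize command. Sizes are in megabytes.
# print_rbd_resize is a hypothetical helper; CURRENT_MB would normally
# come from inspecting the image first.
print_rbd_resize() {
    image="$1"; current_mb="$2"; new_mb="$3"
    if [ "$new_mb" -lt "$current_mb" ]; then
        # Shrinking discards data beyond the new size, so rbd asks for
        # an explicit opt-in flag (assumed to be --allow-shrink).
        printf 'rbd resize --image %s --size %s --allow-shrink\n' "$image" "$new_mb"
    else
        printf 'rbd resize --image %s --size %s\n' "$image" "$new_mb"
    fi
}

print_rbd_resize pool1/image1 1024 2048   # grow
print_rbd_resize pool1/image1 2048 1024   # shrink
```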

2.7. Removing a block device image

Remove a block device image.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

To remove a block device from the default rbd pool:

Syntax

rbd rm IMAGE_NAME

Example

[root@rbd-client ~]# rbd rm image1

To remove a block device from a specific pool:

Syntax

rbd rm IMAGE_NAME -p POOL_NAME

Example

[root@rbd-client ~]# rbd rm image1 -p pool1

2.8. Moving a block device image to the trash

RADOS Block Device (RBD) images can be moved to the trash using the rbd trash command. This command provides more options than the rbd rm command.

Once an image is moved to the trash, it can be removed from the trash at a later time. This helps to avoid accidental deletion.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

To move an image to the trash, execute the following:

Syntax

rbd trash mv [POOL_NAME/]IMAGE_NAME

Example

[root@rbd-client ~]# rbd trash mv pool1/image1

Once an image is in the trash, a unique image ID is assigned.

Note

You need this image ID to specify the image later if you need to use any of the trash options.

Execute the rbd trash list POOL_NAME command for a list of IDs of the images in the trash. This command also returns the image’s pre-deletion name. In addition, there is an optional --image-id argument that can be used with rbd info and rbd snap commands. Use --image-id with the rbd info command to see the properties of an image in the trash, and with rbd snap to remove an image’s snapshots from the trash.

To remove an image from the trash, execute the following:

Syntax

rbd trash rm [POOL_NAME/]IMAGE_ID

Example

[root@rbd-client ~]# rbd trash rm pool1/d35ed01706a0

Important

Once an image is removed from the trash, it cannot be restored.

Execute the rbd trash restore command to restore an image that is still in the trash:

Syntax

rbd trash restore [POOL_NAME/]IMAGE_ID

Example

[root@rbd-client ~]# rbd trash restore pool1/d35ed01706a0

To remove all expired images from trash:

Syntax

rbd trash purge POOL_NAME

Example

[root@rbd-client ~]# rbd trash purge pool1
Removing images: 100% complete...done.
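Since the trash commands take an image ID rather than a name, it can help to look the ID up from the trash listing. The following is a minimal sketch that assumes each line of the rbd trash list output has the form "ID NAME"; verify that layout on your release. The helper name trash_id_for and the second sample ID are made up for illustration.

```shell
# Look up the trash ID for an image name.
# Reads `rbd trash list POOL_NAME` style output on stdin; the two-column
# "ID NAME" layout is an assumption about that output.
trash_id_for() {
    awk -v name="$1" '$2 == name { print $1; found = 1 } END { exit !found }'
}

# Sample input standing in for real command output (second ID is invented).
sample='d35ed01706a0 image1
a1b2c3d4e5f6 image2'

printf '%s\n' "$sample" | trash_id_for image1   # prints: d35ed01706a0
```

On a client node, the same function could be fed from the real listing, for example `rbd trash list pool1 | trash_id_for image1`. The function exits with a non-zero status when no image with that name is found.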

2.9. Defining an automatic trash purge schedule

You can schedule periodic trash purge operations on a pool.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

To add a trash purge schedule, execute:

Syntax

rbd trash purge schedule add --pool POOL_NAME INTERVAL

Example

[ceph: root@host01 /]# rbd trash purge schedule add --pool pool1 10m

To list the trash purge schedule, execute:

Syntax

rbd trash purge schedule ls --pool POOL_NAME

Example

[ceph: root@host01 /]# rbd trash purge schedule ls --pool pool1
every 10m

To check the status of the trash purge schedule, execute:

Example

[ceph: root@host01 /]# rbd trash purge schedule status
POOL   NAMESPACE  SCHEDULE TIME
pool1             2021-08-02 11:50:00

To remove the trash purge schedule, execute:

Syntax

rbd trash purge schedule remove --pool POOL_NAME INTERVAL

Example

[ceph: root@host01 /]# rbd trash purge schedule remove --pool pool1 10m
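When choosing an INTERVAL, it can be useful to sanity-check the value before passing it to the schedule commands. The sketch below converts an interval to minutes. It assumes the suffixes m, h, and d for minutes, hours, and days, extrapolated from the 10m example, and interval_to_minutes is a hypothetical helper name.

```shell
# Convert a schedule interval such as 10m, 2h, or 1d to minutes.
# The m/h/d suffixes are an assumption based on the 10m example.
interval_to_minutes() {
    value="${1%?}"         # everything except the last character
    unit="${1#"$value"}"   # the last character
    case "$value" in
        # Reject empty, non-numeric, and zero or zero-padded values.
        ''|*[!0-9]*|0*) echo "invalid interval: $1" >&2; return 1 ;;
    esac
    case "$unit" in
        m) echo "$value" ;;
        h) echo $(( value * 60 )) ;;
        d) echo $(( value * 1440 )) ;;
        *) echo "invalid interval: $1" >&2; return 1 ;;
    esac
}

interval_to_minutes 10m   # prints: 10
interval_to_minutes 2h    # prints: 120
```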

2.10. Enabling and disabling image features

The block device image features, such as fast-diff, exclusive-lock, object-map, or deep-flatten, are enabled by default. You can enable or disable these image features on already existing images.

The deep-flatten feature can only be disabled on already existing images; it cannot be enabled on them. To use deep-flatten, enable it when creating images.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

Retrieve information from a particular image in a pool:

Syntax

rbd --image POOL_NAME/IMAGE_NAME info

Example

[ceph: root@host01 /]# rbd --image pool1/image1 info

Enable a feature:

Syntax

rbd feature enable POOL_NAME/IMAGE_NAME FEATURE_NAME

To enable the exclusive-lock feature on the image1 image in the pool1 pool:

Example

[ceph: root@host01 /]# rbd feature enable pool1/image1 exclusive-lock

Important

If you enable the fast-diff and object-map features, then rebuild the object map:

Syntax

rbd object-map rebuild POOL_NAME/IMAGE_NAME

Disable a feature:

Syntax

rbd feature disable POOL_NAME/IMAGE_NAME FEATURE_NAME

To disable the fast-diff feature on the image1 image in the pool1 pool:

Example

[ceph: root@host01 /]# rbd feature disable pool1/image1 fast-diff
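Before enabling or disabling a feature, the image information retrieved in the first step can be checked for the feature's current state. The following is a minimal sketch that assumes the rbd info output contains a line of the form "features: NAME, NAME, ...". The helper names has_feature and sample_info are hypothetical, and the sample text stands in for real command output.

```shell
# Succeed if FEATURE appears on the "features:" line of rbd info output
# read from stdin. The line layout is an assumption about that output.
has_feature() {
    awk -v want="$1" '
        $1 == "features:" {
            sub(/^[ \t]*features:[ \t]*/, "")
            n = split($0, list, /,[ \t]*/)
            for (i = 1; i <= n; i++) if (list[i] == want) found = 1
        }
        END { exit !found }'
}

# Sample text standing in for `rbd --image pool1/image1 info` output.
sample_info() {
    printf '\tsize 1 GiB in 256 objects\n'
    printf '\tfeatures: layering, exclusive-lock\n'
}

if sample_info | has_feature exclusive-lock; then
    echo "exclusive-lock already enabled"
fi
if ! sample_info | has_feature object-map; then
    # Print the command instead of running it.
    echo "rbd feature enable pool1/image1 object-map"
fi
```

Feature names are compared exactly, so a partial name such as lock does not match exclusive-lock.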

2.11. Working with image metadata

Ceph supports adding custom image metadata as key-value pairs. The pairs do not have any strict format.

Also, by using metadata, you can set the RADOS Block Device (RBD) configuration parameters for particular images.

Use the rbd image-meta commands to work with metadata.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the client node.

Procedure

To set a new metadata key-value pair:

Syntax

rbd image-meta set POOL_NAME/IMAGE_NAME KEY VALUE

Example

[ceph: root@host01 /]# rbd image-meta set pool1/image1 last_update 2021-06-06

This example sets the last_update key to the 2021-06-06 value on the image1 image in the pool1 pool.

To view the value of a key:

Syntax

rbd image-meta get POOL_NAME/IMAGE_NAME KEY

Example

[ceph: root@host01 /]# rbd image-meta get pool1/image1 last_update

This example views the value of the last_update key.

To show all metadata on an image:

Syntax

rbd image-meta list POOL_NAME/IMAGE_NAME

Example

[ceph: root@host01 /]# rbd image-meta list pool1/image1

This example lists the metadata set for the image1 image in the pool1 pool.

To remove a metadata key-value pair:

Syntax

rbd image-meta remove POOL_NAME/IMAGE_NAME KEY

Example

[ceph: root@host01 /]# rbd image-meta remove pool1/image1 last_update

This example removes the last_update key-value pair from the image1 image in the pool1 pool.

To override the RBD image configuration settings set in the Ceph configuration file for a particular image:

Syntax

rbd config image set POOL_NAME/IMAGE_NAME PARAMETER VALUE

Example

[ceph: root@host01 /]# rbd config image set pool1/image1 rbd_cache false

This example disables the RBD cache for the image1 image in the pool1 pool.

2.12. Moving images between pools

You can move RADOS Block Device (RBD) images between different pools within the same cluster.

During this process, the source image is copied to the target image with all snapshot history and, optionally, with a link to the source image’s parent to help preserve sparseness. The source image is read-only and the target image is writable. The target image is linked to the source image while the migration is in progress.

You can safely run this process in the background while the new target image is in use. However, stop all clients using the source image before the preparation step to ensure that clients using the image are updated to point to the new target image.

The krbd kernel module does not support live migration at this time.

Prerequisites

- Stop all clients that use the source image.

- Root-level access to the client node.

Procedure

Prepare for migration by creating the new target image that cross-links the source and target images:

Syntax

rbd migration prepare SOURCE_IMAGE TARGET_IMAGE

Replace:

- SOURCE_IMAGE with the name of the image to be moved. Use the POOL/IMAGE_NAME format.

- TARGET_IMAGE with the name of the new image. Use the POOL/IMAGE_NAME format.

Example

[root@rbd-client ~]# rbd migration prepare pool1/image1 pool2/image2

Verify the state of the new target image, which is expected to be prepared:

Syntax

rbd status TARGET_IMAGE

Optionally, restart the clients using the new target image name.

Copy the source image to target image:

Syntax

rbd migration execute TARGET_IMAGE

Example

[root@rbd-client ~]# rbd migration execute pool2/image2

Ensure that the migration is completed.

Commit the migration by removing the cross-link between the source and target images. This also removes the source image:

Syntax

rbd migration commit TARGET_IMAGE

Example

[root@rbd-client ~]# rbd migration commit pool2/image2

If the source image is a parent of one or more clones, use the --force option after ensuring that the clone images are not in use:

Example

[root@rbd-client ~]# rbd migration commit pool2/image2 --force

If you did not restart the clients after the preparation step, restart them using the new target image name.
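The prepare, execute, and commit steps always run in that order and, after preparation, operate on the target image. The sketch below prints the three commands for a given source and target so the plan can be reviewed before anything is run. The helper name print_migration_plan is hypothetical, and the check for the POOL/IMAGE_NAME format is only a simple test for a slash.

```shell
# Print (do not run) the three migration steps in order.
# print_migration_plan is a hypothetical helper name.
print_migration_plan() {
    src="$1"; dst="$2"
    case "$src" in
        */*) ;;
        *) echo "source must use the POOL/IMAGE_NAME format" >&2; return 1 ;;
    esac
    case "$dst" in
        */*) ;;
        *) echo "target must use the POOL/IMAGE_NAME format" >&2; return 1 ;;
    esac
    printf 'rbd migration prepare %s %s\n' "$src" "$dst"
    printf 'rbd migration execute %s\n' "$dst"
    printf 'rbd migration commit %s\n' "$dst"
}

print_migration_plan pool1/image1 pool2/image2
```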

2.13. Migrating pools

You can migrate or copy RADOS Block Device (RBD) images.

During this process, the source image is exported and then imported.

Use this migration process if the workload contains only RBD images. No rados cppool images can exist in the workload. If rados cppool images exist in the workload, see Migrating a pool in the Storage Strategies Guide.

While running the export and import commands, be sure that there is no active I/O in the related RBD images. It is recommended to take production down during this pool migration time.

Prerequisites

- Stop all active I/O in the RBD images which are being exported and imported.

- Root-level access to the client node.

Procedure

Migrate the volume.

Syntax

rbd export volumes/VOLUME_NAME - | rbd import --image-format 2 - volumes_new/VOLUME_NAME

Example

[root@rbd-client ~]# rbd export volumes/volume-3c4c63e3-3208-436f-9585-fee4e2a3de16 - | rbd import --image-format 2 - volumes_new/volume-3c4c63e3-3208-436f-9585-fee4e2a3de16

If using the local drive for import or export is necessary, the commands can be divided, first exporting to a local drive and then importing the files to a new pool.

Syntax

rbd export volumes/VOLUME_NAME FILE_PATH
rbd import --image-format 2 FILE_PATH volumes_new/VOLUME_NAME

Example

[root@rbd-client ~]# rbd export volumes/volume-3c4c63e3-3208-436f-9585-fee4e2a3de16 <path of export file>
[root@rbd-client ~]# rbd import --image-format 2 <path> volumes_new/volume-3c4c63e3-3208-436f-9585-fee4e2a3de16
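When a pool holds many volumes, the same export and import pipeline is repeated once per volume. The following is a minimal sketch that prints one pipeline per volume name read from standard input, without running anything. The helper name print_pool_copy and the volume names volume-a and volume-b are placeholders for illustration.

```shell
# Print (do not run) one export/import pipeline per volume name read
# from stdin. The two arguments are the source and destination pools.
# print_pool_copy is a hypothetical helper name.
print_pool_copy() {
    src_pool="$1"; dst_pool="$2"
    while IFS= read -r volume; do
        [ -n "$volume" ] || continue   # skip blank lines
        printf 'rbd export %s/%s - | rbd import --image-format 2 - %s/%s\n' \
            "$src_pool" "$volume" "$dst_pool" "$volume"
    done
}

printf '%s\n' volume-a volume-b | print_pool_copy volumes volumes_new
```

On a client node, the list of names could come from `rbd ls volumes`. Review the printed commands and confirm that I/O is stopped before running them.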

2.14. The rbdmap service

The systemd unit file, rbdmap.service, is included with the ceph-common package. The rbdmap.service unit executes the rbdmap shell script.

This script automates the mapping and unmapping of RADOS Block Devices (RBD) for one or more RBD images. The script can be run manually at any time, but the typical use case is to automatically mount RBD images at boot time, and unmount at shutdown. The script takes a single argument, which can be either map, for mounting, or unmap, for unmounting RBD images. The script parses a configuration file. The default is /etc/ceph/rbdmap, but it can be overridden using an environment variable called RBDMAPFILE. Each line of the configuration file corresponds to an RBD image.

The format of the configuration file is as follows:

IMAGE_SPEC RBD_OPTS

Where IMAGE_SPEC specifies the POOL_NAME/IMAGE_NAME, or just the IMAGE_NAME, in which case the POOL_NAME defaults to rbd. The RBD_OPTS is an optional list of options to be passed to the underlying rbd map command. These parameters and their values should be specified as a comma-separated string:

OPT1=VAL1,OPT2=VAL2,…,OPT_N=VAL_N

This will cause the script to issue an rbd map command like the following:

Syntax

rbd map POOL_NAME/IMAGE_NAME --OPT1 VAL1 --OPT2 VAL2

For options and values that contain commas or equals signs, a simple apostrophe can be used to prevent replacing them.

When successful, the rbd map operation maps the image to a /dev/rbdX device, at which point a udev rule is triggered to create a friendly device name symlink, for example, /dev/rbd/POOL_NAME/IMAGE_NAME, pointing to the real mapped device. For mounting or unmounting to succeed, the friendly device name must have a corresponding entry in the /etc/fstab file. When writing /etc/fstab entries for RBD images, it is a good idea to specify the noauto or nofail mount option. This prevents the init system from trying to mount the device too early, before the device exists.
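The translation from a configuration line to an rbd map command described in this section can be sketched as follows. This is a simplified illustration, not the rbdmap script itself. It assumes every option has the OPT=VAL form and does not handle values quoted with apostrophes, and rbdmap_line_to_cmd is a hypothetical helper name.

```shell
# Turn one rbdmap configuration line into the rbd map command it implies.
# Simplified sketch: assumes OPT=VAL pairs and does not handle quoted
# values that themselves contain commas or equals signs.
# The body runs in a subshell so the IFS and globbing changes stay local.
rbdmap_line_to_cmd() (
    line="$1"
    spec="${line%% *}"                       # IMAGE_SPEC: text before the first space
    opts=""
    case "$line" in *" "*) opts="${line#* }" ;; esac
    case "$spec" in */*) ;; *) spec="rbd/$spec" ;; esac   # pool defaults to rbd
    cmd="rbd map $spec"
    set -f                                   # no filename expansion while splitting
    IFS=','
    for pair in $opts; do
        cmd="$cmd --${pair%%=*} ${pair#*=}"  # OPT=VAL becomes --OPT VAL
    done
    printf '%s\n' "$cmd"
)

rbdmap_line_to_cmd 'foo/bar1 id=admin,keyring=/etc/ceph/ceph.client.admin.keyring'
# prints: rbd map foo/bar1 --id admin --keyring /etc/ceph/ceph.client.admin.keyring
```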

2.15. Configuring the rbdmap service

Configure the rbdmap service to automatically map and mount RADOS Block Devices (RBD) at boot time, and to unmap and unmount them at shutdown.

Prerequisites

- Root-level access to the node doing the mounting.

- Installation of the ceph-common package.

Procedure

Open the /etc/ceph/rbdmap configuration file for editing.

Add the RBD image or images to the configuration file:

Example

foo/bar1 id=admin,keyring=/etc/ceph/ceph.client.admin.keyring
foo/bar2 id=admin,keyring=/etc/ceph/ceph.client.admin.keyring,options='lock_on_read,queue_depth=1024'

Save changes to the configuration file.

Enable the RBD mapping service:

Example

[root@client ~]# systemctl enable rbdmap.service

2.16. Persistent Write Log Cache

In a Red Hat Ceph Storage cluster, Persistent Write Log (PWL) cache provides a persistent, fault-tolerant write-back cache for librbd-based RBD clients.

PWL cache uses a log-ordered write-back design which maintains checkpoints internally so that writes that get flushed back to the cluster are always crash consistent. If the client cache is lost entirely, the disk image is still consistent but the data appears stale. You can use PWL cache with persistent memory (PMEM) or solid-state disks (SSD) as cache devices.

For PMEM, the cache mode is replica write log (RWL) and for SSD, the cache mode is SSD. Currently, PWL cache supports RWL and SSD modes and is disabled by default.

Primary benefits of PWL cache are:

- PWL cache can provide high performance when the cache is not full. The larger the cache, the longer the duration of high performance.

- PWL cache provides persistence and is not much slower than RBD cache. RBD cache is faster but volatile and cannot guarantee data order and persistence.

- In a steady state, where the cache is full, performance is affected by the number of I/Os in flight. For example, PWL can provide higher performance at low io_depth, but at high io_depth, such as when the number of I/Os is greater than 32, the performance is often worse than that in cases without cache.

Use cases for PMEM caching are:

- Unlike RBD cache, PWL cache is non-volatile and is used in scenarios where you cannot accept data loss but still need performance.

- RWL mode provides low latency. It has a stable low latency for burst I/Os and it is suitable for those scenarios with high requirements for stable low latency.

- RWL mode also has high continuous and stable performance improvement in scenarios with low I/O depth or not too much inflight I/O.

Use case for SSD caching is:

- The advantages of SSD mode are similar to RWL mode. SSD hardware is relatively cheap and popular, but its performance is slightly lower than PMEM.

2.17. Persistent write log cache limitations

When using Persistent Write Log (PWL) cache, there are several limitations that should be considered.

- The underlying implementation of persistent memory (PMEM) and solid-state disks (SSD) is different, with PMEM having higher performance. At present, PMEM can provide "persist on write" and SSD is "persist on flush or checkpoint". In future releases, these two modes will be configurable.

- When users switch frequently and open and close images repeatedly, Ceph displays poor performance. If PWL cache is enabled, the performance is worse. It is not recommended to set num_jobs in a Flexible I/O (fio) test, but instead set up multiple jobs to write different images.

2.18. Enabling persistent write log cache

You can enable persistent write log cache (PWL) on a Red Hat Ceph Storage cluster by setting the Ceph RADOS block device (RBD) rbd_persistent_cache_mode and rbd_plugins options.

The exclusive-lock feature must be enabled to enable persistent write log cache. The cache can be loaded only after the exclusive-lock is acquired. Exclusive-locks are enabled on newly created images by default unless overridden by the rbd_default_features configuration option or the --image-feature flag for the rbd create command. See the Enabling and disabling image features section for more details on the exclusive-lock feature.

Set the persistent write log cache options at the host level by using the ceph config set command. Set the persistent write log cache options at the pool or image level by using the rbd config pool set or the rbd config image set commands.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the monitor node.

- The exclusive-lock feature is enabled.

- Client-side disks are persistent memory (PMEM) or solid-state disks (SSD).

- RBD cache is disabled.

Procedure

Enable PWL cache:

At the host level, use the ceph config set command:

Syntax

ceph config set client rbd_persistent_cache_mode CACHE_MODE
ceph config set client rbd_plugins pwl_cache

Replace CACHE_MODE with rwl or ssd.

Example

[ceph: root@host01 /]# ceph config set client rbd_persistent_cache_mode ssd
[ceph: root@host01 /]# ceph config set client rbd_plugins pwl_cache

At the pool level, use the rbd config pool set command:

Syntax

rbd config pool set POOL_NAME rbd_persistent_cache_mode CACHE_MODE
rbd config pool set POOL_NAME rbd_plugins pwl_cache

Replace CACHE_MODE with rwl or ssd.

Example

[ceph: root@host01 /]# rbd config pool set pool1 rbd_persistent_cache_mode ssd
[ceph: root@host01 /]# rbd config pool set pool1 rbd_plugins pwl_cache

At the image level, use the rbd config image set command:

Syntax

rbd config image set POOL_NAME/IMAGE_NAME rbd_persistent_cache_mode CACHE_MODE
rbd config image set POOL_NAME/IMAGE_NAME rbd_plugins pwl_cache

Replace CACHE_MODE with rwl or ssd.

Example

[ceph: root@host01 /]# rbd config image set pool1/image1 rbd_persistent_cache_mode ssd
[ceph: root@host01 /]# rbd config image set pool1/image1 rbd_plugins pwl_cache

Optional: Set the additional RBD options at the host, the pool, or the image level:

Syntax

rbd_persistent_cache_mode CACHE_MODE
rbd_plugins pwl_cache
rbd_persistent_cache_path /PATH_TO_CACHE_DIRECTORY
rbd_persistent_cache_size PERSISTENT_CACHE_SIZE

- rbd_persistent_cache_path - A file folder to cache data. When using the rwl mode, the folder must have direct access (DAX) enabled to avoid performance degradation.

- rbd_persistent_cache_size - The cache size per image, with a minimum cache size of 1 GB. The larger the cache size, the better the performance.

Setting additional RBD options for rwl mode:

Example

rbd_cache false
rbd_persistent_cache_mode rwl
rbd_plugins pwl_cache
rbd_persistent_cache_path /mnt/pmem/cache/
rbd_persistent_cache_size 1073741824

Setting additional RBD options for ssd mode:

Example

rbd_cache false
rbd_persistent_cache_mode ssd
rbd_plugins pwl_cache
rbd_persistent_cache_path /mnt/nvme/cache
rbd_persistent_cache_size 1073741824
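The host, pool, and image levels differ only in the command prefix, so the same set of options can be turned into commands for any of them. The following Python sketch is illustrative only and is not part of Ceph: the helper name pwl_commands and its parameters are hypothetical, and it assumes the path and size options are set with the same commands as the cache mode. It only builds command strings and does not run them.

```python
MIN_CACHE_SIZE = 1073741824  # 1 GB minimum cache size, in bytes


def pwl_commands(mode, path, size, pool=None, image=None):
    """Return the configuration commands that enable the PWL cache.

    Hypothetical helper: with no pool it targets the host (client) level,
    with a pool the pool level, and with a pool and image the image level.
    """
    if mode not in ("rwl", "ssd"):
        raise ValueError("CACHE_MODE must be rwl or ssd")
    if size < MIN_CACHE_SIZE:
        raise ValueError("cache size must be at least 1 GB")
    if image is not None and pool is None:
        raise ValueError("an image name requires a pool name")

    if pool is None:
        prefix = "ceph config set client"
    elif image is None:
        prefix = f"rbd config pool set {pool}"
    else:
        prefix = f"rbd config image set {pool}/{image}"

    options = [
        ("rbd_persistent_cache_mode", mode),
        ("rbd_plugins", "pwl_cache"),
        ("rbd_persistent_cache_path", path),
        ("rbd_persistent_cache_size", str(size)),
    ]
    return [f"{prefix} {key} {value}" for key, value in options]


if __name__ == "__main__":
    for line in pwl_commands("ssd", "/mnt/nvme/cache", 1073741824, pool="pool1"):
        print(line)
```

Rejecting an unknown mode or an undersized cache before any command is issued keeps a partially applied configuration from reaching the cluster.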

2.19. Checking persistent write log cache status

You can check the status of the Persistent Write Log (PWL) cache. The cache is used while an exclusive lock is held; when the exclusive lock is released, the PWL cache is closed. The cache status shows the cache size, location, type, and other cache-related information. The cache status is updated when the cache is opened and closed.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the monitor node.

- A running process with PWL cache enabled.

Procedure

View the PWL cache status:

Syntax

rbd status POOL_NAME/IMAGE_NAME

Example

2.20. Flushing persistent write log cache

You can flush the cache file with the rbd command, specifying persistent-cache flush, the pool name, and the image name, before discarding the persistent write log (PWL) cache. The flush command explicitly writes cache files back to the OSDs. If the cache is interrupted or the application dies unexpectedly, you can manually flush all the entries in the cache to the OSDs and then invalidate the cache.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the monitor node.

- PWL cache is enabled.

Procedure

Flush the PWL cache:

Syntax

rbd persistent-cache flush POOL_NAME/IMAGE_NAME

Example

[ceph: root@host01 /]# rbd persistent-cache flush pool1/image1

2.21. Discarding persistent write log cache

You might need to manually discard the Persistent Write Log (PWL) cache, for example, if the data in the cache has expired. You can discard a cache file for an image by using the rbd persistent-cache invalidate command. The command removes the cache metadata for the specified image, disables the cache feature, and deletes the local cache file, if it exists.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the monitor node.

- PWL cache is enabled.

Procedure

Discard PWL cache:

Syntax

rbd persistent-cache invalidate POOL_NAME/IMAGE_NAME

Example

[ceph: root@host01 /]# rbd persistent-cache invalidate pool1/image1
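Because invalidating the cache deletes the local cache file, it is usually safer to flush first. The following Python sketch shows that ordering. The function name discard_pwl_cache and its run hook are hypothetical; only the two rbd persistent-cache commands come from this guide.

```python
import subprocess


def discard_pwl_cache(pool, image, run=None):
    """Flush the PWL cache of an image, then invalidate it.

    `run` is a hypothetical hook that receives each command as a list of
    arguments. By default the command is executed with subprocess, and a
    failing flush raises before the invalidate step is reached.
    """
    if run is None:
        run = lambda argv: subprocess.run(argv, check=True)
    spec = f"{pool}/{image}"
    # Write the cached entries back to the OSDs first.
    run(["rbd", "persistent-cache", "flush", spec])
    # Then remove the cache metadata and the local cache file.
    run(["rbd", "persistent-cache", "invalidate", spec])


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    discard_pwl_cache("pool1", "image1", run=lambda argv: print(" ".join(argv)))
```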

2.22. Monitoring performance of Ceph Block Devices using the command-line interface

Starting with Red Hat Ceph Storage 4.1, a performance metrics gathering framework is integrated within the Ceph OSD and Manager components. This framework provides a built-in method to generate and process performance metrics upon which other Ceph Block Device performance monitoring solutions are built.

A new Ceph Manager module, rbd_support, aggregates the performance metrics when enabled. The rbd command has two new actions: iotop and iostat.

The initial use of these actions can take around 30 seconds to populate the data fields.

Prerequisites

- User-level access to a Ceph Monitor node.

Procedure

Ensure the rbd_support Ceph Manager module is enabled:

Example

To display an "iotop"-style view of images:

Example

[user@mon ~]$ rbd perf image iotop

Note

The write-ops, read-ops, write-bytes, read-bytes, write-latency, and read-latency columns can be sorted dynamically by using the right and left arrow keys.

To display an "iostat"-style view of images:

Example

[user@mon ~]$ rbd perf image iostat

Note

The output from this command can be in JSON or XML format, and then can be sorted using other command-line tools.

Chapter 3. Live migration of images

As a storage administrator, you can live-migrate RBD images between different pools, or even within the same pool, in the same storage cluster.

You can migrate between different image formats and layouts and even from external data sources. When live migration is initiated, the source image is deep copied to the destination image, pulling all snapshot history while preserving the sparse allocation of data where possible.

Images with encryption support live migration.

Currently, the krbd kernel module does not support live migration.

Prerequisites

- A running Red Hat Ceph Storage cluster.

3.1. The live migration process

By default, during the live migration of RBD images within the same storage cluster, the source image is marked read-only. All clients redirect the Input/Output (I/O) to the new target image. Additionally, this mode can preserve the link to the source image’s parent to preserve sparseness, or it can flatten the image during the migration to remove the dependency on the source image’s parent. You can use the live migration process in an import-only mode, where the source image remains unmodified. You can link the target image to an external data source, such as a backup file, an HTTP(S) file, an S3 object, or an NBD export. The live migration copy process can safely run in the background while the new target image is being used.

The live migration process consists of three steps:

Prepare Migration: The first step is to create a new target image and link the target image to the source image. If the import-only mode is not configured, the source image is also linked to the target image and marked read-only. Attempts to read uninitialized data extents within the target image internally redirect the read to the source image, and writes to uninitialized extents within the target image internally deep copy the overlapping source image extents to the target image.

Execute Migration: This is a background operation that deep-copies all initialized blocks from the source image to the target. You can run this step when clients are actively using the new target image.

Finish Migration: You can commit or abort the migration once the background migration process is completed. Committing the migration removes the cross-links between the source and target images, and removes the source image if not configured in the import-only mode. Aborting the migration removes the cross-links and removes the target image.
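The three steps can be read as a small state machine. The following Python sketch is an illustrative model only, not how Ceph tracks migrations internally: the state names none, prepared, executed, and committed are hypothetical labels, and the model assumes that commit is accepted only after the execute step.

```python
# Allowed actions per state. An abort is possible at any point before commit.
TRANSITIONS = {
    "none": {"prepare": "prepared"},
    "prepared": {"execute": "executed", "abort": "none"},
    "executed": {"commit": "committed", "abort": "none"},
    "committed": {},
}


def next_state(state, action):
    """Return the state reached by applying a migration action."""
    try:
        return TRANSITIONS[state][action]
    except KeyError:
        raise ValueError(f"cannot {action} a migration in state {state!r}") from None


if __name__ == "__main__":
    state = "none"
    for action in ("prepare", "execute", "commit"):
        state = next_state(state, action)
        print(f"{action} -> {state}")
```

The model makes two constraints explicit: a committed migration can no longer be aborted, and an abort returns the image to its state before the prepare step.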

3.2. Formats

Native, qcow and raw formats are currently supported.

You can use the native format to describe a native RBD image within a Red Hat Ceph Storage cluster as the source image. The source-spec JSON document is encoded as:

Syntax

Note that the native format does not include the stream object since it utilizes native Ceph operations. For example, to import from the image rbd/ns1/image1@snap1, the source-spec could be encoded as:

Example

You can use the qcow format to describe a QEMU copy-on-write (QCOW) block device. Both the QCOW v1 and v2 formats are currently supported with the exception of advanced features such as compression, encryption, backing files, and external data files. You can link the qcow format data to any supported stream source:

Example

You can use the raw format to describe a thick-provisioned, raw block device export, that is, one created with rbd export --export-format 1 SNAP_SPEC. You can link the raw format data to any supported stream source:

Example

The inclusion of the snapshots array is optional and currently only supports thick-provisioned raw snapshot exports.
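As a rough illustration of the three formats, the following Python sketch builds source-spec documents as dictionaries and serializes them to JSON. The field names (type, pool_name, pool_namespace, image_name, snap_name, stream, snapshots, name) are assumptions based on the upstream Ceph live-migration documentation, not confirmed by this guide, and the function names are hypothetical. Verify the output against your Ceph release before use.

```python
import json


def native_source_spec(pool_name, image_name, snap_name, pool_namespace=None):
    """Build a source-spec for a native RBD image (field names assumed)."""
    spec = {"type": "native", "pool_name": pool_name}
    if pool_namespace is not None:
        spec["pool_namespace"] = pool_namespace
    spec["image_name"] = image_name
    spec["snap_name"] = snap_name
    return spec


def stream_source_spec(fmt, stream, snapshots=None):
    """Build a qcow or raw source-spec around an already built stream object.

    `snapshots` is an optional list of (name, stream) pairs and is accepted
    for the raw format only, matching the restriction described above.
    """
    if fmt not in ("qcow", "raw"):
        raise ValueError("format must be qcow or raw")
    spec = {"type": fmt, "stream": stream}
    if snapshots:
        if fmt != "raw":
            raise ValueError("snapshots are only supported for the raw format")
        spec["snapshots"] = [
            {"type": "raw", "name": name, "stream": snap_stream}
            for name, snap_stream in snapshots
        ]
    return spec


if __name__ == "__main__":
    # The image rbd/ns1/image1@snap1 used as the native example above.
    print(json.dumps(native_source_spec("rbd", "image1", "snap1", "ns1"), indent=4))
```

The native builder takes no stream argument, which mirrors the point that the native format uses native Ceph operations instead of a stream object.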

3.3. Streams

Currently, file, HTTP, S3 and NBD streams are supported.

File stream

You can use the file stream to import from a locally accessible POSIX file source.

Syntax

For example, to import a raw-format image from a file located at /mnt/image.raw, the source-spec JSON file is:

Example

HTTP stream

You can use the HTTP stream to import from a remote HTTP or HTTPS web server.

Syntax

For example, to import a raw-format image from a file located at http://download.ceph.com/image.raw, the source-spec JSON file is:

Example

S3 stream

You can use the s3 stream to import from a remote S3 bucket.

Syntax

For example, to import a raw-format image from a file located at http://s3.ceph.com/bucket/image.raw, its source-spec JSON is encoded as follows:

Example

NBD stream

You can use the NBD stream to import from a remote NBD export.

Syntax

For example, to import a raw-format image from an NBD export located at nbd://nbd.ceph.com/image.raw, its source-spec JSON is encoded as follows:

Example

The nbd-uri parameter must follow the NBD URI specification. The default NBD port is 10809.
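The four stream types differ only in the parameters of the stream object. The following Python sketch builds each stream and wraps it in a raw-format source-spec. The key names (file_path, url, access_key, secret_key, uri) are assumptions taken from the upstream Ceph documentation rather than from this guide, the function names are hypothetical, and the S3 credentials shown are placeholders.

```python
import json


def file_stream(file_path):
    """Stream for a locally accessible POSIX file."""
    return {"type": "file", "file_path": file_path}


def http_stream(url):
    """Stream for a remote HTTP or HTTPS web server."""
    return {"type": "http", "url": url}


def s3_stream(url, access_key, secret_key):
    """Stream for an object in a remote S3 bucket."""
    return {
        "type": "s3",
        "url": url,
        "access_key": access_key,
        "secret_key": secret_key,
    }


def nbd_stream(uri):
    """Stream for a remote NBD export, addressed by an NBD URI."""
    return {"type": "nbd", "uri": uri}


def raw_source_spec(stream):
    """Wrap a stream in a raw-format source-spec."""
    return {"type": "raw", "stream": stream}


if __name__ == "__main__":
    examples = [
        file_stream("/mnt/image.raw"),
        http_stream("http://download.ceph.com/image.raw"),
        s3_stream("http://s3.ceph.com/bucket/image.raw", "ACCESS_KEY", "SECRET_KEY"),
        nbd_stream("nbd://nbd.ceph.com/image.raw"),
    ]
    for stream in examples:
        print(json.dumps(raw_source_spec(stream), indent=4))
```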

3.4. Preparing the live migration process

You can prepare the default live migration process for RBD images within the same Red Hat Ceph Storage cluster. The rbd migration prepare command accepts all the same layout options as the rbd create command, which allows changes to the otherwise immutable on-disk layout of the image. If you only want to change the on-disk layout and want to keep the original image name, skip the migration_target argument. All clients using the source image must be stopped before preparing a live migration. The prepare step fails if it finds any running clients with the image open in read/write mode. You can restart the clients using the new target image once the prepare step is completed.

You cannot restart the clients using the source image as it will result in a failure.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Two block device pools.

- One block device image.

Cloned images are implicitly flattened during importing (using --import-only parameter) and these images are disassociated from any parent chain in the source cluster when migrated to another Ceph cluster.

Procedure

Optional: If you are migrating the image from one Ceph cluster to another, copy the ceph.conf and ceph.client.admin.keyring of both the clusters to a common node. This ensures the client node has access to both clusters for migration.

Example

Copying ceph.conf and ceph.client.admin.keyring of cluster c1 to a common node:

Copying ceph.conf and ceph.client.admin.keyring of cluster c2 to a common node:

Prepare the live migration within the storage cluster:

Syntax

rbd migration prepare SOURCE_POOL_NAME/SOURCE_IMAGE_NAME TARGET_POOL_NAME/SOURCE_IMAGE_NAME

Example

[ceph: root@rbd-client /]# rbd migration prepare sourcepool1/sourceimage1 targetpool1/sourceimage1

OR

If you want to rename the source image:

Syntax

rbd migration prepare SOURCE_POOL_NAME/SOURCE_IMAGE_NAME TARGET_POOL_NAME/NEW_SOURCE_IMAGE_NAME

Example

[ceph: root@rbd-client /]# rbd migration prepare sourcepool1/sourceimage1 targetpool1/newsourceimage1

In the example, newsourceimage1 is the renamed source image.

You can check the current state of the live migration process with the following command:

Syntax

rbd status TARGET_POOL_NAME/SOURCE_IMAGE_NAME

Example

Important

During the migration process, the source image is moved into the RBD trash to prevent mistaken usage.

Example

[ceph: root@rbd-client /]# rbd info sourceimage1
rbd: error opening image sourceimage1: (2) No such file or directory

Example

[ceph: root@rbd-client /]# rbd trash ls --all sourcepool1
adb429cb769a sourceimage1

3.5. Preparing import-only migration

You can initiate the import-only live migration process by running the rbd migration prepare command with the --import-only option and either the --source-spec or the --source-spec-path option, passing a JSON document that describes how to access the source image data either directly on the command line or from a file.
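The two ways of passing the source-spec can be sketched as follows. This Python helper is hypothetical and only assembles the argument list for rbd migration prepare; it does not run the command. The options --import-only, --source-spec, and --source-spec-path are the ones named in this section.

```python
import json


def import_only_prepare_argv(target, spec=None, spec_path=None):
    """Build the argument list for an import-only prepare step.

    Pass exactly one of `spec` (a dictionary that is sent inline as JSON)
    or `spec_path` (the path of a JSON file that describes the source).
    """
    if (spec is None) == (spec_path is None):
        raise ValueError("pass exactly one of spec or spec_path")
    argv = ["rbd", "migration", "prepare", "--import-only"]
    if spec is not None:
        # Inline JSON document on the command line.
        argv += ["--source-spec", json.dumps(spec)]
    else:
        # JSON document read from a file.
        argv += ["--source-spec-path", spec_path]
    argv.append(target)
    return argv


if __name__ == "__main__":
    print(import_only_prepare_argv("targetpool1", spec_path="testspec.json"))
```

Building the command as a list of arguments, rather than as one string, avoids shell quoting problems with the inline JSON document.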

Prerequisites

- A running Red Hat Ceph Storage cluster.

- A bucket and an S3 object are created.

Procedure

Create a JSON file:

Example

Prepare the import-only live migration process:

Syntax

rbd migration prepare --import-only --source-spec-path "JSON_FILE" TARGET_POOL_NAME

Example

[ceph: root@rbd-client /]# rbd migration prepare --import-only --source-spec-path "testspec.json" targetpool1

Note

The rbd migration prepare command accepts all the same image options as the rbd create command.

You can check the status of the import-only live migration:

Example

The following example shows migrating data from Ceph cluster c1 to Ceph cluster c2:

Example

3.6. Executing the live migration process

After you prepare for the live migration, you must copy the image blocks from the source image to the target image.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Two block device pools.

- One block device image.

Procedure

Execute the live migration:

Syntax

rbd migration execute TARGET_POOL_NAME/SOURCE_IMAGE_NAME

Example

[ceph: root@rbd-client /]# rbd migration execute targetpool1/sourceimage1
Image migration: 100% complete...done.

You can check the feedback on the progress of the migration block deep-copy process:

Syntax

rbd status TARGET_POOL_NAME/SOURCE_IMAGE_NAME

Example

3.7. Committing the live migration process

You can commit the migration once the live migration has completed deep-copying all the data blocks from the source image to the target image.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Two block device pools.

- One block device image.

Procedure

Commit the migration, once deep-copying is completed:

Syntax

rbd migration commit TARGET_POOL_NAME/SOURCE_IMAGE_NAME

Example

[ceph: root@rbd-client /]# rbd migration commit targetpool1/sourceimage1
Commit image migration: 100% complete...done.

Verification

Committing the live migration removes the cross-links between the source and target images, and also removes the source image from the source pool:

Example

[ceph: root@rbd-client /]# rbd trash list --all sourcepool1

3.8. Aborting the live migration process

You can revert the live migration process. Aborting live migration reverts the prepare and execute steps.

You can abort only if you have not committed the live migration.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Two block device pools.

- One block device image.

Procedure

Abort the live migration process:

Syntax

rbd migration abort TARGET_POOL_NAME/SOURCE_IMAGE_NAME

Example

[ceph: root@rbd-client /]# rbd migration abort targetpool1/sourceimage1
Abort image migration: 100% complete...done.

Verification

When the live migration process is aborted, the target image is deleted and access to the original source image is restored in the source pool:

Example

[ceph: root@rbd-client /]# rbd ls sourcepool1
sourceimage1

Chapter 4. Image encryption

As a storage administrator, you can set a secret key that is used to encrypt a specific RBD image. Image level encryption is handled internally by RBD clients.

The krbd module does not support image level encryption.

You can use external tools such as dm-crypt or QEMU to encrypt an RBD image.

Prerequisites

- A running Red Hat Ceph Storage 8 cluster.

- root level permissions.

4.1. Encryption format

RBD images are not encrypted by default. You can encrypt an RBD image by formatting to one of the supported encryption formats. The format operation persists the encryption metadata to the RBD image. The encryption metadata includes information such as the encryption format and version, cipher algorithm and mode specifications, as well as the information used to secure the encryption key.

The encryption key is protected by a user-kept secret, that is, a passphrase, which is never stored as persistent data in the RBD image. The encryption format operation requires you to specify the encryption format, cipher algorithm, and mode specification as well as a passphrase. The encryption metadata is stored in the RBD image, currently as an encryption header that is written at the start of the raw image. This means that the effective image size of the encrypted image is lower than the raw image size.

Unless explicitly (re-)formatted, clones of an encrypted image are inherently encrypted using the same format and secret.

Any data written to the RBD image before formatting might become unreadable, even though it might still occupy storage resources. RBD images with the journal feature enabled cannot be encrypted.

4.2. Encryption load

By default, all RBD APIs treat encrypted RBD images the same way as unencrypted RBD images. You can read or write raw data anywhere in the image. Writing raw data into the image might risk the integrity of the encryption format. For example, the raw data could override the encryption metadata located at the beginning of the image. To safely perform encrypted Input/Output(I/O) or maintenance operations on the encrypted RBD image, an additional encryption load operation must be applied immediately after opening the image.

The encryption load operation requires you to specify the encryption format and a passphrase for unlocking the encryption key for the image itself and each of its explicitly formatted ancestor images. All I/O for the opened RBD image is encrypted or decrypted. For a cloned RBD image, this includes I/O for the parent images. The encryption key is stored in memory by the RBD client until the image is closed.

Once the encryption is loaded on the RBD image, no other encryption load or format operation can be applied. Additionally, API calls for retrieving the RBD image size and the parent overlap using the opened image context return the effective image size and the effective parent overlap respectively. The encryption is loaded automatically when mapping the RBD images as block devices through rbd-nbd.


If a clone of an encrypted image is explicitly formatted, flattening or shrinking of the cloned image ceases to be transparent since the parent data must be re-encrypted according to the cloned image format as it is copied from the parent snapshot. If encryption is not loaded before the flatten operation is issued, any parent data that was previously accessible in the cloned image might become unreadable.

If a clone of an encrypted image is explicitly formatted, the operation of shrinking the cloned image ceases to be transparent. This is because, in scenarios such as the cloned image containing snapshots or the cloned image being shrunk to a size that is not aligned with the object size, shrinking involves copying some data from the parent snapshot, similar to flattening. If encryption is not loaded before the shrink operation is issued, any parent data that was previously accessible in the cloned image might become unreadable.

4.3. Supported formats

Both Linux Unified Key Setup (LUKS) 1 and 2 are supported. The data layout is fully compliant with the LUKS specification. External LUKS compatible tools such as dm-crypt or QEMU can safely perform encrypted Input/Output (I/O) on encrypted RBD images. Additionally, you can import existing LUKS images created by external tools, by copying the raw LUKS data into the RBD image.

Currently, only Advanced Encryption Standards (AES) 128 and 256 encryption algorithms are supported. xts-plain64 is currently the only supported encryption mode.

To use the LUKS format, format the RBD image with the following command:

You need to create a file named passphrase.txt and enter a passphrase. You can randomly generate the passphrase, which might contain NULL characters. If the passphrase ends with a newline character, it is stripped off.

Syntax

rbd encryption format POOL_NAME/LUKS_IMAGE luks1|luks2 PASSPHRASE_FILE

Example

[ceph: root@host01 /]# rbd encryption format pool1/luksimage1 luks1 passphrase.bin

You can select either luks1 or luks2 encryption format.
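A randomly generated passphrase file can be produced in many ways. The following Python sketch is one hedged possibility: the function name write_passphrase_file and the 32-byte default length are assumptions, not Ceph requirements. It avoids a passphrase that ends with a newline, so that the bytes in the file and the effective passphrase are identical.

```python
import os
import secrets


def write_passphrase_file(path, nbytes=32):
    """Write a random binary passphrase to a new file and return it.

    The passphrase might contain NULL bytes, which is acceptable. A value
    that ends with a newline is rejected because the trailing newline
    would be stripped when the file is read.
    """
    passphrase = secrets.token_bytes(nbytes)
    while passphrase.endswith(b"\n"):
        passphrase = secrets.token_bytes(nbytes)
    # Create the file with owner-only permissions and refuse to overwrite
    # an existing passphrase file.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "wb") as handle:
        handle.write(passphrase)
    return passphrase
```

For example, write_passphrase_file("passphrase.bin") creates the file that the rbd encryption format example expects. Keep a secure copy of the file, because the passphrase is never stored in the RBD image.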

The encryption format operation generates a LUKS header and writes it at the start of the RBD image. A single keyslot is appended to the header. The keyslot holds a randomly generated encryption key, and is protected by the passphrase read from the passphrase file. By default, AES-256 in xts-plain64 mode, which is the current recommended mode and the default for other LUKS tools, is used. Adding or removing additional passphrases is currently not supported natively, but can be achieved using LUKS tools such as cryptsetup. The LUKS header size can vary (up to 136 MiB in LUKS2), but it is usually up to 16 MiB, depending on the version of libcryptsetup installed. For optimal performance, the encryption format sets the data offset to be aligned with the image object size. For example, expect a minimum overhead of 8 MiB if using an image configured with an 8 MiB object size.
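The overhead arithmetic can be sketched in a few lines of Python. This is an approximation under a stated assumption: the data offset is modelled as the header size rounded up to the next multiple of the object size. The real offset is chosen by the format operation and libcryptsetup and can differ. The function names are hypothetical.

```python
MIB = 1024 * 1024


def aligned_data_offset(header_size, object_size):
    """Round the header size up to the next multiple of the object size."""
    if header_size <= 0 or object_size <= 0:
        raise ValueError("sizes must be positive")
    return -(-header_size // object_size) * object_size


def effective_size(raw_size, header_size, object_size):
    """Estimate the usable size of an encrypted image, in bytes."""
    return raw_size - aligned_data_offset(header_size, object_size)


if __name__ == "__main__":
    # A 2 MiB header on an image with 8 MiB objects costs a full object.
    print(aligned_data_offset(2 * MIB, 8 * MIB) // MIB, "MiB overhead")
```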

In LUKS1, sectors, which are the minimal encryption units, are fixed at 512 bytes. LUKS2 supports larger sectors, and for better performance, the default sector size is set to the maximum of 4 KiB. Writes which are either smaller than a sector, or are not aligned to a sector start, trigger a guarded read-modify-write chain on the client, with a considerable latency penalty. A batch of such unaligned writes can lead to I/O races which further deteriorate performance. Red Hat recommends avoiding RBD encryption in cases where incoming writes cannot be guaranteed to be LUKS sector aligned.
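Whether a write avoids the read-modify-write path comes down to a simple alignment test. The following Python sketch illustrates the check. The function name is hypothetical, and the sector sizes are the ones stated above: 512 bytes for LUKS1 and 4 KiB by default for LUKS2.

```python
LUKS1_SECTOR = 512
LUKS2_SECTOR = 4096


def is_sector_aligned(offset, length, sector_size):
    """Return True if a write starts on a sector boundary and covers only
    whole sectors, so that no read-modify-write is needed."""
    if sector_size <= 0:
        raise ValueError("sector size must be positive")
    return length > 0 and offset % sector_size == 0 and length % sector_size == 0


if __name__ == "__main__":
    # The same 512-byte write is aligned for LUKS1 but not for LUKS2.
    print(is_sector_aligned(512, 512, LUKS1_SECTOR))
    print(is_sector_aligned(512, 512, LUKS2_SECTOR))
```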

To map a LUKS encrypted image, run the following command:

Syntax

rbd device map -t nbd -o encryption-format=luks1|luks2,encryption-passphrase-file=passphrase.txt POOL_NAME/LUKS_IMAGE

Example

[ceph: root@host01 /]# rbd device map -t nbd -o encryption-format=luks1,encryption-passphrase-file=passphrase.txt pool1/luksimage1

You can select either luks1 or luks2 encryption format.

For security reasons, both the encryption format and encryption load operations are CPU-intensive, and might take a few seconds to complete. For encrypted I/O, assuming AES-NI is enabled, a relatively small latency, on the order of microseconds, might be added, as well as a small increase in CPU utilization.

4.4. Adding encryption format to images and clones

Layered client-side encryption is supported. The cloned images can be encrypted with their own format and passphrase, potentially different from that of the parent image.

Add encryption format to images and clones with the rbd encryption format command. Given a LUKS2-formatted image, you can create both a LUKS2-formatted clone and a LUKS1-formatted clone.

Prerequisites

- A running Red Hat Ceph Storage cluster with Block Device (RBD) configured.

- Root-level access to the node.

Procedure

Create a LUKS2-formatted image:

Syntax

rbd create --size SIZE POOL_NAME/LUKS_IMAGE
rbd encryption format POOL_NAME/LUKS_IMAGE luks1|luks2 PASSPHRASE_FILE
rbd resize --size SIZE --encryption-passphrase-file PASSPHRASE_FILE POOL_NAME/LUKS_IMAGE

Example

[ceph: root@host01 /]# rbd create --size 50G mypool/myimage
[ceph: root@host01 /]# rbd encryption format mypool/myimage luks2 passphrase.txt
[ceph: root@host01 /]# rbd resize --size 50G --encryption-passphrase-file passphrase.txt mypool/myimage

The rbd resize command grows the image to compensate for the overhead associated with the LUKS2 header.

With the LUKS2-formatted image, create a LUKS2-formatted clone with the same effective size:

Syntax

rbd snap create POOL_NAME/IMAGE_NAME@SNAP_NAME
rbd snap protect POOL_NAME/IMAGE_NAME@SNAP_NAME
rbd clone POOL_NAME/IMAGE_NAME@SNAP_NAME POOL_NAME/CLONE_NAME
rbd encryption format POOL_NAME/CLONE_NAME luks2 CLONE_PASSPHRASE_FILE

Example

[ceph: root@host01 /]# rbd snap create mypool/myimage@snap
[ceph: root@host01 /]# rbd snap protect mypool/myimage@snap
[ceph: root@host01 /]# rbd clone mypool/myimage@snap mypool/myclone
[ceph: root@host01 /]# rbd encryption format mypool/myclone luks2 clone-passphrase.bin

With the LUKS2-formatted image, create a LUKS1-formatted clone with the same effective size:

Syntax

rbd snap create POOL_NAME/IMAGE_NAME@SNAP_NAME
rbd snap protect POOL_NAME/IMAGE_NAME@SNAP_NAME
rbd clone POOL_NAME/IMAGE_NAME@SNAP_NAME POOL_NAME/CLONE_NAME
rbd encryption format POOL_NAME/CLONE_NAME luks1 CLONE_PASSPHRASE_FILE
rbd resize --size SIZE --allow-shrink --encryption-passphrase-file CLONE_PASSPHRASE_FILE --encryption-passphrase-file PASSPHRASE_FILE POOL_NAME/CLONE_NAME

Example

[ceph: root@host01 /]# rbd snap create mypool/myimage@snap
[ceph: root@host01 /]# rbd snap protect mypool/myimage@snap
[ceph: root@host01 /]# rbd clone mypool/myimage@snap mypool/myclone
[ceph: root@host01 /]# rbd encryption format mypool/myclone luks1 clone-passphrase.bin
[ceph: root@host01 /]# rbd resize --size 50G --allow-shrink --encryption-passphrase-file clone-passphrase.bin --encryption-passphrase-file passphrase.bin mypool/myclone

Since the LUKS1 header is usually smaller than the LUKS2 header, the rbd resize command at the end shrinks the cloned image to get rid of unwanted space allowance.

With the LUKS1-formatted image, create a LUKS2-formatted clone with the same effective size:

Syntax

Example

Since the LUKS2 header is usually bigger than the LUKS1 header, the rbd resize command at the beginning temporarily grows the parent image to reserve some extra space in the parent snapshot and consequently the cloned image. This is necessary to make all parent data accessible in the cloned image. The rbd resize command at the end shrinks the parent image back to its original size, which does not impact the parent snapshot, and also shrinks the cloned image to get rid of the unused reserved space.

The same applies to creating a formatted clone of an unformatted image, since an unformatted image does not have a header at all.

Chapter 5. Managing snapshots

As a storage administrator, being familiar with Ceph’s snapshotting feature can help you manage the snapshots and clones of images stored in the Red Hat Ceph Storage cluster.

Prerequisites

- A running Red Hat Ceph Storage cluster.

5.1. Ceph block device snapshots

A snapshot is a read-only copy of the state of an image at a particular point in time. One of the advanced features of Ceph block devices is that you can create snapshots of the images to retain a history of an image’s state. Ceph also supports snapshot layering, which allows you to clone images quickly and easily, for example a virtual machine image. Ceph supports block device snapshots using the rbd command and many higher level interfaces, including QEMU, libvirt, OpenStack and CloudStack.

If a snapshot is taken while I/O is occurring, the snapshot might not contain the exact or latest data of the image, and the snapshot might have to be cloned to a new image to be mountable. Red Hat recommends stopping I/O before taking a snapshot of an image. If the image contains a filesystem, the filesystem must be in a consistent state before taking a snapshot. To stop I/O, you can use the fsfreeze command. For virtual machines, the qemu-guest-agent can be used to automatically freeze filesystems when creating a snapshot.
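The freeze-then-snapshot sequence described above can be sketched as a small shell function. This is a minimal sketch rather than a documented procedure: the function name snapshot_frozen and its two arguments are hypothetical, and it assumes the image is mapped and mounted at the given mount point on the node where the function runs.

```shell
# Hypothetical helper: freeze the filesystem, snapshot the image, then thaw.
# Usage: snapshot_frozen MOUNT_POINT POOL_NAME/IMAGE_NAME@SNAP_NAME
snapshot_frozen() {
    sf_mount=$1
    sf_spec=$2

    # Suspend writes so the filesystem is in a consistent state.
    fsfreeze --freeze "$sf_mount" || return 1

    # Take the snapshot while I/O is stopped and remember the result.
    sf_status=0
    rbd snap create "$sf_spec" || sf_status=$?

    # Always thaw, even if the snapshot failed, so the mount is not left frozen.
    fsfreeze --unfreeze "$sf_mount"

    return "$sf_status"
}
```

For example, snapshot_frozen /mnt/data pool1/image1@snap1 would freeze /mnt/data (an assumed mount point), create the snapshot, and unfreeze the filesystem again.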

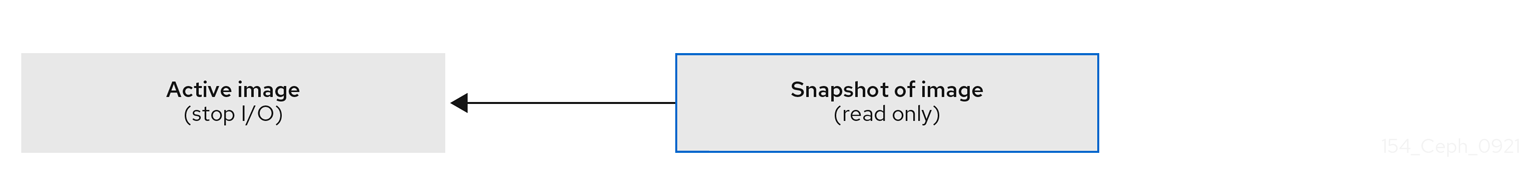

Figure 5.1. Ceph Block device snapshots

Additional Resources

- See the fsfreeze(8) man page for more details.

5.2. The Ceph user and keyring

When cephx is enabled, you must specify a user name or ID and a path to the keyring containing the corresponding key for the user.

cephx is enabled by default.

You might also add the CEPH_ARGS environment variable to avoid re-entry of the following parameters:

Syntax

rbd --id USER_ID --keyring=/path/to/secret [commands]

rbd --name USERNAME --keyring=/path/to/secret [commands]

Example

[root@rbd-client ~]# rbd --id admin --keyring=/etc/ceph/ceph.keyring [commands]

[root@rbd-client ~]# rbd --name client.admin --keyring=/etc/ceph/ceph.keyring [commands]

Add the user and secret to the CEPH_ARGS environment variable so that you do not need to enter them each time.
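A minimal sketch of setting the variable, assuming the admin user and the keyring path used in the example above; adjust both for your environment.

```shell
# Assumed values: the "admin" user and the keyring path from the example.
CEPH_ARGS="--id admin --keyring=/etc/ceph/ceph.keyring"
export CEPH_ARGS

# Later rbd invocations in this shell read the options from CEPH_ARGS,
# so they can be written without --id and --keyring, for example:
#   rbd snap ls pool1/image1
```

The setting lasts only for the current shell session unless you add it to a shell startup file.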

5.3. Creating a block device snapshot

Create a snapshot of a Ceph block device.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Specify the snap create option, the pool name and the image name:

Method 1:

Syntax

rbd --pool POOL_NAME snap create --snap SNAP_NAME IMAGE_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap create --snap snap1 image1

Method 2:

Syntax

rbd snap create POOL_NAME/IMAGE_NAME@SNAP_NAME

Example

[root@rbd-client ~]# rbd snap create pool1/image1@snap1

5.4. Listing the block device snapshots

List the block device snapshots.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Specify the pool name and the image name:

Syntax

rbd --pool POOL_NAME --image IMAGE_NAME snap ls

rbd snap ls POOL_NAME/IMAGE_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 --image image1 snap ls

[root@rbd-client ~]# rbd snap ls pool1/image1

5.5. Rolling back a block device snapshot

Roll back a block device snapshot.

Rolling back an image to a snapshot means overwriting the current version of the image with data from a snapshot. The time it takes to execute a rollback increases with the size of the image. It is faster to clone from a snapshot than to roll back an image to a snapshot, and cloning is the preferred method of returning to a pre-existing state.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Specify the snap rollback option, the pool name, the image name and the snapshot name:

Syntax

rbd --pool POOL_NAME snap rollback --snap SNAP_NAME IMAGE_NAME

rbd snap rollback POOL_NAME/IMAGE_NAME@SNAP_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap rollback --snap snap1 image1

[root@rbd-client ~]# rbd snap rollback pool1/image1@snap1

5.6. Deleting a block device snapshot

Delete a snapshot for Ceph block devices.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

To delete a block device snapshot, specify the snap rm option, the pool name, the image name and the snapshot name:

Syntax

rbd --pool POOL_NAME snap rm --snap SNAP_NAME IMAGE_NAME

rbd snap rm POOL_NAME/IMAGE_NAME@SNAP_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap rm --snap snap2 image1

[root@rbd-client ~]# rbd snap rm pool1/image1@snap1

If an image has any clones, the cloned images retain a reference to the parent image snapshot. To delete the parent image snapshot, you must flatten the child images first.

Ceph OSD daemons delete data asynchronously, so deleting a snapshot does not free up the disk space immediately.
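The order of operations implied by the note on clones can be sketched as follows. This is an illustrative sketch under stated assumptions: the function name remove_cloned_snapshot is hypothetical, the snapshot is assumed to be protected, and the sketch relies on the rbd children, rbd flatten, and rbd snap unprotect subcommands, which are not shown in this section. Flattening copies all parent data into each clone, so it can take a long time and use additional space.

```shell
# Hypothetical helper: flatten every clone of a snapshot, then delete it.
# Usage: remove_cloned_snapshot POOL_NAME/IMAGE_NAME@SNAP_NAME
remove_cloned_snapshot() {
    rcs_spec=$1

    # "rbd children" prints one child image spec per line.
    for rcs_child in $(rbd children "$rcs_spec"); do
        # Copy the parent data into the clone so it no longer needs the snapshot.
        rbd flatten "$rcs_child" || return 1
    done

    # A protected snapshot cannot be deleted, so unprotect it first.
    rbd snap unprotect "$rcs_spec" || return 1
    rbd snap rm "$rcs_spec"
}
```

For example, remove_cloned_snapshot pool1/image1@snap1 would flatten each child of snap1 before removing the snapshot.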

Additional Resources

- See Flattening cloned images in the Red Hat Ceph Storage Block Device Guide for details.

5.7. Purging the block device snapshots

Purge block device snapshots.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Specify the snap purge option and the image name on a specific pool:

Syntax

rbd --pool POOL_NAME snap purge IMAGE_NAME

rbd snap purge POOL_NAME/IMAGE_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap purge image1

[root@rbd-client ~]# rbd snap purge pool1/image1

5.8. Renaming a block device snapshot

Rename a block device snapshot.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

To rename a snapshot:

Syntax

rbd snap rename POOL_NAME/IMAGE_NAME@ORIGINAL_SNAPSHOT_NAME POOL_NAME/IMAGE_NAME@NEW_SNAPSHOT_NAME

Example

[root@rbd-client ~]# rbd snap rename data/dataset@snap1 data/dataset@snap2

This renames the snap1 snapshot of the dataset image on the data pool to snap2.

Execute the rbd help snap rename command to display additional details on renaming snapshots.

5.9. Ceph block device layering

Ceph supports the ability to create many copy-on-write (COW) or copy-on-read (COR) clones of a block device snapshot. Snapshot layering enables Ceph block device clients to create images very quickly. For example, you might create a block device image with a Linux VM written to it. Then, snapshot the image, protect the snapshot, and create as many clones as you like. A snapshot is read-only, so cloning a snapshot simplifies semantics—making it possible to create clones rapidly.
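The workflow in this paragraph (snapshot the image, protect the snapshot, create clones) can be sketched as a shell function. The function name clone_many, the clone naming scheme, and the example names are assumptions made for illustration only.

```shell
# Hypothetical helper: snapshot an image, protect the snapshot, make N clones.
# Usage: clone_many POOL_NAME IMAGE_NAME SNAP_NAME CLONE_COUNT
clone_many() {
    cm_pool=$1
    cm_image=$2
    cm_snap=$3
    cm_count=$4
    cm_spec="${cm_pool}/${cm_image}@${cm_snap}"

    rbd snap create "$cm_spec" || return 1
    # The snapshot must be protected before it can be cloned.
    rbd snap protect "$cm_spec" || return 1

    cm_i=1
    while [ "$cm_i" -le "$cm_count" ]; do
        # Clone names such as IMAGE_NAME-clone1 are an arbitrary convention.
        rbd clone "$cm_spec" "${cm_pool}/${cm_image}-clone${cm_i}" || return 1
        cm_i=$((cm_i + 1))
    done
}
```

For example, clone_many pool1 image1 snap1 3 would create pool1/image1@snap1 and three clones of it in the same pool.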

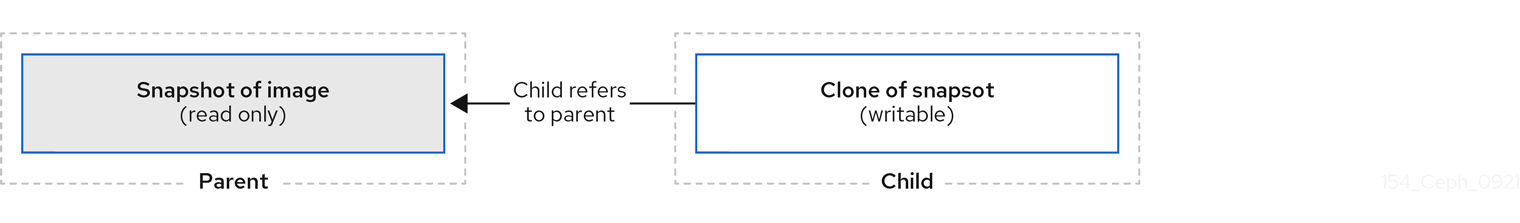

Figure 5.2. Ceph Block device layering

The term parent refers to a Ceph block device snapshot, and the term child refers to the corresponding image cloned from that snapshot. These terms are important for the command line usage below.

Each cloned image, the child, stores a reference to its parent image, which enables the cloned image to open the parent snapshot and read it. This reference is removed when the clone is flattened, that is, when information from the snapshot is completely copied to the clone.

A clone of a snapshot behaves exactly like any other Ceph block device image. You can read from, write to, clone, and resize the cloned images. There are no special restrictions with cloned images. However, the clone of a snapshot refers to the snapshot, so you MUST protect the snapshot before you clone it.

A clone of a snapshot can be a copy-on-write (COW) or copy-on-read (COR) clone. Copy-on-write (COW) is always enabled for clones, while copy-on-read (COR) has to be enabled explicitly. Copy-on-write (COW) copies data from the parent to the clone when it writes to an unallocated object within the clone. Copy-on-read (COR) copies data from the parent to the clone when it reads from an unallocated object within the clone. Reading data from a clone only reads data from the parent if the object does not yet exist in the clone. RADOS Block Device (RBD) breaks up large images into multiple objects. The default object size is 4 MB, and all copy-on-write (COW) and copy-on-read (COR) operations occur on a full object. That is, writing 1 byte to a clone results in a 4 MB object being read from the parent and written to the clone, if the destination object does not already exist in the clone from a previous COW or COR operation.
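The object-granularity arithmetic can be made concrete with a short calculation. This sketch assumes that the default 4 MB object size means 4 MiB (4194304 bytes) and uses a hypothetical function name; it only does arithmetic and does not contact a cluster.

```shell
# Object size assumed to be the 4 MiB default.
OBJECT_SIZE=$((4 * 1024 * 1024))

# Hypothetical helper: print which object a write at BYTE_OFFSET lands in and
# how many bytes a first write to that object copies from the parent.
# Usage: cow_copy_for_offset BYTE_OFFSET
cow_copy_for_offset() {
    cco_index=$(( $1 / OBJECT_SIZE ))
    cco_start=$(( cco_index * OBJECT_SIZE ))
    echo "object=$cco_index start=$cco_start copied_bytes=$OBJECT_SIZE"
}
```

For instance, cow_copy_for_offset 5000000 prints object=1 start=4194304 copied_bytes=4194304: a 1-byte write at that offset triggers a copy of the whole second object, provided the clone does not already hold it.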

Whether or not copy-on-read (COR) is enabled, any reads that cannot be satisfied by reading an underlying object from the clone will be rerouted to the parent. Since there is practically no limit to the number of parents, meaning that you can clone a clone, this reroute continues until an object is found or you hit the base parent image. If copy-on-read (COR) is enabled, any reads that fail to be satisfied directly from the clone result in a full object read from the parent and writing that data to the clone so that future reads of the same extent can be satisfied from the clone itself without the need of reading from the parent.
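The read path through a chain of clones can be modelled with a toy lookup. This is a simplified model written for illustration, not a description of librbd internals: each layer is represented as a space-separated list of the object indexes it holds, listed from the clone down to the base parent, and the function name is hypothetical.

```shell
# Toy model: find which layer satisfies a read of one object.
# Usage: resolve_read OBJECT_INDEX "CLONE_OBJECTS" "PARENT_OBJECTS" ...
# Prints the depth of the first layer holding the object (0 is the clone
# itself), or "none" if no layer in the chain holds it.
resolve_read() {
    rr_wanted=$1
    shift
    rr_depth=0
    for rr_layer in "$@"; do
        for rr_obj in $rr_layer; do
            if [ "$rr_obj" -eq "$rr_wanted" ]; then
                echo "$rr_depth"
                return 0
            fi
        done
        # Not in this layer: reroute the read to the next parent.
        rr_depth=$((rr_depth + 1))
    done
    echo "none"
}
```

For example, resolve_read 2 "0 1" "1 2" "0 1 2 3" prints 1, because the clone lacks object 2 and its direct parent holds it. With copy-on-read enabled, such a read would also write the object into the clone, so a repeated lookup in this model would then print 0.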