Managing, monitoring, and updating the kernel

A guide to managing the Linux kernel on Red Hat Enterprise Linux 8

Abstract

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Log in to the Jira website.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialog.

Chapter 1. The Linux kernel

Learn about the Linux kernel and the Linux kernel RPM package provided and maintained by Red Hat (Red Hat kernel). Keep the Red Hat kernel updated, which ensures the operating system has all the latest bug fixes, performance enhancements, and patches, and is compatible with new hardware.

1.1. What the kernel is

The kernel is a core part of a Linux operating system that manages the system resources and provides an interface between hardware and software applications.

The Red Hat kernel is a custom-built kernel based on the upstream Linux mainline kernel that Red Hat engineers further develop and harden with a focus on stability and compatibility with the latest technologies and hardware.

Before Red Hat releases a new kernel version, the kernel needs to pass a set of rigorous quality assurance tests.

The Red Hat kernels are packaged in the RPM format so that they are easily upgraded and verified by the YUM package manager.

Kernels that are not compiled by Red Hat are not supported by Red Hat.

1.2. RPM packages

An RPM package consists of an archive of files and metadata used to install and erase these files. Specifically, the RPM package contains the following parts:

- GPG signature: The GPG signature is used to verify the integrity of the package.

- Header (package metadata): The RPM package manager uses this metadata to determine package dependencies, where to install files, and other information.

- Payload: The payload is a cpio archive that contains files to install to the system.

There are two types of RPM packages. Both types share the file format and tooling, but have different contents and serve different purposes:

Source RPM (SRPM)

An SRPM contains source code and a spec file, which describes how to build the source code into a binary RPM. Optionally, the SRPM can contain patches to source code.

Binary RPM

A binary RPM contains the binaries built from the sources and patches.

1.3. The Linux kernel RPM package overview

The kernel RPM is a meta package that does not contain any files, but rather ensures that the following required sub-packages are properly installed:

- kernel-core: Provides the binary image of the kernel, all initramfs-related objects to bootstrap the system, and a minimal number of kernel modules to ensure core functionality. This sub-package alone could be used in virtualized and cloud environments to provide a Red Hat Enterprise Linux 8 kernel with a quick boot time and a small disk size footprint.

- kernel-modules: Provides the remaining kernel modules that are not present in kernel-core.

The small set of kernel sub-packages above aims to provide a reduced maintenance surface to system administrators, especially in virtualized and cloud environments.

Optional kernel packages include, for example:

- kernel-modules-extra: Provides kernel modules for rare hardware. Loading of the module is disabled by default.

- kernel-debug: Provides a kernel with many debugging options enabled for kernel diagnosis, at the expense of reduced performance.

- kernel-tools: Provides tools for manipulating the Linux kernel and supporting documentation.

- kernel-devel: Provides the kernel headers and makefiles that are enough to build modules against the kernel package.

- kernel-abi-stablelists: Provides information pertaining to the RHEL kernel ABI, including a list of kernel symbols required by external Linux kernel modules and a yum plug-in to aid enforcement.

- kernel-headers: Includes the C header files that specify the interface between the Linux kernel and user-space libraries and programs. The header files define structures and constants required for building most standard programs.

1.4. Displaying contents of a kernel package

By querying the repository, you can see if a kernel package provides a specific file, such as a module. It is not necessary to download or install the package to display the file list.

Use the yum utility to query the file list, for example, of the kernel-core, kernel-modules-core, or kernel-modules package. Note that the kernel package is a meta package that does not contain any files.

Procedure

List the available versions of a package:

$ yum repoquery <package_name>

Display the list of files in a package:

$ yum repoquery -l <package_name>

1.5. Installing specific kernel versions

Install new kernels using the yum package manager.

Procedure

To install a specific kernel version, enter the following command:

# yum install kernel-5.14.0

1.6. Updating the kernel

Update the kernel using the yum package manager.

Procedure

To update the kernel, enter the following command:

# yum update kernel

This command updates the kernel along with all dependencies to the latest available version.

- Reboot your system for the changes to take effect.

When upgrading from RHEL 7 to RHEL 8, follow relevant sections of the Upgrading from RHEL 7 to RHEL 8 document.

1.7. Setting a kernel as default

Set a specific kernel as default by using the grubby command-line tool and GRUB.

Procedure

Setting the kernel as default by using the grubby tool.

Enter the following command to set the kernel as default using the grubby tool:

# grubby --set-default $kernel_path

The command uses a machine ID without the .conf suffix as an argument.

Note: The machine ID is located in the /boot/loader/entries/ directory.

Setting the kernel as default by using the id argument.

List the boot entries using the id argument and then set an intended kernel as default:

# grubby --info ALL | grep id
# grubby --set-default /boot/vmlinuz-<version>.<architecture>

Note: To list the boot entries using the title argument, execute the # grubby --info=ALL | grep title command.

Setting the default kernel for only the next boot.

Execute the following command to set the default kernel for only the next reboot using the grub2-reboot command:

# grub2-reboot <index|title|id>

Warning: Set the default kernel for only the next boot with care. Installing new kernel RPMs, self-built kernels, and manually adding the entries to the /boot/loader/entries/ directory might change the index values.

Chapter 2. Managing kernel modules

Learn about kernel modules, how to display their information, and how to perform basic administrative tasks with kernel modules.

2.1. Introduction to kernel modules

The Red Hat Enterprise Linux kernel can be extended with kernel modules, which provide optional additional pieces of functionality, without having to reboot the system. On RHEL 8, kernel modules are extra kernel code built into compressed <KERNEL_MODULE_NAME>.ko.xz object files.

Kernel modules most commonly provide the following functionality:

- Device drivers that add support for new hardware

- Support for a file system such as GFS2 or NFS

- System calls

On modern systems, kernel modules are automatically loaded when needed. However, in some cases it is necessary to load or unload modules manually.

Similarly to the kernel, modules accept parameters that customize their behavior.

You can use the kernel tools to perform the following actions on modules:

- Inspect modules that are currently running.

- Inspect modules that are available to load into the kernel.

- Inspect parameters that a module accepts.

- Enable a mechanism to load and unload kernel modules into the running kernel.

2.2. Kernel module dependencies

Certain kernel modules sometimes depend on one or more other kernel modules. The /lib/modules/<KERNEL_VERSION>/modules.dep file contains a complete list of kernel module dependencies for the corresponding kernel version.

depmod

The dependency file is generated by the depmod program, included in the kmod package. Many utilities provided by kmod consider module dependencies when performing operations. Therefore, manual dependency-tracking is rarely necessary.

The code of kernel modules executes in kernel-space in the unrestricted mode. Be mindful of what modules you are loading.

weak-modules

In addition to depmod, Red Hat Enterprise Linux provides the weak-modules script, which is a part of the kmod package. weak-modules determines the modules that are kABI-compatible with installed kernels. While checking a module's kernel compatibility, weak-modules processes the module's symbol dependencies from the higher to the lower release of the kernel for which they were built. It processes each module independently of the kernel release.

2.3. Listing installed kernel modules

The grubby --info=ALL command displays an indexed list of installed kernels on both non-BLS and BLS (Boot Loader Specification) installs.

Procedure

List the installed kernels using the following command:

# grubby --info=ALL | grep title

The list of all installed kernels is displayed as follows:

title=Red Hat Enterprise Linux (4.18.0-20.el8.x86_64) 8.0 (Ootpa)
title=Red Hat Enterprise Linux (4.18.0-19.el8.x86_64) 8.0 (Ootpa)
title=Red Hat Enterprise Linux (4.18.0-12.el8.x86_64) 8.0 (Ootpa)
title=Red Hat Enterprise Linux (4.18.0) 8.0 (Ootpa)
title=Red Hat Enterprise Linux (0-rescue-2fb13ddde2e24fde9e6a246a942caed1) 8.0 (Ootpa)

This is the list of installed kernels of grubby-8.40-17 from the GRUB menu.

2.4. Listing currently loaded kernel modules

View the currently loaded kernel modules.

Prerequisites

- The kmod package is installed.

Procedure

To list all currently loaded kernel modules, enter:

$ lsmod

In the output:

- The Module column provides the names of currently loaded modules.

- The Size column displays the amount of memory per module in bytes.

- The Used by column shows the number, and optionally the names, of modules that are dependent on a particular module.
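The three lsmod columns described above are easy to filter with standard tools. The following is a minimal sketch, not part of the original procedure: the `unused_modules` helper and the sample values are hypothetical, and it assumes the usual lsmod layout of one header line followed by name, size, and use count.

```shell
#!/bin/sh
# Print the names of modules whose "Used by" count is 0.
# Reads lsmod-formatted text on stdin: one header line, then
# "<module> <size> <use count> [<users>]" on each following line.
unused_modules() {
    awk 'NR > 1 && $3 == 0 { print $1 }'
}

# Hypothetical sample input so that the sketch runs on any machine.
sample='Module                  Size  Used by
serio_raw              16384  0
libcrc32c              16384  1 xfs
xfs                  1556480  2'

printf '%s\n' "$sample" | unused_modules
```

On a live system, the same filter would be applied as `lsmod | unused_modules`. A use count of 0 only means that no other module or open handle currently holds a reference to the module; it does not mean that the system can do without it.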

2.5. Listing all installed kernels

Use the grubby utility to list all installed kernels on your system.

Prerequisites

- You have root permissions.

Procedure

To list all installed kernels, enter:

# grubby --info=ALL | grep ^kernel
kernel="/boot/vmlinuz-4.18.0-305.10.2.el8_4.x86_64"
kernel="/boot/vmlinuz-4.18.0-240.el8.x86_64"
kernel="/boot/vmlinuz-0-rescue-41eb2e172d7244698abda79a51778f1b"

The output shows the path and versions of all the kernels installed.

2.6. Displaying information about kernel modules

Use the modinfo command to display detailed information about the specified kernel module.

Prerequisites

- The kmod package is installed.

Procedure

To display information about any kernel module, enter:

$ modinfo <KERNEL_MODULE_NAME>

You can query information about all available modules, regardless of whether they are loaded. The parm entries show parameters the user is able to set for the module, and what type of value they expect.

Note: When entering the name of a kernel module, do not append the .ko.xz extension to the end of the name. Kernel module names do not have extensions; their corresponding files do.
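Because the parm entries follow a fixed `parm: <name>:<description> (<type>)` layout, the parameter names can be pulled out of modinfo output with a short filter. This is a sketch under that assumption; `parm_names` is a hypothetical helper and the sample text is illustrative, not real output from a specific module.

```shell
#!/bin/sh
# Extract parameter names from modinfo output read on stdin.
# Each parameter is expected on a line of the form
#   parm:           <name>:<description> (<type>)
parm_names() {
    awk '$1 == "parm:" { split($2, field, ":"); print field[1] }'
}

# Hypothetical modinfo-style text so that the sketch runs without kmod.
sample='filename:       /lib/modules/example/kernel/drivers/example.ko.xz
license:        GPL
parm:           debug:Enable debug messages (bool)
parm:           timeout:Timeout in seconds (int)'

printf '%s\n' "$sample" | parm_names
```

On a real system, the filter would be used as `modinfo <KERNEL_MODULE_NAME> | parm_names`. The modinfo utility also offers `-F <field>` to print a single field and `-n` to print only the file name of the module.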

2.7. Loading kernel modules at system runtime

The optimal way to expand the functionality of the Linux kernel is by loading kernel modules. Use the modprobe command to find and load a kernel module into the currently running kernel.

The changes described in this procedure will not persist after rebooting the system. For information about how to load kernel modules to persist across system reboots, see Loading kernel modules automatically at system boot time.

Prerequisites

- Root permissions

- The kmod package is installed.

- The respective kernel module is not loaded. To ensure this is the case, see Listing currently loaded kernel modules.

Procedure

Select a kernel module you want to load.

The modules are located in the /lib/modules/$(uname -r)/kernel/<SUBSYSTEM>/ directory.

Load the relevant kernel module:

# modprobe <MODULE_NAME>

Note: When entering the name of a kernel module, do not append the .ko.xz extension to the end of the name. Kernel module names do not have extensions; their corresponding files do.

Verification

Optionally, verify the relevant module was loaded:

$ lsmod | grep <MODULE_NAME>

If the module was loaded correctly, this command displays the relevant kernel module. For example:

$ lsmod | grep serio_raw
serio_raw 16384 0
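The prerequisite check and the load step can be combined so that modprobe runs only when the module is missing. The following is a minimal sketch; the helper names are hypothetical, and the optional second argument of `is_loaded` is an assumption added so the check can be tried against a copy of /proc/modules.

```shell
#!/bin/sh
# The kernel lists module names with underscores, while modprobe accepts
# both '-' and '_', so normalize before comparing.
normalize_modname() {
    printf '%s\n' "$1" | sed 's/-/_/g'
}

# Succeed if the module appears in the module list file.
# $2 defaults to /proc/modules (hypothetical override for testing).
is_loaded() {
    name=$(normalize_modname "$1")
    grep -q "^${name} " "${2:-/proc/modules}"
}

# Load the module only if it is not loaded yet. modprobe requires root.
load_if_needed() {
    if is_loaded "$1"; then
        echo "$1 is already loaded"
    else
        modprobe "$1"
    fi
}

normalize_modname serio-raw
```

Running `load_if_needed serio_raw` as root would then be a no-op on a system where the module is already present.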

2.8. Unloading kernel modules at system runtime

Use the modprobe -r command to find and unload a kernel module from the currently running kernel.

You must not unload the kernel modules that are used by the running system because it can lead to an unstable or non-operational system.

Modules that are configured to load automatically at boot are loaded again after you reboot the system, even if you unloaded them at runtime. For information about how to prevent this outcome, see Preventing kernel modules from being automatically loaded at system boot time.

Prerequisites

- You have root permissions.

- The kmod package is installed.

Procedure

List all the loaded kernel modules:

# lsmod

Select the kernel module you want to unload.

If a kernel module has dependencies, unload those prior to unloading the kernel module. For details on identifying modules with dependencies, see Listing currently loaded kernel modules and Kernel module dependencies.

Unload the relevant kernel module:

# modprobe -r <MODULE_NAME>

When entering the name of a kernel module, do not append the .ko.xz extension to the end of the name. Kernel module names do not have extensions; their corresponding files do.

Verification

Optionally, verify the relevant module was unloaded:

$ lsmod | grep <MODULE_NAME>

If the module is unloaded successfully, this command does not display any output.
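Before unloading, it can help to check which other modules still hold a reference to the one you want to remove. The sketch below reads the holders directory that the kernel exposes for each loaded module under /sys/module. The helper names are hypothetical, and the optional second argument of `module_holders` is an assumption added so the function can be pointed at a scratch directory.

```shell
#!/bin/sh
# List the modules that currently depend on the given module by reading
# <root>/<name>/holders/. <root> defaults to /sys/module.
module_holders() {
    dir="${2:-/sys/module}/$1/holders"
    [ -d "$dir" ] || return 1
    ls "$dir"
}

# Unload a module only when no other module depends on it.
# modprobe -r requires root.
safe_unload() {
    holders=$(module_holders "$1") || {
        echo "$1 is not loaded" >&2
        return 1
    }
    if [ -n "$holders" ]; then
        echo "refusing to unload $1; in use by: $holders" >&2
        return 1
    fi
    modprobe -r "$1"
}
```

An empty holders directory does not guarantee that the unload succeeds: a module can also be pinned by a user-space process or a mounted file system, in which case `modprobe -r` reports that the module is in use.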

2.9. Unloading kernel modules at early stages of the boot process

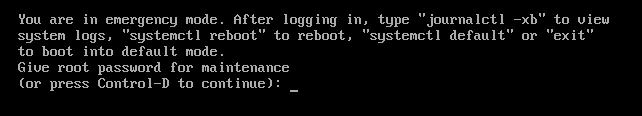

In certain situations, you might need to unload a kernel module early in the booting process. For example, the kernel module might contain code that causes the system to become unresponsive, so that you cannot reach the stage at which you could permanently disable the rogue kernel module. To temporarily block the loading of the kernel module, you can use a boot loader.

You can edit the relevant boot loader entry to unload the required kernel module before the booting sequence continues.

The changes described in this procedure will not persist after the next reboot. For information about how to add a kernel module to a denylist so that it will not be automatically loaded during the boot process, see Preventing kernel modules from being automatically loaded at system boot time.

Prerequisites

- You have a loadable kernel module that you want to prevent from loading.

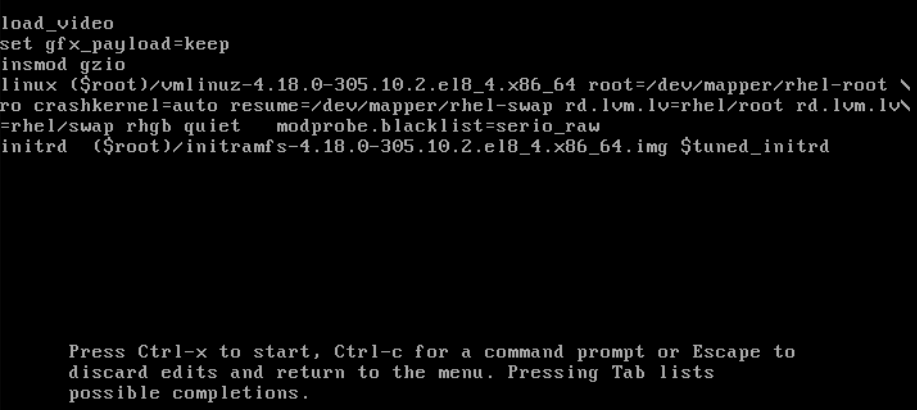

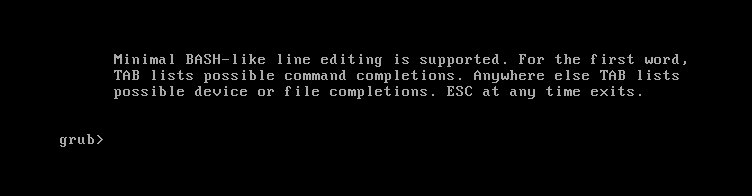

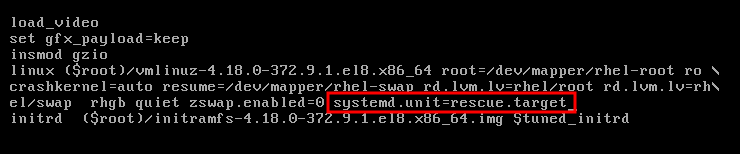

Procedure

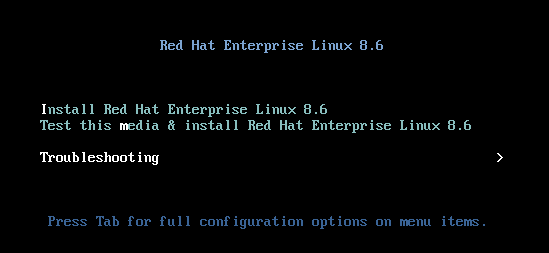

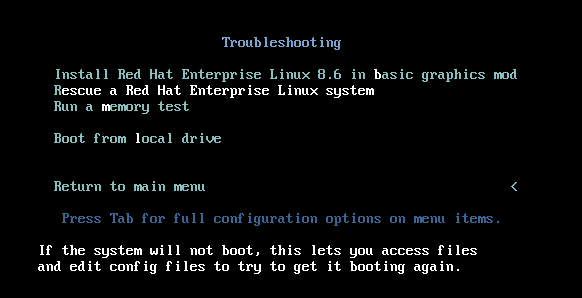

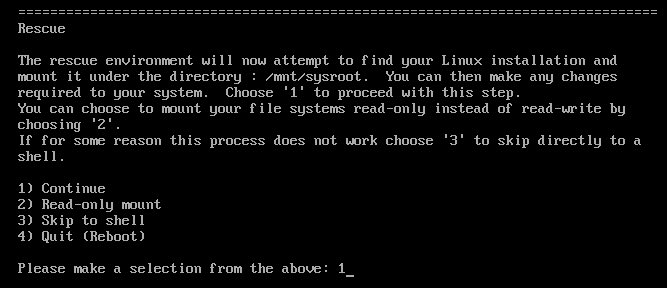

- Boot the system into the boot loader.

- Use the cursor keys to highlight the relevant boot loader entry.

Press the e key to edit the entry.

- Use the cursor keys to navigate to the line that starts with linux.

Append

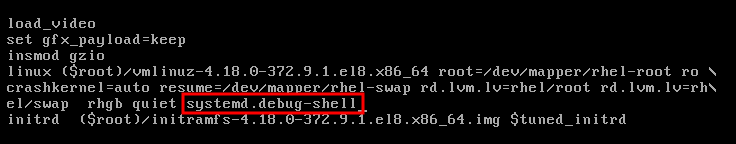

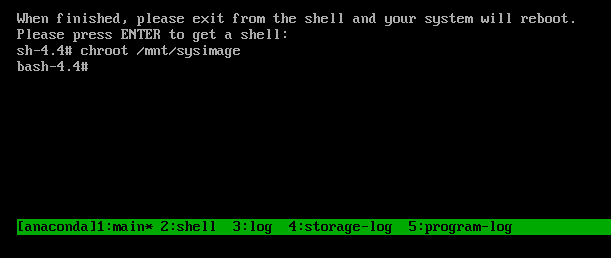

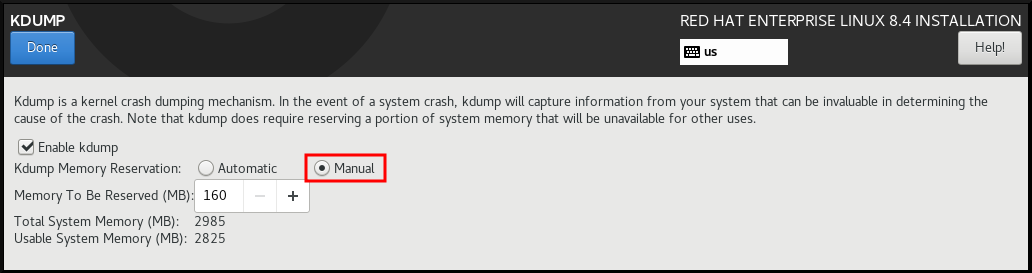

modprobe.blacklist=module_nameto the end of the line.Figure 2.2. Kernel boot entry

The

serio_rawkernel module illustrates a rogue module to be unloaded early in the boot process.- Press Ctrl+X to boot using the modified configuration.

Verification

After the system boots, verify that the relevant kernel module is not loaded:

# lsmod | grep serio_raw
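In addition to checking lsmod, you can confirm that the boot entry edit reached the kernel by inspecting the command line the system booted with. The following sketch assumes that the parameter can carry a comma-separated list of module names. `cmdline_blacklist` is a hypothetical helper, and its optional argument is an assumption that allows reading a file other than /proc/cmdline.

```shell
#!/bin/sh
# Print the module names passed through modprobe.blacklist= on a kernel
# command line, one per line. Reads /proc/cmdline unless a file is given.
cmdline_blacklist() {
    tr ' ' '\n' < "${1:-/proc/cmdline}" |
        sed -n 's/^modprobe\.blacklist=//p' |
        tr ',' '\n'
}
```

On the system from the example above, running `cmdline_blacklist` after the boot would be expected to print serio_raw. No output means that the parameter was not present for the current boot.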

2.10. Loading kernel modules automatically at system boot time

Configure a kernel module to load automatically during the boot process.

Prerequisites

- Root permissions

- The kmod package is installed.

Procedure

Select a kernel module you want to load during the boot process.

The modules are located in the /lib/modules/$(uname -r)/kernel/<SUBSYSTEM>/ directory.

Create a configuration file for the module:

# echo <MODULE_NAME> > /etc/modules-load.d/<MODULE_NAME>.conf

Note: When entering the name of a kernel module, do not append the .ko.xz extension to the end of the name. Kernel module names do not have extensions; their corresponding files do.

Verification

After reboot, verify the relevant module is loaded:

$ lsmod | grep <MODULE_NAME>

The changes described in this procedure will persist after rebooting the system.
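The configuration step can be wrapped in a small helper, together with a way to review which modules are already configured to load at boot. This is a sketch only: both function names are hypothetical, and the optional directory argument is an assumption that lets you try the functions on a scratch directory instead of /etc/modules-load.d.

```shell
#!/bin/sh
# Write <dir>/<module>.conf containing the module name.
# <dir> defaults to /etc/modules-load.d, which requires root to write.
enable_module_at_boot() {
    dir="${2:-/etc/modules-load.d}"
    printf '%s\n' "$1" > "$dir/$1.conf"
}

# Print every module named in the *.conf files of the directory,
# skipping blank lines and comment lines that start with '#' or ';'.
boot_modules() {
    dir="${1:-/etc/modules-load.d}"
    cat "$dir"/*.conf 2>/dev/null | grep -v -e '^[#;]' -e '^[[:space:]]*$'
}
```

For example, `enable_module_at_boot serio_raw` followed by `boot_modules` would list serio_raw among the configured modules.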

2.11. Preventing kernel modules from being automatically loaded at system boot time

You can prevent the system from loading a kernel module automatically during the boot process by listing the module in a modprobe configuration file with the corresponding command.

Prerequisites

- The commands in this procedure require root privileges. Either use su - to switch to the root user or preface the commands with sudo.

- The kmod package is installed.

- Ensure that your current system configuration does not require a kernel module you plan to deny.

Procedure

List modules loaded to the currently running kernel by using the lsmod command:

$ lsmod

In the output, identify the module you want to prevent from getting loaded.

Alternatively, identify an unloaded kernel module you want to prevent from potentially loading in the /lib/modules/<KERNEL-VERSION>/kernel/<SUBSYSTEM>/ directory, for example:

$ ls /lib/modules/4.18.0-477.20.1.el8_8.x86_64/kernel/crypto/
ansi_cprng.ko.xz chacha20poly1305.ko.xz md4.ko.xz serpent_generic.ko.xz
anubis.ko.xz cmac.ko.xz…

Create a configuration file serving as a denylist:

# touch /etc/modprobe.d/denylist.conf

In a text editor of your choice, combine the names of modules you want to exclude from automatic loading to the kernel with the blacklist configuration command.

Because the blacklist command does not prevent the module from getting loaded as a dependency for another kernel module that is not in a denylist, you must also define the install line. In this case, the system runs /bin/false instead of installing the module. The lines starting with a hash sign are comments you can use to make the file more readable.

Note: When entering the name of a kernel module, do not append the .ko.xz extension to the end of the name. Kernel module names do not have extensions; their corresponding files do.

Create a backup copy of the current initial RAM disk image before rebuilding:

# cp /boot/initramfs-$(uname -r).img /boot/initramfs-$(uname -r).bak.$(date +%m-%d-%H%M%S).img

Alternatively, create a backup copy of an initial RAM disk image which corresponds to the kernel version for which you want to prevent kernel modules from automatic loading:

# cp /boot/initramfs-<VERSION>.img /boot/initramfs-<VERSION>.img.bak.$(date +%m-%d-%H%M%S)

Generate a new initial RAM disk image to apply the changes:

# dracut -f -v

If you build an initial RAM disk image for a different kernel version than your system currently uses, specify both target initramfs and kernel version:

# dracut -f -v /boot/initramfs-<TARGET-VERSION>.img <CORRESPONDING-TARGET-KERNEL-VERSION>

Restart the system:

$ reboot

The changes described in this procedure will take effect and persist after rebooting the system. If you incorrectly list a key kernel module in the denylist, you can switch the system to an unstable or non-operational state.
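The denylist entries described in this procedure pair a blacklist line with an install line for each module. The following sketch generates such entries. The `deny_modules` helper and the comment wording are hypothetical; only the blacklist and install ... /bin/false directives come from the procedure itself.

```shell
#!/bin/sh
# Append denylist entries for each named module to a configuration file.
# Usage: deny_modules <file> <module>...
deny_modules() {
    file="$1"
    shift
    for mod in "$@"; do
        {
            # Comment line for readability (wording is illustrative).
            printf '# Prevents %s from being loaded\n' "$mod"
            # Blocks automatic loading by name or alias.
            printf 'blacklist %s\n' "$mod"
            # Makes a load request run /bin/false instead, which also
            # covers the case where the module is pulled in as a dependency.
            printf 'install %s /bin/false\n' "$mod"
        } >> "$file"
    done
}
```

As root, `deny_modules /etc/modprobe.d/denylist.conf serio_raw` would add three lines for serio_raw. You still need to rebuild the initial RAM disk image and reboot, as described above, for the change to apply during early boot.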

2.12. Compiling custom kernel modules

You can build a sample kernel module as required by various configurations at the hardware and software level.

Prerequisites

- You installed the kernel-devel, gcc, and elfutils-libelf-devel packages.

# dnf install kernel-devel-$(uname -r) gcc elfutils-libelf-devel

- You have root permissions.

- You created the /root/testmodule/ directory where you compile the custom kernel module.

Procedure

Create the /root/testmodule/test.c file.

The test.c file is a source file that provides the main functionality to the kernel module. The file has been created in a dedicated /root/testmodule/ directory for organizational purposes. After the module compilation, the /root/testmodule/ directory will contain multiple files.

The test.c file includes the following headers from the system libraries:

- The linux/kernel.h header file is necessary for the printk() function in the example code.

- The linux/module.h header file contains function declarations and macro definitions that are shared across multiple C source files.

The init_module() and cleanup_module() functions follow. They call the kernel logging function printk(), which prints text, when the module is loaded and unloaded.

Create the /root/testmodule/Makefile file with the following content:

obj-m := test.o

The Makefile contains instructions for the compiler to produce an object file named test.o. The obj-m directive specifies that the resulting test.ko file is going to be compiled as a loadable kernel module. Alternatively, the obj-y directive can instruct to build test.ko as a built-in kernel module.

Compile the kernel module.

The compiler creates an object file (test.o) for each source file (test.c) as an intermediate step before linking them together into the final kernel module (test.ko).

After a successful compilation, /root/testmodule/ contains additional files that relate to the compiled custom kernel module. The compiled module itself is represented by the test.ko file.

Verification

Optional: check the contents of the /root/testmodule/ directory.

Copy the kernel module to the /lib/modules/$(uname -r)/ directory:

# cp /root/testmodule/test.ko /lib/modules/$(uname -r)/

Update the modular dependency list:

# depmod -a

Load the kernel module:

# modprobe -v test
insmod /lib/modules/4.18.0-305.el8.x86_64/test.ko

Verify that the kernel module was successfully loaded:

# lsmod | grep test
test 16384 0

Read the latest messages from the kernel ring buffer:

# dmesg
[74422.545004] Hello World This is a test
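The listing for test.c and the compile command are not reproduced in this section, so the following is a reconstruction sketch rather than the original content. It assumes a module that only logs messages from init_module() and cleanup_module(), with message text chosen to match the dmesg output above. The helper names, the unload message, and the MODULE_LICENSE line are assumptions. The build command is the usual way to build an out-of-tree module against the headers that kernel-devel installs.

```shell
#!/bin/sh
# Write a minimal module source file and Makefile into the given directory.
write_test_module() {
    dir="$1"
    mkdir -p "$dir"
    cat > "$dir/test.c" <<'EOF'
#include <linux/module.h>
#include <linux/kernel.h>

/* Runs when the module is loaded. */
int init_module(void)
{
    printk("Hello World\n This is a test\n");
    return 0;
}

/* Runs when the module is unloaded. */
void cleanup_module(void)
{
    printk("Good Bye World\n");
}

MODULE_LICENSE("GPL");
EOF
    printf 'obj-m := test.o\n' > "$dir/Makefile"
}

# Build the module in the given directory against the running kernel.
# Requires the kernel-devel, gcc, and elfutils-libelf-devel packages.
build_test_module() {
    make -C "/lib/modules/$(uname -r)/build" M="$1" modules
}
```

With the prerequisites in place, `write_test_module /root/testmodule` followed by `build_test_module /root/testmodule` would be expected to leave a test.ko file in that directory.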

Chapter 3. Signing a kernel and modules for Secure Boot

You can enhance the security of your system by using a signed kernel and signed kernel modules. On UEFI-based build systems where Secure Boot is enabled, you can self-sign a privately built kernel or kernel modules. Furthermore, you can import your public key into a target system where you want to deploy your kernel or kernel modules.

If Secure Boot is enabled, all of the following components have to be signed with a private key and authenticated with the corresponding public key:

- UEFI operating system boot loader

- The Red Hat Enterprise Linux kernel

- All kernel modules

If any of these components are not signed and authenticated, the system cannot finish the booting process.

RHEL 8 includes:

- Signed boot loaders

- Signed kernels

- Signed kernel modules

In addition, the signed first-stage boot loader and the signed kernel include embedded Red Hat public keys. These signed executable binaries and embedded keys enable RHEL 8 to install, boot, and run with the Microsoft UEFI Secure Boot Certification Authority keys. These keys are provided by the UEFI firmware on systems that support UEFI Secure Boot.

- Not all UEFI-based systems include support for Secure Boot.

- The build system, where you build and sign your kernel module, does not need to have UEFI Secure Boot enabled and does not even need to be a UEFI-based system.

3.1. Prerequisites

To be able to sign externally built kernel modules, install the utilities from the following packages:

# yum install pesign openssl kernel-devel mokutil keyutils

Table 3.1. Required utilities

| Utility | Provided by package | Used on | Purpose |
|---|---|---|---|
| efikeygen | pesign | Build system | Generates public and private X.509 key pair |
| openssl | openssl | Build system | Exports the unencrypted private key |
| sign-file | kernel-devel | Build system | Executable file used to sign a kernel module with the private key |
| mokutil | mokutil | Target system | Optional utility used to manually enroll the public key |
| keyctl | keyutils | Target system | Optional utility used to display public keys in the system keyring |

3.2. What is UEFI Secure Boot

With the Unified Extensible Firmware Interface (UEFI) Secure Boot technology, you can prevent the execution of the kernel-space code that is not signed by a trusted key. The system boot loader is signed with a cryptographic key. The database of public keys in the firmware authorizes the process of signing the key. You can subsequently verify a signature in the next-stage boot loader and the kernel.

UEFI Secure Boot establishes a chain of trust from the firmware to the signed drivers and kernel modules as follows:

- A UEFI private key signs, and a public key authenticates, the shim first-stage boot loader. A certificate authority (CA) in turn signs the public key. The CA is stored in the firmware database.

- The shim file contains the Red Hat public key Red Hat Secure Boot (CA key 1) to authenticate the GRUB boot loader and the kernel.

- The kernel in turn contains public keys to authenticate drivers and modules.

Secure Boot is the boot path validation component of the UEFI specification. The specification defines:

- Programming interface for cryptographically protected UEFI variables in non-volatile storage.

- Storing the trusted X.509 root certificates in UEFI variables.

- Validation of UEFI applications such as boot loaders and drivers.

- Procedures to revoke known-bad certificates and application hashes.

UEFI Secure Boot helps in the detection of unauthorized changes but does not:

- Prevent installation or removal of second-stage boot loaders.

- Require explicit user confirmation of such changes.

- Stop boot path manipulations. Signatures are verified during booting, but not when the boot loader is installed or updated.

If the boot loader or the kernel is not signed by a system trusted key, Secure Boot prevents it from starting.

3.3. UEFI Secure Boot support

You can install and run RHEL 8 on systems with enabled UEFI Secure Boot if the kernel and all the loaded drivers are signed with a trusted key. Red Hat provides kernels and drivers that are signed and authenticated by the relevant Red Hat keys.

If you want to load externally built kernels or drivers, you must sign them as well.

Restrictions imposed by UEFI Secure Boot

- The system only runs the kernel-mode code after its signature has been properly authenticated.

- GRUB module loading is disabled because there is no infrastructure for signing and verification of GRUB modules. Allowing module loading would run untrusted code within the security perimeter defined by Secure Boot.

- Red Hat provides a signed GRUB binary that has all supported modules on RHEL 8.

3.4. Requirements for authenticating kernel modules with X.509 keys

In RHEL 8, when a kernel module is loaded, the kernel checks the signature of the module against the public X.509 keys from the kernel system keyring (.builtin_trusted_keys) and the kernel platform keyring (.platform). The .platform keyring provides keys from third-party platform providers and custom public keys. The keys from the kernel system .blacklist keyring are excluded from verification.

You need to meet certain conditions to load kernel modules on systems with enabled UEFI Secure Boot functionality:

If UEFI Secure Boot is enabled or if the module.sig_enforce kernel parameter has been specified:

- You can only load those signed kernel modules whose signatures were authenticated against keys from the system keyring (.builtin_trusted_keys) and the platform keyring (.platform).

- The public key must not be on the system revoked keys keyring (.blacklist).

If UEFI Secure Boot is disabled and the module.sig_enforce kernel parameter has not been specified:

- You can load unsigned kernel modules and signed kernel modules without a public key.

If the system is not UEFI-based or if UEFI Secure Boot is disabled:

- Only the keys embedded in the kernel are loaded onto .builtin_trusted_keys and .platform.

- You have no ability to augment that set of keys without rebuilding the kernel.

| Module signed | Public key found and signature valid | UEFI Secure Boot state | sig_enforce | Module load | Kernel tainted |
|---|---|---|---|---|---|
| Unsigned | - | Not enabled | Not enabled | Succeeds | Yes |
| Unsigned | - | Not enabled | Enabled | Fails | - |
| Unsigned | - | Enabled | - | Fails | - |
| Signed | No | Not enabled | Not enabled | Succeeds | Yes |
| Signed | No | Not enabled | Enabled | Fails | - |
| Signed | No | Enabled | - | Fails | - |
| Signed | Yes | Not enabled | Not enabled | Succeeds | No |
| Signed | Yes | Not enabled | Enabled | Succeeds | No |
| Signed | Yes | Enabled | - | Succeeds | No |
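
The table can be read as a small decision procedure. The following shell sketch encodes it for illustration only; the function name `module_load_outcome` and its yes/no arguments are assumptions made for this example, and the kernel does not expose such an interface.

```shell
#!/bin/sh
# Sketch of the module-load decision table; not the kernel's implementation.
# Arguments (hypothetical names), each "yes" or "no":
#   $1 signed       - the module carries a signature
#   $2 key_valid    - a public key was found and the signature is valid
#   $3 secure_boot  - UEFI Secure Boot is enabled
#   $4 sig_enforce  - module.sig_enforce was specified
module_load_outcome() {
    signed=$1 key_valid=$2 secure_boot=$3 sig_enforce=$4

    # A signed module that authenticates against a trusted key always
    # loads and does not taint the kernel.
    if [ "$signed" = yes ] && [ "$key_valid" = yes ]; then
        echo "succeeds, not tainted"
        return 0
    fi

    # Otherwise the module is unsigned or cannot be authenticated.
    if [ "$secure_boot" = yes ] || [ "$sig_enforce" = yes ]; then
        echo "fails"
    else
        echo "succeeds, tainted"
    fi
}
```

For example, `module_load_outcome yes no yes no` corresponds to the row for a signed module without a valid public key on a system with Secure Boot enabled.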

3.5. Sources for public keys

During boot, the kernel loads X.509 keys from a set of persistent key stores into the following keyrings:

- The system keyring (.builtin_trusted_keys)

- The .platform keyring

- The system .blacklist keyring

| Source of X.509 keys | User can add keys | UEFI Secure Boot state | Keys loaded during boot |
|---|---|---|---|
| Embedded in kernel | No | - | .builtin_trusted_keys |
| UEFI db | Limited | Not enabled | No |
| UEFI db | Limited | Enabled | .platform |
| Embedded in the shim boot loader | No | Not enabled | No |
| Embedded in the shim boot loader | No | Enabled | .platform |
| Machine Owner Key (MOK) list | Yes | Not enabled | No |
| Machine Owner Key (MOK) list | Yes | Enabled | .platform |

.builtin_trusted_keys

- A keyring that is built on boot.

- Provides trusted public keys.

- root privileges are required to view the keys.

.platform

- A keyring that is built on boot.

- Provides keys from third-party platform providers and custom public keys.

- root privileges are required to view the keys.

.blacklist

- A keyring with X.509 keys which have been revoked.

- A module signed by a key from .blacklist will fail authentication even if your public key is in .builtin_trusted_keys.

UEFI Secure Boot db

- A signature database.

- Stores keys (hashes) of UEFI applications, UEFI drivers, and boot loaders.

- The keys can be loaded on the machine.

UEFI Secure Boot dbx

- A revoked signature database.

- Prevents keys from getting loaded.

- The revoked keys from this database are added to the .blacklist keyring.

3.6. Generating a public and private key pair

To use a custom kernel or custom kernel modules on a Secure Boot-enabled system, you must generate a public and private X.509 key pair. You can use the generated private key to sign the kernel or the kernel modules. You can also validate the signed kernel or kernel modules by adding the corresponding public key to the Machine Owner Key (MOK) for Secure Boot.

Apply strong security measures and access policies to guard the contents of your private key. In the wrong hands, the key could be used to compromise any system which is authenticated by the corresponding public key.

Procedure

- Create an X.509 public and private key pair:

  - If you only want to sign custom kernel modules:

        # efikeygen --dbdir /etc/pki/pesign \
            --self-sign \
            --module \
            --common-name 'CN=Organization signing key' \
            --nickname 'Custom Secure Boot key'

  - If you want to sign a custom kernel:

        # efikeygen --dbdir /etc/pki/pesign \
            --self-sign \
            --kernel \
            --common-name 'CN=Organization signing key' \
            --nickname 'Custom Secure Boot key'

  - When the RHEL system is running in FIPS mode:

    Note: In FIPS mode, you must use the --token option so that efikeygen finds the default "NSS Certificate DB" token in the PKI database.

The public and private keys are now stored in the /etc/pki/pesign/ directory.

It is a good security practice to sign the kernel and the kernel modules within the validity period of its signing key. However, the sign-file utility does not warn you and the key will be usable in RHEL 8 regardless of the validity dates.

3.7. Example output of system keyrings

You can display information about the keys on the system keyrings using the keyctl utility from the keyutils package.

Prerequisites

- You have root permissions.

- You have installed the keyctl utility from the keyutils package.

Example 3.1. Keyrings output

The following is a shortened example output of .builtin_trusted_keys, .platform, and .blacklist keyrings from a RHEL 8 system where UEFI Secure Boot is enabled.

The .builtin_trusted_keys keyring in the example shows the addition of two keys from the UEFI Secure Boot db keys as well as the Red Hat Secure Boot (CA key 1), which is embedded in the shim boot loader.
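
As a hedged sketch, keyring listings of this kind can be produced with `keyctl list` and the `%:<keyring>` specifier. The helper names `keyring_spec` and `list_system_keyrings` are assumptions made for this example; the listing function needs root permissions and is only defined here, not called.

```shell
#!/bin/sh
# Build the keyctl specifier for a named keyring,
# for example ".platform" -> "%:.platform".
keyring_spec() {
    printf '%%:%s\n' "$1"
}

# List the keys on the three keyrings discussed in this chapter.
# Requires root and the keyctl utility from the keyutils package.
list_system_keyrings() {
    for ring in .builtin_trusted_keys .platform .blacklist; do
        echo "== $ring =="
        keyctl list "$(keyring_spec "$ring")"
    done
}
```

Run `list_system_keyrings` as root on the target system to print one listing per keyring.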

Example 3.2. Kernel console output

The following example shows the kernel console output. The messages identify the keys with a UEFI Secure Boot related source. These include UEFI Secure Boot db, embedded shim, and MOK list.

3.8. Enrolling public key on target system by adding the public key to the MOK list

You must authenticate your public key on a system for kernel or kernel module access and enroll it in the platform keyring (.platform) of the target system. When RHEL 8 boots on a UEFI-based system with Secure Boot enabled, the kernel imports public keys from the db key database and excludes revoked keys from the dbx database.

The Machine Owner Key (MOK) facility allows expanding the UEFI Secure Boot key database. When booting RHEL 8 on UEFI-enabled systems with Secure Boot enabled, keys on the MOK list are added to the platform keyring (.platform), along with the keys from the Secure Boot database. The list of MOK keys is stored securely and persistently in the same way, but it is a separate facility from the Secure Boot databases.

The MOK facility is supported by shim, MokManager, GRUB, and the mokutil utility that enables secure key management and authentication for UEFI-based systems.

To simplify authentication of your kernel module on your systems, consider asking your system vendor to incorporate your public key into the UEFI Secure Boot key database in their factory firmware image.

Prerequisites

- You have generated a public and private key pair and know the validity dates of your public keys. For details, see Generating a public and private key pair.

Procedure

- Export your public key to the sb_cert.cer file:

      # certutil -d /etc/pki/pesign \
          -n 'Custom Secure Boot key' \
          -Lr \
          > sb_cert.cer

- Import your public key into the MOK list:

      # mokutil --import sb_cert.cer

- Enter a new password for this MOK enrollment request.

- Reboot the machine.

  The shim boot loader notices the pending MOK key enrollment request and it launches MokManager.efi to enable you to complete the enrollment from the UEFI console.

- Choose Enroll MOK, enter the password you previously associated with this request when prompted, and confirm the enrollment.

  Your public key is added to the MOK list, which is persistent.

  Once a key is on the MOK list, it will be automatically propagated to the .platform keyring on this and subsequent boots when UEFI Secure Boot is enabled.

3.9. Signing a kernel with the private key

You can obtain enhanced security benefits on your system by loading a signed kernel if the UEFI Secure Boot mechanism is enabled.

Prerequisites

- You have generated a public and private key pair and know the validity dates of your public keys. For details, see Generating a public and private key pair.

- You have enrolled your public key on the target system. For details, see Enrolling public key on target system by adding the public key to the MOK list.

- You have a kernel image in the ELF format available for signing.

Procedure

- On the x64 architecture:

  - Create a signed image:

        # pesign --certificate 'Custom Secure Boot key' \
            --in vmlinuz-version \
            --sign \
            --out vmlinuz-version.signed

    Replace version with the version suffix of your vmlinuz file, and Custom Secure Boot key with the name that you chose earlier.

  - Optional: Check the signatures:

        # pesign --show-signature \
            --in vmlinuz-version.signed

  - Overwrite the unsigned image with the signed image:

        # mv vmlinuz-version.signed vmlinuz-version

- On the 64-bit ARM architecture:

  - Decompress the vmlinuz file:

        # zcat vmlinuz-version > vmlinux-version

  - Create a signed image:

        # pesign --certificate 'Custom Secure Boot key' \
            --in vmlinux-version \
            --sign \
            --out vmlinux-version.signed

  - Optional: Check the signatures:

        # pesign --show-signature \
            --in vmlinux-version.signed

  - Compress the vmlinux file:

        # gzip --to-stdout vmlinux-version.signed > vmlinuz-version

  - Remove the uncompressed vmlinux file:

        # rm vmlinux-version*

3.10. Signing a GRUB build with the private key

On a system where the UEFI Secure Boot mechanism is enabled, you can sign a GRUB build with a custom existing private key. You must do this if you are using a custom GRUB build, or if you have removed the Microsoft trust anchor from your system.

Prerequisites

- You have generated a public and private key pair and know the validity dates of your public keys. For details, see Generating a public and private key pair.

- You have enrolled your public key on the target system. For details, see Enrolling public key on target system by adding the public key to the MOK list.

- You have a GRUB EFI binary available for signing.

Procedure

- On the x64 architecture:

  - Create a signed GRUB EFI binary:

        # pesign --in /boot/efi/EFI/redhat/grubx64.efi \
            --out /boot/efi/EFI/redhat/grubx64.efi.signed \
            --certificate 'Custom Secure Boot key' \
            --sign

    Replace Custom Secure Boot key with the name that you chose earlier.

  - Optional: Check the signatures:

        # pesign --in /boot/efi/EFI/redhat/grubx64.efi.signed \
            --show-signature

  - Overwrite the unsigned binary with the signed binary:

        # mv /boot/efi/EFI/redhat/grubx64.efi.signed \
            /boot/efi/EFI/redhat/grubx64.efi

- On the 64-bit ARM architecture:

  - Create a signed GRUB EFI binary:

        # pesign --in /boot/efi/EFI/redhat/grubaa64.efi \
            --out /boot/efi/EFI/redhat/grubaa64.efi.signed \
            --certificate 'Custom Secure Boot key' \
            --sign

    Replace Custom Secure Boot key with the name that you chose earlier.

  - Optional: Check the signatures:

        # pesign --in /boot/efi/EFI/redhat/grubaa64.efi.signed \
            --show-signature

  - Overwrite the unsigned binary with the signed binary:

        # mv /boot/efi/EFI/redhat/grubaa64.efi.signed \
            /boot/efi/EFI/redhat/grubaa64.efi

3.11. Signing kernel modules with the private key

You can enhance the security of your system by loading signed kernel modules if the UEFI Secure Boot mechanism is enabled.

Your signed kernel module is also loadable on systems where UEFI Secure Boot is disabled or on a non-UEFI system. As a result, you do not need to provide both a signed and an unsigned version of your kernel module.

Prerequisites

- You have generated a public and private key pair and know the validity dates of your public keys. For details, see Generating a public and private key pair.

- You have enrolled your public key on the target system. For details, see Enrolling public key on target system by adding the public key to the MOK list.

- You have a kernel module in ELF image format available for signing.

Procedure

- Export your public key to the sb_cert.cer file:

      # certutil -d /etc/pki/pesign \
          -n 'Custom Secure Boot key' \
          -Lr \
          > sb_cert.cer

- Extract the key from the NSS database as a PKCS #12 file:

      # pk12util -o sb_cert.p12 \
          -n 'Custom Secure Boot key' \
          -d /etc/pki/pesign

- When the previous command prompts, enter a new password that encrypts the private key.

- Export the unencrypted private key:

      # openssl pkcs12 \
          -in sb_cert.p12 \
          -out sb_cert.priv \
          -nocerts \
          -nodes

  Important: Keep the unencrypted private key secure.

- Sign your kernel module. The following command appends the signature directly to the ELF image in your kernel module file:

      # /usr/src/kernels/$(uname -r)/scripts/sign-file \
          sha256 \
          sb_cert.priv \
          sb_cert.cer \
          my_module.ko

Your kernel module is now ready for loading.

In RHEL 8, the validity dates of the key pair matter. The key does not expire, but the kernel module must be signed within the validity period of its signing key. The sign-file utility will not warn you of this. For example, a key that is only valid in 2019 can be used to authenticate a kernel module signed in 2019 with that key. However, users cannot use that key to sign a kernel module in 2020.
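
Because sign-file does not warn about validity dates, you might want to compare the signing date against the key's validity window yourself. The following is a minimal sketch; the function name `signed_within_validity` and the YYYY-MM-DD input format are assumptions made for this example.

```shell
#!/bin/sh
# Return success if a signing date lies inside a key's validity window.
# Arguments are dates in YYYY-MM-DD form (hypothetical inputs; read the
# real dates from your own certificate):
#   $1 signing date, $2 start of validity, $3 end of validity
signed_within_validity() {
    # Strip the dashes so the dates compare as plain integers.
    sign_date=$(printf '%s' "$1" | tr -d '-')
    not_before=$(printf '%s' "$2" | tr -d '-')
    not_after=$(printf '%s' "$3" | tr -d '-')
    [ "$sign_date" -ge "$not_before" ] && [ "$sign_date" -le "$not_after" ]
}
```

With a key valid only in 2019, `signed_within_validity 2019-06-15 2019-01-01 2019-12-31` succeeds and `signed_within_validity 2020-02-01 2019-01-01 2019-12-31` fails, matching the example above. Assuming the exported sb_cert.cer file is DER-encoded, `openssl x509 -inform DER -in sb_cert.cer -noout -dates` is one way to print the validity dates.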

Verification

- Display information about the kernel module's signature:

      # modinfo my_module.ko | grep signer
        signer:         Your Name Key

  Check that the signature lists your name as entered during generation.

  Note: The appended signature is not contained in an ELF image section and is not a formal part of the ELF image. Therefore, utilities such as readelf cannot display the signature on your kernel module.

- Load the module:

      # insmod my_module.ko

- Remove (unload) the module:

      # rmmod my_module

3.12. Loading signed kernel modules

After enrolling your public key in the system keyring (.builtin_trusted_keys) and the MOK list, and signing kernel modules with your private key, you can load them using the modprobe command.

Prerequisites

- You have generated the public and private key pair. For details, see Generating a public and private key pair.

- You have enrolled the public key into the system keyring. For details, see Enrolling public key on target system by adding the public key to the MOK list.

- You have signed a kernel module with the private key. For details, see Signing kernel modules with the private key.

- Install the kernel-modules-extra package, which creates the /lib/modules/$(uname -r)/extra/ directory:

      # yum -y install kernel-modules-extra

Procedure

- Verify that your public keys are on the system keyring:

      # keyctl list %:.platform

- Copy the kernel module into the extra/ directory of the kernel that you want:

      # cp my_module.ko /lib/modules/$(uname -r)/extra/

- Update the modular dependency list:

      # depmod -a

- Load the kernel module:

      # modprobe -v my_module

- Optional: To load the module on boot, add it to the /etc/modules-load.d/my_module.conf file:

      # echo "my_module" > /etc/modules-load.d/my_module.conf

Verification

- Verify that the module was successfully loaded:

      # lsmod | grep my_module

Chapter 4. Configuring kernel command-line parameters

With kernel command-line parameters, you can change the behavior of certain aspects of the Red Hat Enterprise Linux kernel at boot time. As a system administrator, you control which options get set at boot. Note that certain kernel behaviors can only be set at boot time.

Changing the behavior of the system by modifying kernel command-line parameters can have negative effects on your system. Always test changes before deploying them in production. For further guidance, contact Red Hat Support.

4.1. What are kernel command-line parameters

With kernel command-line parameters, you can overwrite default values and set specific hardware settings. At boot time, you can configure the following features:

- The Red Hat Enterprise Linux kernel

- The initial RAM disk

- The user space features

By default, the kernel command-line parameters for systems using the GRUB boot loader are defined in the kernelopts variable of the /boot/grub2/grubenv file for each kernel boot entry.

For IBM Z, the kernel command-line parameters are stored in the boot entry configuration file because the zipl boot loader does not support environment variables. Thus, the kernelopts environment variable cannot be used.

You can manipulate boot loader configuration files by using the grubby utility. With grubby, you can perform these actions:

- Change the default boot entry.

- Add or remove arguments from a GRUB menu entry.

4.2. Understanding boot entries

A boot entry is a collection of options stored in a configuration file and tied to a particular kernel version. In practice, you have at least as many boot entries as your system has installed kernels. The boot entry configuration file is located in the /boot/loader/entries/ directory:

6f9cc9cb7d7845d49698c9537337cedc-4.18.0-5.el8.x86_64.conf


The file name above consists of a machine ID stored in the /etc/machine-id file, and a kernel version.

The boot entry configuration file contains information about the kernel version, the initial ramdisk image, and the kernelopts environment variable that contains the kernel command-line parameters. The configuration file can have the following contents:

The kernelopts environment variable is defined in the /boot/grub2/grubenv file.
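
To make the naming scheme concrete, the following sketch splits a boot entry file name into its two parts and prints the value of the entry's options line. The function names are hypothetical, and the parser assumes each line of the entry file has the form of a key followed by whitespace and a value.

```shell
#!/bin/sh
# Print the machine ID part of a boot entry file name.
# Assumes the "<machine-id>-<kernel-version>.conf" layout described above.
entry_machine_id() {
    name=$(basename "$1" .conf)
    printf '%s\n' "${name%%-*}"      # everything before the first dash
}

# Print the kernel version part of a boot entry file name.
entry_kernel_version() {
    name=$(basename "$1" .conf)
    printf '%s\n' "${name#*-}"       # everything after the first dash
}

# Print the value of the "options" line of a boot entry file,
# for example the literal string $kernelopts.
entry_options() {
    awk '$1 == "options" { sub(/^[ \t]*options[ \t]+/, ""); print }' "$1"
}
```

For the file name shown above, `entry_kernel_version` prints 4.18.0-5.el8.x86_64.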

4.3. Changing kernel command-line parameters for all boot entries

Change kernel command-line parameters for all boot entries on your system.

Prerequisites

- grubby utility is installed on your system.

- zipl utility is installed on your IBM Z system.

Procedure

- To add a parameter:

      # grubby --update-kernel=ALL --args="<NEW_PARAMETER>"

  For systems that use the GRUB boot loader, the command updates the /boot/grub2/grubenv file by adding a new kernel parameter to the kernelopts variable in that file.

  - On IBM Z, update the boot menu:

        # zipl

- To remove a parameter:

      # grubby --update-kernel=ALL --remove-args="<PARAMETER_TO_REMOVE>"

  - On IBM Z, update the boot menu:

        # zipl

Newly installed kernels inherit the kernel command-line parameters from your previously configured kernels.
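
After the next boot, you might want to confirm that a parameter actually reached the running kernel. The following is a minimal sketch that checks a command line string, by default the contents of /proc/cmdline; the function name `cmdline_has` is an assumption made for this example.

```shell
#!/bin/sh
# Return success if a kernel command line contains the given parameter,
# either as a bare flag ("quiet") or as a key with a value ("root=...").
#   $1 parameter name
#   $2 command line to inspect (defaults to the running kernel's)
cmdline_has() {
    param=$1
    cmdline=${2-$(cat /proc/cmdline)}
    # Unquoted on purpose: split the command line on whitespace.
    for word in $cmdline; do
        case $word in
            "$param" | "$param"=*) return 0 ;;
        esac
    done
    return 1
}
```

For example, `cmdline_has quiet && echo "quiet is set"` inspects the running kernel, while a second argument lets you test an arbitrary string.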

4.4. Changing kernel command-line parameters for a single boot entry

Make changes in kernel command-line parameters for a single boot entry on your system.

Prerequisites

- grubby and zipl utilities are installed on your system.

Procedure

- To add a parameter:

      # grubby --update-kernel=/boot/vmlinuz-$(uname -r) --args="<NEW_PARAMETER>"

  - On IBM Z, update the boot menu:

        # grubby --args="<NEW_PARAMETER>" --update-kernel=ALL --zipl

- To remove a parameter:

      # grubby --update-kernel=/boot/vmlinuz-$(uname -r) --remove-args="<PARAMETER_TO_REMOVE>"

  - On IBM Z, update the boot menu:

        # grubby --remove-args="<PARAMETER_TO_REMOVE>" --update-kernel=ALL --zipl

On systems that use the grub.cfg file, there is, by default, the options parameter for each kernel boot entry, which is set to the kernelopts variable. This variable is defined in the /boot/grub2/grubenv configuration file.

On GRUB systems:

- If the kernel command-line parameters are modified for all boot entries, the grubby utility updates the kernelopts variable in the /boot/grub2/grubenv file.

- If kernel command-line parameters are modified for a single boot entry, the kernelopts variable is expanded, the kernel parameters are modified, and the resulting value is stored in the respective boot entry's /boot/loader/entries/<RELEVANT_KERNEL_BOOT_ENTRY.conf> file.

On zIPL systems:

- grubby modifies and stores the kernel command-line parameters of an individual kernel boot entry in the /boot/loader/entries/<ENTRY>.conf file.
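
The expansion step described for a single GRUB boot entry can be pictured with the following sketch. It only illustrates the idea of replacing the $kernelopts reference with its value before the entry is stored; it is not grubby's implementation, and the function name `expand_kernelopts` is hypothetical.

```shell
#!/bin/sh
# Replace the first literal "$kernelopts" reference in an options string
# with the supplied value; print the string unchanged if there is none.
#   $1 options string from a boot entry, for example '$kernelopts debug'
#   $2 current value of the kernelopts variable
expand_kernelopts() {
    opts=$1
    value=$2
    case $opts in
        *'$kernelopts'*)
            # Text before the reference, the value, then the text after it.
            printf '%s%s%s\n' "${opts%%\$kernelopts*}" "$value" "${opts#*\$kernelopts}"
            ;;
        *)
            printf '%s\n' "$opts"
            ;;
    esac
}
```

After such an expansion, the entry no longer follows later changes to the shared kernelopts variable, which is why modifying a single entry stores the full parameter list in that entry's file.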

4.5. Changing kernel command-line parameters temporarily at boot time

Make temporary changes to a kernel menu entry by changing the kernel parameters only during a single boot process.

This procedure applies only for a single boot and does not persistently make the changes.

Procedure

- Boot into the GRUB boot menu.

- Select the kernel you want to start.

- Press the e key to edit the kernel parameters.

-

Find the kernel command line by moving the cursor down. The kernel command line starts with

linuxon 64-Bit IBM Power Series and x86-64 BIOS-based systems, orlinuxefion UEFI systems. Move the cursor to the end of the line.

NotePress Ctrl+a to jump to the start of the line and Ctrl+e to jump to the end of the line. On some systems, Home and End keys might also work.

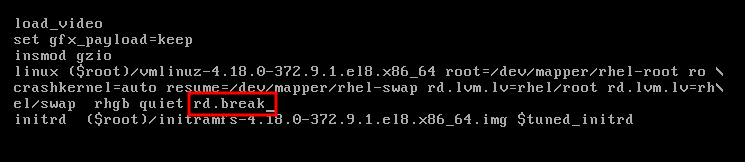

Edit the kernel parameters as required. For example, to run the system in emergency mode, add the

emergencyparameter at the end of thelinuxline:linux ($root)/vmlinuz-4.18.0-348.12.2.el8_5.x86_64 root=/dev/mapper/rhel-root ro crashkernel=auto resume=/dev/mapper/rhel-swap rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap rhgb quiet emergency

linux ($root)/vmlinuz-4.18.0-348.12.2.el8_5.x86_64 root=/dev/mapper/rhel-root ro crashkernel=auto resume=/dev/mapper/rhel-swap rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap rhgb quiet emergencyCopy to Clipboard Copied! Toggle word wrap Toggle overflow To enable the system messages, remove the

rhgbandquietparameters.- Press Ctrl+x to boot with the selected kernel and the modified command line parameters.

If you press the Esc key to leave command line editing, it will drop all the user made changes.

4.6. Configuring GRUB settings to enable serial console connection

The serial console is beneficial when you need to connect to a headless server or an embedded system and the network is down, or when you need to avoid security rules and obtain login access on a different system.

You need to configure some default GRUB settings to use the serial console connection.

Prerequisites

- You have root permissions.

Procedure

- Add the following two lines to the /etc/default/grub file:

      GRUB_TERMINAL="serial"
      GRUB_SERIAL_COMMAND="serial --speed=9600 --unit=0 --word=8 --parity=no --stop=1"

  The first line disables the graphical terminal. The GRUB_TERMINAL key overrides values of GRUB_TERMINAL_INPUT and GRUB_TERMINAL_OUTPUT keys.

  The second line adjusts the baud rate (--speed), parity and other values to fit your environment and hardware. Note that a much higher baud rate, for example 115200, is preferable for tasks such as following log files.

- Update the GRUB configuration file.

  - On BIOS-based machines:

        # grub2-mkconfig -o /boot/grub2/grub.cfg

  - On UEFI-based machines:

        # grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

- Reboot the system for the changes to take effect.
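
If you manage several machines with different serial settings, a small helper can generate the second configuration line for a given baud rate. This is only a sketch; the function name `grub_serial_command` is hypothetical, and it varies just the speed and unit while keeping the other values from the procedure above.

```shell
#!/bin/sh
# Print a GRUB_SERIAL_COMMAND line for /etc/default/grub.
#   $1 baud rate (default 9600)
#   $2 serial unit number (default 0)
grub_serial_command() {
    speed=${1:-9600}
    unit=${2:-0}
    printf 'GRUB_SERIAL_COMMAND="serial --speed=%s --unit=%s --word=8 --parity=no --stop=1"\n' \
        "$speed" "$unit"
}
```

For example, `grub_serial_command 115200` prints the line with the higher baud rate mentioned above; add the output to /etc/default/grub and regenerate the GRUB configuration as described.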

Chapter 5. Configuring kernel parameters at runtime

As a system administrator, you can modify many facets of the Red Hat Enterprise Linux kernel’s behavior at runtime. Configure kernel parameters at runtime by using the sysctl command and by modifying the configuration files in the /etc/sysctl.d/ and /proc/sys/ directories.

Configuring kernel parameters on a production system requires careful planning. Unplanned changes can render the kernel unstable, requiring a system reboot. Verify that you are using valid options before changing any kernel values.

For more information about tuning kernel on IBM DB2, see Tuning Red Hat Enterprise Linux for IBM DB2.

5.1. What are kernel parameters

Kernel parameters are tunable values that you can adjust while the system is running. Note that for changes to take effect, you do not need to reboot the system or recompile the kernel.

It is possible to address the kernel parameters through:

- The sysctl command

- The virtual file system mounted at the /proc/sys/ directory

- The configuration files in the /etc/sysctl.d/ directory

Tunables are divided into classes by the kernel subsystem. Red Hat Enterprise Linux has the following tunable classes:

| Tunable class | Subsystem |
|---|---|
| abi | Execution domains and personalities |
| crypto | Cryptographic interfaces |
| debug | Kernel debugging interfaces |
| dev | Device-specific information |
| fs | Global and specific file system tunables |
| kernel | Global kernel tunables |
| net | Network tunables |
| sunrpc | Sun Remote Procedure Call (NFS) |
| user | User Namespace limits |
| vm | Tuning and management of memory, buffers, and cache |
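
The sysctl command and the /proc/sys/ directory address the same tunables: a dotted name of the form class.parameter corresponds to a file path under /proc/sys/. The following sketch shows the mapping; the function name `sysctl_to_proc_path` is hypothetical, and the sketch ignores the corner case of names that themselves contain a literal dot.

```shell
#!/bin/sh
# Convert a dotted sysctl name into the matching /proc/sys/ path,
# for example "net.ipv4.ip_forward" -> "/proc/sys/net/ipv4/ip_forward".
sysctl_to_proc_path() {
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}
```

For example, `cat "$(sysctl_to_proc_path kernel.hostname)"` reads the same value that `sysctl kernel.hostname` reports.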

5.2. Configuring kernel parameters temporarily with sysctl

Use the sysctl command to temporarily set kernel parameters at runtime. The command is also useful for listing and filtering tunables.

Prerequisites

- Root permissions

Procedure

- List all parameters and their values:

      # sysctl -a

  Note: The sysctl -a command displays kernel parameters, which can be adjusted at runtime and at boot time.

- To configure a parameter temporarily, enter:

      # sysctl <TUNABLE_CLASS>.<PARAMETER>=<TARGET_VALUE>

  The sample command above changes the parameter value while the system is running. The changes take effect immediately, without a need for restart.

  Note: The changes revert to the defaults after your system reboots.

5.3. Configuring kernel parameters permanently with sysctl

Use the sysctl command to permanently set kernel parameters.

Prerequisites

- Root permissions

Procedure

- List all parameters:

      # sysctl -a

  The command displays all kernel parameters that can be configured at runtime.

- Configure a parameter permanently:

      # sysctl -w <TUNABLE_CLASS>.<PARAMETER>=<TARGET_VALUE> >> /etc/sysctl.conf

  The sample command changes the tunable value and writes it to the /etc/sysctl.conf file, which overrides the default values of kernel parameters. The changes take effect immediately and persistently, without a need for restart.

To permanently modify kernel parameters, you can also make manual changes to the configuration files in the /etc/sysctl.d/ directory.

5.4. Using configuration files in /etc/sysctl.d/ to adjust kernel parameters

You must modify the configuration files in the /etc/sysctl.d/ directory manually to permanently set kernel parameters.

Prerequisites

- You have root permissions.

Procedure

- Create a new configuration file in /etc/sysctl.d/:

      # vim /etc/sysctl.d/<some_file.conf>

- Include kernel parameters, one per line:

      <TUNABLE_CLASS>.<PARAMETER>=<TARGET_VALUE>
      <TUNABLE_CLASS>.<PARAMETER>=<TARGET_VALUE>

- Save the configuration file.

- Reboot the machine for the changes to take effect.

  Alternatively, apply changes without rebooting:

      # sysctl -p /etc/sysctl.d/<some_file.conf>

  The command enables you to read values from the configuration file, which you created earlier.
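
The manual editing step can also be scripted. The following sketch appends one setting to a configuration file in a given directory; the function name `write_sysctl_dropin`, the file name 99-example.conf, and the value 10 for vm.swappiness are all assumptions chosen for illustration.

```shell
#!/bin/sh
# Append one "key = value" line to a sysctl configuration file.
#   $1 target directory, for example /etc/sysctl.d
#   $2 file name, for example 99-example.conf (hypothetical)
#   $3 tunable name, for example vm.swappiness
#   $4 target value
write_sysctl_dropin() {
    dir=$1 file=$2 key=$3 value=$4
    printf '%s = %s\n' "$key" "$value" >> "$dir/$file"
}
```

As root, `write_sysctl_dropin /etc/sysctl.d 99-example.conf vm.swappiness 10` followed by `sysctl -p /etc/sysctl.d/99-example.conf` would create the file and apply it without a reboot.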

5.5. Configuring kernel parameters temporarily through /proc/sys/

Set kernel parameters temporarily through the files in the /proc/sys/ virtual file system directory.

Prerequisites

- Root permissions

Procedure

- Identify a kernel parameter you want to configure:

      # ls -l /proc/sys/<TUNABLE_CLASS>/

  The writable files returned by the command can be used to configure the kernel. The files with read-only permissions provide feedback on the current settings.

- Assign a target value to the kernel parameter:

      # echo <TARGET_VALUE> > /proc/sys/<TUNABLE_CLASS>/<PARAMETER>

  The configuration changes applied by using a command are not permanent and will disappear once the system is restarted.

Verification

- Verify the value of the newly set kernel parameter.

# cat /proc/sys/<TUNABLE_CLASS>/<PARAMETER>
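The set and verify steps above can be combined in a small script. The following is a minimal sketch, not a supported tool: the function names proc_sys_path and set_and_verify are hypothetical, and the sketch assumes a single-value parameter whose name components contain no dots of their own.

```shell
# Hypothetical helper: map a dotted parameter name to its file under /proc/sys.
# $1: name such as vm.swappiness, $2: optional root directory (default /proc/sys).
# Assumes that no component of the name contains a dot itself.
proc_sys_path() {
    printf '%s/%s\n' "${2:-/proc/sys}" "$(printf '%s' "$1" | tr '.' '/')"
}

# Hypothetical helper: write a value, then read it back to confirm it was accepted.
# $1: parameter name, $2: target value, $3: optional root directory.
set_and_verify() {
    path=$(proc_sys_path "$1" "$3")
    printf '%s\n' "$2" > "$path" || return 1
    [ "$(cat "$path")" = "$2" ]
}

# Example usage (as root); the parameter and value are illustrative only:
#   set_and_verify vm.swappiness 10
```

As with the echo command in the procedure, a value set this way does not survive a reboot.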

Chapter 7. Making persistent changes to the GRUB boot loader

Use the grubby tool to make persistent changes in GRUB.

7.1. Prerequisites

- You have successfully installed RHEL on your system.

- You have root permission.

7.2. Listing the default kernel

By listing the default kernel, you can find the file name and the index number of the default kernel to make permanent changes to the GRUB boot loader.

Procedure

- To get the file name of the default kernel, enter:

# grubby --default-kernel
/boot/vmlinuz-4.18.0-372.9.1.el8.x86_64

- To get the index number of the default kernel, enter:

# grubby --default-index
0

7.4. Editing a Kernel Argument

You can change a value in an existing kernel argument. For example, you can change the virtual console (screen) font and size.

Procedure

- Change the virtual console font to latarcyrheb-sun with the size of 32:

# grubby --args=vconsole.font=latarcyrheb-sun32 --update-kernel /boot/vmlinuz-4.18.0-372.9.1.el8.x86_64
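After you reboot into the updated kernel, one way to confirm that an argument is active is to look for it on the running kernel's command line in /proc/cmdline. The following is a minimal sketch; the function name has_kernel_arg is hypothetical, and the check matches whole space-separated arguments only.

```shell
# Hypothetical helper: succeed if an argument appears on a kernel command line.
# $1: argument to look for, $2: command line (default: contents of /proc/cmdline).
has_kernel_arg() {
    cmdline=${2:-$(cat /proc/cmdline)}
    # Pad with spaces so that only complete arguments match
    case " $cmdline " in
        *" $1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example usage after rebooting into the updated kernel:
#   has_kernel_arg vconsole.font=latarcyrheb-sun32 && echo "argument is active"
```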

7.6. Adding a new boot entry

You can add a new boot entry to the boot loader menu entries.

Procedure

- Copy all the kernel arguments from your default kernel to this new kernel entry:

# grubby --add-kernel=new_kernel --title="entry_title" --initrd="new_initrd" --copy-default

- Get the list of available boot entries:

# ls -l /boot/loader/entries/*
-rw-r--r--. 1 root root 408 May 27 06:18 /boot/loader/entries/67db13ba8cdb420794ef3ee0a8313205-0-rescue.conf
-rw-r--r--. 1 root root 536 Jun 30 07:53 /boot/loader/entries/67db13ba8cdb420794ef3ee0a8313205-4.18.0-372.9.1.el8.x86_64.conf
-rw-r--r-- 1 root root 336 Aug 15 15:12 /boot/loader/entries/d88fa2c7ff574ae782ec8c4288de4e85-4.18.0-193.el8.x86_64.conf

- Create a new boot entry. For example, for the 4.18.0-193.el8.x86_64 kernel, issue the command as follows:

# grubby --grub2 --add-kernel=/boot/vmlinuz-4.18.0-193.el8.x86_64 --title="Red Hat Enterprise 8 Test" --initrd=/boot/initramfs-4.18.0-193.el8.x86_64.img --copy-default

Verification

- Verify that the newly added boot entry is listed among the available boot entries:

# ls -l /boot/loader/entries/*
-rw-r--r--. 1 root root 408 May 27 06:18 /boot/loader/entries/67db13ba8cdb420794ef3ee0a8313205-0-rescue.conf
-rw-r--r--. 1 root root 536 Jun 30 07:53 /boot/loader/entries/67db13ba8cdb420794ef3ee0a8313205-4.18.0-372.9.1.el8.x86_64.conf
-rw-r--r-- 1 root root 287 Aug 16 15:17 /boot/loader/entries/d88fa2c7ff574ae782ec8c4288de4e85-4.18.0-193.el8.x86_64.0~custom.conf
-rw-r--r-- 1 root root 287 Aug 16 15:29 /boot/loader/entries/d88fa2c7ff574ae782ec8c4288de4e85-4.18.0-193.el8.x86_64.conf
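The file names alone do not show the title you gave the new entry. As a complement to the listing above, the following sketch prints the title line stored in each entry file. The function name list_entry_titles is hypothetical, and the sketch assumes that each entry file contains a line beginning with the word title.

```shell
# Hypothetical helper: print "<file name>: <title>" for each boot entry file.
# $1: optional entries directory (default /boot/loader/entries).
list_entry_titles() {
    for f in "${1:-/boot/loader/entries}"/*.conf; do
        # Skip the unexpanded pattern when the directory has no .conf files
        [ -f "$f" ] || continue
        title=$(sed -n 's/^title[[:space:]]\{1,\}//p' "$f")
        printf '%s: %s\n' "${f##*/}" "$title"
    done
}

# Example usage (as root):
#   list_entry_titles
```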

7.7. Changing the default boot entry with grubby

With the grubby tool, you can change the default boot entry.

Procedure

- To make a persistent change in the kernel designated as the default kernel, enter:

# grubby --set-default /boot/vmlinuz-4.18.0-372.9.1.el8.x86_64
The default is /boot/loader/entries/67db13ba8cdb420794ef3ee0a8313205-4.18.0-372.9.1.el8.x86_64.conf with index 0 and kernel /boot/vmlinuz-4.18.0-372.9.1.el8.x86_64

7.9. Changing default kernel options for current and future kernels

By using the kernelopts variable, you can change the default kernel options for both current and future kernels.

Procedure

- List the kernel parameters from the kernelopts variable:

# grub2-editenv - list | grep kernelopts
kernelopts=root=/dev/mapper/rhel-root ro crashkernel=auto resume=/dev/mapper/rhel-swap rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap rhgb quiet

- Make the changes to the kernel command-line parameters. You can add, remove, or modify a parameter. For example, to add the debug parameter, enter:

# grub2-editenv - set "$(grub2-editenv - list | grep kernelopts) debug"

- Optional: Verify the parameter newly added to kernelopts:

# grub2-editenv - list | grep kernelopts
kernelopts=root=/dev/mapper/rhel-root ro crashkernel=auto resume=/dev/mapper/rhel-swap rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap rhgb quiet debug

- Reboot the system for the changes to take effect.
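Because the command in the procedure appends text to the existing kernelopts line, running it a second time adds the parameter again. The following sketch shows one way to guard against that by appending only when the parameter is missing. The function name add_kernelopt is hypothetical, and the sketch handles only the string; it does not call grub2-editenv itself.

```shell
# Hypothetical helper: append a parameter to a "kernelopts=..." line
# unless it is already present as a complete, space-separated word.
# $1: current "kernelopts=..." line, $2: parameter to add.
add_kernelopt() {
    case " ${1#kernelopts=} " in
        *" $2 "*) printf '%s\n' "$1" ;;        # already present: leave unchanged
        *)        printf '%s %s\n' "$1" "$2" ;; # missing: append it
    esac
}

# Example usage (as root):
#   grub2-editenv - set "$(add_kernelopt "$(grub2-editenv - list | grep kernelopts)" debug)"
```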

As an alternative, you can use the grubby command to pass the arguments to current and future kernels:

# grubby --update-kernel ALL --args="<PARAMETER>"

Chapter 9. Reinstalling GRUB

You can reinstall the GRUB boot loader to fix certain problems, usually caused by an incorrect installation of GRUB, missing files, or a broken system. You can resolve these issues by restoring the missing files and updating the boot information.

Reasons to reinstall GRUB:

- Upgrading the GRUB boot loader packages.

- Adding the boot information to another drive.

- The user requires the GRUB boot loader to control installed operating systems. However, some operating systems are installed with their own boot loaders and reinstalling GRUB returns control to the desired operating system.

GRUB restores files only if they are not corrupted.

9.1. Reinstalling GRUB on BIOS-based machines

You can reinstall the GRUB boot loader on your BIOS-based system. Always reinstall GRUB after updating the GRUB packages.

This overwrites the existing GRUB to install the new GRUB. Ensure that the system does not cause data corruption or boot crash during the installation.

Procedure

- Reinstall GRUB on the device where it is installed. For example, if sda is your device:

# grub2-install /dev/sda

- Reboot your system for the changes to take effect:

# reboot

9.2. Reinstalling GRUB on UEFI-based machines

You can reinstall the GRUB boot loader on your UEFI-based system.

Ensure that the system does not cause data corruption or boot crash during the installation.

Procedure

- Reinstall the grub2-efi and shim boot loader files:

# yum reinstall grub2-efi shim

- Reboot your system for the changes to take effect:

# reboot

9.3. Reinstalling GRUB on IBM Power machines

You can reinstall the GRUB boot loader on the Power PC Reference Platform (PReP) boot partition of your IBM Power system. Always reinstall GRUB after updating the GRUB packages.

This overwrites the existing GRUB to install the new GRUB. Ensure that the system does not cause data corruption or boot crash during the installation.

Procedure

- Determine the disk partition that stores GRUB:

# bootlist -m normal -o
sda1

- Reinstall GRUB on the disk partition:

# grub2-install partition

Replace partition with the identified GRUB partition, such as /dev/sda1.

- Reboot your system for the changes to take effect:

# reboot

9.4. Resetting GRUB

Resetting GRUB completely removes all GRUB configuration files and system settings, and reinstalls the boot loader. You can reset all the configuration settings to their default values, and therefore fix failures caused by corrupted files and invalid configuration.

The following procedure will remove all the customization made by the user.

Procedure

- Remove the configuration files:

# rm /etc/grub.d/*
# rm /etc/sysconfig/grub

- Reinstall packages.

On BIOS-based machines:

# yum reinstall grub2-tools

On UEFI-based machines:

# yum reinstall grub2-efi shim grub2-tools grub2-common

- Rebuild the grub.cfg file for the changes to take effect.

On BIOS-based machines:

# grub2-mkconfig -o /boot/grub2/grub.cfg

On UEFI-based machines:

# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

- Follow the Reinstalling GRUB procedure to restore GRUB on the /boot/ partition.
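The procedure above uses a different grub.cfg location on BIOS-based and UEFI-based machines. The following sketch selects the path by checking for the /sys/firmware/efi directory, which the kernel exposes only when the system was booted through UEFI. The function name grub_cfg_path is hypothetical; the two paths are the ones used in the procedure.

```shell
# Hypothetical helper: print the grub.cfg location that matches the firmware type.
# $1: optional directory to test for UEFI (default /sys/firmware/efi).
grub_cfg_path() {
    if [ -d "${1:-/sys/firmware/efi}" ]; then
        echo /boot/efi/EFI/redhat/grub.cfg   # UEFI-based machine
    else
        echo /boot/grub2/grub.cfg            # BIOS-based machine
    fi
}

# Example usage (as root):
#   grub2-mkconfig -o "$(grub_cfg_path)"
```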

Chapter 10. Protecting GRUB with a password

You can protect GRUB with a password in two ways:

- Password is required for modifying menu entries but not for booting existing menu entries.

- Password is required for modifying menu entries and for booting existing menu entries.

Chapter 11. Keeping kernel panic parameters disabled in virtualized environments

When configuring a virtual machine in RHEL 8, do not enable the softlockup_panic and nmi_watchdog kernel parameters, because the virtual machine might suffer from a spurious soft lockup, which should not require a kernel panic.

Find the reasons behind this advice in the following sections.
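To see how a running virtual machine is currently configured, you can read both parameters from the /proc/sys/kernel/ directory. The following is a minimal sketch; the function name check_lockup_params is hypothetical, and a parameter that cannot be read is reported as unavailable and treated as not confirmed disabled.

```shell
# Hypothetical helper: print both parameters and succeed only if both are 0.
# $1: optional root directory (default /proc/sys).
check_lockup_params() {
    root=${1:-/proc/sys}
    status=0
    for p in softlockup_panic nmi_watchdog; do
        # Fall back to a marker value when the file is missing or unreadable
        value=$(cat "$root/kernel/$p" 2>/dev/null) || value=unavailable
        printf 'kernel.%s = %s\n' "$p" "$value"
        [ "$value" = 0 ] || status=1
    done
    return $status
}

# Example usage:
#   check_lockup_params || echo "at least one parameter is not confirmed disabled"
```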

11.1. What is a soft lockup

A soft lockup is a situation usually caused by a bug, when a task is executing in kernel space on a CPU without rescheduling. The task also does not allow any other task to execute on that particular CPU. As a result, a warning is displayed to a user through the system console. This problem is also referred to as the soft lockup firing.

11.2. Parameters controlling kernel panic

The following kernel parameters can be set to control a system’s behavior when a soft lockup is detected.

softlockup_panic

Controls whether or not the kernel will panic when a soft lockup is detected.

Type     Value  Effect
Integer  0      kernel does not panic on soft lockup
Integer  1      kernel panics on soft lockup

By default, on RHEL 8, this value is 0.

The system needs to detect a hard lockup first to be able to panic. The detection is controlled by the nmi_watchdog parameter.

nmi_watchdog

Controls whether lockup detection mechanisms (watchdogs) are active or not. This parameter is of integer type.

Value  Effect
0      disables lockup detector
1      enables lockup detector

The hard lockup detector monitors each CPU for its ability to respond to interrupts.