Chapter 32. Tuning scheduling policy

In Red Hat Enterprise Linux, the smallest unit of process execution is called a thread. The system scheduler determines which processor runs a thread, and for how long the thread runs. However, because the scheduler’s primary concern is to keep the system busy, it may not schedule threads optimally for application performance.

For example, say an application on a NUMA system is running on Node A when a processor on Node B becomes available. To keep the processor on Node B busy, the scheduler moves one of the application’s threads to Node B. However, the application thread still requires access to memory on Node A, and this memory now takes longer to access because the thread is running on Node B and Node A memory is no longer local to it. Thus, it may take longer for the thread to finish running on Node B than it would have taken to wait for a processor on Node A to become available, and then to execute the thread on the original node with local memory access.

32.1. Categories of scheduling policies

Performance-sensitive applications often benefit from the designer or administrator determining where threads are run. The Linux scheduler implements a number of scheduling policies that determine where and for how long a thread runs.

The following are the two major categories of scheduling policies:

- Normal policies

Normal threads are used for tasks of normal priority.

- Realtime policies

Realtime policies are used for time-sensitive tasks that must complete without interruptions. Realtime threads are not subject to time slicing. This means that a realtime thread runs until it blocks, exits, voluntarily yields, or is preempted by a higher priority thread.

The lowest priority realtime thread is scheduled before any thread with a normal policy. For more information, see Static priority scheduling with SCHED_FIFO and Round robin priority scheduling with SCHED_RR.

Additional resources

- sched(7), sched_setaffinity(2), sched_getaffinity(2), sched_setscheduler(2), and sched_getscheduler(2) man pages on your system

32.2. Static priority scheduling with SCHED_FIFO

SCHED_FIFO, also called static priority scheduling, is a realtime policy that defines a fixed priority for each thread. This policy allows administrators to improve event response time and reduce latency. Red Hat recommends not running time-sensitive tasks under this policy for an extended period of time.

When SCHED_FIFO is in use, the scheduler scans the list of all the SCHED_FIFO threads in order of priority and schedules the highest priority thread that is ready to run. The priority level of a SCHED_FIFO thread can be any integer from 1 to 99, where 99 is treated as the highest priority. Red Hat recommends starting with a lower number and increasing priority only when you identify latency issues.

Because realtime threads are not subject to time slicing, Red Hat does not recommend setting a priority of 99. A priority of 99 places your process at the same priority level as migration and watchdog threads; if your thread goes into a computational loop and these threads are blocked, they cannot run. Systems with a single processor will eventually hang in this situation.

Administrators can limit SCHED_FIFO bandwidth to prevent realtime application programmers from initiating realtime tasks that monopolize the processor.

The following are some of the parameters used in this policy:

- /proc/sys/kernel/sched_rt_period_us

This parameter defines the time period, in microseconds, that is considered to be one hundred percent of the processor bandwidth. The default value is 1000000 μs, or 1 second.

- /proc/sys/kernel/sched_rt_runtime_us

This parameter defines the time period, in microseconds, that is devoted to running real-time threads. The default value is 950000 μs, or 0.95 seconds.
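The relationship between the two parameters can be sketched as simple arithmetic. The following is a minimal illustration, not part of any Red Hat tooling; the function name rt_bandwidth_percent is hypothetical, and the values passed to it are the defaults quoted above.

```shell
# Hypothetical helper: share of each period that realtime threads may use.
# $1 = runtime in microseconds (sched_rt_runtime_us)
# $2 = period in microseconds  (sched_rt_period_us)
rt_bandwidth_percent() {
    runtime_us=$1
    period_us=$2
    # Integer arithmetic: runtime as a percentage of the period.
    echo $(( runtime_us * 100 / period_us ))
}

# With the default values, realtime threads may use 95 percent of each
# second, which leaves 5 percent for threads with a normal policy.
rt_bandwidth_percent 950000 1000000
```

With the defaults, this leaves 50000 μs of every second available to non-realtime threads, even if a realtime thread never blocks.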

32.3. Round robin priority scheduling with SCHED_RR

SCHED_RR is a round-robin variant of SCHED_FIFO. This policy is useful when multiple threads need to run at the same priority level.

Like SCHED_FIFO, SCHED_RR is a realtime policy that defines a fixed priority for each thread. The scheduler scans the list of all SCHED_RR threads in order of priority and schedules the highest priority thread that is ready to run. However, unlike SCHED_FIFO, threads that have the same priority are scheduled in a round-robin style within a certain time slice.

You can set the value of this time slice in milliseconds with the sched_rr_timeslice_ms kernel parameter in the /proc/sys/kernel/sched_rr_timeslice_ms file. The lowest value is 1 millisecond.
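To get a feel for what the time slice means in practice, the following sketch estimates how long a SCHED_RR thread might wait for its next turn. It assumes that every thread at the same priority uses its full time slice and that no higher priority thread preempts them; the function name rr_worst_case_wait_ms is hypothetical.

```shell
# Hypothetical helper: estimated wait before a SCHED_RR thread runs again.
# $1 = number of runnable threads sharing one priority level
# $2 = time slice in milliseconds
rr_worst_case_wait_ms() {
    threads=$1
    timeslice_ms=$2
    # Each of the other (threads - 1) threads may consume one full slice.
    echo $(( (threads - 1) * timeslice_ms ))
}

# Four threads at the same priority, assuming a 100 ms time slice:
rr_worst_case_wait_ms 4 100
```

On a running system, the second argument could be taken from the parameter file, for example `rr_worst_case_wait_ms 4 "$(cat /proc/sys/kernel/sched_rr_timeslice_ms)"`.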

32.4. Normal scheduling with SCHED_OTHER

SCHED_OTHER is the default scheduling policy in Red Hat Enterprise Linux 8. This policy uses the Completely Fair Scheduler (CFS) to allow fair processor access to all threads scheduled with this policy. This policy is most useful when there are a large number of threads or when data throughput is a priority, as it allows more efficient scheduling of threads over time.

When this policy is in use, the scheduler creates a dynamic priority list based partly on the niceness value of each process thread. Administrators can change the niceness value of a process, but cannot change the scheduler’s dynamic priority list directly.
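One common way to change the niceness value is the nice command. The sketch below wraps it in a small function; the wrapper name run_with_niceness is hypothetical and exists only for illustration. Note that nice -n takes an adjustment relative to the current niceness, not an absolute value.

```shell
# Hypothetical wrapper: start a command with an increased niceness value.
# $1 = niceness adjustment (a positive number lowers the scheduling weight)
# remaining arguments = the command to run
run_with_niceness() {
    increment=$1
    shift
    nice -n "$increment" "$@"
}

# Running nice with no arguments prints the niceness it was started with,
# so this shows the value the child process actually received.
run_with_niceness 5 nice
```

The scheduler still decides the dynamic priority; the niceness value is only one input to that decision.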

32.5. Setting scheduler policies

Check and adjust scheduler policies and priorities by using the chrt command line tool. It can start new processes with the desired properties, or change the properties of a running process. It can also be used for setting the policy at runtime.

Procedure

View the process ID (PID) of the active processes:

# ps

Use the --pid or -p option with the ps command to view the details of a particular PID.

Check the scheduling policy, PID, and priority of a particular process:

# chrt -p 468
pid 468's current scheduling policy: SCHED_FIFO
pid 468's current scheduling priority: 85

# chrt -p 476
pid 476's current scheduling policy: SCHED_OTHER
pid 476's current scheduling priority: 0

Here, 468 and 476 are the PIDs of processes.

Set the scheduling policy of a process:

For example, to set the process with PID 1000 to SCHED_FIFO, with a priority of 50:

# chrt -f -p 50 1000

For example, to set the process with PID 1000 to SCHED_OTHER, with a priority of 0:

# chrt -o -p 0 1000

For example, to set the process with PID 1000 to SCHED_RR, with a priority of 10:

# chrt -r -p 10 1000

To start a new application with a particular policy and priority, specify the name of the application:

# chrt -f 36 /bin/my-app
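Because SCHED_OTHER only accepts a priority of 0 and the realtime policies accept 1 to 99, a script might validate its input before calling chrt. The following is a minimal sketch under that assumption; the function name chrt_command is hypothetical, and it only prints the command line rather than running it.

```shell
# Hypothetical helper: print the chrt command for a policy, priority, and PID,
# or fail if the priority is outside the range valid for that policy.
chrt_command() {
    policy=$1
    priority=$2
    pid=$3
    case $policy in
        fifo)  flag=-f; min=1; max=99 ;;
        rr)    flag=-r; min=1; max=99 ;;
        other) flag=-o; min=0; max=0 ;;
        *) echo "unknown policy: $policy" >&2; return 1 ;;
    esac
    if [ "$priority" -lt "$min" ] || [ "$priority" -gt "$max" ]; then
        echo "priority $priority is outside $min-$max for $policy" >&2
        return 1
    fi
    echo "chrt $flag -p $priority $pid"
}

# Prints the same command used in the SCHED_FIFO example above.
chrt_command fifo 50 1000
```

Printing the command first makes it possible to review it before running it as root.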

Additional resources

- chrt(1) man page on your system

- Policy options for the chrt command

- Changing the priority of services during the boot process

32.6. Policy options for the chrt command

Using the chrt command, you can view and set the scheduling policy of a process.

The following table describes the appropriate policy options, which can be used to set the scheduling policy of a process.

| Short option | Long option | Description |
|---|---|---|
| -f | --fifo | Set schedule to SCHED_FIFO |
| -o | --other | Set schedule to SCHED_OTHER |
| -r | --rr | Set schedule to SCHED_RR |

32.7. Changing the priority of services during the boot process

Using the systemd service, it is possible to set up real-time priorities for services launched during the boot process. The unit configuration directives are used to change the priority of a service during the boot process.

The boot process priority change is done by using the following directives in the service section:

- CPUSchedulingPolicy=

Sets the CPU scheduling policy for executed processes. It is used to set other, fifo, and rr policies.

- CPUSchedulingPriority=

Sets the CPU scheduling priority for executed processes. The available priority range depends on the selected CPU scheduling policy. For real-time scheduling policies, an integer between 1 (lowest priority) and 99 (highest priority) can be used.

The following procedure describes how to change the priority of a service, during the boot process, using the mcelog service.

Prerequisites

Install the TuneD package:

# yum install tuned

Enable and start the TuneD service:

# systemctl enable --now tuned

Procedure

View the scheduling priorities of running threads:

# tuna --show_threads
                     thread       ctxt_switches
   pid SCHED_ rtpri affinity voluntary nonvoluntary  cmd
     1  OTHER     0     0xff      3181          292  systemd
     2  OTHER     0     0xff       254            0  kthreadd
     3  OTHER     0     0xff         2            0  rcu_gp
     4  OTHER     0     0xff         2            0  rcu_par_gp
     6  OTHER     0        0         9            0  kworker/0:0H-kblockd
     7  OTHER     0     0xff      1301            1  kworker/u16:0-events_unbound
     8  OTHER     0     0xff         2            0  mm_percpu_wq
     9  OTHER     0        0       266            0  ksoftirqd/0
[...]

Create a supplementary mcelog service configuration directory file and insert the policy name and priority in this file:

# cat << EOF > /etc/systemd/system/mcelog.service.d/priority.conf
[Service]
CPUSchedulingPolicy=fifo
CPUSchedulingPriority=20
EOF

Reload the systemd scripts configuration:

# systemctl daemon-reload

Restart the mcelog service:

# systemctl restart mcelog

Verification

Display the mcelog priority set by systemd:

# tuna -t mcelog -P
                     thread       ctxt_switches
   pid SCHED_ rtpri affinity voluntary nonvoluntary  cmd
   826   FIFO    20  0,1,2,3        13            0  mcelog
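The procedure redirects output into the mcelog.service.d directory, which only works if that directory already exists. The following sketch creates the directory first and then writes the same drop-in file. The function name write_priority_dropin and its optional fourth argument, a root prefix for trying the function outside /etc, are hypothetical.

```shell
# Hypothetical helper: write a systemd drop-in that sets the CPU scheduling
# policy and priority for a service.
# $1 = service name without the .service suffix
# $2 = policy (other, fifo, or rr)
# $3 = priority
# $4 = optional root prefix, empty by default (assumption, for dry runs)
write_priority_dropin() {
    unit=$1
    policy=$2
    priority=$3
    root=${4:-}
    dir="$root/etc/systemd/system/$unit.service.d"
    # Create the drop-in directory if it does not exist yet.
    mkdir -p "$dir" || return 1
    cat > "$dir/priority.conf" <<EOF
[Service]
CPUSchedulingPolicy=$policy
CPUSchedulingPriority=$priority
EOF
}

# Dry run into a temporary directory instead of the real /etc:
tmp_root=$(mktemp -d)
write_priority_dropin mcelog fifo 20 "$tmp_root"
cat "$tmp_root/etc/systemd/system/mcelog.service.d/priority.conf"
```

When run as root without the fourth argument, the file lands in the same path as in the procedure, after which systemctl daemon-reload and systemctl restart mcelog apply it.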

Additional resources

- systemd(1) and tuna(8) man pages on your system

- Description of the priority range

32.8. Priority map

Priorities are defined in groups, with some groups dedicated to certain kernel functions. For real-time scheduling policies, an integer between 1 (lowest priority) and 99 (highest priority) can be used.

The following table describes the priority range, which can be used while setting the scheduling policy of a process.

| Priority | Threads | Description |
|---|---|---|
| 1 | Low priority kernel threads | This priority is usually reserved for the tasks that need to be just above SCHED_OTHER. |
| 2 - 49 | Available for use | The range used for typical application priorities. |
| 50 | Default hard-IRQ value | |
| 51 - 98 | High priority threads | Use this range for threads that execute periodically and must have quick response times. Do not use this range for CPU-bound threads as you will starve interrupts. |
| 99 | Watchdogs and migration | System threads that must run at the highest priority. |
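As a quick way to check which group a planned priority falls into, the table can be expressed as a lookup. This is only an illustrative sketch; the function name priority_group is hypothetical, and the group names are copied from the table.

```shell
# Hypothetical helper: map a realtime priority (1-99) to its group
# in the priority map.
priority_group() {
    p=$1
    if [ "$p" -eq 1 ]; then
        echo "Low priority kernel threads"
    elif [ "$p" -ge 2 ] && [ "$p" -le 49 ]; then
        echo "Available for use"
    elif [ "$p" -eq 50 ]; then
        echo "Default hard-IRQ value"
    elif [ "$p" -ge 51 ] && [ "$p" -le 98 ]; then
        echo "High priority threads"
    elif [ "$p" -eq 99 ]; then
        echo "Watchdogs and migration"
    else
        echo "not a realtime priority: $p" >&2
        return 1
    fi
}

priority_group 20
priority_group 99
```

A deployment script could refuse any priority whose group is not "Available for use" unless the administrator explicitly overrides it.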

32.9. TuneD cpu-partitioning profile

To tune Red Hat Enterprise Linux 8 for latency-sensitive workloads, Red Hat recommends using the cpu-partitioning TuneD profile.

Prior to Red Hat Enterprise Linux 8, the low-latency Red Hat documentation described the numerous low-level steps needed to achieve low-latency tuning. In Red Hat Enterprise Linux 8, you can perform low-latency tuning more efficiently by using the cpu-partitioning TuneD profile. This profile is easily customizable according to the requirements for individual low-latency applications.

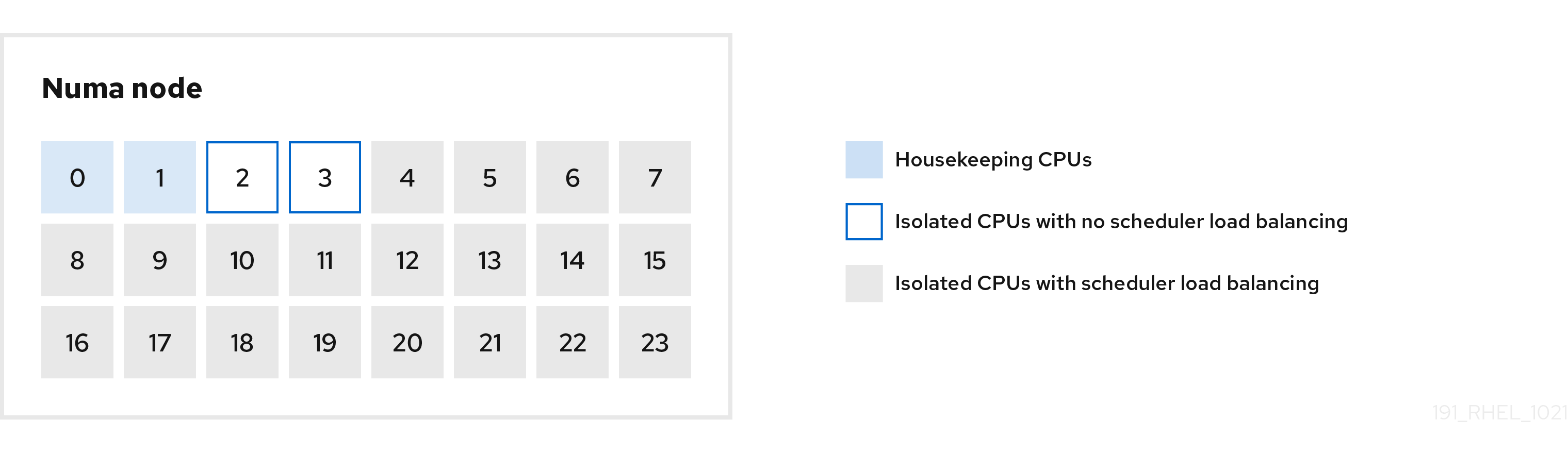

The following figure is an example to demonstrate how to use the cpu-partitioning profile. This example uses the CPU and node layout.

Figure 32.1. Figure cpu-partitioning

You can configure the cpu-partitioning profile in the /etc/tuned/cpu-partitioning-variables.conf file using the following configuration options:

- Isolated CPUs with load balancing

In the cpu-partitioning figure, the blocks numbered from 4 to 23 are the default isolated CPUs. The kernel scheduler’s process load balancing is enabled on these CPUs. It is designed for low-latency processes with multiple threads that need the kernel scheduler load balancing.

You can configure the cpu-partitioning profile in the /etc/tuned/cpu-partitioning-variables.conf file using the isolated_cores=cpu-list option, which lists CPUs to isolate that will use the kernel scheduler load balancing.

The list of isolated CPUs is comma-separated or you can specify a range using a dash, such as 3-5. This option is mandatory. Any CPU missing from this list is automatically considered a housekeeping CPU.

- Isolated CPUs without load balancing

In the cpu-partitioning figure, the blocks numbered 2 and 3 are the isolated CPUs that do not provide any additional kernel scheduler process load balancing.

You can configure the cpu-partitioning profile in the /etc/tuned/cpu-partitioning-variables.conf file using the no_balance_cores=cpu-list option, which lists CPUs to isolate that will not use the kernel scheduler load balancing.

Specifying the no_balance_cores option is optional; however, any CPUs in this list must be a subset of the CPUs listed in the isolated_cores list.

Application threads using these CPUs need to be pinned individually to each CPU.

- Housekeeping CPUs

Any CPU not isolated in the cpu-partitioning-variables.conf file is automatically considered a housekeeping CPU. On the housekeeping CPUs, all services, daemons, user processes, movable kernel threads, interrupt handlers, and kernel timers are permitted to execute.
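The rules above (comma-separated lists, dash ranges, the subset requirement, and housekeeping CPUs as the remainder) can be checked with a few lines of shell before editing the configuration file. This is a sketch only: the function names are hypothetical, the total of 24 CPUs is an assumption based on the CPU numbers in the figure, and the parsing covers just the plain list and range forms described here.

```shell
# Hypothetical helper: expand a CPU list such as "2,3" or "4-23"
# into one CPU number per line.
expand_cpu_list() {
    printf '%s\n' "$1" | tr ',' '\n' | while IFS=- read -r first last; do
        [ -n "$first" ] || continue
        # A single CPU has no upper bound, so reuse the lower bound.
        seq "$first" "${last:-$first}"
    done
}

# Succeed only if every CPU in list $1 also appears in list $2.
cpu_list_is_subset() {
    for cpu in $(expand_cpu_list "$1"); do
        expand_cpu_list "$2" | grep -qx "$cpu" || return 1
    done
    return 0
}

# Print the CPUs in 0..(total - 1) that are not in the isolated list.
# $1 = total number of CPUs (assumed value), $2 = isolated_cores list
housekeeping_cpus() {
    total=$1
    isolated=$2
    n=0
    while [ "$n" -lt "$total" ]; do
        expand_cpu_list "$isolated" | grep -qx "$n" || printf '%s\n' "$n"
        n=$((n + 1))
    done
}

# isolated_cores=2-23 and no_balance_cores=2,3 on an assumed 24-CPU system:
cpu_list_is_subset 2,3 2-23 && echo "no_balance_cores is valid"
housekeeping_cpus 24 2-23
```

With these values, CPUs 0 and 1 remain as housekeeping CPUs, which matches the layout described for the figure.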

Additional resources

- tuned-profiles-cpu-partitioning(7) man page on your system

32.10. Using the TuneD cpu-partitioning profile for low-latency tuning

This procedure describes how to tune a system for low latency by using the TuneD cpu-partitioning profile. It uses the example of a low-latency application that can use cpu-partitioning and the CPU layout shown in the cpu-partitioning figure.

The application in this case uses:

- One dedicated reader thread that reads data from the network will be pinned to CPU 2.

- A large number of threads that process this network data will be pinned to CPUs 4-23.

- A dedicated writer thread that writes the processed data to the network will be pinned to CPU 3.

Prerequisites

- You have installed the cpu-partitioning TuneD profile by using the yum install tuned-profiles-cpu-partitioning command as root.

Procedure

Edit the /etc/tuned/cpu-partitioning-variables.conf file and add the following information:

# All isolated CPUs:
isolated_cores=2-23
# Isolated CPUs without the kernel’s scheduler load balancing:
no_balance_cores=2,3

Set the cpu-partitioning TuneD profile:

# tuned-adm profile cpu-partitioning

Reboot the system.

After rebooting, the system is tuned for low-latency, according to the isolation in the cpu-partitioning figure. The application can use taskset to pin the reader and writer threads to CPUs 2 and 3, and the remaining application threads on CPUs 4-23.

Additional resources

- tuned-profiles-cpu-partitioning(7) man page on your system

32.11. Customizing the cpu-partitioning TuneD profile

You can extend the TuneD profile to make additional tuning changes.

For example, the cpu-partitioning profile sets the CPUs to use cstate=1. To use the cpu-partitioning profile but additionally change the CPU C-state from cstate1 to cstate0, the following procedure describes a new TuneD profile named my_profile, which inherits the cpu-partitioning profile and then sets C-state 0.

Procedure

Create the /etc/tuned/my_profile directory:

# mkdir /etc/tuned/my_profile

Create a tuned.conf file in this directory, and add the following content:

# vi /etc/tuned/my_profile/tuned.conf
[main]
summary=Customized tuning on top of cpu-partitioning
include=cpu-partitioning
[cpu]
force_latency=cstate.id:0|1

Use the new profile:

# tuned-adm profile my_profile

In this example, a reboot is not required. However, if the changes in the my_profile profile require a reboot to take effect, then reboot your machine.
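The directory and file creation steps can also be scripted instead of edited by hand. The following is a sketch under stated assumptions: the function name create_child_profile is hypothetical, and its optional fourth argument, a base directory other than /etc/tuned, exists only so that the function can be tried without root.

```shell
# Hypothetical helper: create a TuneD profile that inherits from a parent.
# $1 = new profile name
# $2 = parent profile name
# $3 = extra configuration text appended after the [main] section
# $4 = base directory, /etc/tuned by default (assumption, for dry runs)
create_child_profile() {
    name=$1
    parent=$2
    extra=$3
    base=${4:-/etc/tuned}
    mkdir -p "$base/$name" || return 1
    cat > "$base/$name/tuned.conf" <<EOF
[main]
summary=Customized tuning on top of $parent
include=$parent
EOF
    # Append the profile-specific sections, one line per printf argument.
    printf '%s\n' "$extra" >> "$base/$name/tuned.conf"
}

# Dry run that reproduces the my_profile content in a temporary directory:
tmp_base=$(mktemp -d)
create_child_profile my_profile cpu-partitioning \
    "[cpu]
force_latency=cstate.id:0|1" "$tmp_base"
cat "$tmp_base/my_profile/tuned.conf"
```

Run as root without the fourth argument, this writes /etc/tuned/my_profile/tuned.conf, after which tuned-adm profile my_profile activates it as in the procedure.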

Additional resources

- tuned-profiles-cpu-partitioning(7) man page on your system