Using image mode for RHEL to build, deploy, and manage operating systems

Using RHEL bootc images on Red Hat Enterprise Linux 9

Abstract

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Log in to the Jira website.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialogue.

Chapter 1. Introducing image mode for RHEL

Use image mode for RHEL to build, test, and deploy operating systems by using the same tools and techniques as application containers. Image mode for RHEL is available through the registry.redhat.io/rhel9/rhel-bootc bootc image. The RHEL bootc images differ from the existing application Universal Base Images (UBI) in that they contain additional components that are necessary to boot and were traditionally excluded, such as the kernel, initrd, boot loader, and firmware.

The rhel-bootc container image and user-created containers based on it are subject to the Red Hat Enterprise Linux end user license agreement (EULA). You are not allowed to publicly redistribute these images.

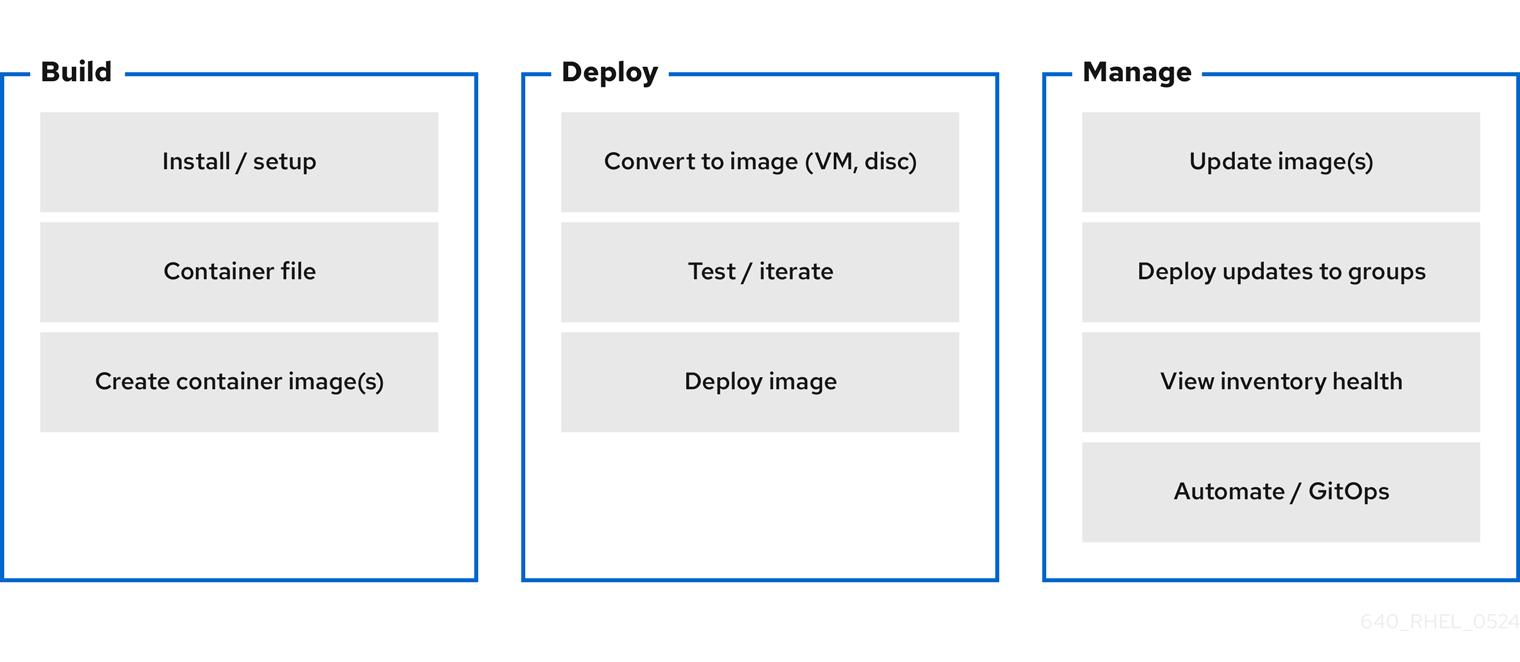

Figure 1.1. Building, deploying, and managing an operating system by using image mode for RHEL

Red Hat provides bootc images for the following computer architectures:

- AMD and Intel 64-bit architectures (x86-64-v2)

- The 64-bit ARM architecture (ARMv8.0-A)

- IBM Power Systems 64-bit Little Endian architecture (ppc64le)

- IBM Z 64-bit architecture (s390x)

The benefits of image mode for RHEL occur across the lifecycle of a system. The following list contains some of the most important advantages:

- Container images are easier to understand and use than other image formats and are fast to build

- Containerfiles, also known as Dockerfiles, provide a straightforward approach to defining the content and build instructions for an image. Container images are often significantly faster to build and iterate on compared to other image creation tools.

- Consolidate process, infrastructure, and release artifacts

- As you distribute applications as containers, you can use the same infrastructure and processes to manage the underlying operating system.

- Immutable updates

- Just as containerized applications are updated in an immutable way, with image mode for RHEL, the operating system is also updated immutably. You can boot into updates and roll back when needed, in the same way that you do with rpm-ostree systems.

The use of rpm-ostree to make changes, or install content, is not supported.

- Portability across hybrid cloud environments

- You can use bootc images across physical, virtualized, cloud, and edge environments.

Although containers provide the foundation to build, transport, and run images, it is important to understand that after you deploy these bootc images, either by using an installation mechanism or by converting them to a disk image, the system does not run as a container.

Bootc supports the following container image formats and disk image formats:

| Image type | Target environment |
|---|---|
| OCI container format | Physical, virtualized, cloud, and edge environments. |
| ami | Amazon Machine Image. |
| qcow2 | QEMU (targeted for environments such as Red Hat OpenStack, Red Hat OpenStack services for OpenShift, and OpenShift Virtualization), Libvirt (RHEL). |
| vmdk | VMDK for vSphere. |
| anaconda-iso | An unattended Anaconda installer that installs to the first disk found. |
| raw | Unformatted raw disk. Also supported in QEMU and Libvirt. |
| vhd | VHD for Virtual PC, among others. |
| gce | Google Compute Engine (GCE) environment. |

Containers help streamline the lifecycle of a RHEL system by offering the following possibilities:

- Building container images

- You can configure your operating system at build time by modifying the Containerfile. Image mode for RHEL is available by using the registry.redhat.io/rhel9/rhel-bootc container image. You can use Podman, OpenShift Container Platform, or other standard container build tools to manage your containers and container images. You can automate the build process by using CI/CD pipelines.

- Versioning, mirroring, and testing container images

- You can version, mirror, introspect, and sign your derived bootc image by using any container tools such as Podman or OpenShift Container Platform.

- Deploying container images to the target environment

- You have several options on how to deploy your image:

  - Anaconda: the installation program used by RHEL. You can deploy all image types to the target environment by using Anaconda and Kickstart to automate the installation process.

  - bootc-image-builder: a containerized tool that converts the container image to different types of disk images, and optionally uploads them to an image registry or object storage.

  - bootc: a tool responsible for fetching container images from a container registry and installing them to a system, updating the operating system, or switching from an existing ostree-based system. The RHEL bootc image contains the bootc utility by default and works with all image types. However, remember that rpm-ostree is not supported and must not be used to make changes.

- Updating your operating system

- The system supports in-place transactional updates with rollback after deployment. Automatic updates are on by default. A systemd service unit and a systemd timer unit check the container registry for updates and apply them to the system. Because the updates are transactional, a reboot is required. For environments that require more sophisticated or scheduled rollouts, disable auto updates and use the bootc utility to update your operating system.

RHEL has two deployment modes. Both provide the same stability, reliability, and performance during deployment. They differ as follows:

- Package mode: You can build package-based images and OSTree images by using RHEL image builder, and you can manage the package mode images by using composer-cli or the web console. The operating system uses RPM packages and is updated by using the dnf package manager. The root filesystem is mutable. However, the operating system cannot be managed as a containerized application. See the Composing a customized RHEL system image product documentation.

- Image mode: A container-native approach to build, deploy, and manage RHEL. The same RPM packages are delivered as a base image and updates are deployed as a container image. The root filesystem is immutable by default, except for /etc and /var, with most content coming from the container image.

You can choose to use either the Image mode or the Package mode deployment to build, test, and share your operating system. Image mode additionally enables you to manage your operating system in the same way as any other containerized application.

1.1. Prerequisites

- You have a subscribed RHEL 9 system. For more information, see Getting Started with RHEL System Registration documentation.

- You have a container registry. You can create your registry locally or create a free account on the Quay.io service. To create the Quay.io account, see Red Hat Quay.io page.

- You have a Red Hat account with either production or developer subscriptions. No cost developer subscriptions are available on the Red Hat Enterprise Linux Overview page.

- You have authenticated to registry.redhat.io. For more information, see Red Hat Container Registry Authentication article.

Chapter 2. Building and testing RHEL bootc images

The following procedures use Podman to build and test your container image. You can also use other tools, for example, OpenShift Container Platform. For more examples of configuring RHEL systems by using containers, see the rhel-bootc-examples repository.

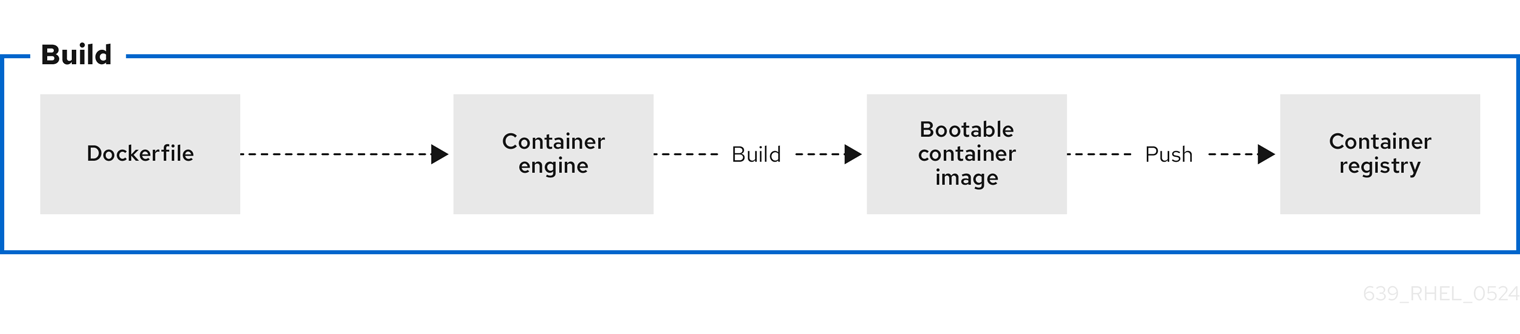

Figure 2.1. Building an image by using instructions from a Containerfile, testing the container, pushing an image to a registry, and sharing it with others

A general Containerfile structure is the following:
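A minimal sketch of such a structure, written as a shell snippet that creates the files. The installed package (httpd), the example.conf file, and its destination path are illustrative assumptions and are not part of the original procedure:

```shell
# Hypothetical configuration file that the image will include.
printf '# example configuration\n' > example.conf

# Write a Containerfile that follows the general structure:
# base image, packages, added files, then configuration commands.
cat > Containerfile <<'EOF'
# Base image
FROM registry.redhat.io/rhel9/rhel-bootc:latest

# Install software and dependencies, then clean the package cache
RUN dnf -y install httpd && dnf clean all

# Add application or configuration files (example.conf is an assumption)
COPY example.conf /etc/httpd/conf.d/example.conf

# Run configuration commands
RUN systemctl enable httpd.service
EOF
```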

The available commands that are usable inside a Containerfile and a Dockerfile are equivalent.

However, the following commands in a Containerfile are ignored when the rhel-9-bootc image is installed to a system:

- ENTRYPOINT and CMD (OCI: Entrypoint/Cmd): you can set CMD /sbin/init instead.

- ENV (OCI: Env): change the systemd configuration to configure the global system environment.

- EXPOSE (OCI: exposedPorts): it is independent of how the system firewall and network function at runtime.

- USER (OCI: User): configure individual services inside the RHEL bootc to run as unprivileged users instead.

The rhel-9-bootc container image reuses the OCI image format.

- The rhel-9-bootc container image ignores the container config section (Config) when it is installed to a system.

- The rhel-9-bootc container image does not ignore the container config section (Config) when you run this image by using container runtimes such as podman or docker.

Building custom rhel-bootc base images is not supported in this release.

2.1. Building a container image

Use the podman build command to build an image using instructions from a Containerfile.

Prerequisites

- The container-tools meta-package is installed.

Procedure

Create a Containerfile:

    $ cat Containerfile
    FROM registry.redhat.io/rhel9/rhel-bootc:latest
    RUN dnf -y install cloud-init && \
        ln -s ../cloud-init.target /usr/lib/systemd/system/default.target.wants && \
        dnf clean all

This Containerfile example adds the cloud-init tool, so it automatically fetches SSH keys and can run scripts from the infrastructure and also gather configuration and secrets from the instance metadata. For example, you can use this container image for pre-generated AWS or KVM guest systems.

Build the <image> image by using the Containerfile in the current directory:

    $ podman build -t quay.io/<namespace>/<image>:<tag> .

Verification

List all images:

    $ podman images
    REPOSITORY          TAG      IMAGE ID       CREATED              SIZE
    localhost/<image>   latest   b28cd00741b3   About a minute ago   2.1 GB

2.2. Building derived bootable images by using multi-stage builds

The deployment image should include only the application and its required runtime, without adding any build tools or unnecessary libraries. To achieve this, use a two-stage Containerfile: one stage for building the artifacts and another for hosting the application.

With multi-stage builds, you use multiple FROM instructions in your Containerfile. Each FROM instruction can use a different base, and each of them begins a new stage of the build. You can selectively copy artifacts from one stage to another, and exclude everything you do not need in the final image.

Multi-stage builds offer several advantages:

- Smaller image size

- By separating the build environment from the runtime environment, only the necessary files and dependencies are included in the final image, significantly reducing its size.

- Improved security

- Since build tools and unnecessary libraries are excluded from the final image, the attack surface is reduced, leading to a more secure container.

- Optimized performance

- A smaller image size means faster download, deployment, and startup times, improving the overall efficiency of the containerized application.

- Simplified maintenance

- With the build and runtime environments separated, the final image is cleaner and easier to maintain, containing only what is needed to run the application.

- Cleaner builds

- Multi-stage builds help avoid clutter from intermediate files, which could accumulate during the build process, ensuring that only essential artifacts make it into the final image.

- Resource efficiency

- The ability to build in one stage and discard unnecessary parts minimizes the use of storage and bandwidth during deployment.

- Better layer caching

- With clearly defined stages, Podman can efficiently cache the results of previous stages, accelerating future builds.

The following Containerfile consists of two stages. The first stage is typically named builder and it compiles a Go binary. The second stage copies the binary from the first stage. The default working directory for the go-toolset builder is /opt/app-root/src.
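A minimal sketch of such a two-stage Containerfile, written as a shell snippet that creates the file. The go-toolset image reference and the /usr/bin destination path are assumptions; substitute any builder image that provides the Go toolchain:

```shell
# Write a two-stage Containerfile (image references are assumptions).
cat > Containerfile <<'EOF'
# Stage 1: compile the binary in the builder image.
# The build runs in the builder's default working directory, /opt/app-root/src.
FROM registry.access.redhat.com/ubi9/go-toolset:latest AS builder
RUN echo 'package main; import "fmt"; func main() { fmt.Println("hello world") }' > helloworld.go
RUN go build helloworld.go

# Stage 2: copy only the compiled binary into the bootc image.
FROM registry.redhat.io/rhel9/rhel-bootc:latest
COPY --from=builder /opt/app-root/src/helloworld /usr/bin/helloworld
EOF
```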

As a result, the final container image includes the helloworld binary but no data from the previous stage.

You can also use multi-stage builds to perform the following scenarios:

- Stopping at a specific build stage

- When you build your image, you can stop at a specified build stage. For example:

      $ podman build --target build -t hello .

  You can use this approach to debug a specific build stage.

- Using an external image as a stage

- You can use the COPY --from instruction to copy from a separate image by using the local image name, a tag available locally or on a container registry, or a tag ID. For example:

      COPY --from=<image> <source_path> <destination_path>

- Using a previous stage as a new stage

- You can continue where a previous stage ended by naming that stage in a later FROM instruction.
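As an illustration of continuing from a previous stage, the following sketch writes a Containerfile in which stage2 starts from the end state of stage1. The stage names, the UBI image reference, and the artifact paths are hypothetical:

```shell
# Write a Containerfile whose second stage builds on the first one.
cat > Containerfile <<'EOF'
# Stage names and the /artifact.txt path are hypothetical.
FROM registry.access.redhat.com/ubi9/ubi:latest AS stage1
RUN echo "built in stage1" > /artifact.txt

# Continue from where stage1 ended.
FROM stage1 AS stage2
RUN echo "extended in stage2" >> /artifact.txt

# Final image: copy only the result of stage2.
FROM registry.redhat.io/rhel9/rhel-bootc:latest
COPY --from=stage2 /artifact.txt /usr/share/artifact.txt
EOF
```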

2.3. Running a container image

Use the podman run command to run and test your container.

Prerequisites

- The container-tools meta-package is installed.

Procedure

Run the container named mybootc based on the quay.io/<namespace>/<image>:<tag> container image:

    $ podman run -it --rm --name mybootc quay.io/<namespace>/<image>:<tag> /bin/bash

- The -i option creates an interactive session. Without the -t option, the shell stays open, but you cannot type anything to the shell.

- The -t option opens a terminal session. Without the -i option, the shell opens and then exits.

- The --rm option removes the mybootc container after the container exits. The container image itself is not removed.

Verification

List all running containers:

    $ podman ps
    CONTAINER ID   IMAGE                               COMMAND      CREATED         STATUS             PORTS   NAMES
    7ccd6001166e   quay.io/<namespace>/<image>:<tag>   /sbin/init   6 seconds ago   Up 5 seconds ago           mybootc

2.4. Pushing a container image to the registry

Use the podman push command to push an image to your own, or a third party, registry and share it with others. The following procedure uses the Red Hat Quay registry.

Prerequisites

- The container-tools meta-package is installed.

- An image is built and available on the local system.

- You have created the Red Hat Quay registry. For more information, see Proof of Concept - Deploying Red Hat Quay.

Procedure

Push the quay.io/<namespace>/<image>:<tag> container image from your local storage to the registry:

    $ podman push quay.io/<namespace>/<image>:<tag>

Chapter 3. Building and managing logically bound images

Logically bound images give you support for container images that are lifecycle bound to the base bootc image. This helps combine different operational processes for applications and operating systems. The container application images are referenced from the base image as .image files or an equivalent. Consequently, you can manage multiple container images for system installations.

You can use containers for lifecycle-bound workloads, such as security agents and monitoring tools. You can also upgrade such workloads by using the bootc upgrade command.

3.1. Logically bound images

Logically bound images enable an association of the container application images to a base bootc system image. The term logically bound is used to contrast with physically bound images. The logically bound images offer the following benefits:

- You can update the bootc system without re-downloading the application container images.

- You can update the application container images without modifying the bootc system image, which is especially useful for development work.

The following are examples of lifecycle bound workloads, whose activities are usually not updated outside of the host:

- Logging, for example, journald→remote log forwarder container

- Monitoring, for example, Prometheus node_exporter

- Configuration management agents

- Security agents

Another important property of the logically bound images is that they must be present and available on the host, possibly from a very early stage in the boot process.

With the default usage of tools like Podman or Docker, images might be pulled dynamically after the boot starts, which requires a functioning network. For example, if the remote registry is temporarily unavailable, the host system might run longer without log forwarding or monitoring, which is not desirable. In contrast, logically bound images enable you to reference container images in a way similar to how you use ExecStart= in a systemd unit.

When using logically bound images, you must manage multiple container images for the system to install the logically bound images. This is an advantage and also a disadvantage. For example, for a disconnected or offline installation, you must mirror all the containers, not just one. The application images are only referenced from the base image as .image files or an equivalent.

- Logically bound images installation

- When you run bootc install, logically bound images must be present in the default /var/lib/containers container store. The images are copied into the target system and are present directly at boot, alongside the bootc base image.

- Logically bound images lifecycle

- Logically bound images are referenced by the bootable container and have guaranteed availability when the bootc-based server starts. The image is always upgraded by using bootc upgrade and is available as read-only to other processes, such as Podman.

- Logically bound images management on upgrades, rollbacks, and garbage collection

  - During upgrades, the logically bound images are managed exclusively by bootc.

  - During rollbacks, the logically bound images corresponding to rollback deployments are retained.

  - bootc performs garbage collection of unused bound images.

3.2. Using logically bound images

Each logically bound image is defined in a Podman Quadlet .image or .container file. To use logically bound images, follow the steps:

Prerequisites

- The container-tools meta-package is installed.

Procedure

- Select the image that you want to logically bind.

- Create a Containerfile. In the Containerfile, select the image to be logically bound by creating a symlink in the /usr/lib/bootc/bound-images.d directory that points to either an .image or a .container file.

- In the .container definition, use:

      GlobalArgs=--storage-opt=additionalimagestore=/usr/lib/bootc/storage

- Run the bootc upgrade command:

      $ bootc upgrade

  The bootc upgrade command performs the following overall steps:

  - Fetches the new base image from the image repository. See Configuring container pull secrets (https://docs.redhat.com/en/documentation/red_hat_enterprise_linux/9/html/using_image_mode_for_rhel_to_build_deploy_and_manage_operating_systems/managing-users-groups-ssh-key-and-secrets-in-image-mode-for-rhel#configuring-container-pull-secrets_managing-users-groups-ssh-key-and-secrets-in-image-mode-for-rhel).

  - Reads the new base image root file system to discover logically bound images.

  - Automatically pulls any discovered logically bound images defined in the new bootc image into the bootc-owned /usr/lib/bootc/storage image storage.

- Make the bound images available to container runtimes such as Podman. To do so, you must explicitly configure bound images to point to the bootc storage as an "additional image store". For example:

      podman --storage-opt=additionalimagestore=/usr/lib/bootc/storage run <image>

  Important: Do not attempt to globally enable the /usr/lib/bootc/storage image storage in /etc/containers/storage.conf. Only use the bootc storage for logically bound images.

The bootc image store is owned by bootc. The logically bound images will be garbage collected when they are no longer referenced by a file in the /usr/lib/bootc/bound-images.d directory.
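The Containerfile step of the procedure above can be sketched as follows. This is an illustration only: the node-exporter.image unit name, the image it references, and the /usr/share/containers/systemd location for the Quadlet file are assumptions chosen for the example.

```shell
# Hypothetical Quadlet .image unit that names the application image to bind.
cat > node-exporter.image <<'EOF'
[Image]
Image=quay.io/prometheus/node-exporter:latest
EOF

# Containerfile that ships the unit and marks it as logically bound.
cat > Containerfile <<'EOF'
FROM registry.redhat.io/rhel9/rhel-bootc:latest

# Ship the Quadlet unit in the image.
COPY node-exporter.image /usr/share/containers/systemd/node-exporter.image

# Bind the image by linking the unit into the bound-images.d directory.
RUN mkdir -p /usr/lib/bootc/bound-images.d && \
    ln -s /usr/share/containers/systemd/node-exporter.image \
          /usr/lib/bootc/bound-images.d/node-exporter.image
EOF
```

After you build and deploy an image from this Containerfile, bootc upgrade discovers the linked unit and pulls the referenced image into the bootc-owned storage.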

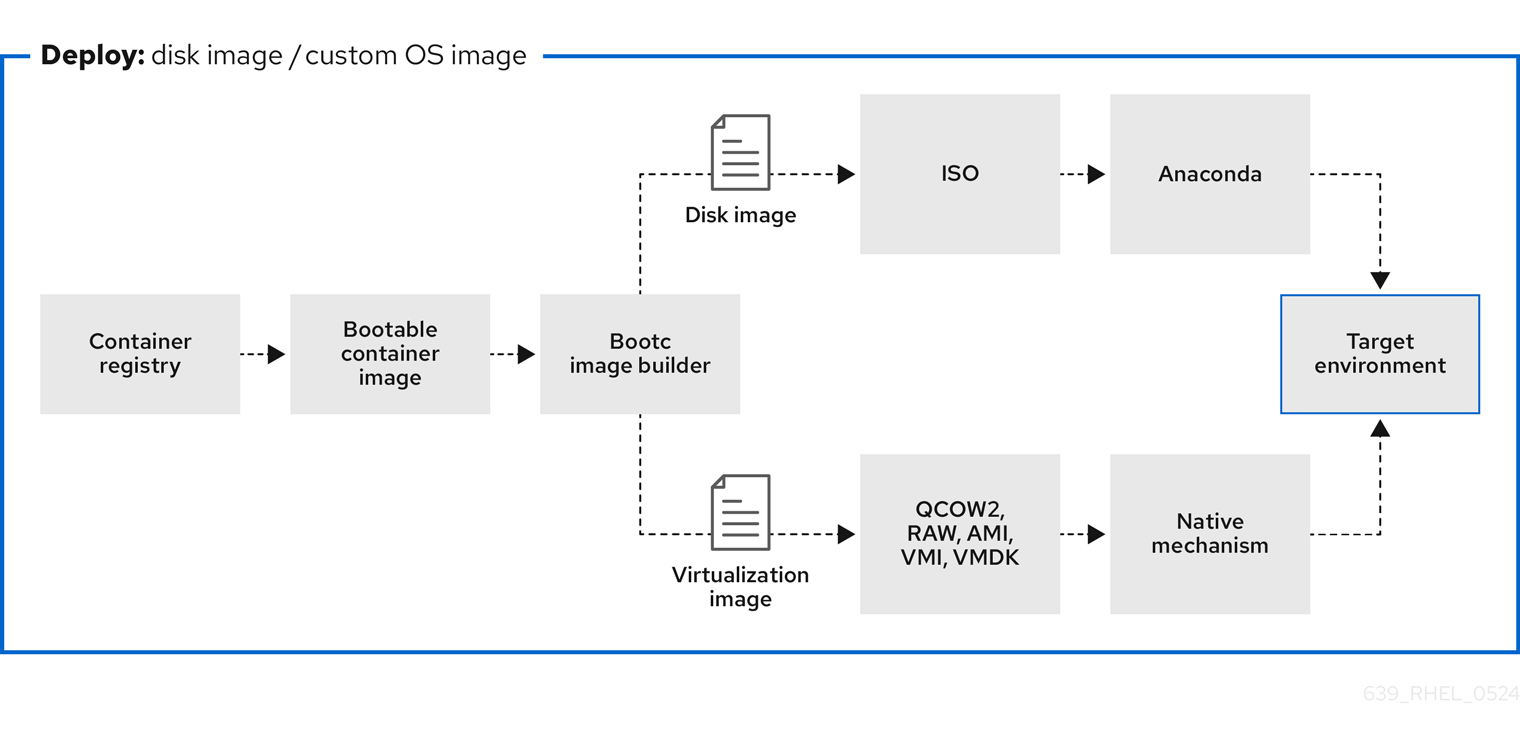

Chapter 4. Creating bootc compatible base disk images with bootc-image-builder

The bootc-image-builder, available as a Technology Preview, is a containerized tool to create disk images from bootc images. You can use the images that you build to deploy disk images in different environments, such as the edge, server, and clouds.

4.1. Introducing image mode for RHEL for bootc-image-builder

By using the bootc-image-builder tool, you can convert bootc images into disk images for a variety of different platforms and formats. Converting bootc images into disk images is equivalent to installing a bootc. After you deploy these disk images to the target environment, you can update them directly from the container registry.

Building base disk images which come from private registries by using bootc-image-builder is not supported in this release. The bootc-image-builder tool is intended to create images from the official Red Hat registry.redhat.io repository, to ensure compliance with the Red Hat Enterprise Linux End User License Agreement (EULA).

While you cannot directly use bootc-image-builder with private registries, you can still build your base images by using one of the following methods:

- Use a local RHEL system: install the Podman tool and build your image locally. Then, you can push the images to your private registry.

- Use a CI/CD pipeline: Create a CI/CD pipeline that uses a RHEL based system to build images and push them to your private registry.

The bootc-image-builder tool supports generating the following image types:

- Disk image formats, such as ISO, suitable for disconnected installations.

- Virtual disk image formats, such as:

  - QEMU copy-on-write (QCOW2)

  - Amazon Machine Image (AMI)

  - Unformatted raw disk (Raw)

  - Virtual Machine Image (VMI)

Deploying from a container image is beneficial when you run VMs or servers because you can achieve the same installation result. That consistency extends across multiple different image types and platforms when you build them from the same container image. Consequently, you can minimize the effort in maintaining operating system images across platforms. You can also update systems that you deploy from these disk images by using the bootc tool, instead of re-creating and uploading new disk images with bootc-image-builder.

Generic base container images do not include any default passwords or SSH keys. Also, the disk images that you create by using the bootc-image-builder tool do not contain the tools that are available in common disk images, such as cloud-init. These disk images are transformed container images only.

Although you can deploy a rhel-9-bootc image directly, you can also create your own customized images that are derived from this bootc image. The bootc-image-builder tool takes the rhel-9-bootc OCI container image as an input.

4.2. Installing bootc-image-builder

The bootc-image-builder is intended to be used as a container and it is not available as an RPM package in RHEL. To access it, follow the procedure.

Prerequisites

- The container-tools meta-package is installed. The meta-package contains all container tools, such as Podman, Buildah, and Skopeo.

- You are authenticated to registry.redhat.io. For details, see Red Hat Container Registry Authentication.

Procedure

Log in to authenticate to registry.redhat.io:

    $ sudo podman login registry.redhat.io

Install the bootc-image-builder tool:

    $ sudo podman pull registry.redhat.io/rhel9/bootc-image-builder

Verification

List all images pulled to your local system:

    $ sudo podman images
    REPOSITORY                                     TAG      IMAGE ID       CREATED        SIZE
    registry.redhat.io/rhel9/bootc-image-builder   latest   b361f3e845ea   24 hours ago   676 MB

4.3. Creating QCOW2 images by using bootc-image-builder

Build a RHEL bootc image into a QEMU (QCOW2) image for the architecture that you are running the commands on.

The RHEL base image does not include a default user. Optionally, you can inject a user configuration by using the --config option to run the bootc-image-builder container. Alternatively, you can configure the base image with cloud-init to inject users and SSH keys on first boot. See Users and groups configuration - Injecting users and SSH keys by using cloud-init.

Prerequisites

- You have Podman installed on your host machine.

- You have virt-install installed on your host machine.

- You have root access to run the bootc-image-builder tool, and run the containers in --privileged mode, to build the images.

Procedure

Optional: Create a config.toml to configure user access, for example:

    [[customizations.user]]
    name = "user"
    password = "pass"
    key = "ssh-rsa AAA ... user@email.com"
    groups = ["wheel"]

Run bootc-image-builder. Optionally, if you want to use user access configuration, pass the config.toml as an argument.

Note: If you do not have the container storage mount and --local image options, your image must be public.

- To create a public QCOW2 image, the image must be accessible from a registry, such as registry.redhat.io/rhel10/bootc-image-builder:latest.

- To create a private QCOW2 image, build it from a local container.

You can find the .qcow2 image in the output folder.
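The run step can be sketched as a small shell function. This is an illustration under stated assumptions: the builder image is the one pulled in "Installing bootc-image-builder", a config.toml exists in the current directory, and the function name build_qcow2 is hypothetical. The snippet only defines the function; nothing is built until you call it.

```shell
# Assumption: the builder image pulled in "Installing bootc-image-builder".
BIB_IMAGE="registry.redhat.io/rhel9/bootc-image-builder:latest"

# build_qcow2 [--local] IMAGE
# Converts the bootc image IMAGE into a QCOW2 disk under ./output.
build_qcow2() {
    mkdir -p output
    sudo podman run \
        --rm -it --privileged \
        --pull=newer \
        --security-opt label=type:unconfined_t \
        -v "$PWD/config.toml:/config.toml:ro" \
        -v "$PWD/output:/output" \
        -v /var/lib/containers/storage:/var/lib/containers/storage \
        "$BIB_IMAGE" \
        --type qcow2 \
        --config /config.toml \
        "$@"
}

# Public image pulled from a registry:
#   build_qcow2 quay.io/<namespace>/<image>:<tag>
# Private image taken from the local container storage:
#   build_qcow2 --local quay.io/<namespace>/<image>:<tag>
```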

4.4. Creating VMDK images by using bootc-image-builder

Create a Virtual Machine Disk (VMDK) from a bootc image and use it within VMware’s virtualization platforms, such as vSphere, or in Oracle VirtualBox.

Prerequisites

- You have Podman installed on your host machine.

- You have authenticated to the Red Hat Registry by using the podman login registry.redhat.io command.

- You have pulled the rhel10/bootc-image-builder container image.

Procedure

Create a Containerfile with the following content:

    FROM registry.redhat.io/rhel10/rhel-bootc:latest
    RUN dnf -y install cloud-init open-vm-tools && \
        ln -s ../cloud-init.target /usr/lib/systemd/system/default.target.wants && \
        rm -rf /var/{cache,log} /var/lib/{dnf,rhsm} && \
        systemctl enable vmtoolsd.service

Build the bootc image:

    # podman build . -t localhost/rhel-bootc-vmdk

Create a VMDK file from the previously created bootc image. The image must be accessible from a registry, such as registry.redhat.io/rhel10/bootc-image-builder:latest. The --local option uses the local container storage to source the originating image to produce the VMDK instead of a remote repository.

A VMDK disk file for the bootc image is stored in the output/vmdk directory.
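A sketch of the VMDK conversion step, under the assumption that the builder image is the one pulled in "Installing bootc-image-builder" and that the bootc image was built as root, so that it is present in /var/lib/containers/storage. The function name build_vmdk is hypothetical, and the snippet only defines the function.

```shell
# Assumption: the builder image pulled in "Installing bootc-image-builder".
BIB_IMAGE="registry.redhat.io/rhel9/bootc-image-builder:latest"

# build_vmdk IMAGE
# Converts a locally stored bootc image into a VMDK under ./output.
build_vmdk() {
    mkdir -p output
    sudo podman run \
        --rm -it --privileged \
        --pull=newer \
        --security-opt label=type:unconfined_t \
        -v /var/lib/containers/storage:/var/lib/containers/storage \
        -v "$PWD/output:/output" \
        "$BIB_IMAGE" \
        --type vmdk \
        --local \
        "$1"
}

# Usage, with the image built in the previous step:
#   build_vmdk localhost/rhel-bootc-vmdk
```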

4.5. Creating GCE images by using bootc-image-builder

Build a RHEL bootc image into a GCE image for the architecture that you are running the commands on. The RHEL base image does not include a default user. Optionally, you can inject a user configuration by using the --config option to run the bootc-image-builder container. Alternatively, you can configure the base image with cloud-init to inject users and SSH keys on first boot. See Users and groups configuration - Injecting users and SSH keys by using cloud-init.

Prerequisites

- You have Podman installed on your host machine.

- You have root access to run the bootc-image-builder tool, and run the containers in --privileged mode, to build the images.

Procedure

Optional: Create a config.toml to configure user access, for example:

    [[customizations.user]]
    name = "user"
    password = "pass"
    key = "ssh-rsa AAA ... user@email.com"
    groups = ["wheel"]

Run bootc-image-builder. Optionally, if you want to use user access configuration, pass the config.toml as an argument. The image must be accessible from a registry, such as registry.redhat.io/rhel10/bootc-image-builder:latest.

Note: If you do not have the container storage mount and --local image options, your image must be public.

You can find the gce image in the output folder.
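A sketch of the GCE conversion step as a shell function. The builder image reference, the presence of a config.toml in the current directory, and the function name build_gce are assumptions made for illustration; the snippet only defines the function.

```shell
# Assumption: the builder image pulled in "Installing bootc-image-builder".
BIB_IMAGE="registry.redhat.io/rhel9/bootc-image-builder:latest"

# build_gce IMAGE
# Converts the bootc image IMAGE into a GCE image under ./output.
build_gce() {
    mkdir -p output
    sudo podman run \
        --rm -it --privileged \
        --pull=newer \
        --security-opt label=type:unconfined_t \
        -v "$PWD/config.toml:/config.toml:ro" \
        -v "$PWD/output:/output" \
        -v /var/lib/containers/storage:/var/lib/containers/storage \
        "$BIB_IMAGE" \
        --type gce \
        --config /config.toml \
        "$1"
}

# Usage:
#   build_gce quay.io/<namespace>/<image>:<tag>
```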

4.6. Creating AMI images by using bootc-image-builder and uploading it to AWS

Create an Amazon Machine Image (AMI) from a bootc image and use it to launch an Amazon Web Services (AWS) Amazon Elastic Compute Cloud (EC2) instance.

Prerequisites

- You have Podman installed on your host machine.

- You have an existing AWS S3 bucket within your AWS account.

- You have root access to run the bootc-image-builder tool, and run the containers in --privileged mode, to build the images.

- You have the vmimport service role configured on your account to import an AMI into your AWS account.

Procedure

Create a disk image from the bootc image.

- Configure the user details in the Containerfile. Make sure that you assign the user sudo access.

- Build a customized operating system image with the configured user from the Containerfile. It creates a default user with passwordless sudo access.

Optional: Configure the machine image with cloud-init. See Users and groups configuration - Injecting users and SSH keys by using cloud-init. The following is an example:

    FROM registry.redhat.io/rhel10/rhel-bootc:latest
    RUN dnf -y install cloud-init && \
        ln -s ../cloud-init.target /usr/lib/systemd/system/default.target.wants && \
        rm -rf /var/{cache,log} /var/lib/{dnf,rhsm}

Note: You can also use cloud-init to add users and additional configuration by using instance metadata.

Build the bootc image. For example, to deploy the image to an x86_64 AWS machine, use the following commands:

    $ podman build -t quay.io/<namespace>/<image>:<tag> .
    $ podman push quay.io/<namespace>/<image>:<tag>

Use the bootc-image-builder tool to create a public AMI image from the bootc container image. The image must be accessible from a registry, such as registry.redhat.io/rhel10/bootc-image-builder:latest.

Note: The following flags must be specified all together. If you do not specify any flag, the AMI is exported to your output directory.

- --aws-ami-name - The name of the AMI image in AWS

- --aws-bucket - The target S3 bucket name for intermediate storage when you are creating the AMI

- --aws-region - The target region for AWS uploads

The bootc-image-builder tool builds an AMI image and uploads it to your AWS S3 bucket by using your AWS credentials to push and register an AMI image after building it.

-

Next steps

- You can deploy your image. See Deploying a container image to AWS with an AMI disk image.

- You can make updates to the image and push the changes to a registry. See Managing RHEL bootc images.

- If you have any issues configuring the requirements for your AWS image, see the following documentation:

- AWS IAM account manager

- Using high-level (s3) commands with the AWS CLI.

- S3 buckets.

- Regions and Zones.

- Launching a customized RHEL image on AWS.

For more details on users, groups, SSH keys, and secrets, see Managing users, groups, SSH keys, and secrets in image mode for RHEL.

Additional resources

4.7. Creating raw disk images by using bootc-image-builder

You can convert a bootc image to a raw image with an MBR or GPT partition table by using bootc-image-builder.

The RHEL base image does not include a default user, so optionally, you can inject a user configuration by using the --config option to run the bootc-image-builder container. Alternatively, you can configure the base image with cloud-init to inject users and SSH keys on first boot. See Users and groups configuration - Injecting users and SSH keys by using cloud-init.

Prerequisites

- You have Podman installed on your host machine.

- You have root access to run the bootc-image-builder tool, and run the containers in --privileged mode, to build the images.

- You have pulled your target container image in the container storage.

Procedure

Optional: Create a config.toml to configure user access, for example:

    [[customizations.user]]
    name = "user"
    password = "pass"
    key = "ssh-rsa AAA ... user@email.com"
    groups = ["wheel"]

Run bootc-image-builder. If you want to use user access configuration, pass the config.toml as an argument. The image must be accessible from a registry, such as registry.redhat.io/rhel10/bootc-image-builder:latest.

You can find the .raw image in the output folder.
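The run step above can be sketched as a small helper that prints the podman invocation so that you can review it before running it. This is a minimal sketch, not the reference command: the function name bib_command and the image quay.io/example/my-bootc:latest are hypothetical, and the mounts assume that config.toml and an output directory exist in the current directory. Older releases of the tool might also need the --local option mentioned in this document to read the image from container storage.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a bootc-image-builder invocation for review.
# Arguments: <image type> <bootc container image reference>
bib_command() {
  local type="$1" image="$2"
  local -a cmd=(
    sudo podman run --rm -it --privileged --pull=newer
    --security-opt label=type:unconfined_t
    -v "$(pwd)/config.toml:/config.toml"
    -v "$(pwd)/output:/output"
    -v /var/lib/containers/storage:/var/lib/containers/storage
    registry.redhat.io/rhel10/bootc-image-builder:latest
    --type "$type" --config /config.toml "$image"
  )
  # Print the words of the command on one line, separated by spaces.
  printf '%s ' "${cmd[@]}"
  printf '\n'
}

# Placeholder image reference; replace it with your own bootc image.
bib_command raw quay.io/example/my-bootc:latest
```

Copy the printed line into a root shell to run the build. Omit the config.toml mount and the --config option if you do not inject a user configuration.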

4.8. Creating ISO images by using bootc-image-builder

You can use bootc-image-builder to create an ISO image from which you can perform an offline deployment of a bootable container.

Prerequisites

- You have Podman installed on your host machine.

- Your host system is subscribed or you have injected repository configuration by using bind mounts to ensure the image build process can fetch RPMs.

- You have root access to run the bootc-image-builder tool, and run the containers in --privileged mode, to build the images.

Procedure

Optional: Create a config.toml that overrides the default embedded Kickstart, which performs an automatic installation.

Run bootc-image-builder to create a public ISO image. If you do not want to add any configuration, omit the -v $(pwd)/config.toml:/config.toml argument. The image must be accessible from a registry, such as registry.redhat.io/rhel10/bootc-image-builder:latest.

You can find the .iso image in the output folder.

Next steps

- You can use the ISO image for unattended installation methods, such as USB sticks or Install-on-boot. The installable boot ISO contains a configured Kickstart file. See Deploying a container image by using Anaconda and Kickstart.

Warning: Booting the ISO on a machine with an existing operating system or data can be destructive, because the Kickstart is configured to automatically reformat the first disk on the system.

- You can make updates to the image and push the changes to a registry. See Managing RHEL bootable images.

4.9. Using bootc-image-builder to build ISO images with a Kickstart file

You can use a Kickstart file to configure various parts of the installation process, such as setting up users, customizing partitioning, and adding an SSH key. You can include the Kickstart file in an ISO build to configure any part of the installation process, except the deployment of the base image. For ISOs with bootc container base images, you can use a Kickstart file to configure anything except the ostreecontainer command.

For example, you can use a Kickstart to perform either a partial installation, a full installation, or even omit the user creation. Use bootc-image-builder to build an ISO image that contains the custom Kickstart to configure your installation process.

Prerequisites

- You have Podman installed on your host machine.

- You have root access to run the bootc-image-builder tool, and run the containers in --privileged mode, to build the images.

Procedure

Create your Kickstart file, for example a fully unattended Kickstart configuration that contains user creation and partition instructions.

Save the Kickstart configuration in the toml format to inject the Kickstart content. For example, config.toml.

Run bootc-image-builder, and include the Kickstart file configuration that you want to add to the ISO build. The bootc-image-builder automatically adds the ostreecontainer command that installs the container image.

Note: If you do not have the container storage mount and --local image options, your image must be public.

You can create the ISO image from a public image or, with the container storage mount, from a private image. You can find the .iso image in the output folder.
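As an illustration of the Kickstart step above, the following sketch writes a config.toml that embeds an unattended Kickstart with user creation and partition instructions. The customizations.installer.kickstart table follows the upstream bootc-image-builder configuration format as far as we know. The user name admin and the password changeme are placeholders. The ostreecontainer command is deliberately left out, because the tool adds it.

```shell
#!/usr/bin/env bash
# Write an illustrative config.toml that injects a custom Kickstart.
# The user name and password below are placeholders; replace them.
cat > config.toml <<'EOF'
[customizations.installer.kickstart]
contents = """
text --non-interactive
zerombr
clearpart --all --initlabel --disklabel=gpt
autopart --noswap --type=lvm
network --bootproto=dhcp --device=link --activate --onboot=on
user --name=admin --groups=wheel --password=changeme --plaintext
reboot
"""
EOF

echo "Wrote $(pwd)/config.toml"
```

Prefer an SSH key or a hashed password over a plain-text password for anything beyond a local test.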

4.10. Verification and troubleshooting

- If you have any issues configuring the requirements for your AWS image, see the following documentation

- For more details on users, groups, SSH keys, and secrets, see Managing users, groups, SSH keys, and secrets in image mode for RHEL.

Chapter 5. Customizing disk images of RHEL image mode with advanced partitioning

There are 2 options to customize advanced partitions:

- Disk customizations

- Filesystem customizations

However, the two customizations are incompatible with each other. You cannot use both customizations in the same blueprint.

5.1. Understanding partitions

The following are the general principles about partitions:

- The full disk image size is always larger than the size of the sum of the partitions, due to requirements for headers and metadata. Consequently, all sizes are treated as minimum requirements, whether for specific filesystems, partitions, logical volumes, or the image itself.

- When the partition is automatically added, the partition that contains the root filesystem is always the last in the partition table layout. This is valid for a plain formatted partition, an LVM Volume Group, or a Btrfs partition. For disk customizations, the order that you defined is respected.

- For the raw partitioning, that is, with no LVM, the partition containing the root filesystem is grown to fill any leftover space on the partition table. Logical Volumes are not grown to fill the space in the Volume Group because they are simple to grow on a live system.

- Some directories have hard-coded minimum sizes which cannot be overridden. These are 1 GiB for / and 2 GiB for /usr. As a result, if /usr is not on a separate partition, the root filesystem size is at least 3 GiB.

5.2. The disk customizations option

The disk customizations option provides a more powerful interface to control the whole partitioning layout of the image.

- Allowed mountpoints

When using bootc-image-builder, only the following directories allow customization:

  - The / (root) directory.

  - Custom directories under /var, but not /var itself.

- Not allowed mountpoints

Under /var, the following mount points do not allow customization:

  - /var/home

  - /var/lock

  - /var/mail

  - /var/mnt

  - /var/roothome

  - /var/run

  - /var/srv

  - /var/usrlocal
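The two lists above can be turned into a quick pre-check before you write a blueprint. The following is a minimal sketch under stated assumptions: the function name check_mountpoint is hypothetical, it mirrors only the lists in this section, and it treats the not-allowed entries as exact matches. bootc-image-builder performs its own validation, which remains authoritative.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check that mirrors the allowed and not-allowed lists above.
# Prints "allowed" or "not allowed" for a single mountpoint.
check_mountpoint() {
  case "$1" in
    /)
      # The root directory can be customized.
      echo "allowed" ;;
    /var/home|/var/lock|/var/mail|/var/mnt|/var/roothome|/var/run|/var/srv|/var/usrlocal)
      # Reserved locations under /var.
      echo "not allowed" ;;
    /var/?*)
      # Any other custom directory under /var.
      echo "allowed" ;;
    *)
      # /var itself and everything outside / and /var.
      echo "not allowed" ;;
  esac
}

for mp in / /var /var/data /var/home /usr; do
  printf '%s: %s\n' "$mp" "$(check_mountpoint "$mp")"
done
```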

5.3. Describing disk customizations in a blueprint

When using the Disk customizations, you can describe the partition table almost entirely by using a blueprint. The customizations have the following structure:

- Partitions: The top level is a list of partitions.

- type: Each partition has a type, which can be either plain or lvm. If the type is not set, it defaults to plain. The remaining required and optional properties of the partition depend on the type.

- plain: A plain partition is a partition with a filesystem. It supports the following properties:

  - fs_type: The filesystem type, which should be one of xfs, ext4, vfat, or swap. Setting it to swap will create a swap partition. The mountpoint for a swap partition must be empty.

  - minsize: The minimum size of the partition, as an integer (in bytes) or a string with a data unit (for example 3 GiB). The final size of the partition in the image might be larger for specific mountpoints. See the Understanding partitions section.

  - mountpoint: The mountpoint for the filesystem. For swap partitions, this must be empty.

  - label: The label for the filesystem (optional).

- lvm: An lvm partition is a partition with an LVM volume group. Only volume groups with a single Physical Volume (PV) are supported. It supports the following properties:

  - name: The name of the volume group (optional; if unset, a name will be generated automatically).

  - minsize: The minimum size of the volume group, as an integer (in bytes) or a string with a data unit (for example 3 GiB). The final size of the partition and volume group in the image might be larger if the value is smaller than the sum of logical volumes it contains.

  - logical_volumes: One or more logical volumes for the volume group. Each logical volume supports the following properties:

    - name: The name of the logical volume (optional; if unset, a name will be generated automatically based on the mountpoint).

    - minsize: The minimum size of the logical volume, as an integer (in bytes) or a string with a data unit (for example 3 GiB). The final size of the logical volume in the image might be larger for specific mountpoints. See the Understanding partitions section for more details.

    - label: The label for the filesystem (optional).

    - fs_type: The filesystem type, which should be one of xfs, ext4, vfat, or swap. Setting it to swap will create a swap logical volume. The mountpoint for a swap logical volume must be empty.

    - mountpoint: The mountpoint for the logical volume’s filesystem. For swap logical volumes, this must be empty.

- Order:

The order of each element in a list is respected when creating the partition table. The partitions are created in the order they are defined, regardless of their type.

- Incomplete partition tables:

Incomplete partitioning descriptions are valid. Partitions and LVM logical volumes are added automatically to create a valid partition table. The following rules are applied:

  - A root filesystem is added if one is not defined. This is identified by the mountpoint /. If an LVM volume group is defined, the root filesystem is created as a logical volume, otherwise it will be created as a plain partition with a filesystem. The type of the filesystem, for plain and LVM, depends on the distribution (xfs for RHEL and CentOS, ext4 for Fedora). See the Understanding partitions section for information about the sizing and order of the root partition.

  - A boot partition is created if needed and if one is not defined. This is identified by the mountpoint /boot. A boot partition is needed when the root partition (mountpoint /) is on an LVM logical volume. It is created as the first partition after the ESP (see next item).

  - An EFI system partition (ESP) is created if needed. This is identified by the mountpoint /boot/efi. An ESP is needed when the image is configured to boot with UEFI. This is defined by the image definition and depends on the image type, the distribution, and the architecture. The type of the filesystem is always vfat. By default, the ESP is 200 MiB and is the first partition after the BIOS boot (see next item).

  - A 1 MiB unformatted BIOS boot partition is created at the start of the partition table if the image is configured to boot with BIOS. This is defined by the image definition and depends on the image type, the distribution, and the architecture. Both a BIOS boot partition and an ESP are created for images that are configured for hybrid boot.

- Combining partition types:

You can define multiple partitions. The following combination of partition types is valid:

  - plain and lvm: Plain partitions can be created alongside an LVM volume group. However, only one LVM volume group can be defined.

- Examples: Blueprint to define two partitions

The following blueprint defines two partitions. The first is a 50 GiB partition with an ext4 filesystem that will be mounted at /data. The second is an LVM volume group with three logical volumes, one for root /, one for home directories /home, and a swap space in that order. The LVM volume group will have 15 GiB of non-allocated space.
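A blueprint matching this description might look like the following sketch, written to config.toml from the shell. The customizations.disk.partitions tables follow the upstream bootc-image-builder configuration format as far as we know. The names mainvg, rootlv, homelv, and swaplv, the labels, and the logical volume sizes are assumptions chosen so that a 20 GiB volume group keeps 15 GiB unallocated (20 - 2 - 2 - 1).

```shell
#!/usr/bin/env bash
# Write an illustrative disk customization to ./config.toml.
# Volume group and logical volume names are placeholders.
cat > config.toml <<'EOF'
# First partition: 50 GiB ext4 filesystem mounted at /data.
[[customizations.disk.partitions]]
type = "plain"
label = "data"
mountpoint = "/data"
fs_type = "ext4"
minsize = "50 GiB"

# Second partition: LVM volume group with three logical volumes.
[[customizations.disk.partitions]]
type = "lvm"
name = "mainvg"
minsize = "20 GiB"

[[customizations.disk.partitions.logical_volumes]]
name = "rootlv"
mountpoint = "/"
label = "root"
fs_type = "xfs"
minsize = "2 GiB"

[[customizations.disk.partitions.logical_volumes]]
name = "homelv"
mountpoint = "/home"
label = "home"
fs_type = "xfs"
minsize = "2 GiB"

# Swap logical volume: no mountpoint.
[[customizations.disk.partitions.logical_volumes]]
name = "swaplv"
fs_type = "swap"
minsize = "1 GiB"
EOF

echo "Wrote $(pwd)/config.toml"
```

Because the logical volumes are listed in the order root, home, swap, they are created in that order inside the volume group.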

5.4. The filesystem customization option

The filesystem customization option provides the final partition table of an image that you built with image builder, and it is determined by a combination of the following factors:

- The base partition table for a given image type.

- The relevant blueprint customizations:

  - Partitioning mode.

  - Filesystem customizations.

- The image size parameter of the build request:

  - On the command line, this is the --size option of the composer-cli compose start command.

The following describes how these factors affect the final layout of the partition table.

- Modifying partition tables

You can modify the partition table by taking the following aspects into consideration:

- Partitioning modes

The partitioning mode controls how the partition table is modified from the image type’s default layout.

  - The raw partition type does not convert any partition to LVM.

  - The lvm partition type always converts the partition that contains the / root mountpoint to an LVM Volume Group and creates a root Logical Volume. Except for /boot, any extra mountpoint is added to the Volume Group as a new Logical Volume.

  - The auto-lvm mode is the default mode and converts a raw partition table to an LVM-based one if and only if new mountpoints are defined in the filesystems customization. See the Mountpoints entry for more details.

- Mountpoints

You can define new filesystems and minimum partition sizes by using the filesystems customization in the blueprint. By default, if new mountpoints are created, a partition table is automatically converted to LVM. See the Partitioning modes entry for more details.

- Image size

The minimum size of the partition table is the size of the disk image. The final size of the image will either be the value of the size parameter or the sum of all partitions and their associated metadata, whichever is larger.

5.5. Creating images with specific sizes

To create a disk image of a very specific size, you must ensure that the following requirements are met:

- You must specify the exact [Image size] in the build request.

- The mountpoints that you define as customizations must specify sizes that are smaller than the total size in sum. This is required because the partition table, partitions, and other entities often require extra space for metadata and headers, so the space required to fit all the mountpoints is always larger than the sum of the size of the partitions. However, the exact size of the extra space that is required varies based on many factors, such as the image type.

The following are overall steps to create a disk image of a very specific size in the TOML file:

- Set the [Image size] parameter to the size that you want.

- Add any extra mountpoints with their required minimum sizes. Ensure that the sum of the sizes is smaller than the image size by at least 3.01 GiB if there is no /usr mountpoint, or at least 1.01 GiB if there is. The extra 0.01 GiB is more than enough for the headers and metadata for which extra space might be reserved.

- Do not specify a size for the / mountpoint.

With this, you create a disk with a partition table of the desired size with each partition sized to fit the desired mountpoints. The root partition, or root LVM Logical Volume, will be at least 3 GiB, or 1 GiB if /usr is specified. See Understanding partitions for more details.

- If the partition table does not have any LVM Volume Groups (VG), the root partition will be grown to fill the remaining space.

- If the partition table contains an LVM Volume Group (VG), the VG will have unallocated extents that can be used to grow any of the Logical Volumes.
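The sizing rule above can be worked through with a short calculation. The following sketch uses a hypothetical helper, max_extra_mountpoints_mib, that subtracts the reserved amount from the image size. Values are in MiB, with 3.01 GiB and 1.01 GiB rounded up to 3083 MiB and 1035 MiB.

```shell
#!/usr/bin/env bash
# Hypothetical helper: largest total size, in MiB, that the extra mountpoints
# may request for a given image size, following the rule described above.
max_extra_mountpoints_mib() {
  local image_mib="$1"   # requested image size, in MiB
  local has_usr="$2"     # "yes" if a separate /usr mountpoint is defined
  local reserve_mib
  if [ "$has_usr" = "yes" ]; then
    reserve_mib=1035     # 1.01 GiB, rounded up to whole MiB
  else
    reserve_mib=3083     # 3.01 GiB, rounded up to whole MiB
  fi
  echo $(( image_mib - reserve_mib ))
}

# 10 GiB image (10240 MiB) without a /usr mountpoint: prints 7157
max_extra_mountpoints_mib 10240 no
# 10 GiB image (10240 MiB) with a /usr mountpoint: prints 9205
max_extra_mountpoints_mib 10240 yes
```

In the second case, the /usr mountpoint itself counts toward the sum of the extra mountpoints.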

5.6. Using bootc-image-builder to add advanced partitioning to disk images of image mode

You can customize your bootc-image-builder blueprint to implement advanced partitioning for osbuild-composer. The following are possible custom mountpoints:

- You can create LVM-based images with all partitions on LVs, except /boot and /boot/efi.

- You can create an LV-based swap.

- You can give VGs and LVs custom names.

Include partitioning configurations in the base image that bootc-image-builder will read to create the partitioning layout, making the container itself the source of truth for the partition table. Mountpoints for partitions and logical volumes should be created in the base container image used to build the disk. This is particularly important for top-level mountpoints, such as the /app mountpoint. The bootc-image-builder will validate the configuration against the bootc container before building, in order to avoid creating unbootable images.

Prerequisites

- You have Podman installed on your host machine.

- You have root access to run the bootc-image-builder tool, and run the containers in --privileged mode, to build the images.

- QEMU is installed.

Procedure

Create a config.toml file that contains your partitioning customizations and adds a user to the image.

Run bootc-image-builder. Optionally, if you want to use user access configuration, pass the config.toml as an argument. For example, create a QCOW2 image from a public container image.

You can find the .qcow2 image in the output folder.

Verification

Run the resulting QCOW2 file on a virtual machine.

Access the system in the virtual machine launched with SSH.

    # ssh -i /<path_to_private_ssh-key> <user1>@<ip-address>

Next steps

- You can deploy your image. See Deploying a container image using KVM with a QCOW2 disk image.

- You can make updates to the image and push the changes to a registry. See Managing RHEL bootc images.

5.7. Building disk images of image mode RHEL with advanced partitioning

Create image mode disk images with advanced partitioning by using bootc-image-builder. The disk images of image mode RHEL that you create with custom mount points can include custom mount options, LVM-based partitions, and LVM-based swap. With that you can, for example, change the size of the / and the /boot directories by using a config.toml file. When installing the RHEL image mode on bare-metal machines, you can benefit from all partitioning features available on Anaconda.

Prerequisites

- You have Podman installed on your host machine.

- You have virt-install installed on your host machine.

- You have root access to run the bootc-image-builder tool, and run the containers in --privileged mode, to build the images.

Procedure

Create a config.toml to configure custom mount options.

Run bootc-image-builder, passing the config.toml as an argument.

Note: If you do not have the container storage mount and --local image options, your image must be public.

You can find your image with the customized advanced partitioning in the output folder.
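As a sketch of the config.toml for this procedure, the following writes a filesystem customization that sets minimum sizes for / and /boot, the two directories named in the introduction of this section. The customizations.filesystem tables follow the upstream bootc-image-builder configuration format as far as we know. The sizes are arbitrary placeholders.

```shell
#!/usr/bin/env bash
# Write an illustrative filesystem customization to ./config.toml.
# The sizes are placeholders; adjust them to your needs.
cat > config.toml <<'EOF'
[[customizations.filesystem]]
mountpoint = "/"
minsize = "10 GiB"

[[customizations.filesystem]]
mountpoint = "/boot"
minsize = "1 GiB"
EOF

echo "Wrote $(pwd)/config.toml"
```

Filesystem customizations and disk customizations cannot be combined in the same blueprint, so keep this file separate from any customizations.disk configuration.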

Chapter 6. Best practices for running containers using local sources

You can access content hosted in an internal registry that requires a custom Transport Layer Security (TLS) root certificate, when running RHEL bootc images.

There are two options available to install content to a container by using only local resources:

- Bind mounts: Use, for example, -v /etc/pki:/etc/pki to override the container’s store with the host’s.

- Derived image: Create a new container image with your custom certificates by building it using a Containerfile.

You can use the same techniques to run a bootc-image-builder container or a bootc container when appropriate.

6.1. Importing custom certificate to a container by using bind mounts

Use bind mounts to override the container’s store with the host’s.

Procedure

Run the RHEL bootc image and use a bind mount, for example -v /etc/pki:/etc/pki, to override the container’s store with the host’s.

Verification

List certificates inside the container:

    # ls -l /etc/pki

6.2. Importing custom certificates to a container by using Containerfile

Create a new container image with your custom certificates by building it using a Containerfile.

Procedure

Create a Containerfile:

    FROM <internal_repository>/<image>
    RUN mkdir -p /etc/pki/ca-trust/extracted/pem/
    COPY tls-ca-bundle.pem /etc/pki/ca-trust/extracted/pem/
    RUN rm -rf /etc/yum.repos.d/*
    COPY echo-rhel9_4.repo /etc/yum.repos.d/

Build the custom image:

    # podman build -t <your_image> .

Run the <your_image>:

    # podman run -it --rm <your_image>

Verification

List the certificates inside the container:

    # ls -l /etc/pki/ca-trust/extracted/pem/
    tls-ca-bundle.pem

Chapter 7. Deploying the RHEL bootc images

You can deploy the rhel-bootc container image by using the following different mechanisms.

- Anaconda

- bootc-image-builder

- bootc install

The following bootc image types are available:

- Disk images that you generated by using bootc-image-builder, such as:

  - QCOW2 (QEMU copy-on-write, virtual disk)

  - Raw (Mac Format)

  - AMI (Amazon Cloud)

- ISO: Unattended installation method, by using a USB stick or Install-on-boot.

After you have created a layered image that you can deploy, there are several ways that the image can be installed to a host:

- You can use the RHEL installer and Kickstart to install the layered image to a bare metal system, by using the following mechanisms:

  - Deploy by using USB

  - PXE

- You can also use bootc-image-builder to convert the container image to a bootc image and deploy it to a bare metal or to a cloud environment.

The installation method happens only one time. After you deploy your image, any future updates will apply directly from the container registry as the updates are published.

Figure 7.1. Deploying a bootc image by using a basic build installer bootc install, or deploying a container image by using Anaconda and Kickstart

Figure 7.2. Using bootc-image-builder to create disk images from bootc images and deploying disk images in different environments, such as the edge, servers, and clouds by using Anaconda, bootc-image-builder or bootc install

7.1. Deploying a container image by using KVM with a QCOW2 disk image

After creating a QCOW2 image from a RHEL bootc image by using the bootc-image-builder tool, you can use a virtualization software to boot it.

Prerequisites

- You created a container image.

- You pushed the container image to an accessible repository.

- You created a QCOW2 image by using bootc-image-builder. For instructions, see Creating QCOW2 images by using bootc-image-builder.

Procedure

By using libvirt, create a virtual machine (VM) with the disk image that you previously created from the container image. For more details, see Creating virtual machines by using the command line.

For example, you can use virt-install to create the VM. Use the path to your QCOW2 file, shown in this document as <qcow2/disk.qcow2>, as the disk of the VM.

Verification

- Connect to the VM in which you are running the container image. See Configuring bridges on a network bond to connect virtual machines with the network for more details.

Next steps

- You can make updates to the image and push the changes to a registry. See Managing RHEL bootc images.

7.2. Deploying a container image to AWS with an AMI disk image

After using the bootc-image-builder tool to create an AMI from a bootc image, and uploading it to an AWS S3 bucket, you can deploy a container image to AWS with the AMI disk image.

Prerequisites

- You created an Amazon Machine Image (AMI) from a bootc image. See Creating AMI images by using bootc-image-builder and uploading it to AWS.

- cloud-init is available in the Containerfile that you previously created so that you can create a layered image for your use case.

Procedure

- In a browser, access Service→EC2 and log in.

- On the AWS console dashboard menu, choose the correct region. The image must have the Available status, to indicate that it was correctly uploaded.

- On the AWS dashboard, select your image and click .

- In the new window that opens, choose an instance type according to the resources you need to start your image. Click .

- Review your instance details. You can edit each section if you need to make any changes. Click .

- Before you start the instance, select a public key to access it. You can either use the key pair you already have or you can create a new key pair.

Click to start your instance. You can check the status of the instance, which displays as Initializing.

After the instance status is Running, the button becomes available.

- Click . A window appears with instructions on how to connect by using SSH.

Run the following command to set the permissions of your private key file so that only you can read it. See Connect to your Linux instance.

    $ chmod 400 <your-instance-name.pem>

Connect to your instance by using its Public DNS:

    $ ssh -i <your-instance-name.pem> ec2-user@<your-instance-IP-address>

Your instance continues to run unless you stop it.

Verification

After launching your image, you can:

- Try to connect to http://<your_instance_ip_address> in a browser.

- Check if you are able to perform any action while connected to your instance by using SSH.

7.3. Deploying a container image from the network by using Anaconda and Kickstart

You can deploy an ISO image by using Anaconda and Kickstart to install your container image. The installable boot ISO already contains the ostreecontainer Kickstart file configured that you can use to provision your custom container image.

Prerequisites

- You have downloaded the 9.4 Boot ISO for your architecture from Red Hat. See Downloading RH boot images.

Procedure

Create an ostreecontainer Kickstart file to fetch the image from the network.

Boot a system by using the 9.4 Boot ISO installation media.

Append the following to the kernel arguments to point the installer at your Kickstart file:

    inst.ks=http://<path_to_your_kickstart>

- Press CTRL+X to boot the system.
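The Kickstart file from the first step of this procedure might look like the following sketch, written to a file from the shell. The file name bootc.ks, the partitioning layout, and the root SSH key placeholder are assumptions. The ostreecontainer --url value uses the same <namespace>/<image>:<tag> placeholder as the rest of this document and must point at your own container image.

```shell
#!/usr/bin/env bash
# Write an illustrative ostreecontainer Kickstart file to ./bootc.ks.
# Replace the image reference and the SSH key placeholder before use.
cat > bootc.ks <<'EOF'
# Basic setup
text
network --bootproto=dhcp --device=link --activate

# Basic partitioning
clearpart --all --initlabel --disklabel=gpt
reqpart --add-boot
part / --grow --fstype xfs

# Install the container image. There is no package selection section,
# because the content comes from the container image.
ostreecontainer --url quay.io/<namespace>/<image>:<tag>

firewall --disabled
services --enabled=sshd

# Lock the root password and inject only an SSH key for root
rootpw --iscrypted locked
sshkey --username root "<your_public_ssh_key>"
reboot
EOF

echo "Wrote $(pwd)/bootc.ks"
```

Serve the file over HTTP from a host that the installer can reach, and use that URL as the inst.ks value.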

7.4. Deploying a custom ISO container image in disconnected environments

By using bootc-image-builder to convert a bootc image to an ISO image, you create a system similar to the RHEL ISOs available for download, except that your container image content is embedded in the ISO disk image. You do not need to have access to the network during installation. You can install the ISO disk image that you created from bootc-image-builder to a bare metal system.

Prerequisites

- You have created an ISO image with your bootc image embedded.

Procedure

- Copy your ISO disk image to a USB flash drive.

- Perform a bare-metal installation in the disconnected environment by using the content on the USB flash drive.

7.5. Deploying an ISO bootc image over PXE boot

You can use a network installation to deploy the RHEL ISO image over PXE boot to run your ISO bootc image.

Prerequisites

- You have downloaded the 9.4 Boot ISO for your architecture from Red Hat. See Downloading RH boot images.

You have configured the server for the PXE boot. Choose one of the following options:

- For HTTP clients, see Configuring the DHCPv4 server for HTTP and PXE boot.

- For UEFI-based clients, see Configuring a TFTP server for UEFI-based clients.

- For BIOS-based clients, see Configuring a TFTP server for BIOS-based clients.

- You have a client, also known as the system to which you are installing your ISO image.

Procedure

- Export the RHEL installation ISO image to the HTTP server. The PXE boot server is now ready to serve PXE clients.

- Boot the client and start the installation.

- Select PXE Boot when prompted to specify a boot source. If the boot options are not displayed, press the Enter key on your keyboard or wait until the boot window opens.

- From the Red Hat Enterprise Linux boot window, select the boot option that you want, and press Enter.

- Start the network installation.

Next steps

- You can make updates to the image and push the changes to a registry. See Managing RHEL bootc images.

7.6. Injecting configuration in the resulting disk images with bootc-image-builder

You can inject configuration into a custom image by using a build config, that is, a .toml or a .json file with customizations for the resulting image. The build config file is mapped into the container at /config.toml. The following procedure shows how to add a user to the resulting disk image.

Procedure

- Create a ./config.toml file. The following example shows how to add a user to the disk image:

[[customizations.user]]
name = "user"
password = "pass"
key = "ssh-rsa AAA ... user@email.com"
groups = ["wheel"]

  - name: Mandatory. Name of the user.

  - password: Not mandatory. Nonencrypted password.

  - key: Not mandatory. Public SSH key contents.

  - groups: Not mandatory. An array of groups to add the user into.

- Run bootc-image-builder and pass the following arguments, including the config.toml:

- Launch a VM, for example, by using virt-install:
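The first two steps of this procedure can be sketched as follows. This is an illustration under stated assumptions, not the documented command: the podman options, the qcow2 output type, the output directory, and the helper name builder_command are assumptions modeled on common bootc-image-builder usage, and the image reference is a placeholder. The script writes the config.toml from the first step and only prints the builder command, so you can review it before running it as root.

```shell
# Sketch only: write the build config and print the builder command.
workdir=${WORKDIR:-$PWD}

# Same user customization as in the first step of the procedure.
cat > "$workdir/config.toml" <<'EOF'
[[customizations.user]]
name = "user"
password = "pass"
key = "ssh-rsa AAA ... user@email.com"
groups = ["wheel"]
EOF

# Hypothetical helper: print (not run) a bootc-image-builder invocation.
# $1: directory that holds config.toml, $2: bootc image reference
builder_command() {
    # Assumption: the config is bind-mounted to /config.toml and the disk
    # image is written under $1/output; newer builder versions also read
    # the bootc image from the local container storage.
    echo "podman run --rm -it --privileged --pull=newer" \
        "--security-opt label=type:unconfined_t" \
        "-v $1/config.toml:/config.toml" \
        "-v $1/output:/output" \
        "-v /var/lib/containers/storage:/var/lib/containers/storage" \
        "registry.redhat.io/rhel9/bootc-image-builder:latest" \
        "--type qcow2 --config /config.toml $2"
}

builder_command "$workdir" "quay.io/<namespace>/<image>:<tag>"
```

Printing the command instead of running it keeps the sketch safe to try on any machine. Replace the placeholder image reference before you run the printed command.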

Verification

- Access the system with SSH:

# ssh -i /<path_to_private_ssh-key> <user1>@<ip-address>

Next steps

- After you deploy your container image, you can make updates to the image and push the changes to a registry. See Managing RHEL bootable images.

7.7. Deploying a container image to bare metal by using bootc install

You can perform a bare-metal installation to a device by using a RHEL ISO image. bootc contains a basic built-in installer, which is available through the following methods: bootc install to-disk or bootc install to-filesystem.

- bootc install to-disk: By using this method, you do not need to perform any additional steps to deploy the container image, because the container images include a basic installer.

- bootc install to-filesystem: By using this method, you can configure a target device and root filesystem by using a tool of your choice, for example, LVM.

Prerequisites

- You have downloaded a RHEL 10 Boot ISO from Red Hat for your architecture. See Downloading RHEL boot images.

- You have created a configuration file.

Procedure

- Inject a configuration into the running ISO image.

  - By using bootc install to-disk:

  - By using bootc install to-filesystem:
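The two methods above can be sketched as follows. This is an assumption-laden illustration, not the documented command: the podman options are modeled on the bootc install to-existing-root invocation shown later in this chapter, the helper name bootc_install_command is hypothetical, and the image reference, /dev/vda, and /mnt are placeholders. For to-filesystem, the sketch assumes that the prepared root filesystem is already mounted on the host and bind-mounts it into the container at /target. The helper only prints the commands.

```shell
# Hypothetical helper: print (not run) a bootc install command.
# $1: method (to-disk or to-filesystem), $2: bootc image reference,
# $3: target block device (to-disk) or mounted root filesystem (to-filesystem)
bootc_install_command() {
    common="podman run --rm --privileged --pid=host -v /dev:/dev -v /var/lib/containers:/var/lib/containers --security-opt label=type:unconfined_t"
    case "$1" in
        to-disk)
            # The installer partitions and formats the whole device itself.
            echo "$common $2 bootc install to-disk $3"
            ;;
        to-filesystem)
            # Assumption: expose the mounted filesystem at /target in the container.
            echo "$common -v $3:/target $2 bootc install to-filesystem /target"
            ;;
        *)
            echo "unknown method: $1" >&2
            return 1
            ;;
    esac
}

bootc_install_command to-disk "quay.io/<namespace>/<image>:<tag>" /dev/vda
bootc_install_command to-filesystem "quay.io/<namespace>/<image>:<tag>" /mnt
```

Both variants erase data on the target, so review the printed command and the target path before you run it as root.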

7.8. Deploying a container image by using a single command

The system-reinstall-bootc command provides an interactive CLI that wraps the bootc install to-existing-root command. You can deploy a container image into a RHEL cloud instance by using a single command. The system-reinstall-bootc command performs the following actions:

- Pull the supplied image to set up SSH keys or access the system.

- Run the bootc install to-existing-root command with all the bind mounts and SSH keys configured.

The following procedure deploys a bootc image to a new RHEL 10 instance on AWS. When launching the instance, make sure to select your SSH key, or create a new one. Otherwise, the default instance configuration settings can be used.

Prerequisites

- You have a Red Hat account or access to Red Hat RPMs.

- You have a package-based RHEL (9.6 / 10.0 or later) virtual system running in an AWS environment.

- You have the ability and permissions to connect to the package-based system over SSH and make "destructive changes."

Procedure

- After the instance starts, connect to it over SSH with the key you selected when creating the instance:

$ ssh -i <ssh-key-file> <cloud-user@ip>

- Make sure that the system-reinstall-bootc subpackage is installed:

# rpm -q system-reinstall-bootc

If not, install the system-reinstall-bootc subpackage:

# dnf -y install system-reinstall-bootc

- Convert the system to use a bootc image:

# system-reinstall-bootc <image>

  - You can use the container image from the Red Hat Ecosystem Catalog or the customized bootc image built from a Containerfile.

  - Select users to import to the bootc image by pressing the "a" key.

  - Confirm your selection twice and wait until the image is downloaded.

- Reboot the system:

# reboot

- Remove the stored SSH host key for the given <ip> from your ~/.ssh/known_hosts file:

# ssh-keygen -R <ip>

The bootc system now uses a new public SSH host key. When you connect to the same IP address and the host presents a different key than the one stored locally, SSH raises a warning or refuses the connection because of the host key mismatch. Because this change is expected, you can safely remove the existing host key entry from the ~/.ssh/known_hosts file with the preceding command.

- Connect to the bootc system:

# ssh -i <ssh-key-file> root@<ip>

Verification

- Confirm that the system OS has changed:

# bootc status

7.9. Advanced installation with to-filesystem

The bootc install command contains two subcommands: bootc install to-disk and bootc install to-filesystem.

- The bootc install to-filesystem subcommand performs installation to the target filesystem.

- The bootc install to-disk subcommand consists of a set of opinionated lower-level tools that you can also call independently. The command consists of the following tools:

  - mkfs.$fs /dev/disk

  - mount /dev/disk /mnt

  - bootc install to-filesystem --karg=root=UUID=<uuid of /mnt> --imgref $self /mnt
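The three lower-level steps above leave open how to obtain the UUID for the root= kernel argument. As a minimal sketch, the following helper prints the sequence for one device and adds a blkid call to read the UUID of the new filesystem. The helper name to_disk_steps, the xfs type, and /dev/vdb1 are assumptions for illustration. The --karg and --imgref arguments are copied as they appear in the list above and are not verified here against a specific bootc version.

```shell
# Hypothetical helper: print (not run) the lower-level installation steps.
# $1: filesystem type, $2: block device, $3: mount point, $4: image reference
to_disk_steps() {
    echo "mkfs.$1 $2"
    echo "mount $2 $3"
    # blkid reads the UUID of the filesystem that mkfs created on the device.
    echo "uuid=\$(blkid -s UUID -o value $2)"
    echo "bootc install to-filesystem --karg=root=UUID=\$uuid --imgref $4 $3"
}

to_disk_steps xfs /dev/vdb1 /mnt "quay.io/<namespace>/<image>:<tag>"
```

Because the helper only prints the commands, you can swap the mkfs step for any other storage setup, for example LVM, and keep the final bootc install to-filesystem call unchanged.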

7.9.1. Using bootc install to-existing-root

The bootc install to-existing-root command is a variant of bootc install to-filesystem. You can use it to convert an existing system into the target container image.

This conversion wipes the /boot and /boot/efi partitions and can delete the existing Linux installation. The conversion process reuses the filesystem, and even though the user data is preserved, the system no longer boots in package mode.

Prerequisites

- You must have root permissions to complete the procedure.

- You must match the host environment and the target container version. For example, if your host is a RHEL 9 host, then you must have a RHEL 9 container. Installing a RHEL container on a Fedora host that uses btrfs fails, because the RHEL kernel does not support that filesystem.

Procedure

- Run the following command to convert an existing system into the target container image. Pass the target rootfs by using the -v /:/target option.

# podman run --rm --privileged -v /dev:/dev -v /var/lib/containers:/var/lib/containers -v /:/target \
    --pid=host --security-opt label=type:unconfined_t \
    <image> \
    bootc install to-existing-root

This command deletes the data in /boot, but everything else in the existing operating system is not automatically deleted. This can be useful because the new image can automatically import data from the previous host system. Consequently, container images, databases, the user home directory data, and configuration files in /etc are all available after the subsequent reboot in /sysroot.

You can also use the --root-ssh-authorized-keys flag to inherit the root user SSH keys, by adding --root-ssh-authorized-keys /target/root/.ssh/authorized_keys. For example:

# podman run --rm --privileged -v /dev:/dev -v /var/lib/containers:/var/lib/containers -v /:/target \
    --pid=host --security-opt label=type:unconfined_t \
    <image> \
    bootc install to-existing-root --root-ssh-authorized-keys /target/root/.ssh/authorized_keys

Chapter 8. Creating bootc images from scratch

With bootc images from scratch, you can have control over the underlying image content, and tailor your system environment to your requirements.

You can use the bootc-base-imagectl command to create a bootc image from scratch by using an existing bootc base image as a build environment, providing greater control over the content included in the build process. This process takes the user RPMs as input, so you need to rebuild the image if the RPMs change.

The custom base derives from the base container, and does not automatically consume changes to the default base image unless you make them part of a container pipeline.

You can use the bootc-base-imagectl rechunk subcommand on any bootc container image.

If you want to perform kernel management, you do not need to create a bootc image from scratch. See Managing kernel arguments in bootc systems.

8.1. Using pinned content to build images

To ensure that the base image contains a set of packages at exact versions, for example, versions defined by a lockfile or by an rpm-md or yum repository, you can use several tools to manage snapshots of rpm-md or yum repositories.

With the bootc image from scratch feature, you can configure and override package information in source RPM repositories, while referencing mirrored, pinned, or snapshotted repository content. Consequently, you gain control over package versions and their dependencies.

For example, you might want to gain control over package versions and their dependencies in the following situations:

- You need to use a specific package version because of strict certification and compliance requirements.

- You need to use specific software versions to support critical dependencies.

Prerequisites

- A standard bootc base image.

Procedure

The following example creates a bootc image from scratch with pinned content:
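As an illustrative sketch only, the following script writes a two-stage Containerfile of the kind this section describes. Everything in it is an assumption rather than the documented example: the mypinnedcontent.repo file name is hypothetical, the helper name write_containerfile is made up for this sketch, and the bootc-base-imagectl build-rootfs invocation and the labels follow the upstream tooling as commonly used, so verify them against the version in your base image. The script does not build anything.

```shell
# Hypothetical helper: write an example Containerfile into directory $1.
write_containerfile() {
    cat > "$1/Containerfile" <<'EOF'
# Reuse a standard bootc base image as the build environment.
FROM registry.redhat.io/rhel9/rhel-bootc:latest AS builder
# Replace the default repositories with pinned content.
# mypinnedcontent.repo is a hypothetical file next to this Containerfile.
RUN rm -rf /etc/yum.repos.d/*
COPY mypinnedcontent.repo /etc/yum.repos.d/
# Build a root filesystem from the configured repositories (assumed invocation).
RUN /usr/libexec/bootc-base-imagectl build-rootfs --manifest=standard /target-rootfs

# Start the final image from an empty base and copy the root filesystem in.
FROM scratch
COPY --from=builder /target-rootfs/ /
# Labels that mark the image as a bootc image.
LABEL containers.bootc 1
LABEL ostree.bootable 1
# Keep systemd as the entry point when the image runs as a container.
STOPSIGNAL SIGRTMIN+3
CMD ["/sbin/init"]
EOF
}

# Example: write_containerfile .
```

The repository file that you copy into the builder stage decides which package versions end up in the image, so point it at your mirrored, pinned, or snapshotted content.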


Verification steps

- Save and build your image.

$ podman build -t quay.io/<namespace>/<image>:<tag> . --cap-add=all --security-opt=label=type:container_runtime_t --device /dev/fuse

- Build the <image> image by using the Containerfile in the current directory:

$ podman build -t quay.io/<namespace>/<image>:<tag> .

8.2. Building a base image up from minimal