Chapter 8. Managing Snapshots

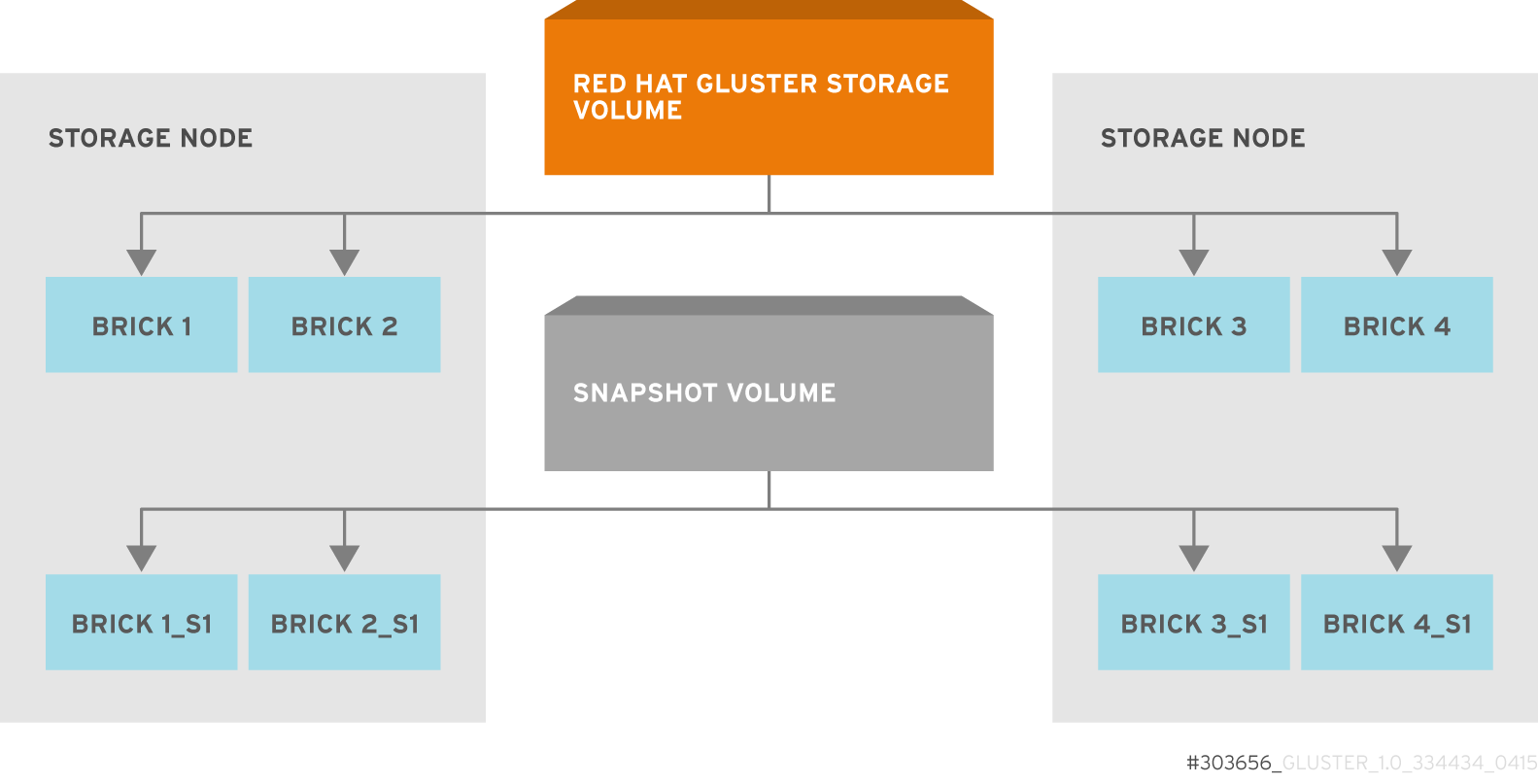

Figure 8.1. Snapshot Architecture

- Crash Consistency

A crash-consistent snapshot captures the volume at a particular point in time. When a crash-consistent snapshot is restored, the data is identical to what it was when the snapshot was taken.

Note

Currently, application-level consistency is not supported.

- Online Snapshot

Snapshots are taken online, so the file system and its associated data remain available to clients even while the snapshot is being taken.

- Quorum Based

The quorum feature ensures that the volume is in a good state while some bricks are down. For n-way replication where n <= 2, quorum is not met if any brick is down. For n-way replication where n >= 3, quorum is met when m bricks are up, where m >= (n/2 + 1) if n is odd, and m >= n/2 with the first brick up if n is even. If quorum is not met, snapshot creation fails.
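The brick-level quorum rule can be expressed as a small bash function. This is a minimal sketch, not glusterd's actual check: the function name and its arguments are hypothetical, it evaluates a single n-way replica set, and it reads n/2 as integer division (so, under that reading, a 3-way set needs 2 bricks up).

```bash
#!/usr/bin/env bash
# snapshot_quorum_met N M FIRST_UP   (hypothetical helper)
#   N         number of bricks in the replica set (n-way replication)
#   M         number of those bricks that are up
#   FIRST_UP  1 if the first brick of the replica set is up, otherwise 0
# Prints "met" or "not met"; returns 0 when quorum is met, 1 otherwise.
# n/2 is evaluated with integer division.
snapshot_quorum_met() {
    local n=$1 m=$2 first_up=$3 ok=0
    if (( n <= 2 )); then
        # 1-way or 2-way replication: no brick may be down.
        if (( m == n )); then ok=1; fi
    elif (( n % 2 == 1 )); then
        # Odd n >= 3: m >= n/2 + 1.
        if (( m >= n / 2 + 1 )); then ok=1; fi
    else
        # Even n >= 4: m >= n/2 and the first brick must be up.
        if (( m >= n / 2 && first_up == 1 )); then ok=1; fi
    fi
    if (( ok == 1 )); then
        echo "met"
    else
        echo "not met"
        return 1
    fi
}
```

For example, `snapshot_quorum_met 3 2 0` prints `met`, `snapshot_quorum_met 2 1 1` prints `not met`, and `snapshot_quorum_met 4 2 1` prints `met`.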

Note

The snapshot quorum check is a Technology Preview feature. Snapshot delete and restore check node-level quorum instead of brick-level quorum: they succeed only when m nodes of an n-node cluster are up, where m >= (n/2 + 1).

- Barrier

To guarantee crash consistency, some file operations (fops) are blocked during a snapshot operation.

These fops are blocked until the snapshot is complete; all other fops are passed through. The barrier has a default timeout of 2 minutes: if the snapshot is not complete within that time, the blocked fops are released (unbarriered). If the barrier is lifted before the snapshot is complete, the snapshot operation fails. This ensures that the snapshot is in a consistent state.
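The barrier behavior can be illustrated with a toy model in bash. This sketch is an illustration under assumptions, not how glusterd implements the barrier: a stand-in command plays the role of the snapshot, GNU `timeout` enforces the barrier window, and the function and variable names are hypothetical.

```bash
#!/usr/bin/env bash
# Toy model of the snapshot barrier (not glusterd's implementation):
# the barrier is raised while a stand-in "snapshot" command runs and is
# lifted when the command finishes or the timeout expires, whichever
# comes first. A timeout means the snapshot fails.
BARRIER_TIMEOUT=${BARRIER_TIMEOUT:-120}   # seconds; default from the text

# with_barrier COMMAND [ARGS...]   (hypothetical helper)
with_barrier() {
    local rc
    echo "barrier raised: barriered fops are held"
    timeout "$BARRIER_TIMEOUT" "$@"
    rc=$?
    echo "barrier lifted: held fops are passed through"
    if (( rc == 124 )); then
        # GNU timeout exits with status 124 when it had to stop the command.
        echo "snapshot failed: timed out before completion"
        return 1
    elif (( rc != 0 )); then
        echo "snapshot failed: command exited with status $rc"
        return "$rc"
    fi
    echo "snapshot completed within the barrier window"
}
```

For example, `BARRIER_TIMEOUT=1 with_barrier sleep 5` reports a failed snapshot after about one second, while `with_barrier sleep 1` completes inside the default 120-second window.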


8.1. Prerequisites

- Snapshots are based on thinly provisioned LVM. Ensure that the volume is based on LVM2. Red Hat Gluster Storage is supported on Red Hat Enterprise Linux 6.7 and later and Red Hat Enterprise Linux 7.1 and later; both versions use LVM2 by default. For more information, see https://access.redhat.com/site/documentation/en-US/Red_Hat_Enterprise_Linux/6/html/Logical_Volume_Manager_Administration/thinprovisioned_volumes.html

- Each brick must be an independent thinly provisioned logical volume (LV).

- The logical volume which contains the brick must not contain any data other than the brick.

- Only linear LVM is supported with Red Hat Gluster Storage. For more information, see https://access.redhat.com/site/documentation/en-US/Red_Hat_Enterprise_Linux/4/html-single/Cluster_Logical_Volume_Manager/#lv_overview

- Each snapshot creates as many bricks as there are in the original Red Hat Gluster Storage volume. By default, bricks use privileged ports to communicate, and the total number of privileged ports on a system is limited to 1024. Hence, to support 256 snapshots per volume, the following options must be set. These changes allow bricks and glusterd to communicate using non-privileged ports.

- Run the following command to permit insecure ports:

gluster volume set VOLNAME server.allow-insecure on

- Edit the /etc/glusterfs/glusterd.vol file on each Red Hat Gluster Storage node, and add the following setting:

option rpc-auth-allow-insecure on

- Restart the glusterd service on each Red Hat Gluster Storage node using the following command:

service glusterd restart
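A rough count shows why 256 snapshots can exhaust the privileged range. The sketch below assumes, for illustration only, that each brick of the volume and each snapshot brick on a node needs one privileged port; in practice other services also bind ports in that range. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Rough estimate of brick processes on one node once snapshots exist.
# Assumption (illustrative): every original brick and every snapshot
# brick on the node needs one privileged port.
PRIVILEGED_PORTS=1024   # ports 0-1023

# bricks_with_snapshots BRICKS_ON_NODE SNAPSHOTS
bricks_with_snapshots() {
    echo $(( $1 * ($2 + 1) ))
}

# fits_in_privileged_ports BRICKS_ON_NODE SNAPSHOTS
# Returns 0 if the estimate stays below the privileged range, 1 otherwise.
fits_in_privileged_ports() {
    local total
    total=$(bricks_with_snapshots "$1" "$2")
    if (( total < PRIVILEGED_PORTS )); then
        echo "ok: $total brick processes"
    else
        echo "exceeds: $total brick processes vs $PRIVILEGED_PORTS privileged ports"
        return 1
    fi
}
```

With 4 bricks of the volume on a node, 256 snapshots give 4 * 257 = 1028 brick processes, which is more than the 1024 privileged ports.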

- For each volume brick, create a dedicated thin pool that contains the brick and its (thin) brick snapshots. With the current thin provisioning (thin-p) design, avoid placing the bricks of different Red Hat Gluster Storage volumes in the same thin pool, because this reduces the performance of snapshot operations, such as snapshot delete, on other, unrelated volumes.

- The recommended thin pool chunk size is 256 KB. Exceptions are possible when detailed information about the customer's workload is available.

- The recommended pool metadata size is 0.1% of the thin pool size for a chunk size of 256 KB or larger. In special cases where a chunk size smaller than 256 KB is recommended, use a pool metadata size of 0.5% of the thin pool size.

- Create a physical volume (PV) by using the pvcreate command:

pvcreate /dev/sda1

Use the correct dataalignment option based on your device. For more information, see Section 20.2, “Brick Configuration”.

- Create a volume group (VG) from the PV using the following command:

vgcreate dummyvg /dev/sda1

- Create a thin pool using the following command:

lvcreate --size 1T --thin dummyvg/dummypool --chunksize 256k --poolmetadatasize 16G --zero n

A thin pool of size 1 TB is created, using a chunk size of 256 KB. A maximum pool metadata size of 16 GB is used.

- Create a thinly provisioned volume from the previously created pool using the following command:

lvcreate --virtualsize 1G --thin dummyvg/dummypool --name dummylv

- Create an XFS file system on the thin LV, using the recommended options. For example:

mkfs.xfs -f -i size=512 -n size=8192 /dev/dummyvg/dummylv

- Mount this logical volume and use the mount path as the brick:

mount /dev/dummyvg/dummylv /mnt/brick1

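The brick-preparation steps can be collected into a single bash script. This is a minimal sketch under stated assumptions: the defaults are this section's example values (/dev/sda1, dummyvg, dummypool, dummylv, /mnt/brick1), the chunk size is the recommended 256 KB, and the DRY_RUN switch, the extra `mkdir -p` step, the DATAALIGN variable, and the `metadata_size_mib` helper are hypothetical additions. The helper applies the 0.1% and 0.5% metadata recommendations from the prerequisites, while META keeps the example's 16G as its default.

```bash
#!/usr/bin/env bash
# Sketch: prepare one thin-provisioned brick following the steps above.
# Device, VG, pool, LV, sizes and mount point default to this section's
# example values; override them through the environment.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
set -u

DEV=${DEV:-/dev/sda1}
VG=${VG:-dummyvg}
POOL=${POOL:-dummypool}
POOL_SIZE=${POOL_SIZE:-1T}
CHUNK=${CHUNK:-256k}
META=${META:-16G}          # the example's value; see metadata_size_mib
LV=${LV:-dummylv}
LV_SIZE=${LV_SIZE:-1G}
MNT=${MNT:-/mnt/brick1}
DATAALIGN=${DATAALIGN:-}   # set per Section 20.2; empty means not passed

# metadata_size_mib POOL_MIB CHUNK_KIB   (hypothetical helper)
# Recommended pool metadata size: 0.1% of the pool for chunk sizes of
# 256 KiB or larger, 0.5% for smaller chunks, rounded up to a whole MiB.
metadata_size_mib() {
    local pool_mib=$1 chunk_kib=$2 per_mille
    if (( chunk_kib >= 256 )); then per_mille=1; else per_mille=5; fi
    echo $(( (pool_mib * per_mille + 999) / 1000 ))
}

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [[ ${DRY_RUN:-1} == 1 ]]; then
        echo "+ $*"
    else
        "$@"
    fi
}

create_thin_brick() {
    # PV (with --dataalignment only when DATAALIGN is set), then the VG.
    run pvcreate ${DATAALIGN:+--dataalignment "$DATAALIGN"} "$DEV"
    run vgcreate "$VG" "$DEV"
    # Dedicated thin pool for this brick and its snapshots.
    run lvcreate --size "$POOL_SIZE" --thin "$VG/$POOL" \
        --chunksize "$CHUNK" --poolmetadatasize "$META" --zero n
    # Thin LV that holds the brick.
    run lvcreate --virtualsize "$LV_SIZE" --thin "$VG/$POOL" --name "$LV"
    # XFS with the options used in this section, then mount it.
    run mkfs.xfs -f -i size=512 -n size=8192 "/dev/$VG/$LV"
    run mkdir -p "$MNT"
    run mount "/dev/$VG/$LV" "$MNT"
}

# Run only when executed directly, not when sourced.
if [[ ${BASH_SOURCE[0]:-} == "$0" ]]; then
    create_thin_brick
fi
```

Review the printed commands with the default DRY_RUN=1, then rerun as root with DRY_RUN=0 and your own device and names. For a 1 TiB pool (1048576 MiB) with 256 KB chunks, `metadata_size_mib 1048576 256` prints 1049.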