6.3. SMB

Warning

- Samba version 3 is not supported. Ensure that you are using Samba 4.x. For more information about the installation and upgrade steps, refer to the Red Hat Gluster Storage 3.2 Installation Guide.

- CTDB version 4.x is required for Red Hat Gluster Storage 3.2. It is provided in the Red Hat Gluster Storage Samba channel. For more information about the installation and upgrade steps, refer to the Red Hat Gluster Storage 3.2 Installation Guide.

Important

Allow the Samba service through the firewall in each active zone, in both the runtime and the permanent configuration:

# firewall-cmd --get-active-zones

# firewall-cmd --zone=zone_name --add-service=samba

# firewall-cmd --zone=zone_name --add-service=samba --permanent

6.3.1. Setting up CTDB for Samba

Important

Open TCP port 4379 in each active zone, in both the runtime and the permanent configuration:

# firewall-cmd --get-active-zones

# firewall-cmd --zone=zone_name --add-port=4379/tcp

# firewall-cmd --zone=zone_name --add-port=4379/tcp --permanent

Note

Follow these steps before configuring CTDB on a Red Hat Gluster Storage Server:

- If you already have an older version of CTDB (version <= ctdb1.x), remove it by running the following command:

# yum remove ctdb

After removing the older version, proceed with installing the latest CTDB.

Note

Ensure that the system is subscribed to the samba channel to get the latest CTDB packages.

- Install the latest version of CTDB on all the nodes that are used as Samba servers by running the following command:

# yum install ctdb

- In a CTDB-based high availability environment of Samba, locks are not migrated on failover.

- Ensure that TCP port 4379 is open between the Red Hat Gluster Storage servers. This is the internode communication port of CTDB.

To configure CTDB on a Red Hat Gluster Storage server, perform the following steps:

- Create a replicate volume. This volume hosts only a zero-byte lock file, so choose minimal-sized bricks. To create a replicate volume, run the following command:

# gluster volume create volname replica n ipaddress:/brick path.......N times

where N is the number of nodes that are used as Samba servers. Each node must host one brick.

For example:

# gluster volume create ctdb replica 4 10.16.157.75:/rhgs/brick1/ctdb/b1 10.16.157.78:/rhgs/brick1/ctdb/b2 10.16.157.81:/rhgs/brick1/ctdb/b3 10.16.157.84:/rhgs/brick1/ctdb/b4

- In the following files, replace "all" in the statement META="all" with the newly created volume name:

/var/lib/glusterd/hooks/1/start/post/S29CTDBsetup.sh
/var/lib/glusterd/hooks/1/stop/pre/S29CTDB-teardown.sh

For example:

META="all" to META="ctdb"

- In the /etc/samba/smb.conf file, add the following line in the global section on all the nodes:

clustering=yes

- Start the volume.

The S29CTDBsetup.sh script runs on all Red Hat Gluster Storage servers, adds an entry in /etc/fstab for the mount, and mounts the volume at /gluster/lock on all the nodes with a Samba server. It also enables automatic start of the CTDB service on reboot.

Note

When you stop the special CTDB volume, the S29CTDB-teardown.sh script runs on all Red Hat Gluster Storage servers, removes the entry in /etc/fstab for the mount, and unmounts the volume at /gluster/lock.

- Verify that the file /etc/sysconfig/ctdb exists on all the nodes that are used as Samba servers. This file contains the CTDB configuration recommended for Red Hat Gluster Storage.

- Create the /etc/ctdb/nodes file on all the nodes that are used as Samba servers and add the IP addresses of these nodes to the file.

10.16.157.0
10.16.157.3
10.16.157.6
10.16.157.9

The IPs listed here are the private IPs of the Samba servers.

- On all the nodes that are used as Samba servers and that require IP failover, create the /etc/ctdb/public_addresses file and add the virtual IPs that CTDB should create to this file. Add these IP addresses in the following format:

<Virtual IP>/<routing prefix> <node interface>

For example:

192.168.1.20/24 eth0
192.168.1.21/24 eth0

- Start the CTDB service on all the nodes by running the following command:

# service ctdb start
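The volume-creation step above uses one brick per Samba node and a replica count equal to the number of nodes. As a rough sketch, the shell function below assembles that command line from a list of node addresses and only prints it. The function name ctdb_volume_create_cmd and the brick path layout /rhgs/brick1/VOLNAME/bN (copied from the example) are illustrative assumptions, not part of the product.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the "gluster volume create" command
# for the CTDB lock volume, one brick per Samba node.
# Usage: ctdb_volume_create_cmd VOLNAME NODE_IP...
ctdb_volume_create_cmd() {
    volname=$1
    shift
    # After the shift, $# is the number of Samba nodes, which is the replica count.
    cmd="gluster volume create $volname replica $#"
    i=1
    for node in "$@"; do
        # Brick path layout follows the example above; adjust it to your bricks.
        cmd="$cmd $node:/rhgs/brick1/$volname/b$i"
        i=$((i + 1))
    done
    printf '%s\n' "$cmd"
}

# Print the command for a four-node cluster so that it can be reviewed first.
ctdb_volume_create_cmd ctdb 10.16.157.75 10.16.157.78 10.16.157.81 10.16.157.84
```

Printing the command instead of running it keeps the sketch safe to try on any machine; run the printed line manually once the node list and brick paths look right.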

6.3.2. Sharing Volumes over SMB

- Run the following command to allow Samba to communicate with brick processes even with untrusted ports:

# gluster volume set VOLNAME server.allow-insecure on

- Run the following command to enable SMB-specific caching:

# gluster volume set <volname> performance.cache-samba-metadata on
volume set success

Note

Enable generic metadata caching to improve the performance of SMB access to Red Hat Gluster Storage volumes. For more information, see Section 20.7, “Directory Operations”.

- Edit the /etc/glusterfs/glusterd.vol file on each Red Hat Gluster Storage node, and add the following setting:

option rpc-auth-allow-insecure on

Note

This allows Samba to communicate with glusterd even with untrusted ports.

- Restart the glusterd service on each Red Hat Gluster Storage node.

- Run the following command to ensure proper lock and I/O coherency:

# gluster volume set VOLNAME storage.batch-fsync-delay-usec 0

- To verify that the volume can be accessed from the SMB/CIFS share, run the following command:

# smbclient -L <hostname> -U%

- To verify that the SMB/CIFS share can be accessed by the user, run the following command:

# smbclient //<hostname>/gluster-<volname> -U <username>%<password>

When a volume is started using the gluster volume start VOLNAME command, the volume is automatically exported through Samba on all Red Hat Gluster Storage servers running Samba.

- Open the /etc/samba/smb.conf file in a text editor and add the lines for a simple configuration. The configuration options are described in the following table:

Table 6.7. Configuration Options

| Configuration Options | Required? | Default Value | Description |
|---|---|---|---|
| Path | Yes | n/a | The path relative to the root of the gluster volume that is being shared. Hence / represents the root of the gluster volume. Exporting a subdirectory of a volume is supported; /subdir in path exports only that subdirectory of the volume. |
| glusterfs:volume | Yes | n/a | The volume name that is shared. |
| glusterfs:logfile | No | NULL | Path to the log file that will be used by the gluster modules that are loaded by the vfs plugin. Standard Samba variable substitutions as mentioned in smb.conf are supported. |
| glusterfs:loglevel | No | 7 | This option is equivalent to the client-log-level option of gluster. 7 is the default value and corresponds to the INFO level. |
| glusterfs:volfile_server | No | localhost | The gluster server to be contacted to fetch the volfile for the volume. The value is a list of white-space-separated elements, where each element is unix+/path/to/socket/file or [tcp+]IP\|hostname\|\[IPv6\][:port] |

- Run service smb [re]start to start or restart the smb service.

- Run smbpasswd to set the SMB password.

# smbpasswd -a username

Specify the SMB password. This password is used during the SMB mount.
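To make the options in Table 6.7 more concrete, the sketch below prints a minimal share section for one volume. It is only an illustration under stated assumptions: the helper name print_gluster_share and the log file path are hypothetical, the share name follows the gluster-VOLNAME form used by the smbclient commands above, and the remaining values are the defaults listed in the table.

```shell
#!/bin/sh
# Hypothetical helper: print a minimal smb.conf share section for a volume.
# Option names come from Table 6.7; the log file path is an assumption.
# Usage: print_gluster_share VOLNAME
print_gluster_share() {
    volname=$1
    cat <<EOF
[gluster-$volname]
vfs objects = glusterfs
path = /
glusterfs:volume = $volname
glusterfs:logfile = /var/log/samba/glusterfs-$volname.log
glusterfs:loglevel = 7
EOF
}

# Review the output before adding it to /etc/samba/smb.conf.
print_gluster_share repvol
```

Here path = / exports the root of the volume; a value such as /subdir would export only that subdirectory, as the table describes.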

6.3.3. Mounting Volumes using SMB

- Add the user on all the Samba servers based on your configuration:

# adduser username

- Add the user to the list of Samba users on all Samba servers and assign a password by running the following command:

# smbpasswd -a username

- Perform a FUSE mount of the gluster volume on any one of the Samba servers:

# mount -t glusterfs -o acl ip-address:/volname /mountpoint

For example:

# mount -t glusterfs -o acl rhs-a:/repvol /mnt

- Provide the required permissions to the user by running the appropriate setfacl command:

# setfacl -m user:username:rwx mountpoint

For example:

# setfacl -m user:cifsuser:rwx /mnt

6.3.3.1. Manually Mounting Volumes Using SMB on Red Hat Enterprise Linux and Windows

- Mounting a Volume Manually using SMB on Red Hat Enterprise Linux

- Mounting a Volume Manually using SMB through Microsoft Windows Explorer

- Mounting a Volume Manually using SMB on Microsoft Windows Command-line.

Mounting a Volume Manually using SMB on Red Hat Enterprise Linux

- Install the cifs-utils package on the client.

# yum install cifs-utils

- Run mount -t cifs to mount the exported SMB share, using the following syntax as guidance:

# mount -t cifs -o user=<username>,pass=<password> //<hostname>/gluster-<volname> /<mountpoint>

For example:

# mount -t cifs -o user=cifsuser,pass=redhat //rhs-a/gluster-repvol /cifs

- Run # smbstatus -S on the server to display the status of the volume:

Service          pid     machine              Connected at
-------------------------------------------------------------------
gluster-VOLNAME  11967   __ffff_192.168.1.60  Mon Aug 6 02:23:25 2012

Mounting a Volume Manually using SMB through Microsoft Windows Explorer

- In Windows Explorer, open the Map Network Drive screen.

- Choose the drive letter using the drop-down list.

- In the Folder text box, specify the path of the server and the shared resource in the following format: \\SERVER_NAME\VOLNAME.

- Click to complete the process, and display the network drive in Windows Explorer.

- Navigate to the network drive to verify it has mounted correctly.

Mounting a Volume Manually using SMB on Microsoft Windows Command-line.

- Open a command prompt by running cmd.

- Enter net use z: \\SERVER_NAME\VOLNAME, where z: is the drive letter to assign to the shared volume.

For example, net use y: \\server1\test-volume

- Navigate to the network drive to verify it has mounted correctly.

6.3.3.2. Automatically Mounting Volumes Using SMB on Red Hat Enterprise Linux and Windows

- Mounting a Volume Automatically using SMB on Red Hat Enterprise Linux

- Mounting a Volume Automatically on Server Start using SMB through Microsoft Windows Explorer

Mounting a Volume Automatically using SMB on Red Hat Enterprise Linux

- Open the /etc/fstab file in a text editor.

- Append the following configuration to the fstab file. In the credentials option, you must specify the path of the file that contains the user name and/or password. See the mount.cifs man page for more information.

\\HOSTNAME|IPADDRESS\SHARE_NAME MOUNTDIR

Using the example server names, the entry contains the following replaced values.

\\server1\test-volume /mnt/glusterfs cifs credentials=/etc/samba/passwd,_netdev 0 0

- Run # smbstatus -S on the server to display the status of the volume:

Service          pid     machine              Connected at
-------------------------------------------------------------------
gluster-VOLNAME  11967   __ffff_192.168.1.60  Mon Aug 6 02:23:25 2012
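The fstab entry above has six fields: device, mount point, file system type, options, dump flag, and fsck pass. As a small sketch, the function below builds such a line from its parts. The helper name cifs_fstab_entry is hypothetical, and the forward-slash //SERVER/SHARE spelling is an assumption chosen because it is the form the manual mount command earlier in this section uses. The credentials file it points to holds username= and password= lines, as described in the mount.cifs man page.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/fstab line for a CIFS mount.
# Usage: cifs_fstab_entry SERVER SHARE MOUNTDIR CREDENTIALS_FILE
cifs_fstab_entry() {
    # Fields: device, mount point, type, options, dump, fsck pass.
    # _netdev delays the mount until the network is available.
    printf '//%s/%s %s cifs credentials=%s,_netdev 0 0\n' "$1" "$2" "$3" "$4"
}

# Print the entry for the example server and share; append it to /etc/fstab
# only after reviewing it.
cifs_fstab_entry server1 test-volume /mnt/glusterfs /etc/samba/passwd
```

Keeping the password in a credentials file that only root can read avoids exposing it in /etc/fstab, which is world-readable on most systems.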

Mounting a Volume Automatically on Server Start using SMB through Microsoft Windows Explorer

- In Windows Explorer, open the Map Network Drive screen.

- Choose the drive letter using the drop-down list.

- In the Folder text box, specify the path of the server and the shared resource in the following format: \\SERVER_NAME\VOLNAME.

- Click the Reconnect at logon check box.

- Click to complete the process, and display the network drive in Windows Explorer.

- If the Windows Security screen pops up, enter the username and password and click OK.

- Navigate to the network drive to verify it has mounted correctly.

6.3.4. Starting and Verifying your Configuration

Verify the Configuration

- Verify that CTDB is running using the following commands:

# ctdb status
# ctdb ip
# ctdb ping -n all

- Mount a Red Hat Gluster Storage volume using any one of the VIPs.

- Run # ctdb ip to locate the physical server serving the VIP.

- Shut down the CTDB VIP server to verify successful configuration.

When the Red Hat Gluster Storage server serving the VIP is shut down, there will be a pause for a few seconds, then I/O will resume.
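Locating the server that holds a VIP can be scripted when failover is tested repeatedly. The sketch below reads ctdb ip style output on standard input and prints the node number for one VIP. It assumes that each data line has the form "IP node-number" and ignores every other line; both that layout and the helper name vip_owner are assumptions to check against the output of your CTDB version.

```shell
#!/bin/sh
# Hypothetical helper: read "ctdb ip"-style output on stdin and print the
# node number that currently hosts the given virtual IP.
# Assumes data lines of the form "<IP> <node-number>"; other lines are ignored.
# Exits non-zero when the VIP is not listed.
vip_owner() {
    awk -v vip="$1" '$1 == vip { print $2; found = 1 } END { exit !found }'
}

# Sample text for illustration only; on a cluster use: ctdb ip | vip_owner VIP
sample='Public IPs on node 0
192.168.1.20 0
192.168.1.21 1'
printf '%s\n' "$sample" | vip_owner 192.168.1.21
```

Running the helper before and after shutting down the serving node shows whether the VIP moved to another node.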

6.3.6. Accessing Snapshots in Windows

6.3.6.1. Configuring Shadow Copy

Note

Enable the shadow_copy2 module in the smb.conf file by adding it to the vfs objects option:

vfs objects = shadow_copy2 glusterfs

| Configuration Options | Required? | Default Value | Description |
|---|---|---|---|
| shadow:snapdir | Yes | n/a | Path to the directory where snapshots are kept. The snapdir name should be .snaps. |
| shadow:basedir | Yes | n/a | Path to the base directory that snapshots are from. The basedir value should be /. |
| shadow:sort | Optional | unsorted | The supported values are asc/desc. This parameter specifies that the shadow copy directories should be sorted before they are sent to the client. This can be beneficial because UNIX file systems are usually not listed in alphabetical order. If enabled, it is specified in descending order. |
| shadow:localtime | Optional | UTC | This is an optional parameter that indicates whether the snapshot names are in UTC/GMT or in local time. |
| shadow:format | Yes | n/a | This parameter specifies the format specification for the naming of snapshots. The format must be compatible with the conversion specifications recognized by str[fp]time. The default value is _GMT-%Y.%m.%d-%H.%M.%S. |
| shadow:fixinodes | Optional | No | If you enable shadow:fixinodes, this module modifies the apparent inode number of files in the snapshot directories using a hash of the file's path. This is needed for snapshot systems where the snapshots have the same device:inode number as the original files (such as happens with GPFS snapshots). If you don't set this option, the 'restore' button in the shadow copy UI fails with a sharing violation. |
| shadow:snapprefix | Optional | n/a | Regular expression to match the prefix of the snapshot name. Red Hat Gluster Storage only supports Basic Regular Expression (BRE). |
| shadow:delimiter | Optional | _GMT | The delimiter is used to separate shadow:snapprefix and shadow:format. |

Example snapshot names:

Snap_GMT-2016.06.06-06.06.06
Sl123p_GMT-2016.07.07-07.07.07
xyz_GMT-2016.08.08-08.08.08

- Run service smb [re]start to start or restart the smb service.

- Enable User Serviceable Snapshot (USS) for Samba. For more information, see Section 8.13, “User Serviceable Snapshots”.
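The interaction of shadow:snapprefix, shadow:delimiter, and shadow:format can be approximated in a few lines of shell. The sketch below splits a snapshot name at the default delimiter _GMT, checks the timestamp part against the default format, and tests the prefix against a BRE with grep, which uses basic regular expressions by default. The helper name snapshot_visible and the sample prefix pattern are illustrative assumptions; this approximates the matching rule and is not the module's actual code.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a snapshot name has the default timestamp
# format after the "_GMT" delimiter and its prefix matches the given BRE.
# Usage: snapshot_visible NAME PREFIX_BRE
snapshot_visible() {
    name=$1
    prefix_re=$2
    # The timestamp part must look like _GMT-YYYY.MM.DD-HH.MM.SS.
    stamp_re='_GMT-[0-9]\{4\}\.[0-9]\{2\}\.[0-9]\{2\}-[0-9]\{2\}\.[0-9]\{2\}\.[0-9]\{2\}$'
    printf '%s\n' "$name" | grep -q -- "$stamp_re" || return 1
    # Everything before the first "_GMT" is the prefix to test.
    prefix=${name%%_GMT*}
    printf '%s\n' "$prefix" | grep -q -- "$prefix_re"
}

# Illustrative prefix pattern (an assumption): starts with "S" and ends with "p".
for snap in Snap_GMT-2016.06.06-06.06.06 Sl123p_GMT-2016.07.07-07.07.07 xyz_GMT-2016.08.08-08.08.08; do
    if snapshot_visible "$snap" '^S[A-Za-z0-9]*p$'; then
        echo "visible: $snap"
    else
        echo "hidden:  $snap"
    fi
done
```

With this sample pattern the first two names are reported as visible and the third as hidden, because only the prefixes Snap and Sl123p start with "S" and end with "p".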

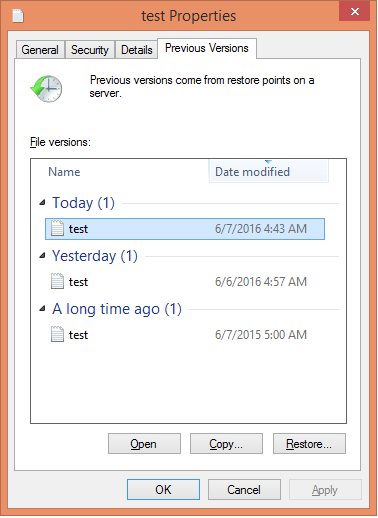

6.3.6.2. Accessing Snapshot

- Right Click on the file or directory for which the previous version is required.

- Click on .

- In the dialog box, select the Date/Time of the previous version of the file, and select either Open, Restore, or Copy, where:

Open: Lets you open the required version of the file in read-only mode.

Restore: Restores the file back to the selected version.

Copy: Lets you copy the file to a different location.

Figure 6.1. Accessing Snapshot

6.3.7. Tuning Performance

- Listing of directories (recursive)

- Creating files

- Deleting files

- Renaming files

6.3.7.1. Enabling Metadata Caching

- To enable cache invalidation and increase the timeout to 10 minutes, run the following commands:

# gluster volume set <volname> features.cache-invalidation on
volume set success

# gluster volume set <volname> features.cache-invalidation-timeout 600
volume set success

To enable metadata caching on the client and to maintain cache consistency, run the following commands:

# gluster volume set <volname> performance.stat-prefetch on
volume set success

# gluster volume set <volname> performance.cache-invalidation on
volume set success

# gluster volume set <volname> performance.cache-samba-metadata on
volume set success

- To increase the client-side metadata cache timeout to 10 minutes, run the following command:

# gluster volume set <volname> performance.md-cache-timeout 600
volume set success
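Because the same six settings are applied to every volume that is shared over SMB, it can help to generate them in one place. The sketch below prints the commands for a given volume without running them. The helper name md_cache_commands and its optional timeout argument are illustrative assumptions; the option names and the 600-second value are the ones used in the steps above.

```shell
#!/bin/sh
# Hypothetical helper: print the metadata-caching commands for one volume.
# Usage: md_cache_commands VOLNAME [TIMEOUT_SECONDS]
md_cache_commands() {
    volname=$1
    timeout=${2:-600}   # seconds; 600 = 10 minutes, as in the steps above
    for setting in \
        "features.cache-invalidation on" \
        "features.cache-invalidation-timeout $timeout" \
        "performance.stat-prefetch on" \
        "performance.cache-invalidation on" \
        "performance.cache-samba-metadata on" \
        "performance.md-cache-timeout $timeout"
    do
        printf 'gluster volume set %s %s\n' "$volname" "$setting"
    done
}

# Print the commands for review; run them manually on a storage node.
md_cache_commands repvol
```

Using one timeout value for both features.cache-invalidation-timeout and performance.md-cache-timeout mirrors the steps above, where both are set to 600.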