Chapter 11. Creating virtualized control planes

A virtualized control plane is a control plane located on virtual machines (VMs) rather than on bare metal. A virtualized control plane reduces the number of bare-metal machines required for the control plane.

This chapter explains how to virtualize your Red Hat OpenStack Platform (RHOSP) control plane for the overcloud using RHOSP and Red Hat Virtualization.

11.1. Virtualized control plane architecture

You use the Red Hat OpenStack Platform (RHOSP) director to provision an overcloud using Controller nodes that are deployed in a Red Hat Virtualization cluster. You can then deploy these virtualized controllers as the virtualized control plane nodes.

Virtualized Controller nodes are supported only on Red Hat Virtualization.

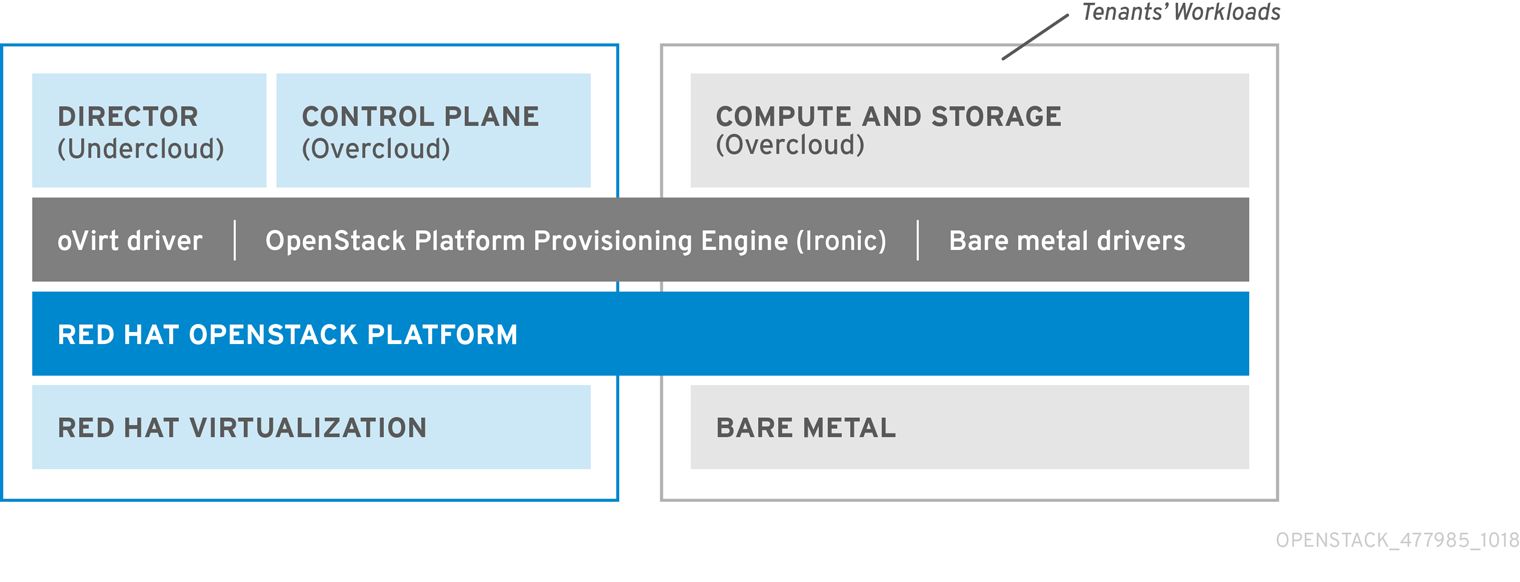

The following architecture diagram illustrates how to deploy a virtualized control plane. You distribute the overcloud with the Controller nodes running on VMs on Red Hat Virtualization. You run the Compute and storage nodes on bare metal.

You run the OpenStack virtualized undercloud on Red Hat Virtualization.

Virtualized control plane architecture

The OpenStack Bare Metal Provisioning (ironic) service includes a driver for Red Hat Virtualization VMs, staging-ovirt. You can use this driver to manage virtual nodes within a Red Hat Virtualization environment. You can also use it to deploy overcloud controllers as virtual machines within a Red Hat Virtualization environment.

11.2. Benefits and limitations of virtualizing your RHOSP overcloud control plane

Although there are a number of benefits to virtualizing your RHOSP overcloud control plane, this is not an option in every configuration.

Benefits

Virtualizing the overcloud control plane has a number of benefits that prevent downtime and improve performance.

- You can allocate resources to the virtualized controllers dynamically, using hot add and hot remove to scale CPU and memory as required. This prevents downtime and facilitates increased capacity as the platform grows.

- You can deploy additional infrastructure VMs on the same Red Hat Virtualization cluster. This minimizes the server footprint in the data center and maximizes the efficiency of the physical nodes.

- You can use composable roles to define more complex RHOSP control planes. This allows you to allocate resources to specific components of the control plane.

- You can maintain systems without service interruption by using the VM live migration feature.

- You can integrate third-party or custom tools supported by Red Hat Virtualization.

Limitations

Virtualized control planes limit the types of configurations that you can use.

- Virtualized Ceph Storage nodes and Compute nodes are not supported.

- Block Storage (cinder) image-to-volume is not supported for back ends that use Fibre Channel. Red Hat Virtualization does not support N_Port ID Virtualization (NPIV). Therefore, Block Storage (cinder) drivers that need to map LUNs from a storage back end to the controllers, where cinder-volume runs by default, do not work. You need to create a dedicated role for cinder-volume instead of including it on the virtualized controllers. For more information, see Composable Services and Custom Roles.

11.3. Provisioning virtualized controllers using the Red Hat Virtualization driver

This section details how to provision a virtualized RHOSP control plane for the overcloud using RHOSP and Red Hat Virtualization.

Prerequisites

- You must have a 64-bit x86 processor with support for the Intel 64 or AMD64 CPU extensions.

You must have the following software already installed and configured:

- Red Hat Virtualization. For more information, see Red Hat Virtualization Documentation Suite.

- Red Hat OpenStack Platform (RHOSP). For more information, see Director Installation and Usage.

- You must have the virtualized Controller nodes prepared in advance. These requirements are the same as for bare-metal Controller nodes. For more information, see Controller Node Requirements.

- You must prepare in advance the bare-metal nodes that you use as overcloud Compute nodes and storage nodes. For hardware specifications, see Compute Node Requirements and Ceph Storage Node Requirements. To deploy overcloud Compute nodes on POWER (ppc64le) hardware, see Red Hat OpenStack Platform for POWER.

- You must have the logical networks created, and your cluster or host networks ready to use network isolation with multiple networks. For more information, see Logical Networks.

- You must have the internal BIOS clock of each node set to UTC. This prevents issues with future-dated file timestamps when hwclock synchronizes the BIOS clock before applying the timezone offset.
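On a machine that already runs Linux, for example the undercloud or a bare-metal node booted for preparation, you can spot-check the UTC requirement. The following Python sketch assumes the util-linux /etc/adjtime file, whose third line records whether the hardware clock is interpreted as UTC or LOCAL. It only shows how the operating system interprets the clock and cannot read the BIOS setting itself; the function names are hypothetical.

```python
from pathlib import Path
from typing import Optional


def rtc_mode(adjtime_text: str) -> Optional[str]:
    """Return "UTC" or "LOCAL" from /etc/adjtime content, or None if absent.

    Assumption: util-linux format, where line 3 holds the clock mode.
    """
    lines = adjtime_text.splitlines()
    if len(lines) < 3:
        return None  # mode line missing; hwclock would use its default
    mode = lines[2].strip().upper()
    return mode if mode in ("UTC", "LOCAL") else None


def bios_clock_is_utc(path: str = "/etc/adjtime") -> bool:
    """True only if the adjtime file explicitly records UTC.

    A missing file is reported as "not confirmed" (False) rather than guessed.
    """
    p = Path(path)
    if not p.exists():
        return False
    return rtc_mode(p.read_text()) == "UTC"


if __name__ == "__main__":
    print("Hardware clock recorded as UTC:", bios_clock_is_utc())
```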

To avoid performance bottlenecks, use composable roles and keep the data plane services on the bare-metal Controller nodes.

Procedure

1. Enable the staging-ovirt driver in the director undercloud by adding the driver to enabled_hardware_types in the undercloud.conf configuration file:

   ```
   enabled_hardware_types = ipmi,redfish,ilo,idrac,staging-ovirt
   ```

2. Verify that the undercloud contains the staging-ovirt driver:

   ```
   (undercloud) [stack@undercloud ~]$ openstack baremetal driver list
   ```

   If the undercloud is set up correctly, staging-ovirt appears in the list of supported drivers in the command output.

3. Install the python-ovirt-engine-sdk4.x86_64 package:

   ```
   $ sudo yum install python-ovirt-engine-sdk4
   ```

4. Update the overcloud node definition template, for instance, nodes.json, to register the VMs hosted on Red Hat Virtualization with director. For more information, see Registering Nodes for the Overcloud. Use the following key:value pairs to define aspects of the VMs to deploy with your overcloud:

   Table 11.1. Configuring the VMs for the overcloud

   | Key | Set to this value |
   |---|---|
   | pm_type | OpenStack Bare Metal Provisioning (ironic) service driver for oVirt/RHV VMs, staging-ovirt. |
   | pm_user | Red Hat Virtualization Manager username. |
   | pm_password | Red Hat Virtualization Manager password. |
   | pm_addr | Hostname or IP of the Red Hat Virtualization Manager server. |
   | pm_vm_name | Name of the virtual machine in Red Hat Virtualization Manager where the controller is created. |

5. Configure one controller on each Red Hat Virtualization Host:

   - Configure an affinity group in Red Hat Virtualization with "soft negative affinity" so that the controller VMs run on separate hosts whenever possible, which provides high availability. For more information, see Affinity Groups.

   - Open the Red Hat Virtualization Manager interface and use it to map each VLAN to a separate logical vNIC in the controller VMs. For more information, see Logical Networks.

   - Set no_filter in the vNIC of the director and controller VMs, and restart the VMs, to disable the MAC spoofing filter on the networks attached to the controller VMs. For more information, see Virtual Network Interface Cards.

6. Deploy the overcloud to include the new virtualized controller nodes in your environment:

   ```
   (undercloud) [stack@undercloud ~]$ openstack overcloud deploy --templates
   ```
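Adding staging-ovirt to enabled_hardware_types in undercloud.conf can also be scripted. This Python sketch uses the standard configparser module and assumes the option lives in the [DEFAULT] section. The fallback list used when the option is missing only mirrors the example value in this chapter and is an assumption, not a product default. configparser does not preserve comments, so keep a backup of the original file.

```python
import configparser
import io

# Hypothetical fallback, copied from this chapter's example value.
FALLBACK_TYPES = ["ipmi", "redfish", "ilo", "idrac"]
OPTION = "enabled_hardware_types"


def enable_staging_ovirt(conf_text: str) -> str:
    """Return undercloud.conf text with staging-ovirt in enabled_hardware_types."""
    # interpolation=None leaves values containing '%' (e.g. passwords) untouched.
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(conf_text)

    if parser.has_option("DEFAULT", OPTION):
        raw = parser.get("DEFAULT", OPTION)
        current = [t.strip() for t in raw.split(",") if t.strip()]
    else:
        current = list(FALLBACK_TYPES)

    # Idempotent: only append the driver if it is not already enabled.
    if "staging-ovirt" not in current:
        current.append("staging-ovirt")
    parser.set("DEFAULT", OPTION, ",".join(current))

    out = io.StringIO()
    parser.write(out)  # note: comments from the input are not preserved
    return out.getvalue()
```

For example, you could read /home/stack/undercloud.conf, pass its text through enable_staging_ovirt, and write the result back. Changes to undercloud.conf take effect only after you run openstack undercloud install again.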
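To confirm the staging-ovirt driver from a script rather than by reading the table, you can request JSON output from openstack baremetal driver list. This sketch assumes that -f json returns one object per driver keyed by the table's column headers, with the driver name under "Supported driver(s)"; check that header against your client version. The helper names are hypothetical.

```python
import json
import subprocess
from typing import Set

DRIVER_COLUMN = "Supported driver(s)"  # assumed column header in the CLI output


def driver_names(list_json: str, column: str = DRIVER_COLUMN) -> Set[str]:
    """Collect driver names from `openstack baremetal driver list -f json` output."""
    rows = json.loads(list_json)
    return {row[column] for row in rows if column in row}


def has_driver(list_json: str, driver: str = "staging-ovirt") -> bool:
    return driver in driver_names(list_json)


def fetch_driver_list() -> str:
    """Run the CLI; assumes undercloud credentials (e.g. stackrc) are sourced."""
    result = subprocess.run(
        ["openstack", "baremetal", "driver", "list", "-f", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


if __name__ == "__main__":
    print("staging-ovirt enabled:", has_driver(fetch_driver_list()))
```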
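The node definition template can be generated instead of written by hand. The following Python sketch builds one entry per controller VM with the pm_* keys from Table 11.1. The VM names, MAC addresses, Manager hostname, and credentials are hypothetical placeholders, and the name and mac keys are included on the assumption that your registration file also identifies each node and its provisioning NIC.

```python
import json
from typing import Dict, List


def controller_node(index: int, mac: str, manager: str, user: str, password: str) -> Dict:
    """One nodes.json entry for a controller VM hosted on Red Hat Virtualization."""
    vm_name = f"controller-{index}"  # hypothetical VM name in RHV Manager
    return {
        "name": vm_name,             # assumed node name key
        "pm_type": "staging-ovirt",  # ironic driver for oVirt/RHV VMs
        "pm_addr": manager,          # RHV Manager hostname or IP
        "pm_user": user,             # RHV Manager username
        "pm_password": password,     # RHV Manager password
        "pm_vm_name": vm_name,       # VM name as shown in RHV Manager
        "mac": [mac],                # assumed: MAC of the provisioning vNIC
    }


def build_nodes(macs: List[str], manager: str, user: str, password: str) -> Dict:
    return {
        "nodes": [
            controller_node(i, mac, manager, user, password)
            for i, mac in enumerate(macs)
        ]
    }


if __name__ == "__main__":
    doc = build_nodes(
        macs=["00:1a:4a:00:00:01", "00:1a:4a:00:00:02", "00:1a:4a:00:00:03"],  # placeholders
        manager="rhvm.example.com",  # placeholder
        user="admin@internal",       # placeholder
        password="changeme",         # placeholder
    )
    with open("nodes.json", "w") as fh:
        json.dump(doc, fh, indent=2)
```

You could then register the generated file as described in Registering Nodes for the Overcloud, for example with openstack overcloud node import nodes.json. Keep real Manager credentials out of version control.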
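Soft negative affinity lets the Red Hat Virtualization scheduler place two controller VMs on the same host when it has no alternative, for example while a host is in maintenance. A simple way to detect that state is to group the controller VMs by host. This Python sketch assumes you have already collected a VM-to-host mapping from the Manager; how you obtain it is not shown, and the VM and host names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def colocated_controllers(placement: Dict[str, str]) -> Dict[str, List[str]]:
    """Return hosts running more than one controller VM.

    placement maps VM name -> Red Hat Virtualization Host name.
    An empty result means every controller is on its own host.
    """
    by_host: Dict[str, List[str]] = defaultdict(list)
    for vm, host in placement.items():
        by_host[host].append(vm)
    return {host: sorted(vms) for host, vms in by_host.items() if len(vms) > 1}


if __name__ == "__main__":
    # Hypothetical placement: controller-1 has been moved onto rhvh-1.
    placement = {"controller-0": "rhvh-1", "controller-1": "rhvh-1", "controller-2": "rhvh-3"}
    for host, vms in colocated_controllers(placement).items():
        print(f"{host} runs multiple controllers: {', '.join(vms)}")
```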