
Chapter 5. Using Container Storage Interface (CSI)

5.1. Configuring CSI volumes

The Container Storage Interface (CSI) allows OpenShift Dedicated to consume persistent storage from storage back ends that implement the CSI interface.

OpenShift Dedicated 4 supports version 1.6.0 of the CSI specification.

5.1.1. CSI architecture

CSI drivers are typically shipped as container images. These containers are not aware of the OpenShift Dedicated cluster in which they run. To use a CSI-compatible storage back end in OpenShift Dedicated, the cluster administrator must deploy several components that serve as a bridge between OpenShift Dedicated and the storage driver.

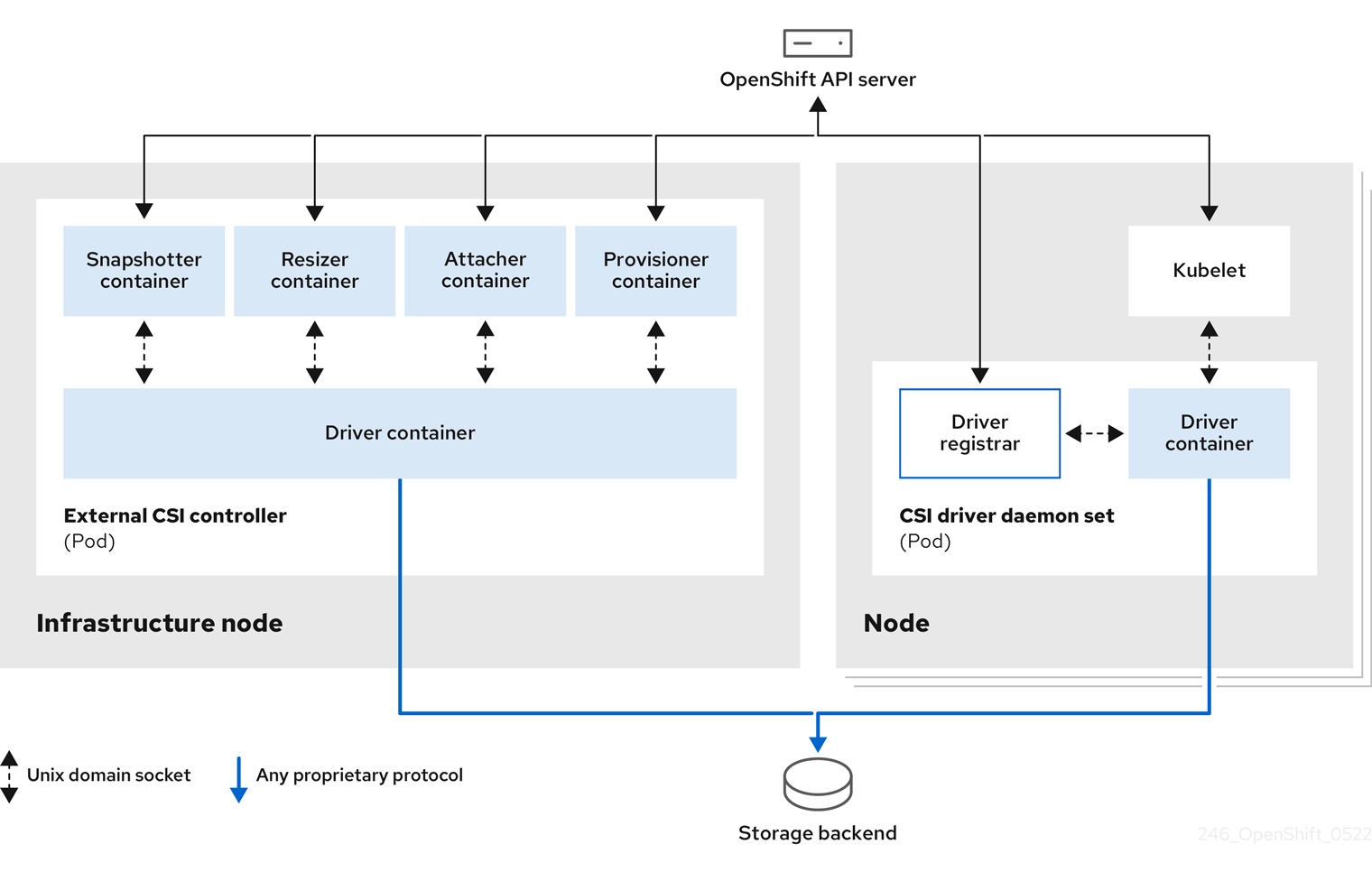

The following diagram provides a high-level overview about the components running in pods in the OpenShift Dedicated cluster.

It is possible to run multiple CSI drivers for different storage back ends. Each driver needs its own external controllers deployment and daemon set with the driver and CSI registrar.

5.1.1.1. External CSI controllers

The external CSI controllers deployment deploys one or more pods with five containers:

- The snapshotter container watches VolumeSnapshot and VolumeSnapshotContent objects and is responsible for the creation and deletion of VolumeSnapshotContent objects.
- The resizer container is a sidecar container that watches for PersistentVolumeClaim updates and triggers ControllerExpandVolume operations against a CSI endpoint if you request more storage on a PersistentVolumeClaim object.
- An external CSI attacher container translates attach and detach calls from OpenShift Dedicated to respective ControllerPublish and ControllerUnpublish calls to the CSI driver.
- An external CSI provisioner container translates provision and delete calls from OpenShift Dedicated to respective CreateVolume and DeleteVolume calls to the CSI driver.
- A CSI driver container.

The CSI attacher and CSI provisioner containers communicate with the CSI driver container using UNIX Domain Sockets, ensuring that no CSI communication leaves the pod. The CSI driver is not accessible from outside of the pod.

The attach, detach, provision, and delete operations typically require the CSI driver to use credentials to the storage back end. Run the CSI controller pods on infrastructure nodes so the credentials are never leaked to user processes, even in the event of a catastrophic security breach on a compute node.

The external attacher must also run for CSI drivers that do not support third-party attach or detach operations. The external attacher will not issue any ControllerPublish or ControllerUnpublish operations to the CSI driver. However, it still must run to implement the necessary OpenShift Dedicated attachment API.

5.1.1.2. CSI driver daemon set

The CSI driver daemon set runs a pod on every node that allows OpenShift Dedicated to mount storage provided by the CSI driver to the node and use it in user workloads (pods) as persistent volumes (PVs). The pod with the CSI driver installed contains the following containers:

- A CSI driver registrar, which registers the CSI driver into the openshift-node service running on the node. The openshift-node process running on the node then directly connects with the CSI driver using the UNIX Domain Socket available on the node.
- A CSI driver.

The CSI driver deployed on the node should have as few credentials to the storage back end as possible. OpenShift Dedicated will only use the node plugin set of CSI calls such as NodePublish/NodeUnpublish and NodeStage/NodeUnstage, if these calls are implemented.

5.1.2. CSI drivers supported by OpenShift Dedicated

OpenShift Dedicated installs certain CSI drivers by default, giving users storage options that are not possible with in-tree volume plugins.

To create CSI-provisioned persistent volumes that mount to these supported storage assets, OpenShift Dedicated installs the necessary CSI driver Operator, the CSI driver, and the required storage class by default. For more details about the default namespace of the Operator and driver, see the documentation for the specific CSI Driver Operator.

The AWS EFS and GCP Filestore CSI drivers are not installed by default, and must be installed manually. For instructions on installing the AWS EFS CSI driver, see Setting up AWS Elastic File Service CSI Driver Operator. For instructions on installing the GCP Filestore CSI driver, see Google Cloud Filestore CSI Driver Operator.

The following table describes the CSI drivers that are supported by OpenShift Dedicated, and which CSI features they support, such as volume snapshots and resize.

If your CSI driver is not listed in the following table, you must follow the installation instructions provided by your CSI storage vendor to use their supported CSI features.

For a list of third-party-certified CSI drivers, see the Red Hat ecosystem portal under Additional resources.

| CSI driver | CSI volume snapshots | CSI volume group snapshots [1] | CSI cloning | CSI resize | Inline ephemeral volumes |
|---|---|---|---|---|---|
| AWS EBS | ✅ | | | ✅ | |
| AWS EFS | | | | | |
| Google Cloud Platform (GCP) persistent disk (PD) | ✅ | | ✅[2] | ✅ | |
| GCP Filestore | ✅ | | | ✅ | |
| LVM Storage | ✅ | | ✅ | ✅ | |

5.1.3. Dynamic provisioning

Dynamic provisioning of persistent storage depends on the capabilities of the CSI driver and underlying storage back end. The provider of the CSI driver should document how to create a storage class in OpenShift Dedicated and the parameters available for configuration.

The created storage class can be configured to enable dynamic provisioning.

Procedure

Create a default storage class that ensures all PVCs that do not require any special storage class are provisioned by the installed CSI driver.

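The storage class definition for this step is not reproduced here. As a minimal sketch, the following hypothetical helper prints a StorageClass that carries the standard storageclass.kubernetes.io/is-default-class annotation. The function name, the storage class name, and the example.csi.vendor.com provisioner are placeholders; any parameters your CSI driver needs must come from its vendor documentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a StorageClass manifest that is marked as the
# cluster default. The name and provisioner arguments are placeholders.
default_storage_class_manifest() {
  local name="$1" provisioner="$2"
  if [ -z "$name" ] || [ -z "$provisioner" ]; then
    echo "usage: default_storage_class_manifest <name> <provisioner>" >&2
    return 1
  fi
  # Driver-specific parameters, if any, come from your CSI vendor's documentation.
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${name}
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: ${provisioner}
EOF
}

# Example usage (not run here):
#   default_storage_class_manifest my-csi-default example.csi.vendor.com | oc create -f -
```

Generating the manifest from a function keeps the annotation spelled correctly. This matters because a PVC that omits storageClassName only lands on this class if the annotation is exact.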

5.1.4. Example using the CSI driver

The following example installs a default MySQL template without any changes to the template.

Prerequisites

- The CSI driver has been deployed.

- A storage class has been created for dynamic provisioning.

Procedure

Create the MySQL template:

oc new-app mysql-persistent

Example output

--> Deploying template "openshift/mysql-persistent" to project default ...

Verify that the persistent volume claim (PVC) was created and bound:

oc get pvc

Example output

NAME    STATUS   VOLUME                                   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
mysql   Bound    kubernetes-dynamic-pv-3271ffcb4e1811e8   1Gi        RWO            gp3-csi        3s

5.2. Managing the default storage class

5.2.1. Overview

Managing the default storage class allows you to accomplish several different objectives:

- Enforcing static provisioning by disabling dynamic provisioning.

- When you have other preferred storage classes, preventing the storage operator from re-creating the initial default storage class.

- Renaming, or otherwise changing, the default storage class.

To accomplish these objectives, you change the setting for the spec.storageClassState field in the ClusterCSIDriver object. The possible settings for this field are:

- Managed: (Default) The Container Storage Interface (CSI) operator actively manages its default storage class. Most manual changes that a cluster administrator makes to the default storage class are removed, and the default storage class is continuously re-created if you attempt to delete it manually.

- Unmanaged: You can modify the default storage class. The CSI operator does not actively manage storage classes, so it does not reconcile the default storage class that it creates automatically.

- Removed: The CSI operator deletes the default storage class.

5.2.2. Managing the default storage class using the web console

Prerequisites

- Access to the OpenShift Dedicated web console.

- Access to the cluster with cluster-admin privileges.

Procedure

To manage the default storage class using the web console:

- Log in to the web console.
- Click Administration > CustomResourceDefinitions.
- On the CustomResourceDefinitions page, type clustercsidriver to find the ClusterCSIDriver object.
- Click ClusterCSIDriver, and then click the Instances tab.
- Click the name of the desired instance, and then click the YAML tab.
- Add the spec.storageClassState field with a value of Managed, Unmanaged, or Removed. For example, set spec.storageClassState to Unmanaged to stop the operator from reconciling the default storage class.
- Click Save.

5.2.3. Managing the default storage class using the CLI

Prerequisites

- Access to the cluster with cluster-admin privileges.

Procedure

To manage the storage class using the CLI, run the following command:

oc patch clustercsidriver $DRIVERNAME --type=merge -p "{\"spec\":{\"storageClassState\":\"${STATE}\"}}"

where:

- ${STATE} is "Removed", "Managed", or "Unmanaged".
- $DRIVERNAME is the provisioner name. You can find the provisioner name by running the command oc get sc.
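Because ${STATE} is interpolated into a JSON string, a typo still produces a well-formed patch, just with the wrong value. The following sketch uses a hypothetical helper name. It checks the value against the three documented states before it builds the payload:

```shell
#!/usr/bin/env bash
# Build the merge-patch payload for spec.storageClassState, rejecting any
# value other than the three documented states (the match is case-sensitive).
storage_class_state_patch() {
  case "$1" in
    Managed|Unmanaged|Removed)
      printf '{"spec":{"storageClassState":"%s"}}\n' "$1"
      ;;
    *)
      echo "invalid storageClassState: '$1' (expected Managed, Unmanaged, or Removed)" >&2
      return 1
      ;;
  esac
}

# Example usage (not run here). DRIVERNAME is the provisioner name shown by
# `oc get sc`, for example ebs.csi.aws.com on an AWS cluster:
#   DRIVERNAME=ebs.csi.aws.com
#   oc patch clustercsidriver "$DRIVERNAME" --type=merge -p "$(storage_class_state_patch Unmanaged)"
```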

5.2.4. Absent or multiple default storage classes

5.2.4.1. Multiple default storage classes

Multiple default storage classes can occur if you mark a non-default storage class as default without unsetting the existing default storage class, or if you create a default storage class when one is already present. With multiple default storage classes present, any persistent volume claim (PVC) requesting the default storage class (pvc.spec.storageClassName=nil) gets the most recently created default storage class, regardless of the default status of that storage class. The administrator also receives a MultipleDefaultStorageClasses alert in the alerts dashboard.

5.2.4.2. Absent default storage class

There are two possible scenarios where PVCs can attempt to use a non-existent default storage class:

- An administrator removes the default storage class or marks it as non-default, and then a user creates a PVC requesting the default storage class.

- During installation, the installer creates a PVC requesting the default storage class, which has not yet been created.

In the preceding scenarios, PVCs remain in the pending state indefinitely. To resolve this situation, create a default storage class or declare one of the existing storage classes as the default. As soon as the default storage class is created or declared, the PVCs get the new default storage class. If possible, the PVCs eventually bind to statically or dynamically provisioned PVs as usual, and move out of the pending state.
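You can spot both situations in the oc get storageclass listing, which marks the default class with (default). The following rough sketch, with a hypothetical function name, counts those rows from standard input and warns when the count is not exactly one. It assumes the marker appears in the NAME column, as in the example outputs in this chapter.

```shell
#!/usr/bin/env bash
# Count the storage classes marked "(default)" in `oc get storageclass` output
# read from standard input, and warn when there is not exactly one.
check_default_storage_classes() {
  local count
  # Skip the header row, then count rows that carry the "(default)" marker.
  count=$(awk 'NR > 1 && /\(default\)/ { n++ } END { print n + 0 }')
  if [ "$count" -eq 0 ]; then
    echo "warning: no default storage class; PVCs requesting the default stay pending" >&2
  elif [ "$count" -gt 1 ]; then
    echo "warning: $count default storage classes; the most recently created one is used" >&2
  fi
  echo "$count"
}

# Example usage (not run here):
#   oc get storageclass | check_default_storage_classes
```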

5.2.5. Changing the default storage class

Use the following procedure to change the default storage class.

For example, suppose that you have two defined storage classes, gp3 and standard, and you want to change the default storage class from gp3 to standard.

Prerequisites

- Access to the cluster with cluster-admin privileges.

Procedure

To change the default storage class:

List the storage classes:

oc get storageclass

Example output

NAME            TYPE
gp3 (default)   ebs.csi.aws.com
standard        ebs.csi.aws.com

In the output, (default) indicates the default storage class.

Make the desired storage class the default.

For the desired storage class, set the storageclass.kubernetes.io/is-default-class annotation to true by running the following command:

oc patch storageclass standard -p '{"metadata": {"annotations": {"storageclass.kubernetes.io/is-default-class": "true"}}}'

Note: You can have multiple default storage classes for a short time. However, you should ensure that only one default storage class exists eventually.

With multiple default storage classes present, any persistent volume claim (PVC) requesting the default storage class (pvc.spec.storageClassName=nil) gets the most recently created default storage class, regardless of the default status of that storage class. The administrator also receives a MultipleDefaultStorageClasses alert in the alerts dashboard.

Remove the default storage class setting from the old default storage class.

For the old default storage class, change the value of the storageclass.kubernetes.io/is-default-class annotation to false by running the following command:

oc patch storageclass gp3 -p '{"metadata": {"annotations": {"storageclass.kubernetes.io/is-default-class": "false"}}}'

Verify the changes:

oc get storageclass

Example output

NAME                 TYPE
gp3                  ebs.csi.aws.com
standard (default)   ebs.csi.aws.com
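The two patch commands differ only in the annotation value. The following minimal sketch builds that patch and performs the change in the same order as the procedure. It sets the new default before it clears the old one, so the cluster never passes through a state with no default. The names default_class_patch and swap_default_storage_class are hypothetical.

```shell
#!/usr/bin/env bash
# Build the patch that sets storageclass.kubernetes.io/is-default-class.
default_class_patch() {
  case "$1" in
    true|false)
      printf '{"metadata": {"annotations": {"storageclass.kubernetes.io/is-default-class": "%s"}}}\n' "$1"
      ;;
    *)
      echo "expected true or false, got '$1'" >&2
      return 1
      ;;
  esac
}

# Hypothetical helper: mark NEW as default first, then unmark OLD. This briefly
# allows two defaults (tolerated, per the note above) but never zero.
swap_default_storage_class() {
  local old="$1" new="$2"
  oc patch storageclass "$new" -p "$(default_class_patch true)" &&
    oc patch storageclass "$old" -p "$(default_class_patch false)"
}

# Example usage (not run here), matching the gp3 to standard example:
#   swap_default_storage_class gp3 standard
#   oc get storageclass
```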

5.3. AWS Elastic Block Store CSI Driver Operator

5.3.1. Overview

OpenShift Dedicated is capable of provisioning persistent volumes (PVs) using the AWS EBS CSI driver.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a Container Storage Interface (CSI) Operator and driver.

To create CSI-provisioned PVs that mount to AWS EBS storage assets, OpenShift Dedicated installs the AWS EBS CSI Driver Operator (a Red Hat operator) and the AWS EBS CSI driver by default in the openshift-cluster-csi-drivers namespace.

- The AWS EBS CSI Driver Operator provides a StorageClass by default that you can use to create PVCs. You can disable this default storage class if desired (see Managing the default storage class). You also have the option to create the AWS EBS StorageClass as described in Persistent storage using Amazon Elastic Block Store.

- The AWS EBS CSI driver enables you to create and mount AWS EBS PVs.

5.3.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Dedicated users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.

OpenShift Dedicated defaults to using the CSI plugin to provision Amazon Elastic Block Store (Amazon EBS) storage.

For information about dynamically provisioning AWS EBS persistent volumes in OpenShift Dedicated, see Persistent storage using Amazon Elastic Block Store.

5.4. AWS Elastic File Service CSI Driver Operator

This procedure is specific to the AWS EFS CSI Driver Operator (a Red Hat Operator), which is only applicable for OpenShift Dedicated 4.10 and later versions.

5.4.1. Overview

OpenShift Dedicated is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for AWS Elastic File System (EFS).

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a CSI Operator and driver.

After installing the AWS EFS CSI Driver Operator, OpenShift Dedicated installs the AWS EFS CSI Operator and the AWS EFS CSI driver by default in the openshift-cluster-csi-drivers namespace. This allows the AWS EFS CSI Driver Operator to create CSI-provisioned PVs that mount to AWS EFS assets.

- After being installed, the AWS EFS CSI Driver Operator does not create a storage class by default for creating persistent volume claims (PVCs). However, you can manually create the AWS EFS StorageClass. The AWS EFS CSI Driver Operator supports dynamic volume provisioning by allowing storage volumes to be created on demand. This eliminates the need for cluster administrators to pre-provision storage.
- The AWS EFS CSI driver enables you to create and mount AWS EFS PVs.

5.4.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Dedicated users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.

5.4.3. Setting up the AWS EFS CSI Driver Operator

- If you are using AWS EFS with AWS Security Token Service (STS), obtain a role Amazon Resource Name (ARN) for STS. This is required for installing the AWS EFS CSI Driver Operator.

- Install the AWS EFS CSI Driver Operator.

- Install the AWS EFS CSI Driver.

5.4.3.1. Obtaining a role Amazon Resource Name for Security Token Service

This procedure explains how to obtain a role Amazon Resource Name (ARN) to configure the AWS EFS CSI Driver Operator with OpenShift Dedicated on AWS Security Token Service (STS).

Perform this procedure before you install the AWS EFS CSI Driver Operator (see Installing the AWS EFS CSI Driver Operator procedure).

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- AWS account credentials

Procedure

Create an IAM policy JSON file with the following content:

Create an IAM trust JSON file with the following content:

In the trust file, specify your AWS account ID and the OpenShift OIDC provider endpoint, and then specify the OpenShift OIDC endpoint again where the file references it a second time.

Obtain your AWS account ID by running the following command:

aws sts get-caller-identity --query Account --output text

Obtain the OpenShift OIDC endpoint by running the following command:

openshift_oidc_provider=`oc get authentication.config.openshift.io cluster \
  -o json | jq -r .spec.serviceAccountIssuer | sed -e "s/^https:\/\///"`; \
  echo $openshift_oidc_provider
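The trust file contents are not reproduced here. The following sketch shows a typical web-identity trust policy built from the account ID and OIDC endpoint obtained above. The helper names are hypothetical. The two service account names in the condition (aws-efs-csi-driver-operator and aws-efs-csi-driver-controller-sa) are assumptions; check them against the service accounts in the openshift-cluster-csi-drivers namespace on your cluster. The first function performs the same scheme stripping as the sed expression in the preceding command, using shell parameter expansion instead.

```shell
#!/usr/bin/env bash
# Strip a leading "https://" from a service account issuer URL.
oidc_provider_from_issuer() {
  printf '%s\n' "${1#https://}"
}

# Hypothetical helper: print a web-identity trust policy for the operator role.
# ASSUMPTION: the service account names below must be verified on your cluster.
efs_operator_trust_policy() {
  local account_id="$1" provider="$2"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${account_id}:oidc-provider/${provider}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${provider}:sub": [
            "system:serviceaccount:openshift-cluster-csi-drivers:aws-efs-csi-driver-operator",
            "system:serviceaccount:openshift-cluster-csi-drivers:aws-efs-csi-driver-controller-sa"
          ]
        }
      }
    }
  ]
}
EOF
}

# Example usage (not run here):
#   account_id=$(aws sts get-caller-identity --query Account --output text)
#   efs_operator_trust_policy "$account_id" "$openshift_oidc_provider" > <your_trust_file_name>.json
```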

Create the IAM role:

ROLE_ARN=$(aws iam create-role \
  --role-name "<your_cluster_name>-aws-efs-csi-operator" \
  --assume-role-policy-document file://<your_trust_file_name>.json \
  --query "Role.Arn" --output text); echo $ROLE_ARN

Copy the role ARN. You will need it when you install the AWS EFS CSI Driver Operator.

Create the IAM policy:

POLICY_ARN=$(aws iam create-policy \
  --policy-name "<your_cluster_name>-aws-efs-csi" \
  --policy-document file://<your_policy_file_name>.json \
  --query 'Policy.Arn' --output text); echo $POLICY_ARN

Attach the IAM policy to the IAM role:

aws iam attach-role-policy \
  --role-name "<your_cluster_name>-aws-efs-csi-operator" \
  --policy-arn $POLICY_ARN

Next steps

5.4.3.2. Installing the AWS EFS CSI Driver Operator

The AWS EFS CSI Driver Operator (a Red Hat Operator) is not installed in OpenShift Dedicated by default. Use the following procedure to install and configure the AWS EFS CSI Driver Operator in your cluster.

Prerequisites

- Access to the OpenShift Dedicated web console.

Procedure

To install the AWS EFS CSI Driver Operator from the web console:

- Log in to the web console.

Install the AWS EFS CSI Operator:

- Click Ecosystem > Software Catalog.
- Locate the AWS EFS CSI Operator by typing AWS EFS CSI in the filter box.
- Click the AWS EFS CSI Driver Operator button.

Important: Be sure to select the AWS EFS CSI Driver Operator and not the AWS EFS Operator. The AWS EFS Operator is a community Operator and is not supported by Red Hat.

- On the AWS EFS CSI Driver Operator page, click Install.

On the Install Operator page, ensure that:

- If you are using AWS EFS with AWS Security Token Service (STS), in the role ARN field, enter the role ARN that you copied in the Obtaining a role Amazon Resource Name for Security Token Service procedure.

- All namespaces on the cluster (default) is selected.

- Installed Namespace is set to openshift-cluster-csi-drivers.

Click Install.

After the installation finishes, the AWS EFS CSI Operator is listed in the Installed Operators section of the web console.

- Click Ecosystem

Next steps

5.4.3.3. Installing the AWS EFS CSI Driver

After installing the AWS EFS CSI Driver Operator (a Red Hat operator), you install the AWS EFS CSI driver.

Prerequisites

- Access to the OpenShift Dedicated web console.

Procedure

- Click Administration > CustomResourceDefinitions > ClusterCSIDriver.
- On the Instances tab, click Create ClusterCSIDriver.

Use the following YAML file:

- Click Create.

Wait for the following Conditions to change to a "True" status:

- AWSEFSDriverNodeServiceControllerAvailable

- AWSEFSDriverControllerServiceControllerAvailable
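The following is a sketch under stated assumptions. The first function prints a minimal ClusterCSIDriver manifest (operator.openshift.io/v1, named efs.csi.aws.com, with managementState set to Managed); compare it with the YAML your cluster version requires. The second function checks the two conditions listed above, reading Type=Status lines. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Print a minimal ClusterCSIDriver manifest for the AWS EFS CSI driver.
# Sketch only: verify the fields against the YAML your cluster version expects.
efs_cluster_csi_driver_manifest() {
  cat <<'EOF'
apiVersion: operator.openshift.io/v1
kind: ClusterCSIDriver
metadata:
  name: efs.csi.aws.com
spec:
  managementState: Managed
EOF
}

# Read "Type=Status" lines on stdin and succeed only when both EFS controller
# conditions named in the procedure report True.
efs_driver_conditions_ready() {
  awk -F= '
    $1 == "AWSEFSDriverNodeServiceControllerAvailable" && $2 == "True" { node = 1 }
    $1 == "AWSEFSDriverControllerServiceControllerAvailable" && $2 == "True" { ctrl = 1 }
    END { exit !(node && ctrl) }
  '
}

# Example usage (not run here):
#   efs_cluster_csi_driver_manifest | oc create -f -
#   oc get clustercsidriver efs.csi.aws.com \
#     -o jsonpath='{range .status.conditions[*]}{.type}={.status}{"\n"}{end}' \
#     | efs_driver_conditions_ready && echo "EFS CSI driver is available"
```

You can run the condition check repeatedly, for example in a loop with a sleep, until it succeeds.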

5.4.4. Creating the AWS EFS storage class

Storage classes are used to differentiate and delineate storage levels and usages. By defining a storage class, users can obtain dynamically provisioned persistent volumes.

The AWS EFS CSI Driver Operator (a Red Hat operator), after being installed, does not create a storage class by default. However, you can manually create the AWS EFS storage class.

5.4.4.1. Creating the AWS EFS storage class using the console

Procedure

- In the OpenShift Dedicated web console, click Storage > StorageClasses.
- On the StorageClasses page, click Create StorageClass.

On the StorageClass page, perform the following steps:

- Enter a name to reference the storage class.
- Optional: Enter the description.
- Select the reclaim policy.
- Select efs.csi.aws.com from the Provisioner drop-down list.
- Optional: Set the configuration parameters for the selected provisioner.
- Click Create.

5.4.4.2. Creating the AWS EFS storage class using the CLI

Procedure

Create a StorageClass object:

where:

- provisioningMode must be efs-ap to enable dynamic provisioning.
- fileSystemId must be the ID of the EFS volume created manually.
- directoryPerms is the default permission of the root directory of the volume. In this example, the volume is accessible only by the owner.
- gidRangeStart and gidRangeEnd set the range of POSIX Group IDs (GIDs) that are used to set the GID of the AWS access point. If not specified, the default range is 50000-7000000. Each provisioned volume, and thus AWS access point, is assigned a unique GID from this range.
- basePath is the directory on the EFS volume that is used to create dynamically provisioned volumes. In this case, a PV is provisioned as “/dynamic_provisioning/<random uuid>” on the EFS volume. Only the subdirectory is mounted to pods that use the PV.

Note: A cluster admin can create several StorageClass objects, each using a different EFS volume.

5.4.5. AWS EFS CSI cross account support

Cross account support allows you to have an OpenShift Dedicated cluster in one AWS account and mount your file system in another AWS account by using the AWS Elastic File System (EFS) Container Storage Interface (CSI) driver.

Prerequisites

- Access to an OpenShift Dedicated cluster with administrator rights

- Two valid AWS accounts

- The EFS CSI Operator has been installed. For information about installing the EFS CSI Operator, see the Installing the AWS EFS CSI Driver Operator section.

- Both the OpenShift Dedicated cluster and EFS file system must be located in the same AWS region.

- Ensure that the two virtual private clouds (VPCs) used in the following procedure use different network Classless Inter-Domain Routing (CIDR) ranges.

- Access to the OpenShift Dedicated CLI (oc).
- Access to the AWS CLI.
- Access to the jq command-line JSON processor.

Procedure

The following procedure explains how to set up:

- OpenShift Dedicated AWS Account A: Contains a Red Hat OpenShift Dedicated cluster v4.16, or later, deployed within a VPC

- AWS Account B: Contains a VPC (including subnets, route tables, and network connectivity). The EFS filesystem will be created in this VPC.

To use AWS EFS across accounts:

Set up the environment:

Configure environment variables by running the following commands:

The commands set the following values, in order:

1. Cluster name of choice.
2. AWS region of choice.
3. AWS Account A ID.
4. AWS Account B ID.
5. CIDR range of VPC in Account A.
6. CIDR range of VPC in Account B.
7. VPC ID in Account A (cluster).
8. VPC ID in Account B (EFS cross account).
9. Any writable directory of choice to use to store temporary files.
10. If your driver is installed in a non-default namespace, change this value.
11. Makes AWS CLI output everything directly to stdout.
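The commands themselves are not reproduced here. Only CLUSTER_NAME, SCRATCH_DIR, and CSI_DRIVER_NAMESPACE appear in later commands in this section; the other variable names below are hypothetical. The sketch lists the variables as commented exports and adds a check, also with a hypothetical name, that fails if any value is empty or if the two VPC CIDR strings are identical. The check compares strings only, so it does not detect ranges that overlap without being equal.

```shell
#!/usr/bin/env bash
# Hypothetical environment template (fill in and uncomment):
# export CLUSTER_NAME="<cluster_name>"
# export AWS_REGION="<aws_region>"
# export AWS_ACCOUNT_A_ID="<account_a_id>"
# export AWS_ACCOUNT_B_ID="<account_b_id>"
# export AWS_ACCOUNT_A_VPC_CIDR="<vpc_a_cidr>"
# export AWS_ACCOUNT_B_VPC_CIDR="<vpc_b_cidr>"
# export AWS_ACCOUNT_A_VPC_ID="<vpc_a_id>"
# export AWS_ACCOUNT_B_VPC_ID="<vpc_b_id>"
# export SCRATCH_DIR="<writable_directory>"
# export CSI_DRIVER_NAMESPACE="openshift-cluster-csi-drivers"
# export AWS_PAGER=""   # AWS CLI v2: an empty pager sends output straight to stdout

# Fail if a required variable is empty or if the two VPC CIDR strings match.
check_cross_account_env() {
  local var value missing=0
  for var in CLUSTER_NAME AWS_REGION AWS_ACCOUNT_A_ID AWS_ACCOUNT_B_ID \
             AWS_ACCOUNT_A_VPC_CIDR AWS_ACCOUNT_B_VPC_CIDR \
             AWS_ACCOUNT_A_VPC_ID AWS_ACCOUNT_B_VPC_ID \
             SCRATCH_DIR CSI_DRIVER_NAMESPACE; do
    # Portable indirect lookup: read the variable whose name is stored in $var.
    eval "value=\${$var:-}"
    if [ -z "$value" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  if [ "$missing" -eq 0 ] && [ "$AWS_ACCOUNT_A_VPC_CIDR" = "$AWS_ACCOUNT_B_VPC_CIDR" ]; then
    echo "the VPC CIDR ranges of Account A and Account B must differ" >&2
    return 1
  fi
  return "$missing"
}

# Example usage (not run here):
#   check_cross_account_env && mkdir -p "$SCRATCH_DIR"
```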

Create the working directory by running the following command:

mkdir -p $SCRATCH_DIR

Verify cluster connectivity by running the following command in the OpenShift Dedicated CLI:

oc whoami

Determine the OpenShift Dedicated cluster type and set the node selector:

The EFS cross account feature requires assigning AWS IAM policies to the nodes that run the EFS CSI controller pods. However, which nodes run these pods differs between OpenShift Dedicated cluster types.

If your cluster is deployed as a Hosted Control Plane (HyperShift), set the NODE_SELECTOR environment variable to hold the worker node label by running the following command:

export NODE_SELECTOR=node-role.kubernetes.io/worker

For all other OpenShift Dedicated types, set the NODE_SELECTOR environment variable to hold the master node label by running the following command:

export NODE_SELECTOR=node-role.kubernetes.io/master
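You can script the choice between the two labels. The following sketch assumes that a hosted control plane cluster reports External in the status.controlPlaneTopology field of the cluster Infrastructure object. Treat that mapping as an assumption and confirm it on your cluster before you rely on it. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Map the cluster's control plane topology to the node label used above.
# ASSUMPTION: hosted control plane (HyperShift) clusters report "External".
node_selector_for_topology() {
  if [ "$1" = "External" ]; then
    echo "node-role.kubernetes.io/worker"
  else
    echo "node-role.kubernetes.io/master"
  fi
}

# Example usage (not run here):
#   topology=$(oc get infrastructure cluster -o jsonpath='{.status.controlPlaneTopology}')
#   export NODE_SELECTOR=$(node_selector_for_topology "$topology")
```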

Configure AWS CLI profiles as environment variables for account switching by running the following commands:

export AWS_ACCOUNT_A="<ACCOUNT_A_NAME>"
export AWS_ACCOUNT_B="<ACCOUNT_B_NAME>"

Ensure that your AWS CLI is configured with JSON output format as the default for both accounts by running the following commands:

export AWS_DEFAULT_PROFILE=${AWS_ACCOUNT_A}
aws configure get output
export AWS_DEFAULT_PROFILE=${AWS_ACCOUNT_B}
aws configure get output

If the preceding commands return:

- No value, or json: The output format is already JSON and no changes are required.

- Any other value: Reconfigure your AWS CLI to use JSON format. For information about changing output formats, see Setting the output format in the AWS CLI in the AWS documentation.

Unset AWS_PROFILE in your shell to prevent conflicts with AWS_DEFAULT_PROFILE by running the following command:

unset AWS_PROFILE
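The output-format rule above can be written as a small check. This sketch uses a hypothetical function name and treats an empty value or json as acceptable. The commented loop shows one way to query both profiles without changing AWS_DEFAULT_PROFILE in the current shell.

```shell
#!/usr/bin/env bash
# Succeed when a profile's configured output format needs no change:
# an empty value (CLI default) or "json".
output_format_ok() {
  case "$1" in
    ""|json) return 0 ;;
    *) return 1 ;;
  esac
}

# Example usage (not run here):
#   for profile in "$AWS_ACCOUNT_A" "$AWS_ACCOUNT_B"; do
#     fmt=$(AWS_DEFAULT_PROFILE="$profile" aws configure get output)
#     output_format_ok "$fmt" || echo "profile $profile uses output '$fmt'; reconfigure it to json"
#   done
```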

Configure the AWS Account B IAM roles and policies:

Switch to your Account B profile by running the following command:

export AWS_DEFAULT_PROFILE=${AWS_ACCOUNT_B}

Define the IAM role name for the EFS CSI Driver Operator by running the following command:

export ACCOUNT_B_ROLE_NAME=${CLUSTER_NAME}-cross-account-aws-efs-csi-operator

Create the IAM trust policy file by running the following command:

Create the IAM role for the EFS CSI Driver Operator by running the following command:

ACCOUNT_B_ROLE_ARN=$(aws iam create-role \
  --role-name "${ACCOUNT_B_ROLE_NAME}" \
  --assume-role-policy-document file://$SCRATCH_DIR/AssumeRolePolicyInAccountB.json \
  --query "Role.Arn" --output text) \
  && echo $ACCOUNT_B_ROLE_ARN

Create the IAM policy file by running the following command:

Create the IAM policy by running the following command:

ACCOUNT_B_POLICY_ARN=$(aws iam create-policy --policy-name "${CLUSTER_NAME}-efs-csi-policy" \
  --policy-document file://$SCRATCH_DIR/EfsPolicyInAccountB.json \
  --query 'Policy.Arn' --output text) \
  && echo ${ACCOUNT_B_POLICY_ARN}

Attach the policy to the role by running the following command:

aws iam attach-role-policy \
  --role-name "${ACCOUNT_B_ROLE_NAME}" \
  --policy-arn "${ACCOUNT_B_POLICY_ARN}"
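The two JSON files that the preceding Account B commands read are not reproduced here. The following sketch writes plausible versions of them: a trust policy that lets Account A assume the role, and an EFS permissions policy. The helper names and the AWS_ACCOUNT_A_ID variable are hypothetical. The list of actions is an assumption based on calls the EFS CSI driver typically makes; review it against Red Hat and AWS guidance and narrow Resource where possible before use.

```shell
#!/usr/bin/env bash
# Trust policy: let principals in Account A assume the Account B role.
write_account_b_trust_policy() {
  local account_a_id="$1" dir="$2"
  cat > "$dir/AssumeRolePolicyInAccountB.json" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "AWS": "arn:aws:iam::${account_a_id}:root" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Permissions policy. ASSUMPTION: this action list is illustrative, not authoritative.
write_account_b_efs_policy() {
  local dir="$1"
  cat > "$dir/EfsPolicyInAccountB.json" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeAvailabilityZones",
        "ec2:DescribeNetworkInterfaces",
        "ec2:DescribeSubnets",
        "elasticfilesystem:ClientMount",
        "elasticfilesystem:ClientRootAccess",
        "elasticfilesystem:ClientWrite",
        "elasticfilesystem:CreateAccessPoint",
        "elasticfilesystem:DeleteAccessPoint",
        "elasticfilesystem:DescribeAccessPoints",
        "elasticfilesystem:DescribeFileSystems",
        "elasticfilesystem:DescribeMountTargets",
        "elasticfilesystem:TagResource"
      ],
      "Resource": "*"
    }
  ]
}
EOF
}

# Example usage (not run here), before the create-role and create-policy commands:
#   write_account_b_trust_policy "$AWS_ACCOUNT_A_ID" "$SCRATCH_DIR"
#   write_account_b_efs_policy "$SCRATCH_DIR"
```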

Configure the AWS Account A IAM roles and policies:

Switch to your Account A profile by running the following command:

export AWS_DEFAULT_PROFILE=${AWS_ACCOUNT_A}

Create the IAM policy document by running the following command:

In AWS Account A, attach the AWS-managed policy "AmazonElasticFileSystemClientFullAccess" to the OpenShift Dedicated cluster master role by running the following command:
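The policy document for this step is not reproduced here. The following sketch assumes that the Account A entity only needs permission to assume the Account B role. The helper name is hypothetical, and the file name matches the one passed to put-user-policy later in this procedure. The commented attach-role-policy command uses the standard ARN format for AWS managed policies, with <master_role_name> as a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write an inline policy that allows sts:AssumeRole on
# the Account B role ARN created earlier.
write_account_a_assume_role_policy() {
  local account_b_role_arn="$1" dir="$2"
  cat > "$dir/AssumeRoleInlinePolicyPolicyInAccountA.json" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "sts:AssumeRole",
      "Resource": "${account_b_role_arn}"
    }
  ]
}
EOF
}

# Example usage (not run here); ACCOUNT_B_ROLE_ARN was set when the Account B
# role was created:
#   write_account_a_assume_role_policy "$ACCOUNT_B_ROLE_ARN" "$SCRATCH_DIR"
#   aws iam attach-role-policy --role-name "<master_role_name>" \
#     --policy-arn arn:aws:iam::aws:policy/AmazonElasticFileSystemClientFullAccess
```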

Attach the policy to the IAM entity to allow role assumption:

This step depends on your cluster configuration. In both of the following scenarios, the EFS CSI Driver Operator uses an entity to authenticate to AWS, and this entity must be granted permission to assume roles in Account B.

If your cluster:

- Does not have STS enabled: The EFS CSI Driver Operator uses an IAM User entity for AWS authentication. Continue with the step "Attach policy to IAM User to allow role assumption".

- Has STS enabled: The EFS CSI Driver Operator uses an IAM role entity for AWS authentication. Continue with the step "Attach policy to IAM Role to allow role assumption".

Attach policy to IAM User to allow role assumption

Identify the IAM User used by the EFS CSI Driver Operator by running the following command:

EFS_CSI_DRIVER_OPERATOR_USER=$(oc -n openshift-cloud-credential-operator get credentialsrequest/openshift-aws-efs-csi-driver -o json | jq -r '.status.providerStatus.user')

Attach the policy to the IAM user by running the following command:

aws iam put-user-policy \
  --user-name "${EFS_CSI_DRIVER_OPERATOR_USER}" \
  --policy-name efs-cross-account-inline-policy \
  --policy-document file://$SCRATCH_DIR/AssumeRoleInlinePolicyPolicyInAccountA.json

Attach policy to IAM Role to allow role assumption

Identify the IAM role name currently used by the EFS CSI Driver Operator by running the following command:

EFS_CSI_DRIVER_OPERATOR_ROLE=$(oc -n ${CSI_DRIVER_NAMESPACE} get secret/aws-efs-cloud-credentials -o jsonpath='{.data.credentials}' | base64 -d | grep role_arn | cut -d'/' -f2) && echo ${EFS_CSI_DRIVER_OPERATOR_ROLE}

Attach the policy to the IAM role used by the EFS CSI Driver Operator by running the following command:

aws iam put-role-policy \
  --role-name "${EFS_CSI_DRIVER_OPERATOR_ROLE}" \
  --policy-name efs-cross-account-inline-policy \
  --policy-document file://$SCRATCH_DIR/AssumeRoleInlinePolicyPolicyInAccountA.json
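Both branches above depend on knowing which IAM entity the operator uses and on extracting a role name from an ARN. The following Bash sketch, with hypothetical function names, reads the decoded aws-efs-cloud-credentials data and reports "role" when it contains a role_arn entry (the same entry that the role lookup command above greps for) and "user" otherwise. It also extracts the role name with ${arn##*/}, which, unlike cut -d'/' -f2, still returns the name for roles created with an IAM path.

```shell
#!/usr/bin/env bash
# Reads the decoded aws-efs-cloud-credentials data on stdin and prints
# "role" if it contains a role_arn entry (STS), otherwise "user".
# Assumption: on STS clusters the decoded credentials include role_arn.
efs_operator_identity_kind() {
  if grep -q '^[[:space:]]*role_arn[[:space:]]*='; then
    echo role
  else
    echo user
  fi
}

# Prints the IAM role name from a role ARN by keeping the text after the
# last "/", so arn:aws:iam::123456789012:role/team/my-role yields my-role.
role_name_from_arn() {
  local arn="$1"
  echo "${arn##*/}"
}

# Example:
#   oc -n "${CSI_DRIVER_NAMESPACE}" get secret/aws-efs-cloud-credentials \
#     -o jsonpath='{.data.credentials}' | base64 -d | efs_operator_identity_kind
```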

Configure VPC peering:

Initiate a peering request from Account A to Account B by running the following command:

export AWS_DEFAULT_PROFILE=${AWS_ACCOUNT_A}
PEER_REQUEST_ID=$(aws ec2 create-vpc-peering-connection --vpc-id "${AWS_ACCOUNT_A_VPC_ID}" --peer-vpc-id "${AWS_ACCOUNT_B_VPC_ID}" --peer-owner-id "${AWS_ACCOUNT_B_ID}" --query VpcPeeringConnection.VpcPeeringConnectionId --output text)

Accept the peering request from Account B by running the following command:

export AWS_DEFAULT_PROFILE=${AWS_ACCOUNT_B}
aws ec2 accept-vpc-peering-connection --vpc-peering-connection-id "${PEER_REQUEST_ID}"

Retrieve the route table IDs for Account A and add routes to the Account B VPC by running the following command:

Retrieve the route table IDs for Account B and add routes to the Account A VPC by running the following command:

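Adding the peering routes amounts to one aws ec2 create-route call per route table, pointing the peer VPC's CIDR block at the peering connection. The following Bash sketch assumes that the AWS CLI is configured for the account whose VPC you are updating; the function names and the ACCOUNT_B_VPC_CIDR variable are hypothetical, and setting AWS=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: add a route to the peer VPC's CIDR block in each route table of a VPC.
# Assumptions: AWS_DEFAULT_PROFILE selects the account that owns the VPC;
# AWS may be set to "echo" for a dry run.
AWS="${AWS:-aws}"

# Prints the IDs of all route tables in a VPC, one per line.
route_table_ids() {
  local vpc_id="$1"
  "$AWS" ec2 describe-route-tables \
    --filters "Name=vpc-id,Values=${vpc_id}" \
    --query 'RouteTables[].RouteTableId' --output text | tr '\t' '\n'
}

# Usage: add_peering_routes <peering-connection-id> <peer-cidr> <route-table-id>...
add_peering_routes() {
  local peering_id="$1" peer_cidr="$2" rtb
  shift 2
  for rtb in "$@"; do
    "$AWS" ec2 create-route \
      --route-table-id "$rtb" \
      --destination-cidr-block "$peer_cidr" \
      --vpc-peering-connection-id "$peering_id" || return 1
  done
}

# Example for Account A (ACCOUNT_B_VPC_CIDR is a hypothetical variable):
#   export AWS_DEFAULT_PROFILE=${AWS_ACCOUNT_A}
#   add_peering_routes "${PEER_REQUEST_ID}" "${ACCOUNT_B_VPC_CIDR}" \
#     $(route_table_ids "${AWS_ACCOUNT_A_VPC_ID}")
```

Repeat the same call with the Account B profile, the Account B VPC ID, and the Account A CIDR block for the reverse direction.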

Configure security groups in Account B to allow NFS traffic from Account A to EFS:

Switch to your Account B profile by running the following command:

export AWS_DEFAULT_PROFILE=${AWS_ACCOUNT_B}

Configure the VPC security groups for EFS access by running the following command:

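EFS mount targets accept NFS traffic on TCP port 2049, so the security group rule needs to allow that port from the Account A VPC CIDR block. The following is a minimal Bash sketch with hypothetical function names, assuming you already know which security group is attached to the EFS mount targets; setting AWS=echo turns it into a dry run.

```shell
#!/usr/bin/env bash
# Sketch: open NFS (TCP 2049) on an EFS security group for a source CIDR.
# Assumptions: the security group is the one attached to the EFS mount
# targets in Account B; AWS may be set to "echo" for a dry run.
AWS="${AWS:-aws}"

# Usage: allow_nfs_from_cidr <security-group-id> <source-cidr>
allow_nfs_from_cidr() {
  local sg_id="$1" cidr="$2"
  "$AWS" ec2 authorize-security-group-ingress \
    --group-id "$sg_id" \
    --protocol tcp \
    --port 2049 \
    --cidr "$cidr"
}

# Prints the CIDR block of a VPC. Run it with the profile of the account
# that owns the VPC (Account A here), then pass the result to
# allow_nfs_from_cidr while using the Account B profile.
vpc_cidr() {
  local vpc_id="$1"
  "$AWS" ec2 describe-vpcs --vpc-ids "$vpc_id" \
    --query 'Vpcs[0].CidrBlock' --output text
}
```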

Create a region-wide EFS file system in Account B:

Switch to your Account B profile by running the following command:

export AWS_DEFAULT_PROFILE=${AWS_ACCOUNT_B}

Create a region-wide EFS file system by running the following command:

CROSS_ACCOUNT_FS_ID=$(aws efs create-file-system --creation-token efs-token-1 \
  --region ${AWS_REGION} \
  --encrypted | jq -r '.FileSystemId') \
  && echo $CROSS_ACCOUNT_FS_ID

Configure region-wide mount targets for EFS by running the following command:

This creates a mount target in each subnet of your VPC.
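Mount targets are created per subnet with aws efs create-mount-target. EFS allows only one mount target per Availability Zone for a given file system, so the following Bash sketch expects you to pass one subnet per zone. The function names are hypothetical, and setting AWS=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: create one EFS mount target per subnet.
# Assumptions: at most one subnet per Availability Zone is passed;
# AWS may be set to "echo" for a dry run.
AWS="${AWS:-aws}"

# Usage: create_mount_targets <file-system-id> <security-group-id> <subnet-id>...
create_mount_targets() {
  local fs_id="$1" sg_id="$2" subnet
  shift 2
  for subnet in "$@"; do
    "$AWS" efs create-mount-target \
      --file-system-id "$fs_id" \
      --subnet-id "$subnet" \
      --security-groups "$sg_id" || return 1
  done
}

# Prints "<availability-zone> <subnet-id>" for each subnet in a VPC so that
# you can pick one subnet per zone.
subnets_by_zone() {
  local vpc_id="$1"
  "$AWS" ec2 describe-subnets \
    --filters "Name=vpc-id,Values=${vpc_id}" \
    --query 'Subnets[].[AvailabilityZone,SubnetId]' --output text
}

# Example (security group and subnet IDs are placeholders):
#   create_mount_targets "${CROSS_ACCOUNT_FS_ID}" sg-0123456789abcdef0 subnet-aaa subnet-bbb
```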

Configure the EFS Operator for cross-account access:

Define custom names for the secret and storage class that you will create in subsequent steps by running the following command:

export SECRET_NAME=my-efs-cross-account
export STORAGE_CLASS_NAME=efs-sc-cross

Create a secret that references the role ARN in Account B by running the following command in the OpenShift Dedicated CLI:

oc create secret generic ${SECRET_NAME} -n ${CSI_DRIVER_NAMESPACE} --from-literal=awsRoleArn="${ACCOUNT_B_ROLE_ARN}"

Grant the CSI driver controller access to the newly created secret by running the following commands in the OpenShift Dedicated CLI:

oc -n ${CSI_DRIVER_NAMESPACE} create role access-secrets --verb=get,list,watch --resource=secrets
oc -n ${CSI_DRIVER_NAMESPACE} create rolebinding --role=access-secrets default-to-secrets --serviceaccount=${CSI_DRIVER_NAMESPACE}:aws-efs-csi-driver-controller-sa

Create a new storage class that references the EFS ID from Account B and the secret created previously by running the following command in the OpenShift Dedicated CLI:

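A storage class for this setup points the driver at the Account B file system and at the secret that holds awsRoleArn. The following Bash sketch prints one. The parameter names are taken from the upstream aws-efs-csi-driver cross-account example and are an assumption here, as is directoryPerms "700"; the function name is hypothetical. Pipe the output to oc apply -f - to create the storage class.

```shell
#!/usr/bin/env bash
# Sketch: print a StorageClass for cross-account dynamic provisioning.
# Assumption: parameter names follow the upstream aws-efs-csi-driver example.
# Usage: efs_cross_account_storage_class <name> <fs-id> <secret-name> <secret-namespace>
efs_cross_account_storage_class() {
  local name="$1" fs_id="$2" secret="$3" namespace="$4"
  if [[ -z "$namespace" ]]; then
    echo "usage: efs_cross_account_storage_class <name> <fs-id> <secret> <namespace>" >&2
    return 1
  fi
  cat <<EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: ${name}
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap
  fileSystemId: ${fs_id}
  directoryPerms: "700"
  csi.storage.k8s.io/provisioner-secret-name: ${secret}
  csi.storage.k8s.io/provisioner-secret-namespace: ${namespace}
EOF
}

# Example:
#   efs_cross_account_storage_class "${STORAGE_CLASS_NAME}" "${CROSS_ACCOUNT_FS_ID}" \
#     "${SECRET_NAME}" "${CSI_DRIVER_NAMESPACE}" | oc apply -f -
```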

5.4.6. One Zone file systems

5.4.6.1. One Zone file systems overview

OpenShift Dedicated supports AWS Elastic File System (EFS) One Zone file system, which is an EFS storage option that stores data redundantly within a single Availability Zone (AZ). This contrasts with the default EFS storage option, which stores data redundantly across multiple AZs within a region.

Clusters upgraded from OpenShift Dedicated 4.19 are compatible with the regional EFS volumes.

Dynamic provisioning of One Zone volumes is supported only in single-zone clusters. All nodes in the cluster must be in the same AZ as the EFS volume that is used for the dynamic provisioning.

Manually provisioned One Zone volumes are supported in regional clusters, provided that the persistent volumes (PVs) have the correct spec.nodeAffinity indicating the zone that the volume is in.

For Cloud Credential Operator (CCO) Mint mode or Passthrough, no extra configuration is required. However, for Security Token Service (STS), use the procedure in Section Setting up One Zone file systems with STS.
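For manually provisioned One Zone volumes, the zone pinning is expressed through the PV's spec.nodeAffinity. The following Bash sketch prints such a PV. It assumes that nodes carry the standard topology.kubernetes.io/zone label; the function name and default capacity are illustrative, and EFS does not enforce the capacity.

```shell
#!/usr/bin/env bash
# Sketch: print a static PV for a One Zone EFS file system, pinned to its
# Availability Zone through spec.nodeAffinity.
# Usage: efs_one_zone_pv <pv-name> <fs-id> <zone> [capacity]
efs_one_zone_pv() {
  local name="$1" fs_id="$2" zone="$3" capacity="${4:-5Gi}"
  if [[ -z "$zone" ]]; then
    echo "usage: efs_one_zone_pv <pv-name> <fs-id> <zone> [capacity]" >&2
    return 1
  fi
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${capacity}
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: efs.csi.aws.com
    volumeHandle: ${fs_id}
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: topology.kubernetes.io/zone
              operator: In
              values:
                - ${zone}
EOF
}
```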

5.4.6.2. Setting up One Zone file systems with STS

The following procedure explains how to set up AWS One Zone file systems with Security Token Service (STS):

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- AWS account credentials

Procedure

To configure One Zone file systems with STS:

Create two CredentialsRequest objects in the credrequests directory by following the procedure in Section Obtaining a role Amazon Resource Name for Security Token Service:

- For the controller CredentialsRequest, follow the procedure without any changes.

- For the driver node CredentialsRequest, use the following example file:

Example CredentialsRequest YAML file for driver node

(1) Set metadata.annotations.credentials.openshift.io/role-arns-vars to NODE_ROLEARN.

Example ccoctl output

Install the AWS EFS CSI driver using the controller ARN created earlier in this procedure.

Edit the Operator's subscription and add NODE_ROLEARN with the driver node's ARN by running a command similar to the following:
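A subscription change of this kind is usually made with oc patch and a JSON merge patch on spec.config.env. The following Bash sketch builds that patch from NAME=VALUE pairs; the function name and the subscription name in the usage comment are placeholders. Because a merge patch replaces the whole env list, include every variable that the subscription already sets, not only NODE_ROLEARN.

```shell
#!/usr/bin/env bash
# Sketch: build a JSON merge patch that sets environment variables on an OLM
# Subscription (spec.config.env), for example NODE_ROLEARN.
# Assumption: values contain no double quotes or backslashes (true for ARNs).
# Usage: subscription_env_patch NAME=VALUE [NAME=VALUE...]
subscription_env_patch() {
  local pair name value entries=""
  for pair in "$@"; do
    name="${pair%%=*}"   # text before the first "="
    value="${pair#*=}"   # text after the first "="
    entries+="${entries:+,}{\"name\":\"${name}\",\"value\":\"${value}\"}"
  done
  printf '{"spec":{"config":{"env":[%s]}}}\n' "$entries"
}

# Example (<subscription-name> is a placeholder; list subscriptions with
# "oc -n openshift-cluster-csi-drivers get subscriptions"):
#   oc -n openshift-cluster-csi-drivers get subscription <subscription-name> -o jsonpath='{.spec.config.env}'
#   oc -n openshift-cluster-csi-drivers patch subscription <subscription-name> --type=merge \
#     -p "$(subscription_env_patch NODE_ROLEARN=<driver-node-role-arn>)"
```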

5.4.7. Dynamic provisioning for Amazon Elastic File Storage

The AWS EFS CSI driver supports a different form of dynamic provisioning than other CSI drivers. It provisions new PVs as subdirectories of a pre-existing EFS volume. The PVs are independent of each other, but they all share the same EFS volume. When the volume is deleted, all PVs provisioned from it are deleted too. The EFS CSI driver creates an AWS access point for each such subdirectory. Due to AWS access point limits, you can dynamically provision at most 1,000 PVs from a single StorageClass/EFS volume.

Note that PVC.spec.resources is not enforced by EFS.

In the following example, you request 5 GiB of space. However, the created PV is not limited in size and can store any amount of data, even petabytes. A malfunctioning or rogue application can cause significant expenses if it stores too much data on the volume.

Monitoring EFS volume sizes in AWS is strongly recommended.

Prerequisites

- You have created Amazon Elastic File Storage (Amazon EFS) volumes.

- You have created the AWS EFS storage class.

Procedure

To enable dynamic provisioning:

Create a PVC (or StatefulSet or Template) as usual, referring to the StorageClass created previously.
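A PVC for dynamic provisioning only needs to name the EFS storage class; the storage request is required by Kubernetes but, as noted above, is not enforced by EFS. The following is a minimal Bash sketch that prints such a claim; the function name, claim name, and storage class name are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: print a PVC that requests a dynamically provisioned EFS volume.
# The storage request is required by Kubernetes but not enforced by EFS.
# Usage: efs_dynamic_pvc <pvc-name> <storage-class> [size]
efs_dynamic_pvc() {
  local name="$1" storage_class="$2" size="${3:-5Gi}"
  if [[ -z "$storage_class" ]]; then
    echo "usage: efs_dynamic_pvc <pvc-name> <storage-class> [size]" >&2
    return 1
  fi
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ${storage_class}
  resources:
    requests:
      storage: ${size}
EOF
}

# Example:
#   efs_dynamic_pvc test-efs-claim efs-sc | oc apply -f -
```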

If you have problems setting up dynamic provisioning, see AWS EFS troubleshooting.

5.4.8. Creating static PVs with Amazon Elastic File Storage

It is possible to use an Amazon Elastic File Storage (Amazon EFS) volume as a single PV without any dynamic provisioning. The whole volume is mounted to pods.

Prerequisites

- You have created Amazon EFS volumes.

Procedure

Create the PV using the following YAML file:

(1) spec.capacity does not have any meaning and is ignored by the CSI driver. It is used only when binding to a PVC. Applications can store any amount of data to the volume.

(2) volumeHandle must be the same ID as the EFS volume you created in AWS. If you are providing your own access point, volumeHandle should be <EFS volume ID>::<access point ID>. For example: fs-6e633ada::fsap-081a1d293f0004630.

(3) If desired, you can disable encryption in transit. Encryption is enabled by default.
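The volumeHandle forms described in the callouts can be generated rather than typed by hand. The following Bash sketch prints a static PV. It assumes that encryption in transit is controlled by the upstream driver's encryptInTransit volume attribute; the function name and the 5Gi capacity (ignored by the driver) are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: print a static PV for a whole EFS volume, optionally through an
# access point (volumeHandle <fs-id>::<access-point-id>).
# Assumption: encryptInTransit is the upstream driver's volume attribute.
# Usage: efs_static_pv <pv-name> <fs-id> [access-point-id] [true|false]
efs_static_pv() {
  local name="$1" fs_id="$2" ap_id="${3:-}" encrypt="${4:-true}" handle
  if [[ "$encrypt" != true && "$encrypt" != false ]]; then
    echo "encryptInTransit must be true or false" >&2
    return 1
  fi
  handle="$fs_id"
  if [[ -n "$ap_id" ]]; then
    handle="${fs_id}::${ap_id}"
  fi
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: efs.csi.aws.com
    volumeHandle: ${handle}
    volumeAttributes:
      encryptInTransit: "${encrypt}"
EOF
}
```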

If you have problems setting up static PVs, see AWS EFS troubleshooting.

5.4.9. Amazon Elastic File Storage security

The following information is important for Amazon Elastic File Storage (Amazon EFS) security.

When using access points, for example, by using dynamic provisioning as described earlier, Amazon automatically replaces GIDs on files with the GID of the access point. In addition, EFS considers the user ID, group ID, and secondary group IDs of the access point when evaluating file system permissions. EFS ignores the NFS client’s IDs. For more information about access points, see https://docs.aws.amazon.com/efs/latest/ug/efs-access-points.html.

As a consequence, EFS volumes silently ignore FSGroup; OpenShift Dedicated is not able to replace the GIDs of files on the volume with FSGroup. Any pod that can access a mounted EFS access point can access any file on it.

Unrelated to this, encryption in transit is enabled by default. For more information, see https://docs.aws.amazon.com/efs/latest/ug/encryption-in-transit.html.

5.4.10. AWS EFS storage CSI usage metrics

5.4.10.1. Usage metrics overview

Amazon Web Services (AWS) Elastic File System (EFS) storage Container Storage Interface (CSI) usage metrics allow you to monitor how much space is used by either dynamically or statically provisioned EFS volumes.

This feature is disabled by default because turning on metrics can lead to performance degradation.

The AWS EFS usage metrics feature collects volume metrics in the AWS EFS CSI Driver by recursively walking through the files in the volume. Because this effort can degrade performance, administrators must explicitly enable this feature.

5.4.10.2. Enabling usage metrics using the web console

To enable Amazon Web Services (AWS) Elastic File System (EFS) storage Container Storage Interface (CSI) usage metrics using the web console:

- Click Administration > CustomResourceDefinitions.

- On the CustomResourceDefinitions page, next to the Name dropdown box, type clustercsidriver.

- Click CRD ClusterCSIDriver.

- Click the YAML tab.

- Under spec.aws.efsVolumeMetrics.state, set the value to RecursiveWalk. RecursiveWalk indicates that volume metrics collection in the AWS EFS CSI Driver is performed by recursively walking through the files in the volume.

Example ClusterCSIDriver efs.csi.aws.com YAML file

- Optional: To define how the recursive walk operates, you can also set the following fields:

  - refreshPeriodMinutes: Specifies the refresh frequency for volume metrics in minutes. If this field is left blank, a reasonable default is chosen, which is subject to change over time. The current default is 240 minutes. The valid range is 1 to 43,200 minutes.

  - fsRateLimit: Defines the rate limit for processing volume metrics in goroutines per file system. If this field is left blank, a reasonable default is chosen, which is subject to change over time. The current default is 5 goroutines. The valid range is 1 to 100 goroutines.

- Click Save.

To disable AWS EFS CSI usage metrics, use the preceding procedure, but for spec.aws.efsVolumeMetrics.state, change the value from RecursiveWalk to Disabled.

5.4.10.3. Enabling usage metrics using the CLI

To enable Amazon Web Services (AWS) Elastic File System (EFS) storage Container Storage Interface (CSI) usage metrics using the CLI:

Edit ClusterCSIDriver by running the following command:

$ oc edit clustercsidriver efs.csi.aws.com

Under spec.aws.efsVolumeMetrics.state, set the value to RecursiveWalk. RecursiveWalk indicates that volume metrics collection in the AWS EFS CSI Driver is performed by recursively walking through the files in the volume.

Example ClusterCSIDriver efs.csi.aws.com YAML file

Optional: To define how the recursive walk operates, you can also set the following fields:

- refreshPeriodMinutes: Specifies the refresh frequency for volume metrics in minutes. If this field is left blank, a reasonable default is chosen, which is subject to change over time. The current default is 240 minutes. The valid range is 1 to 43,200 minutes.

- fsRateLimit: Defines the rate limit for processing volume metrics in goroutines per file system. If this field is left blank, a reasonable default is chosen, which is subject to change over time. The current default is 5 goroutines. The valid range is 1 to 100 goroutines.

Save the changes to the efs.csi.aws.com object.

To disable AWS EFS CSI usage metrics, use the preceding procedure, but for spec.aws.efsVolumeMetrics.state, change the value from RecursiveWalk to Disabled.
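As an alternative to oc edit, the same change can be applied non-interactively with oc patch. The following Bash sketch builds the merge patch and rejects values outside the documented ranges. It assumes that the settings live under spec.driverConfig.aws with driverType AWS (the procedure above abbreviates the path as spec.aws); verify the exact path with oc explain clustercsidriver.spec.driverConfig.aws before using it. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build a JSON merge patch that enables RecursiveWalk usage metrics.
# Ranges checked: refreshPeriodMinutes 1-43200, fsRateLimit 1-100.
# Assumption: fields live under spec.driverConfig.aws (driverType: AWS).
# Usage: efs_metrics_patch [refreshPeriodMinutes] [fsRateLimit]
efs_metrics_patch() {
  local refresh="${1:-}" rate="${2:-}" walk="" metrics
  if [[ -n "$refresh" ]]; then
    if [[ ! "$refresh" =~ ^[1-9][0-9]*$ ]] || (( refresh > 43200 )); then
      echo "refreshPeriodMinutes must be between 1 and 43200" >&2
      return 1
    fi
    walk="\"refreshPeriodMinutes\":${refresh}"
  fi
  if [[ -n "$rate" ]]; then
    if [[ ! "$rate" =~ ^[1-9][0-9]*$ ]] || (( rate > 100 )); then
      echo "fsRateLimit must be between 1 and 100" >&2
      return 1
    fi
    walk+="${walk:+,}\"fsRateLimit\":${rate}"
  fi
  metrics='"state":"RecursiveWalk"'
  if [[ -n "$walk" ]]; then
    metrics+=",\"recursiveWalk\":{${walk}}"
  fi
  printf '{"spec":{"driverConfig":{"driverType":"AWS","aws":{"efsVolumeMetrics":{%s}}}}}\n' "$metrics"
}

# Example:
#   oc patch clustercsidriver efs.csi.aws.com --type=merge -p "$(efs_metrics_patch 240 5)"
```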

5.4.11. Amazon Elastic File Storage troubleshooting

The following information provides guidance on how to troubleshoot issues with Amazon Elastic File Storage (Amazon EFS):

- The AWS EFS Operator and CSI driver run in the openshift-cluster-csi-drivers namespace. To gather the logs of the AWS EFS Operator and CSI driver, run the following command:

$ oc adm must-gather

Example output

[must-gather ] OUT Using must-gather plugin-in image: quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:125f183d13601537ff15b3239df95d47f0a604da2847b561151fedd699f5e3a5
[must-gather ] OUT namespace/openshift-must-gather-xm4wq created
[must-gather ] OUT clusterrolebinding.rbac.authorization.k8s.io/must-gather-2bd8x created
[must-gather ] OUT pod for plug-in image quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:125f183d13601537ff15b3239df95d47f0a604da2847b561151fedd699f5e3a5 created

- To show AWS EFS Operator errors, view the ClusterCSIDriver status:

$ oc get clustercsidriver efs.csi.aws.com -o yaml

- If a volume cannot be mounted to a pod (as shown in the output of the following command):

(1) Warning message indicating volume not mounted.

This error is frequently caused by AWS dropping packets between an OpenShift Dedicated node and Amazon EFS.

Check that the following are correct:

- AWS firewall and Security Groups

- Networking: port number and IP addresses
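To test the networking items above from the cluster side, you can check whether the NFS port of a mount target answers. The following Bash sketch relies on bash's /dev/tcp redirection and the coreutils timeout command, and is meant to run in a shell that shares a node's network, such as one started with oc debug node/<node-name>. The function name and the example IP address are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check whether TCP port 2049 (NFS) on an EFS mount target is reachable.
# Returns 0 if a TCP connection opens within the timeout, non-zero otherwise.
# Usage: nfs_port_open <mount-target-ip> [port] [timeout-seconds]
nfs_port_open() {
  local ip="$1" port="${2:-2049}" secs="${3:-5}"
  # $0 and $1 inside the inner bash are the IP and port passed as arguments.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$ip" "$port" 2>/dev/null
}

# Example:
#   aws efs describe-mount-targets --file-system-id <fs-id> \
#     --query 'MountTargets[].IpAddress' --output text
#   nfs_port_open 10.0.1.25 && echo "NFS port reachable" || echo "NFS port blocked"
```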

5.4.12. Uninstalling the AWS EFS CSI Driver Operator

All EFS PVs are inaccessible after uninstalling the AWS EFS CSI Driver Operator (a Red Hat operator).

Prerequisites

- Access to the OpenShift Dedicated web console.

Procedure

To uninstall the AWS EFS CSI Driver Operator from the web console:

- Log in to the web console.

- Stop all applications that use AWS EFS PVs.

- Delete all AWS EFS PVs:

  - Click Storage > PersistentVolumeClaims.

  - Select each PVC that is in use by the AWS EFS CSI Driver Operator, click the drop-down menu on the far right of the PVC, and then click Delete PersistentVolumeClaims.

- Uninstall the AWS EFS CSI driver:

  Note: Before you can uninstall the Operator, you must remove the CSI driver first.

  - Click Administration > CustomResourceDefinitions > ClusterCSIDriver.

  - On the Instances tab, for efs.csi.aws.com, on the far left side, click the drop-down menu, and then click Delete ClusterCSIDriver.

  - When prompted, click Delete.

- Uninstall the AWS EFS CSI Operator:

  - Click Ecosystem > Installed Operators.

  - On the Installed Operators page, scroll or type AWS EFS CSI into the Search by name box to find the Operator, and then click it.

  - On the upper right of the Installed Operators > Operator details page, click Actions > Uninstall Operator.

  - When prompted on the Uninstall Operator window, click the Uninstall button to remove the Operator from the namespace. Any applications deployed by the Operator on the cluster need to be cleaned up manually.

  After uninstalling, the AWS EFS CSI Driver Operator is no longer listed in the Installed Operators section of the web console.

Before you can destroy a cluster (openshift-install destroy cluster), you must delete the EFS volume in AWS. An OpenShift Dedicated cluster cannot be destroyed when there is an EFS volume that uses the cluster’s VPC. Amazon does not allow deletion of such a VPC.
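Before starting the uninstall, it can help to list which PVs the EFS driver owns and which claims are bound to them. The following Bash sketch filters oc get pv output produced with custom columns; the function name is hypothetical, and PVs that are not CSI volumes show <none> in the driver column.

```shell
#!/usr/bin/env bash
# Sketch: print "<pv-name> <namespace>/<claim>" for every PV whose CSI driver
# is efs.csi.aws.com. Reads the output of the oc command shown below on stdin.
efs_pvs_from_columns() {
  awk '$2 == "efs.csi.aws.com" { print $1, $3 "/" $4 }'
}

# Example:
#   oc get pv --no-headers \
#     -o custom-columns=NAME:.metadata.name,DRIVER:.spec.csi.driver,NAMESPACE:.spec.claimRef.namespace,CLAIM:.spec.claimRef.name \
#     | efs_pvs_from_columns
```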

5.5. GCP PD CSI Driver Operator

5.5.1. Overview

OpenShift Dedicated can provision persistent volumes (PVs) using the Container Storage Interface (CSI) driver for Google Cloud Platform (GCP) persistent disk (PD) storage.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a Container Storage Interface (CSI) Operator and driver.

To create CSI-provisioned persistent volumes (PVs) that mount to GCP PD storage assets, OpenShift Dedicated installs the GCP PD CSI Driver Operator and the GCP PD CSI driver by default in the openshift-cluster-csi-drivers namespace.

- GCP PD CSI Driver Operator: By default, the Operator provides a storage class that you can use to create PVCs. You can disable this default storage class if desired (see Managing the default storage class). You also have the option to create the GCP PD storage class as described in Persistent storage using GCE Persistent Disk.

- GCP PD driver: The driver enables you to create and mount GCP PD PVs.

The GCP PD CSI driver supports the C3 instance type for bare metal and the N4 machine series. The C3 instance type and N4 machine series support hyperdisk-balanced disks. For more information, see Section C3 instance type for bare metal and N4 machine series.

5.5.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Dedicated users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.

5.5.3. Reducing permissions while using the GCP PD CSI Driver Operator

The default installation allows the Google Cloud Platform (GCP) persistent disk (PD) Container Storage Interface (CSI) Driver to impersonate any service account in the Google Cloud project. You can reduce the scope of permissions granted to the GCP PD CSI Driver service account in your Google Cloud project to only the required node service accounts.

To reduce permissions, grant the iam.serviceAccountUser role to the control plane and compute node service accounts, and then remove the iam.serviceAccountUser role from the project-wide service account, thus reducing the scope of the permission.

Reducing permissions only applies to GCP clusters using Workload Identity Federation (WIF).

Procedure

Grant the scoped iam.serviceAccountUser role for the node service accounts by running the following Bash commands:

gcloud iam service-accounts add-iam-policy-binding "${MASTER_NODE_SA}" --project="${GOOGLE_PROJECT_ID}" --member="serviceAccount:${SERVICE_ACCOUNT_EMAIL}" --role="roles/iam.serviceAccountUser" --condition=None
gcloud iam service-accounts add-iam-policy-binding "${WORKER_NODE_SA}" --project="${GOOGLE_PROJECT_ID}" --member="serviceAccount:${SERVICE_ACCOUNT_EMAIL}" --role="roles/iam.serviceAccountUser" --condition=None

- GOOGLE_PROJECT_ID: The unique ID of your Google Cloud project.

- SERVICE_ACCOUNT_EMAIL: The email address of the member (the user or service account) that is being granted the new permissions. On WIF clusters, Google Cloud has a default service account for the CSI driver that is named after the cluster, for example: ${CLUSTER_NAME}-openshift-gcp-pd-csi-*.

- MASTER_NODE_SA: The email address of the service account used by your cluster's master nodes.

- WORKER_NODE_SA: The email address of the service account used by your cluster's worker nodes.

Remove the project-level iam.serviceAccountUser role from the binding created by the installation program by running the following Bash command:

gcloud projects remove-iam-policy-binding "${GOOGLE_PROJECT_ID}" --member="serviceAccount:${SERVICE_ACCOUNT_EMAIL}" --role="roles/iam.serviceAccountUser" --condition=None

- SERVICE_ACCOUNT_EMAIL: The email address of the account losing the permission, for example, my-app-sa@my-project.iam.gserviceaccount.com. On WIF clusters, Google Cloud has a default service account for the CSI driver that is named after the cluster, for example: ${CLUSTER_NAME}-openshift-gcp-pd-csi-*.

- GOOGLE_PROJECT_ID: The unique ID of the Google Cloud project where the binding exists, for example, prod-data-789.
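After both steps, you can confirm that the project-wide binding is gone and that the scoped bindings exist. The following Bash sketch wraps the gcloud get-iam-policy commands with the global --flatten, --filter, and --format flags. The function names are hypothetical, the filter expressions are an assumption about how best to match the binding, and setting GCLOUD=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: verify the scoped and project-level iam.serviceAccountUser bindings.
# Assumption: gcloud is authenticated; GCLOUD may be set to "echo" for a dry run.
GCLOUD="${GCLOUD:-gcloud}"

# Prints the member if it still holds roles/iam.serviceAccountUser at project
# level; empty output means the project-wide binding was removed.
project_sa_user_binding() {
  local project="$1" sa_email="$2"
  "$GCLOUD" projects get-iam-policy "$project" \
    --flatten="bindings[].members" \
    --filter="bindings.role:roles/iam.serviceAccountUser AND bindings.members:serviceAccount:${sa_email}" \
    --format="value(bindings.members)"
}

# Prints the members that hold roles/iam.serviceAccountUser on one node
# service account; the CSI driver service account is expected to appear.
node_sa_user_members() {
  local node_sa="$1"
  "$GCLOUD" iam service-accounts get-iam-policy "$node_sa" \
    --flatten="bindings[].members" \
    --filter="bindings.role:roles/iam.serviceAccountUser" \
    --format="value(bindings.members)"
}

# Example:
#   project_sa_user_binding "${GOOGLE_PROJECT_ID}" "${SERVICE_ACCOUNT_EMAIL}"
#   node_sa_user_members "${MASTER_NODE_SA}"
#   node_sa_user_members "${WORKER_NODE_SA}"
```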

5.5.4. GCP PD CSI driver storage class parameters

The Google Cloud Platform (GCP) persistent disk (PD) Container Storage Interface (CSI) driver uses the CSI external-provisioner sidecar as a controller. This is a separate helper container that is deployed with the CSI driver. The sidecar manages persistent volumes (PVs) by triggering the CreateVolume operation.

The GCP PD CSI driver uses the csi.storage.k8s.io/fstype parameter key to support dynamic provisioning. The following table describes all the GCP PD CSI storage class parameters that are supported by OpenShift Dedicated.

| Parameter | Values | Default | Description |
|---|---|---|---|
| | | | Allows you to choose between standard PVs or solid-state-drive PVs. The driver does not validate the value, so all possible values are accepted. |
| | | | Allows you to choose between zonal or regional PVs. |
| | Fully qualified resource identifier for the key to use to encrypt new disks. | Empty string | Uses customer-managed encryption keys (CMEK) to encrypt new disks. |
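A storage class that combines these parameters might look like the output of the following Bash sketch. It assumes the parameter names used by the upstream GCP PD CSI driver, type and replication-type, together with the csi.storage.k8s.io/fstype key described above; the function name, the pd-standard and none defaults, and ext4 are illustrative. Because the driver does not validate the disk type, the sketch only checks the replication value.

```shell
#!/usr/bin/env bash
# Sketch: print a GCP PD CSI StorageClass.
# Assumption: parameter names follow the upstream GCP PD CSI driver.
# Usage: gcp_pd_storage_class <name> [disk-type] [replication-type]
gcp_pd_storage_class() {
  local name="$1" type="${2:-pd-standard}" replication="${3:-none}"
  case "$replication" in
    none|regional-pd) ;;   # zonal or regional PVs
    *) echo "replication-type must be none or regional-pd" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${name}
provisioner: pd.csi.storage.gke.io
parameters:
  type: ${type}
  replication-type: ${replication}
  csi.storage.k8s.io/fstype: ext4
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
EOF
}
```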

5.5.5. C3 instance type for bare metal and N4 machine series

5.5.5.1. C3 and N4 instance type limitations

The GCP PD CSI driver support for the C3 instance type for bare metal and N4 machine series has the following limitations:

- You must set the volume size to at least 4Gi when you create hyperdisk-balanced disks. OpenShift Dedicated does not round up to the minimum size, so you must specify the correct size yourself.

- Cloning volumes is not supported when using storage pools.

- For cloning or resizing, the original volume size of hyperdisk-balanced disks must be 6Gi or greater.

The default storage class is standard-csi.

Important: You need to manually create a storage class.

For information about creating the storage class, see Step 2 in Section Setting up hyperdisk-balanced disks.
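The size limits above are easy to miss because OpenShift Dedicated does not round sizes up. A small Bash sketch, with hypothetical function names and handling only Mi, Gi, and Ti quantities, can check a requested size before you create, clone, or resize a hyperdisk-balanced volume.

```shell
#!/usr/bin/env bash
# Sketch: check hyperdisk-balanced sizes against the documented limits:
# at least 4Gi to create; original volume at least 6Gi to clone or resize.

# Converts a quantity such as 4Gi to MiB (only Mi, Gi, and Ti are handled).
quantity_to_mib() {
  local q="$1" n unit
  if [[ "$q" =~ ^([0-9]+)(Mi|Gi|Ti)$ ]]; then
    n=$((10#${BASH_REMATCH[1]}))   # force base 10 so "08" is not octal
    unit="${BASH_REMATCH[2]}"
  else
    echo "unsupported quantity: $q" >&2
    return 1
  fi
  case "$unit" in
    Mi) echo "$n" ;;
    Gi) echo $(( n * 1024 )) ;;
    Ti) echo $(( n * 1024 * 1024 )) ;;
  esac
}

# Usage: check_hyperdisk_size <quantity> [create|clone|resize]
check_hyperdisk_size() {
  local q="$1" op="${2:-create}" mib min_gib
  mib="$(quantity_to_mib "$q")" || return 1
  case "$op" in
    create) min_gib=4 ;;
    clone|resize) min_gib=6 ;;
    *) echo "unknown operation: $op" >&2; return 1 ;;
  esac
  if (( mib < min_gib * 1024 )); then
    echo "hyperdisk-balanced ${op} needs at least ${min_gib}Gi, got ${q}" >&2
    return 1
  fi
}
```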

5.5.5.2. Storage pools for hyperdisk-balanced disks overview

Hyperdisk storage pools can be used with Compute Engine for large-scale storage. A hyperdisk storage pool is a purchased collection of capacity, throughput, and IOPS, which you can then provision for your applications as needed. You can use hyperdisk storage pools to create and manage disks in pools and use the disks across multiple workloads. By managing disks in aggregate, you can save costs while achieving expected capacity and performance growth. By using only the storage that you need in hyperdisk storage pools, you reduce the complexity of forecasting capacity and reduce management by going from managing hundreds of disks to managing a single storage pool.

To set up storage pools, see Setting up hyperdisk-balanced disks.

5.5.5.3. Setting up hyperdisk-balanced disks

Prerequisites

- Access to the cluster with administrative privileges

Procedure

Complete the following steps to set up hyperdisk-balanced disks:

- Create an OpenShift Dedicated cluster on Google Cloud with attached disks provisioned with hyperdisk-balanced disks. This can be achieved by provisioning the cluster with compute node types that support hyperdisk-balanced disks, such as the C3 and N4 machine series from Google Cloud.

After the OpenShift Dedicated cluster is ready, open the OpenShift web console and go to the Storage section to create a storage class that specifies the hyperdisk-balanced disk type:

Example StorageClass YAML file

(1) Specify the name for your storage class. In this example, the name is hyperdisk-sc.

(2) Specify the GCP CSI provisioner as pd.csi.storage.gke.io.

(3) If using storage pools, specify a list of specific storage pools that you want to use in the following format: projects/PROJECT_ID/zones/ZONE/storagePools/STORAGE_POOL_NAME.

(4) Specify the disk type as hyperdisk-balanced.

Note: If you use storage pools, you must first create a Hyperdisk Storage Pool of the type "Hyperdisk Balanced" in the Google Cloud console before you reference it in the OpenShift storage class. Create the Hyperdisk Storage Pool in the same zone as the Hyperdisk-capable compute nodes in your cluster configuration. For more information about creating a Hyperdisk Storage Pool, see Create a Hyperdisk Storage Pool in the Google Cloud documentation.
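The storage class for this step could be generated with a Bash sketch like the following, which mirrors callouts 1 through 4. It assumes that the pool parameter is named storage-pools, as in the upstream GCP PD CSI driver, and that it accepts a comma-separated list; WaitForFirstConsumer, allowVolumeExpansion, and the function name are illustrative choices.

```shell
#!/usr/bin/env bash
# Sketch: print a StorageClass for hyperdisk-balanced disks, optionally
# pinned to storage pools.
# Assumption: the pool parameter is storage-pools (comma-separated list).
# Usage: hyperdisk_storage_class <name> [projects/P/zones/Z/storagePools/NAME...]
hyperdisk_storage_class() {
  local name="$1" pool pools
  shift
  for pool in "$@"; do
    if [[ ! "$pool" =~ ^projects/[^/]+/zones/[^/]+/storagePools/[^/]+$ ]]; then
      echo "invalid storage pool: $pool" >&2
      return 1
    fi
  done
  pools="$(IFS=,; echo "$*")"   # join remaining arguments with commas
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${name}
provisioner: pd.csi.storage.gke.io
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
parameters:
  type: hyperdisk-balanced
EOF
  if [[ -n "$pools" ]]; then
    echo "  storage-pools: ${pools}"
  fi
}
```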

Create a persistent volume claim (PVC) that uses the hyperdisk-specific storage class using the following example YAML file:

Example PVC YAML file

Create a deployment that uses the PVC that you just created. Using a deployment helps ensure that your application has access to the persistent storage even after the pod restarts or is rescheduled:

- Ensure a node pool with the specified machine series is up and running before creating the deployment. Otherwise, the pod fails to schedule.

Use the following example YAML file to create the deployment:

Example deployment YAML file

Confirm that the deployment was successfully created by running the following command:

$ oc get deployment

Example output

NAME       READY   UP-TO-DATE   AVAILABLE   AGE
postgres   0/1     1            0           42s

It might take a few minutes for hyperdisk instances to complete provisioning and display a READY status.

Confirm that PVC my-pvc has been successfully bound to a persistent volume (PV) by running the following command:

$ oc get pvc my-pvc

Example output

NAME     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
my-pvc   Bound    pvc-1ff52479-4c81-4481-aa1d-b21c8f8860c6   2Ti        RWO            hyperdisk-sc   <unset>                 2m24s

Confirm the expected configuration of your hyperdisk-balanced disk:

gcloud compute disks list

$ gcloud compute disks listCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

NAME                                      LOCATION        LOCATION_SCOPE   SIZE_GB   TYPE                 STATUS
instance-20240914-173145-boot             us-central1-a   zone             150       pd-standard          READY
instance-20240914-173145-data-workspace   us-central1-a   zone             100       pd-balanced          READY
c4a-rhel-vm                               us-central1-a   zone             50        hyperdisk-balanced   READY

In this example output, c4a-rhel-vm is the hyperdisk-balanced disk.
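To check the disk that backs a specific PV instead of scanning the whole list, you can read the PV's CSI volume handle with oc get pv <pv-name> -o jsonpath='{.spec.csi.volumeHandle}'. This sketch assumes that, for the GCP PD CSI driver, the handle has the form projects/<project>/zones/<zone>/disks/<disk-name>; it handles zonal disks only, and the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that split a zonal PD CSI volume handle of the
# assumed form projects/<project>/zones/<zone>/disks/<disk-name>.

volume_handle_zone() {
  local rest=${1#*/zones/}      # drop everything up to "/zones/"
  printf '%s\n' "${rest%%/*}"   # keep the text before the next slash
}

volume_handle_disk() {
  printf '%s\n' "${1##*/}"      # the disk name is the last path segment
}

# Example usage (the PV name comes from the oc get pvc example output):
#   handle=$(oc get pv pvc-1ff52479-4c81-4481-aa1d-b21c8f8860c6 -o jsonpath='{.spec.csi.volumeHandle}')
#   gcloud compute disks describe "$(volume_handle_disk "$handle")" \
#     --zone="$(volume_handle_zone "$handle")" --format="value(type)"
```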

If you use storage pools, check that the volume is provisioned as specified in your storage class and PVC by running the following command:

$ gcloud compute storage-pools list-disks pool-us-east4-c --zone=us-east4-c

Example output

NAME                                       STATUS   PROVISIONED_IOPS   PROVISIONED_THROUGHPUT   SIZE_GB
pvc-1ff52479-4c81-4481-aa1d-b21c8f8860c6   READY    3000               140                      2048

5.5.6. Creating a custom-encrypted persistent volume

When you create a PersistentVolumeClaim object, OpenShift Dedicated provisions a new persistent volume (PV) and creates a PersistentVolume object. You can add a custom encryption key in Google Cloud Platform (GCP) to protect a PV in your cluster by encrypting the newly created PV.

For encryption, the newly attached PV that you create uses customer-managed encryption keys (CMEK), backed by a new or existing Google Cloud Key Management Service (KMS) key.

Prerequisites

- You are logged in to a running OpenShift Dedicated cluster.

- You have created a Cloud KMS key ring and key version.

For more information about CMEK and Cloud KMS resources, see Using customer-managed encryption keys (CMEK).

Procedure

To create a custom-encrypted PV, complete the following steps:

Create a storage class with the Cloud KMS key. The following example enables dynamic provisioning of encrypted volumes:

In the storage class, the disk-encryption-kms-key parameter must be the resource identifier for the key that is used to encrypt new disks. Values are case-sensitive. For more information about providing key ID values, see Retrieving a resource’s ID and Getting a Cloud KMS resource ID.
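A storage class of this kind might look like the following sketch. The name csi-gce-pd-cmek, the provisioner pd.csi.storage.gke.io, and the disk-encryption-kms-key parameter come from this procedure. The pd-standard disk type, the WaitForFirstConsumer binding mode, and the volume expansion setting are illustrative assumptions, and the angle-bracket values are placeholders for your own Cloud KMS key.

```yaml
# Sketch only: angle-bracket values are placeholders for your Cloud KMS key.
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: csi-gce-pd-cmek
provisioner: pd.csi.storage.gke.io
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
parameters:
  type: pd-standard
  # Full Cloud KMS key resource ID; values are case-sensitive.
  disk-encryption-kms-key: projects/<key-project-id>/locations/<location>/keyRings/<key-ring>/cryptoKeys/<key>
```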

Note: You cannot add the disk-encryption-kms-key parameter to an existing storage class. However, you can delete the storage class and recreate it with the same name and a different set of parameters. If you do this, the provisioner of the existing class must be pd.csi.storage.gke.io.

Deploy the storage class on your OpenShift Dedicated cluster by applying its YAML file with the oc apply -f command, and then verify its configuration by running the following command:

$ oc describe storageclass csi-gce-pd-cmek

Example output

Create a file named pvc.yaml whose storageClassName field matches the name of the storage class object that you created in the previous step.

Note: If you marked the new storage class as default, you can omit the storageClassName field.

Apply the PVC on your cluster:

$ oc apply -f pvc.yaml

Get the status of your PVC and verify that it is created and bound to a newly provisioned PV:

$ oc get pvc

Example output

NAME     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
podpvc   Bound    pvc-e36abf50-84f3-11e8-8538-42010a800002   10Gi       RWO            csi-gce-pd-cmek   9s

Note: If your storage class has the volumeBindingMode field set to WaitForFirstConsumer, you must create a pod to use the PVC before you can verify it.
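For example, a minimal pod such as the following sketch consumes the PVC so that a WaitForFirstConsumer storage class provisions and binds the volume. The claim name podpvc matches the example output; the pod name, the image, and the mount path are assumptions.

```yaml
# Sketch only: a throwaway pod whose sole purpose is to consume the PVC.
apiVersion: v1
kind: Pod
metadata:
  name: cmek-pvc-consumer   # hypothetical name
spec:
  containers:
    - name: shell
      image: registry.access.redhat.com/ubi9/ubi-minimal
      command: ["sleep", "infinity"]   # keep the pod running
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: podpvc
```

After the pod is scheduled, run oc get pvc again to confirm that the PVC is Bound.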

Your CMEK-protected PV is now ready to use with your OpenShift Dedicated cluster.

5.6. Google Cloud Filestore CSI Driver Operator

5.6.1. Overview

OpenShift Dedicated can provision persistent volumes (PVs) by using the Container Storage Interface (CSI) driver for Google Cloud Platform (GCP) Filestore.

Familiarity with persistent storage and configuring CSI volumes is recommended when working with a CSI Operator and driver.

To create CSI-provisioned PVs that mount to Google Cloud Filestore Storage assets, you install the Google Cloud Filestore CSI Driver Operator and the Google Cloud Filestore CSI driver in the openshift-cluster-csi-drivers namespace.

- The Google Cloud Filestore CSI Driver Operator does not provide a storage class by default, but you can create one if needed. The Google Cloud Filestore CSI Driver Operator supports dynamic volume provisioning by allowing storage volumes to be created on demand, eliminating the need for cluster administrators to pre-provision storage.

- The Google Cloud Filestore CSI driver enables you to create and mount Google Cloud Filestore PVs.
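Because the Operator does not create a storage class, you can define one yourself after the Operator is installed. The following is a sketch only: the class name and network value are hypothetical, and the connect-mode and network parameters are assumed from the upstream Google Cloud Filestore CSI driver, so confirm them against the driver documentation for your version.

```yaml
# Sketch only: the class name and network value are hypothetical.
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: filestore-csi
provisioner: filestore.csi.storage.gke.io
parameters:
  connect-mode: DIRECT_PEERING
  network: <vpc-network-name>   # the VPC network that your cluster uses
allowVolumeExpansion: true
volumeBindingMode: WaitForFirstConsumer
```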

OpenShift Dedicated supports GCP Workload Identity for Google Cloud Filestore. Workload Identity allows users to access Google Cloud resources by using federated identities instead of a service account key. GCP Workload Identity must be enabled globally during installation, and then configured for the Google Cloud Filestore CSI Driver Operator.

5.6.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plugins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Dedicated users storage options, such as volume snapshots, that are not possible with in-tree volume plugins.

5.6.3. Installing the Google Cloud Filestore CSI Driver Operator

5.6.3.1. Preparing to install the Google Cloud Filestore CSI Driver Operator with Workload Identity

If you plan to use GCP Workload Identity with Google Cloud Filestore, you must obtain certain parameters that you will use during the installation of the Google Cloud Filestore Container Storage Interface (CSI) Driver Operator.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

Procedure

To prepare to install the Google Cloud Filestore CSI Driver Operator with Workload Identity:

Obtain the project number:

Obtain the project ID by running the following command:

$ export PROJECT_ID=$(oc get infrastructure/cluster -o jsonpath='{.status.platformStatus.gcp.projectID}')

Obtain the project number, using the project ID, by running the following command:

$ gcloud projects describe $PROJECT_ID --format="value(projectNumber)"

Find the identity pool ID and the provider ID:

During cluster installation, the names of these resources are provided to the Cloud Credential Operator utility (ccoctl) with the --name parameter. See "Creating Google Cloud resources with the Cloud Credential Operator utility".

Create Workload Identity resources for the Google Cloud Filestore Operator:

Create a CredentialsRequest file using the following example file:

Example Credentials Request YAML file

Use the CredentialsRequest file to create a Google Cloud service account by running the following command:

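$ ./ccoctl gcp create-service-accounts --name=<filestore-service-account> \
  --workload-identity-pool=<workload-identity-pool> \
  --workload-identity-provider=<workload-identity-provider> \
  --project=<project-id> \
  --credentials-requests-dir=/tmp/credreq

Replace <filestore-service-account> with a name of your choice, <workload-identity-pool> and <workload-identity-provider> with the pool ID and provider ID from step 2, and <project-id> with the project ID from step 1. The --credentials-requests-dir value is the directory that contains the CredentialsRequest file.

Example output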

1 The current directory.

Find the service account email of the newly created service account by running the following command:

$ cat /tmp/install-dir/manifests/openshift-cluster-csi-drivers-gcp-filestore-cloud-credentials-credentials.yaml | yq '.data["service_account.json"]' | base64 -d | jq -r '.service_account_impersonation_url'

Example output

https://iamcredentials.googleapis.com/v1/projects/-/serviceAccounts/filestore-se-openshift-g-ch8cm@openshift-gce-devel.iam.gserviceaccount.com:generateAccessToken

In this example output, the service account email is filestore-se-openshift-g-ch8cm@openshift-gce-devel.iam.gserviceaccount.com.
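If you prefer to extract the email in a script, note that it is the path segment between serviceAccounts/ and :generateAccessToken in the impersonation URL. The following Bash function is a hypothetical helper that assumes the URL format shown in the example output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the service account email contained in an
# impersonation URL of the assumed form
#   https://iamcredentials.googleapis.com/v1/projects/-/serviceAccounts/<email>:generateAccessToken
# A surrounding pair of double quotes, as printed by jq without -r, is removed first.
sa_email_from_url() {
  local url=${1#\"}
  url=${url%\"}
  local email=${url##*/serviceAccounts/}   # drop everything up to the email
  printf '%s\n' "${email%%:*}"             # drop ":generateAccessToken"
}

# Example usage with the manifest generated by ccoctl:
#   manifest=/tmp/install-dir/manifests/openshift-cluster-csi-drivers-gcp-filestore-cloud-credentials-credentials.yaml
#   url=$(yq '.data["service_account.json"]' "$manifest" | base64 -d | jq -r '.service_account_impersonation_url')
#   sa_email_from_url "$url"
```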

Results

You now have the following parameters that you need to install the Google Cloud Filestore CSI Driver Operator:

- Project number - from Step 1.b

- Pool ID - from Step 2

- Provider ID - from Step 2

- Service account email - from Step 3.c
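Before you enter these values in the web console, you might sanity-check them. The following Bash sketch is hypothetical: it assumes that the project number is all digits and that the service account is a user-managed account whose email ends in .iam.gserviceaccount.com, as in the example output. It only checks that the pool ID and provider ID are non-empty.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for the four Workload Identity values.

is_project_number() {
  # Project numbers are all digits.
  [[ $1 =~ ^[0-9]+$ ]]
}

is_service_account_email() {
  # User-managed service account emails end in .iam.gserviceaccount.com.
  [[ $1 =~ ^[^@[:space:]]+@[^@[:space:]]+\.iam\.gserviceaccount\.com$ ]]
}

# Usage: check_filestore_wi_params NUMBER POOL_ID PROVIDER_ID SA_EMAIL
# Prints one message per problem to stderr and fails if any check fails.
check_filestore_wi_params() {
  local number=$1 pool=$2 provider=$3 email=$4 ok=0
  is_project_number "$number" || { echo "project number must be numeric" >&2; ok=1; }
  [ -n "$pool" ] || { echo "pool ID is empty" >&2; ok=1; }
  [ -n "$provider" ] || { echo "provider ID is empty" >&2; ok=1; }
  is_service_account_email "$email" || { echo "unexpected service account email" >&2; ok=1; }
  return "$ok"
}
```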

5.6.3.2. Installing the Google Cloud Filestore CSI Driver Operator

The Google Cloud Filestore Container Storage Interface (CSI) Driver Operator is not installed in OpenShift Dedicated by default. Use the following procedure to install the Google Cloud Filestore CSI Driver Operator in your cluster.

Prerequisites

- Access to the OpenShift Dedicated web console.

- If you use GCP Workload Identity, you need the Workload Identity parameters that you obtained in the preceding section, "Preparing to install the Google Cloud Filestore CSI Driver Operator with Workload Identity".

Procedure

To install the Google Cloud Filestore CSI Driver Operator from the web console:

- Log in to the OpenShift Cluster Manager.

- Select your cluster.

- Click Open console and log in with your credentials.

Enable the Filestore API in the GCE project by running the following command:

$ gcloud services enable file.googleapis.com --project <my_gce_project>

Replace <my_gce_project> with your Google Cloud project.

You can also enable the API by using the Google Cloud web console.

Install the Google Cloud Filestore CSI Operator:

- Click Ecosystem → Software Catalog.

- Locate the Google Cloud Filestore CSI Operator by typing Google Cloud Filestore in the filter box.

- Click the Google Cloud Filestore CSI Driver Operator button.

- On the Google Cloud Filestore CSI Driver Operator page, click Install.

On the Install Operator page, ensure that:

- All namespaces on the cluster (default) is selected.

- Installed Namespace is set to openshift-cluster-csi-drivers.

If you use GCP Workload Identity, enter the values that you obtained in the preceding section, "Preparing to install the Google Cloud Filestore CSI Driver Operator with Workload Identity", in the following fields:

- Google Cloud Project Number

- Google Cloud Pool ID

- Google Cloud Provider ID

- Google Cloud Service Account Email

Click Install.

After the installation finishes, the Google Cloud Filestore CSI Operator is listed in the Installed Operators section of the web console.

-