
2.2. Volume drivers

To use different volume drivers for the cinder-volume service, use the parameters described in these sections.

To set a volume driver, use the volume_driver flag. The default is:

volume_driver = cinder.volume.drivers.lvm.LVMISCSIDriver

2.2.1. Ceph RADOS Block Device (RBD)

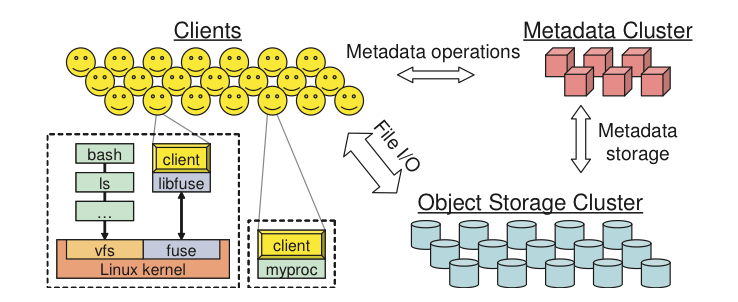

Figure 2.1. Ceph architecture

RADOS

- Object Storage Device (OSD) Daemon. The storage daemon for the RADOS service, which interacts with the OSD (physical or logical storage unit for your data). You must run this daemon on each server in your cluster. For each OSD, you can have an associated hard disk drive. For performance purposes, pool your hard disk drives with RAID arrays, logical volume management (LVM), or B-tree file system (Btrfs) pooling. By default, the following pools are created: data, metadata, and RBD.

- Meta-Data Server (MDS). Stores metadata. MDSs build a POSIX file system on top of objects for Ceph clients. However, if you do not use the Ceph file system, you do not need a metadata server.

- Monitor (MON). A lightweight daemon that handles all communications with external applications and clients. It also provides a consensus for distributed decision making in a Ceph/RADOS cluster. For instance, when you mount a Ceph share on a client, you point to the address of a MON server. It checks the state and the consistency of the data. In an ideal setup, you must run at least three ceph-mon daemons on separate servers.

Ceph developers recommend Btrfs as a file system for storage. XFS might be a better alternative for production environments. The ext4 file system is also compatible but does not exploit the power of Ceph.

If you use Btrfs, ensure that you use the correct version (see Ceph Dependencies).

Ways to store, use, and expose data

- RADOS. Use as an object, default storage mechanism.

- RBD. Use as a block device. The Linux kernel RBD (RADOS block device) driver allows striping a Linux block device over multiple distributed object store data objects. It is compatible with the KVM RBD image.

- CephFS. Use as a file, POSIX-compliant file system.

- RADOS Gateway. OpenStack Object Storage and Amazon-S3 compatible RESTful interface (see RADOS_Gateway).

- librados, and its related C/C++ bindings.

- RBD and QEMU-RBD. Linux kernel and QEMU block devices that stripe data across multiple objects.

Driver options

The volume_tmp_dir option has been deprecated and replaced by image_conversion_dir.

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| rados_connect_timeout = -1 | (IntOpt) Timeout value (in seconds) used when connecting to ceph cluster. If value < 0, no timeout is set and default librados value is used. |
| rbd_ceph_conf = | (StrOpt) Path to the ceph configuration file |
| rbd_flatten_volume_from_snapshot = False | (BoolOpt) Flatten volumes created from snapshots to remove dependency from volume to snapshot |
| rbd_max_clone_depth = 5 | (IntOpt) Maximum number of nested volume clones that are taken before a flatten occurs. Set to 0 to disable cloning. |
| rbd_pool = rbd | (StrOpt) The RADOS pool where rbd volumes are stored |
| rbd_secret_uuid = None | (StrOpt) The libvirt uuid of the secret for the rbd_user volumes |
| rbd_store_chunk_size = 4 | (IntOpt) Volumes will be chunked into objects of this size (in megabytes). |
| rbd_user = None | (StrOpt) The RADOS client name for accessing rbd volumes - only set when using cephx authentication |
| volume_tmp_dir = None | (StrOpt) Directory where temporary image files are stored when the volume driver does not write them directly to the volume. Warning: this option is now deprecated, please use image_conversion_dir instead. |
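To see how the documented defaults combine with operator overrides, the following Python sketch reads RBD options from cinder.conf-style text with the standard configparser module. The option names and defaults come from the table above. The helper names (load_rbd_options, connect_timeout) and the sample values (pool volumes, user cinder, the ceph.conf path) are hypothetical, and this is not how the Block Storage service itself loads its options.

```python
import configparser

# Defaults copied from the driver options table (options whose default
# is None are left out and only appear when the operator sets them).
RBD_DEFAULTS = {
    "rados_connect_timeout": "-1",
    "rbd_ceph_conf": "",
    "rbd_flatten_volume_from_snapshot": "False",
    "rbd_max_clone_depth": "5",
    "rbd_pool": "rbd",
    "rbd_store_chunk_size": "4",
}


def load_rbd_options(conf_text, section="DEFAULT"):
    """Return RBD options, falling back to the documented defaults."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    options = dict(RBD_DEFAULTS)
    if section == "DEFAULT":
        options.update(parser.defaults())
    elif parser.has_section(section):
        options.update(parser[section])
    return options


def connect_timeout(options):
    """Return the timeout in seconds, or None when no timeout is set."""
    value = int(options["rados_connect_timeout"])
    return value if value >= 0 else None


# Hypothetical operator settings.
SAMPLE = """
[DEFAULT]
rbd_pool = volumes
rbd_user = cinder
rbd_ceph_conf = /etc/ceph/ceph.conf
"""

opts = load_rbd_options(SAMPLE)
print(opts["rbd_pool"], opts["rbd_max_clone_depth"], connect_timeout(opts))
```

Options that the sample does not set, such as rbd_max_clone_depth, keep the default from the table.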

2.2.2. Dell EqualLogic volume driver

Supported operations

- Create, delete, attach, and detach volumes.

- Create, list, and delete volume snapshots.

- Clone a volume.

- Multiple instances of Dell EqualLogic Groups or Dell EqualLogic Group Storage Pools and multiple pools on a single array.

- Multiple instances of Dell EqualLogic Groups or Dell EqualLogic Group Storage Pools or multiple pools on a single array.

The settings that the driver uses to reach the EqualLogic Group are defined in the /etc/cinder/cinder.conf file (see Section 2.4, “Block Storage sample configuration files” for reference).

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| eqlx_chap_login = admin | (StrOpt) Existing CHAP account name. Note that this option is deprecated in favour of "chap_username" as specified in cinder/volume/driver.py and will be removed in the next release. |
| eqlx_chap_password = password | (StrOpt) Password for specified CHAP account name. Note that this option is deprecated in favour of "chap_password" as specified in cinder/volume/driver.py and will be removed in the next release. |
| eqlx_cli_max_retries = 5 | (IntOpt) Maximum retry count for reconnection. Default is 5. |
| eqlx_cli_timeout = 30 | (IntOpt) Timeout for the Group Manager cli command execution. Default is 30. |
| eqlx_group_name = group-0 | (StrOpt) Group name to use for creating volumes. Defaults to "group-0". |
| eqlx_pool = default | (StrOpt) Pool in which volumes will be created. Defaults to "default". |
| eqlx_use_chap = False | (BoolOpt) Use CHAP authentication for targets. Note that this option is deprecated in favour of "use_chap_auth" as specified in cinder/volume/driver.py and will be removed in the next release. |

The following sample /etc/cinder/cinder.conf configuration lists the relevant settings for a typical Block Storage service using a single Dell EqualLogic Group:

Example 2.1. Default (single-instance) configuration

- IP_EQLX

- The IP address used to reach the Dell EqualLogic Group through SSH. This field has no default value.

- SAN_UNAME

- The user name to login to the Group manager via SSH at the san_ip. Default user name is grpadmin.

- SAN_PW

- The corresponding password of SAN_UNAME. Not used when san_private_key is set. Default password is password.

- EQLX_GROUP

- The group to be used for a pool where the Block Storage service will create volumes and snapshots. Default group is group-0.

- EQLX_POOL

- The pool where the Block Storage service will create volumes and snapshots. Default pool is default. This option cannot be used for multiple pools utilized by the Block Storage service on a single Dell EqualLogic Group.

- EQLX_UNAME

- The CHAP login account for each volume in a pool, if eqlx_use_chap is set to true. Default account name is chapadmin.

- EQLX_PW

- The corresponding password of EQLX_UNAME. The default password is randomly generated in hexadecimal, so you must set this password manually.

- SAN_KEY_PATH (optional)

- The filename of the private key used for SSH authentication. This provides password-less login to the EqualLogic Group. Not used when san_password is set. There is no default value.

In addition, enable thin provisioning for SAN volumes using the san_thin_provision = true setting.

Example 2.2. Multi back-end Dell EqualLogic configuration

- Thin provisioning for SAN volumes is enabled (san_thin_provision = true). This is recommended when setting up Dell EqualLogic back ends.

- Each Dell EqualLogic back-end configuration ([backend1] and [backend2]) has the same required settings as a single back-end configuration, with the addition of volume_backend_name.

- The san_ssh_port option is set to its default value, 22. This option sets the port used for SSH.

- The ssh_conn_timeout option is also set to its default value, 30. This option sets the timeout in seconds for CLI commands over SSH.

- The IP_EQLX1 and IP_EQLX2 refer to the IP addresses used to reach the Dell EqualLogic Group of backend1 and backend2 through SSH, respectively.

2.2.3. Dell Storage Center iSCSI drivers

Enterprise Manager connection settings and Storage Center options are defined in the cinder.conf file.

Supported operations

- Create, delete, attach (map), and detach (unmap) volumes.

- Create, list, and delete volume snapshots.

- Create a volume from a snapshot.

- Copy an image to a volume.

- Copy a volume to an image.

- Clone a volume.

- Extend a volume.

Extra spec options

The volume type extra spec storagetype:storageprofile, set to the name of a Storage Profile on the Storage Center, allows the use of Storage Profiles other than the default.

For example, the following commands define two volume types that use the High Priority and Low Priority Storage Profiles:

$ cinder type-create "GoldVolumeType"

$ cinder type-key "GoldVolumeType" set storagetype:storageprofile=highpriority

$ cinder type-create "BronzeVolumeType"

$ cinder type-key "BronzeVolumeType" set storagetype:storageprofile=lowpriority

Driver options

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| dell_sc_api_port = 3033 | (IntOpt) Dell API port |
| dell_sc_server_folder = openstack | (StrOpt) Name of the server folder to use on the Storage Center |
| dell_sc_ssn = 64702 | (IntOpt) Storage Center System Serial Number |
| dell_sc_volume_folder = openstack | (StrOpt) Name of the volume folder to use on the Storage Center |

iSCSI configuration

Example 2.3. Sample iSCSI Configuration

- IP_SC

- The IP address used to reach the Dell Enterprise Manager. This field has no default value.

- SAN_UNAME

- The user name to login to the Dell Enterprise Manager at the IP_SC. Default user name is Admin.

- SAN_PW

- The corresponding password of SAN_UNAME. Default password is password.

- ISCSI_IP

- The IP address that the iSCSI daemon is listening on. In this case, ISCSI_IP is the IP address of the Dell Storage Center iSCSI.

- SERIAL

- The Dell Storage Center serial number to use. Default is 64702.

- API_PORT

- The Dell Enterprise Manager API port. Default is 3033.

- SERVFOLDER

- The server folder in Dell Storage Center where the new server definitions are placed.

- VOLFOLDER

- The volume folder in Dell Storage Center where the new volumes are created.

- ISCSI_PORT

- The corresponding port of the Dell Storage Center array. This parameter is optional, and defaults to 3036.

The back end in this example is defined in its own named section (delliscsi). To enable a back end, add its name to the enabled_backends setting. In the case of multiple back ends, enable them by adding their respective names to enabled_backends as a comma-delimited list.
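The comma-delimited format of enabled_backends can be handled with a few lines of Python. This is a minimal sketch: the helper name enable_backend and the second back-end name delliscsi2 are hypothetical, and the function only builds the option value; it does not edit cinder.conf.

```python
def enable_backend(enabled_backends, name):
    """Return the enabled_backends value with `name` added exactly once."""
    # Split the existing comma-delimited value and drop empty entries.
    names = [n.strip() for n in enabled_backends.split(",") if n.strip()]
    if name not in names:
        names.append(name)
    return ",".join(names)


single = enable_backend("", "delliscsi")
multiple = enable_backend(single, "delliscsi2")  # hypothetical second back end
print("enabled_backends = " + multiple)
```

Adding a name that is already listed leaves the value unchanged, so the helper can be called repeatedly.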

2.2.4. EMC VMAX iSCSI and FC drivers

The EMC VMAX drivers, EMCVMAXISCSIDriver and EMCVMAXFCDriver, support the use of EMC VMAX storage arrays under OpenStack Block Storage. They both provide equivalent functions and differ only in support for their respective host attachment methods.

2.2.4.1. System requirements

2.2.4.2. Supported operations

- Create, delete, attach, and detach volumes.

- Create, list, and delete volume snapshots.

- Copy an image to a volume.

- Copy a volume to an image.

- Clone a volume.

- Extend a volume.

- Retype a volume.

- Create a volume from a snapshot.

- FAST automated storage tiering policy.

- Dynamic masking view creation.

- Striped volume creation.

2.2.4.3. Set up the VMAX drivers

Procedure 2.1. To set up the EMC VMAX drivers

- Install the python-pywbem package for your distribution. See Section 2.2.4.3.1, “Install the python-pywbem package”.

- Download SMI-S from PowerLink and install it. Add your VMAX arrays to SMI-S. For information, see Section 2.2.4.3.2, “Set up SMI-S” and the SMI-S release notes.

- Change configuration files. See Section 2.2.4.3.3, “cinder.conf configuration file” and Section 2.2.4.3.4, “cinder_emc_config_CONF_GROUP_ISCSI.xml configuration file”.

- Configure connectivity. For FC driver, see Section 2.2.4.3.5, “FC Zoning with VMAX”. For iSCSI driver, see Section 2.2.4.3.6, “iSCSI with VMAX”.

2.2.4.3.1. Install the python-pywbem package

# yum install pywbem

2.2.4.3.2. Set up SMI-S

ECOM is typically installed in /opt/emc/ECIM/ECOM/bin on Linux and C:\Program Files\EMC\ECIM\ECOM\bin on Windows. After you install and configure SMI-S, go to that directory and run TestSmiProvider.exe.

2.2.4.3.3. cinder.conf configuration file

Make the following changes in /etc/cinder/cinder.conf.

Add the following entries, where 10.10.61.45 is the IP address of the VMAX iSCSI target:

In this example, two back-end configuration groups are enabled: CONF_GROUP_ISCSI and CONF_GROUP_FC. Each configuration group has a section describing unique parameters for connections, drivers, the volume_backend_name, and the name of the EMC-specific configuration file containing additional settings. Note that the file name is in the format /etc/cinder/cinder_emc_config_[confGroup].xml.

Once the cinder.conf and EMC-specific configuration files have been created, issue cinder commands to create and associate OpenStack volume types with the declared volume_backend_names:

$ cinder type-create VMAX_ISCSI

$ cinder type-key VMAX_ISCSI set volume_backend_name=ISCSI_backend

$ cinder type-create VMAX_FC

$ cinder type-key VMAX_FC set volume_backend_name=FC_backend

With these commands, the Block Storage volume type VMAX_ISCSI is associated with the ISCSI_backend, and the type VMAX_FC is associated with the FC_backend.

Restart the cinder-volume service.

2.2.4.3.4. cinder_emc_config_CONF_GROUP_ISCSI.xml configuration file

Create the /etc/cinder/cinder_emc_config_CONF_GROUP_ISCSI.xml file. You do not need to restart the service for this change.

- EcomServerIp and EcomServerPort are the IP address and port number of the ECOM server which is packaged with SMI-S.

- EcomUserName and EcomPassword are credentials for the ECOM server.

- PortGroups supplies the names of VMAX port groups that have been pre-configured to expose volumes managed by this backend. Each supplied port group should have a sufficient number and distribution of ports (across directors and switches) to ensure adequate bandwidth and failure protection for the volume connections. PortGroups can contain one or more port groups of either iSCSI or FC ports. When a dynamic masking view is created by the VMAX driver, the port group is chosen randomly from the PortGroup list, to evenly distribute load across the set of groups provided. Make sure that the PortGroups set contains either all FC or all iSCSI port groups (for a given backend), as appropriate for the configured driver (iSCSI or FC).

- The Array tag holds the unique VMAX array serial number.

- The Pool tag holds the unique pool name within a given array. For backends not using FAST automated tiering, the pool is a single pool that has been created by the administrator. For backends exposing FAST policy automated tiering, the pool is the bind pool to be used with the FAST policy.

- The FastPolicy tag conveys the name of the FAST Policy to be used. By including this tag, volumes managed by this backend are treated as under FAST control. Omitting the FastPolicy tag means FAST is not enabled on the provided storage pool.
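The tags described above can be read with Python's standard xml.etree.ElementTree module, as in the following sketch. Several details are assumptions made for illustration only: the EMC root element, the nesting of PortGroup elements inside PortGroups, every value in the sample, and the helper names. The random selection only mimics the port group choice described above; it is not the driver's code.

```python
import random
import xml.etree.ElementTree as ET

# Hypothetical file content. The tag names come from the text; the root
# element, the nesting, and all values are assumptions.
SAMPLE_XML = """
<EMC>
  <EcomServerIp>192.0.2.10</EcomServerIp>
  <EcomServerPort>5988</EcomServerPort>
  <EcomUserName>user1</EcomUserName>
  <EcomPassword>password1</EcomPassword>
  <PortGroups>
    <PortGroup>OS-PORTGROUP1-PG</PortGroup>
    <PortGroup>OS-PORTGROUP2-PG</PortGroup>
  </PortGroups>
  <Array>000000000001</Array>
  <Pool>POOL_1</Pool>
</EMC>
""".strip()


def load_backend_settings(xml_text):
    """Collect the settings described above into a dictionary."""
    root = ET.fromstring(xml_text)
    return {
        "ecom_ip": root.findtext("EcomServerIp"),
        "ecom_port": root.findtext("EcomServerPort"),
        "array": root.findtext("Array"),
        "pool": root.findtext("Pool"),
        # findtext returns None when the tag is omitted, meaning no FAST.
        "fast_policy": root.findtext("FastPolicy"),
        "port_groups": [pg.text.strip() for pg in root.iter("PortGroup")],
    }


def pick_port_group(port_groups, rng=random):
    """Choose one port group at random to spread load across the groups."""
    return rng.choice(port_groups)


settings = load_backend_settings(SAMPLE_XML)
chosen = pick_port_group(settings["port_groups"])
print(settings["array"], settings["pool"], settings["fast_policy"], chosen)
```

Because the sample omits the FastPolicy tag, fast_policy is None, which corresponds to a pool without FAST enabled.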

2.2.4.3.5. FC Zoning with VMAX

2.2.4.3.6. iSCSI with VMAX

- Make sure the iscsi-initiator-utils package is installed on the host (use apt-get, zypper, or yum, depending on Linux flavor).

- Verify that the host can ping the VMAX iSCSI target ports.

2.2.4.4. VMAX masking view and group naming info

Masking view names

OS-[shortHostName][poolName]-I-MV (for Masking Views using iSCSI)

OS-[shortHostName][poolName]-F-MV (for Masking Views using FC)

Initiator group names

OS-[shortHostName]-I-IG (for iSCSI initiators)

OS-[shortHostName]-F-IG (for Fibre Channel initiators)

FA port groups

Storage group names

OS-[shortHostName][poolName]-I-SG (attached over iSCSI)

OS-[shortHostName][poolName]-F-SG (attached over Fibre Channel)
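The naming patterns above can be expressed as a small Python helper. This is a sketch that follows the patterns literally, with no separator between the host name and the pool name; the function name vmax_names and the sample host and pool names are hypothetical.

```python
# Single-letter protocol codes used in the patterns above.
PROTOCOL_CODES = {"iscsi": "I", "fc": "F"}


def vmax_names(short_host_name, pool_name, protocol):
    """Build the masking view, initiator group, and storage group names."""
    code = PROTOCOL_CODES[protocol.lower()]
    return {
        "masking_view": "OS-%s%s-%s-MV" % (short_host_name, pool_name, code),
        "initiator_group": "OS-%s-%s-IG" % (short_host_name, code),
        "storage_group": "OS-%s%s-%s-SG" % (short_host_name, pool_name, code),
    }


names = vmax_names("myhost1", "Pool1", "iSCSI")
for kind, value in names.items():
    print(kind, value)
```

A helper like this can be useful when searching the array for objects that belong to a given host and pool.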

2.2.4.5. Concatenated or striped volumes

To create striped volumes, define the extra spec storagetype:stripecount on the volume type. Its value is the number of meta members in the striped volume. In the example below, each volume created under the GoldStriped volume type is striped and made up of 4 meta members.

$ cinder type-create GoldStriped

$ cinder type-key GoldStriped set volume_backend_name=GOLD_BACKEND

$ cinder type-key GoldStriped set storagetype:stripecount=4

2.2.5. EMC VNX direct driver

The EMC VNX direct driver consists of EMCCLIISCSIDriver and EMCCLIFCDriver and supports both the iSCSI and FC protocols. EMCCLIISCSIDriver (VNX iSCSI direct driver) is based on the ISCSIDriver defined in Block Storage, and EMCCLIFCDriver (VNX FC direct driver) is based on the FCDriver.

Both drivers perform volume operations by executing Navisphere CLI (NaviSecCLI), a command-line interface used for management, diagnostics, and reporting functions for VNX.

2.2.5.1. Supported OpenStack release

The EMC VNX direct driver supports the Kilo release.

2.2.5.2. System requirements

- VNX Operational Environment for Block version 5.32 or higher.

- VNX Snapshot and Thin Provisioning license should be activated for VNX.

- Navisphere CLI v7.32 or higher is installed along with the driver.

2.2.5.3. Supported operations

- Create, delete, attach, and detach volumes.

- Create, list, and delete volume snapshots.

- Create a volume from a snapshot.

- Copy an image to a volume.

- Clone a volume.

- Extend a volume.

- Migrate a volume.

- Retype a volume.

- Get volume statistics.

- Create and delete consistency groups.

- Create, list, and delete consistency group snapshots.

- Modify consistency groups.

2.2.5.4. Preparation

2.2.5.4.2. Install Block Storage driver

EMCCLIISCSIDriver and EMCCLIFCDriver are provided in the installer package:

- emc_vnx_cli.py

- emc_cli_fc.py (for EMCCLIFCDriver)

- emc_cli_iscsi.py (for EMCCLIISCSIDriver)

Copy the files above to the cinder/volume/drivers/emc/ directory of the OpenStack node(s) where cinder-volume is running.

2.2.5.4.3. FC zoning with VNX (EMCCLIFCDriver only)

2.2.5.4.4. Register with VNX

The nodes running the cinder-volume service (Block Storage nodes) must be registered with the VNX as well.

2.2.5.4.4.1. EMCCLIFCDriver

Steps for EMCCLIFCDriver:

- Assume 20:00:00:24:FF:48:BA:C2:21:00:00:24:FF:48:BA:C2 is the WWN of a FC initiator port name of the compute node whose hostname and IP are myhost1 and 10.10.61.1. Register 20:00:00:24:FF:48:BA:C2:21:00:00:24:FF:48:BA:C2 in Unisphere:

  - Login to Unisphere, go to .

  - Refresh and wait until the initiator 20:00:00:24:FF:48:BA:C2:21:00:00:24:FF:48:BA:C2 with SP Port A-1 appears.

  - Click the button, select CLARiiON/VNX and enter the hostname (which is the output of the linux command hostname) and IP address:

    - Hostname : myhost1

    - IP : 10.10.61.1

    - Click

  - Then host 10.10.61.1 will appear under as well.

- Register the wwn with more ports if needed.

2.2.5.4.4.2. EMCCLIISCSIDriver

Steps for EMCCLIISCSIDriver:

- On the compute node with IP address 10.10.61.1 and hostname myhost1, execute the following commands (assuming 10.10.61.35 is the iSCSI target):

  - Start the iSCSI initiator service on the node

        # /etc/init.d/open-iscsi start

  - Discover the iSCSI target portals on VNX

        # iscsiadm -m discovery -t st -p 10.10.61.35

  - Enter /etc/iscsi

        # cd /etc/iscsi

  - Find out the iqn of the node

        # more initiatorname.iscsi

- Login to VNX from the compute node using the target corresponding to the SPA port:

      # iscsiadm -m node -T iqn.1992-04.com.emc:cx.apm01234567890.a0 -p 10.10.61.35 -l

- Assume iqn.1993-08.org.debian:01:1a2b3c4d5f6g is the initiator name of the compute node. Register iqn.1993-08.org.debian:01:1a2b3c4d5f6g in Unisphere:

  - Login to Unisphere, go to .

  - Refresh and wait until the initiator iqn.1993-08.org.debian:01:1a2b3c4d5f6g with SP Port A-8v0 appears.

  - Click the button, select CLARiiON/VNX and enter the hostname (which is the output of the linux command hostname) and IP address:

    - Hostname : myhost1

    - IP : 10.10.61.1

    - Click

  - Then host 10.10.61.1 will appear under as well.

- Logout iSCSI on the node:

      # iscsiadm -m node -u

- Login to VNX from the compute node using the target corresponding to the SPB port:

      # iscsiadm -m node -T iqn.1992-04.com.emc:cx.apm01234567890.b8 -p 10.10.61.36 -l

- In Unisphere register the initiator with the SPB port.

- Logout iSCSI on the node:

      # iscsiadm -m node -u

- Register the iqn with more ports if needed.

2.2.5.5. Backend configuration

Make the following changes in /etc/cinder/cinder.conf:

- san_ip is one of the SP IP addresses of the VNX array and san_secondary_ip is the other SP IP address of the VNX array. san_secondary_ip is an optional field that provides a high availability (HA) design: if one SP is down, the other SP can be connected automatically. san_ip is a mandatory field, which provides the main connection.

- Pool_01_SAS is the pool from which the user wants to create volumes. The pools can be created using Unisphere for VNX. Refer to Section 2.2.5.18, “Multiple pools support” on how to manage multiple pools.

- storage_vnx_security_file_dir is the directory path of the VNX security file. Make sure the security file is generated following the steps in Section 2.2.5.6, “Authentication”.

- iscsi_initiators is a dictionary of IP addresses of the iSCSI initiator ports on all OpenStack nodes which want to connect to VNX via iSCSI. If this option is configured, the driver uses this information to find an accessible iSCSI target portal for the initiator when attaching a volume. Otherwise, the iSCSI target portal is chosen in a relatively random way.

- Restart the cinder-volume service to make the configuration change take effect.

2.2.5.6. Authentication

1. Find out the linux user id of the /usr/bin/cinder-volume processes. Assuming the service /usr/bin/cinder-volume is running by account cinder.

2. Switch to root account

3. Change cinder:x:113:120::/var/lib/cinder:/bin/false to cinder:x:113:120::/var/lib/cinder:/bin/bash in /etc/passwd (This temporary change is to make step 4 work).

4. Save the credentials on behalf of cinder user to a security file (assuming the array credentials are admin/admin in global scope). In below command, switch -secfilepath is used to specify the location to save the security file (assuming saving to directory /etc/secfile/array1).

       # su -l cinder -c '/opt/Navisphere/bin/naviseccli -AddUserSecurity -user admin -password admin -scope 0 -secfilepath /etc/secfile/array1'

   Save the security file to the different locations for different arrays except where the same credentials are shared between all arrays managed by the host. Otherwise, the credentials in the security file will be overwritten. If -secfilepath is not specified in the command above, the security file will be saved to the default location which is the home directory of the executor.

5. Change cinder:x:113:120::/var/lib/cinder:/bin/bash back to cinder:x:113:120::/var/lib/cinder:/bin/false in /etc/passwd.

6. Remove the credentials options san_login, san_password and storage_vnx_authentication_type from cinder.conf (normally it is /etc/cinder/cinder.conf). Add the option storage_vnx_security_file_dir and set its value to the directory path supplied with switch -secfilepath in step 4. Omit this option if -secfilepath is not used in step 4.

       #Directory path that contains the VNX security file. Generate the security file first
       storage_vnx_security_file_dir = /etc/secfile/array1

7. Restart cinder-volume service to make the change take effect.

Alternatively, the credentials can be specified in /etc/cinder/cinder.conf through the three options below:

2.2.5.7. Restriction of deployment

Deploying the driver on a compute node is not recommended if cinder upload-to-image --force True is used against an in-use volume. Otherwise, cinder upload-to-image --force True will terminate the VM instance's data access to the volume.

2.2.5.8. Restriction of volume extension

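VNX does not support extending a thick volume that has a snapshot. If the user tries to extend such a volume, the status of the volume changes to error_extending.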

2.2.5.9. Restriction of iSCSI attachment

If the iSCSI port configuration changes, restart the cinder-volume service or wait 5 minutes before any volume attachment operation. Otherwise, the attachment may fail because the old iSCSI port configuration is used.

2.2.5.10. Provisioning type (thin, thick, deduplicated and compressed)

Use the extra spec key storagetype:provisioning in the volume type to set the provisioning type of a volume. The provisioning type can be thick, thin, deduplicated or compressed.

- thick provisioning type means the volume is fully provisioned.

- thin provisioning type means the volume is virtually provisioned.

- deduplicated provisioning type means the volume is virtually provisioned and deduplication is enabled on it. The administrator must configure the system-level deduplication settings on the VNX. To create a deduplicated volume, the VNX deduplication license must be activated on the VNX first, and the user should specify the key deduplication_support=True to let the Block Storage scheduler find a volume back end which manages a VNX with the deduplication license activated.

- compressed provisioning type means the volume is virtually provisioned and compression is enabled on it. The administrator must configure the system-level compression settings on the VNX. To create a compressed volume, the VNX compression license must be activated on the VNX first, and the user should specify the key compression_support=True to let the Block Storage scheduler find a volume back end which manages a VNX with the compression license activated. VNX does not support creating a snapshot on a compressed volume. If the user tries to create a snapshot on a compressed volume, the operation fails and OpenStack shows the new snapshot in error state.

For example, four volume types can be created: ThickVolume, ThinVolume, DeduplicatedVolume and CompressedVolume. For ThickVolume, storagetype:provisioning is set to thick, and similarly for the other volume types. If storagetype:provisioning is not specified or has an invalid value, the default value thick is adopted.

The volume type name, such as ThickVolume, is user-defined and can be any name. The extra spec key storagetype:provisioning must be spelled exactly as shown here, and its value must be thick, thin, deduplicated or compressed. During volume creation, if the driver finds storagetype:provisioning in the extra specs of the volume type, it creates the volume with the corresponding provisioning type. Otherwise, the volume is thick by default.
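The fallback rule described above (a missing or invalid value yields thick) can be sketched in Python as follows. The function name provisioning_type is hypothetical, and the sketch assumes that values are matched exactly as written, including case; it illustrates the rule and is not taken from the driver.

```python
VALID_PROVISIONING = ("thick", "thin", "deduplicated", "compressed")
DEFAULT_PROVISIONING = "thick"


def provisioning_type(extra_specs):
    """Return the provisioning type selected by a volume type's extra specs."""
    value = extra_specs.get("storagetype:provisioning")
    if value in VALID_PROVISIONING:
        return value
    # Missing or invalid values fall back to the default.
    return DEFAULT_PROVISIONING


thin = provisioning_type({"storagetype:provisioning": "thin"})
missing = provisioning_type({})
invalid = provisioning_type({"storagetype:provisioning": "sparse"})
print(thin, missing, invalid)
```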

2.2.5.11. Fully automated storage tiering support

Use the extra spec key storagetype:tiering to set the tiering policy of a volume, and use the extra spec key fast_support=True to let the Block Storage scheduler find a volume back end which manages a VNX with the FAST license activated. The five supported values for the extra spec key storagetype:tiering are:

- StartHighThenAuto (Default option)

- Auto

- HighestAvailable

- LowestAvailable

- NoMovement
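As an illustration, the following Python sketch validates a tiering policy against the five supported values and assembles the matching cinder type-key command line. The helper names are hypothetical; the command syntax follows the cinder type-key examples used elsewhere in this section, and the sketch only builds a string without running anything.

```python
TIERING_VALUES = (
    "StartHighThenAuto",
    "Auto",
    "HighestAvailable",
    "LowestAvailable",
    "NoMovement",
)
DEFAULT_TIERING = "StartHighThenAuto"


def tiering_extra_specs(policy=DEFAULT_TIERING):
    """Return the extra specs for a volume type using the given policy."""
    if policy not in TIERING_VALUES:
        raise ValueError("unsupported tiering policy: %r" % (policy,))
    return {"storagetype:tiering": policy, "fast_support": "True"}


def type_key_command(type_name, extra_specs):
    """Format a cinder type-key command that sets the extra specs."""
    pairs = " ".join("%s=%s" % item for item in extra_specs.items())
    return 'cinder type-key "%s" set %s' % (type_name, pairs)


command = type_key_command("AutoTieringVolume", tiering_extra_specs("Auto"))
print(command)
```

Rejecting unknown values before building the command catches misspelled policy names early.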


$ cinder type-create "AutoTieringVolume"

$ cinder type-key "AutoTieringVolume" set storagetype:tiering=Auto fast_support=True

$ cinder type-create "ThinVolumeOnLowestAvailableTier"

$ cinder type-key "ThinVolumeOnLowestAvailableTier" set storagetype:provisioning=thin storagetype:tiering=LowestAvailable fast_support=True

2.2.5.12. FAST Cache support

Use the extra spec key fast_cache_enabled to choose whether to create a volume on a volume back end which manages a pool with FAST Cache enabled. The value of fast_cache_enabled is either True or False. When creating a volume, if fast_cache_enabled is set to True in the volume type, the volume is created by a back end which manages a pool with FAST Cache enabled.

2.2.5.13. Storage group automatic deletion

If destroy_empty_storage_group=True, the driver removes the empty storage group when its last volume is detached. For data safety, setting destroy_empty_storage_group=True is not recommended unless the VNX is exclusively managed by one Block Storage node, because a consistent lock_path is required for operation synchronization for this behavior.

2.2.5.14. EMC storage-assisted volume migration

The EMC VNX direct driver supports storage-assisted volume migration. When the user starts a migration with cinder migrate --force-host-copy False volume_id host or cinder migrate volume_id host, cinder tries to use the VNX's native volume migration functionality.

VNX native volume migration is not triggered in the following scenarios:

- Volume migration between back ends with different storage protocols, for example, FC and iSCSI.

- Volume is being migrated across arrays.

2.2.5.15. Initiator auto registration

If initiator_auto_registration=True, the driver automatically registers iSCSI initiators with all working iSCSI target ports on the VNX array during volume attaching. The driver skips initiators that have already been registered.

2.2.5.16. Initiator auto deregistration

If initiator_auto_deregistration=True is set, the driver deregisters all the iSCSI initiators of the host after its storage group is deleted.

2.2.5.17. Read-only volumes

$ cinder readonly-mode-update volume True

2.2.5.18. Multiple pools support

If storage_vnx_pool_name is not given in the configuration file, the Block Storage back end uses all the pools on the VNX array, and the scheduler chooses the pool in which to place the volume based on its capacities and capabilities. This kind of Block Storage back end is called an array-based back end.

$ cinder type-create "HighPerf"

$ cinder type-key "HighPerf" set pool_name=Pool_02_SASFLASH volume_backend_name=vnx_41

2.2.5.19. Volume number threshold

The default value of the option check_max_pool_luns_threshold is False. When check_max_pool_luns_threshold=True, the pool-based back end checks the limit and reports 0 free capacity to the scheduler if the limit is reached. The scheduler can then skip a pool-based back end that has run out of pool volumes.
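The reporting rule can be summarized in a few lines of Python. This is only a sketch of the behavior described above: the function and parameter names (reported_free_capacity_gb, pool_lun_count, max_pool_luns) are hypothetical, and the way the driver actually obtains the limit is not shown.

```python
def reported_free_capacity_gb(free_gb, pool_lun_count, max_pool_luns,
                              check_max_pool_luns_threshold=False):
    """Return the free capacity that a pool-based back end would report."""
    limit_reached = pool_lun_count >= max_pool_luns
    if check_max_pool_luns_threshold and limit_reached:
        # Reporting 0 lets the scheduler skip this back end.
        return 0
    return free_gb


at_limit_checked = reported_free_capacity_gb(500, 10, 10, True)
at_limit_unchecked = reported_free_capacity_gb(500, 10, 10)
print(at_limit_checked, at_limit_unchecked)
```

With the check disabled (the default), the back end keeps reporting its real free capacity even when no more pool volumes can be created.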

2.2.5.20. FC SAN auto zoning

Set zoning_mode to fabric in the back-end configuration section to enable this feature. For ZoneManager configuration, refer to Section 2.6, “Fibre Channel Zone Manager”.

2.2.5.21. Multi-backend configuration

2.2.5.22. Force delete volumes in storage groups

The option force_delete_lun_in_storagegroup is introduced to allow the user to delete the available volumes in this tricky situation.

When force_delete_lun_in_storagegroup=True is set in the back-end section, the driver moves the volumes out of their storage groups and then deletes them if the user tries to delete volumes that remain in storage groups on the VNX array.

The default value of force_delete_lun_in_storagegroup is False.

2.2.6. EMC XtremIO Block Storage driver configuration

2.2.6.1. Support matrix

- Xtremapp: Version 3.0 and 4.0

2.2.6.2. Supported operations

- Create, delete, clone, attach, and detach volumes

- Create and delete volume snapshots

- Create a volume from a snapshot

- Copy an image to a volume

- Copy a volume to an image

- Extend a volume

- Manage and unmanage a volume

- Get volume statistics

2.2.6.3. XtremIO Block Storage driver configuration

Edit the cinder.conf file by adding the configuration below under the [DEFAULT] section of the file in case of a single back end, or under a separate section in case of multiple back ends (for example [XTREMIO]). The configuration file is usually located at /etc/cinder/cinder.conf.

2.2.6.3.1. XtremIO driver name

- For iSCSI:

volume_driver = cinder.volume.drivers.emc.xtremio.XtremIOIscsiDriver

- For Fibre Channel:

volume_driver = cinder.volume.drivers.emc.xtremio.XtremIOFibreChannelDriver

2.2.6.3.2. XtremIO management server (XMS) IP

san_ip = XMS Management IP

2.2.6.3.3. XtremIO cluster name

xtremio_cluster_name = Cluster-Name

2.2.6.3.4. XtremIO user credentials

san_login = XMS username

san_password = XMS username password

2.2.6.4. Multiple back ends

2.2.6.5. Setting thin provisioning and multipathing parameters

- Thin Provisioning

All XtremIO volumes are thin provisioned. The default value of 20 should be maintained for the max_over_subscription_ratio parameter.

The use_cow_images parameter in the nova.conf file should be set to False as follows:

use_cow_images = false

- Multipathing

The use_multipath_for_image_xfer parameter in the cinder.conf file should be set to True as follows:

use_multipath_for_image_xfer = true
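To make the ratio of 20 concrete: in Block Storage, max_over_subscription_ratio limits the total provisioned (virtual) capacity to that multiple of the physical capacity. The following is a simplified sketch of that check; the helper name `can_provision` is hypothetical, the capacities are invented, and the real scheduler also accounts for reserved and free space.

```python
def can_provision(total_physical_gb, provisioned_gb, requested_gb, ratio=20.0):
    """Return True if the request keeps provisioned capacity within
    ratio * physical capacity (simplified over-subscription check)."""
    return (provisioned_gb + requested_gb) / total_physical_gb <= ratio


# A 1000 GB array with 19000 GB already provisioned:
print(can_provision(1000, 19000, 1000))  # exactly at the 20x limit
print(can_provision(1000, 19000, 1001))  # just over the limit
```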

2.2.6.6. Restarting OpenStack Block Storage

Save the cinder.conf file and restart cinder by running the following command:

$ openstack-service restart cinder-volume

2.2.6.7. Configuring CHAP

$ modify-chap chap-authentication-mode=initiator

2.2.6.8. Configuration example

cinder.conf example file

You can update the cinder.conf file by editing the necessary parameters as follows:
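As a hedged illustration of how the settings from the preceding subsections fit together, the sketch below renders a multi-back-end style cinder.conf fragment with Python's standard configparser module. The helper `render_xtremio_conf`, the section name XTREMIO used with enabled_backends, and every value passed in are placeholders chosen for this example, not values from a real deployment.

```python
import configparser
import io

# Driver paths exactly as listed in the "XtremIO driver name" subsection.
DRIVERS = {
    "iscsi": "cinder.volume.drivers.emc.xtremio.XtremIOIscsiDriver",
    "fc": "cinder.volume.drivers.emc.xtremio.XtremIOFibreChannelDriver",
}


def render_xtremio_conf(xms_ip, cluster_name, login, password, protocol="iscsi"):
    """Render a cinder.conf fragment for one XtremIO back end (sketch)."""
    conf = configparser.ConfigParser()
    conf.optionxform = str  # keep option names exactly as written
    conf["DEFAULT"] = {"enabled_backends": "XTREMIO"}
    conf["XTREMIO"] = {
        "volume_driver": DRIVERS[protocol],
        "san_ip": xms_ip,
        "xtremio_cluster_name": cluster_name,
        "san_login": login,
        "san_password": password,
    }
    buf = io.StringIO()
    conf.write(buf)
    return buf.getvalue()


# Placeholder values only.
print(render_xtremio_conf("192.0.2.10", "Cluster01", "xms_user", "xms_password"))
```

For a single back end, the same options would go directly under [DEFAULT] instead of a separate section.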

2.2.7. GlusterFS driver

Set the volume_driver in cinder.conf:

volume_driver=cinder.volume.drivers.glusterfs.GlusterfsDriver

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| glusterfs_mount_point_base = $state_path/mnt | (StrOpt) Base dir containing mount points for gluster shares. |
| glusterfs_qcow2_volumes = False | (BoolOpt) Create volumes as QCOW2 files rather than raw files. |
| glusterfs_shares_config = /etc/cinder/glusterfs_shares | (StrOpt) File with the list of available gluster shares. |
| glusterfs_sparsed_volumes = True | (BoolOpt) Create volumes as sparse files, which take no space. If set to False, the volume is created as a regular file, in which case volume creation takes a lot of time. |

2.2.8. HDS HNAS iSCSI and NFS driver

2.2.8.1. Supported operations

- Create, delete, attach, and detach volumes.

- Create, list, and delete volume snapshots.

- Create a volume from a snapshot.

- Copy an image to a volume.

- Copy a volume to an image.

- Clone a volume.

- Extend a volume.

- Get volume statistics.

2.2.8.2. HNAS storage requirements

replication targets. Additionally:

- For NFS:

- Create NFS exports, choose a path for them (it must be different from "/") and set the Show snapshots option to hide and disable access.

Also, configure the option norootsquash as "* (rw, norootsquash)", so cinder services can change the permissions of its volumes.

In order to use the hardware accelerated features of NFS HNAS, we recommend setting max-nfs-version to 3. Refer to the HNAS command line reference to see how to configure this option.

- For iSCSI:

- You need to set an iSCSI domain.

2.2.8.3. Block storage host requirements

- The HNAS driver is supported for Red Hat. The following packages must be installed:

- nfs-utils for Red Hat

- If you are not using SSH, you need the HDS SSC package (hds-ssc-v1.0-1) to communicate with an HNAS array using the SSC command. This utility package is available in the RPM package distributed with the hardware through physical media or it can be manually copied from the SMU to the Block Storage host.

2.2.8.4. Package installation

- Install SSC:

In Red Hat:

# rpm -i hds-ssc-v1.0-1.rpm

- Install the dependencies:

In Red Hat:

# yum install nfs-utils nfs-utils-lib

- Configure the driver as described in the "Driver Configuration" section.

- Restart all cinder services (volume, scheduler and backup).

2.2.8.5. Driver configuration

cinder.conf configuration file. Below is the configuration needed in the cinder.conf configuration file [1]:

[DEFAULT]
enabled_backends = hnas_iscsi1, hnas_nfs1

[hnas_iscsi1]
volume_driver = cinder.volume.drivers.hds.iscsi.HDSISCSIDriver
hds_hnas_iscsi_config_file = /path/to/config/hnas_config_file.xml
volume_backend_name = HNAS-ISCSI

[hnas_nfs1]
volume_driver = cinder.volume.drivers.hds.nfs.HDSNFSDriver
hds_hnas_nfs_config_file = /path/to/config/hnas_config_file.xml
volume_backend_name = HNAS-NFS

2.2.8.6. HNAS volume driver XML configuration options

svc_n tag (svc_0, svc_1, svc_2, or svc_3 [2], for example). These are the configuration options available for each service label:

| Option | Type | Default | Description |
|---|---|---|---|
| volume_type | Required | default | When a create_volume call with a certain volume type happens, the volume type will try to be matched up with this tag. In each configuration file you must define the default volume type in the service labels and, if no volume type is specified, the default is used. Other labels are case sensitive and should match exactly. If no configured volume types match the incoming requested type, an error occurs in the volume creation. |
| iscsi_ip | Required only for iSCSI | | An iSCSI IP address dedicated to the service. |
| hdp | Required | | For iSCSI driver: virtual file system label associated with the service. For NFS driver: path to the volume (<ip_address>:/<path>) associated with the service. Additionally, this entry must be added in the file used to list available NFS shares. This file is located, by default, in /etc/cinder/nfs_shares or you can specify the location in the nfs_shares_config option in the cinder.conf configuration file. |

config section of the XML config file:

| Option | Type | Default | Description |
|---|---|---|---|
| mgmt_ip0 | Required | | Management Port 0 IP address. Should be the IP address of the "Admin" EVS. |
| hnas_cmd | Optional | ssc | Command to communicate to HNAS array. |
| chap_enabled | Optional (iSCSI only) | True | Boolean tag used to enable CHAP authentication protocol. |
| username | Required | supervisor | It's always required on HNAS. |
| password | Required | supervisor | Password is always required on HNAS. |
| svc_0, svc_1, svc_2, svc_3 | Optional | (at least one label has to be defined) | Service labels: these four predefined names help four different sets of configuration options. Each can specify HDP and a unique volume type. |
| cluster_admin_ip0 | Optional if ssh_enabled is True | | The address of HNAS cluster admin. |
| ssh_enabled | Optional | False | Enables SSH authentication between Block Storage host and the SMU. |
| ssh_private_key | Required if ssh_enabled is True | False | Path to the SSH private key used to authenticate in HNAS SMU. The public key must be uploaded to HNAS SMU using ssh-register-public-key (this is an SSH subcommand). Note that copying the public key to HNAS using ssh-copy-id doesn't work properly as the SMU periodically wipes out those keys. |
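The matching rule for volume_type described in the tables above can be sketched with Python's standard XML parser. The XML layout used here (a config root with svc_n children holding volume_type, iscsi_ip and hdp) is an assumption inferred from the option names in the tables; the addresses, file system labels and the helper `select_service` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Assumed layout, built only from the tag names documented above.
SAMPLE_XML = """
<config>
  <mgmt_ip0>192.0.2.15</mgmt_ip0>
  <username>supervisor</username>
  <password>supervisor</password>
  <svc_0>
    <volume_type>default</volume_type>
    <iscsi_ip>192.0.2.32</iscsi_ip>
    <hdp>fs-default</hdp>
  </svc_0>
  <svc_1>
    <volume_type>platinum</volume_type>
    <iscsi_ip>192.0.2.33</iscsi_ip>
    <hdp>fs-platinum</hdp>
  </svc_1>
</config>
"""


def select_service(xml_text, requested_type=None):
    """Pick the service label whose volume_type matches the request.

    No requested type falls back to 'default'; matching is case sensitive;
    an unmatched type raises an error, mirroring the documented behaviour.
    """
    root = ET.fromstring(xml_text.strip())
    services = {}
    for label in ("svc_0", "svc_1", "svc_2", "svc_3"):
        node = root.find(label)
        if node is not None:
            vtype = node.findtext("volume_type").strip()
            services[vtype] = {"label": label, "hdp": node.findtext("hdp").strip()}
    wanted = requested_type or "default"
    if wanted not in services:
        raise ValueError("no service label configured for volume type %r" % wanted)
    return services[wanted]


print(select_service(SAMPLE_XML))
print(select_service(SAMPLE_XML, "platinum"))
```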

2.2.8.7. Service labels

volume_type per service. Each volume_type must have the metadata service_label with the same name configured in the <volume_type> section of that service. If this is not set, OpenStack Block Storage will schedule the volume creation to the pool with largest available free space or other criteria configured in volume filters.

$ cinder type-create 'default'
$ cinder type-key 'default' set service_label='default'
$ cinder type-create 'platinun-tier'
$ cinder type-key 'platinun-tier' set service_label='platinun'

2.2.8.8. Multi-back-end configuration

Set the volume_backend_name option to the appropriate back end. Then, create volume_type configurations with the same volume_backend_name.

$ cinder type-create 'iscsi'
$ cinder type-key 'iscsi' set volume_backend_name='HNAS-ISCSI'
$ cinder type-create 'nfs'
$ cinder type-key 'nfs' set volume_backend_name='HNAS-NFS'

Each service (svc_0, svc_1, svc_2, svc_3) on the instances needs to have a volume_type and service_label metadata associated with it. If no metadata is associated with a pool, the OpenStack Block Storage filtering algorithm selects the pool with the largest available free space.

2.2.8.9. SSH configuration

- If you don't have a pair of public keys already generated, create it in the Block Storage host (leave the pass-phrase empty):

$ mkdir -p /opt/hds/ssh
$ ssh-keygen -f /opt/hds/ssh/hnaskey

- Change the owner of the key to cinder (or the user the volume service will be run):

# chown -R cinder.cinder /opt/hds/ssh

- Create the directory "ssh_keys" in the SMU server:

$ ssh [manager|supervisor]@<smu-ip> 'mkdir -p /var/opt/mercury-main/home/[manager|supervisor]/ssh_keys/'

- Copy the public key to the "ssh_keys" directory:

$ scp /opt/hds/ssh/hnaskey.pub [manager|supervisor]@<smu-ip>:/var/opt/mercury-main/home/[manager|supervisor]/ssh_keys/

- Access the SMU server:

$ ssh [manager|supervisor]@<smu-ip>

- Run the command to register the SSH keys:

$ ssh-register-public-key -u [manager|supervisor] -f ssh_keys/hnaskey.pub

- Check the communication with HNAS in the Block Storage host:

$ ssh -i /opt/hds/ssh/hnaskey [manager|supervisor]@<smu-ip> 'ssc <cluster_admin_ip0> df -a'

<cluster_admin_ip0> is "localhost" for single node deployments. This should return a list of available file systems on HNAS.

2.2.8.10. Editing the XML config file:

- Set the "username".

- Enable SSH by adding the line "<ssh_enabled> True</ssh_enabled>" under the "<config>" section.

- Set the private key path: "<ssh_private_key> /opt/hds/ssh/hnaskey</ssh_private_key>" under the "<config>" section.

- If the HNAS is in a multi-cluster configuration, set "<cluster_admin_ip0>" to the cluster node admin IP. In a single node HNAS, leave it empty.

- Restart the cinder service.

2.2.8.11. Additional notes

- The get_volume_stats() function always provides the available capacity based on the combined sum of all the HDPs that are used in these service labels.

- After changing the configuration on the storage, the OpenStack Block Storage driver must be restarted.

- The HNAS iSCSI driver, due to an HNAS limitation, allows only 32 volumes per target.

- On Red Hat, if the system is configured to use SELinux, you need to set "virt_use_nfs = on" for the NFS driver to work properly.

2.2.9. HDS HUS iSCSI driver

2.2.9.1. System requirements

2.2.9.2. Supported operations

- Create, delete, attach, and detach volumes.

- Create, list, and delete volume snapshots.

- Create a volume from a snapshot.

- Copy an image to a volume.

- Copy a volume to an image.

- Clone a volume.

- Extend a volume.

- Get volume statistics.

2.2.9.3. Configuration

volume_type option in its configuration file.

- Configuration is read from an XML file. This example shows the configuration for single back-end and for multi-back-end cases.

- It is not recommended to manage an HUS array simultaneously from multiple OpenStack Block Storage instances or servers. [4]

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| hds_cinder_config_file = /opt/hds/hus/cinder_hus_conf.xml | (StrOpt) The configuration file for the Cinder HDS driver for HUS |

HUS setup

Single back-end

- Set the hds_cinder_config_file option in the /etc/cinder/cinder.conf file to use the HDS volume driver. This option points to a configuration file.[5]

volume_driver = cinder.volume.drivers.hds.hds.HUSDriver
hds_cinder_config_file = /opt/hds/hus/cinder_hds_conf.xml

- Configure hds_cinder_config_file at the location specified previously. For example, /opt/hds/hus/cinder_hds_conf.xml:

Multi back-end

- Configure /etc/cinder/cinder.conf: the hus1 and hus2 configuration blocks are created. Set the hds_cinder_config_file option to point to a unique configuration file for each block. Set the volume_driver option for each back-end to cinder.volume.drivers.hds.hds.HUSDriver

- Configure /opt/hds/hus/cinder_hus1_conf.xml:

- Configure the /opt/hds/hus/cinder_hus2_conf.xml file:

Type extra specs: volume_backend and volume type

volume_backend_name option to the appropriate back-end. In the previous multi back-end example, the platinum volume type is served by hus-2, and the regular volume type is served by hus-1.

$ cinder type-key regular set volume_backend_name=hus-1
$ cinder type-key platinum set volume_backend_name=hus-2

Non differentiated deployment of HUS arrays

default volume_type in the service labels.

2.2.9.4. HDS iSCSI volume driver configuration options

svc_0, svc_1, svc_2, and svc_3[6]. Each respective service label associates with these parameters and tags:

- volume-types: A create_volume call with a certain volume type shall be matched up with this tag. default is special in that any service associated with this type is used to create volume when no other labels match. Other labels are case sensitive and should exactly match. If no configured volume_types match the incoming requested type, an error occurs in volume creation.

- HDP, the pool ID associated with the service.

- An iSCSI port dedicated to the service.

svc_0, svc_1, svc_2, or svc_3 can be associated with it. But any mix of these service labels can be used in the same instance [7].

| Option | Type | Default | Description |
|---|---|---|---|
| mgmt_ip0 | Required | | Management Port 0 IP address |
| mgmt_ip1 | Required | | Management Port 1 IP address |
| hus_cmd | Optional | | hus_cmd is the command used to communicate with the HUS array. If it is not set, the default value is hus-cmd. |
| username | Optional | | Username is required only if secure mode is used |
| password | Optional | | Password is required only if secure mode is used |
| svc_0, svc_1, svc_2, svc_3 | Optional | (at least one label has to be defined) | Service labels: these four predefined names help four different sets of configuration options -- each can specify iSCSI port address, HDP and a unique volume type. |
| snapshot | Required | | A service label which helps specify configuration for snapshots, such as, HDP. |
| volume_type | Required | | volume_type tag is used to match volume type. Default meets any type of volume_type, or if it is not specified. Any other volume_type is selected if exactly matched during create_volume. |
| iscsi_ip | Required | | iSCSI port IP address where volume attaches for this volume type. |
| hdp | Required | | HDP, the pool number where volume, or snapshot should be created. |
| lun_start | Optional | 0 | LUN allocation starts at this number. |
| lun_end | Optional | 4096 | LUN allocation is up to, but not including, this number. |

2.2.10. IBM Storwize family and SVC volume driver

2.2.10.1. Configure the Storwize family and SVC system

Network configuration

storwize_svc_multipath_enabled flag is set to True in the Cinder configuration file, the driver uses all available WWPNs to attach the volume to the instance (details about the configuration flags appear in the next section). If the flag is not set, the driver uses the WWPN associated with the volume's preferred node (if available), otherwise it uses the first available WWPN of the system. The driver obtains the WWPNs directly from the storage system; you do not need to provide these WWPNs directly to the driver.
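The WWPN selection rule above can be summarized in a short sketch. This is a model of the described behaviour, not the driver's implementation; the function `select_wwpns`, its parameters, and the sample WWPN strings are hypothetical.

```python
def select_wwpns(system_wwpns, preferred_node_wwpn=None, multipath_enabled=False):
    """Return the WWPNs used to attach a volume (illustrative model).

    - multipath enabled: every available WWPN
    - otherwise: the preferred node's WWPN if one is available,
      else the first available WWPN of the system
    """
    if multipath_enabled:
        return list(system_wwpns)
    if preferred_node_wwpn is not None:
        return [preferred_node_wwpn]
    return list(system_wwpns[:1])


wwpns = ["500507680110AAAA", "500507680110BBBB"]
print(select_wwpns(wwpns, multipath_enabled=True))
print(select_wwpns(wwpns, preferred_node_wwpn="500507680110BBBB"))
print(select_wwpns(wwpns))
```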

iSCSI CHAP authentication

storwize_svc_iscsi_chap_enabled is set to True, the driver will associate randomly-generated CHAP secrets with all hosts on the Storwize family system. OpenStack compute nodes use these secrets when creating iSCSI connections.

Configure storage pools

storwize_svc_volpool_name configuration flag. Details about the configuration flags and how to provide the flags to the driver appear in the next section.

Configure user authentication for the driver

san_ip flag, and the management port should be provided by the san_ssh_port flag. By default, the port value is configured to be port 22 (SSH).

cinder-volume management driver has SSH network access to the storage system.

san_login and san_password, respectively.

san_private_key configuration flag.

Create a SSH key pair with OpenSSH

$ ssh-keygen -t rsa

key and key.pub. The key file holds the private SSH key and key.pub holds the public SSH key.

san_private_key configuration flag. The public key should be uploaded to the Storwize family or SVC system using the storage management GUI or command line interface.

2.2.10.2. Configure the Storwize family and SVC driver

Enable the Storwize family and SVC driver

Set the volume_driver option in cinder.conf as follows:

volume_driver = cinder.volume.drivers.ibm.storwize_svc.StorwizeSVCDriver

Storwize family and SVC driver options in cinder.conf

| Flag name | Type | Default | Description |
|---|---|---|---|
| san_ip | Required | | Management IP or host name |
| san_ssh_port | Optional | 22 | Management port |
| san_login | Required | | Management login username |
| san_password | Required [a] | | Management login password |
| san_private_key | Required [a] | | Management login SSH private key |
| storwize_svc_volpool_name | Required | | Default pool name for volumes |
| storwize_svc_vol_rsize | Optional | 2 | Initial physical allocation (percentage) [b] |
| storwize_svc_vol_warning | Optional | 0 (disabled) | Space allocation warning threshold (percentage) [b] |
| storwize_svc_vol_autoexpand | Optional | True | Enable or disable volume auto expand [c] |
| storwize_svc_vol_grainsize | Optional | 256 | Volume grain size [b] in KB |
| storwize_svc_vol_compression | Optional | False | Enable or disable Real-time Compression [d] |
| storwize_svc_vol_easytier | Optional | True | Enable or disable Easy Tier [e] |
| storwize_svc_vol_iogrp | Optional | 0 | The I/O group in which to allocate vdisks |
| storwize_svc_flashcopy_timeout | Optional | 120 | FlashCopy timeout threshold [f] (seconds) |
| storwize_svc_connection_protocol | Optional | iSCSI | Connection protocol to use (currently supports 'iSCSI' or 'FC') |
| storwize_svc_iscsi_chap_enabled | Optional | True | Configure CHAP authentication for iSCSI connections |
| storwize_svc_multipath_enabled | Optional | False | Enable multipath for FC connections [g] |
| storwize_svc_multihostmap_enabled | Optional | True | Enable mapping vdisks to multiple hosts [h] |

[a] The authentication requires either a password (san_password) or SSH private key (san_private_key). One must be specified. If both are specified, the driver uses only the SSH private key.

[b] The driver creates thin-provisioned volumes by default. The storwize_svc_vol_rsize flag defines the initial physical allocation percentage for thin-provisioned volumes, or if set to -1, the driver creates fully allocated volumes. More details about the available options are available in the Storwize family and SVC documentation.

[c] Defines whether thin-provisioned volumes can be auto expanded by the storage system. A value of True means that auto expansion is enabled; a value of False disables auto expansion. Details about this option can be found in the -autoexpand flag of the Storwize family and SVC command line interface mkvdisk command.

[d] Defines whether Real-time Compression is used for the volumes created with OpenStack. Details on Real-time Compression can be found in the Storwize family and SVC documentation. The Storwize or SVC system must have compression enabled for this feature to work.

[e] Defines whether Easy Tier is used for the volumes created with OpenStack. Details on Easy Tier can be found in the Storwize family and SVC documentation. The Storwize or SVC system must have Easy Tier enabled for this feature to work.

[f] The driver wait timeout threshold when creating an OpenStack snapshot. This is the maximum amount of time that the driver waits for the Storwize family or SVC system to prepare a new FlashCopy mapping. The driver accepts a maximum wait time of 600 seconds (10 minutes).

[g] Multipath for iSCSI connections requires no storage-side configuration and is enabled if the compute host has multipath configured.

[h] This option allows the driver to map a vdisk to more than one host at a time. This scenario occurs during migration of a virtual machine with an attached volume; the volume is simultaneously mapped to both the source and destination compute hosts. If your deployment does not require attaching vdisks to multiple hosts, setting this flag to False will provide added safety.
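The authentication rule in footnote [a] (one of san_password or san_private_key is required, and the private key wins if both are set) can be expressed as a small check. This is a sketch of the documented rule only; the function `choose_auth` and the sample values are hypothetical and do not come from the driver.

```python
def choose_auth(san_password=None, san_private_key=None):
    """Decide which credential is used, following footnote [a].

    Returns a (method, value) tuple; raises if neither option is set.
    """
    if san_private_key:
        # The SSH private key takes precedence when both are given.
        return ("private_key", san_private_key)
    if san_password:
        return ("password", san_password)
    raise ValueError("set either san_password or san_private_key")


print(choose_auth(san_password="secret"))
print(choose_auth(san_password="secret", san_private_key="/path/to/key"))
```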

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| storwize_svc_allow_tenant_qos = False | (BoolOpt) Allow tenants to specify QOS on create |
| storwize_svc_connection_protocol = iSCSI | (StrOpt) Connection protocol (iSCSI/FC) |
| storwize_svc_flashcopy_timeout = 120 | (IntOpt) Maximum number of seconds to wait for FlashCopy to be prepared. Maximum value is 600 seconds (10 minutes) |
| storwize_svc_iscsi_chap_enabled = True | (BoolOpt) Configure CHAP authentication for iSCSI connections (Default: Enabled) |
| storwize_svc_multihostmap_enabled = True | (BoolOpt) Allows vdisk to multi host mapping |
| storwize_svc_multipath_enabled = False | (BoolOpt) Connect with multipath (FC only; iSCSI multipath is controlled by Nova) |
| storwize_svc_npiv_compatibility_mode = False | (BoolOpt) Indicate whether svc driver is compatible for NPIV setup. If it is compatible, it will allow no wwpns being returned on get_conn_fc_wwpns during initialize_connection |
| storwize_svc_stretched_cluster_partner = None | (StrOpt) If operating in stretched cluster mode, specify the name of the pool in which mirrored copies are stored. Example: "pool2" |
| storwize_svc_vol_autoexpand = True | (BoolOpt) Storage system autoexpand parameter for volumes (True/False) |
| storwize_svc_vol_compression = False | (BoolOpt) Storage system compression option for volumes |
| storwize_svc_vol_easytier = True | (BoolOpt) Enable Easy Tier for volumes |
| storwize_svc_vol_grainsize = 256 | (IntOpt) Storage system grain size parameter for volumes (32/64/128/256) |
| storwize_svc_vol_iogrp = 0 | (IntOpt) The I/O group in which to allocate volumes |
| storwize_svc_vol_rsize = 2 | (IntOpt) Storage system space-efficiency parameter for volumes (percentage) |
| storwize_svc_vol_warning = 0 | (IntOpt) Storage system threshold for volume capacity warnings (percentage) |
| storwize_svc_volpool_name = volpool | (StrOpt) Storage system storage pool for volumes |

Placement with volume types

extra specs of volume types, and used by the filter scheduler to determine placement of new volumes. Make sure to prefix these keys with capabilities: to indicate that the scheduler should use them. The following extra specs are supported:

- capabilities:volume_backend_name - Specify a specific back-end where the volume should be created. The back-end name is a concatenation of the name of the IBM Storwize/SVC storage system as shown in lssystem, an underscore, and the name of the pool (mdisk group). For example:

capabilities:volume_backend_name=myV7000_openstackpool

- capabilities:compression_support - Specify a back-end according to compression support. A value of True should be used to request a back-end that supports compression, and a value of False will request a back-end that does not support compression. If you do not have constraints on compression support, do not set this key. Note that specifying True does not enable compression; it only requests that the volume be placed on a back-end that supports compression. Example syntax:

capabilities:compression_support='<is> True'

- capabilities:easytier_support - Similar semantics as the compression_support key, but for specifying according to support of the Easy Tier feature. Example syntax:

capabilities:easytier_support='<is> True'

- capabilities:storage_protocol - Specifies the connection protocol used to attach volumes of this type to instances. Legal values are iSCSI and FC. This extra specs value is used for both placement and setting the protocol used for this volume. In the example syntax, note <in> is used as opposed to <is> used in the previous examples.

capabilities:storage_protocol='<in> FC'
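The back-end name used for placement in the list above is a plain concatenation, which the following sketch reproduces. The helper name `storwize_backend_name` is hypothetical; the sample values are the ones from the example in the text.

```python
def storwize_backend_name(system_name, pool_name):
    """Join the system name (as shown by lssystem) and the pool name
    (mdisk group) with an underscore, as described in the text."""
    return "%s_%s" % (system_name, pool_name)


print(storwize_backend_name("myV7000", "openstackpool"))
```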

Configure per-volume creation options

extra specs keys are supported by the IBM Storwize/SVC driver:

- rsize

- warning

- autoexpand

- grainsize

- compression

- easytier

- multipath

- iogrp

rsize=2 or compression=False.

Example: Volume types

$ cinder type-create compressed
$ cinder type-key compressed set capabilities:storage_protocol='<in> iSCSI' capabilities:compression_support='<is> True' drivers:compression=True

$ cinder create --display-name "compressed volume" --volume-type compressed 50

- performance levels (such as, allocating entirely on an HDD tier, using Easy Tier for an HDD-SSD mix, or allocating entirely on an SSD tier)

- resiliency levels (such as, allocating volumes in pools with different RAID levels)

- features (such as, enabling/disabling Real-time Compression)

QOS

etc/cinder/cinder.conf file and setting the storwize_svc_allow_tenant_qos to True.

IOThrottling parameter for storage volumes:

- Add the qos:IOThrottling key into a QOS specification and associate it with a volume type.

- Add the qos:IOThrottling key into an extra specification with a volume type.

- Add the qos:IOThrottling key to the storage volume metadata.

2.2.10.3. Operational notes for the Storwize family and SVC driver

Migrate volumes

extent_size. If the pools have different values for extent_size, the data will still be moved directly between the pools (not host-side copy), but the operation will be synchronous.

Extend volumes

Snapshots and clones

Volume retype

- rsize

- warning

- autoexpand

- grainsize

- compression

- easytier

- iogrp

rsize, grainsize or compression properties, volume copies are asynchronously synchronized on the array.

iogrp property, IBM Storwize/SVC firmware version 6.4.0 or later is required.

2.2.11. IBM XIV and DS8000 volume driver

cinder.conf, and use the following options to configure it.

volume_driver = cinder.volume.drivers.xiv_ds8k.XIVDS8KDriver

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| san_clustername = | (StrOpt) Cluster name to use for creating volumes |
| san_ip = | (StrOpt) IP address of SAN controller |
| san_login = admin | (StrOpt) Username for SAN controller |
| san_password = | (StrOpt) Password for SAN controller |
| xiv_chap = disabled | (StrOpt) CHAP authentication mode, effective only for iscsi (disabled\|enabled) |
| xiv_ds8k_connection_type = iscsi | (StrOpt) Connection type to the IBM Storage Array |
| xiv_ds8k_proxy = xiv_ds8k_openstack.nova_proxy.XIVDS8KNovaProxy | (StrOpt) Proxy driver that connects to the IBM Storage Array |

2.2.12. LVM

cinder.conf configuration file, and use the following options to configure for iSCSI transport:

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
iscsi_protocol = iscsi

Use the following options to configure for iSER transport:

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
iscsi_protocol = iser

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| lvm_conf_file = /etc/cinder/lvm.conf | (StrOpt) LVM conf file to use for the LVM driver in Cinder; this setting is ignored if the specified file does not exist. (You can also specify 'None' to not use a conf file even if one exists.) |
| lvm_mirrors = 0 | (IntOpt) If >0, create LVs with multiple mirrors. Note that this requires lvm_mirrors + 2 PVs with available space |
| lvm_type = default | (StrOpt) Type of LVM volumes to deploy |
| volume_group = cinder-volumes | (StrOpt) Name for the VG that will contain exported volumes |
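The options above can be combined as in the following sketch of an LVM back end exported over iSCSI. The mirror count of 1 is an assumption made for illustration; it is not the default.

```ini
# cinder.conf: illustrative LVM back end over iSCSI
[DEFAULT]
volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
iscsi_protocol = iscsi

# Volume group that holds the exported volumes (documented default).
volume_group = cinder-volumes

# Type of LVM volumes to deploy (documented default).
lvm_type = default

# Example value, not the default: one mirror per logical volume.
# Per the table, this requires lvm_mirrors + 2 = 3 PVs with available space.
lvm_mirrors = 1

# Ignored if the file does not exist.
lvm_conf_file = /etc/cinder/lvm.conf
```

To use iSER instead, only the iscsi_protocol value changes, to iser.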

2.2.13. NetApp unified driver

2.2.13.1. NetApp clustered Data ONTAP storage family

2.2.13.1.1. NetApp iSCSI configuration for clustered Data ONTAP

Configuration options for clustered Data ONTAP family with iSCSI protocol

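Set the volume_driver, netapp_storage_family and netapp_storage_protocol options in cinder.conf to select the NetApp unified driver, clustered Data ONTAP, and the iSCSI protocol.

To use the iSCSI protocol, override the default value of netapp_storage_protocol with iscsi.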

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| netapp_login = None | (StrOpt) Administrative user account name used to access the storage system or proxy server. |
| netapp_partner_backend_name = None | (StrOpt) The name of the config.conf stanza for a Data ONTAP (7-mode) HA partner. This option is only used by the driver when connecting to an instance with a storage family of Data ONTAP operating in 7-Mode, and it is required if the storage protocol selected is FC. |
| netapp_password = None | (StrOpt) Password for the administrative user account specified in the netapp_login option. |
| netapp_server_hostname = None | (StrOpt) The hostname (or IP address) for the storage system or proxy server. |
| netapp_server_port = None | (IntOpt) The TCP port to use for communication with the storage system or proxy server. If not specified, Data ONTAP drivers will use 80 for HTTP and 443 for HTTPS; E-Series will use 8080 for HTTP and 8443 for HTTPS. |
| netapp_size_multiplier = 1.2 | (FloatOpt) The quantity to be multiplied by the requested volume size to ensure enough space is available on the virtual storage server (Vserver) to fulfill the volume creation request. |
| netapp_storage_family = ontap_cluster | (StrOpt) The storage family type used on the storage system; valid values are ontap_7mode for using Data ONTAP operating in 7-Mode, ontap_cluster for using clustered Data ONTAP, or eseries for using E-Series. |
| netapp_storage_protocol = None | (StrOpt) The storage protocol to be used on the data path with the storage system. |
| netapp_transport_type = http | (StrOpt) The transport protocol used when communicating with the storage system or proxy server. |
| netapp_vserver = None | (StrOpt) This option specifies the virtual storage server (Vserver) name on the storage cluster on which provisioning of block storage volumes should occur. |

If you specify an account in netapp_login that only has virtual storage server (Vserver) administration privileges (rather than cluster-wide administration privileges), some advanced features of the NetApp unified driver will not work and you may see warnings in the OpenStack Block Storage logs.
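For orientation, a cinder.conf fragment for clustered Data ONTAP over iSCSI might look like the sketch below. The volume_driver path is the unified driver entry point as commonly shipped with Block Storage releases of this era; verify it against your installed release. The Vserver name, hostname, and credentials are hypothetical placeholders, and the choice of HTTPS on port 443 is an assumption for this example, not the default.

```ini
# cinder.conf: illustrative clustered Data ONTAP back end over iSCSI
[DEFAULT]
# Assumed driver path; confirm for your release.
volume_driver = cinder.volume.drivers.netapp.common.NetAppDriver
netapp_storage_family = ontap_cluster

# The default is None, so the protocol must be set explicitly.
netapp_storage_protocol = iscsi

# Hypothetical Vserver on which volumes are provisioned.
netapp_vserver = example-vserver

# Placeholder management endpoint and credentials.
netapp_server_hostname = 192.0.2.20
netapp_transport_type = https
netapp_server_port = 443
netapp_login = CHANGE_ME
netapp_password = CHANGE_ME

# Documented default: 20% headroom over the requested volume size.
netapp_size_multiplier = 1.2
```

With netapp_size_multiplier at 1.2, a request for a 10 GB volume is checked against 12 GB of available space on the Vserver.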

2.2.13.1.2. NetApp NFS configuration for clustered Data ONTAP

Configuration options for the clustered Data ONTAP family with NFS protocol

Set the volume_driver, netapp_storage_family and netapp_storage_protocol options in cinder.conf to select the NetApp unified driver, clustered Data ONTAP, and the NFS protocol.

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| expiry_thres_minutes = 720 | (IntOpt) This option specifies the threshold for last access time for images in the NFS image cache. When a cache cleaning cycle begins, images in the cache that have not been accessed in the last M minutes, where M is the value of this parameter, will be deleted from the cache to create free space on the NFS share. |
| netapp_copyoffload_tool_path = None | (StrOpt) This option specifies the path of the NetApp copy offload tool binary. Ensure that the binary has execute permissions set which allow the effective user of the cinder-volume process to execute the file. |
| netapp_login = None | (StrOpt) Administrative user account name used to access the storage system or proxy server. |
| netapp_partner_backend_name = None | (StrOpt) The name of the config.conf stanza for a Data ONTAP (7-mode) HA partner. This option is only used by the driver when connecting to an instance with a storage family of Data ONTAP operating in 7-Mode, and it is required if the storage protocol selected is FC. |
| netapp_password = None | (StrOpt) Password for the administrative user account specified in the netapp_login option. |
| netapp_server_hostname = None | (StrOpt) The hostname (or IP address) for the storage system or proxy server. |
| netapp_server_port = None | (IntOpt) The TCP port to use for communication with the storage system or proxy server. If not specified, Data ONTAP drivers will use 80 for HTTP and 443 for HTTPS; E-Series will use 8080 for HTTP and 8443 for HTTPS. |
| netapp_storage_family = ontap_cluster | (StrOpt) The storage family type used on the storage system; valid values are ontap_7mode for using Data ONTAP operating in 7-Mode, ontap_cluster for using clustered Data ONTAP, or eseries for using E-Series. |
| netapp_storage_protocol = None | (StrOpt) The storage protocol to be used on the data path with the storage system. |
| netapp_transport_type = http | (StrOpt) The transport protocol used when communicating with the storage system or proxy server. |
| netapp_vserver = None | (StrOpt) This option specifies the virtual storage server (Vserver) name on the storage cluster on which provisioning of block storage volumes should occur. |
| thres_avl_size_perc_start = 20 | (IntOpt) If the percentage of available space for an NFS share has dropped below the value specified by this option, the NFS image cache will be cleaned. |
| thres_avl_size_perc_stop = 60 | (IntOpt) When the percentage of available space on an NFS share has reached the percentage specified by this option, the driver will stop clearing files from the NFS image cache that have not been accessed in the last M minutes, where M is the value of the expiry_thres_minutes configuration option. |

If you specify an account in netapp_login that only has virtual storage server (Vserver) administration privileges (rather than cluster-wide administration privileges), some advanced features of the NetApp unified driver will not work and you may see warnings in the OpenStack Block Storage logs.
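The three image cache options interact, so a short sketch may help. The fragment below assumes the same unified driver path as commonly shipped in this era (verify for your release) and uses hypothetical placeholder values for the Vserver, hostname, and credentials. The cache values shown are the documented defaults. Other settings an NFS back end needs, such as the list of shares, are not shown.

```ini
# cinder.conf: illustrative clustered Data ONTAP back end over NFS
[DEFAULT]
# Assumed driver path; confirm for your release.
volume_driver = cinder.volume.drivers.netapp.common.NetAppDriver
netapp_storage_family = ontap_cluster
netapp_storage_protocol = nfs

# Hypothetical Vserver and placeholder management endpoint.
netapp_vserver = example-vserver
netapp_server_hostname = 192.0.2.20
netapp_login = CHANGE_ME
netapp_password = CHANGE_ME

# Cache cleaning starts when available space on a share drops below 20%.
thres_avl_size_perc_start = 20

# Cleaning stops once available space has reached 60%.
thres_avl_size_perc_stop = 60

# Only cached images not accessed in the last 720 minutes (12 hours)
# are candidates for deletion during a cleaning cycle.
expiry_thres_minutes = 720
```

Raising thres_avl_size_perc_start makes cleaning begin sooner. Lowering expiry_thres_minutes makes more cached images eligible for deletion in each cycle.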

NetApp NFS Copy Offload client

- The Image Service is configured to store images in an NFS share that is exported from a NetApp FlexVol volume and the destination for the new Block Storage volume will be on an NFS share exported from a different FlexVol volume than the one used by the Image Service. Both FlexVols must be located within the same cluster.

- The source image from the Image Service has already been cached in an NFS image cache within a Block Storage backend. The cached image resides on a different FlexVol volume than the destination for the new Block Storage volume. Both FlexVols must be located within the same cluster.

Configure the Image Service as follows:

- Set the default_store configuration option to file.

- Set the filesystem_store_datadir configuration option to the path to the Image Service NFS export.

- Set the show_image_direct_url configuration option to True.

- Set the show_multiple_locations configuration option to True.

  Important: If configured without the proper policy settings, a non-admin user of the Image Service can replace active image data (that is, switch out a current image without other users knowing). See the OSSN announcement (recommended actions) for configuration information: https://wiki.openstack.org/wiki/OSSN/OSSN-0065

- Set the filesystem_store_metadata_file configuration option to a metadata file. The metadata file should contain a JSON object that contains the correct information about the NFS export used by the Image Service, similar to:

  { "share_location": "nfs://192.168.0.1/myGlanceExport", "mount_point": "/var/lib/glance/images", "type": "nfs" }

Configure the Block Storage service as follows:

- Set the netapp_copyoffload_tool_path configuration option to the path to the NetApp Copy Offload binary.

- Set the glance_api_version configuration option to 2.

- The storage system must have Data ONTAP v8.2 or greater installed.

- The vStorage feature must be enabled on each storage virtual machine (SVM, also known as a Vserver) that is permitted to interact with the copy offload client.

- To configure the copy offload workflow, enable NFS v4.0 or greater and export it from the SVM.

To download the NetApp copy offload binary for use with the netapp_copyoffload_tool_path configuration option, visit the Utility Toolchest page at the NetApp Support portal (login is required).
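The copy offload settings described in this section can be summarized in a sketch covering both services. The file paths for the metadata file and the copy offload binary are hypothetical and chosen only for illustration. The section in which the Image Service store options belong varies by release, so the [DEFAULT] placement here is an assumption to check against your version.

```ini
# --- glance-api.conf (Image Service), illustrative ---
[DEFAULT]
default_store = file

# Mount point of the Image Service NFS export; matches the
# mount_point in the sample metadata JSON.
filesystem_store_datadir = /var/lib/glance/images

show_image_direct_url = True

# Review the policy guidance in OSSN-0065 before enabling this.
show_multiple_locations = True

# Hypothetical path to the JSON metadata file describing the NFS export.
filesystem_store_metadata_file = /etc/glance/filesystem_store_metadata.json

# --- cinder.conf (Block Storage), illustrative ---
[DEFAULT]
# Hypothetical install location of the copy offload binary; it must be
# executable by the effective user of the cinder-volume process.
netapp_copyoffload_tool_path = /usr/local/bin/copyoffload_tool

glance_api_version = 2
```

The two parts belong in separate files. They are shown together only to make the relationship between the Image Service export and the Block Storage settings easier to see.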

2.2.13.1.3. NetApp-supported extra specs for clustered Data ONTAP

| Extra spec | Type | Description |
|---|---|---|
| netapp_raid_type | String | Limit the candidate volume list based on one of the following raid types: raid4, raid_dp. |
| netapp_disk_type | String | Limit the candidate volume list based on one of the following disk types: ATA, BSAS, EATA, FCAL, FSAS, LUN, MSATA, SAS, SATA, SCSI, XATA, XSAS, or SSD. |

netapp:qos_policy_group[a]

|