
Chapter 1. Introduction to RHOSP dynamic routing

Red Hat OpenStack Platform (RHOSP) supports dynamic routing with Border Gateway Protocol (BGP).


1.1. About RHOSP dynamic routing

Red Hat OpenStack Platform (RHOSP) supports ML2/OVN dynamic routing with Border Gateway Protocol (BGP) in the control and data planes. Deploying clusters in a pure Layer 3 (L3) data center overcomes the scaling issues of traditional Layer 2 (L2) infrastructures, such as large failure domains, high-volume broadcast traffic, and long convergence times during failure recovery.

RHOSP dynamic routing provides a mechanism for load balancing and high availability that differs from the traditional approaches that most internet service providers use today. With RHOSP dynamic routing, you improve high availability by using L3 routing for shared VIPs on Controller nodes. For control plane servers that you deploy across availability zones, you maintain separate L2 segments, physical sites, and power circuits.

With RHOSP dynamic routing, you can expose the IP addresses of VMs and load balancers on provider networks when they are created and started, or whenever they are associated with a floating IP address. The same functionality is available on project networks when a special flag is set.
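The exposure rules in the previous paragraph can be expressed as a small decision function. The following Python sketch is hypothetical and is not taken from the OVN BGP agent: the `Port` fields and the `expose_tenant_networks` argument are assumptions, with the argument standing in for the special flag that enables exposure on project networks.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Port:
    """Hypothetical view of a VM or load balancer port."""

    ip: str
    on_provider_network: bool
    floating_ip: Optional[str] = None


def ips_to_expose(port: Port, expose_tenant_networks: bool = False) -> List[str]:
    """Return the addresses that would be advertised over BGP for one port.

    expose_tenant_networks is a hypothetical stand-in for the flag that
    enables exposure on project (tenant) networks.
    """
    exposed = []
    if port.on_provider_network or expose_tenant_networks:
        # Provider network addresses are exposed when the VM or load balancer
        # is created and started; project network addresses only with the flag.
        exposed.append(port.ip)
    if port.floating_ip is not None:
        # A floating IP is exposed as soon as it is associated with the port.
        exposed.append(port.floating_ip)
    return exposed


vm = Port(ip="10.0.0.5", on_provider_network=False, floating_ip="203.0.113.10")
print(ips_to_expose(vm))        # ['203.0.113.10']
print(ips_to_expose(vm, True))  # ['10.0.0.5', '203.0.113.10']
```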

RHOSP dynamic routing also provides these benefits:

- Improved management of data plane traffic.

- Simplified configuration with fewer differences between roles.

- Distributed L2 provider VLANs and floating IP (FIP) subnets across L3 boundaries, with no requirement to span VLANs across racks (for non-overlapping CIDRs only).

- Distributed Controllers across racks and L3 boundaries in data centers and at edge sites.

- Failover of the whole subnet for public provider IPs or FIPs from one site to another.

- Next-generation data center and hyperscale fabric support.

1.2. BGP components used in RHOSP dynamic routing

Red Hat OpenStack Platform (RHOSP) relies on several components to provide dynamic routing to the Layer 3 data center.

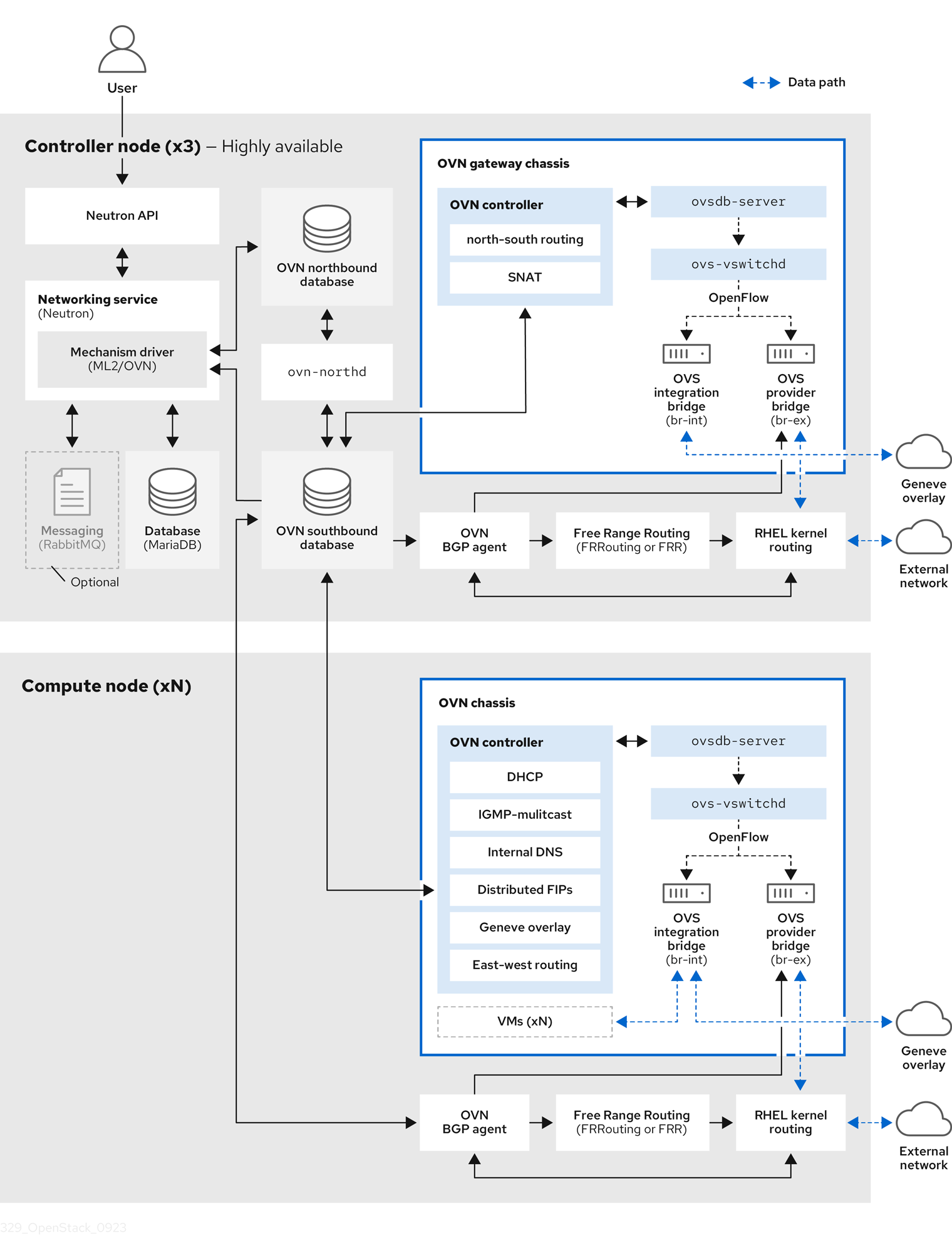

Figure 1.1. RHOSP dynamic routing components

- OVN BGP agent (`ovn-bgp-agent` container): A Python-based daemon that runs in the `ovn-controller` container on each RHOSP Controller and Compute node. The agent monitors the Open Virtual Network (OVN) southbound database for certain VM and floating IP (FIP) events. When these events occur, the agent notifies the FRR BGP daemon (`bgpd`) to advertise the IP address or FIP associated with the VM. The agent also triggers actions that route the external traffic to the OVN overlay. Because the agent uses a multi-driver implementation, you can configure the agent for the specific infrastructure that runs on top of OVN, such as RHOSP or Red Hat OpenShift Platform.

- Free Range Routing (FRRouting, or FRR) (`frr` container): An IP routing protocol suite of daemons that run in the `frr` container on all composable roles and work together to build the routing table. FRR supports equal-cost multi-path routing (ECMP), although each protocol daemon uses different methods to manage ECMP policy. The FRR suite, supplied with Red Hat Enterprise Linux (RHEL), provides several daemons. RHOSP primarily uses the FRR `bgpd`, `bfdd`, and Zebra daemons.

- BGP daemon (`frr` container): A daemon (`bgpd`) that runs in the `frr` container to implement version 4 of Border Gateway Protocol (BGP). The `bgpd` daemon uses capability negotiation to detect the remote peer’s capabilities. If a peer is exclusively configured as an IPv4 unicast neighbor, `bgpd` does not send capability negotiation packets. The BGP daemon communicates with the kernel routing table through the Zebra daemon.

- BFD daemon (`frr` container): A daemon (`bfdd`) in the FRR suite that implements Bidirectional Forwarding Detection (BFD). This protocol provides failure detection between adjacent forwarding engines.

- Zebra daemon (`frr` container): A daemon that coordinates information from the various FRR daemons and communicates routing decisions directly to the kernel routing table.

- VTY shell (`frr` container): A shell for FRR daemons. VTY shell (`vtysh`) aggregates all the CLI commands defined in each of the daemons and presents them in a single shell.
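The event flow of the OVN BGP agent, together with its multi-driver design, can be sketched as follows. This is a simplified, hypothetical Python model rather than the agent's source: the event kinds, class names, and `DRIVERS` registry are assumptions, and the `advertised` set only stands in for the addresses that the agent would ask FRR to advertise.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, Set, Type


@dataclass(frozen=True)
class SouthboundEvent:
    """Hypothetical summary of a change seen in the OVN southbound database."""

    kind: str  # hypothetical kinds: "vm_created", "fip_associated", ...
    ip: str


class Driver(ABC):
    """Hypothetical driver interface: one implementation per platform."""

    @abstractmethod
    def handle(self, event: SouthboundEvent) -> None:
        """React to one southbound event."""


class OpenStackDriver(Driver):
    """Tracks which addresses should currently be advertised."""

    ADVERTISE = {"vm_created", "fip_associated"}
    WITHDRAW = {"vm_deleted", "fip_disassociated"}

    def __init__(self) -> None:
        self.advertised: Set[str] = set()

    def handle(self, event: SouthboundEvent) -> None:
        if event.kind in self.ADVERTISE:
            # Stands in for asking FRR to advertise the address.
            self.advertised.add(event.ip)
        elif event.kind in self.WITHDRAW:
            # Stands in for asking FRR to withdraw the address.
            self.advertised.discard(event.ip)


# Registry that lets configuration select the driver for the platform.
DRIVERS: Dict[str, Type[Driver]] = {"openstack": OpenStackDriver}


def load_driver(name: str) -> Driver:
    return DRIVERS[name]()


driver = load_driver("openstack")
for ev in [
    SouthboundEvent("vm_created", "192.0.2.10"),
    SouthboundEvent("fip_associated", "203.0.113.10"),
    SouthboundEvent("vm_deleted", "192.0.2.10"),
]:
    driver.handle(ev)
print(sorted(driver.advertised))  # ['203.0.113.10']
```

Supporting another platform in this model means registering another `Driver` subclass; the event source stays the same.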


1.3. BGP advertisement and traffic redirection

In deployments that use Red Hat OpenStack Platform (RHOSP) dynamic routing, network traffic flows to and from VMs, load balancers, and floating IPs (FIPs) by using advertised routes. To handle the traffic that arrives at the node, the OVN BGP agent adds IP rules, routes, and OVS flow rules that redirect the traffic to the OVS provider bridge (`br-ex`) by using Red Hat Enterprise Linux (RHEL) kernel networking.

The process of advertising a network route begins with the OVN BGP agent triggering Free Range Routing (FRRouting, or FRR) to advertise and withdraw directly connected routes. The OVN BGP agent performs these steps to configure FRR so that IP addresses are advertised whenever they are added to the `bgp-nic` interface:

The OVN BGP agent launches VTY shell (`vtysh`) to connect to the FRR socket:

```
$ vtysh --vty_socket -c <command_file>
```

The agent passes VTY shell a file that contains the following commands:

```
LEAK_VRF_TEMPLATE = '''
router bgp {{ bgp_as }}
  address-family ipv4 unicast
    import vrf {{ vrf_name }}
  exit-address-family

  address-family ipv6 unicast
    import vrf {{ vrf_name }}
  exit-address-family

router bgp {{ bgp_as }} vrf {{ vrf_name }}
  bgp router-id {{ bgp_router_id }}
  address-family ipv4 unicast
    redistribute connected
  exit-address-family

  address-family ipv6 unicast
    redistribute connected
  exit-address-family
...
```

The commands that VTY shell passes do the following:

- Create a VRF named `bgp_vrf` by default, and associate an interface of type dummy with the VRF. By default, the dummy interface is named `bgp-nic`.

- Add an IP address to the OVS provider bridges to ensure that Address Resolution Protocol (ARP) and Neighbor Discovery Protocol (NDP) are enabled.

- The Zebra daemon monitors IP addresses for VMs and load balancers as they are added to and deleted from any local interface, and Zebra advertises and withdraws the corresponding routes. Because FRR is configured with the `redistribute connected` option enabled, advertising and withdrawing routes consists only of adding the IP address to, or removing it from, the dummy interface, `bgp-nic`.

  Note: Exposing VMs connected to tenant networks is disabled by default. If it is enabled in the RHOSP configuration, the OVN BGP agent exposes the neutron router gateway port. The OVN BGP agent injects traffic that flows to VMs on tenant networks into the OVN overlay through the node that hosts the `chassisredirect` logical router ports (CR-LRP).

- FRR exposes the IP address either on the node that hosts the VM or load balancer, or on the node that contains the OVN router gateway port.
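The `{{ name }}` placeholders in `LEAK_VRF_TEMPLATE` must be filled in before the commands reach VTY shell. The following sketch renders a trimmed copy of that template by using a standard-library regular expression in place of a template engine such as Jinja2. The AS number `64999` and router ID `192.0.2.1` are hypothetical example values; `bgp_vrf` is the default VRF name described above.

```python
import re

# Trimmed copy of LEAK_VRF_TEMPLATE; the elided "..." part is not reproduced.
LEAK_VRF_TEMPLATE = """\
router bgp {{ bgp_as }}
  address-family ipv4 unicast
    import vrf {{ vrf_name }}
  exit-address-family
  address-family ipv6 unicast
    import vrf {{ vrf_name }}
  exit-address-family
router bgp {{ bgp_as }} vrf {{ vrf_name }}
  bgp router-id {{ bgp_router_id }}
  address-family ipv4 unicast
    redistribute connected
  exit-address-family
  address-family ipv6 unicast
    redistribute connected
  exit-address-family
"""

# Matches "{{ name }}" with optional spaces inside the braces.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, **values: object) -> str:
    """Substitute each {{ name }} placeholder; raise KeyError if one is missing."""
    return _PLACEHOLDER.sub(lambda m: str(values[m.group(1)]), template)


config = render(LEAK_VRF_TEMPLATE, bgp_as=64999, vrf_name="bgp_vrf",
                bgp_router_id="192.0.2.1")
print(config.splitlines()[7])  # router bgp 64999 vrf bgp_vrf
```

Raising `KeyError` for a missing value, rather than leaving the placeholder in place, avoids handing an incomplete configuration to FRR.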

The OVN BGP agent performs the necessary configuration to redirect traffic to and from the OVN overlay by using RHEL kernel networking and OVS, and then FRR exposes the IP address on the proper node.
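Because FRR redistributes connected routes, exposing an address reduces to adding it to `bgp-nic`, and withdrawing it reduces to removing it. The following Python sketch builds the iproute2 commands for both operations. The helper names are hypothetical, and both the use of host prefixes (/32 for IPv4, /128 for IPv6) and the use of the `ip` command-line tool, rather than whatever interface the agent uses internally, are assumptions.

```python
import ipaddress
from typing import List

BGP_NIC = "bgp-nic"  # default name of the dummy interface in the VRF


def host_prefix(ip: str) -> str:
    """Return ip as a host prefix: /32 for IPv4, /128 for IPv6 (assumption)."""
    addr = ipaddress.ip_address(ip)
    return f"{addr}/{addr.max_prefixlen}"


def expose_cmd(ip: str, dev: str = BGP_NIC) -> List[str]:
    """iproute2 argv that adds the address to the dummy interface.

    With 'redistribute connected' configured, FRR then advertises the route.
    """
    return ["ip", "address", "add", host_prefix(ip), "dev", dev]


def withdraw_cmd(ip: str, dev: str = BGP_NIC) -> List[str]:
    """iproute2 argv that removes the address, so FRR withdraws the route."""
    return ["ip", "address", "del", host_prefix(ip), "dev", dev]


print(" ".join(expose_cmd("203.0.113.10")))    # ip address add 203.0.113.10/32 dev bgp-nic
print(" ".join(withdraw_cmd("2001:db8::10")))  # ip address del 2001:db8::10/128 dev bgp-nic
```

On a node with the required privileges, each list could be passed to `subprocess.run(cmd, check=True)`.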

When the OVN BGP agent starts, it performs the following actions:

- Adds an IP address to the OVS provider bridges to enable ARP and NDP.

- Adds an entry for each OVS provider bridge to the routing tables file, `/etc/iproute2/rt_tables`.

  Note: In the RHEL kernel, the maximum number of routing tables is 252. This limits the number of provider networks to 252.

- Connects a VLAN device to the bridge and enables ARP and NDP (on VLAN provider networks only).

- Cleans up any extra OVS flows at the OVS provider bridges.
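The `/etc/iproute2/rt_tables` step can be illustrated by computing the lines to append for each provider bridge. In this hypothetical Python sketch, assigning the lowest unused ID between 1 and 252 is an assumption rather than documented agent behavior. IDs 0, 253, 254, and 255 are the values that iproute2 reserves for the `unspec`, `default`, `main`, and `local` tables.

```python
from typing import Dict, List

# IDs that iproute2 reserves in /etc/iproute2/rt_tables.
RESERVED_IDS = {0, 253, 254, 255}  # unspec, default, main, local
MAX_TABLE_ID = 252                 # limit stated in the RHOSP note


def parse_rt_tables(text: str) -> Dict[str, int]:
    """Map table name to table ID, skipping comments and blank lines."""
    tables: Dict[str, int] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        number, name = line.split()[:2]
        tables[name] = int(number)
    return tables


def new_bridge_entries(text: str, bridges: List[str]) -> List[str]:
    """Return rt_tables lines to append so that every bridge has its own table."""
    tables = parse_rt_tables(text)
    used = set(tables.values()) | RESERVED_IDS
    entries: List[str] = []
    for bridge in bridges:
        if bridge in tables:
            continue  # this bridge already has a routing table
        # Assumption: take the lowest free ID in 1..252.
        free = next((n for n in range(1, MAX_TABLE_ID + 1) if n not in used), None)
        if free is None:
            raise RuntimeError("no free routing table ID for " + bridge)
        used.add(free)
        tables[bridge] = free
        entries.append(f"{free} {bridge}")
    return entries


DEFAULT_RT_TABLES = """\
#
# reserved values
#
255\tlocal
254\tmain
253\tdefault
0\tunspec
"""

print(new_bridge_entries(DEFAULT_RT_TABLES, ["br-ex", "br-vlan"]))
# ['1 br-ex', '2 br-vlan']
```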

During regular resync events or during start-up, the OVN BGP agent performs the following actions:

- Adds an IP rule so that traffic to specific destinations is looked up in the routing table of the OVS provider bridge. In the following example, the rule is associated with the OVS provider bridge:

  ```
  $ ip rule
  0:      from all lookup local
  1000:   from all lookup [l3mdev-table]
  32000:  from all to IP lookup br-ex    # br-ex is the OVS provider bridge
  32000:  from all to CIDR lookup br-ex  # for VMs in tenant networks
  32766:  from all lookup main
  32767:  from all lookup default
  ```

- Adds an IP route to the OVS provider bridge routing table to route traffic to the OVS provider bridge device:

  ```
  $ ip route show table br-ex
  default dev br-ex scope link
  CIDR via CR-LRP_IP dev br-ex    # for VMs in tenant networks
  CR-LRP_IP dev br-ex scope link  # for the VM in tenant network redirection
  IP dev br-ex scope link         # IPs on provider networks or FIPs
  ```

- Routes traffic to OVN through the OVS provider bridge (`br-ex`) by using one of the following methods, depending on whether IPv4 or IPv6 is used:

  - For IPv4, adds a static ARP entry for the OVN router gateway ports, CR-LRP, because OVN does not reply to ARP requests outside its L2 network:

    ```
    $ ip nei
    ...
    CR-LRP_IP dev br-ex lladdr CR-LRP_MAC PERMANENT
    ...
    ```

  - For IPv6, adds an NDP proxy:

    ```
    $ ip -6 nei add proxy CR-LRP_IP dev br-ex
    ```

- Sends the traffic from the OVN overlay to kernel networking by adding a new flow at the OVS provider bridges that changes the destination MAC address to the MAC address of the OVS provider bridge (`actions=mod_dl_dst:OVN_PROVIDER_BRIDGE_MAC,NORMAL`):

  ```
  $ sudo ovs-ofctl dump-flows br-ex
   cookie=0x3e7, duration=77.949s, table=0, n_packets=0, n_bytes=0, priority=900,ip,in_port="patch-provnet-1" actions=mod_dl_dst:3a:f7:e9:54:e8:4d,NORMAL
   cookie=0x3e7, duration=77.937s, table=0, n_packets=0, n_bytes=0, priority=900,ipv6,in_port="patch-provnet-1" actions=mod_dl_dst:3a:f7:e9:54:e8:4d,NORMAL
  ```
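The resync actions above can be collected into one sketch that builds the corresponding `ip` and `ovs-ofctl` command lines for a given address. This is a hypothetical Python illustration, not the OVN BGP agent's code: the function names, the `br-ex` default, and the sample addresses are assumptions; the priority `32000` and cookie `0x3e7` are copied from the example outputs; and the agent might program the kernel and OVS through libraries rather than command-line tools. The sketch only builds argument lists and does not run them.

```python
import ipaddress
from typing import List

RULE_PRIORITY = "32000"  # priority shown in the example `ip rule` output
FLOW_COOKIE = "0x3e7"    # cookie shown in the example `ovs-ofctl dump-flows` output


def _family(addr: str) -> List[str]:
    """Return [] for an IPv4 address or CIDR, ["-6"] for IPv6."""
    return ["-6"] if ipaddress.ip_network(addr, strict=False).version == 6 else []


def provider_ip_cmds(ip: str, bridge: str = "br-ex") -> List[List[str]]:
    """Rule and route for an IP on a provider network, or for a FIP."""
    fam = _family(ip)
    return [
        ["ip", *fam, "rule", "add", "to", ip, "table", bridge, "priority", RULE_PRIORITY],
        ["ip", *fam, "route", "add", ip, "dev", bridge, "table", bridge, "scope", "link"],
    ]


def tenant_cidr_cmds(cidr: str, cr_lrp_ip: str, cr_lrp_mac: str,
                     bridge: str = "br-ex") -> List[List[str]]:
    """Rule, routes, and neighbor entry that steer a tenant CIDR to the CR-LRP.

    Assumes cidr and cr_lrp_ip belong to the same address family.
    """
    fam = _family(cidr)
    cmds = [
        ["ip", *fam, "rule", "add", "to", cidr, "table", bridge, "priority", RULE_PRIORITY],
        ["ip", *fam, "route", "add", cr_lrp_ip, "dev", bridge, "table", bridge, "scope", "link"],
        ["ip", *fam, "route", "add", cidr, "via", cr_lrp_ip, "dev", bridge, "table", bridge],
    ]
    if not fam:
        # IPv4: OVN does not answer ARP outside its L2 network, so pin a static entry.
        cmds.append(["ip", "neigh", "replace", cr_lrp_ip, "lladdr", cr_lrp_mac,
                     "dev", bridge, "nud", "permanent"])
    else:
        # IPv6: add an NDP proxy entry for the CR-LRP address.
        cmds.append(["ip", "-6", "neigh", "add", "proxy", cr_lrp_ip, "dev", bridge])
    return cmds


def redirect_flow_cmd(bridge: str, patch_port: str, bridge_mac: str,
                      ipv6: bool = False) -> List[str]:
    """ovs-ofctl argv for the flow that rewrites the destination MAC to the bridge MAC."""
    proto = "ipv6" if ipv6 else "ip"
    flow = (f"cookie={FLOW_COOKIE},priority=900,{proto},in_port={patch_port},"
            f"actions=mod_dl_dst:{bridge_mac},NORMAL")
    return ["ovs-ofctl", "add-flow", bridge, flow]


for cmd in tenant_cidr_cmds("10.0.0.0/24", "198.51.100.20", "fa:16:3e:12:34:56"):
    print(" ".join(cmd))
```

Building argument lists instead of shell strings keeps each address a single argument, so nothing needs quoting if the lists are later passed to `subprocess.run`.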