Chapter 1. Introduction to director

Red Hat OpenStack Platform (RHOSP) director is a toolset for installing and managing a complete RHOSP environment. Director is based primarily on the OpenStack project TripleO. With director, you can install a fully operational, lean, and robust RHOSP environment that can provision and control bare metal systems to use as RHOSP nodes.

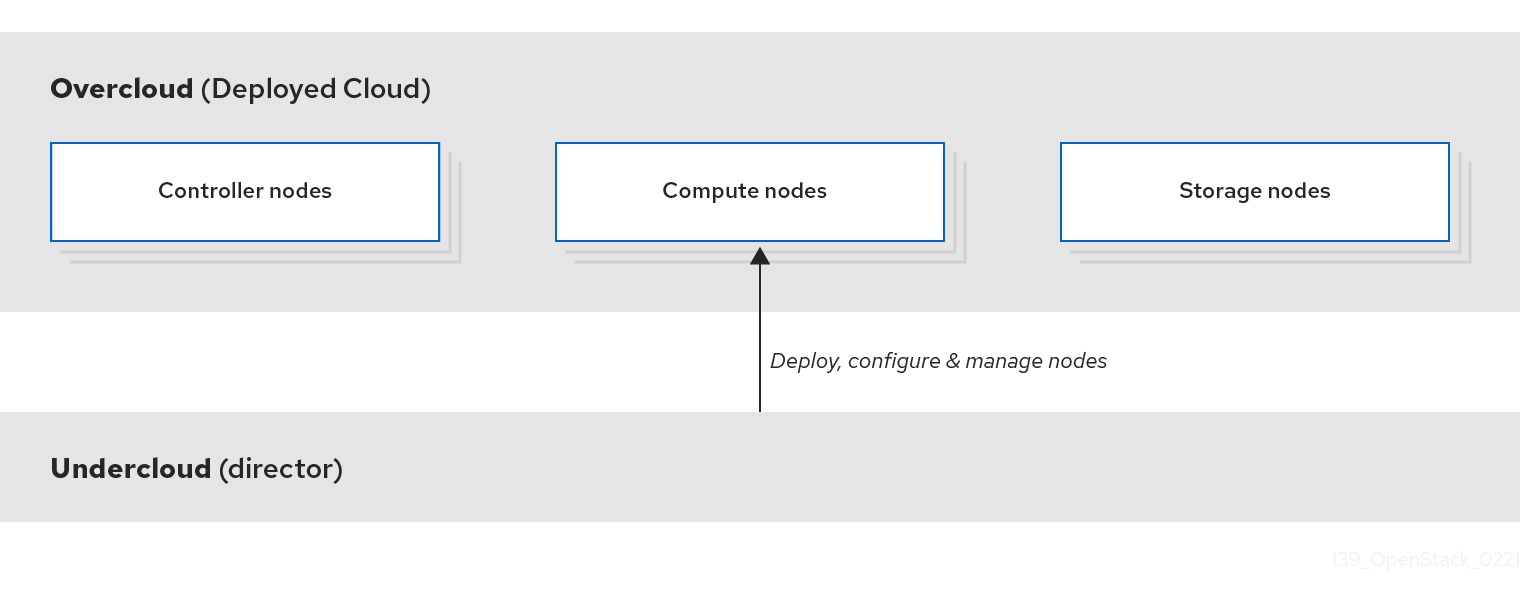

Director uses two main concepts: an undercloud and an overcloud. First you install the undercloud, and then you use the undercloud as a tool to install and configure the overcloud.

The information and procedures in this guide are for using director to deploy a RHOSP environment only. If you want to deploy a RHOSP environment in conjunction with Red Hat Ceph Storage, see Deploying Red Hat Ceph Storage and Red Hat OpenStack Platform together with director.

1.1. Understanding the undercloud

The undercloud is the main management node that contains the Red Hat OpenStack Platform (RHOSP) director toolset. It is a single-system RHOSP installation that includes components for provisioning and managing the RHOSP nodes that form your RHOSP environment: the overcloud. The components that form the undercloud have multiple functions:

- RHOSP services

The undercloud uses RHOSP service components as its base tool set. Each service operates within a separate container on the undercloud:

- Identity service (keystone): Provides authentication and authorization for director services.

- Bare Metal Provisioning service (ironic) and Compute service (nova): Manage bare-metal nodes.

- Networking service (neutron) and Open vSwitch: Control networking for bare-metal nodes.

- Orchestration service (heat): Provides the orchestration of nodes after director writes the overcloud image to disk.

- Environment planning

The undercloud includes planning functions that you can use to create and assign certain node roles. The undercloud includes a default set of node roles that you can assign to specific nodes: Compute, Controller, and various Storage roles. You can also design custom roles. Additionally, you can select which RHOSP services to include on each node role, which provides a method to model new node types or isolate certain components on their own host.

- Bare metal system control

The undercloud uses the out-of-band management interface of each node, usually Intelligent Platform Management Interface (IPMI), for power management control. It also uses a PXE-based service to discover hardware attributes and install RHOSP on each node. You can use this feature to provision bare metal systems as RHOSP nodes. For a full list of power management drivers, see Power management drivers.

- Orchestration

The undercloud contains a set of YAML templates that represent a set of plans for your environment. The undercloud imports these plans and follows their instructions to create the resulting RHOSP environment. The plans also include hooks that you can use to incorporate your own customizations at certain points in the environment creation process.
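
The role composition described under Environment planning can be pictured as moving services between named sets. The following Python sketch only illustrates that idea and is not how director implements roles: the `Role` class, the `split_role` helper, and the `Networker` role name are hypothetical, and the service list is an illustrative subset rather than the full default.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    """A node role: a name plus the set of services placed on it."""
    name: str
    services: frozenset


def split_role(role, new_name, moved):
    """Move the `moved` services out of `role` into a new role.

    Returns (trimmed original role, new role). Raises ValueError if a
    requested service is not part of the original role.
    """
    moved = frozenset(moved)
    missing = moved - role.services
    if missing:
        raise ValueError(f"{role.name} does not include: {sorted(missing)}")
    return Role(role.name, role.services - moved), Role(new_name, moved)


# Illustrative subset of Controller components, not the full default list.
controller = Role("Controller", frozenset({"keystone", "nova", "neutron", "glance"}))

# Isolate networking on its own host type ("Networker" is a hypothetical name).
controller, networker = split_role(controller, "Networker", {"neutron"})
print(sorted(controller.services))  # ['glance', 'keystone', 'nova']
print(sorted(networker.services))   # ['neutron']
```

Treating a role as an immutable set of services keeps the model simple: designing a custom role or isolating a component on its own host is then a matter of choosing which services belong to which set.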

1.2. Understanding the overcloud

The overcloud is the resulting Red Hat OpenStack Platform (RHOSP) environment that the undercloud creates. The overcloud consists of multiple nodes with different roles that you define based on the RHOSP environment that you want to create. The undercloud includes a default set of overcloud node roles:

- Controller

Controller nodes provide administration, networking, and high availability for the RHOSP environment. A recommended RHOSP environment contains three Controller nodes together in a high availability cluster.

A default Controller node role supports the following components. Not all of these services are enabled by default. Some of these components require custom or pre-packaged environment files to enable:

- Dashboard service (horizon)

- Identity service (keystone)

- Compute service (nova)

- Networking service (neutron)

- Image service (glance)

- Block Storage service (cinder)

- Object Storage service (swift)

- Orchestration service (heat)

- Shared File Systems service (manila)

- Bare Metal Provisioning service (ironic)

- Load Balancing-as-a-Service (octavia)

- Key Manager service (barbican)

- MariaDB

- Open vSwitch

- Pacemaker and Galera for high availability services

- Compute

Compute nodes provide computing resources for the RHOSP environment. You can add more Compute nodes to scale out your environment over time. A default Compute node contains the following components:

- Compute service (nova)

- KVM/QEMU

- Open vSwitch

- Storage

Storage nodes provide storage for the RHOSP environment. The following list contains information about the various types of Storage node in RHOSP:

- Ceph Storage nodes - Used to form storage clusters. Each node contains a Ceph Object Storage Daemon (OSD). Additionally, director installs Ceph Monitor onto the Controller nodes in situations where you deploy Ceph Storage nodes as part of your environment.

- Block Storage (cinder) - Used as external block storage for highly available Controller nodes. This node contains the following components:

- Block Storage (cinder) volume

- Telemetry agents

- Open vSwitch

- Object Storage (swift) - These nodes provide an external storage layer for RHOSP Object Storage. The Controller nodes access object storage nodes through the Swift proxy. Object storage nodes contain the following components:

- Object Storage (swift) storage

- Telemetry agents

- Open vSwitch

1.3. Working with Red Hat Ceph Storage in RHOSP

It is common for large organizations that use Red Hat OpenStack Platform (RHOSP) to serve thousands of clients or more. Each OpenStack client is likely to have their own unique needs when consuming block storage resources. Deploying the Image service (glance), the Block Storage service (cinder), and the Compute service (nova) on a single node can become impossible to manage in large deployments with thousands of clients. Scaling RHOSP externally resolves this challenge.

However, there is also a practical requirement to virtualize the storage layer with a solution like Red Hat Ceph Storage so that you can scale the RHOSP storage layer from tens of terabytes to petabytes, or even exabytes of storage. Red Hat Ceph Storage provides this storage virtualization layer with high availability and high performance while running on commodity hardware. While virtualization might seem like it comes with a performance penalty, Red Hat Ceph Storage stripes block device images as objects across the cluster, meaning that large Ceph Block Device images have better performance than a standalone disk. Ceph Block Devices also support caching, copy-on-write cloning, and copy-on-read cloning for enhanced performance.
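
To make the striping idea concrete, the following Python sketch maps a byte range of a block device image onto fixed-size objects. It is a simplified illustration under stated assumptions, not Ceph's implementation: the 4 MiB object size is assumed, the `objects_for_range` helper is hypothetical, and the sketch does not model how objects are placed on storage daemons.

```python
MIB = 1024 * 1024
OBJECT_SIZE = 4 * MIB  # assumed object size for this illustration


def objects_for_range(offset, length, object_size=OBJECT_SIZE):
    """Return (object_index, offset_in_object, chunk_length) tuples that
    cover the byte range [offset, offset + length) of an image."""
    if offset < 0 or length < 0:
        raise ValueError("offset and length must be non-negative")
    chunks = []
    end = offset + length
    while offset < end:
        index = offset // object_size
        within = offset % object_size
        # Stop at the object boundary or at the end of the request.
        chunk = min(object_size - within, end - offset)
        chunks.append((index, within, chunk))
        offset += chunk
    return chunks


# A 10 MiB read that starts 1 MiB into the image touches three objects.
for index, within, chunk in objects_for_range(1 * MIB, 10 * MIB):
    print(index, within // MIB, chunk // MIB)
# 0 1 3
# 1 0 4
# 2 0 3
```

Because one large request breaks down into several objects, those objects can reside on different disks in the cluster and be served in parallel, which is the intuition behind the performance claim above.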

For more information about Red Hat Ceph Storage, see Red Hat Ceph Storage.